San Francisco Takes Legal Action Against AI-Generated Deepfake Nude Websites

12 Sources

[1]

San Francisco goes after websites that make AI deepfake nudes of women and girls

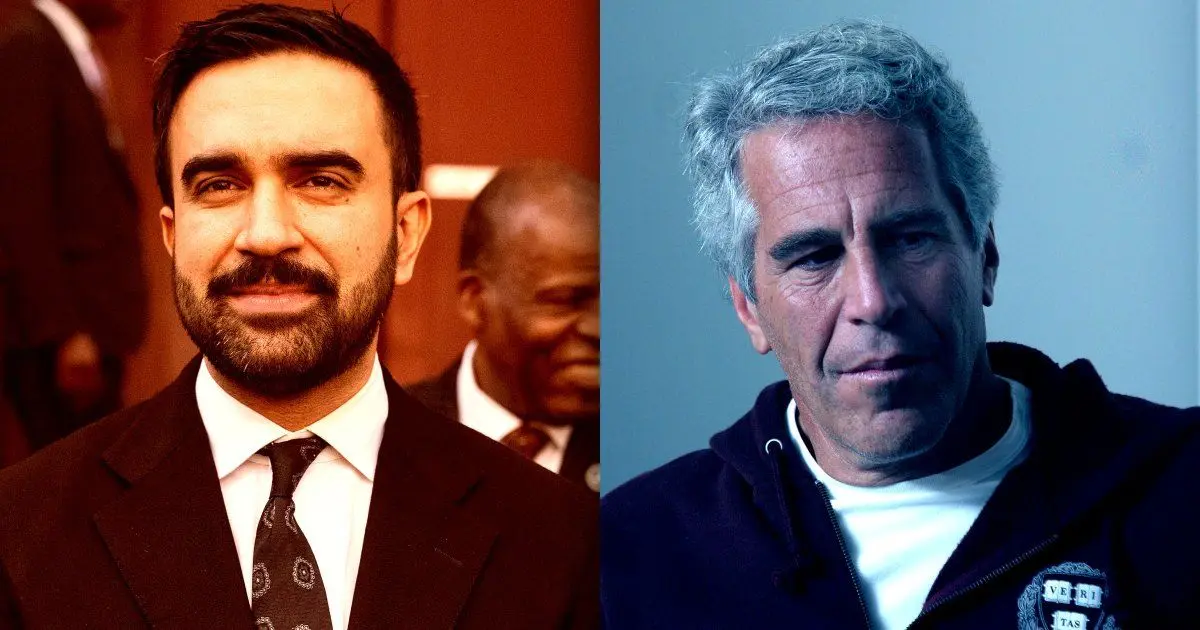

Nearly a year after AI-generated nude images of high school girls upended a community in southern Spain, a juvenile court this summer sentenced 15 of their classmates to a year of probation. But the artificial intelligence tool used to create the harmful deepfakes is still easily accessible on the internet, promising to "undress any photo" uploaded to the website within seconds. Now a new effort to shut down the app and others like it is being pursued in California, where San Francisco this week filed a first-of-its-kind lawsuit that experts say could set a precedent but will also face many hurdles. "The proliferation of these images has exploited a shocking number of women and girls across the globe," said David Chiu, the elected city attorney of San Francisco who brought the case against a group of widely visited websites based in Estonia, Serbia, the United Kingdom and elsewhere. "These images are used to bully, humiliate and threaten women and girls," he said in an interview with The Associated Press. "And the impact on the victims has been devastating on their reputation, mental health, loss of autonomy, and in some instances, causing some to become suicidal." The lawsuit brought on behalf of the people of California alleges that the services broke numerous state laws against fraudulent business practices, nonconsensual pornography and the sexual abuse of children. But it can be hard to determine who runs the apps, which are unavailable in phone app stores but still easily found on the internet. Contacted late last year by the AP, one service claimed by email that its "CEO is based and moves throughout the USA" but declined to provide any evidence or answer other questions. The AP is not naming the specific apps being sued in order to not promote them. 
"There are a number of sites where we don't know at this moment exactly who these operators are and where they're operating from, but we have investigative tools and subpoena authority to dig into that," Chiu said. "And we will certainly utilize our powers in the course of this litigation." Many of the tools are being used to create realistic fakes that "nudify" photos of clothed adult women, including celebrities, without their consent. But they've also popped up in schools around the world, from Australia to Beverly Hills in California, typically with boys creating the images of female classmates that then circulate widely through social media. In one of the first widely publicized cases last September in Almendralejo, Spain, a physician whose daughter was among a group of girls victimized last year and helped bring it to the public's attention said she's satisfied by the severity of the sentence their classmates are facing after a court decision earlier this summer. But it is "not only the responsibility of society, of education, of parents and schools, but also the responsibility of the digital giants that profit from all this garbage," Dr. Miriam al Adib Mendiri said in an interview Friday. She applauded San Francisco's action but said more efforts are needed, including from bigger companies like California-based Meta Platforms and its subsidiary WhatsApp, which was used to circulate the images in Spain. While schools and law enforcement agencies have sought to punish those who make and share the deepfakes, authorities have struggled with what to do about the tools themselves. In January, the executive branch of the European Union explained in a letter to a Spanish member of the European Parliament that the app used in Almendralejo "does not appear" to fall under the bloc's sweeping new rules for bolstering online safety because it's not a big enough platform. 
Organizations that have been tracking the growth of AI-generated child sexual abuse material will be closely following the San Francisco case. The lawsuit "has the potential to set legal precedent in this area," said Emily Slifer, the director of policy at Thorn, an organization that works to combat the sexual exploitation of children. A researcher at Stanford University said that because so many of the defendants are based outside the U.S., it will be harder to bring them to justice. Chiu "has an uphill battle with this case, but may be able to get some of the sites taken offline if the defendants running them ignore the lawsuit," said Stanford's Riana Pfefferkorn. She said that could happen if the city wins by default in their absence and obtains orders affecting domain-name registrars, web hosts and payment processors "that would effectively shutter those sites even if their owners never appear in the litigation."

[4]

San Francisco Goes After Websites That Make AI Deepfake Nudes of Women and Girls

Nearly a year after AI-generated nude images of high school girls upended a community in southern Spain, a juvenile court this summer sentenced 15 of their classmates to a year of probation. But the artificial intelligence tool used to create the harmful deepfakes is still easily accessible on the internet, promising to "undress any photo" uploaded to the website within seconds. Now a new effort to shut down the app and others like it is being pursued in California, where San Francisco this week filed a first-of-its-kind lawsuit that experts say could set a precedent but will also face many hurdles. "The proliferation of these images has exploited a shocking number of women and girls across the globe," said David Chiu, the elected city attorney of San Francisco who brought the case against a group of widely visited websites based in Estonia, Serbia, the United Kingdom and elsewhere. "These images are used to bully, humiliate and threaten women and girls," he said in an interview with The Associated Press. "And the impact on the victims has been devastating on their reputation, mental health, loss of autonomy, and in some instances, causing some to become suicidal." The lawsuit brought on behalf of the people of California alleges that the services broke numerous state laws against fraudulent business practices, nonconsensual pornography and the sexual abuse of children. But it can be hard to determine who runs the apps, which are unavailable in phone app stores but still easily found on the internet. Contacted late last year by the AP, one service claimed by email that its "CEO is based and moves throughout the USA" but declined to provide any evidence or answer other questions. The AP is not naming the specific apps being sued in order to not promote them. 
"There are a number of sites where we don't know at this moment exactly who these operators are and where they're operating from, but we have investigative tools and subpoena authority to dig into that," Chiu said. "And we will certainly utilize our powers in the course of this litigation." Many of the tools are being used to create realistic fakes that "nudify" photos of clothed adult women, including celebrities, without their consent. But they've also popped up in schools around the world, from Australia to Beverly Hills in California, typically with boys creating the images of female classmates that then circulate widely through social media. In one of the first widely publicized cases last September in Almendralejo, Spain, a physician whose daughter was among a group of girls victimized last year and helped bring it to the public's attention said she's satisfied by the severity of the sentence their classmates are facing after a court decision earlier this summer. But it is "not only the responsibility of society, of education, of parents and schools, but also the responsibility of the digital giants that profit from all this garbage," Dr. Miriam al Adib Mendiri said in an interview Friday. She applauded San Francisco's action but said more efforts are needed, including from bigger companies like California-based Meta Platforms and its subsidiary WhatsApp, which was used to circulate the images in Spain. While schools and law enforcement agencies have sought to punish those who make and share the deepfakes, authorities have struggled with what to do about the tools themselves. In January, the executive branch of the European Union explained in a letter to a Spanish member of the European Parliament that the app used in Almendralejo "does not appear" to fall under the bloc's sweeping new rules for bolstering online safety because it's not a big enough platform. 
Organizations that have been tracking the growth of AI-generated child sexual abuse material will be closely following the San Francisco case. The lawsuit "has the potential to set legal precedent in this area," said Emily Slifer, the director of policy at Thorn, an organization that works to combat the sexual exploitation of children. A researcher at Stanford University said that because so many of the defendants are based outside the U.S., it will be harder to bring them to justice. Chiu "has an uphill battle with this case, but may be able to get some of the sites taken offline if the defendants running them ignore the lawsuit," said Stanford's Riana Pfefferkorn. She said that could happen if the city wins by default in their absence and obtains orders affecting domain-name registrars, web hosts and payment processors "that would effectively shutter those sites even if their owners never appear in the litigation." Copyright 2024 The Associated Press. All rights reserved. This material may not be published, broadcast, rewritten or redistributed.

[5]

San Francisco goes after websites that make AI deepfake nudes of women and girls

Nearly a year after AI-generated nude images of high school girls upended a community in southern Spain, a juvenile court this summer sentenced 15 of their classmates to a year of probation. But the artificial intelligence tool used to create the harmful deepfakes is still easily accessible on the internet, promising to "undress any photo" uploaded to the website within seconds. Now a new effort to shut down the app and others like it is being pursued in California, where San Francisco this week filed a first-of-its-kind lawsuit that experts say could set a precedent but will also face many hurdles. "The proliferation of these images has exploited a shocking number of women and girls across the globe," said David Chiu, the elected city attorney of San Francisco who brought the case against a group of widely visited websites tied to entities in California, New Mexico, Estonia, Serbia, the United Kingdom and elsewhere. "These images are used to bully, humiliate and threaten women and girls," he said in an interview with The Associated Press. "And the impact on the victims has been devastating on their reputation, mental health, loss of autonomy, and in some instances, causing some to become suicidal." The lawsuit brought on behalf of the people of California alleges that the services broke numerous state laws against fraudulent business practices, nonconsensual pornography and the sexual abuse of children. But it can be hard to determine who runs the apps, which are unavailable in phone app stores but still easily found on the internet. Contacted late last year by the AP, one service claimed by email that its "CEO is based and moves throughout the USA" but declined to provide any evidence or answer other questions. The AP is not naming the specific apps being sued in order to not promote them.
"There are a number of sites where we don't know at this moment exactly who these operators are and where they're operating from, but we have investigative tools and subpoena authority to dig into that," Chiu said. "And we will certainly utilize our powers in the course of this litigation." Many of the tools are being used to create realistic fakes that "nudify" photos of clothed adult women, including celebrities, without their consent. But they have also popped up in schools around the world, from Australia to Beverly Hills in California, typically with boys creating the images of female classmates that then circulate through social media. In one of the first widely publicized cases last September in Almendralejo, Spain, a physician who helped bring it to the public's attention after her daughter was among the victims said she is satisfied by the severity of the sentence their classmates are facing after a court decision earlier this summer. But it is "not only the responsibility of society, of education, of parents and schools, but also the responsibility of the digital giants that profit from all this garbage," Dr. Miriam Al Adib Mendiri said in an interview Friday. She applauded San Francisco's action but said more efforts are needed, including from bigger companies like California-based Meta Platforms and its subsidiary WhatsApp, which was used to circulate the images in Spain. While schools and law enforcement agencies have sought to punish those who make and share the deepfakes, authorities have struggled with what to do about the tools themselves. In January, the executive branch of the European Union explained in a letter to a Spanish member of the European Parliament that the app used in Almendralejo "does not appear" to fall under the bloc's sweeping new rules for bolstering online safety because it is not a big enough platform. 
Organizations that have been tracking the growth of AI-generated child sexual abuse material will be closely following the San Francisco case. The lawsuit "has the potential to set legal precedent in this area," said Emily Slifer, the director of policy at Thorn, an organization that works to combat the sexual exploitation of children. A researcher at Stanford University said that because so many of the defendants are based outside the U.S., it will be harder to bring them to justice. Chiu "has an uphill battle with this case, but may be able to get some of the sites taken offline if the defendants running them ignore the lawsuit," said Stanford's Riana Pfefferkorn. She said that could happen if the city wins by default in their absence and obtains orders affecting domain-name registrars, web hosts and payment processors "that would effectively shutter those sites even if their owners never appear in the litigation."

[6]

San Francisco goes after websites that make AI deepfake nudes of women and girls

Nearly a year after AI-generated nude images of high school girls upended a community in southern Spain, a juvenile court this summer sentenced 15 of their classmates to a year of probation. But the artificial intelligence tool used to create the harmful deepfakes is still easily accessible on the internet, promising to "undress any photo" uploaded to the website within seconds. Now a new effort to shut down the app and others like it is being pursued in California, where San Francisco this week filed a first-of-its-kind lawsuit that experts say could set a precedent but will also face many hurdles. "The proliferation of these images has exploited a shocking number of women and girls across the globe," said David Chiu, the elected city attorney of San Francisco who brought the case against a group of widely visited websites tied to entities in California, New Mexico, Estonia, Serbia, the United Kingdom and elsewhere. "These images are used to bully, humiliate and threaten women and girls," he said in an interview with The Associated Press. "And the impact on the victims has been devastating on their reputation, mental health, loss of autonomy, and in some instances, causing some to become suicidal." The lawsuit brought on behalf of the people of California alleges that the services broke numerous state laws against fraudulent business practices, nonconsensual pornography and the sexual abuse of children. But it can be hard to determine who runs the apps, which are unavailable in phone app stores but still easily found on the internet. Contacted late last year by the AP, one service claimed by email that its "CEO is based and moves throughout the USA" but declined to provide any evidence or answer other questions.
The AP is not naming the specific apps being sued in order to not promote them. "There are a number of sites where we don't know at this moment exactly who these operators are and where they're operating from, but we have investigative tools and subpoena authority to dig into that," Chiu said. "And we will certainly utilize our powers in the course of this litigation." Many of the tools are being used to create realistic fakes that "nudify" photos of clothed adult women, including celebrities, without their consent. But they have also popped up in schools around the world, from Australia to Beverly Hills in California, typically with boys creating the images of female classmates that then circulate through social media. In one of the first widely publicized cases last September in Almendralejo, Spain, a physician who helped bring it to the public's attention after her daughter was among the victims said she is satisfied by the severity of the sentence their classmates are facing after a court decision earlier this summer. But it is "not only the responsibility of society, of education, of parents and schools, but also the responsibility of the digital giants that profit from all this garbage," Dr. Miriam Al Adib Mendiri said in an interview Friday. She applauded San Francisco's action but said more efforts are needed, including from bigger companies like California-based Meta Platforms and its subsidiary WhatsApp, which was used to circulate the images in Spain. While schools and law enforcement agencies have sought to punish those who make and share the deepfakes, authorities have struggled with what to do about the tools themselves. In January, the executive branch of the European Union explained in a letter to a Spanish member of the European Parliament that the app used in Almendralejo "does not appear" to fall under the bloc's sweeping new rules for bolstering online safety because it is not a big enough platform.
Organizations that have been tracking the growth of AI-generated child sexual abuse material will be closely following the San Francisco case. The lawsuit "has the potential to set legal precedent in this area," said Emily Slifer, the director of policy at Thorn, an organization that works to combat the sexual exploitation of children. A researcher at Stanford University said that because so many of the defendants are based outside the U.S., it will be harder to bring them to justice. Chiu "has an uphill battle with this case, but may be able to get some of the sites taken offline if the defendants running them ignore the lawsuit," said Stanford's Riana Pfefferkorn. She said that could happen if the city wins by default in their absence and obtains orders affecting domain-name registrars, web hosts and payment processors "that would effectively shutter those sites even if their owners never appear in the litigation."

[7]

Boys are taking images of female classmates and using AI to deepfake nude photos. A landmark lawsuit could stop it.

Nearly a year after AI-generated nude images of high school girls upended a community in southern Spain, a juvenile court this summer sentenced 15 of their classmates to a year of probation. But the artificial intelligence tool used to create the harmful deepfakes is still easily accessible on the internet, promising to "undress any photo" uploaded to the website within seconds. Now a new effort to shut down the app and others like it is being pursued in California, where San Francisco this week filed a first-of-its-kind lawsuit that experts say could set a precedent but will also face many hurdles. "The proliferation of these images has exploited a shocking number of women and girls across the globe," said David Chiu, the elected city attorney of San Francisco who brought the case against a group of widely visited websites based in Estonia, Serbia, the United Kingdom and elsewhere. "These images are used to bully, humiliate and threaten women and girls," he said in an interview with The Associated Press. "And the impact on the victims has been devastating on their reputation, mental health, loss of autonomy, and in some instances, causing some to become suicidal." The lawsuit brought on behalf of the people of California alleges that the services broke numerous state laws against fraudulent business practices, nonconsensual pornography and the sexual abuse of children. But it can be hard to determine who runs the apps, which are unavailable in phone app stores but still easily found on the internet. Contacted late last year by the AP, one service claimed by email that its "CEO is based and moves throughout the USA" but declined to provide any evidence or answer other questions. The AP is not naming the specific apps being sued in order to not promote them. 
"There are a number of sites where we don't know at this moment exactly who these operators are and where they're operating from, but we have investigative tools and subpoena authority to dig into that," Chiu said. "And we will certainly utilize our powers in the course of this litigation." Many of the tools are being used to create realistic fakes that "nudify" photos of clothed adult women, including celebrities, without their consent. But they've also popped up in schools around the world, from Australia to Beverly Hills in California, typically with boys creating the images of female classmates that then circulate widely through social media. In one of the first widely publicized cases last September in Almendralejo, Spain, a physician whose daughter was among a group of girls victimized last year and helped bring it to the public's attention said she's satisfied by the severity of the sentence their classmates are facing after a court decision earlier this summer. But it is "not only the responsibility of society, of education, of parents and schools, but also the responsibility of the digital giants that profit from all this garbage," Dr. Miriam al Adib Mendiri said in an interview Friday. She applauded San Francisco's action but said more efforts are needed, including from bigger companies like California-based Meta Platforms and its subsidiary WhatsApp, which was used to circulate the images in Spain. While schools and law enforcement agencies have sought to punish those who make and share the deepfakes, authorities have struggled with what to do about the tools themselves. In January, the executive branch of the European Union explained in a letter to a Spanish member of the European Parliament that the app used in Almendralejo "does not appear" to fall under the bloc's sweeping new rules for bolstering online safety because it's not a big enough platform. 
Organizations that have been tracking the growth of AI-generated child sexual abuse material will be closely following the San Francisco case. The lawsuit "has the potential to set legal precedent in this area," said Emily Slifer, the director of policy at Thorn, an organization that works to combat the sexual exploitation of children. A researcher at Stanford University said that because so many of the defendants are based outside the U.S., it will be harder to bring them to justice. Chiu "has an uphill battle with this case, but may be able to get some of the sites taken offline if the defendants running them ignore the lawsuit," said Stanford's Riana Pfefferkorn. She said that could happen if the city wins by default in their absence and obtains orders affecting domain-name registrars, web hosts and payment processors "that would effectively shutter those sites even if their owners never appear in the litigation."

[8]

San Francisco goes after websites that make AI deepfake nudes of women and girls

Nearly a year after AI-generated nude images of high school girls upended a community in southern Spain, a juvenile court this summer sentenced 15 of their classmates to a year of probation. But the artificial intelligence tool used to create the harmful deepfakes is still easily accessible on the internet, promising to "undress any photo" uploaded to the website within seconds. Now a new effort to shut down the app and others like it is being pursued in California, where San Francisco this week filed a first-of-its-kind lawsuit that experts say could set a precedent but will also face many hurdles. "The proliferation of these images has exploited a shocking number of women and girls across the globe," said David Chiu, the elected city attorney of San Francisco who brought the case against a group of widely visited websites based in Estonia, Serbia, the United Kingdom and elsewhere. "These images are used to bully, humiliate and threaten women and girls," he said in an interview with The Associated Press. "And the impact on the victims has been devastating on their reputation, mental health, loss of autonomy, and in some instances, causing some to become suicidal." The lawsuit brought on behalf of the people of California alleges that the services broke numerous state laws against fraudulent business practices, non-consensual pornography and the sexual abuse of children. But it can be hard to determine who runs the apps, which are unavailable in phone app stores but still easily found on the internet. Contacted late last year by the AP, one service claimed by email that its "CEO is based and moves throughout the USA" but declined to provide any evidence or answer other questions. 
The AP is not naming the specific apps being sued in order to not promote them. "There are a number of sites where we don't know at this moment exactly who these operators are and where they're operating from, but we have investigative tools and subpoena authority to dig into that," Chiu said. "And we will certainly utilise our powers in the course of this litigation." Many of the tools are being used to create realistic fakes that "nudify" photos of clothed adult women, including celebrities, without their consent. But they've also popped up in schools around the world, from Australia to Beverly Hills in California, typically with boys creating the images of female classmates that then circulate widely through social media. In one of the first widely publicised cases last September in Almendralejo, Spain, a physician whose daughter was among a group of girls victimised last year and helped bring it to the public's attention said she's satisfied by the severity of the sentence their classmates are facing after a court decision earlier this summer. But it is "not only the responsibility of society, of education, of parents and schools, but also the responsibility of the digital giants that profit from all this garbage," Dr. Miriam al Adib Mendiri said in an interview Friday. She applauded San Francisco's action but said more efforts are needed, including from bigger companies like California-based Meta Platforms and its subsidiary WhatsApp, which was used to circulate the images in Spain. While schools and law enforcement agencies have sought to punish those who make and share the deepfakes, authorities have struggled with what to do about the tools themselves. 
In January, the executive branch of the European Union explained in a letter to a Spanish member of the European Parliament that the app used in Almendralejo "does not appear" to fall under the bloc's sweeping new rules for bolstering online safety because it's not a big enough platform. Organisations that have been tracking the growth of AI-generated child sexual abuse material will be closely following the San Francisco case. The lawsuit "has the potential to set legal precedent in this area," said Emily Slifer, the director of policy at Thorn, an organization that works to combat the sexual exploitation of children. A researcher at Stanford University said that because so many of the defendants are based outside the U.S., it will be harder to bring them to justice. Chiu "has an uphill battle with this case, but may be able to get some of the sites taken offline if the defendants running them ignore the lawsuit," said Stanford's Riana Pfefferkorn. She said that could happen if the city wins by default in their absence and obtains orders affecting domain-name registrars, web hosts and payment processors "that would effectively shutter those sites even if their owners never appear in the litigation."

[9]

San Francisco Sues 16 Websites That Let People Make Deepfake Nudes

San Francisco is suing 16 websites that use AI to help users "undress" or "nudify" photographs of women and girls. The websites leverage open-source AI models to turn images of real women into deepfake nudes without their consent. According to San Francisco City Attorney David Chiu, these websites were visited more than 200 million times in the first six months of 2024. "This investigation has taken us to the darkest corners of the internet, and I am absolutely horrified for the women and girls who have had to endure this exploitation," Chiu said in a statement. The deepfakes are used to "extort, bully, threaten, and humiliate women and girls," Chiu says. And victims "have found virtually no recourse or ability to control their own image." The lawsuit alleges violations of state and federal laws prohibiting deepfake pornography, revenge pornography, and child pornography, as well as violations of California's Unfair Competition Law. Chiu wants the websites shut down and for the court to ban site owners from doing this again. He's also seeking civil penalties. The site names have been redacted in the copy of the lawsuit posted by Chiu's office. Some of their owners are identified, others are unknown. Deepfake technology has been used to create non-consensual porn of major stars like Taylor Swift but also teenagers -- from Beverly Hills to New Jersey. "We all need to do our part to crack down on bad actors using AI to exploit and abuse real people, including children," Chiu says.

[10]

AI-driven deepfake sites that 'undress' women and girls face landmark...

San Francisco officials filed a landmark lawsuit against popular deepfake websites that use artificial intelligence to "undress" images of clothed women and girls. The city attorney's office is suing 16 of the most viewed AI "undressing" sites - which were collectively visited more than 200 million times in just the first half of 2024, the suit said. The websites allow users to upload images of real, clothed people - which the AI then "undresses" and turns into fake nude images. "[I]magine wasting time taking her out on dates, when you can just use [our website] to get her nudes," one of the deepfake websites said, according to the lawsuit. The lawsuit said the deepfake nudes are made without consent and are used to intimidate, bully and extort women and girls in California and across the country. "This investigation has taken us to the darkest corners of the internet, and I am absolutely horrified for the women and girls who have had to endure this exploitation," San Francisco City Attorney David Chiu said in a statement. "This is a big, multi-faceted problem that we, as a society, need to solve as soon as possible," he said. The "undressing" sites have broken federal and state laws banning revenge pornography, deepfake pornography and child pornography, the lawsuit said. The suit claimed the defendants also broke California's unfair competition law because "the harm they cause to consumers greatly outweighs any benefits associated with those practices." The city attorney's office is seeking civil penalties and the removal of the deepfake websites, as well as measures to prevent the site owners from creating deepfake pornography in the future. The lawsuit referenced a case from February 2024, when five students were expelled from a California middle school after creating and sharing AI-generated nude images of 16 eighth-grade students. 
"I feel like I didn't have a choice in what happened to me or what happened to my body," a victim of deepfake nudes said, according to the lawsuit. Another said she and her family live in "hopelessness and perpetual fear that, at any time, such images can reappear and be viewed by countless others." The lawsuit is the first to take on deepfake nude generators head-on. As the AI industry ramps up, deepfakes - or AI-generated and manipulated images - have become more mainstream. AI-generated images can often spread misinformation like wildfire. A deepfake of Pope Francis in a white Balenciaga puffer jacket went viral in 2023 - which many believed was real until news outlets began reporting otherwise. But often, deepfakes turn sinister. Fake nude images of children landed at the top of some search results on Microsoft and Google engines, according to an NBC News report in March. Non-consensual deepfake nudes of celebrities like Taylor Swift have circulated the web. The AI-generated nude images can often lead to sextortion schemes, when a victim is forced to pay money to prevent the release of fake images. "We have to be very clear that this is not innovation - this is sexual abuse," Chiu said. "We all need to do our part to crack down on bad actors using AI to exploit and abuse real people, including children."

[11]

San Francisco aims to take down AI undressing websites in new lawsuit

City Attorney David Chiu wants to stop 16 popular sites that create unauthorized nude deepfakes of women and children. San Francisco City Attorney David Chiu announced he intended to shut down 16 of the most popular AI "undressing" sites at a press conference on Thursday. The City Attorney is reportedly accusing these sites of violating federal laws regarding revenge pornography, deepfake pornography and child pornography. Chiu's office also accused the sites of violating the state of California's unfair competition law because "the harm they cause to consumers greatly outweighs any benefits associated with those practices," according to the complaint filed in a California superior court. The complaint focuses on a total of 50 defendants Chiu intends to prosecute for operating undressing websites. Some of the defendants' and websites' names were redacted, but the complaint publicly identifies a few companies that operate "some of the world's most popular websites that offer to nudify images of women and girls," such as Sol Ecom located in Florida, Briver in New Mexico and the UK-based Itai Tech Ltd. The only individual identified as a defendant in the complaint is Augustin Gribinets of Estonia, who is accused of owning an AI undressing site featuring nonconsensual images of women and children. These websites have generated over 200 million visits in a six-month period. The nonconsensual images of women and children on these sites "are used to bully, threaten and humiliate women and girls" as they gain more visitors "and this distressing trend shows no sign of abating," according to the complaint. The city attorney cites one case in the complaint from February in which an AI undressing site generated images of 16 eighth grade students at a California middle school. The incident possibly refers to one that occurred at a Beverly Hills high school in which 16 students were circulating fake nude images of other students. 
The school district reportedly expelled five students for their involvement in disseminating the illicit images. Deepfake technology has become a major legal concern, especially at the federal level. Last month, a report on digital replicas concluded that "a new law is needed." Just a few days later, a bipartisan group of senators introduced legislation that would institute a new law protecting individuals from having their voice, face or body recreated with AI without their consent.

[12]

Popular AI "nudify" sites sued amid shocking rise in victims globally

"Nudify" sites may be fined for making it easy to "see anyone naked," suit says. San Francisco's city attorney David Chiu is suing to shut down 16 of the most popular websites and apps allowing users to "nudify" or "undress" photos of mostly women and girls who have been increasingly harassed and exploited by bad actors online. These sites, Chiu's suit claimed, are "intentionally" designed to "create fake, nude images of women and girls without their consent," boasting that any users can upload any photo to "see anyone naked" by using tech that realistically swaps the faces of real victims onto AI-generated explicit images. "In California and across the country, there has been a stark increase in the number of women and girls harassed and victimized by AI-generated" non-consensual intimate imagery (NCII) and "this distressing trend shows no sign of abating," Chiu's suit said. "Given the widespread availability and popularity" of nudify websites, "San Franciscans and Californians face the threat that they or their loved ones may be victimized in this manner," Chiu's suit warned. In a press conference, Chiu said that this "first-of-its-kind lawsuit" has been raised to defend not just Californians, but "a shocking number of women and girls across the globe" -- from celebrities like Taylor Swift to middle and high school girls. Should the city official win, each nudify site risks fines of $2,500 for each violation of California consumer protection law found. On top of media reports sounding alarms about the AI-generated harm, law enforcement has joined the call to ban so-called deepfakes. Chiu said the harmful deepfakes are often created "by exploiting open-source AI image generation models," such as earlier versions of Stable Diffusion, that can be honed or "fine-tuned" to easily "undress" photos of women and girls that are frequently yanked from social media. 
While later versions of Stable Diffusion make such "disturbing" forms of misuse much harder, San Francisco city officials noted at the press conference that fine-tunable earlier versions of Stable Diffusion are still widely available to be abused by bad actors. In the US alone, cops are currently so bogged down by reports of fake AI child sex images that it's making it hard to investigate child abuse cases offline, and these AI cases are expected to continue spiking "exponentially." The AI abuse has spread so widely that "the FBI has warned of an uptick in extortion schemes using AI generated non-consensual pornography," Chiu said at the press conference. "And the impact on victims has been devastating," harming "their reputations and their mental health," causing "loss of autonomy," and "in some instances causing individuals to become suicidal." Suing on behalf of the people of the state of California, Chiu is seeking an injunction requiring nudify site owners to cease operation of "all websites they own or operate that are capable of creating AI-generated" non-consensual intimate imagery (NCII) of identifiable individuals. It's the only way, Chiu said, to hold these sites "accountable for creating and distributing AI-generated NCII of women and girls and for aiding and abetting others in perpetrating this conduct." He also wants an order requiring "any domain-name registrars, domain-name registries, webhosts, payment processors, or companies providing user authentication and authorization services or interfaces" to "restrain" nudify site operators from launching new sites to prevent any further misconduct. Chiu's suit redacts the names of the most harmful sites his investigation uncovered but claims that in the first six months of 2024, the sites "have been visited over 200 million times." 
While victims typically have little legal recourse, Chiu believes that state and federal laws prohibiting deepfake pornography, revenge pornography, and child pornography, as well as California's unfair competition law, can be wielded to take down all 16 sites. Chiu expects that a win will serve as a warning to other nudify site operators that more takedowns are likely coming. "We are bringing this lawsuit to get these websites shut down, but we also want to sound the alarm," Chiu said at the press conference. "Generative AI has enormous promise, but as with all new technologies, there are unanticipated consequences and criminals seeking to exploit them. We must be clear that this is not innovation. This is sexual abuse."

San Francisco's city attorney has filed a lawsuit against websites creating AI-generated nude images of women and girls without consent. The case highlights growing concerns over AI technology misuse and its impact on privacy and consent.

San Francisco's Groundbreaking Lawsuit

San Francisco City Attorney David Chiu has taken a bold step in the fight against AI-generated deepfake pornography by filing a lawsuit against 16 websites accused of creating and sharing non-consensual nude images of women and girls [1]. This legal action marks the first of its kind by a government entity in the United States, targeting the misuse of artificial intelligence technology in creating sexually explicit content without consent [2].

The Defendants and Their Operations

The lawsuit targets 16 websites, their owners, and associated companies as defendants. These sites allegedly use AI tools to "undress" photos of women, charging users a fee for this service [3]. The city attorney's office reports that hundreds of thousands of women have fallen victim to this practice, with their images being manipulated without their knowledge or consent.

Impact on Victims and Society

The creation and distribution of these non-consensual deepfake images have far-reaching consequences. Victims, including minors, suffer from severe emotional distress, reputational damage, and privacy violations [4]. The lawsuit emphasizes that this practice disproportionately affects women and girls, perpetuating harmful gender-based exploitation and violence.

Legal Grounds and Potential Consequences

San Francisco's lawsuit alleges multiple violations, including unlawful business practices, violation of privacy rights, and failure to verify the age and consent of individuals depicted in the images [5]. The city seeks to shut down these websites, obtain monetary penalties, and establish a precedent for holding such operations accountable.

Broader Implications for AI Regulation

This case highlights the urgent need for comprehensive regulations governing AI technology use, especially in creating and manipulating visual content. As AI capabilities advance rapidly, lawmakers and tech companies face increasing pressure to develop ethical guidelines and legal frameworks to protect individuals from exploitation and abuse.

Public Response and Support

The lawsuit has garnered support from various quarters, including victims' rights advocates and tech ethicists. Many see this as a crucial step in addressing the darker implications of AI technology and its potential for misuse in violating personal privacy and dignity.

Challenges in Enforcement

While the lawsuit represents a significant move, enforcing any resulting judgments may prove challenging. The global nature of the internet and the potential for these operations to relocate or rebrand pose ongoing difficulties for regulators and law enforcement agencies in combating such practices effectively.

Summarized by Navi
