SK hynix Leads the Charge in Next-Gen AI Memory with World's First 12-Layer HBM4 Samples

5 Sources

[1]

Micron, Samsung, and SK Hynix preview new HBM4 memory for AI acceleration

Recap: The AI accelerator race is driving rapid innovation in high-bandwidth memory technologies. At this year's GTC event, memory giants Samsung, SK Hynix, and Micron previewed their next-generation HBM4 and HBM4e solutions. While data center GPUs are transitioning to HBM3e, the memory roadmaps revealed at Nvidia GTC make it clear that HBM4 will be the next big step. ComputerBase attended the event and noted that the new standard enables serious density and bandwidth improvements over HBM3.

SK Hynix showcased its first 48GB HBM4 stack, composed of 16 layers of 3GB chips running at 8Gbps. Samsung and Micron had similar 16-high HBM4 demos, with Samsung claiming that speeds will ultimately reach 9.2Gbps within this generation. We should expect 12-high 36GB stacks to become more mainstream for HBM4 products launching in 2026. Micron says that its HBM4 solution will boost performance by over 50 percent compared to HBM3e.

However, memory makers are already looking beyond HBM4 to HBM4e and staggering capacity points. Samsung's roadmap calls for 32Gb-per-layer DRAM, enabling 48GB and even 64GB per stack with data rates between 9.2 and 10Gbps. SK Hynix hinted at stacks of 20 or more layers, allowing for up to 64GB capacities using its 3GB chips on HBM4e.

These high densities are critical for Nvidia's forthcoming Rubin GPUs aimed at AI training. Nvidia revealed that Rubin Ultra will use 16 stacks of HBM4e for a colossal 1TB of memory per GPU when it arrives in 2027. Nvidia claims that with four chiplets per package and 4.6PB/s of bandwidth, Rubin Ultra will enable a combined 365TB of memory in the NVL576 system. While these numbers are impressive, they come with an ultra-premium price tag. VideoCardz notes that consumer graphics cards seem unlikely to adopt HBM variants anytime soon. The HBM4 and HBM4e generations represent a critical bridge for continued AI performance scaling.
If memory makers can deliver on their aggressive density and bandwidth roadmaps over the next few years, it will massively boost data-hungry AI workloads. Nvidia and others are counting on it.
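The capacity figures in this recap follow from simple multiplication: layers per stack times capacity per DRAM die, and stacks per GPU times capacity per stack. The sketch below reproduces them in Python using only the numbers quoted above. The layer counts behind Samsung's 48GB and 64GB HBM4e stacks are inferred by dividing by 4GB per 32Gb die, and the assumption that Rubin Ultra's 1TB comes from 16 stacks of 64GB each is ours, since the coverage gives only the stack count and the total.

```python
# Per-stack HBM capacity = stacked DRAM dies x capacity per die.
# Numbers are the ones quoted in the recap; this is illustrative arithmetic,
# not a vendor specification.

GBIT_PER_GBYTE = 8  # 1 gigabyte = 8 gigabits


def die_gb(die_gbit: float) -> float:
    """Convert a DRAM die density from gigabits to gigabytes."""
    return die_gbit / GBIT_PER_GBYTE


def stack_gb(layers: int, per_die_gb: float) -> float:
    """Capacity of one HBM stack built from `layers` identical dies."""
    return layers * per_die_gb


# HBM4 with 3GB (24Gb) dies, as shown by SK Hynix
hbm4_12hi = stack_gb(12, die_gb(24))   # 12-high -> 36GB
hbm4_16hi = stack_gb(16, die_gb(24))   # 16-high -> 48GB

# HBM4e with 32Gb (4GB) dies, per Samsung's roadmap (layer counts inferred)
hbm4e_12hi = stack_gb(12, die_gb(32))  # 12-high -> 48GB
hbm4e_16hi = stack_gb(16, die_gb(32))  # 16-high -> 64GB

# Rubin Ultra: 16 HBM4e stacks per GPU (ASSUMPTION: 64GB stacks)
rubin_ultra_gb = 16 * hbm4e_16hi       # 1024GB, i.e. about 1TB

for name, gb in [("HBM4 12-high", hbm4_12hi),
                 ("HBM4 16-high", hbm4_16hi),
                 ("HBM4e 12-high", hbm4e_12hi),
                 ("HBM4e 16-high", hbm4e_16hi),
                 ("Rubin Ultra (16 stacks)", rubin_ultra_gb)]:
    print(f"{name}: {gb:g} GB")
```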

[2]

SK hynix ships world's first 12-layer HBM4 samples to customers, ready for NVIDIA Rubin AI GPUs

TL;DR: SK hynix has begun sampling its 12-Hi HBM4 memory modules, featuring 36GB capacity and 2TB/sec bandwidth, to NVIDIA for its Rubin AI GPUs. This marks a 60% performance increase over HBM3E.

SK hynix has started sampling its next-gen 12-Hi HBM4 memory modules, the world's first HBM4, to customers, namely NVIDIA, which will be using HBM4 on its new Rubin AI GPUs. During NVIDIA's recent GTC 2025 event, AI memory partner SK hynix announced it was unveiling its new 12-Hi HBM4, 12-Hi HBM3E (for NVIDIA's new GB300 AI GPU), and new SOCAMM memory modules. SK hynix's new HBM4 memory uses its Advanced MR-MUF process, pushing capacity up to 36GB, the highest among 12-Hi HBM products. NVIDIA's new Rubin and Rubin Ultra AI GPU platforms will use HBM4 memory, whose high speeds deliver up to 2TB/sec for the first time, a 60% performance jump over HBM3E.

SK hynix said: "The samples were delivered ahead of schedule based on SK hynix's technological edge and production experience that have led the HBM market, and the company is to start the certification process for the customers. SK hynix aims to complete preparations for mass production of 12-layer HBM4 products within the second half of the year, strengthening its position in the next-generation AI memory market".

"The 12-layer HBM4 provided as samples this time feature the industry's best capacity and speed which are essential for AI memory products. The product has implemented bandwidth capable of processing more than 2TB (terabytes) of data per second for the first time. This translates to processing data equivalent to more than 400 full-HD movies (5GB each) in a second, which is more than 60 percent faster than the previous generation, HBM3E."

"SK hynix also adopted the Advanced MR-MUF process to achieve the capacity of 36GB, which is the highest among 12-layer HBM products. The process, of which competitiveness has been proved through a successful production of the previous generation, helps prevent chip warpage, while maximizing product stability by improving heat dissipation. Following its achievement as the industry's first provider to mass produce HBM3 in 2022, and 8- and 12-high HBM3E in 2024, SK hynix has been leading the AI memory market by developing and supplying HBM products in a timely manner".

Justin Kim, President & Head of AI Infra at SK hynix said: "We have enhanced our position as a front-runner in the AI ecosystem following years of consistent efforts to overcome technological challenges in accordance with customer demands. We are now ready to smoothly proceed with the performance certification and preparatory works for mass production, taking advantage of the experience we have built as the industry's largest HBM provider".
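The "400 full-HD movies per second" comparison and the "more than 60 percent faster" claim can both be checked with back-of-the-envelope arithmetic. The sketch below assumes decimal units (1TB = 1,000GB, as is typical for bandwidth figures) and takes the quoted lower bounds at face value, so the HBM3E figure it prints is an implied value derived from SK hynix's statement, not a measured specification.

```python
# Back-of-the-envelope check of SK hynix's HBM4 bandwidth comparisons.
# ASSUMPTION: decimal units (1TB = 1000GB); quoted lower bounds used as-is.

HBM4_BANDWIDTH_TB_S = 2.0   # "more than 2TB (terabytes) of data per second"
MOVIE_SIZE_GB = 5.0         # "full-HD movies (5GB each)"
SPEEDUP_OVER_HBM3E = 1.60   # "more than 60 percent faster" than HBM3E

# Movies transferred per second = bandwidth in GB/s / movie size in GB
movies_per_second = HBM4_BANDWIDTH_TB_S * 1000 / MOVIE_SIZE_GB

# HBM3E bandwidth implied if HBM4 is exactly 1.6x faster
implied_hbm3e_tb_s = HBM4_BANDWIDTH_TB_S / SPEEDUP_OVER_HBM3E

print(f"Movies per second at {HBM4_BANDWIDTH_TB_S} TB/s: {movies_per_second:.0f}")
print(f"Implied HBM3E bandwidth: about {implied_hbm3e_tb_s:.2f} TB/s")
```

Read together, the two claims place the HBM3E baseline at roughly 1.25TB/s per stack; because both inputs are stated as lower bounds, that figure is approximate.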

[3]

SK Hynix Starts Sampling World's First 12-Layer HBM4 Samples With Up To 36 GB Capacity & 2 TB/s Datarate, 12-Hi HBM3e & SOCAMM Showcased Too

SK Hynix has just announced its next-gen 12-Hi HBM3e and SOCAMM memory alongside sampling of the world's first 12-Hi HBM4.

SK Hynix Begins Sampling Next-Gen 12-High HBM4 Memory Alongside HBM3E Memory That Powers NVIDIA's Latest GB300 AI Chip, SOCAMM Is Also Ready

SK Hynix has been moving forward rapidly with its innovative memory products for the industry's leading computing hardware, such as high-performance data center GPUs. It announced that it would unveil its leading 12-high HBM3E and SOCAMM memories at the GTC 2025 event, held from 17th to 21st March in San Jose, California. The company, a fierce competitor to Samsung and Micron, has manufactured the latest SOCAMM (Small Outline Compression Attached Memory Module) for NVIDIA's powerful AI chips. SOCAMM is based on the popular CAMM memory form factor but uses low-power DRAM. SK Hynix's SOCAMM memory will substantially increase memory capacity, boosting performance in AI workloads while remaining power-efficient.

Apart from SOCAMM, SK Hynix will also showcase the 12-High HBM3E memory it supplied to NVIDIA for the latest Blackwell GB300 GPUs. SK Hynix struck an exclusive deal with NVIDIA for the GB300 AI chip and already has a strong lead over its competitors. SK Hynix had already mass-produced 12H HBM3E in September last year, while Samsung will need several more months to catch up. SK Hynix's top executives, including CEO Kwak Noh-Jung, President & Head of AI Infra (CMO) Juseon Kim, and Head of Global S&M Lee Sangrak, will showcase the products at the GTC event.

The samples were delivered ahead of schedule based on SK hynix's technological edge and production experience that have led the HBM market, and the company is to start the certification process for the customers. SK hynix aims to complete preparations for mass production of 12-layer HBM4 products within the second half of the year, strengthening its position in the next-generation AI memory market. The 12-layer HBM4 provided as samples this time feature the industry's best capacity and speed which are essential for AI memory products. The product has implemented bandwidth capable of processing more than 2TB (terabytes) of data per second for the first time. This translates to processing data equivalent to more than 400 full-HD movies (5GB each) in a second, which is more than 60 percent faster than the previous generation, HBM3E. SK hynix also adopted the Advanced MR-MUF process to achieve the capacity of 36GB, which is the highest among 12-layer HBM products. The process, of which competitiveness has been proved through a successful production of the previous generation, helps prevent chip warpage, while maximizing product stability by improving heat dissipation.

via SK Hynix

Finally, the company will also showcase its leading 12-high HBM4 memory, which is currently under development and is being sampled to leading customers, including NVIDIA, which will use it on the Rubin series of GPUs. The 12-Hi HBM4 memory brings up to 36 GB capacity per stack and bandwidth of up to 2 TB/s. The company is set to mass-produce 12-Hi HBM4 memory in the second half of 2025 and will utilize TSMC's 3nm process node.
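Per-stack bandwidth is the product of interface width and per-pin data rate, which is how the 8Gbps and 9.2Gbps pin speeds mentioned in the first source relate to the roughly 2TB/s quoted here. The sketch below assumes a 2048-bit interface per stack, the width generally reported for HBM4; none of these articles states it, so treat that parameter as an assumption. It computes theoretical peak values only and ignores protocol overhead.

```python
# Peak per-stack bandwidth = interface width (bits) x per-pin rate (Gb/s) / 8.
# ASSUMPTION: a 2048-bit interface per HBM4/HBM4e stack; the coverage above
# quotes only per-pin rates and the resulting ~2TB/s figure.

INTERFACE_WIDTH_BITS = 2048  # assumed, not stated in these articles


def stack_bandwidth_tb_s(pin_rate_gbps: float,
                         width_bits: int = INTERFACE_WIDTH_BITS) -> float:
    """Theoretical peak bandwidth of one stack in TB/s (decimal units)."""
    total_gbit_s = width_bits * pin_rate_gbps  # aggregate Gb/s over all pins
    return total_gbit_s / 8 / 1000             # Gb/s -> GB/s -> TB/s


# Pin rates quoted for HBM4 demos (8.0, 9.2Gbps) and the HBM4e roadmap (10Gbps)
for label, rate in [("HBM4 at 8.0Gbps", 8.0),
                    ("HBM4 at 9.2Gbps", 9.2),
                    ("HBM4e at 10.0Gbps", 10.0)]:
    print(f"{label}: {stack_bandwidth_tb_s(rate):.2f} TB/s")
```

Under this assumption, the 8Gbps pin speed SK Hynix showed for its 16-layer stack works out to about 2.05TB/s, in line with the "more than 2TB/s" quoted for the samples.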

[4]

SK hynix ships HBM4 samples for clients for 1st time in world

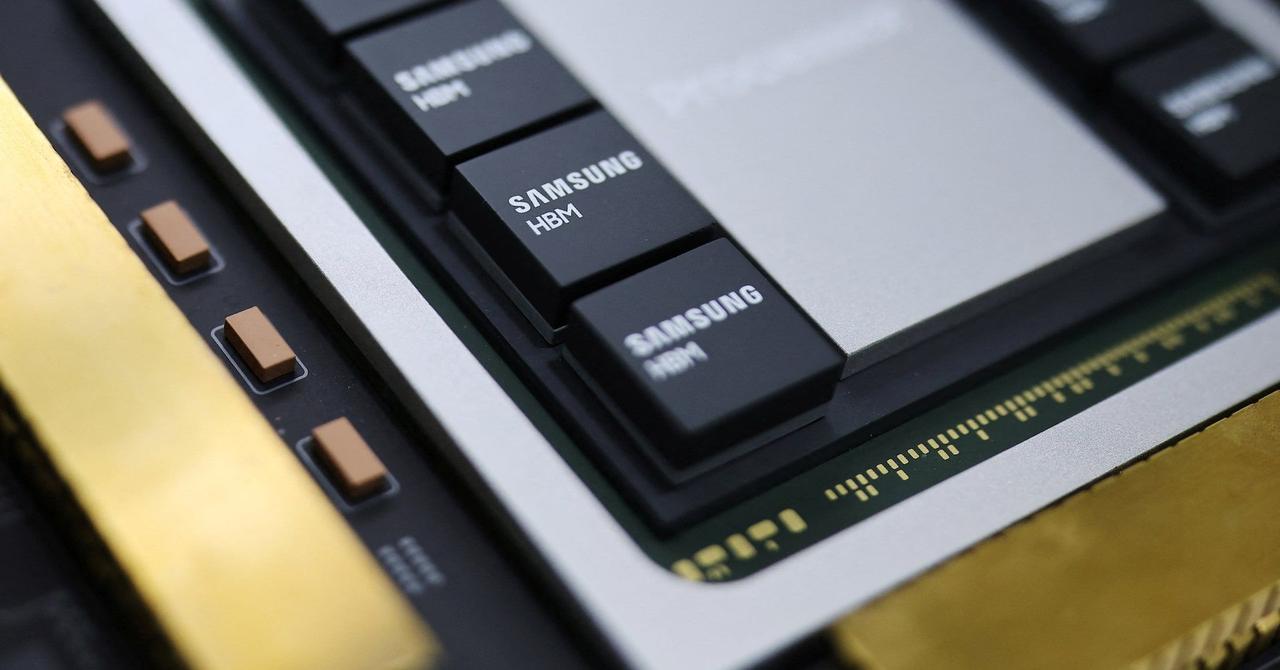

SK hynix's 12-layer high-bandwidth memory 4 sample chips / Courtesy of SK hynix

By Nam Hyun-woo

SK hynix said Wednesday it shipped samples of its next-generation high-bandwidth memory 4 (HBM4) chip to clients for the first time in the world, cementing its position as the leading supplier of this memory chip specialized for artificial intelligence (AI). According to the chipmaker, it recently provided samples of its 12-layer HBM4 to major clients for qualification tests. HBM4 is the sixth generation of HBM, which plays a critical role in accelerating processing for graphics processing units (GPUs) and other AI accelerator chips.

The company said it shipped HBM4 samples earlier than initially planned and will soon begin the qualification process with its clients, aiming to complete preparations for mass production within the second half of this year. The company was initially set to begin mass-producing HBM4 in 2026 but apparently expedited the plan due to clients' demands. In November, SK Group Chairman Chey Tae-won said that Nvidia CEO Jensen Huang had asked to move up the supply timeline for HBM4 by six months.

SK hynix said the sample chip delivers world-class speeds essential for AI memory and achieves a bandwidth capable of processing more than 2 terabytes of data per second, equivalent to processing over 400 full-HD movies in just one second. This is a speed increase of more than 60 percent compared to the previous fifth-generation HBM3e chips. The sample also offers the highest capacity among comparable 12-layer memory products, based on SK hynix's advanced mass reflow-molded underfill process. The company said this process effectively controls chip warping and improves heat dissipation, enhancing product stability. By providing HBM4 samples to clients, SK hynix is expected to consolidate its leadership in the global HBM market.
SK Group Chairman Chey Tae-won, right, listens to Nvidia CEO Jensen Huang at Nvidia's headquarters in Silicon Valley in this photo uploaded on Chey's Instagram page, April 24. Captured from Chey's Instagram page

SK hynix became the first in the chip industry to mass-produce fourth-generation HBM3 in 2022 and continued with 8-layer and 12-layer HBM3e in 2024. Buoyed by this timely supply, SK hynix is now the main HBM supplier for Nvidia GPUs and the most profitable memory chipmaker in Korea, surpassing rival Samsung Electronics in profit last year.

"We have been pushing technological boundaries to meet customer demands and established ourselves as a leader in the AI ecosystem," SK hynix President of AI Infra Kim Ju-seon said. "Leveraging our extensive experience as the industry's largest HBM supplier, we will ensure smooth progress in qualification and preparations for mass production."
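Because SK hynix quotes bandwidth per stack, two derived figures are easy to estimate from the 36GB and 2TB/s sample specifications: how long one full pass over a stack takes at peak rate, and the aggregate bandwidth of a processor carrying several stacks. In the sketch below the eight-stack count is a hypothetical value chosen for illustration, not the configuration of any announced GPU.

```python
# Derived figures from a per-stack capacity and bandwidth.
# Peak values only: refresh, protocol overhead and access patterns are ignored.

STACK_CAPACITY_GB = 36.0    # 12-layer HBM4 sample
STACK_BANDWIDTH_TB_S = 2.0  # "more than 2 terabytes of data per second"
HYPOTHETICAL_STACKS = 8     # illustrative only, not a product spec


def full_pass_ms(capacity_gb: float, bandwidth_tb_s: float) -> float:
    """Milliseconds to stream the whole stack once at peak bandwidth.

    GB divided by TB/s yields thousandths of a second, i.e. milliseconds.
    """
    return capacity_gb / bandwidth_tb_s


def aggregate_tb_s(stacks: int, per_stack_tb_s: float) -> float:
    """Peak bandwidth of a package carrying `stacks` identical stacks."""
    return stacks * per_stack_tb_s


print(f"One pass over a {STACK_CAPACITY_GB:g}GB stack: "
      f"{full_pass_ms(STACK_CAPACITY_GB, STACK_BANDWIDTH_TB_S):g} ms")
print(f"{HYPOTHETICAL_STACKS} stacks: "
      f"{aggregate_tb_s(HYPOTHETICAL_STACKS, STACK_BANDWIDTH_TB_S):g} TB/s peak")
```

At peak rate a single 36GB stack can be read end to end in about 18 milliseconds, which illustrates why capacity and bandwidth are usually scaled together in HBM roadmaps.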

[5]

SK hynix Ships World's First 12-Layer HBM4 Samples to Customers By Investing.com

SEOUL, South Korea, March 18, 2025 /PRNewswire/ -- SK hynix Inc. (or "the company", www.skhynix.com) announced today that it has shipped the samples of 12-layer HBM4, a new ultra-high performance DRAM for AI, to major customers for the first time in the world. The samples were delivered ahead of schedule based on SK hynix's technological edge and production experience that have led the HBM market, and the company is to start the certification process for the customers. SK hynix aims to complete preparations for mass production of 12-layer HBM4 products within the second half of the year, strengthening its position in the next-generation AI memory market.

The 12-layer HBM4 provided as samples this time feature the industry's best capacity and speed which are essential for AI memory products. The product has implemented bandwidth capable of processing more than 2TB (terabytes) of data per second for the first time. This translates to processing data equivalent to more than 400 full-HD movies (5GB each) in a second, which is more than 60 percent faster than the previous generation, HBM3E.

Bandwidth: In HBM products, bandwidth refers to the total data capacity that one HBM package can process per second.

SK hynix also adopted the Advanced MR-MUF process to achieve the capacity of 36GB, which is the highest among 12-layer HBM products. The process, of which competitiveness has been proved through a successful production of the previous generation, helps prevent chip warpage, while maximizing product stability by improving heat dissipation. Following its achievement as the industry's first provider to mass produce HBM3 in 2022, and 8- and 12-high HBM3E in 2024, SK hynix has been leading the AI memory market by developing and supplying HBM products in a timely manner.

"We have enhanced our position as a front-runner in the AI ecosystem following years of consistent efforts to overcome technological challenges in accordance with customer demands," said Justin Kim, President & Head of AI Infra at SK hynix. "We are now ready to smoothly proceed with the performance certification and preparatory works for mass production, taking advantage of the experience we have built as the industry's largest HBM provider."

About SK hynix Inc.

SK hynix Inc., headquartered in Korea, is the world's top tier semiconductor supplier offering Dynamic Random Access Memory chips ("DRAM") and flash memory chips ("NAND flash") for a wide range of distinguished customers globally. The Company's shares are traded on the Korea Exchange, and the Global Depository shares are listed on the Luxembourg Stock Exchange. Further information about SK hynix is available at www.skhynix.com, news.skhynix.com.

SK hynix has begun sampling its 12-layer HBM4 memory, which offers 36GB per stack and more than 2TB/s of bandwidth for AI acceleration. This development marks a significant leap in memory technology for AI applications.

SK hynix Unveils Groundbreaking 12-Layer HBM4 Memory

In a significant leap forward for AI memory technology, SK hynix has announced that it has shipped samples of the world's first 12-layer High Bandwidth Memory 4 (HBM4) to major customers [1]. This development marks a crucial milestone in the ongoing race to enhance AI acceleration capabilities, with SK hynix solidifying its position as a leader in the AI memory market.

Unprecedented Performance and Capacity

The new 12-layer HBM4 samples boast impressive specifications that set them apart in the industry:

- Bandwidth: Capable of processing over 2 terabytes (TB) of data per second, equivalent to processing more than 400 full-HD movies in just one second [2].

- Speed Improvement: More than 60% faster than the previous generation HBM3E [3].

- Capacity: Achieves an industry-leading 36GB capacity, the highest among 12-layer HBM products [4].

Advanced Manufacturing Process

SK hynix has implemented its Advanced MR-MUF (Mass Reflow-Molded Underfill) process in the production of HBM4. This innovative technique:

- Prevents chip warpage

- Maximizes product stability

- Improves heat dissipation [2]

Accelerated Timeline and Market Impact

The company has expedited its HBM4 development and production schedule:

- Samples delivered ahead of the initial plan

- Mass production preparations targeted for the second half of 2025

- Mass production was originally set for 2026, but the timeline was moved up by approximately six months [1]

Industry Collaboration and Future Prospects

SK hynix's HBM4 development is closely tied to the needs of major players in the AI industry:

- NVIDIA is expected to use HBM4 in its upcoming Rubin series of GPUs [3].

- The company aims to strengthen its position in the next-generation AI memory market [2].

Broader Industry Trends

The development of HBM4 is part of a larger trend in the memory industry:

- Other major players like Samsung and Micron are also working on HBM4 solutions [5].

- Future iterations like HBM4e are already being planned, with potential for even higher capacities and speeds [5].

As AI workloads continue to demand more from memory systems, the race to develop faster and higher-capacity solutions like HBM4 is likely to intensify, driving innovation in the semiconductor industry and enabling more powerful AI applications in the coming years.

Summarized by Navi
