Tech giants race to embed AI in schools as experts warn of risks to student development

2 Sources

[1]

Tech Giants Pushing AI Into Schools Is a Huge, Ethically Bankrupt Experiment on Innocent Children That Will Likely End in Disaster

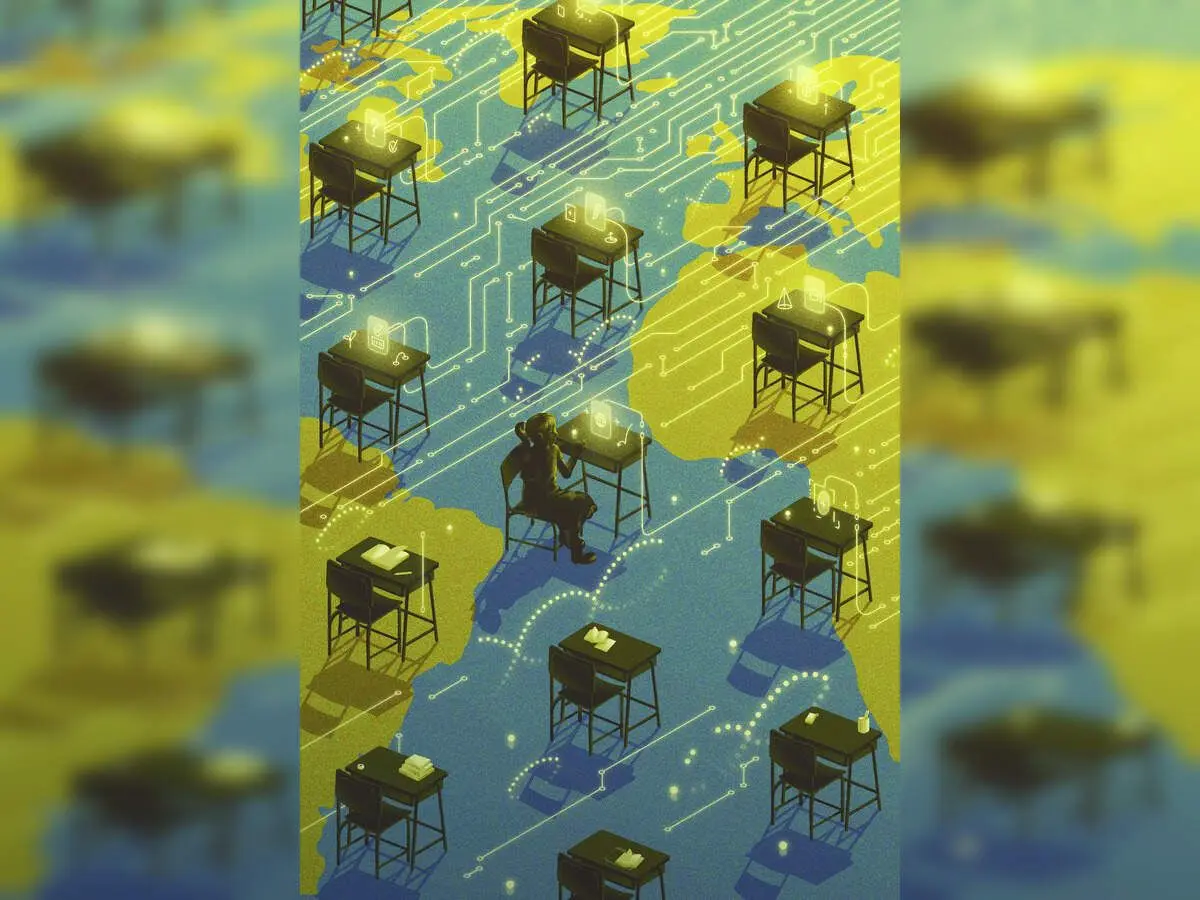

The tech industry is making sure kids will be hooked on AI for generations -- by proactively sinking its tendrils deep into the education system, long before we understand the effects of the tech on young minds. Top leaders in the space, from Microsoft to OpenAI, are pouring millions of dollars into schools, colleges, and universities, often providing students with access to their AI products. The justification, touted in a fresh New York Times piece both by tech companies and by the educators receiving the funding, is that the tools will accelerate learning and prepare students for a world driven by AI. But the reality outside of this hype is a lot murkier and darker. Some research suggests that AI actually inhibits learning, with one notable study conducted by researchers from Microsoft and Carnegie Mellon finding that it atrophies critical thinking skills. Even more urgently, the safety of AI chatbots is looking more dubious by the day, as significant media and clinical attention is being paid to the phenomenon of so-called AI psychosis, in which users -- many of them teens and young adults -- are driven into delusional mental spirals through their interactions with human-sounding AIs. Some of these spirals have even led to suicide and murder. New tech always causes friction in the realm of education, and teachers once clutched their pearls about the calculator, too. But never before has a tool so thoroughly outsourced the act of cognition -- never mind acted as a personal assistant, friend, or lover. Worst of all is that AI companies are rapidly making inroads into education before the dust can settle on any of these urgent questions. In the US, Miami-Dade County Public Schools, the third-largest school system in the country, deployed a version of Google's Gemini chatbot for its more than 100,000 high school students, the NYT noted. 
On the other side of the learning dynamic, Microsoft, OpenAI, and Anthropic have poured more than $23 million into one of the largest teachers' unions in the nation to provide members with training to use their AI products. Abroad, Elon Musk's AI company, xAI, announced last month what it called the "world's first nationwide AI-powered education program" to deploy its chatbot Grok to more than 5,000 public schools in El Salvador. Last June, Microsoft partnered with the Ministry of Education in Thailand to provide free online lessons on using AI to hundreds of thousands of students, per the NYT, before later announcing free AI training for nearly as many teachers. Some experts fear that we're making the same mistake as with the global push to expand access to computers, known as One Laptop per Child, which did not improve students' scores or their cognitive abilities, according to studies cited by the Times. "With One Laptop per Child, the fallouts included wasted expenditure and poor learning outcomes," Steven Vosloo, a digital policy specialist at UNICEF, wrote in a recent post spotted by the NYT. "Unguided use of AI systems may actively de-skill students and teachers." There's an argument to be made that exposing children in a controlled environment like a school might better prepare them for their inevitable encounters with AI chatbots, providing them with the nous to safely and effectively use them. Yet top AI companies with billions in the bank have shown they're, so far, unable to keep a tight leash on their tools and ensure they're consistently safe. (OpenAI recently admitted that its own data showed that perhaps half a million ChatGPT users every week were having conversations that showed signs of psychosis, a revelation that hasn't deterred it from letting its large language models power children's toys.) 
We're still only beginning to grapple with the consequences that another digital innovation, social media, has had on children and teens -- and now the tech industry wants to rush into the next digital experiment before we even know whether it's safe. The truth, clearly, is that AI companies have no idea if their products are safe or helpful for students. But in the rush to carve out market share in a competitive space, they aren't wasting any time finding out.

[2]

Tech giants are racing to embed AI in schools around the globe - The Economic Times

Governments worldwide are introducing generative AI in schools, partnering with US tech firms like Microsoft, OpenAI and xAI. Advocates cite efficiency and personalization, while researchers and groups such as UNICEF warn of misinformation, cheating and weakened critical thinking, prompting cautious pilots and AI literacy programs. In early November, Microsoft said it would supply artificial intelligence tools and training to more than 200,000 students and educators in the United Arab Emirates. Days later, a financial services company in Kazakhstan announced an agreement with OpenAI to provide ChatGPT Edu, a service for schools and universities, for 165,000 educators in Kazakhstan. Last month, xAI, Elon Musk's artificial intelligence company, announced an even bigger project with El Salvador: developing an AI tutoring system, using the company's Grok chatbot, for more than 1 million students in thousands of schools there. Fueled partly by American tech companies, governments around the globe are racing to deploy generative AI systems and training in schools and universities. Some U.S. tech leaders say AI chatbots -- which can generate humanlike emails, create class quizzes, analyze data and produce computer code -- can be a boon for learning. The tools, they argue, can save teachers time, customize student learning and help prepare young people for an "AI-driven" economy. But the rapid spread of the new AI products could also pose risks to young people's development and well-being, some children's and health groups warn. A recent study from Microsoft and Carnegie Mellon University found that popular AI chatbots may diminish critical thinking. AI bots can produce authoritative-sounding errors and misinformation, and some teachers are grappling with widespread AI-assisted student cheating. Silicon Valley for years has pushed tech tools like laptops and learning apps into classrooms, with promises of improving education access and revolutionizing learning. 
Still, a global effort to expand school computer access -- a program known as "One Laptop per Child" -- did not improve students' cognitive skills or academic outcomes, according to studies by professors and economists of hundreds of schools in Peru. Now, as some tech boosters make similar education access and fairness arguments for AI, children's agencies like UNICEF are urging caution and calling for more guidance for schools. "With One Laptop per Child, the fallouts included wasted expenditure and poor learning outcomes," Steven Vosloo, a digital policy specialist at UNICEF, wrote in a recent post. "Unguided use of AI systems may actively de-skill students and teachers." Education systems across the globe are increasingly working with tech companies on AI tools and training programs. In the United States, where states and school districts typically decide what to teach, some prominent school systems recently introduced popular chatbots for teaching and learning. In Florida alone, Miami-Dade County Public Schools, the nation's third-largest school system, rolled out Google's Gemini chatbot for more than 100,000 high school students. And Broward County Public Schools, the nation's sixth-biggest school district, introduced Microsoft's Copilot chatbot for thousands of teachers and staff members. Outside the United States, Microsoft in June announced a partnership with the Ministry of Education in Thailand to provide free online AI skills lessons for hundreds of thousands of students. Several months later, Microsoft said it would also provide AI training for 150,000 teachers in Thailand. OpenAI has pledged to make ChatGPT available to teachers in government schools across India. The Baltic nation of Estonia is trying a different approach, with a broad new national AI education initiative called "AI Leap." 
The program was prompted partly by a recent poll showing that more than 90% of the nation's high schoolers were already using popular chatbots like ChatGPT for schoolwork, leading to worries that some students were beginning to delegate school assignments to AI. Estonia then pressed U.S. tech giants to adapt their AI to local educational needs and priorities. Researchers at the University of Tartu worked with OpenAI to modify the company's Estonian-language service for schools so it would respond to students' queries with questions rather than produce direct answers. Introduced this school year, the "AI Leap" program aims to teach educators and students about the uses, limits, biases and risks of AI tools. In its pilot phase, teachers in Estonia received training on OpenAI's ChatGPT and Google's Gemini chatbots. "It's critical AI literacy," said Ivo Visak, the chief executive of the AI Leap Foundation, an Estonian nonprofit that is helping to manage the national education program. "It's having a very clear understanding that these tools can be useful -- but at the same time these tools can do a lot of harm." Estonia also recently held a national training day for students in some high schools. Some of those students are now using the bots for tasks like generating questions to help them prepare for school tests, Visak said. "If these companies would put their effort not only in pushing AI products, but also doing the products together with the educational systems of the world, then some of these products could be really useful," Visak added. This school year, Iceland started its own national AI pilot in schools. Now several hundred teachers across the country are experimenting with Google's Gemini chatbot or Anthropic's Claude for tasks like lesson planning, as they aim to find helpful uses and to pinpoint drawbacks. Researchers at the University of Iceland will then study how educators used the chatbots. 
Students won't use the chatbots for now, partly out of concern that relying on classroom bots could diminish important elements of teaching and learning. "If you are using less of your brain power or critical thinking -- or whatever makes us more human -- it is definitely not what we want," said Thordis Sigurdardottir, the director of Iceland's Directorate of Education and School Services. Tinna Arnardottir and Frida Gylfadottir, two teachers participating in the pilot at a high school outside Reykjavik, say the AI tools have helped them create engaging lessons more quickly. Arnardottir, a business and entrepreneurship teacher, recently used Claude to make a career exploration game to help her students figure out whether they were more suited to jobs in sales, marketing or management. Gylfadottir, who teaches English, said she had uploaded some vocabulary lists and then used the chatbot to help create exercises for her students. "I have fill-in-the-blank word games, matching word games and speed challenge games," Gylfadottir said. "So before they take the exam, I feel like they're better prepared." Gylfadottir added that she was concerned about chatbots producing misinformation, so she vetted the AI-created games and lessons for accuracy before asking her students to try them. Gylfadottir and Arnardottir said they also worried that some students might already be growing dependent on -- or overly trusting of -- AI tools outside school. That has made the Icelandic teachers all the more determined, they said, to help students learn to critically assess and use chatbots. "They are trusting AI blindly," Arnardottir said. "They are maybe losing motivation to do the hard work of learning, but we have to teach them how to learn with AI."

Microsoft, OpenAI, Google, and xAI are deploying AI tools in schools around the world, from Google's Gemini chatbot for more than 100,000 Miami-Dade high school students to xAI's nationwide AI tutoring system in El Salvador. While companies promise enhanced learning, a study from Microsoft and Carnegie Mellon University suggests AI chatbots may diminish critical thinking skills. UNICEF and educators urge caution, comparing the rush to the failed One Laptop per Child initiative.

Tech Giants Deploy AI Tools in Schools Worldwide

Tech giants are moving aggressively to embed AI in schools across the globe, partnering with governments and educational institutions to deploy their AI tools and training programs. Microsoft announced plans to supply AI tools and training to more than 200,000 students and educators in the United Arab Emirates, while OpenAI secured an agreement to provide ChatGPT Edu for 165,000 educators in Kazakhstan [2]. The most ambitious project comes from xAI, Elon Musk's AI company, which announced what it calls the "world's first nationwide AI-powered education program" to deploy its Grok chatbot as an AI tutoring system for more than 1 million students across more than 5,000 public schools in El Salvador [1][2].

In the United States, Miami-Dade County Public Schools, the nation's third-largest school system, rolled out Google's Gemini chatbot for more than 100,000 high school students [1][2]. Microsoft, OpenAI, and Anthropic have poured more than $23 million into one of the largest teachers' unions in the nation to provide members with training on using their AI products [1]. Companies justify pushing AI into schools by arguing that AI chatbots can save teachers time, enable personalized learning, and prepare students for an AI-driven economy [2].

Research Reveals Concerns About Critical Thinking and Learning Outcomes

The rapid integration of generative AI into educational systems has sparked alarm among researchers and child welfare organizations. A recent study from Microsoft and Carnegie Mellon University found that popular AI chatbots may diminish critical thinking skills [1][2]. Some research suggests that AI can inhibit learning rather than enhance it, with that study finding that the technology atrophies critical thinking skills [1].

Steven Vosloo, a digital policy specialist at UNICEF, drew parallels to the failed One Laptop per Child initiative, warning that history may be repeating itself. "With One Laptop per Child, the fallouts included wasted expenditure and poor learning outcomes," Vosloo wrote. "Unguided use of AI systems may actively de-skill students and teachers" [1]. Studies of hundreds of schools in Peru showed that the global effort to expand computer access did not improve students' cognitive skills or academic outcomes [2].

Ethical Concerns Mount Over Student Safety and Misinformation

Beyond learning outcomes, significant ethical concerns surround the safety of AI tools in schools. AI chatbots can produce authoritative-sounding errors and misinformation, while teachers grapple with widespread AI-assisted student cheating [2]. More troubling is the phenomenon of so-called AI psychosis, in which users, many of them teens and young adults, are driven into delusional mental spirals through interactions with human-sounding AI. Some of these cases have led to suicide and murder [1].

OpenAI recently admitted that its own data showed perhaps half a million ChatGPT users were having conversations showing signs of psychosis, yet this hasn't deterred the company from letting its large language models power children's toys [1]. Top AI companies with billions in funding have so far proven unable to keep a tight leash on their tools and ensure they are consistently safe [1]. The rush to deploy AI tools in schools is happening before society has fully grappled with the consequences of social media for children and teens [1].

Estonia Pioneers Alternative Approach Focused on AI Literacy

While many governments embrace rapid deployment of AI in education, Estonia is taking a more measured approach with its "AI Leap" national education initiative. The program was prompted partly by a poll showing more than 90% of the nation's high schoolers were already using chatbots like ChatGPT for schoolwork, raising concerns that some students were delegating assignments to AI [2].

Estonia pressed U.S. tech companies to adapt their AI to local educational needs. Researchers at the University of Tartu worked with OpenAI to modify the company's Estonian-language service so it would respond to students' queries with questions rather than direct answers [2]. "It's critical AI literacy," said Ivo Visak, chief executive of the AI Leap Foundation. "It's having a very clear understanding that these tools can be useful -- but at the same time these tools can do a lot of harm" [2]. The program aims to teach educators and students about the uses, limits, biases, and risks of AI tools [2].

Summarized by

Navi
