Over 100 AI nudify apps found on Google and Apple app stores despite policies banning them

9 Sources

[1]

Dozens of nudify apps found on Google and Apple's app stores

Removing or restricting access to Grok's AI image editor might not be enough to stop the flood of nonconsensual sexualized images being generated by AI. A report from the Tech Transparency Project (TTP) released on Tuesday found dozens of AI "nudify" apps similar to Grok on Google and Apple's platforms, as previously reported by CNBC. TTP identified 55 apps on the Google Play Store and 48 on Apple's App Store that can "digitally remove the clothes from women and render them completely or partially naked or clad in a bikini or other minimal clothing." These apps were downloaded over 705 million times worldwide, generating $117 million in revenue. According to CNBC, Google has suspended "several" of the apps TTP spotted and Apple has removed 28 of them (two of which were later restored). This is not the first report on AI nudify apps slipping through the cracks -- Apple and Google had to respond to a similar report from 404 Media in 2024. TTP's report comes in response to calls for Apple and Google to remove X and Grok from their app stores after Grok generated millions of nonconsensual sexualized images, mainly of women and children. Grok and X are now facing investigations in the EU and in the UK, as well as a lawsuit from at least one victim. Apple and Google have addressed the apps in TTP's report, but X and Grok remain freely available on both companies' app stores. As The Verge's Elizabeth Lopatto has pointed out, Apple and Google were quick to remove the ICEBlock app, "while allowing X to generate degrading images of a woman ICE killed."

[2]

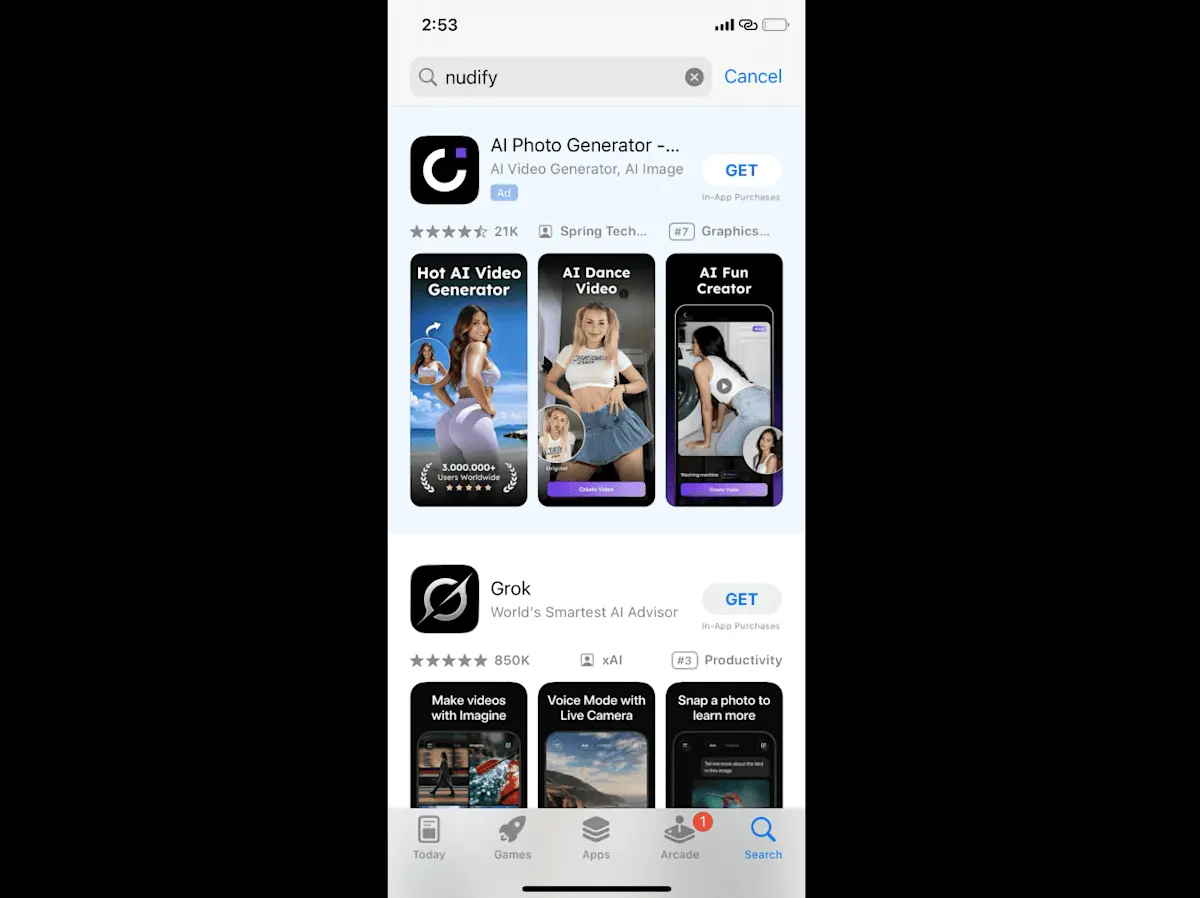

Nudify apps get past Google, Apple app moderation

Researchers with the Tech Transparency Project found all sorts of apps that let users create fake non-consensual nudes of real people. The mobile app emperors have no clothes. Apple and Google have made millions of dollars from AI apps that let users undress people even as both companies claim to ban such software from their stores, according to a new study. Researchers with the nonprofit Tech Transparency Project found 55 such apps in Google's Play store and 47 in Apple's App Store. All told, those apps were downloaded 705 million times and have generated $117 million in revenue, they say. The group published its research on Tuesday in the wake of global outrage over users employing Elon Musk's AI bot, Grok, to strip people of their clothing. Regulators in the UK have weighed banning the app. "Grok is really the tip of the iceberg," Katie Paul, director of Tech Transparency Project, told The Register. "The posts were happening on X so the world could see them. But even more graphic content can be created by some of these other apps which is what really got us into this issue." In response to The Register's inquiry, Google said that while its investigation is ongoing, it has removed several apps. "When violations of our policies are reported to us, we investigate and take appropriate action," a Google spokesperson said via email. "Most of the apps referenced in this report have been suspended from Google Play for violations of our policies." Apple did not reply to an email from The Register seeking comment. According to the investigation, Apple also ran sponsored ads, another revenue generator, when users searched for items that should have been banned under its policy, Paul said. "When you search the word 'nudify' in the App Store, according to their policy, nothing should come up, but not only does Grok come up first, Apple had a sponsored ad for a nudify app," she said. "That app is one of those that they removed after we reached out. 
Not only was the app on the App Store, Apple was profiting from ads to promote it when people searched the word 'nudify.'" The Tech Transparency Project tested the free versions of the apps using a clothed AI-generated model, then prompted the apps to render the woman completely or partially nude. With the face-swap apps, TTP tested whether the app would superimpose the face of a fully clothed woman onto an image of a nude woman. In both cases, they were successful, showing how easy it is to create non-consensual intimate imagery. The apps were all available as of Jan. 21. However, Apple removed 25 of those apps after the researchers contacted the company. Google has suspended several, and said it was mounting a review of the apps that were in its store, Paul said. This latest report from Tech Transparency Project comes about a month after it found both companies' app marketplaces selling apps developed by entities that were sanctioned by the U.S. government because of their ties to the war in Ukraine and human rights abuses in China. In that case, the nonprofit found 52 apps in Apple's App Store with direct connections to Russian, Chinese, and other companies that are under economic sanctions enforced by the U.S. Treasury Department. All of the apps listed a developer, seller, copyright holder, or other information on their App Store page that matched a U.S.-sanctioned entity. That earlier investigation found that the Google Play Store had a similar problem, with 18 apps connected to U.S.-sanctioned organizations, roughly a third of the number identified in the Apple App Store. Paul said that, after that investigation was published in December, both companies pulled the apps and posted jobs for compliance officers to review apps for sanctions. What's concerning to Paul is what it means for consumers if Google and Apple are willing to brazenly flout laws that can land them in economic trouble with their home governments. "That was sanctions. 
That was an area where there are legal consequences. You can imagine the other harmful apps that are out there where there aren't legal consequences," Paul said. "With the nudify apps, we found a lot of concerning issues with both app stores. What's really concerning is that some of these apps were marketed for children ages 9 and up. These aren't just being marketed to adults, but they're also being pushed to kids." Another concern: data privacy laws in China that require companies to hand over data to that government. "When it comes to nudify apps, that means these non-consensual nude images of American citizens would be in the hands of the Chinese government," Paul said. "So that's a major privacy violation on top of being a national security risk, particularly if there are political figures who have non-consensual nude imagery created of them and then that gets into the hands of the Chinese government." Paul said the breakdown appears to lie between policy and moderation. While the policies clearly state these apps are not allowed, in practice they are proliferating. "We live in a world where we can't disconnect from this technology," she told The Register. "These companies market themselves on how safe and trustworthy their app stores are. If you go into their app development pages, they claim to have very stringent review processes - including screening for sanctions - but we don't see that being applied." ®

[3]

Apple and Google reportedly still offer dozens of AI 'nudify' apps

A recent investigation by an online advocacy organization called the Tech Transparency Project (TTP) found that the Apple App Store and Google Play Store still offer dozens of AI "nudify" apps. These are AI applications that create nonconsensual and sexualized images, which is a clear violation of both companies' store policies. All told, the investigation found 55 of this type of app in the Google Play Store and 47 in the Apple App Store. Both platforms also still offer access to xAI's Grok, which is likely the most famous such tool in the world. "Apple and Google are supposed to be vetting the apps in their stores. But they've been offering dozens of apps that can be used to show people with minimal or no clothing -- making them ripe for abuse," said Michelle Kuppersmith, an executive director at the nonprofit that runs TTP. The apps identified by the report have been collectively downloaded over 700 million times and generated more than $117 million in revenue. Google and Apple get a cut of this money. Many of the apps named in the investigation are rated as suitable for teens and children. DreamFace, for instance, is rated suitable for ages 13 and up in the Google Play Store and ages nine and up in the Apple App Store. Both companies have responded to the investigation. Apple says it has removed 24 apps from its store. However, that falls shy of the 47 apps discovered by TTP researchers. A Google spokesperson has said the company suspended several apps referenced in the report for violating store policies, but declined to say how many apps it has removed. This report comes after Elon Musk's Grok was found to be generating sexualized images of both women and children. All told, the AI chatbot generated around three million sexualized images and 22,000 that involved children. Representatives from the company haven't really responded to these allegations, except to send an automated reply that read "Legacy Media Lies." Musk has also stated that he is "not aware of any naked underage images generated by Grok. Literally zero." X's safety account has said that "anyone using or prompting Grok to make illegal content will suffer the same consequences as if they upload illegal content." Grok has proven to be more forthcoming than actual humans at the company, as the chatbot apologized for creating sexualized images of minors.

[4]

Apple, Google host dozens of AI 'nudify' apps like Grok, report finds

The Apple and Google Play app stores are hosting dozens of "nudify" apps that can take photos of people and use artificial intelligence to generate nude images of them, according to a report Tuesday from an industry watchdog. A review of the two app stores conducted in January by Tech Transparency Project found 55 nudify apps on Google Play and 47 in the Apple App Store, according to the organization's report that was shared exclusively with CNBC. After being contacted by TTP and CNBC last week, an Apple spokesperson on Monday said that the company removed 28 apps identified in the report. The iPhone maker said it also alerted developers of other apps that they risk removal from the Apple App Store if guideline violations aren't addressed. Two of the apps removed by Apple were restored to the store after the developers resubmitted new versions that addressed guideline concerns, a spokesman for the company told CNBC. "Both companies say they are dedicated to the safety and security of users, but they host a collection of apps that can turn an innocuous photo of a woman into an abusive, sexualized image," TTP wrote in its report about Apple and Google. TTP told CNBC on Monday that a review of the Apple App Store found that only 24 apps were removed by the tech company. A Google spokesperson told CNBC that the company suspended several apps referenced in the report for violating its app store's policies, saying that it investigates when policy violations are reported. The company declined to say specifically how many apps it had removed, because its investigation into the apps identified by TTP was ongoing. The report comes after Elon Musk's xAI faced backlash earlier this month when its Grok AI tool responded to user prompts that it generate sexualized photos of women and children. 
The watchdog organization identified these apps on the two stores by searching for terms like "nudify" and "undress," and tested those apps using AI-generated images of fully clothed women. The project tested two types of apps -- those that used AI to render the images of the women without clothes, as well as "face swap" apps that superimposed the original women's faces onto images of nude women. "It's very clear, these are not just 'change outfit' apps," Katie Paul, TTP's director, told CNBC. "These were definitely designed for non-consensual sexualization of people."

[5]

Google and Apple hosted dozens of AI "nudify" apps despite platform policies

Many of these apps were removed following the report, but several are still available for download. AI is one of the most powerful tools available these days, and you can do so much with it. But, like any tool, it can be used for good or bad. Over the past few weeks, we've witnessed social media users using X's Grok AI chatbot in lewd ways, primarily to create sexualized imagery of women without their consent. As it turns out, it's not just Grok that has been at the center of this undressing scandal, as both the Apple App Store and the Google Play Store allegedly hosted "nudify" apps. Tech Transparency Project found 55 apps in the Google Play Store that allowed for creating nude images of women, while the Apple App Store hosted 47 such apps, with 38 being common between the two stores. These apps were available as of last week, though the report mentions that Google and Apple subsequently removed 31 and 25 apps, respectively, after the list of these nudify apps was shared with them. For their investigation, the Tech Transparency Project searched for terms like "nudify" and "undress" across the two app stores and found dozens of results. Many of these apps used AI to either generate videos or images from user prompts, or to superimpose the face of one person onto another's body, i.e., "face swapping." Alarmingly, apps like DreamFace (an AI image/video generator, which is still available on the Google Play Store but has been removed from the Apple App Store) presented no resistance when users input lewd prompts to show naked women. The app allows users to create one video a day for free using prompts, after which they have to subscribe to paid features. The report cites AppMagic statistics to say that the app has generated $1 million in revenue. Keep in mind that Google and Apple both charge a significant percentage (up to 30%) on in-app purchases, such as subscriptions, effectively profiting from such harmful apps. 
Similarly, Collart is another AI image/video generator that is still available on the Google Play Store but has been removed from the Apple App Store. This app is said to not only accept prompts to nudify women, but would also accept prompts to depict them in pornographic situations with no apparent restrictions. These are just two examples, but the report mentions several more with damning evidence. Face swap apps are even more harmful and predatory, as they superimpose faces that the user potentially knows onto naked bodies. Apps like RemakeFace are still available on both the Google Play Store and the Apple App Store at the time of writing, and the report confirms that they could easily be used to create non-consensual nudes of women. Both the Google Play Store and the Apple App Store prohibit apps that depict sexual nudity. But as the report revealed, these apps were distributed through app stores (with in-app subscriptions) despite their apparent violation of those stores' policies. It's clear that app stores haven't kept up with the spread of AI deepfake apps that can "nudify" people without their permission. The platforms have a clear responsibility to protect users, and we hope they tighten their guidelines and focus more on proactive monitoring of such apps rather than reacting to reports. We reached out to Google and Apple for comments on this matter. We'll update this article when we hear back from the companies.

[6]

Report claims App Store hosts nonconsensual AI undressing apps

Today, the Tech Transparency Project released a report concluding that "nudify" apps are widely available and easily found on the App Store and Google's Play Store. Here are the details. Some nudifying apps even advertise on the App Store While Grok has recently drawn attention for AI-generated nonconsensual sexualized images, including cases involving minors, the problem itself is not new. In fact, the problem obviously predates generative AI, as traditional image-editing tools have long enabled the creation of this kind of abusive content. What has changed over the past couple of years is the scale and the fact that the barrier to generating such images in seconds is now near-nonexistent. Unsurprisingly, many apps have spent the past few years attempting to capitalize on this new capability, with some openly advertising it. All of this happened while Apple fought Epic Games and other app developers in antitrust lawsuits, where Apple insisted that part of the reason it charges up to 30% in commissions is to make the App Store safer through automated and manual app review systems. And while Apple does work to prevent fraud, abuse, and other violations of the App Store guidelines, sometimes apps and entire app categories can fall through the cracks. Just last year, 9to5Mac highlighted a large number of apps purporting (or heavily suggesting) to be OpenAI's Sora 2 app, some of which charged steep weekly subscription fees. Now, given the renewed attention that Grok brought to AI-powered nudifying tools, the Tech Transparency Project (TTP) has published a report detailing how they easily found undressing apps in the App Store and on Google Play. From the report: The apps identified by TTP have been collectively downloaded more than 705 million times worldwide and generated $117 million in revenue, according to AppMagic, an app analytics firm. Because Google and Apple take a cut of that revenue, they are directly profiting from the activity of these apps. 
Google and Apple are offering these apps despite their apparent violation of app store policies. The Google Play Store prohibits "depictions of sexual nudity, or sexually suggestive poses in which the subject is nude" or "minimally clothed." It also bans apps that "degrade or objectify people, such as apps that claim to undress people or see through clothing, even if labeled as prank or entertainment apps." The report also notes that simple searches for terms like "nudify" or "undress" are enough to surface undressing apps, some of which explicitly advertise around those keywords. The TTP says that apps included in this report "fell into two general categories: apps that use AI to generate videos or images based on a user prompt, and 'face swap' apps that use AI to superimpose the face of one person onto the body of another." To test these apps, the TTP used AI-generated images of fake women and limited its testing to each app's free features. As a result, 55 Android apps and 47 iOS apps complied with the requests. At least one of these apps was rated for ages 9 and up in the App Store. The TTP's report goes on to explain in detail how easily they created these images and shows an ample variety of censored results to make its case. Here's an account of their tests on one of the apps: "With the iOS version, the app, when fed the same text prompt to remove the woman's top, failed to generate an image and gave a sensitive content warning. But a second request to show the woman dancing in a bikini was successful. The home screen of both the iOS and Google Play versions of the app offer multiple AI video templates including "tear clothes," "chest shake dance," and "bend over."" The report concludes that while the apps cited in the report may represent "just a fraction" of what's available, they "suggest the companies are not effectively policing their platforms or enforcing their own policies when it comes to these types of apps". 
To read the full report, follow this link.

[7]

Google, Apple hosted dozens of nudify apps, report reveals

A new investigation claims that the Apple and Google app stores hosted dozens of so-called "nudify" AI apps, despite such apps violating the companies' rules. The Transparency Project (TTP), a group affiliated with the Kennedy School of Government at Harvard University, found dozens of apps in both stores that digitally remove clothes, rendering the subjects naked or nearly naked. It found 55 such apps in the Google Play Store and 47 in the Apple App Store. Wrote TTP: "The apps identified by TTP have been collectively downloaded more than 705 million times worldwide and generated $117 million in revenue, according to AppMagic, an app analytics firm. Because Google and Apple take a cut of that revenue, they are directly profiting from the activity of these apps." Both tech giants have responded to the TTP report. Apple told CNBC it had removed 28 apps identified in the TTP report, while Google told the outlet it had "suspended several apps" while noting its investigation was ongoing. However, the TTP concludes that both App Stores need to do more to stop non-consensual deepfakes. "TTP's findings show that Google and Apple have failed to keep pace with the spread of AI deepfake apps that can 'nudify' people without their permission," the report states. "Both companies say they are dedicated to the safety and security of users, but they host a collection of apps that can turn an innocuous photo of a woman into an abusive, sexualized image." The report from TTP comes on the heels of the controversy surrounding Elon Musk and xAI's Grok, which is under investigation in several countries for creating sexualized, nonconsensual images. A Mashable investigation found that Grok lacks basic safety guardrails to prevent deepfakes. In addition, researchers say Grok has created more than 3 million sexualized images -- including more than 20,000 that appeared to depict children -- over an 11-day period between Dec. 29 and Jan. 8. 
With the dawn of the AI age, sexual deepfakes will continue to be a major issue for tech companies moving forward.

[8]

It's not just Grok: Apple and Google app stores are infested with nudifying AI apps

We tend to think of the Apple App Store and Google Play Store as digital "walled gardens" - safe, curated spaces where dangerous or sleazy content gets filtered out long before it hits our screens. But a grim new analysis by the Tech Transparency Project (TTP) suggests that the walls have some serious cracks. The report reveals a disturbing reality: both major storefronts are currently infested with dozens of AI-powered "nudify" apps. These aren't obscure tools hidden on the dark web; they are right there in the open, allowing anyone to take an innocent photo of a person and digitally strip the clothing off them without their consent. Earlier this year, the conversation around this technology reached a fever pitch when Elon Musk's AI, Grok, was caught generating similar sexualized images on the platform X. But while Grok became the lightning rod for public outrage, the TTP investigation shows it was just the tip of the iceberg. A simple search for terms like "undress" or "nudify" in the app stores brings up a laundry list of software designed specifically to create non-consensual deepfake pornography. The scale of this industry is frankly staggering We aren't talking about a few rogue developers slipping through the cracks. According to the data, these apps have collectively racked up over 700 million downloads. They have generated an estimated $117 million in revenue. And here is the uncomfortable truth: because Apple and Google typically take a commission on in-app purchases and subscriptions, they are effectively profiting from the creation of non-consensual sexual imagery. Every time someone pays to "undress" a photo of a classmate, a coworker, or a stranger, the tech giants get their cut. The human cost of this technology cannot be overstated. These tools weaponize ordinary photos. A selfie from Instagram or a picture from a yearbook can be twisted into explicit material used to harass, humiliate, or blackmail victims. 
Advocacy groups have been screaming about this for years, warning that "AI nudification" is a form of sexual violence that disproportionately targets women and, terrifyingly, minors. So, why are they still there? Both Apple and Google have strict policies on paper that ban pornographic and exploitative content. The problem is enforcement. It has become a digital game of Whac-A-Mole. When a high-profile report comes out, the companies might ban a few specific apps, but the developers often just tweak the logo, change the name slightly, and re-upload the exact same code a week later. The automated review systems seem completely incapable of keeping up with the rapid evolution of generative AI. For parents and everyday users, this is a wake-up call. We can no longer assume that just because an app is on an "official" store, it is safe or ethical. As AI tools get more powerful and easier to access, the safeguards we relied on in the past are failing. Until regulators step in - or until Apple and Google decide to prioritize safety over commission fees - our digital likenesses remain uncomfortably vulnerable.

[9]

New Report Reveals Dozens of Nudify Apps in Major App Stores

The identified apps have been downloaded over 705 million times Artificial intelligence (AI) has emerged as a transformative technology which has boosted multiple industries and tasks that could only be done manually earlier. However, it also comes with its share of downsides. One of them was exposed when, in December, xAI's chatbot Grok, which is integrated across X (formerly Twitter), started complying with requests to generate sexually suggestive image edits of users' photos. While developers have now improved the guardrails to limit such edits, it appears that AI-powered "nudifying" apps are mushrooming everywhere. Multiple "Nudifying" AI Apps Surface Online According to a report from the Tech Transparency Project (TTP), a research initiative by watchdog group Campaign for Accountability, dozens of AI-powered apps that generate either completely or partially undressed images and videos from uploaded photos have surfaced online. The report claims to have identified as many as 55 such apps in Google's Play Store and 47 apps in Apple's App Store. The watchdog conducted an investigation and claims that these identified apps have collectively been downloaded more than 705 million times worldwide. Citing the app analytics firm AppMagic, it claimed that the apps have generated $117 million (roughly Rs. 1,074 crore) in revenue, highlighting that developers behind these apps are also profiting from such activities. TTP claimed it found these apps by searching for keywords such as "nudify" and "undress" on both marketplaces. Some of these apps even appeared as top results. Gadgets 360 staff members also verified the claims in a couple of these apps on the Play Store and the App Store. To test the capability, we used fake models generated using AI. Note: We have taken a conscious decision not to share the names of any of these apps to ensure they are not misused and that people's privacy is not violated. 
As per the report, each of these apps provided the undressing feature as a free offering. While some of them stopped at just undressing, others are said to also recreate the subject's likeness in pornographic situations. TTP claimed that it has shared a list of the identified apps with both Apple and Google. Gadgets 360 reached out to Google for comment, but the company did not respond at the time of writing. We will update the story in case they provide a statement. Both companies did respond to CNBC. A Google spokesperson reportedly told the publication that several apps referenced in the report were suspended for violating its policies, and that the investigation was ongoing. On the other hand, an Apple spokesperson told CNBC that 28 apps identified in the report were removed.

A Tech Transparency Project investigation uncovered 102 AI nudify app listings (55 on Google Play and 47 on the Apple App Store) that generate nonconsensual sexualized images. These apps were downloaded 705 million times and generated $117 million in revenue. While both companies removed dozens of apps after the report, the findings expose significant gaps in content moderation and raise questions about platform accountability.

Tech Transparency Project Exposes Massive Moderation Failure

The Tech Transparency Project (TTP) released a damning report Tuesday revealing that app stores operated by Google and Apple host dozens of AI nudify apps capable of digitally undressing individuals without their consent [1]. The investigation identified 55 such apps on the Google Play Store and 47 on the Apple App Store, with 38 appearing on both platforms [5]. These applications, which clearly violate platform policies, were collectively downloaded over 705 million times worldwide and generated $117 million in revenue [1].

Both Google and Apple take significant cuts, up to 30 percent, from in-app purchases and subscriptions, meaning they directly profited from apps designed to generate nonconsensual sexualized images [5]. Katie Paul, director of Tech Transparency Project, told reporters that Grok is "really the tip of the iceberg," noting that "even more graphic content can be created by some of these other apps" [2].

How Google and Apple App Moderation Failed Users

Researchers discovered these apps by searching terms like "nudify" and "undress" across both app stores [4]. They tested free versions using AI-generated images of fully clothed women, prompting the apps to render subjects completely or partially nude. Face-swapping apps were also tested, successfully superimposing clothed women's faces onto images of nude bodies [2]. "It's very clear, these are not just 'change outfit' apps," Paul told CNBC. "These were definitely designed for non-consensual sexualization of people" [4].

Apple removed 28 apps after being contacted by TTP and CNBC, though two were later restored after developers resubmitted versions that addressed guideline concerns [4]. Google suspended several apps but declined to specify exact numbers, stating its investigation remains ongoing [4]. This is not the first such report the companies have faced: 404 Media documented comparable issues in 2024 [1].

Disturbing Marketing to Children and National Security Risks

Perhaps most alarming is how these apps were marketed. DreamFace, an AI image and video generator still available on Google Play Store at the time of reporting, was rated suitable for ages 13 and up on Google's platform and ages nine and up on Apple's [3]. The app accepts lewd prompts to show naked women and has generated $1 million in revenue, according to AppMagic figures cited in the report [5]. "These aren't just being marketed to adults, but they're also being pushed to kids," Paul emphasized [2].

Data privacy concerns compound the issue. Paul warned that apps with connections to China could mean non-consensual deepfake nudes of American citizens end up in the hands of the Chinese government due to data-sharing laws. "That's a major privacy violation on top of being a national security risk, particularly if there are political figures who have non-consensual nude imagery created of them," she said [2].

The Grok Connection and Selective Enforcement

The Tech Transparency Project report arrives amid global outrage over Elon Musk's xAI chatbot Grok, which generated approximately three million sexualized images, including 22,000 involving children [3]. Grok and X now face investigations in the EU and UK, as well as a lawsuit from at least one victim [1]. Yet both companies' app stores continue offering access to X and Grok despite removing other nudify apps.

Apple even displayed a sponsored ad for a nudify app when users searched a term its policy bans. "When you search the word 'nudify' in the App Store, according to their policy, nothing should come up, but not only does Grok come up first, Apple had a sponsored ad for a nudify app," Paul revealed [2]. The selective enforcement raises questions about content moderation priorities: both companies swiftly removed the ICEBlock app while allowing deepfake technology to flourish [1].

What This Means for Platform Accountability

This investigation follows TTP's December report finding 52 apps in Apple's App Store and 18 in Google Play Store connected to U.S.-sanctioned entities tied to the war in Ukraine and human rights abuses in China [2]. Both companies subsequently removed those apps and posted jobs for compliance officers. "That was sanctions. That was an area where there are legal consequences," Paul noted. "You can imagine the other harmful apps that are out there where there aren't legal consequences" [2].

The gap between policy and enforcement remains stark. While both platforms prohibit apps depicting sexual nudity, nonconsensual imagery tools operated freely, generating substantial revenue. Apps like RemakeFace, capable of creating non-consensual deepfake nudes through face-swapping, remained available on both stores even after the investigation went public [5]. Michelle Kuppersmith, an executive director at the nonprofit running TTP, stated: "Apple and Google are supposed to be vetting the apps in their stores. But they've been offering dozens of apps that can be used to show people with minimal or no clothing -- making them ripe for abuse" [3].
