TensorWave Deploys AMD Instinct MI355X GPUs, Boosting AI Cloud Performance

2 Sources


[1]

TensorWave deploys AMD Instinct MI355X GPUs in its cloud platform

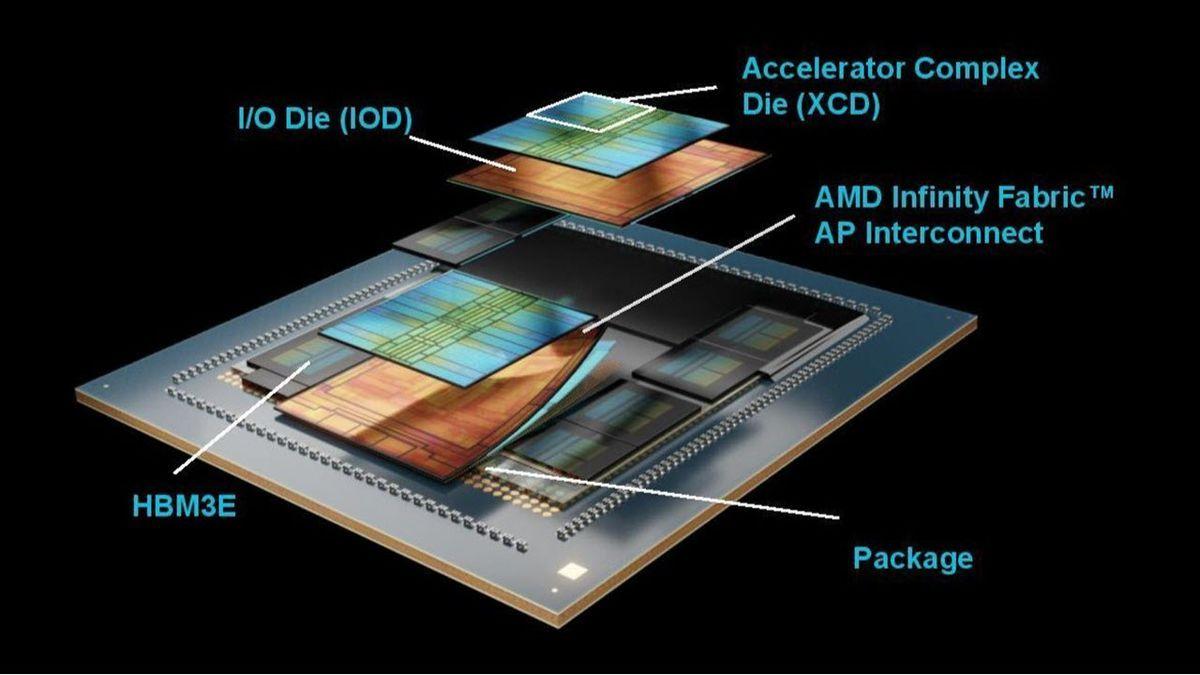

TensorWave, a leader in AMD-powered AI infrastructure solutions, today announced the deployment of AMD Instinct MI355X GPUs in its high-performance cloud platform. As one of the first cloud providers to bring the AMD Instinct MI355X to market, TensorWave enables customers to unlock next-level performance for the most demanding AI workloads -- all with unmatched white-glove onboarding and support.

The new AMD Instinct MI355X GPU is built on the 4th Gen AMD CDNA architecture and features 288GB of HBM3E memory and 8TB/s memory bandwidth, optimized for generative AI training, inference, and high-performance computing (HPC). TensorWave's early adoption allows its customers to benefit from the MI355X's compact, scalable design and advanced architecture, delivering high-density compute with advanced cooling infrastructure at scale.

"TensorWave's deep specialization in AMD technology makes us a highly optimized environment for next-gen AI workloads," said Piotr Tomasik, president at TensorWave, in a statement. "With the Instinct MI325X now deployed on our cloud and Instinct MI355X coming soon, we're enabling startups and enterprises alike to achieve up to 25% efficiency gains and 40% cost reductions, results we've already seen with customers using our AMD-powered infrastructure."

TensorWave's exclusive use of AMD GPUs provides customers with an open, optimized AI software stack powered by AMD ROCm, avoiding vendor lock-in and reducing total cost of ownership. Its focus on scalability, developer-first onboarding, and enterprise-grade SLAs makes it the go-to partner for organizations prioritizing performance and choice.

"AMD Instinct MI350 series GPUs deliver breakthrough performance for the most demanding AI and HPC workloads," said Travis Karr, corporate vice president of business development, Data Center GPU Business, AMD, in a statement. "The AMD Instinct portfolio, together with our ROCm open software ecosystem, enables customers to develop cutting-edge platforms that power generative AI, AI-driven scientific discovery, and high-performance computing applications."

TensorWave is also currently building the largest AMD-specific AI training cluster in North America, advancing its mission to democratize access to high-performance compute. By delivering end-to-end support for AMD-based AI workloads, TensorWave empowers customers to seamlessly transition, optimize, and scale within an open and rapidly evolving ecosystem.

[2]

TensorWave Accelerates AI with AMD Instinct MI355X Deployment

For those of us watching the AI space, today's news from TensorWave is worth noting. It just announced the deployment of AMD Instinct MI355X GPUs within its high-performance cloud platform. This isn't just another spec bump; it puts TensorWave at the forefront as one of the early cloud providers integrating this cutting-edge hardware, aiming squarely at supercharging the most demanding AI workloads.

So, what's under the hood of this MI355X? It's built on the 4th Gen AMD CDNA architecture, sporting a hefty 288GB of HBM3E memory and an impressive 8TB/s memory bandwidth. Those aren't just numbers; they translate directly into serious horsepower, optimized for everything from generative AI training to inference and intense high-performance computing (HPC) applications. TensorWave's quick adoption means their customers are positioned to benefit from this advanced architecture, delivering high-density compute with the necessary advanced cooling infrastructure at scale. In AI, getting your hands on this kind of capability early can be a significant differentiator.

"TensorWave's deep specialization in AMD technology makes us a highly optimized environment for next-gen AI workloads," Piotr Tomasik, co-founder and President at TensorWave, said. With the MI325X already deployed and the MI355X on the horizon, the company claims its customers are seeing up to 25% efficiency gains and 40% cost reductions.

A key aspect of TensorWave's strategy is their exclusive reliance on AMD GPUs. This isn't just a technical choice; it's a strategic one. It allows them to offer an open, optimized AI software stack powered by AMD ROCm. The big win here? It actively seeks to mitigate vendor lock-in, a perennial concern for anyone building out serious AI infrastructure, and potentially drives down the total cost of ownership. They're also emphasizing a developer-first onboarding approach and enterprise-grade SLAs, which speaks to a focus on practical usability and reliability.

"The AMD Instinct portfolio, together with our ROCm open software ecosystem, enables customers to develop cutting-edge platforms that power generative AI, AI-driven scientific discovery, and high-performance computing applications," Travis Karr, corporate vice president of business development, Data Center GPU Business, at AMD, said.

And if all that weren't enough, TensorWave is also in the process of building what they claim will be North America's largest AMD-specific AI training cluster. This is more than just expansion; it's about democratizing access to powerful compute. By providing comprehensive support for AMD-based AI workloads, TensorWave is clearly aiming to make the transition, optimization, and scaling of AI operations smoother within this evolving ecosystem.

In essence, TensorWave's latest move isn't just about deploying new hardware; it's about solidifying a particular vision for the future of AI infrastructure: one that prioritizes performance, openness, and cost-effectiveness. It's a development that will likely resonate with many looking to scale their AI ambitions without being constrained


TensorWave, an AMD-powered AI infrastructure provider, has deployed AMD Instinct MI355X GPUs in its cloud platform, offering enhanced performance for AI workloads with significant efficiency gains and cost reductions.

TensorWave Leads with AMD Instinct MI355X GPU Deployment

TensorWave, a frontrunner in AMD-powered AI infrastructure solutions, has announced the integration of AMD Instinct MI355X GPUs into its high-performance cloud platform. This strategic move positions TensorWave as one of the first cloud providers to offer this cutting-edge technology, enabling customers to harness unprecedented performance for demanding AI workloads [1][2].

Advanced GPU Specifications and Capabilities


The AMD Instinct MI355X GPU, built on the 4th Gen AMD CDNA architecture, boasts impressive specifications:

- 288GB of HBM3E memory

- 8TB/s memory bandwidth

- Optimized for generative AI training, inference, and high-performance computing (HPC)
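As a rough aid to reading the two figures in the list above, the following sketch does some back-of-envelope arithmetic in Python. It is illustrative only: the bytes-per-parameter table and both function names are assumptions made for this example, not vendor data, and the estimates ignore activations, KV caches, and the gap between peak and achievable bandwidth.

```python
# Back-of-envelope arithmetic from the two MI355X figures quoted in the article.
HBM_CAPACITY_GB = 288        # from the article
HBM_BANDWIDTH_GB_S = 8_000   # 8 TB/s from the article, taking 1 TB = 1000 GB

# Assumed storage cost per model parameter (illustrative, not vendor data).
BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0, "fp4": 0.5}


def full_sweep_ms(capacity_gb: float = HBM_CAPACITY_GB,
                  bandwidth_gb_s: float = HBM_BANDWIDTH_GB_S) -> float:
    """Milliseconds needed to read the whole memory once at peak bandwidth."""
    return capacity_gb * 1000.0 / bandwidth_gb_s


def max_params_billions(bytes_per_param: float,
                        capacity_gb: float = HBM_CAPACITY_GB) -> float:
    """Upper bound, in billions, on parameters whose weights alone fit in memory."""
    return capacity_gb / bytes_per_param


if __name__ == "__main__":
    print(f"full memory sweep at peak bandwidth: {full_sweep_ms():.0f} ms")
    for name, size in BYTES_PER_PARAM.items():
        bound = max_params_billions(size)
        print(f"{name}: at most {bound:.0f}B parameters (weights only)")
```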

These features allow TensorWave to deliver high-density compute with advanced cooling infrastructure at scale, catering to the most intensive AI and HPC applications [1].

Performance Gains and Cost Efficiency

TensorWave reports that customers on its AMD-powered infrastructure have already seen significant benefits:

- Up to 25% efficiency gains

- 40% cost reductions

Piotr Tomasik, president at TensorWave, emphasized the company's deep specialization in AMD technology, stating, "We're enabling startups and enterprises alike to achieve up to 25% efficiency gains and 40% cost reductions, results we've already seen with customers using our AMD-powered infrastructure" [1][2]. In the same statement, Tomasik describes the MI325X as already deployed and the MI355X as coming soon, so these figures appear to reflect TensorWave's existing AMD fleet rather than MI355X-specific results.

Open Ecosystem and Vendor Independence

A key advantage of TensorWave's exclusive use of AMD GPUs is the provision of an open, optimized AI software stack powered by AMD ROCm. This approach offers several benefits [1][2]:

- Avoids vendor lock-in

- Reduces total cost of ownership

- Provides customers with greater flexibility and choice in their AI infrastructure

Expanding AI Infrastructure

TensorWave is not stopping at GPU deployment. The company is currently building what it claims will be the largest AMD-specific AI training cluster in North America. This initiative aligns with TensorWave's mission to democratize access to high-performance compute [1][2].

Industry Perspective

Travis Karr, corporate vice president of business development, Data Center GPU Business at AMD, commented on the collaboration: "The AMD Instinct portfolio, together with our ROCm open software ecosystem, enables customers to develop cutting-edge platforms that power generative AI, AI-driven scientific discovery, and high-performance computing applications" [1][2].

Future Implications

TensorWave's deployment of AMD Instinct MI355X GPUs and its focus on an open, AMD-powered ecosystem signal a shift in the AI infrastructure landscape. By prioritizing performance, openness, and cost-effectiveness, TensorWave is positioning itself as a key player in shaping the future of AI compute solutions [2]. As the AI industry continues to evolve rapidly, TensorWave's approach may serve as a model for other providers looking to offer flexible, high-performance solutions while avoiding the pitfalls of vendor lock-in.

Summarized by

Navi
