Wikipedia at 25 faces existential threat from AI while signing major licensing deals

7 Sources

[1]

At 25, Wikipedia Now Faces Its Most Existential Threat -- Generative A.I.

Wikipedia had to fight to establish its legitimacy -- and now it faces a new existential threat posed by generative AI Ian Ramjohn remembers the first time he edited Wikipedia. It was 2004, when the site was just three years old, and its information about the government of his home nation of Trinidad and Tobago was a decade out of date. But with little more than his Internet connection, he corrected the page in minutes. "That was huge," he says. "I got hooked pretty much right away." Ramjohn is an ecologist by training, and for more than a decade, he has worked at the nonprofit organization Wiki Education, a spinoff of the Wikimedia Foundation, another nonprofit that hosts the site. For a decade before that, even while he taught in several adjunct professor roles in the U.S., Ramjohn was also a dedicated Wikipedian, as the site's editors are known, editing articles on Trinidadian history, as well as topics such as figs and palms. He started editing early enough to have watched Wikipedia's credibility evolve. It began as a site that was strongest in niche topics -- pop culture and tech were overindexed -- only to grow into the Internet's first stop for background on an enormous range of subjects, science included. Whether you want a list of microorganisms that have been exposed to the vacuum of space, a description of every bone in the human body or a guide to the mountains of Jupiter's supervolcanic moon Io -- for many readers, it's still the quickest way to catch up on a topic. But now, 25 years after Wikipedia's founding, it is losing visitors. The Wikimedia Foundation has reported that human page views fell about 8 percent over certain months in 2025 compared with 2024. 
An external analysis of data from the company Similarweb conducted by the consulting firm Kepios found that, when counting total average monthly visits, the site lost more than one billion visits between 2022 and 2025. Wikimedia and outside researchers have argued that artificial-intelligence-powered searches play a part because these searches reduce the number of people who click through to source sites. Wikipedia's trust infrastructure -- which includes citations, edit histories, talk pages and enforceable policies -- was shaped in part by disputes among editors and visitors to the site over the coverage of evolution, climate change and health topics. What happens when a reference site's content still matters, but fewer people visit, cite or edit it? Beloved and Reviled Wikipedia launched on January 15, 2001. The Y2K panic was in the past, Save The Last Dance ruled the box office, and the site's co-founder Jimmy Wales posted the first home page of what he envisioned would become a fast, free crowdsourced encyclopedia. The experiment grew quickly: By 2003, the English-language site had 100,000 articles. And by 2005, Wikipedia was the Internet's most popular reference site. In its earliest days, the website relied on people to write about what they knew, which resulted in contributors citing weak sources, including personal blogs. As readership and editing surged, Wikipedians no longer all knew one another. As Wikipedia's editor ranks swelled, the site codified guidelines such as prioritizing neutrality and flagging unsupported claims for removal, Ramjohn says. Editors began enforcing sourcing more consistently. Early controversies over how Wikipedia presented topics such as evolution, compared with "intelligent design," as well as debates over how to convey the science and denial of climate change, also led to stricter enforcement and, in some high-conflict cases, even page protection on articles, Ramjohn recounts. 
But even as it took steps to become more trustworthy and its popularity surged, using Wikipedia as a source remained discouraged by many instructors, especially in higher education. "There was always a lot of 'Don't use Wikipedia,'" says Diana Park, a science librarian at Oregon State University, who has co-taught a course about the website. A central concern was skepticism about the website's accuracy because of its open-editing policy. What later became clear is that that very feature could also weed out errors and address outright vandalism. "I think the part of Wikipedia that people don't know is the peer review," Park says. "Wikipedia isn't this Wild West of information, where anyone can just put anything. There are people holding what is there accountable." P. D. Magnus, a philosopher of science at the University at Albany, State University of New York, learned this firsthand in 2008, when he conducted an experiment in which 36 factual errors were inserted into articles about prominent historical philosophers. More than a third were removed within 48 hours -- a ratio that stood when he repeated the experiment 15 years later, in 2023. (Never fear -- on both occasions, he removed any remaining inaccuracies after the 48-hour window.) Researchers have repeatedly evaluated Wikipedia's accuracy, often finding it comparable to that of traditional encyclopedias. "It's not a source you would go to if your life were on the line," Magnus says. "But it's a perfectly good source for lots of information where there isn't much of a stake in getting it right." An Educational Tool As Wikipedia's use grew, some educators softened their stance, encouraging its use to find leads to sources that students could dig into directly. Others took a different approach, assigning students to edit Wikipedia entries -- many through Wiki Education. 
Jennifer Glass, a biogeochemist at Georgia Institute of Technology, is one of those professors; she has incorporated Wikipedia editing into her teaching since 2018. She wanted a student project that emphasized the concise and technical but understandable writing style that the site uses. And although she hadn't done much editing for Wikipedia herself, she was impressed by the website's breadth of content. Each semester, her students write one article from scratch about a topic they research, from dolomitization to the tropopause. Glass says the project teaches them the value of institutional access to published literature and the skill of fact-checking their writing line by line. In the full course on Wikipedia that Park has co-taught at Oregon State University, she has had a similar experience. "It's always kind of a joy to see students take charge of something that they've been told for so long is wrong," she says. Digging deep into Wikipedia -- learning how it has come to be and how to present information on the site -- teaches students how verifiability, talk pages and edit histories work -- and how to trace claims back to primary sources. "It's about being able to use it in the right situation and time," Park says. Another Wikipedia? The ability to evaluate the quality of information, as well as the skills required to present accurate knowledge online, may matter even more now, 25 years into Wikipedia's existence. The importance of information literacy has only grown with the rise of content generated by AI and large language models (LLMs), sparking new debates about reliability, accountability and correction. Many tech companies have rapidly deployed this kind of AI usage, including Google, which has introduced AI-generated summaries atop some search results. The LLMs feeding this technology, trained on large datasets drawn from the web, produce fluent answers simply by predicting the next word, without providing back-up for each claim. 
But as in the earliest days of Wikipedia, there's no public edit history of AI-generated texts. Recall the experience that hooked Ramjohn on editing Wikipedia -- the power to fix what was wrong on the Internet, no intermediary required. Users can't correct an AI summary the way they can fix a sentence on Wikipedia. In fact, Magnus attributes Wikipedia's success to four factors: its community, its firm editing policies, the ability to review a page's entire history and the noncommercial nature of the site. AI summarizers generally lack Wikipedia's public accountability and transparent governance. And Wikipedia's supporters worry that even after the site has weathered its 25 years, generative AI may draw attention and traffic away from it. "Definitely LLMs are an existential threat to Wikipedia," Ramjohn says. Fewer visitors mean fewer new editors for Wikipedia, and less frequent visits mean slower correction of errors added to the site -- even as Wikipedians report that they have been struggling to keep up with a growing volume of AI-generated text. If fewer people visit and fewer people edit, the system that made Wikipedia self-correcting -- and unusually resilient -- could weaken.

[2]

At 25, Wikipedia embodies what the internet could have been - but can it survive AI?

Wikipedia is the world's most popular online encyclopedia. It is the most successful open data project of all time. However, AI brings new challenges and long-term threats. Today, when people ask, 'Where was Madonna born?' 'Who won the 1999 Super Bowl?' or 'Who's the current world classical chess champion?' (Bay City, Michigan; the Denver Broncos; and Gukesh Dommaraju), they turn to Wikipedia. Or, to be more exact, if they Google the answer, Wikipedia is the top source, but Google's AI Overview is what they'll see at the search results page. It's Wikipedia writers, however, who did the research for the answers. Twenty-five years ago, it was another story. Before 15 January 2001, if you did a Google search, your answers to those earlier questions would have come from a Madonna fan site, ESPN, and the Internet Chess Club. On that day, a small nonprofit launched what seemed like a utopian idea, an encyclopedia that anyone could edit. Today, it's one of the top 10 websites in the world, cited in court rulings, academic papers, and journalism. And yet, volunteers and donations still run it, without a single ad in sight. Wikipedia started as a side project of Nupedia. This obscure project was Jimmy Wales' and Larry Sanger's first attempt to create a peer-reviewed encyclopedia. Nupedia was launched in March 2000 as a free online encyclopedia, written and peer‑reviewed by subject-matter experts through a seven‑step approval process. It failed badly. In its first months of existence, Nupedia produced a mere two dozen articles. Wikipedia, which allows anyone to write and edit articles, soared in popularity after its 2001 launch. It quickly became the most successful open collaboration experiment ever. 
Today, Wikipedia boasts over six million English-language articles and content in over 320 languages. Early skeptics doubted it would last. How could a website that anyone could change produce reliable facts? In 2005, Nature famously compared Wikipedia's accuracy to Encyclopedia Britannica and found surprisingly little difference. Two and a half decades later, Wikipedia remains fallible, but self-correcting. Errors get fixed faster than they'd be noticed in print. Its openness, paradoxically, is also its safeguard. As Wales said at the time, Wikipedia was both self-policing and self-cleaning. That's not to say Wikipedia is perfect, far from it. Wikipedia has spent 25 years walking a tightrope between openness and abuse, and most of its growing pains come from that contradiction. The biggest challenges have been maintaining reliability at scale, keeping a small volunteer community from burning out, and defending the project against political and legal attacks. There are some areas, Wikipedia editors admit, where they've been found lacking. The site's open-edit model has encouraged systemic biases (gender, racial, national, and ideological), especially since most editors are male and concentrated in North America and Europe. New and minority editors often report a hostile climate of harassment, cliques, and "ownership" of articles that drives people away and feeds long-running "editor retention" efforts. Even setting these issues aside, that doesn't mean the platform's immune to abuse. Edit wars, coordinated disinformation campaigns, and cultural bias persist. The Wikimedia Foundation has had to double down on anti-manipulation policies, while editors wage daily battles to keep political and corporate spin in check. Still, the community itself, with over 250,000 active editors, remains its greatest defense. 
Despite editors' best efforts, corporations, governments, and PR firms have repeatedly attempted to launder reputations through undisclosed paid editing, sockpuppet networks, and conflict‑of‑interest campaigns. For example, in 2012, a pair of senior Wikipedia editors was found to be writing and editing articles at the request of their clients for a fee. Since then, numerous other instances of Wikipedia editors collaborating to profit from writing and editing biased articles have emerged. It's an ongoing problem. The site also suffers from a long history of "edit wars" and politicized editing around topics like biographies, climate, and geopolitics, which can turn article pages into battlegrounds instead of neutral references. Indeed, controversial pages, such as those on the Arab-Israeli conflict, caste topics in India, and Donald Trump, can't be touched by most editors. That said, Wikipedia also helped to birth some key open technologies. The MediaWiki engine that powers Wikipedia also runs countless internal wikis, from NASA to Mozilla. Its open API and structured data project, Wikidata, quietly underpins parts of modern AI and search indexing. When you ask your phone a factual question, odds are the answer traces back, in part, to Wikipedia's structured metadata. Unlike social media platforms fueled by outrage and engagement metrics, Wikipedia thrives on consensus and transparency. Its talk pages are messy democratic forums -- more C-SPAN than TikTok. And that's precisely why Wikipedia has lasted. It resists the dopamine economy. Wikipedia's future is another matter. Donations fund its servers and staff, but editor participation is aging. Recruiting new contributors, especially from outside the English-speaking world, remains a challenge. 
The Wikimedia Foundation, now led by Maryana Iskander, is experimenting with partnerships, mobile tools, and even AI-assisted editing while insisting that human judgment stays central. However, AI is also hurting Wikipedia. After cleaning up AI bot noise in 2025, Wikimedia reported that genuine human page views declined by about 8% year-on-year in recent months. Wikipedia traffic analysis indicated that nearly all of the multi‑year decline was attributable to people no longer clicking on Wikipedia search links. According to SimilarWeb, ChatGPT is now the world's fifth-favorite website, while Wikipedia has dropped to ninth. Could Wikipedia die? That question might sound alarmist, but it was only a few years ago that Stack Overflow was everyone's favorite programming website. Stack Overflow's traffic began to fall when ChatGPT arrived. As AI programming has become commonplace, Stack Overflow's decline has accelerated. In December 2025, only 3,862 questions were posted on Stack Overflow, representing a 78% drop from the previous December. At 25, Wikipedia embodies what the internet could have been: user-powered, open, and accountable. Wikipedia may have its warts, and it's far from perfect, but it's transparent about its imperfections. That's more than can be said for many tech giants. Wikipedia's quiet resilience proves that trust earned collectively can scale. The encyclopedia anyone can edit has outlived the dot-com boom, Web 2.0, and the first wave of AI hype. Whether it can survive the AI-enabled web of the next 25 years remains an open question; one that, fittingly, anyone can edit.

[3]

Wikipedia at 25: can its original ideals survive in the age of AI?

Around the turn of the century, the internet underwent a transformation dubbed "web 2.0". The world wide web of the 1990s had largely been read-only: static pages, hand-built homepages, portal sites with content from a few publishers. Then came the dotcom crash of 2000 to 2001, when many heavily financed, lightly useful internet businesses collapsed. In the aftermath, surviving companies and new entrants leaned into a different logic that the author-publisher Tim O'Reilly later described as "harnessing collective intelligence": platforms rather than pages, participation rather than passive consumption. And on January 15 2001, a website was born that seemed to encapsulate this new era. The first entry on its homepage read simply: "This is the new WikiPedia!" Wikipedia wasn't originally conceived as a not-for-profit website. In its early phase, it was hosted and supported through co-founder Jimmy Wales's for-profit search company, Bomis. But two years on, the Wikimedia Foundation was created as a dedicated non-profit to steward Wikipedia and its sibling projects. Wikipedia embodied the web 2.0 dream of a non-hierarchical, user-led internet built on participation and sharing. One foundational idea - volunteer human editors reviewing and authenticating content incrementally after publication - was highlighted in a 2007 Los Angeles Times report about Wales himself trying to write an entry for a butcher shop in Gugulethu, South Africa. His additions were reverted or blocked by other editors who disagreed about the significance of a shop they had never heard of. The entry finally appeared with a clause that neatly encapsulated the platform's self-governance model: "A Wikipedia article on the shop was created by the encyclopedia's co-founder Jimmy Wales, which led to a debate on the crowdsourced project's inclusion criteria." 
As a historical sociologist of artificial intelligence and the internet, I find Wikipedia revealing not because it is flawless, but because it shows its workings (and flaws). Behind almost every entry sits a largely uncredited layer of human judgement: editors weighing sources, disputing framing, clarifying ambiguous claims and enforcing standards such as verifiability and neutrality. Often, the most instructive way to read Wikipedia is to read its revision history. Scholarship has even used this edit history as a method - for example, when studying scientific discrepancies in the development of Crispr gene-editing technology, or the unfolding history of the 2011 Egyptian revolution. The scale of human labour that goes into Wikipedia is easy to take for granted, given its disarming simplicity of presentation. Statista estimates 4.4 billion people accessed the site in 2024 - over half the world and two-thirds of internet users. More than 125 million people have edited at least one entry. Wikipedia carries no advertising and does not trade in users' data - central to its claim of editorial independence. But users regularly see fundraising banners and appeals, and the Wikimedia Foundation has built paid services to manage high-volume reuse of its content - particularly by bots scraping it for AI training. The foundation's total assets now stand at more than US$310 million (£230 million). 'Wokepedia' v Grokipedia At 25, Wikipedia can still look like a rare triumph for the original web 2.0 ideals - at least in contrast to most of today's major open platforms, which have turned participation into surveillance advertising. Some universities, including my own, have used the website's anniversary to soothe fears about student use of generative AI. We panicked about students relying on Wikipedia, then adapted and carried on. The same argument now suggests we should not over-worry about students relying on generative AI to do their work. 
This comparison is sharpened by the rapid growth of Elon Musk's AI-powered version of Wikipedia (or "Wokepedia", as Musk dismissively refers to it). While Grokipedia uses AI to generate most of its entries, some are near-identical to Wikipedia's (all of which are available for republication under creative commons licensing). Grokipedia entries cannot be directly edited, but registered users can suggest corrections for the AI to consider. Despite only launching on October 27 2025, this AI encyclopedia already has more than 5.6 million entries, compared with Wikipedia's total of over 7.1 million. So, if Grokipedia overtakes its much older rival in scale at least, which now seems plausible, should we see this as the end of the web 2.0 dream, or simply another moment of adaptation? Credibility tested AI and the human-created internet have always been intertwined. Voluntary sharing is exploited for AI training with contested consent and thin attribution. Models trained on human writing generate new text that pollutes the web as "AI slop". Wikipedia has already collided with this. Editors report AI-written additions and plausible citations that fail on checking. They have responded with measures such as WikiProject AI Cleanup, which offers guidance on how to detect generic AI phrasing and other false information. But Wales does not want a full ban on AI within Wikipedia's domain. Rather, he has expressed hope for human-machine synergy, highlighting AI's potential to bring more non-native English contributors to the site. Wikipedia also acknowledges it has a serious gender imbalance, both in terms of entries and editors. Wikipedia's own credibility has regularly been tested over its 25-year history. 
High-profile examples include the John Seigenthaler Sr biography hoax, when an unregistered editor falsely wrote about the journalist's supposed ties to the Kennedy assassinations, and the Essjay controversy, in which a prominent editor was found to have fabricated their education credentials. There have also been recurring controversies over paid- or state-linked conflicts of interest, including the 2012 Wiki-PR case, when volunteers traced patterns to a firm and banned hundreds of accounts. These vulnerabilities have seen claims of political bias gain traction. Musk has repeatedly framed Wikipedia and mainstream outlets as ideologically slanted, and promoted Grokipedia as a "massive improvement" that needed to "purge out the propaganda". As Wikipedia reaches its 25th anniversary, perhaps we are witnessing a new "tragedy of the commons", where volunteered knowledge becomes raw material for systems that themselves may produce unreliable material at scale. Ursula K. Le Guin's novel The Dispossessed (1974) dramatises the dilemma Wikipedia faces: an anarchist commons survives only through constant maintenance, while facing the pull of a wealthier capitalist neighbour. According to the critical theorist McKenzie Wark: "It is not knowledge which is power, but secrecy." AI often runs on closed, proprietary models that scrape whatever is available. Wikipedia's counter-model is public curation with legible histories and accountability. But if Google's AI summaries and rankings start privileging Grokipedia, habits could change fast. This would repeat the "Californian ideology" that journalist-author Wendy M. Grossman warned about in the year Wikipedia launched - namely, internet openness becoming fuel for Silicon Valley market power. Wikipedia and generative AI both alter knowledge circulation. One is a human publishing system with rules and revision histories. The other is a text production system that mimics knowledge without reliably grounding it. 
The choice, for the moment at least, is all of ours.

[4]

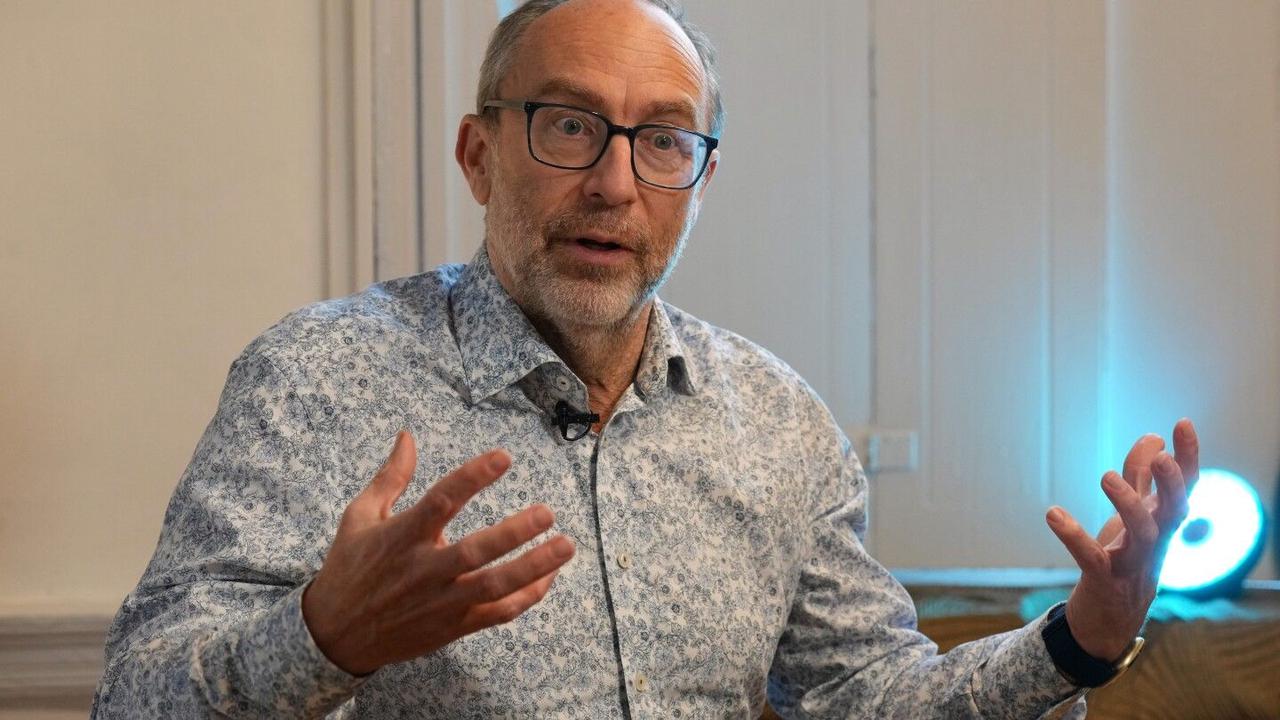

Wikipedia unveils new AI licensing deals as it marks 25th birthday

LONDON (AP) -- Wikipedia unveiled new business deals with a slew of artificial intelligence companies on Thursday as it marked its 25th anniversary. The online crowdsourced encyclopedia revealed that it has signed licensing deals with AI companies including Amazon, Meta Platforms, Perplexity, Microsoft and France's Mistral AI. Wikipedia is one of the last bastions of the early internet, but that original vision of a free online space has been clouded by the dominance of Big Tech platforms and the rise of generative AI chatbots trained on content scraped from the web. Aggressive data collection methods by AI developers, including from Wikipedia's vast repository of free knowledge, have raised questions about who ultimately pays for the artificial intelligence boom. The nonprofit that runs the site signed Google as one of its first customers in 2022 and announced other agreements last year with smaller AI players like search engine Ecosia. The new deals will help one of the world's most popular websites monetize heavy traffic from AI companies. They're paying to access Wikipedia content "at a volume and speed designed specifically for their needs," the Wikimedia Foundation said. It did not provide financial or other details. While AI training has sparked legal battles elsewhere over copyright and other issues, Wikipedia founder Jimmy Wales said he welcomes it. "I'm very happy personally that AI models are training on Wikipedia data because it's human curated," Wales told The Associated Press in an interview. "I wouldn't really want to use an AI that's trained only on X, you know, like a very angry AI," Wales said, referring to billionaire Elon Musk's social media platform. Wales said the site wants to work with AI companies, not block them. But "you should probably chip in and pay for your fair share of the cost that you're putting on us." 
The Wikimedia Foundation, a nonprofit group that runs Wikipedia, last year urged AI developers to pay for access through its enterprise platform and said human traffic had fallen 8%. Meanwhile, visits from bots, sometimes disguised to evade detection, were heavily taxing its servers as they scrape masses of content to feed AI large language models. The findings highlighted shifting online trends as search engine AI overviews and chatbots summarize information instead of sending users to sites by showing them links. Wikipedia is the ninth most visited site on the internet. It has more than 65 million articles in 300 languages that are edited by some 250,000 volunteers. The site has become so popular in part because it's free for anyone to use. "But our infrastructure is not free, right?" Wikimedia Foundation CEO Maryana Iskander said in a separate interview in Johannesburg, South Africa. It costs money to maintain servers and other infrastructure that allows both individuals and tech companies to "draw data from Wikipedia," said Iskander, who's stepping down on Jan. 20, and will be replaced by Bernadette Meehan. The bulk of Wikipedia's funding comes from 8 million donors, most of them individuals. "They're not donating in order to subsidize these huge AI companies," Wales said. They're saying, "You know what, actually you can't just smash our website. You have to sort of come in the right way." Editors and users could benefit from AI in other ways. The Wikimedia Foundation has outlined an AI strategy that Wales said could result in tools that reduce tedious work for editors. While AI isn't good enough to write Wikipedia entries from scratch, it could, for example, be used to update dead links by scanning the surrounding text and then searching online to find other sources. "We don't have that yet but that's the kind of thing that I think we will see in the future." 
Artificial intelligence could also improve the Wikipedia search experience, by evolving from the traditional keyword method to more of a chatbot style, Wales said. "You can imagine a world where you can ask the Wikipedia search box a question and it will quote to you from Wikipedia," he said. It could respond by saying "here's the answer to your question from this article and here's the actual paragraph. That sounds really useful to me and so I think we'll move in that direction as well. " Reflecting on the early days, Wales said it was a thrilling time because many people were motivated to help build Wikipedia after he and co-founder Larry Sanger, who departed long ago, set it up as an experiment. However, while some might look back wistfully on what seems now to be a more innocent time, Wales said those early days of the internet also had a dark side. "People were pretty toxic back then as well. We didn't need algorithms to be mean to each other," he said. "But, you know, it was a time of great excitement and a real spirit of possibility." Wikipedia has lately found itself under fire from figures on the political right, who have dubbed the site "Wokepedia" and accused it of being biased in favor of the left. Republican lawmakers in the U.S. Congress are investigating alleged "manipulation efforts" in Wikipedia's editing process that they said could inject bias and undermine neutral points of view on its platform and the AI systems that rely on it. A notable source of criticism is Musk, who last year launched his own AI-powered rival, Grokipedia. He has criticized Wikipedia for being filled with "propaganda" and urged people to stop donating to the site. Wales said he doesn't consider Grokipedia a "real threat" to Wikipedia because it's based on large language models, which are the troves of online text that AI systems are trained on. "Large language models aren't good enough to write really quality reference material. 
So a lot of it is just regurgitated Wikipedia," he said. "It often is quite rambling and sort of talks nonsense. And I think the more obscure topic you look into, the worse it is." He stressed that he wasn't singling out Grokipedia for criticism. "It's just the way large language models work." Wales says he's known Musk for years but they haven't been in touch since Grokipedia launched. "'How's your family?' I'm a nice person, I don't really want to pick a fight with anybody." ____ AP writer Mogomotsi Magome in Johannesburg contributed to this report

[5]

Wikipedia unveils new AI licensing deals as it marks 25th birthday

LONDON -- Wikipedia unveiled new business deals with a slew of artificial intelligence companies on Thursday as it marked its 25th anniversary. The online crowdsourced encyclopedia revealed that it has signed licensing deals with AI companies including Amazon, Meta Platforms, Perplexity, Microsoft and France's Mistral AI. Wikipedia is one of the last bastions of the early internet, but that original vision of a free online space has been clouded by the dominance of Big Tech platforms and the rise of generative AI chatbots trained on content scraped from the web. Aggressive data collection methods by AI developers, including from Wikipedia's vast repository of free knowledge, have raised questions about who ultimately pays for the artificial intelligence boom. The nonprofit that runs the site signed Google as one of its first customers in 2022 and announced other agreements last year with smaller AI players like search engine Ecosia. The new deals will help one of the world's most popular websites monetize heavy traffic from AI companies. They're paying to access Wikipedia content "at a volume and speed designed specifically for their needs," the Wikimedia Foundation said. It did not provide financial or other details. While AI training has sparked legal battles elsewhere over copyright and other issues, Wikipedia founder Jimmy Wales said he welcomes it. "I'm very happy personally that AI models are training on Wikipedia data because it's human curated," Wales told The Associated Press in an interview. "I wouldn't really want to use an AI that's trained only on X, you know, like a very angry AI," Wales said, referring to billionaire Elon Musk's social media platform. Wales said the site wants to work with AI companies, not block them. But "you should probably chip in and pay for your fair share of the cost that you're putting on us." 
The Wikimedia Foundation, a nonprofit group that runs Wikipedia, last year urged AI developers to pay for access through its enterprise platform and said human traffic had fallen 8%. Meanwhile, visits from bots, sometimes disguised to evade detection, were heavily taxing its servers as they scrape masses of content to feed AI large language models. The findings highlighted shifting online trends as search engine AI overviews and chatbots summarize information instead of sending users to sites by showing them links. Wikipedia is the ninth most visited site on the internet. It has more than 65 million articles in 300 languages that are edited by some 250,000 volunteers. The site has become so popular in part because it's free for anyone to use. "But our infrastructure is not free, right?" Wikimedia Foundation CEO Maryana Iskander said in a separate interview in Johannesburg, South Africa. It costs money to maintain servers and other infrastructure that allows both individuals and tech companies to "draw data from Wikipedia," said Iskander, who's stepping down on Jan. 20, and will be replaced by Bernadette Meehan. The bulk of Wikipedia's funding comes from 8 million donors, most of them individuals. "They're not donating in order to subsidize these huge AI companies," Wales said. They're saying, "You know what, actually you can't just smash our website. You have to sort of come in the right way." Editors and users could benefit from AI in other ways. The Wikimedia Foundation has outlined an AI strategy that Wales said could result in tools that reduce tedious work for editors. While AI isn't good enough to write Wikipedia entries from scratch, it could, for example, be used to update dead links by scanning the surrounding text and then searching online to find other sources. "We don't have that yet but that's the kind of thing that I think we will see in the future."
Artificial intelligence could also improve the Wikipedia search experience, by evolving from the traditional keyword method to more of a chatbot style, Wales said. "You can imagine a world where you can ask the Wikipedia search box a question and it will quote to you from Wikipedia," he said. It could respond by saying "here's the answer to your question from this article and here's the actual paragraph. That sounds really useful to me and so I think we'll move in that direction as well." Reflecting on the early days, Wales said it was a thrilling time because many people were motivated to help build Wikipedia after he and co-founder Larry Sanger, who departed long ago, set it up as an experiment. However, while some might look back wistfully on what seems now to be a more innocent time, Wales said those early days of the internet also had a dark side. "People were pretty toxic back then as well. We didn't need algorithms to be mean to each other," he said. "But, you know, it was a time of great excitement and a real spirit of possibility." Wikipedia has lately found itself under fire from figures on the political right, who have dubbed the site "Wokepedia" and accused it of being biased in favor of the left. Republican lawmakers in the U.S. Congress are investigating alleged "manipulation efforts" in Wikipedia's editing process that they said could inject bias and undermine neutral points of view on its platform and the AI systems that rely on it. A notable source of criticism is Musk, who last year launched his own AI-powered rival, Grokipedia. He has criticized Wikipedia for being filled with "propaganda" and urged people to stop donating to the site. Wales said he doesn't consider Grokipedia a "real threat" to Wikipedia because it's based on large language models, the AI systems trained on troves of online text. "Large language models aren't good enough to write really quality reference material.
So a lot of it is just regurgitated Wikipedia," he said. "It often is quite rambling and sort of talks nonsense. And I think the more obscure topic you look into, the worse it is." He stressed that he wasn't singling out criticism of Grokipedia. "It's just the way large language models work." Wales says he's known Musk for years but they haven't been in touch since Grokipedia launched. "'How's your family?' I'm a nice person, I don't really want to pick a fight with anybody." ____ AP writer Mogomotsi Magome in Johannesburg contributed to this report

[6]

At 25, Wikipedia Navigates a Quarter-Life Crisis in the Age of A.I.

As A.I. search reshapes how people get answers, the encyclopedia confronts falling page views and a fight for relevance. Traffic to Wikipedia, the world's largest online encyclopedia, naturally ebbs and flows with the rhythms of daily life -- rising and falling with the school calendar, the news cycle or even the day of the week -- making routine fluctuations unremarkable for a site that draws roughly 15 billion page views a month. But sustained declines tell a different story. Last October, the Wikimedia Foundation, the nonprofit that oversees Wikipedia, disclosed that human traffic to the site had fallen 8 percent in recent months as a growing number of users turned to A.I. search engines and chatbots for answers. "I don't think that we've seen something like this happen in the last seven to eight years or so," Marshall Miller, senior director of product at the Wikimedia Foundation, told Observer. Launched on Jan. 15, 2001, Wikipedia turns 25 today. This milestone comes at a pivotal point for the online encyclopedia, which is straddling a delicate line between fending off existential risks posed by A.I. and avoiding irrelevance as the technology transforms how people find and consume information. "It's really this question of long-term sustainability," Lane Becker, senior director of earned revenue at the Wikimedia Foundation, told Observer. "We'd like to make it at least another 25 years -- and ideally much longer." While it's difficult to attribute Wikipedia's recent traffic declines to any single factor, it's evident that the drop coincides with the emergence of A.I. search features, according to Miller.
Chatbots such as ChatGPT and Perplexity often cite and link to Wikipedia, but because the information is already embedded in the A.I.-generated response, users are less likely to click through to the source, depriving the site of page views. Yet the spread of A.I.-generated content also underscores Wikipedia's central role in the online information ecosystem. Wikipedia's vast archive -- more than 65 million articles across over 300 languages -- plays a prominent role within A.I. tools, with the site's data scraped by nearly all large language models (LLMs). "Yes, there is a decline in traffic to our sites, but there may well be more people getting Wikipedia knowledge than ever because of how much it's being distributed through those platforms that are upstream of us," said Miller.

Surviving in the era of A.I.

Wikipedia must find a way to stay financially and editorially viable as the internet changes. Declining page views not only mean that fewer visitors are likely to donate to the platform, threatening its main source of revenue, but also risk shrinking the community of volunteer editors who sustain it. Fewer contributors would mean slower content growth, ultimately leaving less material for LLMs to draw from. Metrics that track volunteer participation have already begun to slip, according to Miller. While noting that "it's hard to parse out all the different reasons that this happens," he conceded that the Foundation has "reason to believe that declines in page views will lead to declines in volunteer activity." To maintain a steady pipeline of contributors, users must first become aware of the platform and understand its collaborative model. That makes proper attribution by A.I. tools essential, Miller said. Beyond simply linking to Wikipedia, surfacing metadata -- such as when a page was last updated or how many editors contributed -- could spur curiosity and encourage users to engage more deeply with the platform.
Tech companies are becoming aware of the value of keeping Wikipedia relevant. Over the past year, Microsoft, Mistral AI, Perplexity AI, Ecosia, Pleias and ProRata have joined Wikimedia Enterprise, a commercial product that allows corporations to pay for large-scale access and distribution of Wikipedia content. Google and Amazon have long been partners of the platform, which was launched in 2021. The basic premise is that Wikimedia Enterprise customers can access content from Wikipedia at a higher volume and speed while helping sustain the platform's mission. "I think there's a growing understanding on the part of these A.I. companies about the significance of the Wikipedia dataset, both as it currently exists and also its need to exist in the future," said Becker. Wikipedia is hardly alone in this shift. News organizations, including CNN, the Associated Press and The New York Times, have struck licensing deals with A.I. companies to supply editorial content in exchange for payment, while infrastructure providers like Cloudflare offer tools that allow websites to charge A.I. crawlers for access. Last month, the licensing nonprofit Creative Commons announced its support of a "pay-to-crawl" approach for managing A.I. bots.

Preparing for an uncertain future

Wikipedia itself is also adapting to a younger generation of internet users. In an effort to make editing Wikipedia more appealing, the platform is working to enhance its mobile edit features, reflecting the fact that younger audiences are far more likely to engage on smartphones than desktop computers. Younger users' preference for social video platforms such as YouTube and TikTok has also pushed Wikipedia's Future Audiences team -- a division tasked with expanding readership -- to experiment with video. The effort has already paid off, producing viral clips on topics ranging from Wikipedia's most hotly disputed edits to the courtship dance of the black-footed albatross and Sino-Roman relations.
The organization is also exploring a deeper presence on gaming platforms, another major draw for younger users. Evolving with the times also means integrating A.I. further within the platform. Wikipedia has introduced features such as Edit Check, which offers real-time feedback on whether a proposed edit fits a page, and is developing features like Tone Check to help ensure articles adhere to a neutral point of view. A.I.-generated content has also begun to seep onto the platform. As of August 2024, roughly 5 percent of newly created English articles on the site were produced with the help of A.I., according to a Princeton study. Seeing this as a problem, Wikipedia introduced a "speedy deletion" policy that allows editors to quickly remove content that shows clear signs of being A.I.-generated. Still, the community remains divided over whether using A.I. for tasks such as drafting articles is inherently problematic, said Miller. "There's this active debate." From streamlining editing to distributing its content ever more widely, Wikipedia is betting that A.I. can ultimately be an ally rather than an adversary. If managed carefully, the technology could help accelerate the encyclopedia's mission over the next 25 years -- as long as it doesn't bring down the encyclopedia first. "Our whole thing is knowledge dissemination to anyone that wants it, anywhere that they want it," said Becker. "If this is how people are going to learn things -- and people are learning things and gaining value from the information that our community is able to bring forward -- we absolutely want to find a way to be there and support it in ways that align with our values."

Wikipedia celebrates its 25th anniversary confronting a fundamental challenge to its survival. The crowdsourced encyclopedia lost more than one billion visits between 2022 and 2025 as AI-powered search engines and chatbots increasingly summarize information without directing users to source sites. Meanwhile, the Wikimedia Foundation announced new AI licensing deals with Amazon, Meta Platforms, Microsoft, Perplexity, and Mistral AI to monetize the heavy bot traffic straining its infrastructure.

Wikipedia Confronts Declining Traffic in AI-Dominated Information Landscape

Wikipedia reached a milestone birthday on January 15, 2026, marking 25 years since Jimmy Wales and co-founder Larry Sanger launched what seemed like an improbable experiment: an encyclopedia that anyone could edit [1]. The platform evolved from a niche reference site into the ninth most visited site on the internet, with more than 65 million articles in 300 languages edited by some 250,000 volunteers [4]. Yet this anniversary arrives amid an existential threat from generative AI that challenges the very foundation of the human-edited platform.

The decline in Wikipedia page views tells a stark story. The Wikimedia Foundation reported that human traffic fell about 8 percent over certain months in 2025 compared with 2024 [1]. External analysis from consulting firm Kepios, using data from Similarweb, found the site lost more than one billion visits between 2022 and 2025 [1]. Researchers point to AI-powered search engines as a primary factor, with search engine AI overviews and chatbots now summarizing information instead of sending users to source sites by showing them links [4].

AI Licensing Deals Offer Revenue Path for Human-Curated Data

Facing this challenge, Wikipedia unveiled AI licensing deals with major technology companies including Amazon, Meta Platforms, Perplexity, Microsoft, and France's Mistral AI [4]. These agreements allow AI companies to access Wikipedia content "at a volume and speed designed specifically for their needs," though the Wikimedia Foundation did not disclose financial details [4]. The nonprofit previously signed Google as one of its first customers in 2022 [4].

Jimmy Wales expressed support for AI training on human-curated data, stating: "I'm very happy personally that AI models are training on Wikipedia data because it's human curated. I wouldn't really want to use an AI that's trained only on X, you know, like a very angry AI" [4]. However, Wales emphasized that AI companies "should probably chip in and pay for your fair share of the cost that you're putting on us" [4]. Visits from bots, sometimes disguised to evade detection, have been heavily taxing Wikipedia's servers as they scrape masses of content to feed large language models [5].

Human Editors Battle AI Slop and Maintain Verifiability Standards

The platform's trust infrastructure relies on human editors who enforce policies around verifiability and neutrality through citations, edit histories, and talk pages [1]. This system emerged from early disputes over coverage of evolution, climate change, and health topics that shaped Wikipedia's credibility [1]. Now editors report encountering AI-written additions and plausible citations that fail verification checks, as models trained on human writing generate new text that pollutes the web as AI slop [3].

The emergence of Grokipedia, Elon Musk's AI-powered alternative, intensifies the competitive pressure. Launched on October 27, 2025, Grokipedia already has more than 5.6 million entries compared with Wikipedia's total of over 7.1 million [3]. Unlike Wikipedia, Grokipedia entries cannot be directly edited, though registered users can suggest corrections for the AI to consider [3].

Web 2.0 Ideals Meet Infrastructure Costs in AI Era

Wikipedia embodied the Web 2.0 dream when it launched in 2001, representing "harnessing collective intelligence" through platforms built on participation rather than passive consumption [3]. The site carries no advertising and does not trade in users' data, maintaining its claim of editorial independence [3]. The bulk of funding comes from 8 million donors, most of them individuals [4]. The Wikimedia Foundation's total assets now stand at more than $310 million [3].

Wikimedia Foundation CEO Maryana Iskander, who is stepping down on January 20 to be replaced by Bernadette Meehan, noted the infrastructure challenge: "But our infrastructure is not free, right?" It costs money to maintain servers and other infrastructure that allows both individuals and tech companies to "draw data from Wikipedia," she said [4]. Wales emphasized that donors are "not donating in order to subsidize these huge AI companies" [4].

Looking ahead, the Wikimedia Foundation has outlined an AI strategy that could benefit volunteers. While AI isn't capable of writing Wikipedia entries from scratch, it could reduce tedious work for editors by updating dead links or evolving the search experience from traditional keyword methods to a more conversational chatbot style [5]. Wales envisions users asking questions and receiving quoted answers with direct paragraph references [5]. What remains uncertain is whether Wikipedia's model of transparency and collaborative editing can sustain relevance when fewer people visit, cite, or contribute to the platform that AI systems increasingly depend upon for training data.

Summarized by Navi
