X open sources Grok-powered algorithm as regulatory pressure mounts globally

3 Sources

[1]

X open sources its algorithm: 5 ways businesses can benefit

Elon Musk's social network X (formerly known as Twitter) last night released some of the code and architecture of its overhauled social recommendation algorithm on GitHub under a permissive, enterprise-friendly open source license (Apache 2.0) that allows commercial usage and modification. This is the algorithm that decides which X posts and accounts to show to which users on the social network. Unlike the manual heuristic rules and legacy models of the past, the new X algorithm is based on a "Transformer" architecture powered by Grok, the AI language model from X's parent company, xAI.

This is a significant release for enterprises that have brand accounts on X, or whose leaders and employees use X to post promotional messages, links, and other company content. It offers a look at how X evaluates posts and accounts on the platform, and at the criteria it uses when deciding whether to show a post or a specific account to users. It is therefore imperative for any business using X for promotional and informational content to understand how the X algorithm works as well as it can, in order to get the most out of the platform.

To analogize: imagine trying to hike through a massive forest without a map. You'd likely get lost and waste time and energy (resources) trying to reach your destination. With a map, you could plot your route, look for the appropriate landmarks, check your progress along the way, and revise your path as needed to stay on track. By open sourcing its new transformer-based recommendation algorithm, X is in many ways providing just such a "map" to everyone who uses the platform, showing how they (and their brands) can achieve the best performance possible.

Here is the technical breakdown of the new architecture and five data-backed strategies to leverage it for commercial growth.

The "Red Herring" of 2023 vs. The "Grok" Reality of 2026

In March 2023, shortly after Musk acquired it, the company (then still called Twitter) also open sourced its recommendation algorithm. However, that release revealed a tangled web of "spaghetti code" and manual heuristics, and it was criticized by outlets like Wired (where my wife works, full disclosure) and organizations including the Center for Democracy and Technology as too heavily redacted to be useful. It was seen as a static snapshot of a decaying system.

The code released on January 19, 2026, confirms that the spaghetti is gone. X has replaced the manual filtering layers with a unified, AI-driven Transformer architecture. The system uses a RecsysBatch input model that ingests user history and action probabilities to output a raw score. It is cleaner, faster, and infinitely more ruthless.

But there is a catch: the specific "weighting constants," the magic numbers that tell us exactly how much a Like or Reply is worth, have been redacted from this release.

Here are the five strategic imperatives for brands operating in this new, Grok-mediated environment.

1. The "Velocity" Window: You Have 30 Minutes to Live or Die

In the 2023 legacy code, content drifted through complex clusters, often finding life hours after posting. The new Grok architecture is designed for immediate signal processing. Community analysis of the new Rust-based scoring functions reveals a strict "Velocity" mechanic: the lifecycle of a corporate post is determined in its first half-hour. If engagement signals (clicks, dwells, replies) fail to exceed a dynamic threshold in the first 15 minutes, the post is mathematically unlikely to break into the general "For You" pool.

The takeaway for business data leads is to coordinate internal comms and advocacy programs with military precision. "Employee advocacy" can no longer be asynchronous. If your employees or partners engage with a company announcement two hours later, the mathematical window has likely closed.
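To make the described mechanic concrete, here is a minimal Python sketch that models the "Velocity" window as a simple count of engagement events inside the first 15 minutes. It is not taken from X's repository: the `EngagementEvent` class, the `passes_velocity_check` function, and the threshold value are hypothetical, and the real threshold is described only as dynamic and undisclosed.

```python
from dataclasses import dataclass

# Hypothetical model of the "Velocity" mechanic; not code from X's repository.
# The 15-minute window comes from the analysis above; the real threshold is
# dynamic and undisclosed, so the caller supplies an illustrative value.
EARLY_WINDOW_MINUTES = 15.0


@dataclass
class EngagementEvent:
    kind: str                  # e.g. "click", "dwell", "reply"
    minutes_after_post: float  # when the engagement happened


def passes_velocity_check(events: list[EngagementEvent], threshold: int,
                          window: float = EARLY_WINDOW_MINUTES) -> bool:
    """True if the number of events inside the early window exceeds the threshold."""
    early = sum(1 for e in events if 0 <= e.minutes_after_post <= window)
    return early > threshold


# Same five replies, different timing.
coordinated = [EngagementEvent("reply", m) for m in (2, 4, 5, 9, 12)]
late = [EngagementEvent("reply", m) for m in (120, 125, 130, 140, 150)]
print(passes_velocity_check(coordinated, threshold=3))  # True
print(passes_velocity_check(late, threshold=3))         # False
```

Under this simplified model, the same five replies count in full when they arrive within minutes of posting and not at all when they arrive two hours later, which is the practical argument for synchronized employee advocacy.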
You must front-load engagement in the first 10 minutes to artificially spike the velocity signal.

2. The "Reply" Trap: Why Engagement Bait Is Dead

In 2023, data suggested that an author replying to comments was a "cheat code" for visibility. In 2026, this strategy has become a trap. While early analysis circulated rumors of a "75x" boost for replies, developers examining the new repository have confirmed that the actual weighting constants are hidden. More importantly, X's Head of Product, Nikita Bier, has explicitly stated that "Replies don't count anymore" for revenue sharing, a move designed to kill "reply rings" and spam farms. Bier clarified that replies only generate value if they are high-quality enough to generate "Home Timeline impressions" on their own merit.

Given this, businesses should stop optimizing for "reply volume" and start optimizing for "reply quality." The algorithm is actively hostile toward low-effort engagement rings. Businesses and individuals should not reply incessantly to every comment with emojis or generic thanks; they should reply only when the response adds enough value to stand alone as a piece of content in a user's feed.

3. X Is Basically Pay-to-Play Now

The 2023 algorithm treated X paid subscription status as one of many variables. The 2026 architecture simplifies this into a brutal base-score reality. Code analysis reveals that before a post is evaluated for quality, the account is assigned a base score. X accounts that are "verified" by paying for a monthly "Premium" subscription ($3 per month for an individual Premium Basic account, $200 per month for businesses) receive a significantly higher ceiling (up to +100) than unverified accounts, which are capped at +55.

Therefore, if your brand, executives, or key spokespeople are not verified (X Premium or Verified Organizations), you are competing with a handicap.
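The reported base-score ceilings can be illustrated as a simple clamp. This is a hypothetical sketch: only the two caps (+100 for verified, +55 for unverified) come from the community analysis cited above, while the `base_score` function and the clamping approach are assumptions for illustration, not X's code.

```python
# Hypothetical illustration: only the two ceilings come from the reporting;
# the function name and the clamping approach are assumptions.
VERIFIED_CAP = 100
UNVERIFIED_CAP = 55


def base_score(raw: float, is_verified: bool) -> float:
    """Clamp a hypothetical raw account score to the ceiling for its tier."""
    cap = VERIFIED_CAP if is_verified else UNVERIFIED_CAP
    return min(raw, cap)


# An equally strong account hits a lower ceiling without verification.
print(base_score(90, is_verified=True))   # 90
print(base_score(90, is_verified=False))  # 55
```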
For a business looking to acquire customers or leads via X, verification is a mandatory infrastructure cost that removes a programmatic throttle on your reach.

4. The "Report" Penalty: Brand Safety Requires De-escalation

The Grok model has replaced complex "toxicity" rules with a simplified feedback loop. While the exact weight of a user filing a "Report" against your X post or account for objectionable or false material is hidden in the new config files, it remains the ultimate negative signal. In a system driven by AI probabilities, a "Report" or "Block" signal trains the model to permanently dissociate your brand from that user's entire cluster.

In practice, this means "rage bait" and controversial takes are now incredibly dangerous for brands. It takes only a tiny fraction of users using the "Report" function to tank a post's visibility entirely. Your content strategy must prioritize engagement that excites users enough to reply, but never enough to report.

5. OSINT as a Competency: Watch the Execs, Not Just the Repo

The most significant takeaway from today's release is what is missing. The repository provides the architecture (the "car"), but it hides the weights (the "fuel"). As X user @Tenobrus noted, the repo is "barebones" regarding constants. This means you cannot rely solely on the code to dictate strategy. You must triangulate the code with executive communications: when Bier announces a change to "revenue share" logic, you must assume it mirrors a change in the "ranking" logic.

Data decision makers should therefore assign a technical lead to monitor both the xai-org/x-algorithm repository and the public statements of the engineering team. The code tells you *how* the system thinks; the executives tell you *what* it is currently rewarding.

Summary: The Code Is the Strategy

The Grok-based transformer architecture is cleaner, faster, and more logical than its predecessor. It does not care about your legacy or your follower count.
It cares about Velocity and Quality.

The Winning Formula:

1. Verify to secure the base score.
2. Front-load engagement to survive the 30-minute velocity check.
3. Avoid "spammy" replies; focus on standalone value.
4. Monitor executive comms to fill in the gaps left by the code.

In the era of Grok, the algorithm is smarter. Your data and business strategy using X ought to be, too.

[2]

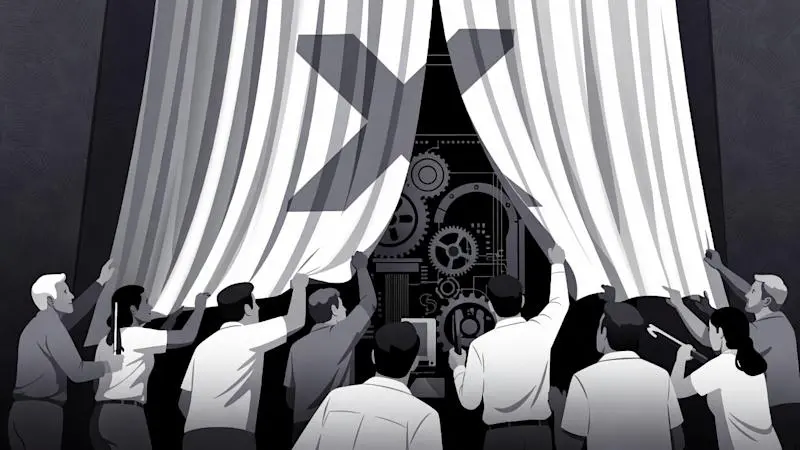

Elon Musk's X Open-Sources Grok-Powered Algorithm Driving 'For You' Feed

The release follows owner Elon Musk's pledge to update the algorithm every four weeks, accompanied by comprehensive developer notes.

Elon Musk's social media platform X delivered on its promise to pull back the curtain on one of social media's most closely guarded secrets Tuesday, releasing the machine learning architecture that determines what posts appear in users' feeds.

"We have open-sourced our new 𝕏 algorithm, powered by the same transformer architecture as xAI's Grok model," X's engineering team tweeted.

"We know the algorithm is dumb and needs massive improvements, but at least you can see us struggle to make it better in real-time and with transparency," Musk tweeted following the release. "No other social media companies do this."

The release follows through on a pledge Musk made last week, when he posted that he would "make the new X algorithm, including all code used to determine what organic and advertising posts are recommended to users, open source in 7 days." He promised updates would be "repeated every 4 weeks, with comprehensive developer notes, to help you understand what changed."

The GitHub repository details a Grok-based transformer model that ranks posts in X's "For You" feed by predicting user actions like likes and replies. It uses end-to-end machine learning without hand-engineered features and is built in Rust and Python for modular retrieval and scoring. The algorithm retrieves content from two sources, in-network posts from accounts users follow and out-of-network posts discovered through ML-based retrieval, and combines both through a scoring system that predicts engagement probabilities for each post.

Midhun Krishna M, co-founder and CEO of LLM cost tracker TknOps.io, said the open-source release could change industry standards. "By exposing the Grok-based transformer architecture, X is essentially handing developers a blueprint to understand, and potentially improve upon, recommendation systems that have been black boxes for years," he told Decrypt. "This level of transparency could force other platforms to follow suit or explain why they won't."

"Creators can learn what works and adjust without blindly gaming the system, while clearer incentives benefit regular users and lead to better content," he added.

When asked whether the open-source code could help users determine what makes posts go viral, Grok itself analyzed the algorithm and identified five key factors: engagement predictions based on user history for likes and reposts; content novelty and relevance, with timely, personalized posts scoring higher; diversity scoring that limits repeated authors; a balance between followed accounts and ML-suggested posts; and negative signals from blocks and mutes that lower scores.

X under scrutiny

The release comes amid heightened scrutiny of X's AI initiatives. Last week, X revoked API access for InfoFi projects that rewarded users for platform engagement, with Head of Product Nikita Bier declaring the company would "no longer allow apps that reward users for posting on X" due to AI-generated spam concerns.

Recently, X restricted Grok's image generation and editing features to paid subscribers only and implemented technical measures to prevent editing images of real people after the chatbot was used to create non-consensual sexualized images, including those of minors, prompting regulators around the world to open investigations that could lead to enforcement action.

[3]

xAI's turbulent week: Open source code, a 120M euro fine, and global Grok bans

Deepfake crisis triggers Grok bans as X reveals algorithm code

It has been a defining week for Elon Musk's "everything app," but perhaps not the one he intended. On Tuesday, January 20, X (formerly Twitter) officially open-sourced its recommendation algorithm, fulfilling a long-standing promise of transparency. Yet this technical milestone arrives in the middle of a regulatory storm, sandwiching the company between a massive €120 million fine from the European Union and outright service bans in Indonesia and Malaysia caused by Grok's safety failures.

For observers of the tech industry, the juxtaposition is stark: X is voluntarily opening its "black box" code to the public while simultaneously being punished by global governments for what that black box produces.

Inside "Phoenix"

The code release on GitHub offers the first concrete look at X's new architectural core, internally dubbed "Phoenix." The most significant revelation is the complete erasure of the old Twitter. According to the documentation, X has eliminated "every single hand-engineered feature" from its ranking system. The manual boosts for video content, the penalties for external links, and the complex web of if-then rules that governed the timeline for a decade are gone.

In their place is a Grok-based transformer model. This marks a shift from "heuristic" ranking (rules written by humans) to "probabilistic" ranking (predictions made by AI). The new system relies on two main components:

* Thunder: A pipeline for fetching in-network posts (from accounts you follow).
* Phoenix Retrieval: A vector-based system that finds out-of-network content by matching semantic similarities.

Both feed into the Phoenix Scorer, a model derived directly from the Grok-1 architecture.
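A rough way to picture how the two components feed one candidate pool is sketched below. The function names, the toy two-dimensional embeddings, and the use of cosine similarity for the out-of-network step are assumptions for illustration; X's actual Thunder and Phoenix Retrieval code is not reproduced here.

```python
import math

# Hypothetical two-source candidate retrieval: a Thunder-like step keeps posts
# from followed accounts, and a Phoenix-Retrieval-like step ranks the remaining
# posts by embedding similarity to the user. Embeddings are toy vectors.


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def in_network(posts: list[dict], following: set[str]) -> list[dict]:
    return [p for p in posts if p["author"] in following]


def out_of_network(posts: list[dict], following: set[str],
                   user_vec: list[float], k: int) -> list[dict]:
    others = [p for p in posts if p["author"] not in following]
    others.sort(key=lambda p: cosine(user_vec, p["embedding"]), reverse=True)
    return others[:k]


def candidates(posts: list[dict], following: set[str],
               user_vec: list[float], k: int = 2) -> list[dict]:
    # Both sources are merged into one pool that a scorer would then rank.
    return in_network(posts, following) + out_of_network(posts, following, user_vec, k)


posts = [
    {"id": 1, "author": "alice", "embedding": [1.0, 0.0]},
    {"id": 2, "author": "bob", "embedding": [0.9, 0.1]},
    {"id": 3, "author": "carol", "embedding": [0.0, 1.0]},
    {"id": 4, "author": "dave", "embedding": [0.7, 0.7]},
]
pool = candidates(posts, following={"alice"}, user_vec=[1.0, 0.0], k=2)
print([p["id"] for p in pool])  # [1, 2, 4]
```

In this toy run, the followed author's post is always included, while the out-of-network slots go to the posts whose embeddings point in the same direction as the user's.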
Instead of following a human rule like "promote tweets with images," the model analyzes a user's sequence of historical actions to predict the probability of 15 different future interactions, ranging from a "Like" to a "Block."

Musk himself described the current iteration as "dumb" and in need of massive improvement, framing the open-source release as a way to "crowdsource" optimization. However, moving to a pure-AI model could make the platform less transparent in practice: while we can see the code that trains the model, we cannot see the billions of weights inside the model that actually decide why a specific post goes viral.

The €120 million "transparency" fine

While X invites developers to inspect its code, European regulators have penalized it for hiding critical data. The European Commission's €120 million (approx. $140 million) fine, levied under the Digital Services Act (DSA), focuses on three specific transparency failures that contradict Musk's "open book" narrative.

First are the deceptive "blue checks," which the EU ruled a "dark pattern." By allowing anyone to purchase verification without ID checks, the platform deceives users about the authenticity of accounts, a violation of DSA consumer protection rules. Second, regulators found that X failed to provide a searchable, transparent archive of advertisements, making it impossible for researchers to track disinformation campaigns or malicious ads. Third, the fine cited X's refusal to grant academic researchers access to public data, effectively blinding external watchdogs.

The deepfake crisis: Grok bans in Asia

Perhaps the most damaging development for X's reputation this week is the tangible harm caused by its AI tools. While the EU fine is bureaucratic, the bans in Southeast Asia are visceral reactions to safety failures.
Both Indonesia and Malaysia have temporarily blocked access to Grok (and by extension, parts of the X Premium experience) following a surge in non-consensual sexualized images (NCII). Users exploited Grok's image generation capabilities to "digitally undress" women and minors, creating deepfake pornography that spread rapidly on the platform. Unlike the text-based controversies of the past, this involves the direct generation of illegal content.

The Indonesian Ministry of Communication and Informatics cited a "complete lack of effective guardrails" in Grok 2.0. The UK's communications regulator, Ofcom, has also launched a formal investigation, threatening similar blocks if safety protocols aren't immediately overhauled.

X is attempting a high-wire act. By open-sourcing "Phoenix," it hopes to win back the trust of the technical community and prove its commitment to free speech and transparency. But code on GitHub does not absolve a platform of its tangible impact. As long as the "dumb" algorithm amplifies deepfakes and the "verified" badge deceives users, X remains in a precarious position: technically open, but functionally broken.


Elon Musk's X released the code behind its new AI-driven recommendation algorithm on GitHub, revealing a Grok-based transformer architecture that decides what users see. The move toward transparency arrives amid a €120 million EU fine for deceptive practices and service bans in Indonesia and Malaysia over deepfake content generated by Grok.

X Algorithm Reveals Grok-Powered Transformation

Elon Musk's social network X has released the code and architecture of its overhauled social recommendation algorithm under an Apache 2.0 open source license on GitHub, allowing commercial usage and modification [1]. The X algorithm, which determines what posts and accounts appear in users' feeds, now relies on a transformer architecture powered by xAI's Grok AI language model rather than the manual heuristic rules that previously governed the platform [1].

Source: VentureBeat

"We have open-sourced our new 𝕏 algorithm, powered by the same transformer architecture as xAI's Grok model," X's engineering team announced [2]. Elon Musk himself acknowledged the system's shortcomings, tweeting that "the algorithm is dumb and needs massive improvements, but at least you can see us struggle to make it better in real-time and with transparency" [2]. He pledged to update the algorithm every four weeks with comprehensive developer notes.

How the Machine Learning Algorithm Works

The GitHub repository details a complete architectural overhaul internally dubbed "Phoenix." According to the documentation, X has eliminated "every single hand-engineered feature" from its ranking system [3]. The new system retrieves content from two sources, in-network posts from accounts users follow and out-of-network posts discovered through machine learning-based retrieval, and combines both through a scoring system that predicts engagement probabilities for each post [2].

The architecture includes Thunder, a pipeline for fetching in-network posts, and Phoenix Retrieval, a vector-based system that finds out-of-network content by matching semantic similarities [3]. Both feed into the Phoenix Scorer, a model derived directly from the Grok-1 architecture that analyzes a user's sequence of historical actions to predict the probability of 15 different future interactions.
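One common way to turn several predicted interaction probabilities into a single ranking score is a weighted sum, with negative weights for signals such as blocks and reports. The sketch below assumes that approach and uses only five of the 15 interactions for brevity. Because X redacted its real weighting constants, every weight here is a made-up placeholder, and `PLACEHOLDER_WEIGHTS` and `weighted_score` are hypothetical names.

```python
# Hypothetical weighted-sum scorer over predicted interaction probabilities.
# X redacted its real weights, so these numbers are placeholders; only the
# sign convention (positive actions add, "block"/"report" subtract) follows
# the reporting.
PLACEHOLDER_WEIGHTS = {
    "like": 1.0,
    "reply": 2.0,
    "repost": 1.5,
    "block": -20.0,
    "report": -50.0,
}


def weighted_score(probs: dict[str, float], weights: dict[str, float]) -> float:
    """Sum of weight * probability; actions without a weight contribute nothing."""
    return sum(weights.get(action, 0.0) * p for action, p in probs.items())


calm = {"like": 0.30, "reply": 0.05, "repost": 0.04, "block": 0.001, "report": 0.0}
rage = {"like": 0.25, "reply": 0.20, "repost": 0.06, "block": 0.01, "report": 0.02}
print(round(weighted_score(calm, PLACEHOLDER_WEIGHTS), 2))  # 0.44
print(round(weighted_score(rage, PLACEHOLDER_WEIGHTS), 2))  # -0.46
```

Under these placeholder weights, the post that draws four times as many replies still ranks lower once a small probability of reports is priced in, which mirrors the brand-safety concern about "rage bait."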

Source: Decrypt

Critical Insights for Business Strategy

Community analysis of the open-source code reveals a strict "Velocity" mechanic that determines post performance within the first 30 minutes [1]. If engagement signals like clicks, dwells, and replies fail to exceed a dynamic threshold in the first 15 minutes, posts are mathematically unlikely to reach the general For You feed [1]. This represents a fundamental shift in content strategy for brands operating on the platform.

Grok itself analyzed the algorithm and identified five key factors driving visibility: engagement predictions based on user history for likes and reposts; content novelty and relevance, with timely, personalized posts scoring higher; diversity scoring that limits repeated authors; a balance between followed accounts and ML-suggested posts; and negative signals from blocks and mutes that lower scores [2].

Notably, X's Head of Product Nikita Bier stated that "Replies don't count anymore" for revenue sharing, a change designed to kill "reply rings" and spam farms [1]. Replies only generate value if they are high-quality enough to generate "Home Timeline impressions" on their own merit, fundamentally changing user engagement tactics.

Transparency Meets Regulatory Backlash

The open-source release arrives during intense regulatory scrutiny. The European Commission levied a €120 million fine under the Digital Services Act, citing three specific transparency failures [3]. Regulators found that X's blue check system operates as a "dark pattern" by allowing anyone to purchase verification without ID checks, deceiving users about account authenticity. The platform also failed to provide a searchable, transparent archive of advertisements and refused to grant academic researchers access to public data [3].

Source: Digit


AI Initiatives Face Safety Crisis

Both Indonesia and Malaysia temporarily blocked access to Grok following a surge in non-consensual sexualized images, with users exploiting Grok's image generation capabilities to create deepfake pornography [3]. The Indonesian Ministry of Communication and Informatics cited a "complete lack of effective guardrails" in Grok 2.0, while the UK's communications regulator, Ofcom, launched a formal investigation threatening similar blocks.

Last week, X revoked API access for InfoFi projects that rewarded users for platform engagement, with Bier declaring the company would "no longer allow apps that reward users for posting on X" due to AI-generated spam concerns [2]. The platform also restricted Grok's image generation and editing features to paid subscribers only and implemented technical measures to prevent editing images of real people.

Industry Impact on Recommendation Systems

Midhun Krishna M, co-founder and CEO of LLM cost tracker TknOps.io, suggested the release could change industry standards. "By exposing the Grok-based transformer architecture, X is essentially handing developers a blueprint to understand, and potentially improve upon, recommendation systems that have been black boxes for years," he told Decrypt. "This level of transparency could force other platforms to follow suit or explain why they won't."

However, the shift to a pure-AI model raises questions about practical transparency. While developers can inspect the code that trains the model, they cannot see the billions of weights inside the model that actually decide why specific posts go viral [3]. The specific weighting constants that reveal exactly how much a Like or Reply is worth have been redacted from this release [1], limiting the practical utility for brand safety and content strategy optimization.
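Why the redacted constants matter can be shown with a toy example. Assuming a weighted-sum scorer (an assumption, as are all the numbers and the hypothetical `first_ranked` helper), two plausible weight sets rank the same pair of posts in opposite order, so the code alone cannot tell a brand which post the live system would favor.

```python
# Hypothetical scorer and made-up numbers: X redacted its real weights, so this
# only shows that the ranking depends on values outsiders cannot see.
def weighted_score(probs: dict[str, float], weights: dict[str, float]) -> float:
    return sum(weights.get(action, 0.0) * p for action, p in probs.items())


post_a = {"like": 0.40, "reply": 0.02}  # like-heavy post
post_b = {"like": 0.20, "reply": 0.10}  # reply-heavy post


def first_ranked(weights: dict[str, float]) -> str:
    """Return which post ("A" or "B") a weighted-sum scorer would rank first."""
    return "A" if weighted_score(post_a, weights) > weighted_score(post_b, weights) else "B"


weights_1 = {"like": 1.0, "reply": 1.0}  # replies worth the same as likes
weights_2 = {"like": 1.0, "reply": 5.0}  # replies worth five likes

print(first_ranked(weights_1))  # A
print(first_ranked(weights_2))  # B
```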

Summarized by Navi
