Adobe Launches Content Authenticity App to Protect Creators and Combat AI-Generated Fakes

9 Sources

[1]

Adobe wants to create a robots.txt styled indicator for images used in AI training | TechCrunch

For years, websites have used a robots.txt file to tell crawlers which parts of a site they are not allowed to access. Adobe, which wants to create a similar standard for images, has added a tool to content credentials intended to give creators a bit more control over what is used to train AI models. Convincing AI companies to actually adhere to Adobe's standard may be the primary challenge, especially considering AI crawlers are already known to ignore requests in the robots.txt file. Content credentials are information in a media file's metadata used to identify authenticity and ownership. They are an implementation of the standard from the Coalition for Content Provenance and Authenticity (C2PA). Adobe is releasing a new web tool to let creators attach content credentials to image files, even if they are not created or edited through its own tools. Plus, it's providing a way for creators to signal to AI companies that they shouldn't use a particular image for training models. Adobe's new web app, called Adobe Content Authenticity App, lets users attach their credentials, including name and social media accounts, to a file. Users can attach these credentials to up to 50 JPG or PNG files in one go. Adobe is partnering with LinkedIn to make use of the Microsoft-owned platform's verification program. This helps prove that the person attaching the credentials to an image has a verified name on LinkedIn. Users can also attach their Instagram or X profiles to an image, but there is no integration with those platforms' verification. The same app lets users tick a box to signal that their images shouldn't be used for model training. While the field is present in the app, and subsequently in an image's content credentials metadata, Adobe hasn't signed an agreement with any of the AI model creators to adopt this standard.
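For comparison, here is roughly how a crawler consumes the robots.txt mechanism the article refers to, using Python's standard-library `urllib.robotparser`. The rules, the `GPTBot` user agent and the URLs are illustrative assumptions, not anything Adobe publishes. The sketch shows the key weakness the article points out: the check only happens if the crawler chooses to run it, which is the same compliance gap Adobe's do-not-train signal faces.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt: block one AI crawler, allow everyone else.
# The rules and the "GPTBot" user agent are example values only.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def crawler_may_fetch(user_agent: str, url: str) -> bool:
    """Return True if the rules above permit user_agent to fetch url.

    Nothing enforces this: a crawler that never calls it can fetch anyway.
    """
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    image_url = "https://example.com/photos/tent.jpg"
    print(crawler_may_fetch("GPTBot", image_url))       # False
    print(crawler_may_fetch("Mozilla/5.0", image_url))  # True
```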
The company said it's in talks with all top AI model developers to convince them to use and respect this standard. Adobe's intention to give model makers an indicator for AI training data is well placed, but the initiative won't work if the companies don't agree to the standard or don't respect the indicator. Last year, Meta's implementation of labels to auto-tag images on its platform caused an uproar as photographers complained about their edited images being tagged with a "Made with AI" label. Meta later changed the label to "AI info." This development highlighted that while Meta and Adobe are both part of the C2PA steering committee, implementation differs across platforms. Andy Parsons, Senior Director of the Content Authenticity Initiative at Adobe, said the company built the new content credential app with creators. Given that regulations around copyright and AI training data are scattered across the world, the company wants to give creators a way to signal their intent about AI platforms with the app. "Content creators want a simple way to indicate that they don't want their content to be used for gen AI training. We have heard from small creators and agencies that they want more control over their creations [in terms of AI training on their content]," Parsons told TechCrunch. Adobe is also releasing a Chrome extension for users to identify images with content credentials. The company said the content credentials app uses a mix of digital fingerprinting, open source watermarking, and cryptographic metadata to embed information in an image's pixels, so even if the image is modified, the credentials stay intact. This means users can use the Chrome extension to check content credentials on platforms like Instagram that don't natively support the standard. Users will see a small "CR" symbol on an image if it has content credentials attached.
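The fingerprinting idea mentioned above, recomputing a compact code from an image's pixels and matching it even after the image has been modified, can be illustrated with a classic average hash. This is a simplified stand-in, not Adobe's algorithm: it assumes the image has already been decoded and downscaled to an 8x8 grayscale grid, and the 10-bit match threshold is an arbitrary illustrative choice.

```python
from typing import List

Grid = List[List[int]]  # grayscale pixel values, 0-255


def average_hash(grid: Grid) -> int:
    """Compute a 64-bit average hash from an 8x8 grayscale grid.

    Each bit is 1 if the pixel is brighter than the grid's mean, else 0.
    """
    pixels = [p for row in grid for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bits that differ between two hashes."""
    return bin(a ^ b).count("1")


def is_probable_match(a: int, b: int, threshold: int = 10) -> bool:
    """Treat two images as the same content if their hashes are close.

    The threshold is an illustrative assumption, not a published value.
    """
    return hamming_distance(a, b) <= threshold


if __name__ == "__main__":
    original = [[(x + y) * 16 for x in range(8)] for y in range(8)]
    brightened = [[min(255, p + 20) for p in row] for row in original]  # an "edited" copy
    inverted = [[255 - p for p in row] for row in original]  # different content
    h = average_hash(original)
    print(is_probable_match(h, average_hash(brightened)))  # True
    print(is_probable_match(h, average_hash(inverted)))    # False
```

Because the hash compares each pixel with the image's own mean, a uniform brightness change leaves it untouched, which is the kind of robustness that lets a lookup service find credentials for a re-uploaded copy.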
In a world where there is a lot of debate around AI and art, Parsons says C2PA doesn't believe in opining on or directing what is art. But he believes content credentials could be an important marker for ownership. "There is the grey area [of when an image is edited using AI, but it is not 100% AI-generated], and what we are saying is to allow artists and creators to sign their work and claim attribution for it. This doesn't mean the IP is legitimate or it is copyrightable, but just indicates that someone made it," Parsons said. Adobe said that while its new tool is designed for images, it wants to add support for video and audio down the line as well.

[2]

Adobe and LinkedIn Are Teaming Up to Help Creators Verify Images' Authenticity

Katelyn is a writer with CNET covering social media, AI and online services. She graduated from the University of North Carolina at Chapel Hill with a degree in media and journalism. You can often find her with a novel and an iced coffee during her time off. Adobe's next step forward in its content authenticity efforts is here. The Adobe content authenticity app is now in public beta, available for anyone to try. With the app, you can attach content credentials to all your digital images and photos. Content credentials are a kind of invisible digital signature that's added after a project is finished. Right now, you can add them to images, with support for videos and audio coming soon. Besides your name, content credentials can include your social media handles, personal website and can disclose any AI usage. You can also use these credentials to signify that you don't want your work to be used to train AI models. One of the best parts about the app is that you don't need a Creative Cloud subscription to use it. So even if you don't want to pay for Adobe programs, you can quickly sign up for a free Adobe account and use the app to create content credentials and apply them to your digital work. Creators who post their work online know that it's all too easy for people to steal, misattribute or erase the original creator from a piece of work. That's where the new partnership with LinkedIn comes in to give content credentials a little more security. Currently, LinkedIn offers three types of verifications on its platform: identity, workplace and educational. You likely already have at least one if you're a semiregular LinkedIn user. You can get a workplace verification by using your work email, or you can get an identity verification using a form of government-issued ID. LinkedIn's new "Verified on LinkedIn" program will help people use these verifications in other corners of the internet. 
If you're verified on LinkedIn, those credentials will appear in your Adobe content credentials account. You'll also be able to apply your LinkedIn verifications on TrustRadius, G2 and UserTesting. "Using Verified on LinkedIn, users will be able to use the verifications they've completed on LinkedIn to show who they are across the different online platforms they use, boosting trust, confidence and credibility," Oscar Rodriguez, vice president of trust at LinkedIn, said in a statement. When you're inside the content authenticity app, you can batch apply credentials to up to 50 images at a time -- a highly requested feature that came out of the private beta, Andy Parsons, senior director of content authenticity at Adobe, told CNET in an interview. You can also use the content credentials app to inspect tags added to other images. The content credential browser extension is also available, if you want the ability to view credentials wherever you scroll online. Content provenance, or how we know where a piece of content originates, is more important than ever in the age of AI. The content credential app is the result of Adobe's involvement with a larger group called the Coalition for Content Provenance and Authenticity, or C2PA. The group advocates for an open technical standard to help people easily see where an image, video or another piece of content came from. Other members include Google, Meta and OpenAI -- all heavyweights in the generative AI market. LinkedIn is now also joining, but its parent company, Microsoft, is a longtime member.

[3]

This Free Adobe App Will Safeguard Your Photo's Authenticity. I'm All In on That

Katie is a UK-based news reporter and features writer. Officially, she is CNET's European correspondent, covering tech policy and Big Tech in the EU and UK. Unofficially, she serves as CNET's Taylor Swift correspondent. You can also find her writing about tech for good, ethics and human rights, the climate crisis, robots, travel and digital culture. She was once described as a "living synth" by London's Evening Standard for having a microchip injected into her hand. Almost a decade ago I was sitting in a cafe in Berlin when a flyer for a local music festival caught my eye. It wasn't the lineup that had drawn my attention, but rather a picture of a bell tent decorated in fairy lights selling people on the pricey glamping offering. I knew immediately that it was false advertising. How? Because the picture was mine. I'd taken the photo not in Germany, not at a festival, but at a small family-owned campsite on the northeast coast of England. I'd published the photo on a travel blog I ran at the time, and it made its way to Pinterest and from there to the cafe in Berlin. In the here and now, any creative work we put online is more at risk than ever, not just from straightforward theft, but from being used to train the AI that increasingly dominates our world. This is why Adobe, whose tools are used by creatives working across many artistic mediums, has invested so heavily in providing people with ways to retain ownership of their work even when it's been published online for the whole world to access. At the Adobe Max Creativity Conference in London on Thursday, the company announced that it had released its Content Authenticity app, first announced back in October, for anyone to download. The app allows you to attach a digital watermark to your creative work that links it to your name and public profiles, and, crucially, allows you to say if you don't want your content to be used to train AI.
It's clear that as much as Adobe wants to protect the work of its customers, it's thinking much bigger than that. Adobe's content authenticity standards are already attached to work made using its platforms, so this standalone app isn't about boosting its own business. Crucially, and unlike almost all other Adobe tools, you don't need a Creative Cloud account to download the app, and it's free. "It truly is for anybody," said Andy Parsons, senior director of Adobe's content authenticity initiative, in an interview. "Everyone should have that sort of last-mile ability to have attribution for their work." Parsons showed me how, via a browser plug-in, you can see the content authenticity signature of photos posted to Instagram. Previously this was something that only professionals might be able to do to their images, but now the option is open for anyone to take advantage of. And I firmly believe they should. I know I will. I don't consider myself to be a content creator, but I do publish photos I take on CNET and I post regularly on my public Instagram. I've already had my photos taken and used for commercial purposes - if it can happen to me, it could happen to anyone. The idea that my photos or videos would be used to train AI is something I like even less. Talking with creators at Adobe Max, I found genuine excitement about the Content Authenticity app. "Discoverability is how people like me get work," said photographer, writer and content creator Jon Devo. He's more comfortable sharing his best work on the internet when he's able to ensure it can be linked back to him even if it's picked up by aggregator accounts. "There's stuff that I've created over the last five, six years that I was hesitant to share, because I was like, if this picks up, and if people like it and people share it, it's never going to be connected back to me," he said. 
"I already have a bank of content that I can start sharing once these things become ubiquitous - so that's what, for me, makes it game-changing." Devo feels encouraged by the fact Adobe had partnered with other major tech companies to create its content credential standards. The open standard is key for widespread adoption, says Parsons. Increasingly it's being baked directly into our tech, such as the Samsung Galaxy S25. But for the rest of us who don't have content credentials ready to go on our phones, there's the new app. Even though to me, and to content creators like Devo, the stakes feel higher than ever, not everyone will be convinced they need the protection offered by content attribution. I asked Parsons what he would say to persuade someone to download the app. "The world is changing very quickly," he said. "It's incontrovertibly true that you're going to want to have certain expressions attached to your content." "Instead of saying, why do it? My question back would be, why not?"

[4]

Is that image real or AI? Now Adobe's got an app for that - here's how to use it

Now available as a public beta, Adobe's new Adobe Content Authenticity app lets creators apply Content Credentials metadata to protect their work. The growing popularity of AI image generators has created a two-pronged problem: Creators struggle to protect their work from being used to train the models, while the rest of us struggle to distinguish between what's real and what's generated. Adobe's Content Authenticity app seeks to solve the issue on both fronts. On Thursday, Adobe announced its free Adobe Content Authenticity app would be available as a public beta. With the app, creators can apply Content Credentials, a secure type of cryptographic metadata that includes the content's vital ingredients, such as the creator's name, the date and time of creation, and the tools used in their work. Adobe's Content Credentials stay throughout the entire lifecycle of the work, even if a screenshot of the content is taken, giving creators the peace of mind that their content will always be properly attributed. The creator is in complete control of what is attached to their digital work, including their verified name, which is confirmed through LinkedIn, and links to their social media accounts. Another important element is being able to indicate whether you, as a creator, want your work used for generative AI training and usage. Most generative AI text-to-image models are trained by scraping the web for all of its visual content, which means that an artist's work can then be reproduced in other works by future users of the image generator. With Content Credentials, users can only signal their preferences. Even though some companies may choose to ignore the signal, the hope is that they will take into account the creator's wishes. Since Content Credentials stay with the work forever, as legislation around the matter evolves and opt-out regulations are deployed, the user preference may come into play.
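The "secure" part of this metadata means the attached fields are cryptographically bound to the exact content, so changing either one is detectable. The sketch below illustrates that property only under stated assumptions: real Content Credentials follow the C2PA specification and use certificate-based public-key signatures, whereas this uses a shared-secret HMAC from Python's standard library, and the field names (`creator`, `created`, `tools`, `ai_training`) and key are hypothetical. It also covers only embedded metadata; surviving screenshots relies on the watermarking and fingerprinting layers, not on this.

```python
import hashlib
import hmac
import json

# Hypothetical key; a real system would use a private key bound to a certificate.
SIGNING_KEY = b"demo-key-not-for-production"


def _payload(image_bytes: bytes, fields: dict) -> bytes:
    """Serialize the content hash and the fields deterministically."""
    record = {
        "content_sha256": hashlib.sha256(image_bytes).hexdigest(),
        "fields": fields,
    }
    return json.dumps(record, sort_keys=True).encode("utf-8")


def sign_manifest(image_bytes: bytes, fields: dict) -> dict:
    """Return a manifest binding the fields to this exact content."""
    tag = hmac.new(SIGNING_KEY, _payload(image_bytes, fields), hashlib.sha256).hexdigest()
    return {"fields": fields, "signature": tag}


def verify_manifest(image_bytes: bytes, manifest: dict) -> bool:
    """True only if neither the content nor the fields changed since signing."""
    expected = hmac.new(
        SIGNING_KEY, _payload(image_bytes, manifest["fields"]), hashlib.sha256
    ).hexdigest()
    return hmac.compare_digest(expected, manifest["signature"])


if __name__ == "__main__":
    image = b"stand-in image bytes"
    manifest = sign_manifest(image, {
        "creator": "Jane Example",  # hypothetical creator
        "created": "2025-01-15",
        "tools": ["camera"],
        "ai_training": "notAllowed",
    })
    print(verify_manifest(image, manifest))  # True
    tampered = dict(manifest, fields=dict(manifest["fields"], creator="Someone Else"))
    print(verify_manifest(image, tampered))  # False
    print(verify_manifest(image + b"edit", manifest))  # False
```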
Using the Adobe Content Authenticity app, adding Content Credentials is as easy and intuitive as adding regular metadata. Most importantly, users can batch-apply Content Credentials to up to 50 JPG or PNG files at a time, regardless of whether they were made with Adobe apps or not. To double-check an image's authenticity and learn more about its Content Credentials, you can use the Inspect tool within the Adobe Content Credentials web application or the Content Authenticity extension for Google Chrome. The process is as easy as dragging and dropping the content you want to learn more about. Then the app will immediately tell you if Content Credentials were found or if there were any matches online. Adobe says it is working toward support for larger file sizes and more media types, such as video and audio. The broader plan is to integrate the Creative Cloud apps, such as Photoshop and Adobe Lightroom, with the Content Authenticity app to create one hub for managing Content Credentials across different apps. Lastly, LinkedIn will expand its support for Content Credentials in the upcoming months by including the credentials applied on the Content Authenticity app on its platform. As seen in the image above, photos uploaded with Content Credentials will appear with a "Cr" pin, which a user can then hover over to view the details, such as the creator's name.

[5]

Adobe's new app helps credit creators and fight AI fakery

Jess Weatherbed is a news writer focused on creative industries, computing, and internet culture. Jess started her career at TechRadar, covering news and hardware reviews. Adobe has a new tool that makes it easier for creatives to be reliably credited for their work, even if somebody takes a screenshot of it and reposts it across the web. The Content Authenticity web app launching in public beta today allows invisible, tamper-resistant metadata to be embedded into images and photographs to help identify who owns them. The new web app was initially announced in October and builds on Adobe's Content Credentials attribution system. Artists and creators can attach information directly into their work, including links to their social media accounts, websites, and other attributes that can be used to identify them online. The app can also track the editing history of images, and lets creatives signal that they don't want AI models trained on them. For additional security, Adobe's Content Authenticity app and Behance portfolio platform -- which can also be embedded within Content Credentials -- allow creators to authenticate their identity via LinkedIn verification. That should make it harder for people to link Content Credentials to fake online profiles, but given LinkedIn isn't exactly known for its creative community (yet), it's also a likely dig at X. Then known as Twitter, X was previously one of the founding members behind Adobe's Content Authenticity Initiative in 2019, before withdrawing from the partnership and transforming its verification system into a paid subscription reward under Elon Musk's ownership. The Content Authenticity web app is "currently free" while in beta, according to Adobe, though the company hasn't mentioned if this will change when it becomes generally available. All you need is an Adobe account (which doesn't require you to have an active Creative Cloud subscription).
Any images you want to apply Content Credentials to don't need to have been edited or created using one of Adobe's other apps. While Adobe apps like Photoshop can already embed Content Credentials into images, the Content Authenticity web app not only gives users more control over what information to attach, but also enables up to 50 images to be tagged in bulk rather than individually. Only JPEG and PNG files are supported for now, but Adobe says that support for larger files and additional media, including video and audio, is "coming soon." Creators can also use the app to apply tags to their work that signal to AI developers that they don't have permission to use it for AI training. This is far more efficient than opting out with each AI provider directly -- which usually requires protections to be applied to each image individually -- but there's no guarantee that these tags will be acknowledged or honored by every AI company. Adobe says it's working with policymakers and industry partners to "establish effective, creator-friendly opt-out mechanisms powered by Content Credentials." For now, it's one protection of many that users can apply to their work to prevent AI models from training on it, alongside systems like Glaze and Nightshade. Andy Parsons, Senior Director of Content Authenticity at Adobe, told The Verge that third-party AI protections are unlikely to interfere with Content Credentials, so creatives can apply both to the same work. The Content Authenticity app isn't just for creative professionals, however, as it allows anyone to see if images they find online have Content Credentials applied, just like the Content Authenticity extension for Google Chrome that launched last year. The web app's inspect tool will recover and display Content Credentials even if image hosting platforms have wiped them, alongside editing history where available, which can reveal whether generative AI tools were used to make or manipulate the image.
The bonus is that the Chrome extension and inspection tool don't rely on third-party support, making content easy to authenticate on platforms where images are routinely shared without attribution. With increasingly accessible AI editing apps also making manipulations harder to detect, Adobe's Content Authenticity tools may also help to prevent some people from being misled by convincing online deepfakes.

[6]

Adobe's New App Protects Your Creations From AI, and I Hope It Sticks

At its London Max conference, Adobe announced several new AI models, including Firefly Image Model 4, Firefly Image Model 4 Ultra, and the Firefly Video Model, all of which promise to make AI-generated content more realistic than ever. The company also said that its products will now be able to use generative AI models from other big players in the space, including Google and OpenAI. But one announcement at the event stood out to me, as its intention is not to foster unbridled AI content generation, but rather to rein it in. The company introduced the free Adobe Content Authenticity app, which lets creators protect their work by adding secure attribution metadata. It verifies the creator's identity and social media accounts and adds a signal as to whether AI models should use the work for training purposes. The app works by attaching metadata described by the Content Authenticity Initiative, which Adobe spearheaded and which has support from members like The Associated Press, Canon, Shutterstock, and The Wall Street Journal. I fully believe that this initiative is just as important as all the new AI models that can create ever-more-realistic images and video, and I don't see much about it from other AI companies. It reminds me of Mozilla's Do Not Track initiative for browsers from a decade ago, which was meant to protect user privacy. I desperately hope this content authenticity drive succeeds where Do Not Track failed, as it's the only way artists and digital creators can thrive in the age of AI.
Why Is Content Authenticity Important?
Adobe is smart to get involved with this issue. After all, its customers, the artists and designers who use Photoshop, Illustrator, and Premiere Pro, are among those most worried about AI stealing their work. At the London Max event, Adobe's VP of product marketing for Creative Cloud Deepa Subramaniam said, "One of the biggest concerns we hear from the creative community today is the misuse and representation of their work online."
She positioned the Content Authenticity labels as the modern, secure equivalent of the way artists used to sign their work. When PCMag's senior security analyst Kim Key dug into this issue, she wrote that the Content Credential metadata "reveal[s] cryptographic data that verifies the validity of an image and confirms when and how the content was created." Andy Parsons, Adobe's Content Authenticity director, told Key that "math doesn't lie, people do." This makes a lot of sense. Previously, you could only apply the Content Credential metadata if you had an expensive Leica camera or exclusively used Adobe's Creative Cloud applications. The new app, now in public beta, allows anyone to apply them. The system applies a "pin" or clickable icon to view info about the content's provenance and any subsequent edits.
Can the Content Authenticity App Succeed?
There's still one problem with the system: adoption. The Content Credentials pin that the app applies is by no means universal. PCMag senior reporter Emily Forlini argues that the other AI vendors, including Apple, Google, and Microsoft, need to label their AI creations as such, and I couldn't agree more. This was the downfall of the aforementioned Do Not Track initiative and the Electronic Frontier Foundation's follow-up. Both went nowhere because big tech didn't embrace them. Google, in particular, just about single-handedly killed Do Not Track by dissuading users from even turning it on in Chrome. Websites didn't respect the Do Not Track signal either, presumably in the interest of ad profits. This all seems awfully similar to how AI trains on artistic works without permission. I can only hope that Adobe's Content Authenticity initiative doesn't suffer the same fate. Some rays of hope include the company's involvement with other interested parties. Subramaniam notes that Content Authenticity leverages LinkedIn's Verified on LinkedIn feature to let creators verify their identity in the new app, for instance.
But AI vendors still need to buy in. That includes Google, Meta, Microsoft, OpenAI, and especially AI image generators like Midjourney and Stable Diffusion. Unfortunately, Subramaniam had nothing to say on that front. If the big AI vendors don't respect Adobe's "do not train" tag, the initiative could be for naught. Let's hope history doesn't repeat itself, and that artists and designers get the protections they deserve.
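What "respecting the tag" would mean on an AI vendor's side can be sketched as a filter in a training-data pipeline. The manifest layout below is a hypothetical simplification loosely modeled on the training-and-data-mining assertion in the C2PA specification; the keys `training_mining` and `ai_training` and the `"notAllowed"` value are assumptions for illustration, not the exact on-disk format. Note the default: an image with no credentials, or with credentials stripped, passes the filter, which is why the signal only protects creators if vendors opt in to checking it.

```python
from typing import Iterable, List, Optional


def allows_ai_training(manifest: Optional[dict]) -> bool:
    """Return False only when a manifest explicitly opts out of AI training.

    Missing credentials or a missing preference are treated as "no signal",
    a policy choice made by whoever runs the pipeline.
    """
    if manifest is None:
        return True
    preference = manifest.get("training_mining", {}).get("ai_training", {})
    return preference.get("use") != "notAllowed"


def filter_training_set(items: Iterable[dict]) -> List[str]:
    """Keep the ids of items whose credentials do not opt out of training."""
    return [item["id"] for item in items if allows_ai_training(item.get("manifest"))]


if __name__ == "__main__":
    crawled = [
        {"id": "a.jpg", "manifest": {"training_mining": {"ai_training": {"use": "notAllowed"}}}},
        {"id": "b.jpg", "manifest": {"training_mining": {"ai_training": {"use": "allowed"}}}},
        {"id": "c.png", "manifest": None},  # credentials stripped or never added
    ]
    print(filter_training_set(crawled))  # ['b.jpg', 'c.png']
```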

[7]

Why Photographers Should Care About the New Content Authenticity App

Adobe just released the public beta of its free Content Authenticity app, and photographers should take notice. With technology moving so quickly right now, especially that of artificial intelligence, photographers face increasing challenges getting proper attribution for their work and proving that their images are the real article -- not AI. Adobe has gotten a lot of heat in the past couple of years for many good reasons, but one thing we can hopefully agree on is that Adobe's Content Authenticity Initiative is a noble -- and increasingly important -- part of the broader Adobe ecosystem. With Adobe Content Authenticity, creators can apply Content Credentials to all of their digital work. These credentials are secure metadata that allows the creator of a file to share information about themselves and their work. Essentially, it works like a signature, digitally providing attribution to yourself as the creator. However, it also does so much more. Adobe writes, "One thing we've heard consistently in our conversations with creators is that they struggle to secure proper attribution for their work online. The concerns highlight a critical gap: Creators need a reliable way to verify their identities and receive credit for what they produce. Without this, creators risk losing control of their work, missing future opportunities, or, worse, watching others use or profit from their work without their consent. "To address this, we're excited to collaborate with LinkedIn to integrate its new Verified on LinkedIn feature into the Content Authenticity app (as well as the Behance platform), giving creators a way to attach their verified identity to their work. As an extension of this collaboration, LinkedIn has joined the Adobe-led Content Authenticity Initiative as a member, joining over 4,500 members committed to driving the widespread adoption of Content Credentials." 
This move is driven by the Content Authenticity Initiative (CAI) along with the Coalition for Content Provenance and Authenticity (C2PA), a consortium of over 100 companies working together to create technology with secure metadata, invisible and undetectable watermarking, and breakthrough digital fingerprinting. This type of content credential is known as "durable," and remains securely connected throughout the life cycle of content even if a screenshot is taken.
Faced with a newly chaotic media landscape made up of generative AI and other heavily manipulated content, alongside authentic real photographs, video, and audio, it is becoming increasingly difficult to know what to trust. The promise of Content Credentials is that they can combine secure metadata, undetectable watermarks, and content fingerprinting to offer the most comprehensive solution for expressing content provenance for audio, video, and images.
Secure metadata: This is verifiable information about how content was made that is baked into the content itself, in a way that cannot be altered without leaving evidence of alteration. A Content Credential can tell us about the provenance of any media or composite. It can tell us whether a video, image, or sound file was created with AI or captured in the real world with a device like a camera or audio recorder. Because Content Credentials are designed to be chained together, they can indicate how content may have been altered, what content was combined to produce the final content, and even what device or software was involved in each stage of production. The various provenance bits can be combined in ways that preserve privacy and enable creators, fact checkers, and information consumers to decide what's trustworthy, what's not, and what may be satirical or purely creative.
Watermarking: This term is often used in a generic way to refer to data that is permanently attached to content and hard or impossible to remove.
For our purposes here, I specifically refer to watermarking as a kind of hidden information that is not detectable by humans. It embeds a small amount of information in content that can be decoded using a watermark detector. State-of-the-art watermarks can be impervious to alterations such as the cropping or rotating of images or the addition of noise to video and audio. Importantly, the strength of a watermark is that it can survive rebroadcasting efforts like screenshotting, pictures of pictures, or re-recording of media, which effectively remove secure metadata.
Fingerprinting: This is a way to create a unique code based on pixels, frames, or audio waveforms that can be computed and matched against other instances of the same content, even if there has been some degree of alteration. Think of the way your favorite music-matching service works, locating a specific song from an audio sample you provide. The fingerprint can be stored separately from the content as part of the Content Credential. When someone encounters the content, the fingerprint can be re-computed on the fly and matched against a database of Content Credentials and their associated stored fingerprints. The advantage of this technique is it does not require the embedding of any information in the media itself. It is immune to information removal because there is no information to remove.
So, we have three techniques that can be used to inform consumers about how media came to be. If each of these techniques were robust enough to ensure the availability of rich provenance no matter where the content goes, we would have a versatile set of measures, each of which could be applied where optimal and as appropriate.
In addition to providing digital signatures, another key aspect of Content Credentials is that this metadata will also provide provenance for the origins of the image beyond just who the creator is and how that content was created.
When generative AI models are applied to a file, that information will be relayed and noted in the file's credentials. Next, Content Credentials can be used to signal preferences to other generative AI models, which is crucial for creators who do not want their content used by AI. Adobe explains, "With our Firefly family of models, we've taken the most creator-friendly approach to AI, training only on content we have permission to use. However, not all generative AI models follow this same approach and we believe there's more the industry can do to support and protect creators. With the Generative AI Training and Usage Preference, creators can use Content Credentials to signal to other generative AI models that they don't want their content used for training -- helping to lay the foundation for creator choice. Thanks to the durability of Adobe's Content Credentials, creators who note their training preference today via the app are well-positioned to have it recognized when global opt-out regulations take shape and as more companies start respecting creator preferences. Adobe is working closely with policymakers and industry partners to establish effective, creator-friendly opt-out mechanisms powered by Content Credentials." Viewing of Content Credentials will initially be done by creators and consumers using the content authenticity extension for Google Chrome or the inspect tool within the app. As a new service just entering its public beta today, Adobe shared that it looks to integrate the content authenticity app, "with Creative Cloud apps that support Content Credentials like Photoshop, Adobe Lightroom and more, ultimately making it the centralized hub for creators managing their Content Credentials preferences across Adobe apps." As to applying credentials to images, Adobe explains, "Creators can now apply Content Credentials to up to 50 JPG or PNG files at once -- whether the content was made with Adobe apps or not. 
It's a fast, intuitive way to add Content Credentials to both new and legacy content. Support for larger files and more media types, including video and audio, is coming soon." This marks a massive step toward stemming image theft, providing a thread back to the original creator when an image is shared online, as well as transparency about whether artificial intelligence was used in an image's creation. Adobe also shared that other companies are looking to support Content Credentials as the format takes fledgling steps towards becoming the industry standard. "In the coming months, LinkedIn also plans to expand its support for Content Credentials by displaying the attribution information attached with the Content Authenticity app directly on its platform (see mock up above). This will effectively connect 'trust signals' across platforms, meaning if a photographer uploads a photo to the Content Authenticity app and signs it with Content Credentials, including their verified identity, LinkedIn will display the credentials via a 'Cr' pin when they share it -- allowing audiences to scroll over and view the credentials with the creator's verified name, effectively affirming attribution," Adobe says. The hope is that, with LinkedIn taking the first step in supporting Content Credentials, other social media platforms and websites will follow suit. For photographers like us, this collaboration between Adobe, the Content Authenticity Initiative, C2PA and the companies working with them finally looks like a tangible step toward protecting our work online in what feels like the Wild West of photo theft and AI imagery, where viewers can no longer tell what is even real.
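Like a robots.txt file, the training preference is only a signal that a well-behaved crawler has to read and honour voluntarily. A minimal Python sketch of such a crawler-side check follows. It assumes a manifest that has already been extracted and verified into a plain dictionary; the assertion label and entry key are modeled on the training-and-data-mining assertion in the C2PA specification, but treat the exact strings, the dictionary layout and the `may_train_on` helper as hypothetical rather than as Adobe's format.

```python
from typing import Any, Dict

# Hypothetical strings modeled on the C2PA training-and-data-mining assertion.
TRAINING_LABEL = "c2pa.training-mining"
GENERATIVE_TRAINING_KEY = "c2pa.ai_generative_training"


def may_train_on(manifest: Dict[str, Any], default_when_absent: bool = True) -> bool:
    """Decide whether a crawler should use an image for generative AI training.

    Nothing in the file enforces this: like robots.txt, it only works if the
    crawler chooses to run a check like this one.
    """
    for assertion in manifest.get("assertions", []):
        if assertion.get("label") != TRAINING_LABEL:
            continue
        entries = assertion.get("data", {}).get("entries", {})
        use = entries.get(GENERATIVE_TRAINING_KEY, {}).get("use")
        if use is not None:
            # "allowed" permits training; "notAllowed" and anything else
            # (e.g. "constrained") are treated as a no in this sketch.
            return use == "allowed"
    # No preference recorded: the fallback is the crawler's own policy choice.
    return default_when_absent


opted_out = {
    "assertions": [
        {
            "label": TRAINING_LABEL,
            "data": {"entries": {GENERATIVE_TRAINING_KEY: {"use": "notAllowed"}}},
        }
    ]
}
print(may_train_on(opted_out))  # False
print(may_train_on({"assertions": []}))  # True
```

Treating "constrained" as a no is a conservative simplification; a real consumer would need to read whatever constraints the creator attached. What to assume when no preference is present is exactly the kind of question the opt-out regulations Adobe mentions would have to settle.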

[8]

You can now try Adobe's new app to digitally sign your artwork for free

First announced in October, Adobe's Content Authenticity app is now in public beta, and anyone can try it for free. The app allows people to add "Content Credentials" to their digital work -- invisible and secure metadata that shares information about the creator. Unlike a watermark, it can't simply be edited out with AI, and it still works even when someone screenshots the original file. You can add various information to your Content Credentials, such as your name (which can be verified via LinkedIn) and your social media accounts. You can also express your preferences toward generative AI training. This is an experimental feature aiming to get a head start on future AI regulation that Adobe hopes will respect the creator's choice regarding training data. Once you've verified your name and connected your social media accounts, you can attach your credentials to images in batches of up to 50 files. At the moment, it only works with JPG and PNG files, but it doesn't matter whether you made them with Adobe apps or not. The company also says support for different types of media such as video and audio will be coming soon. If you want to view the Content Credentials for an image you have, you can add Adobe's extension for Chrome or upload your file in the web app. According to Adobe, LinkedIn will also start displaying Content Credentials on its platform, so you can click any applicable image you see to look at an artist's information. The authentication system will eventually come to other Adobe apps as well, making it easy to "digitally sign" anything you create using Adobe products. If you have any photos or artwork you want to sign, you can head to the web app now to set up your profile and start attaching your credentials to your work. The beta is currently available for free, but we don't know yet if the pricing will change once the app launches officially.
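The batch limits described above can be pictured with a small client-side helper. This Python sketch is hypothetical: it is not part of Adobe's app, the `split_batches` name is invented, and accepting the `.jpeg` extension alongside `.jpg` is an assumption. It groups supported files into batches of at most 50 and sets aside anything else, such as video, which the beta does not yet accept.

```python
from pathlib import PurePath
from typing import List, Tuple

MAX_BATCH = 50  # files per batch in the public beta, per the article
SUPPORTED = {".jpg", ".jpeg", ".png"}  # ".jpeg" is an assumption


def split_batches(paths: List[str]) -> Tuple[List[List[str]], List[str]]:
    """Return (batches of supported files, unsupported files that were skipped)."""
    supported = [p for p in paths if PurePath(p).suffix.lower() in SUPPORTED]
    skipped = [p for p in paths if PurePath(p).suffix.lower() not in SUPPORTED]
    batches = [supported[i:i + MAX_BATCH] for i in range(0, len(supported), MAX_BATCH)]
    return batches, skipped


files = [f"photo_{i:03}.jpg" for i in range(120)] + ["cover.PNG", "clip.mp4"]
batches, skipped = split_batches(files)
print([len(b) for b in batches], skipped)  # [50, 50, 21] ['clip.mp4']
```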

[9]

The new Content Authenticity web app launches at Adobe MAX London: can it solve one of AI art's biggest problems?

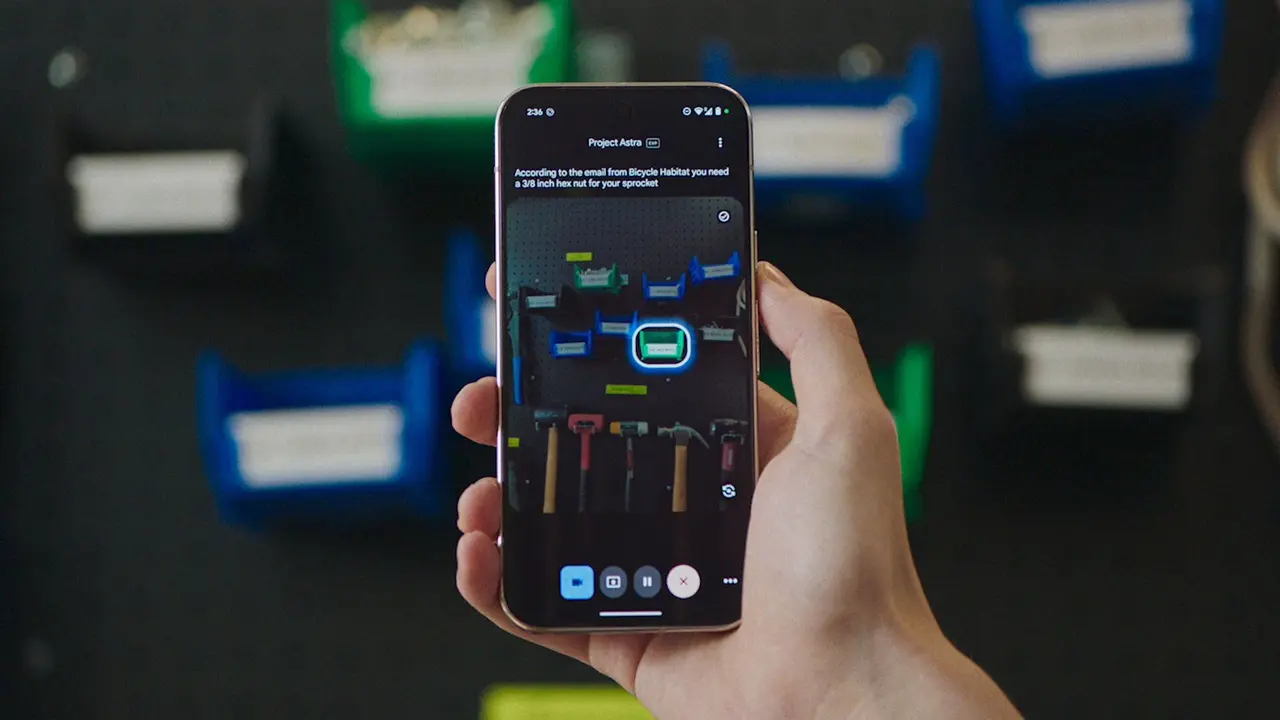

As AI art and AI-generated images rapidly become the norm across many creative industries, questions of ownership, consent, and authenticity have taken centre stage. It's why Adobe has put so much time into creating the Content Authenticity 'nutrition label', which now has a web app everyone can use. Adobe, a longtime champion of creative professionals but also the developer of its own 'ethical' AI model, Firefly, has had its critics over the last couple of years, but with new tools that aim to redefine how digital content is attributed, verified and used, it hopes to please both pro artists and its new 'casual' creatives. In theory, with this one tool, Adobe hopes to draw a line in the sand that will, from here on out, arm artists with knowledge of how an image was created and, eventually, how it can or can't be used. (We've seen in Project Know How the impressive way this tech can be implemented to track image ownership.) The Adobe Content Authenticity web app and the robust system of Content Credentials behind it, part of a broader initiative the company believes could become a global standard for digital provenance, are at least a step in the right direction for artists fearful of AI and its impact. Andy Parsons, Senior Director of the Content Authenticity Initiative at Adobe, sat down with me to explain how the new Content Authenticity web app will equip creators with something long overdue: real agency over their work in an increasingly AI-dominated world. "This is akin to an artist signing their work," Andy explains. "Just like a painter or photographer might sign a physical piece, this allows creators to digitally sign their work with verified credentials." With Adobe's new free web application, Adobe Content Authenticity, anyone with a Creative Cloud account can upload an image and sign it. This signature isn't a watermark or a logo.
It's a cryptographic, verifiable identity tag that tells the world who made it, where it originated, and, crucially, what the creator wants done with it. These Content Credentials serve as a digital "nutrition label" that can travel with the content wherever it's shared online. The idea is a good one, even if it does rely on people, and studios, to adopt it as a standard. Initially available for images, this technology is built on an open standard (C2PA) developed in collaboration with companies like Google, Sony, Meta and OpenAI. Video and audio support is on the horizon, suggesting the potential for a cross-medium shift in how digital ownership and intent are shown and used more widely. (It's part of Adobe's broader plan to tackle AI and copyright protection.) One of the most useful tools found in Content Credentials is the ability for artists to indicate their preference regarding generative AI training. As Andy tells me, this marks the start of a system where "someone can sign their work and indicate an AI training and usage preference that, over time, we think will be respected". Importantly, this isn't about impeding training with technical tricks like data poisoning. Instead, Adobe is betting on a standards-based opt-in/opt-out approach tied directly to the media asset itself. If adopted widely by platforms and regulators, it could become a cornerstone of future AI development practices, one where creator consent is a requirement, not an afterthought. Adobe is in frequent conversation with policymakers in the EU, UK, and elsewhere about how regulations could formalise this kind of creator-driven consent model. "We think this method of connecting the opt-out of training to the media that follows it wherever it goes is the simplest and most preferred way to do that," says Andy. But there are many 'ifs' and 'buts' to the wider use of Content Credentials, since the system relies on AI models and platforms voluntarily playing along.
But (there's another pesky 'but'), it feels like the first step on a tall ladder to a place where artists can feel more empowered. Adding another layer of trust is Adobe's integration of verified identities. Digital artists and creators can now attach their real names, social handles, and even LinkedIn-verified credentials to their signed content. This helps solve the critical "who made this?" problem in a world where generative AI can mimic nearly any style or voice. Adobe plans to deepen this system further by integrating with platforms like Behance Pro, its freelancing and licensing marketplace. The combination of verified credentials and content usage preferences could unlock new opportunities in licensing, jobs and even monetisation. The success of this initiative hinges not only on Adobe's tools but on adoption by platforms and other major players. The good news? It's already happening. According to Andy, Google is one major platform that has begun showing Content Credentials in YouTube and Google Image Search. "They're adopting the standard quite rapidly," says Andy, who remains optimistic about broader platform buy-in, though he admits that, for now, such platforms won't host the Content Credentials app natively. That said, Meta, OpenAI and others are also participating in the C2PA steering committee, signalling a growing recognition that attribution and ethical use are not fringe concerns. They are central to the future of content creation, and given how these platforms depend on creators and artists for content, perhaps it's only a matter of time before they are forced to adopt Content Credentials as a standard. Still, Parsons acknowledges that progress won't happen overnight. "This is like building a two-sided marketplace," he says. "You need the supply side - creators and tools - and the consumption side - platforms and browsers that display this data meaningfully."
While some might view Adobe's move as a response to criticism over AI tools like Firefly, Andy makes it clear this is not about winning back trust. "This is a tool that our creators have asked for," he states. "We're not charging anything for this. We think it needs to exist." In fact, Adobe's plan to embed a centralised preferences hub across Creative Cloud apps shows just how deeply it's integrating creator and artist feedback. He tells me that whether they're editing a PSD file in Photoshop or working in Lightroom or Adobe Express, users can set and carry their preferences across the ecosystem. I joke about being a 'lazy artist' who wants this kind of thing done in a click: set up once and carried across all exported files. And that's just how Content Credentials can work. Ultimately, Adobe's Content Authenticity Initiative isn't just about labelling what's AI-made or human-made; it's about giving creators back their voice in a digital landscape increasingly shaped by algorithms and mistrust. "I don't think we're forcing anyone's hand," Andy tells me earnestly. "We believe that human creativity should be enhanced, not eclipsed, by AI, and creators deserve the right to decide how their work is used." With Content Credentials and the new web app, Adobe is laying the groundwork for a more ethical, transparent and creator-empowering future, one where attribution and AI can truly coexist. That will, for many, still sound like a future they don't want to be a part of, as, in their view, AI 'art' shouldn't exist and human-made art should take precedence. But at least this could, if taken up widely, help prevent art being used to train AI models, or at least empower artists to identify when, where and how their work has been 'scraped'. For creatives navigating this new AI world, Content Credentials could be more than a neat feature; they could be a lifeline.
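Parsons's description of a "cryptographic, verifiable identity tag" boils down to two steps: bind the creator's claims to the exact content, then sign that bundle so any tampering is detectable. The Python sketch below illustrates both under loudly stated assumptions. Real Content Credentials are signed with public-key certificates so that anyone can verify them; to stay within the standard library, this sketch swaps in an HMAC with a shared secret key, which detects tampering but can only be checked by someone holding that key. The field names, `make_credential`, `verify_credential` and the demo key are all hypothetical.

```python
import hashlib
import hmac
import json
from typing import Any, Dict


def _tag(claim: Dict[str, Any], key: bytes) -> str:
    # Canonical JSON so the same claim always produces the same bytes.
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    # HMAC stands in for a real public-key signature in this sketch.
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def make_credential(image: bytes, creator: Dict[str, Any], key: bytes) -> Dict[str, Any]:
    """Bind creator claims to the image bytes, then tag the bundle."""
    claim = {
        "creator": creator,
        "image_sha256": hashlib.sha256(image).hexdigest(),  # binding to these exact bytes
    }
    return {"claim": claim, "signature": _tag(claim, key)}


def verify_credential(image: bytes, cred: Dict[str, Any], key: bytes) -> bool:
    """True only if neither the image nor the claim changed since tagging."""
    claim = cred["claim"]
    if claim["image_sha256"] != hashlib.sha256(image).hexdigest():
        return False  # image bytes were altered
    return hmac.compare_digest(_tag(claim, key), cred["signature"])


key = b"demo-key-not-for-real-use"  # hypothetical shared secret
image = b"\xff\xd8 stand-in for JPEG bytes"
cred = make_credential(image, {"name": "Jane Doe", "linkedin_verified": True}, key)
print(verify_credential(image, cred, key))         # True
print(verify_credential(image + b"!", cred, key))  # False
```

Because the binding here is an exact hash of the file's bytes, any re-encode or screenshot breaks it, which is why Content Credentials also lean on the watermarking and fingerprinting techniques covered earlier, both of which can survive such changes.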


Adobe releases a free web app that allows creators to attach Content Credentials to their work, helping to verify authenticity, protect against AI training, and ensure proper attribution.

Adobe Introduces Content Authenticity App

Adobe has launched a new free web application called the Adobe Content Authenticity App, now available in public beta. This tool aims to address the growing concerns surrounding image authenticity and creator rights in the age of AI-generated content [1][2][3].

Key Features of the Content Authenticity App

The app allows creators to attach Content Credentials, a form of secure cryptographic metadata, to their digital images. These credentials include:

- Creator's name and social media accounts

- Time and date of creation

- Tools used in the work

- Option to signal whether the image can be used for AI training

Importantly, users can batch-apply these credentials to up to 50 JPG or PNG files at once, regardless of whether they were created using Adobe tools [1][4].

Verification and LinkedIn Partnership

To enhance credibility, Adobe has partnered with LinkedIn for identity verification. Creators can link their LinkedIn profiles to their Content Credentials, providing an additional layer of authenticity [2][4].

Protecting Against AI Training

The app includes a feature allowing creators to indicate their preference against their work being used for AI model training. While this doesn't guarantee compliance from all AI companies, it establishes a clear signal of the creator's intent [1][3].

Broader Implications for Content Authenticity

The Content Authenticity Initiative, of which this app is a part, represents a significant step in addressing issues of digital content provenance. It aims to:

- Help creators protect their work from unauthorized use

- Assist consumers in distinguishing between authentic and AI-generated content

- Potentially influence future legislation around content rights and AI training data [3][5]


Technology Behind Content Credentials

Adobe combines digital fingerprinting, open-source watermarking, and cryptographically signed metadata. The invisible watermark is embedded in the image pixels and the fingerprint is computed from them, so Content Credentials can still be recovered even if the image is modified or its metadata is stripped [1].
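One way to picture how these three layers complement each other is a lookup that falls back from the most exact signal to the most forgiving one. The Python sketch below is an assumption-laden illustration, not Adobe's published design: the asset is modeled as a dictionary that may carry an embedded manifest, a decoded watermark ID and a precomputed integer fingerprint, and `recover_credentials`, the two lookup stores and the 10-bit distance threshold are hypothetical.

```python
from typing import Any, Dict, Optional, Tuple


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two integer fingerprints."""
    return bin(a ^ b).count("1")


def recover_credentials(
    asset: Dict[str, Any],
    manifests_by_id: Dict[str, Dict[str, Any]],
    fingerprints_by_id: Dict[str, int],
    max_distance: int = 10,
) -> Tuple[Optional[str], Optional[Dict[str, Any]]]:
    """Return (method, manifest), trying the most exact signal first."""
    # 1. Signed metadata still attached to the file.
    if asset.get("manifest") is not None:
        return "metadata", asset["manifest"]
    # 2. Metadata stripped, but an invisible watermark decodes to an ID
    #    that points at a stored copy of the manifest.
    wm = asset.get("watermark_id")
    if wm in manifests_by_id:
        return "watermark", manifests_by_id[wm]
    # 3. No watermark either: compare the fingerprint with stored ones.
    fp = asset.get("fingerprint")
    if fp is not None:
        best = min(fingerprints_by_id.items(), key=lambda kv: hamming(fp, kv[1]), default=None)
        if best is not None and hamming(fp, best[1]) <= max_distance:
            return "fingerprint", manifests_by_id.get(best[0])
    return None, None


manifests = {"cred-123": {"creator": "Jane Doe"}}
fingerprints = {"cred-123": 0b11110000}
screenshot = {"fingerprint": 0b11110001}  # metadata stripped, no watermark decoded
print(recover_credentials(screenshot, manifests, fingerprints))
# ('fingerprint', {'creator': 'Jane Doe'})
```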

Accessibility and Future Plans

The Content Authenticity app is currently free and doesn't require a paid Adobe Creative Cloud subscription. Adobe plans to expand support to larger file sizes and additional media types, including video and audio [4][5].

Industry Impact and Adoption

While Adobe's initiative is promising, its effectiveness will largely depend on widespread adoption by both creators and AI companies. The company says it is in talks with major AI model developers to encourage them to respect these standards, but it has not yet signed an agreement with any of them [1].

As the digital landscape continues to evolve, tools like the Content Authenticity app may become increasingly crucial in maintaining trust and protecting creators' rights in the online world.

References

Summarized by Navi

[1]
