Adobe Unveils Firefly Image 5 with Custom Models and Multi-Platform AI Integration at Max 2025

18 Sources

[1]

Adobe Firefly Image 5 brings support for layers, will let creators make custom models | TechCrunch

Adobe said on Tuesday that it is launching the latest iteration of its image generation model, Firefly Image 5. The company is also adding more features to the Firefly website, support for more third-party models, and the ability to generate speech and soundtracks. Notably, the update allows artists to come up with their own image models using their existing art. The Image 5 model can now work at native resolutions of up to 4 megapixels, a massive increase from the previous-generation model, which could natively generate images at 1 megapixel but then would upscale them to 4 megapixels. The new model is also better at rendering humans, the company said. Image 5 also enables layered and prompt-based editing -- the model treats different objects as layers and allows you to edit them using prompts, or use tools like resize and rotate. The company said it makes sure that when you edit these layers, the image's details and integrity are not compromised. Adobe's Firefly site has supported third-party models from AI labs like OpenAI, Google, Runway, Topaz, and Flux to augment its appeal to its creative customer base, and now the company is taking that a step further by letting users create custom models based on their art style. Currently in a closed beta, this feature lets users drag and drop assets, such as images, illustrations and sketches, to create a custom image model based on their style. The company is also adding some new features to its Firefly website, which was redesigned earlier this year. The site now lets you use the prompt box to switch between generating images or videos, choose which AI model you want to work with, change aspect ratios, and more. The site's home page now features your files and recent generation history, and you also get shortcuts to other Adobe apps (these were previously housed in a menu). Adobe has also redesigned the video generation and editing tool to support layers and timeline-based editing.
This design change is currently only available in a private beta, and will be rolled out to users eventually. Firefly is also getting two new audio features: Users can now employ AI prompts to generate entire soundtracks and speech -- using models from ElevenLabs -- for videos. There's also a new way to easily come up with prompts: just add keywords and sections by selecting words from a word cloud. As its competitors like Canva add AI to their platforms, Adobe is trying to cater to new-age creators who are increasingly using AI in their workflows. "We're thinking of the target audience for Firefly as what we call creators or next-generation creative professionals. I think there are these emergent creatives that are GenAI-oriented. They love to use GenAI in all their workloads," Alexandru Costin, the company's VP of generative AI, told TechCrunch over a call. He added that with Firefly, the company now has more freedom to add new features and play around with the interface as it doesn't have to adhere to the muscle memory of creative professionals who might be used to certain workflows in Adobe's existing Creative Cloud tools.

[2]

Adobe's new AI tool lets you fix your photos with simple text commands - try it today

The Prompt to Edit feature lets you edit images using natural language. OpenAI's launch of DALL-E 2 in 2022 ignited an AI text-to-image generator craze, with dozens of tools launching during the past few years. Yet, the use cases for these image generators remain fairly narrow, and they lack everyday, realistic applications for the average person. Adobe's latest image model wants to change that issue. At its annual Adobe Max creativity conference, the company unveiled its new Adobe Firefly Image Model 5, which the company describes as its most advanced image generation and editing model yet. Beyond creating high-quality images, the model can also help you edit your existing pictures using AI. After watching demos of the editing features, I think the model solves a significant issue for users. The new model can now generate images in native 4MP resolution, which have more context and have almost twice as many pixels as 1080p, resulting in finer details. Adobe boasts high-quality images with "photorealistic" results. Adobe says this attention to detail means the model can tackle challenging tasks, such as anatomically accurate portraits, natural movement, and multi-layered compositions. The real magic of this approach comes to life in the new photo-editing features. Sometimes you'll take a shot that has potential but needs a couple of tweaks to be perfect. Whether the tweaks are simple or complex, they often require you to become familiar with editing software and to click the correct buttons to make adjustments, leading to a more complicated and time-consuming process. With Adobe's new tool, you can just ask the AI to make edits, and the work is done.
As the name implies, the new Prompt to Edit tool, powered by Firefly Image Model 5, lets you use a conversational prompt to have an action performed on your photo. I got to see a live demo of the feature in action before the release and was pretty amazed. In the demo, the person uploaded an image of her dog sitting behind a fence. Then she asked the tool to remove the fence from the picture. Within seconds, the fence was removed from the photo of the dog, with AI filling in the spaces where the item previously stood to produce a realistic-looking image. Google launched a similar feature, called Edit with Ask Photos, with the launch of the Google Pixel 10 earlier this year, and I found that tool just as handy. Building on this feature is Layered Image Editing, which maintains the image's composition accuracy as you make adjustments to its elements. For example, in the sizzle video, numerous items are displayed on a surface, but the user can then select one, drag and drop it, resize it, and even tweak it with a prompt. This task is typically a very complex process in Photoshop that requires multiple tools, steps, and precision. The Prompt to Edit feature is now available to Firefly customers, supporting Firefly Image Model 5 and partner models from Black Forest Labs, Google, and OpenAI. Firefly Image Model 5 is available in beta today. Lastly, while shown at Adobe Max, the Layered Image Editing feature is still in development.

[3]

Adobe Max 2025: all the latest creative tools and AI announcements

Adobe has kicked off its annual Max design conference, where it'll be giving us a first glimpse at the latest updates coming to its Creative Cloud apps and Firefly AI models. The creative software giant is launching new generative AI tools that make digital voiceovers and custom soundtracks for videos, and adding AI assistants to Express and Photoshop for web that edit entire projects using descriptive prompts. And that's just the start, because Adobe is planning to eventually bring AI assistants to all of its design apps.

[4]

Adobe and Google team up to offer more AI models and YouTube integration

Adobe and Google have confirmed a deepening of their partnership in a move which will see more of the latter's AI models become available across different applications. Announced at Adobe Max 2025, the news means model families including Gemini, Veo and Imagen are now embedded across Adobe Firefly, Photoshop, Express, Premiere and GenStudio, giving creatives access to leading models directly within their Adobe workflows. Furthermore, enterprise customers can customize them with proprietary brand data using Vertex AI and Firefly Foundry to ensure they remain consistent with brand guidelines. In a sign of true interoperability and collaboration, Google and Adobe will work together on coordinated go-to-market activities to demonstrate how creatives are set to benefit. While the companies' ads may not be integral to the news, the fact that the two companies are working together to democratize access to AI is. For too long, vendor lock-in has proven costly for consumers and companies alike, who have been forced to navigate a complex environment of ecosystems. "Our partnership with Google Cloud combines Adobe's creative DNA with Google's AI models to usher in a new era of creative expression for creators and creative professionals," Adobe CEO Shantanu Narayen explained. Google Cloud CEO Thomas Kurian said that integrating Google's models into Adobe's "trusted creative ecosystem" gives "everyone... the AI tools and platforms they need to dramatically speed up content creation." The two companies also came together to streamline Shorts productions for YouTube via the Premiere mobile app. The 'Create for YouTube Shorts' feature enables content creators to jump on emerging and popular trends with quick editing tools, supported by AI-generated audio and visual effects, to post short-form, vertical videos to the social network. 
"Our goal at YouTube is to meet creators where they are and give everyone the tools they need to make storytelling easier and connect with their audiences," YouTube Engineering VP Scott Silver shared. A separate 'Create for YouTube Shorts' section will be coming to the Premiere mobile app "soon."

[5]

Adobe's End-Of-Year Updates Are All AI, and Sometimes Not Even Its Own AI

Adobe MAX kicks off this week and, historically, that has meant a large drop of updates across the company's portfolio of apps. That is technically true this year too, but everything is revolving around AI -- and sometimes, that doesn't even mean Adobe's own technology. Adobe already opened the door to using AI models from competitors earlier this year, and that continues as now Generative Fill in Photoshop can swap from Adobe's Firefly model to using Google Gemini 2.5 Flash Image or Black Forest Labs FLUX.1 Kontext. Further, Generative Upscale can swap over to using Topaz Labs' AI upscale technology, too. Adobe is also bringing the beta Harmonize feature into full production, a tool that promises to blend and match light, color, and tone across disparate images to make them appear as though they were taken at the same time. "We're delivering several groundbreaking AI tools and models into creative professionals' go-to apps, so they can harness the tremendous economic and creative opportunities presented by the rising global demand for creative content," Deepa Subramaniam, vice president of product marketing, creative professionals, at Adobe, says. "With AI that gives creative professionals more power, precision, and control -- and time-savings -- Creative Cloud is truly the creative professional's best friend." While the bulk of updates to Adobe's creative apps appear to be centered around using other companies' AI models, Adobe is also updating Firefly to Image 5 (beta), which it calls its "most advanced image generation and editing model yet." Adobe says it can generate images in native 4 megapixel resolution without upscaling and also "excels" at creating photo-realistic details, such as lighting and fine-detail texture. Adobe's other first-party updates are all in beta. 
The company is testing an AI Object Mask in Premiere Pro, which it says can automatically identify and isolate people and objects in video frames so that they can be edited and tracked without manual rotoscoping. The video editor is also getting rectangle, ellipse, and pen masking tools (also in beta), which Adobe says can be used to isolate specific areas in a video frame so that they can be adjusted more directly. Premiere Pro is also getting a beta Vector Mask tool, which is a redesigned option that promises faster tracking. Lightroom is getting a lone beta update in Assisted Culling, which Adobe describes as a customizable tool that helps quickly identify the best images in large photo collections, with the ability to filter for different levels of focus, angles, and sharpness. The production features of Generative Fill and Generative Upscale with partner models, as well as Harmonize, are all available today. The other beta features launch into the public beta versions of Adobe's apps today, too. Adobe is also giving Creative Cloud Pro and Firefly plan subscribers unlimited image generations with Firefly and partner models (including video generations) through December 1.

[6]

AppleInsider.com

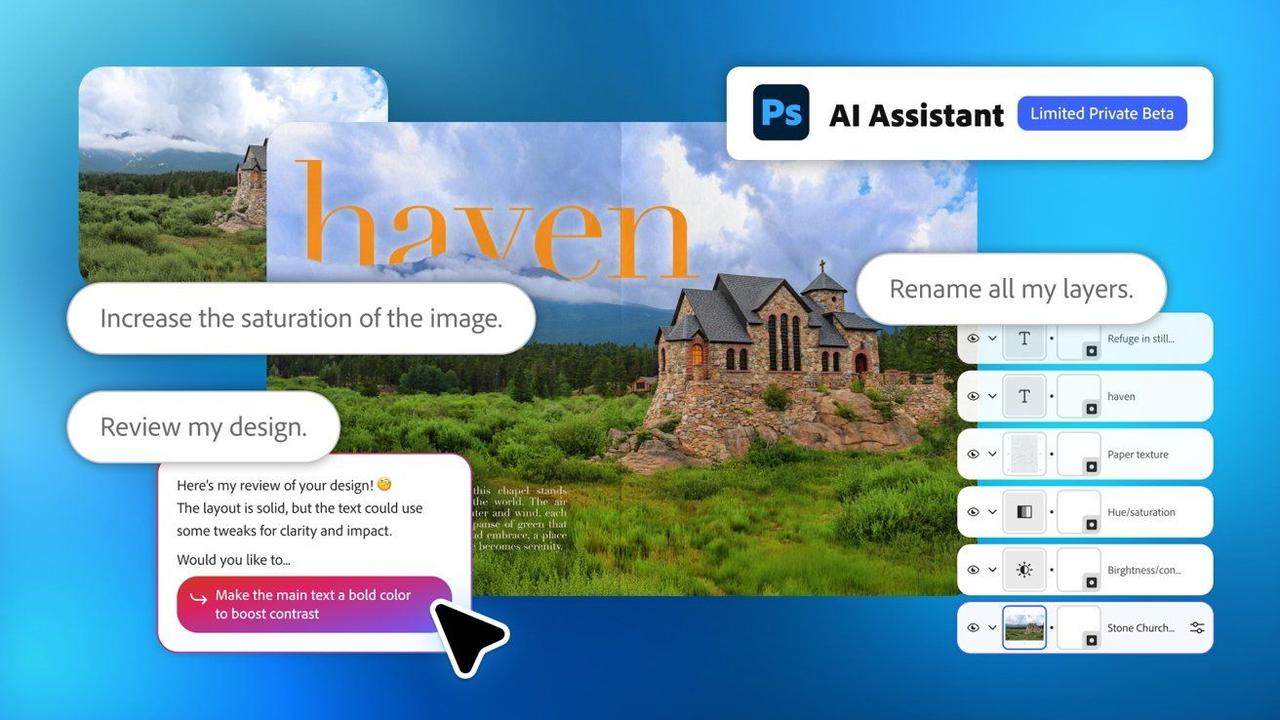

Adobe is expanding its suite of generative AI features across Firefly, Creative Cloud, and enterprise tools, with new updates aimed at faster workflows and integrated content creation. Adobe is sharing details about its latest AI tools and updates at Adobe MAX 2025. The company highlighted new Firefly features, Creative Cloud improvements, and enterprise-focused AI innovations during the conference. Adobe says the updates will make it easier for creators to produce and manage content across its Creative Cloud ecosystem. Many of these features are in either public or private beta, and are now powered by Firefly and partner model integrations.

Firefly for end-to-end production

Firefly now supports full video and audio production workflows. Generate Soundtrack, in public beta, uses Adobe's Firefly Audio Model to create original, licensed instrumental tracks that automatically sync with your video footage. Generate Speech, currently in public beta, transforms text into realistic voiceovers in a variety of languages. It also creates emphasis and controls tempo for life-like delivery. Users can cut and arrange clips in a multitrack timeline using Firefly's new web-based video editor, which is currently in private beta. The editor has tools for adding titles, music, and voiceovers in the browser. With the help of Firefly Image Model 5, Prompt to Edit enables creators to use natural language to explain how they wish to alter images. This feature allows for fine-grained image adjustments. Firefly Boards adds collaborative ideation tools that turn brainstorming sessions into visual layouts. A new Rotate Object feature converts 2D images into 3D perspectives for faster concept development.

AI updates to Creative Cloud apps

To make editing and post-production more efficient, Adobe is integrating new AI features into Photoshop, Lightroom, and Premiere Pro. According to the company, the updates give professionals more speed, accuracy, and creative control. New partner models, such as Black Forest Labs FLUX.1 Kontext and Google Gemini 2.5 Flash, are now supported by Generative Fill in Photoshop. These integrations maintain visual coherence and lighting while enabling more accurate edits through text prompts. Premiere Pro's new AI Object Mask, in public beta, can automatically identify and isolate people or objects in a scene. It speeds up color grading, blurring, and applying effects to moving backgrounds. Lightroom gains Assisted Culling, also in public beta, to help photographers quickly sort large image collections. The feature identifies the sharpest, best-composed shots and filters by angle, focus, and sharpness.

YouTube partnership

YouTube and Adobe have partnered to provide Premiere Pro tools to content producers of short videos. The Premiere mobile app will soon introduce Create for YouTube Shorts, a new content creation area. The integration will give users access to Adobe templates, transitions, and visual effects optimized for YouTube's short-form video format. The feature will be accessible directly from YouTube once it launches.

Enterprise tools and model access

Adobe's GenStudio platform adds new tools for enterprise-scale AI content creation. Firefly Foundry enables businesses to train private AI models using proprietary data for consistent, brand-safe outputs. The company is also expanding access to AI models from partners including Google, OpenAI, Runway, ElevenLabs, and Topaz Labs. Firefly Custom Models, now in private beta, let users generate visuals in their own style for consistent branding.

Conversational AI experiences

For conversational creation, Adobe is rolling out agentic AI assistants throughout its ecosystem. Using natural language prompts, the assistants help users finish challenging tasks while retaining complete creative control. With Adobe Express's AI Assistant, which is currently in public beta, users can go from idea to completed project in a matter of minutes. The private beta version of Photoshop for the web's AI Assistant automates tedious editing tasks while maintaining human oversight. Project Moonlight, a private beta in Firefly, uses social insights to generate new ideas and manages AI assistants across Adobe apps. Additionally, Adobe previewed early integrations for tools like Adobe Express with third-party platforms, such as ChatGPT.

[7]

Adobe MAX 2025: The Creative Suite gets a major AI boost across products

Here is a rundown of all the new stuff happening in Adobe products. Adobe MAX, often described as the "Comic-Con for creatives," kicked off this week in Los Angeles, bringing with it a flood of new AI updates across Photoshop, Premiere Pro, Illustrator, and Lightroom. This year's theme was clear: precision, speed, and control for creative professionals who are increasingly relying on AI to keep up with the rising demand for content. Adobe isn't introducing AI for the first time -- Firefly, the company's generative engine, has been at the center of its push toward intelligent tools for over a year now. But what stood out this time was how much deeper AI has been woven into Creative Cloud -- from single-click masking in video to natural blending in image composites, and even a conversational AI assistant inside Photoshop and Express that you can literally "talk" to. One of the biggest highlights this year is Photoshop's new Generative Fill upgrade, now powered by multiple AI models including Google's Gemini 2.5 Flash Image, Black Forest Labs' FLUX.1 Kontext, and Adobe's own Firefly models. The update gives users more control over prompts, helping preserve lighting, tone, and perspective even in complex edits. Then there's Generative Upscale, which now integrates Topaz Labs' AI -- allowing creators to take low-resolution or cropped assets and upscale them to 4K without losing detail. It's a huge win for editors who work with legacy media or compressed assets. A new tool called Harmonize caught quite a bit of attention too -- it automatically matches light and color when you composite people or objects into new backgrounds. Essentially, it handles the heavy lifting of blending, leaving creators to fine-tune the art. On the video side, Premiere Pro is getting a much-needed AI makeover.
Features like AI Object Mask and Fast Vector Mask can now automatically detect and isolate moving subjects in a frame -- something that would've previously required tedious manual rotoscoping. In short, color grading and effects work just got a lot faster. Adobe's Firefly engine -- now a suite in its own right -- also got a major upgrade. The new Firefly Image Model 5, available in public beta, can now generate native 4MP images (without the need for post-upscaling) and handle intricate details like lighting, reflections, and anatomy with more realism. It also powers a new "Prompt to Edit" feature -- type what you want to change in an image, and it does the rest. For professionals or brands looking to maintain a consistent visual language, Adobe is also rolling out Firefly Custom Models. Think of it as training your own AI model, in your style. Just drag and drop reference images, and it learns from them -- privately and securely. This feature is currently in private beta, but it's shaping up to be one of the most powerful tools for creative teams who produce content at scale. Another interesting addition is Adobe's move into "agentic AI." Inside Photoshop (on the web), there's now an AI Assistant that behaves more like a creative partner. You can ask it to perform tasks ("adjust lighting," "make this look cinematic"), or even get real-time tutorials while you work. The assistant can be toggled off at any time if you'd rather tweak settings manually -- a nod to those who prefer control over automation. Adobe is also expanding its collaborative side with Firefly Boards -- a shared space where teams can ideate visually. The new update lets you rotate objects in 3D, bulk-download assets, and even export as PDFs -- all within the same board. And for those managing massive content pipelines, Firefly Creative Production allows batch editing of thousands of images at once -- from background replacements to color grading -- through a no-code interface. 
A number of these features -- like Generative Fill, Harmonize, and Generative Upscale -- are available in Photoshop today. Premiere's AI Object Mask and new masking tools are in public beta, as is Lightroom's Assisted Culling, which automatically helps pick your best shots from a batch. Firefly's new Image Model 5 and Prompt to Edit are open to all users, while Custom Models and Creative Production are rolling out in private beta next month. Through December 1, Creative Cloud Pro and Firefly plan subscribers can enjoy unlimited image and video generations across all models. This year's MAX made one thing clear: Adobe is betting on AI not as a gimmick, but as an extension of the creative process. The tools on display weren't about replacing professionals, but helping them move faster, maintain creative control, and scale their work.

[8]

Creators Can Now Edit YouTube Shorts Directly in Adobe Premiere Mobile

At the recent Adobe MAX 2025 event, Adobe announced a new partnership with YouTube to integrate Premiere Mobile editing directly into YouTube Shorts. This feature will allow creators to edit YouTube Shorts using professional-grade tools, effects, and AI features through the Adobe Premiere mobile app for free. You will soon see a new "Edit in Adobe Premiere" option inside the YouTube Shorts creation interface. Tapping the option will open Adobe Premiere in a special "Create for YouTube Shorts" mode. Here, you can create vertical videos with templates specifically made for Shorts. You can also choose pre-made transitions, text presets, and effects. Adobe is also offering its Firefly AI tool for quick AI video editing, background edits, and color correction. The best part is that with just one tap in Adobe Premiere, you can publish the video as a YouTube Short. The feature is coming to iPhones first. Basically, creators now have access to professional-level editing capabilities on mobile. While TikTok has the CapCut video editor and Instagram has Edits for Reels editing, YouTube now brings direct integration with Adobe Premiere on mobile. Apart from that, at the MAX 2025 event, Adobe announced several new AI features for its creative suite. Adobe Firefly is getting support for custom models, which can be trained using just 6-12 images. In addition, layered image editing is coming, and it will support higher-resolution output. Adobe is also adding the ability to generate soundtracks and speech inside Firefly. Users can also now use third-party AI models from Google, Flux, etc. for the Generative Fill feature in Adobe Photoshop. And on the web version of Photoshop, you can chat with a chatbot-style AI assistant to edit images conversationally.

[9]

Adobe and Google Cloud Forge Strategic Alliance to Redefine Creative AI

Adobe and Google Cloud announced an expanded strategic partnership to deliver the next generation of AI-powered creative technologies. The partnership brings together Adobe's decades of creative expertise with Google's advanced AI models -- including Gemini, Veo, and Imagen -- to usher in a new era of creative expression. Through the partnership, Adobe customers, including business professionals, creators, creative professionals and enterprises, will have access to Google's latest AI models, integrated directly into Adobe apps like Adobe Firefly, Photoshop, Adobe Express, Premiere and more. Enterprise customers will also be able to access the models through Adobe GenStudio, and in the future, leverage Adobe Firefly Foundry to customize and deploy brand-specific AI models that generate on-brand content at scale.

[10]

Adobe brings AI deeper into creativity with Firefly, Photoshop, and more at MAX 2025

At its annual MAX 2025 event in Los Angeles, Adobe pushed the boundaries of creative tech once again -- this time leaning harder into AI. The company announced a sweeping set of updates across Firefly, Creative Cloud, Express, and GenStudio, all built around a single idea: making AI an assistant, not a replacement, for creators. The highlight was Firefly Image Model 5, now in public beta, which promises sharper 4MP native resolution and more photorealistic results for prompt-based editing. Adobe also unveiled AI assistants across apps like Photoshop, Express, and Firefly, designed to let users describe what they want in plain language and refine it using familiar creative tools -- essentially bringing a conversational layer to the design process. What stood out this year was the openness. Adobe is integrating models from Google, OpenAI, Runway, ElevenLabs, and Topaz Labs, giving users the flexibility to choose how they create. For professionals, new features like Generative Upscale, AI Object Mask, and Assisted Culling make bulk editing, video cleanup, and image selection faster without losing creative control. On the enterprise side, GenStudio continues to evolve as a full content supply chain platform, now enhanced with generative tools and integrations with Amazon Ads, LinkedIn, and TikTok. Meanwhile, Firefly Foundry gives brands the option to train custom AI models in their own visual language -- a move that could redefine how companies scale on-brand creative output. Adobe's message this year was clear: AI isn't here to do the work for you, it's here to help you move faster, iterate smarter, and stay in control of your craft.

[11]

Adobe expands AI tools across creative platforms at MAX 2025 (ADBE:NASDAQ)

Adobe (NASDAQ:ADBE) on Tuesday announced a broad expansion of artificial intelligence features across its creative applications at Adobe MAX 2025, introducing new AI assistants and models aimed at transforming the creative process for professionals and enterprises. The Photoshop maker launched AI assistants across its apps. Adobe aims to enhance its creative applications with new AI tools, increasing differentiation for creative professionals and enterprises versus competitors. Enterprise users gain access to streamlined content supply chains via new integrations with major platforms and no-code bulk image editing capabilities. The CEO believes Adobe's stock is undervalued, justifying ongoing share buybacks to enhance shareholder value.

[12]

Adobe partners with YouTube and Google Cloud to expand AI and creative tools

At Adobe MAX 2025, Adobe announced two new collaborations with YouTube and Google Cloud to enhance creative workflows and expand access to AI-powered tools. The partnerships combine Adobe's creative ecosystem with YouTube's global creator platform and Google's advanced AI technologies to deliver new ways for creators and enterprises to produce, edit, and publish content. Adobe and YouTube introduced a new Create for YouTube Shorts space within the Adobe Premiere mobile app, enabling users to edit and share videos directly to YouTube Shorts. The integration is designed to simplify short-form video creation and provide creators with professional-grade tools in a mobile-first format. This feature allows creators to produce and share short-form videos easily using Adobe's video editing tools within the YouTube ecosystem. Adobe also announced an expanded strategic partnership with Google Cloud to integrate Google's latest AI models -- Gemini, Veo, and Imagen -- into Adobe's creative tools. The collaboration aims to provide creators and enterprises with advanced AI capabilities for image, video, and design generation.

[13]

Google just became Adobe's secret weapon

This could be the ultimate partnership for content creators. While a mountain of AI features form the majority of the announcements from Adobe Max in Los Angeles this week, the company also revealed a series of partnerships, with many third-party generative models now integrated into Creative Cloud. But Adobe told Creative Bloq there's one partner it's particularly excited about: Google. Not only has Adobe integrated Google's own AI models into its tools, including Gemini, Veo, and Imagen, but it's also partnered with the Google-owned YouTube, letting users create for YouTube Shorts directly in the Premiere mobile app. "[Google and Adobe's] partnership brings together Adobe's decades of creative expertise with Google's advanced AI models -- including Gemini, Veo, and Imagen -- to usher in a new era of creative expression," Adobe announced this week. When I asked Rajan Vashisht, head of machine learning engineering at Adobe, which new partnership he was most excited about, he immediately named Google, explaining how the ability to use Adobe's Firefly Model 5 alongside Google's Nano Banana offers much more choice to users. YouTube's Scott Silver joined Adobe's Ely Greenfield on stage at MAX to share news of their Premiere mobile app partnership. "Our goal at YouTube is to meet creators where they are and give everyone the tools they need to make storytelling easier and connect with their audiences." It's easy to see why the latter partnership could be a huge win for Adobe. Baked-in features could turn Premiere mobile into a bona fide 'YouTube Shorts app'. At a time when short-form content is, for better or worse, everywhere, becoming synonymous with creating it sounds like the ultimate goal for a mobile video editing app.

[14]

Adobe is being smarter about AI video than I ever expected

I'll admit it: I was prepared to roll my eyes at Adobe's video announcements this week. Another AI feature that saves you "hours of time"? Another tool that "unleashes creativity"? We've heard it all before, usually followed by a bunch of workflow changes nobody asked for. But Adobe Max 2025's video announcements have genuinely surprised me, and not because they're flashy. They're surprising because they're sensible. The headline news is Adobe Premiere mobile integrating directly with YouTube Shorts. You'll be able to tap Edit in Adobe Premiere right inside YouTube, access proper editing tools, and publish without bouncing between apps. This matters because Adobe is finally acknowledging reality: most people making videos today aren't cutting feature films or corporate promos. They're making Shorts, Reels and TikToks, often as their entire income stream. Once, Adobe treated social video like a slightly embarrassing side hustle. Now, that's all changed. The partnership gives YouTube creators access to Premiere's tools, including effects, transitions and templates designed specifically for Shorts, without the friction of exporting and uploading separately. In other words, it's meeting creators where they already are, rather than demanding they come to Adobe's world. Another highlight is AI Object Mask in Premiere Pro, which identifies and isolates people or objects for colour grading and effects. Absolutely no one is going to miss masking objects frame-by-frame. Great stuff. There's also a new web-based video editor coming, which positions Firefly as an end-to-end video platform. Whether anyone wants yet another video editor is debatable, but let's reserve judgement until it arrives.
What strikes me most about these announcements is that Adobe seems to have learned something from watching creators flee to CapCut, Descript and countless other tools that don't require a mortgage to license. The Premiere mobile app is free. The YouTube integration is removing friction rather than adding it. The AI features target genuine pain points rather than "innovation" for its own sake. Of course, there's still plenty to be cynical about. Adobe hasn't mentioned pricing for the AI features beyond noting they require "paid subscriptions". The company's track record of adding features then upselling them is well-established. There's also the question of whether this makes YouTube more dependent on Adobe, or Adobe more dependent on YouTube. If the partnership ends, do creators lose access to their templates and workflows? But I'm choosing cautious optimism here, because Adobe appears to be making decisions based on what creators actually do, rather than what Adobe wants them to do. In the past, that's been rarer than it should have been. The real test, of course, will be whether these tools actually ship and work as advertised. Adobe has a habit of announcing features in private beta that take years to reach the public. But for once, they seem to be pointing in the right direction; even if I never expected to say that about Adobe and video.

[15]

Adobe and Google Cloud partner to integrate AI models into creative apps By Investing.com

LOS ANGELES - Adobe (NASDAQ:ADBE), a prominent software industry player with an impressive 89% gross profit margin and market capitalization of $152 billion, and Google Cloud announced an expanded strategic partnership to integrate Google's advanced AI models into Adobe's creative applications, according to a press release issued at the Adobe MAX conference. According to InvestingPro analysis, Adobe currently trades below its Fair Value, suggesting potential upside for investors. Under the partnership, Adobe customers will gain access to Google's AI models including Gemini, Veo, and Imagen directly within Adobe applications such as Firefly, Photoshop, Express, and Premiere. The integration aims to help users generate higher-quality images and videos with greater precision. Enterprise customers will also be able to customize Google's AI models through Adobe Firefly Foundry using their proprietary data to create brand-specific content at scale. Google Cloud's Vertex AI platform will support this customization while providing data commitments that customer information will not be used to train Google's foundation models. "Our partnership with Google Cloud brings together Adobe's creative DNA and Google's AI models to empower creators and brands to push the boundaries of what's possible," said Shantanu Narayen, chair and chief executive officer of Adobe, in the press release. Thomas Kurian, chief executive officer of Google Cloud, added that the integration gives "everyone, from creators and creative professionals to large global brands, the AI tools and platforms they need to dramatically speed up content creation." The announcement follows Adobe's recent partnership with YouTube, which will bring Premiere's video editing tools to YouTube Shorts through a new creation space called Create for YouTube Shorts, coming soon to the Premiere mobile app. 
The companies did not disclose financial terms of the partnership or specific launch dates for the integrated features. In other recent news, Adobe announced several AI-powered enhancements to its GenStudio platform, aimed at improving personalized content creation for businesses across various marketing channels. These additions, unveiled during Adobe MAX, include Firefly Design Intelligence and Firefly Creative Production for Enterprise. Additionally, Adobe has partnered with YouTube to integrate its Premiere mobile app with YouTube Shorts, allowing creators to access professional-level video editing tools directly within the platform. This new feature, "Create for YouTube Shorts," offers editing capabilities such as effects, transitions, and templates. Furthermore, Adobe introduced an AI Assistant in beta for Adobe Express, providing users with a conversational design experience to simplify content creation. The company also launched its Premiere video editing application for iPhone, offering mobile creators free access to professional editing tools, including multi-track timeline editing and 4K HDR support. In a separate development, Morgan Stanley downgraded Adobe's stock from Overweight to Equalweight, citing slower monetization of AI features as a concern. The downgrade also included a revised price target of $450, down from $520, reflecting the firm's view on Adobe's Digital Media annual recurring revenue growth. This article was generated with the support of AI and reviewed by an editor. For more information see our T&C.

[16]

Adobe's personalised AI generators could change creative software forever

Firefly Foundry will let businesses create branded images, video, vectors and 3D models. Adobe has been rolling out masses of AI-powered features in programs like Photoshop and Premiere Pro over the past couple of years, but the news at the Adobe MAX 2025 conference in LA today goes beyond just more of the same. The software giant is moving beyond the one-size-fits-all approach to generative AI and putting personalisation at the heart of its approach. It's launching the option of custom AI models for both brands and individual creators, and it's adding agentic AI assistants that can read users' social media and propose ideas for things to post. Put together, the changes are geared towards creating a much more versatile and tailored ecosystem of creative software. For enterprise users, Adobe has announced the launch of Adobe Firefly Foundry. The company will work directly with businesses to create tailored generative AI models unique to their brand. Trained on entire catalogs of existing IPs, these "deeply tuned" proprietary Adobe Firefly Foundry models will be built on top of existing Firefly models and will be able to generate image, video, audio, vector and even 3D content based on brands' own material. Firefly Foundry is intended to address the challenges in achieving on-brand consistency across materials generated by AI. Adobe says it will allow brands to scale on-brand content production, create new customer experiences and extend their IP. While other AI companies provide customisable base models, Adobe has a possible advantage in that it believes its models are commercially safe because they were trained on licensed material. Adobe's also rolling out customisable models for creators. These are simpler, working directly in the Firefly app and Firefly Boards. The company says creators can "easily personalise their own AI models to generate entire series of assets with visual consistency in their own, unique style". 
There's a waitlist for early access to the private beta. Also at Adobe MAX LA, Adobe showcased new conversational AI assistants that will connect to users' social media accounts and give them ideas for things to post (among other things). Dubbed Project Moonlight, the new assistants will be powered by agentic AI to provide a conversational interface that connects across Adobe apps to help users make whatever assets they need. The idea is that users describe in their own words what they want to accomplish, or how they want something to look and feel, and the AI assistants will help them achieve that. Creators will be able to ask their AI assistant for personalised advice and suggestions. It seems that the models will also draw insights from creators' social channels, picking up on content that has done well, with the aim of helping users to brainstorm ideas and create new content faster. Adobe's also expanding its new strategy of adding third-party AI models to its software, including models from Black Forest Labs, Google, Luma AI, OpenAI and Runway, which will be integrated directly into the Adobe platform as they're released. Today, Adobe announced the addition of new partners ElevenLabs and Topaz Labs along with more models from existing partners. Generative Upscale in Photoshop now uses Topaz Labs' technology to upscale low-resolution images to 4K. Other new AI tools include AI Object Mask in Premiere (public beta) to help identify and isolate people and objects in video frames, and Assisted Culling in Lightroom (public beta) to help photographers quickly identify the best images in large collections of photos. There's also a new proprietary AI model in Firefly: Image Model 5 (public beta). It can generate images in native 4MP resolution without upscaling, and provides improved realism in lighting and texture. 
The new model also adds much more powerful image editing capabilities, with a new Prompt to Edit tool that lets users describe how they want to edit an image. Adobe says Layered Image Editing is in development for precise, context-aware compositing that keeps changes coherent.

[17]

Adobe to Integrate Google AI Models in Apps

Adobe is expanding its partnership with Google Cloud to offer Google's AI models in its apps. The latest models from Alphabet unit Google will be integrated directly into Adobe Firefly, Photoshop, Adobe Express, Premiere and GenStudio, the San Jose, Calif., software company said Tuesday. Adobe's enterprise customers will also be able to customize their own AI models that are tailored to customers' brands. Earlier Tuesday, Adobe also announced that it would collaborate with YouTube to offer new features to help customers edit and create videos. Adobe has been investing in AI with a goal of pivoting more toward helping customers generate content, rather than just editing it. Shares are down 19% this year, as investors are getting anxious about seeing the company monetize its AI efforts. The company made some progress in its fiscal third quarter, as AI-first annual recurring revenue surpassed $250 million, the original target the company had aimed to hit by the end of this year. Write to Katherine Hamilton at [email protected]

[18]

At MAX 2025, Adobe CEO Shantanu Narayen says creativity is entering a new era with Firefly at the center

Adobe now supports AI models from Google, OpenAI, Runway, and others. At MAX 2025, happening in Los Angeles, Adobe CEO Shantanu Narayen said "creativity is entering a new era" as the company unveiled its most expansive set of AI-powered tools yet. These upgrades are led by major improvements in Firefly, Adobe's generative AI platform. But a bunch of new AI-powered features have also been announced across Adobe platforms, including Premiere Pro, Photoshop, Express and more. These new capabilities show why MAX is the world's biggest conference for creativity. "We are investing in creativity to ensure that creators move faster and create more," Narayen said. "With Firefly, you have more options now, and our vision is to make it a one-stop solution from ideation to creation." Firefly now serves as an all-in-one creative hub, integrating AI tools for audio, video, and design alongside new Firefly Boards, which is a collaborative ideation space for creators and professionals. Each Firefly model will have its own "character," Narayen noted, allowing users to work across Adobe apps without switching contexts. "Our new generative AI features will allow creators to have pixel-level perfection," he said, highlighting Firefly's ability to produce end-to-end video, compose soundtracks and voiceovers, and edit thousands of images in bulk through Firefly Creative Production. Adobe also infused Creative Cloud apps with new AI-powered capabilities such as Generative Fill and Generative Upscale in Photoshop, and AI Object Mask in Premiere. These tools, Narayen said, are designed to enhance and not replace human creativity. "It's the creators who bring the creativity which cannot be replaced," he added. Alongside its proprietary Firefly models, Adobe now supports AI models from Google, OpenAI, Runway, and others, giving creators flexibility to choose the best fit for their projects. 
The new Firefly Custom Models let creators personalise AI generation in their own artistic style. Adobe's AI assistants, powered by conversational and agentic AI, are being integrated directly into Firefly, Photoshop and Adobe Express to help anyone turn ideas into content through simple natural language prompts. "Creativity has always been at the core of Adobe's mission," Narayen said. "With Firefly, we're empowering every creator to bring their imagination to life faster, smarter, and with greater freedom than ever before."

Adobe launches its most advanced image generation model, Firefly Image 5, featuring native 4MP resolution, layered editing, and custom model creation capabilities. The company also deepens partnerships with Google and other AI providers to offer creators access to multiple AI models within Adobe's ecosystem.

Adobe Launches Most Advanced Image Generation Model

Adobe unveiled Firefly Image 5 at its annual Max creativity conference, marking a significant leap in AI-powered image generation and editing capabilities [1]. The new model represents Adobe's most advanced image generation technology yet, featuring native 4-megapixel resolution generation, a substantial improvement over the previous generation's 1-megapixel native resolution, which required upscaling [2].

The enhanced model excels at creating photorealistic details, including anatomically accurate portraits, natural movement, and multi-layered compositions. Adobe emphasizes that the higher resolution provides more context and nearly twice as many pixels as 1080p, resulting in significantly finer details and improved image quality [2].

Revolutionary Layered Editing and Prompt-Based Controls

Firefly Image 5 introduces layered and prompt-based editing capabilities that treat different objects as separate layers, allowing users to edit them using natural language prompts or traditional tools like resize and rotate [1]. Adobe says that editing these layers does not compromise the image's details and integrity.

The new "Prompt to Edit" feature enables users to make complex photo adjustments using simple conversational commands. In demonstrations, users successfully removed objects like fences from photos with AI automatically filling in the spaces to produce realistic-looking results [2]. This functionality addresses a significant pain point for users who previously needed extensive software knowledge to perform similar edits.

Custom Model Creation for Personalized AI

One of the most notable additions is the ability for artists to create custom image models using their existing artwork. Currently in closed beta, this feature allows users to drag and drop assets such as images, illustrations, and sketches to develop personalized AI models that reflect their unique artistic style [1]. This development represents a significant step toward democratizing AI model creation for individual creators.

Strategic Partnerships and Multi-Platform Integration

Adobe has significantly expanded its AI ecosystem through deeper partnerships with major technology companies. The collaboration with Google brings Gemini, Veo, and Imagen model families directly into Adobe Firefly, Photoshop, Express, Premiere, and GenStudio [4]. Enterprise customers can customize these models with proprietary brand data using Vertex AI and Firefly Foundry to maintain brand consistency.

Adobe's Creative Cloud apps now support AI models from multiple providers, including Google Gemini 2.5 Flash Image, Black Forest Labs FLUX.1 Kontext, and Topaz Labs' AI upscale technology [5]. This multi-vendor approach represents a shift away from traditional vendor lock-in practices, giving creators more flexibility in their AI tool selection.

Enhanced Creative Workflow Features

The updated Firefly website features a redesigned interface that allows users to switch between generating images or videos through a single prompt box, choose specific AI models, and adjust aspect ratios seamlessly [1]. The homepage now displays user files and recent generation history, with direct shortcuts to other Adobe applications.

Adobe has also introduced new audio capabilities, enabling AI-generated soundtracks and speech using models from ElevenLabs. A new prompt generation system allows users to create prompts by selecting words from a word cloud, simplifying the creative process [1].

YouTube Integration and Content Creation Tools

The partnership with Google extends to content creation with the introduction of the "Create for YouTube Shorts" feature in the Premiere mobile app. This tool enables content creators to quickly produce short-form vertical videos with AI-generated audio and visual effects, allowing them to capitalize on emerging trends more efficiently [4].

Summarized by Navi
