AI and Brain Activity Reveal Insights into Other-Race Face Recognition

2 Sources

[1]

Neural Processing Gaps Explain Other-Race Face Blindness - Neuroscience News

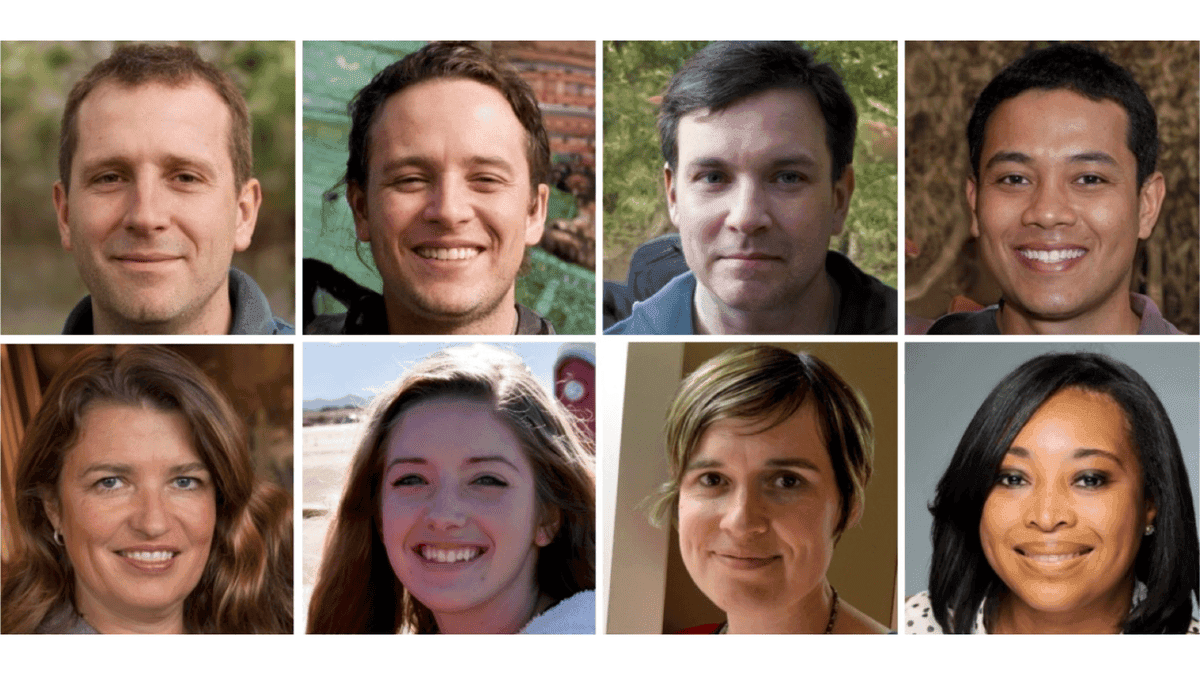

Summary: Researchers have combined artificial intelligence and EEG brain activity data to better understand the Other-Race Effect (ORE), in which people recognize faces of their own race more accurately than faces of other races. The studies revealed that participants processed other-race faces with less neural detail, perceiving them as more average, younger, and more expressive. This lack of distinct processing contributes to recognition difficulties and may reinforce implicit biases. The findings have real-world implications, from improving facial recognition software and eyewitness testimony to addressing social bias and advancing mental health diagnostics.

U of T Scarborough researchers have harnessed artificial intelligence (AI) and brain activity to shed new light on why we struggle to accurately recognize faces of people from different races.

Across a pair of studies, the researchers explored the Other-Race Effect (ORE), a well-known phenomenon in which people recognize faces of their own race more easily than those of other races. They combined AI with brain activity recorded through EEG (electroencephalography) to reveal new insights into how we perceive other-race faces, including visual distortions more deeply ingrained in our brains than previously thought.

"What we found was striking -- people are so much better at seeing the facial details of people from their own race," says Adrian Nestor, associate professor in the Department of Psychology and co-author of the studies. "This is important because we should want to know why we have trouble recognizing faces from other races, and what influence that might have on behaviour."

In one study, published earlier this year in the journal Behavior Research Methods, the researchers used generative AI to examine individual responses to images of faces. Two groups of participants (one East Asian, one white) were shown a series of faces on a computer screen and asked to rate them based on similarity.
The researchers generated visual representations of faces using a generative adversarial network (GAN), a type of AI that can be trained to create lifelike images. Using the GAN's image-generating ability, they were able to see the mental images the participants had of faces. They discovered that faces from the same race were reconstructed more accurately than those from other races, and that people tend to see faces of other races as more average-looking. One surprising finding was that other-race faces also appeared younger when reconstructed.

What's happening in the brain

A second study, recently published in the journal Frontiers in Human Neuroscience, looked more closely at the brain activity that might explain ORE. Brain activity recorded in the first 600 milliseconds after participants saw the images was used to digitally reconstruct how they visually process faces in their minds.

If it sounds like mind-reading, it kind of is. Nestor's lab first showed the potential of harnessing EEG for visual perception back in 2018. Since then, their algorithms have improved significantly.

Using EEG data, the researchers found that the brain processes same-race and other-race faces in distinct ways. Neural recordings associated with visual perception showed less differentiation for other-race faces.

"When it comes to other-race faces, the brain responses were less distinct, indicating that these faces are processed more generally and with less detail," says Moaz Shoura, a PhD student in Nestor's lab and co-author of the studies. "This suggests that our brains tend to group other-race faces together, leading to less accurate recognition and reinforcing ORE."

One of the most intriguing findings from this study was that other-race faces appeared not just more average-looking, but also younger and more expressive in the minds of the participants, even when they weren't.
"This could explain why people often have difficulty recognizing faces from other races. The brain isn't processing facial appearance as distinctly and accurately," says Nestor.

Potential real-world applications

The research, which received funding from a Natural Sciences and Engineering Research Council of Canada (NSERC) grant, could have far-reaching implications. Nestor says it could open up possibilities for understanding how bias forms in the brain. It could also be used to improve facial recognition software, gather more accurate eyewitness testimony, or even serve as a diagnostic tool for mental health disorders such as schizophrenia or borderline personality disorder.

"It's important to know exactly how people experience distortions in their emotional perception," says Nestor. For example, he says that seeing exactly what's going on in the mind of a person who has trouble perceiving disgust, or who misinterprets positive emotions as negative ones, can help with diagnosing mental health disorders and developing treatments.

Shoura adds that further exploring the effect of perceptual bias might help in a range of social situations, from job interviews to combating racial bias. "If we can better understand how the brain processes faces, we can develop strategies to reduce the impact bias can have when we first meet face-to-face with someone from another race."

Unraveling other-race face perception with GAN-based image reconstruction

The other-race effect (ORE) is the disadvantage in recognizing faces of a race other than one's own. While its prevalence is well documented behaviorally, the representational basis of ORE remains unclear. This study employs StyleGAN2, a deep learning technique for generating photorealistic images, to uncover face representations and to investigate ORE's representational basis.
To this end, we collected pairwise visual similarity ratings of same- and other-race faces from East Asian and White participants exhibiting robust levels of ORE. Leveraging the significant overlap in representational similarity between the GAN's latent space and perceptual representations in human participants, we designed an image reconstruction approach aiming to reveal internal face representations from behavioral similarity data. This methodology yielded hyper-realistic depictions of face percepts, with reconstruction accuracy well above chance, as well as an accuracy advantage for same-race over other-race reconstructions, which mirrored ORE in both populations. Further, a comparison of reconstructions across participant race revealed a novel age bias, with other-race face reconstructions appearing younger than their same-race counterparts. Thus, our work proposes a new approach to exploiting GANs for image reconstruction and provides new avenues in the study of ORE.
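The abstract does not spell out the reconstruction procedure. The sketch below shows one common way to turn pairwise similarity ratings into a GAN latent code, under explicit assumptions:

1. Embed all faces in a low-dimensional perceptual space with classical multidimensional scaling (MDS).
2. Learn a linear (ridge) mapping from those perceptual coordinates to the GAN latent vectors of the other faces.
3. Apply the mapping to a held-out face to predict its latent code.

MDS, ridge regression, the 512-dimensional latent size (the size of StyleGAN2's latent vectors) and all function names are illustrative assumptions, not the authors' published pipeline. The data are synthetic.

```python
import numpy as np


def classical_mds(dissim: np.ndarray, k: int) -> np.ndarray:
    """Embed n items in k dimensions from an n x n dissimilarity matrix (classical MDS)."""
    n = dissim.shape[0]
    centering = np.eye(n) - np.ones((n, n)) / n
    gram = -0.5 * centering @ (dissim ** 2) @ centering  # double-centered squared distances
    evals, evecs = np.linalg.eigh(gram)                  # eigh returns ascending eigenvalues
    top = np.argsort(evals)[::-1][:k]                    # keep the k largest
    return evecs[:, top] * np.sqrt(np.clip(evals[top], 0.0, None))


def fit_ridge(x: np.ndarray, y: np.ndarray, alpha: float = 1.0):
    """Ridge regression with an intercept, handled by centering x and y."""
    x_mean, y_mean = x.mean(axis=0), y.mean(axis=0)
    xc, yc = x - x_mean, y - y_mean
    weights = np.linalg.solve(xc.T @ xc + alpha * np.eye(x.shape[1]), xc.T @ yc)
    return weights, x_mean, y_mean


def reconstruct_latent(dissim, latents, target, k=5, alpha=1.0):
    """Hypothetical helper: predict the latent code of face `target` from behavioral
    dissimilarities, training the perceptual-to-latent mapping on the other faces only."""
    coords = classical_mds(dissim, k)
    train = np.arange(len(latents)) != target
    weights, x_mean, y_mean = fit_ridge(coords[train], latents[train], alpha)
    return (coords[target] - x_mean) @ weights + y_mean


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_faces, k, latent_dim = 30, 5, 512
    # Synthetic assumption: latents and percepts share k underlying face dimensions.
    factors = rng.normal(size=(n_faces, k))
    latents = factors @ rng.normal(size=(k, latent_dim)) + 0.1 * rng.normal(size=(n_faces, latent_dim))
    percepts = factors + 0.05 * rng.normal(size=(n_faces, k))
    dissim = np.linalg.norm(percepts[:, None] - percepts[None, :], axis=-1)
    recon = reconstruct_latent(dissim, latents, target=0, k=k)
    print("correlation with true latent:", np.corrcoef(recon, latents[0])[0, 1])
```

In a real pipeline, the predicted latent would be passed to a pretrained StyleGAN2 generator to render an image. That step depends on the specific model release and is omitted here.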

[2]

AI and brain activity reveal how we perceive faces from other racial groups differently

University of Toronto Scarborough researchers have harnessed artificial intelligence (AI) and brain activity to shed new light on why we struggle to accurately recognize faces of people from different races.

Across a pair of studies, the researchers explored the Other-Race Effect (ORE), a well-known phenomenon in which people recognize faces of their own race more easily than those of other races. They combined AI with brain activity recorded through EEG (electroencephalography) to reveal new insights into how we perceive other-race faces, including visual distortions more deeply ingrained in our brains than previously thought.

"What we found was striking -- people are so much better at seeing the facial details of people from their own race," says Adrian Nestor, associate professor in the Department of Psychology and co-author of the studies. "This is important because we should want to know why we have trouble recognizing faces from other races, and what influence that might have on behavior."

In one study, published earlier this year in the journal Behavior Research Methods, the researchers used generative AI to examine individual responses to images of faces. Two groups of participants (one East Asian, one white) were shown a series of faces on a computer screen and asked to rate them based on similarity.

The researchers generated visual representations of faces using a generative adversarial network (GAN), a type of AI that can be trained to create lifelike images. Using the GAN's image-generating ability, they were able to see the mental images the participants had of faces. They discovered that faces from the same race were reconstructed more accurately than those from other races, and that people tend to see faces of other races as more average-looking. One surprising finding was that other-race faces also appeared younger when reconstructed.
A second study, recently published in the journal Frontiers in Human Neuroscience, looked more closely at the brain activity that might explain ORE. Brain activity recorded in the first 600 milliseconds after participants saw the images was used to digitally reconstruct how they visually process faces in their minds.

If it sounds like mind-reading, it kind of is. Nestor's lab first showed the potential of harnessing EEG for visual perception back in 2018. Since then, their algorithms have improved significantly.

Using EEG data, the researchers found that the brain processes same-race and other-race faces in distinct ways. Neural recordings associated with visual perception showed less differentiation for other-race faces.

"When it comes to other-race faces, the brain responses were less distinct, indicating that these faces are processed more generally and with less detail," says Moaz Shoura, a Ph.D. student in Nestor's lab and co-author of the studies. "This suggests that our brains tend to group other-race faces together, leading to less accurate recognition and reinforcing ORE."

One of the most intriguing findings from this study was that other-race faces appeared not just more average-looking, but also younger and more expressive in the minds of the participants, even when they weren't. "This could explain why people often have difficulty recognizing faces from other races. The brain isn't processing facial appearance as distinctly and accurately," says Nestor.

Potential real-world applications

The research could have far-reaching implications. Nestor says it could open up possibilities for understanding how bias forms in the brain. It could also be used to improve facial recognition software, gather more accurate eyewitness testimony, or even serve as a diagnostic tool for mental health disorders such as schizophrenia or borderline personality disorder.
"It's important to know exactly how people experience distortions in their emotional perception," says Nestor. For example, he says that seeing exactly what's going on in the mind of a person who has trouble perceiving disgust, or who misinterprets positive emotions as negative ones, can help with diagnosing mental health disorders and developing treatments.

Shoura adds that further exploring the effect of perceptual bias might help in a range of social situations, from job interviews to combating racial bias. "If we can better understand how the brain processes faces, we can develop strategies to reduce the impact bias can have when we first meet face-to-face with someone from another race."

Researchers at the University of Toronto Scarborough have combined AI and EEG data to explore the Other-Race Effect, revealing how our brains process faces from different racial groups differently.

AI and EEG Combine to Unravel the Other-Race Effect

Researchers at the University of Toronto Scarborough have made significant strides in understanding the Other-Race Effect (ORE), a phenomenon in which people recognize faces of their own race more easily than those of other races. By combining artificial intelligence (AI) and electroencephalography (EEG) data, the team has uncovered new insights into how our brains process faces from different racial groups [1][2].

Innovative Methodology

The research team, led by Associate Professor Adrian Nestor, employed a novel approach using generative AI and brain activity analysis. In one study, they used a generative adversarial network (GAN) to visualize participants' mental representations of faces. Another study used EEG data to reconstruct how participants visually process faces in their minds [1].

Key Findings

The studies revealed several striking results:

- Same-race faces were reconstructed more accurately than other-race faces.

- Other-race faces were perceived as more average-looking.

- Surprisingly, other-race faces appeared younger when reconstructed.

- Brain responses to other-race faces were less distinct, indicating more generalized processing [2].
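Reconstruction accuracy that is "well above chance" is often quantified with a pairwise test. Each reconstruction is compared with its own target and with every other target, and a comparison counts as correct when the reconstruction is closer to its own target, so chance is 50%. The sketch below assumes this metric, Euclidean distance in a latent space, a hypothetical function name and synthetic data. The studies summarized here may have measured accuracy differently, for example in image space.

```python
import numpy as np


def pairwise_accuracy(recons: np.ndarray, targets: np.ndarray) -> float:
    """Share of (i, j != i) comparisons in which reconstruction i is closer to its own
    target i than to foil target j. Ties count as half correct; chance level is 0.5."""
    n = len(targets)
    dist = np.linalg.norm(recons[:, None, :] - targets[None, :, :], axis=-1)  # dist[i, j]
    own = np.diag(dist)[:, None]                 # distance from each reconstruction to its own target
    score = (own < dist) + 0.5 * (own == dist)   # 1 = correct, 0.5 = tie, 0 = wrong
    off_diag = ~np.eye(n, dtype=bool)            # drop each reconstruction vs. its own target
    return float(score[off_diag].mean())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    targets = rng.normal(size=(40, 512))
    # Synthetic assumption: other-race reconstructions are noisier copies of their targets.
    same_race = targets + 2.0 * rng.normal(size=targets.shape)
    other_race = targets + 4.0 * rng.normal(size=targets.shape)
    print("same-race accuracy:", pairwise_accuracy(same_race, targets))
    print("other-race accuracy:", pairwise_accuracy(other_race, targets))
```

Comparing the two accuracies within the same participant group is what would reveal a same-race advantage of the kind the findings describe.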

Neural Processing Differences

EEG data analysis showed that the brain processes same-race and other-race faces differently. Neural recordings associated with visual perception showed less differentiation for other-race faces, suggesting that our brains tend to group these faces together [2].
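The "less differentiation" finding can be pictured with a simple representational measure. Treat each face's EEG response within a time window as one vector (here 0 to 600 ms, the window the source articles mention). Then average the distances between the vectors evoked by different faces: a smaller average means the faces are harder to tell apart at the neural level.

This is a minimal sketch assuming trial-averaged responses, Euclidean distance, hypothetical function names and synthetic data. The studies themselves may have used other analyses, such as pairwise classification of EEG patterns.

```python
import numpy as np


def window_features(eeg: np.ndarray, times_ms: np.ndarray,
                    start: float = 0.0, stop: float = 600.0) -> np.ndarray:
    """Flatten the channels x time points falling in [start, stop) ms into one vector per face.

    eeg: array of shape (n_faces, n_channels, n_times) holding trial-averaged responses.
    """
    in_window = (times_ms >= start) & (times_ms < stop)
    return eeg[:, :, in_window].reshape(eeg.shape[0], -1)


def mean_pairwise_distance(patterns: np.ndarray) -> float:
    """Average Euclidean distance over every unordered pair of face patterns (rows)."""
    diffs = patterns[:, None, :] - patterns[None, :, :]
    dists = np.sqrt((diffs ** 2).sum(axis=-1))
    upper = np.triu_indices(len(patterns), k=1)  # count each pair once, skip the diagonal
    return float(dists[upper].mean())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    times = np.arange(-100, 800, 4)                     # hypothetical 250 Hz sampling, in ms
    n_faces, n_channels = 20, 64
    common = rng.normal(size=(n_channels, times.size))  # response shared by all faces
    # Synthetic assumption: face-specific deviations are smaller for other-race faces.
    same_race = common + 1.0 * rng.normal(size=(n_faces, n_channels, times.size))
    other_race = common + 0.5 * rng.normal(size=(n_faces, n_channels, times.size))
    print("same-race:", mean_pairwise_distance(window_features(same_race, times)))
    print("other-race:", mean_pairwise_distance(window_features(other_race, times)))
```

A lower value for the other-race set corresponds to the "less distinct" responses the researchers describe. With real recordings, a noise-controlled measure such as cross-validated distances would be needed, which this sketch leaves out.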

Implications for Bias and Recognition

These findings help explain why people often struggle to recognize faces from other races. The research suggests that the brain processes the facial appearance of other-race faces less distinctly and accurately, which may contribute to recognition difficulties and reinforce implicit biases [1].
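One simple way to make "more average-looking" concrete is to measure how far each reconstructed face's latent code lies from the mean latent code of the stimulus set, treating that mean as the "average face". Reconstructions that carry less face-specific detail sit closer to it. This operationalization is an illustrative assumption, not a measure taken from the studies. The shrinkage model, the function names and the data below are all hypothetical.

```python
import numpy as np


def distance_from_average(latents: np.ndarray, average: np.ndarray) -> np.ndarray:
    """Euclidean distance of each latent code (one per row) from the 'average face' code."""
    return np.linalg.norm(latents - average, axis=1)


def shrink_toward_average(latents: np.ndarray, average: np.ndarray, detail: float) -> np.ndarray:
    """Hypothetical model of a less detailed percept: keep only a fraction `detail`
    (0 to 1) of each face's deviation from the average."""
    return average + detail * (latents - average)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    stimuli = rng.normal(size=(20, 512))
    average = stimuli.mean(axis=0)
    same_race = shrink_toward_average(stimuli, average, detail=0.9)
    other_race = shrink_toward_average(stimuli, average, detail=0.6)
    print("same-race mean distance:", distance_from_average(same_race, average).mean())
    print("other-race mean distance:", distance_from_average(other_race, average).mean())
```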


Potential Real-World Applications

The research could have far-reaching implications across several fields:

- Facial Recognition Software: Improving accuracy and reducing bias.

- Eyewitness Testimony: Enhancing the reliability of identifications.

- Mental Health Diagnostics: Potential use in diagnosing disorders like schizophrenia or borderline personality disorder.

- Social Bias: Developing strategies to combat racial bias in various settings, including job interviews [2].

Future Directions

The researchers emphasize the importance of further exploring perceptual bias to develop strategies for reducing its impact in social interactions. By better understanding how the brain processes faces, we may be able to mitigate the effects of bias when meeting people from different racial backgrounds [2].

Summarized by Navi
