AI Companions and Teens: The Growing Concern Over Digital Relationships

2 Sources


[1]

'No children should be having a relationship with AI,' says author of 'The Anxious Generation': How parents can limit use of chatbots

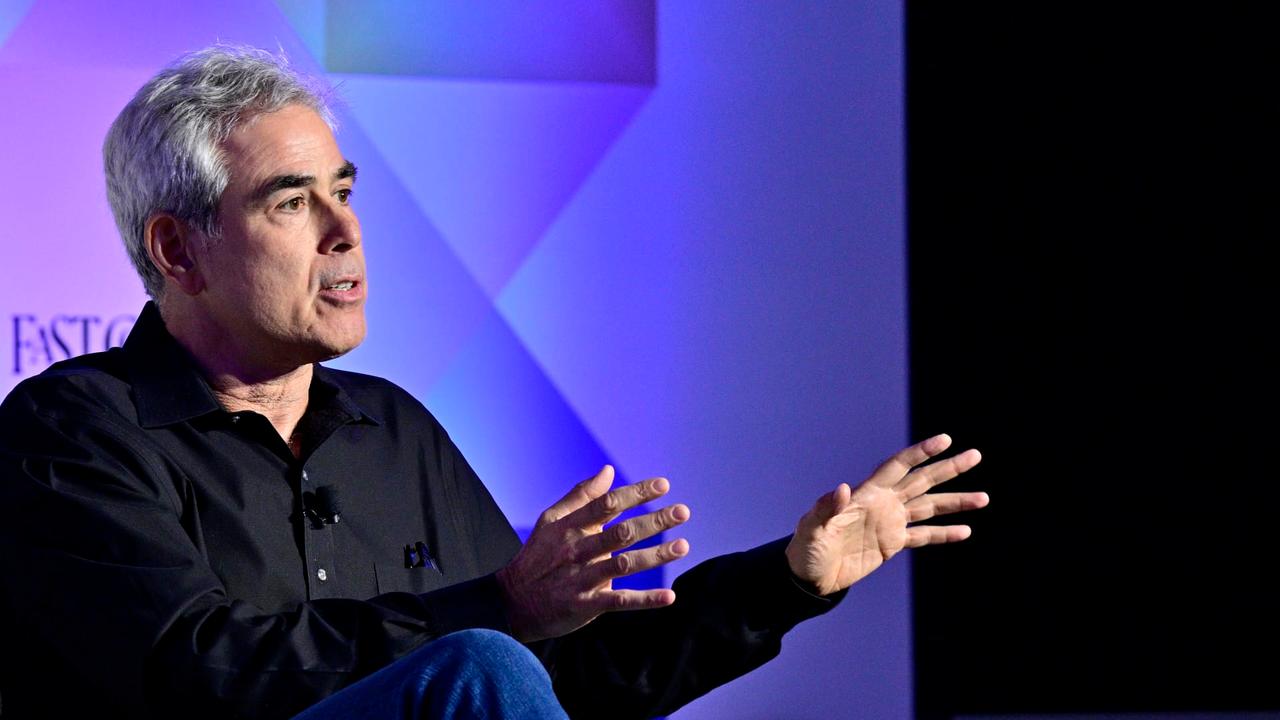

More and more teens are interacting with chatbots like OpenAI's ChatGPT, Character.AI and Meta AI. A majority of teens ages 13 to 17 (72%) have used AI companions at least once, according to a July 2025 report by the nonprofit Common Sense Media. More than half (52%) interact with the platforms at least a few times a month, and 13% are daily users. While teen use of chatbots can be fairly benign (46% say they use them as a tool or program), for some, the reliance and relationship can go much deeper, sometimes to tragic ends. Last week, parents of teens who died by suicide after extensive chatbot use testified before Congress about the dangers of this new tech.

NYU Stern School of Business professor Jonathan Haidt, bestselling author of "The Anxious Generation," has spent the last few years sounding the alarm about teen tech use. He has also been giving parents actionable steps they can take to protect their kids' mental health.

AI chatbots are "incredibly dangerous," Haidt told CNBC Make It at the Fast Company Innovation Festival last week. "We have deaths. We have delusions in adults as well. And right now, the most dangerous thing seems to be the relationships, the long conversations."

If kids are using AI as a tool to learn and to find information, it's "generally a good thing," Haidt says. In fact, by high school, they'll probably have to use it for assignments. The problem arises when the use of this tech takes a wrong turn and kids start to build relationships with it. That's where, Haidt says, it's prudent for parents to set boundaries.

To ensure kids turn to AI chatbots only as a tool, parents can set rules about how they use them at home. They can restrict use to a shared device like a family computer. Haidt advises parents to set a limit on how long their kids can engage in "conversation" with a chatbot and suggests no more than 30 rounds of back-and-forth.

In the stories that ended tragically, conversations were "1,000s and 1,000s of rounds," and that's what made the difference, he says. In an August 2025 blog post that addressed the rapid adoption of ChatGPT and the app's safety, OpenAI wrote, "Our safeguards work more reliably in common, short exchanges. We have learned over time that these safeguards can sometimes be less reliable in long interactions."

Haidt contends that because tech companies "have a long track record of harming children at an industrial scale," the onus is on parents to enforce strict rules around using their products. "No children should be having a relationship with AI," he says.

[2]

Teens are turning to AI for friendship: New report warns parents to step in before it's too late, suggests what they can do

A new Common Sense Media report reveals that 72% of U.S. teens have used AI companions, with 13% chatting daily. While many use them for curiosity or fun, one-third seek emotional support or friendship, raising concerns that AI could replace human bonds. Experts warn that long conversations can deepen dependency and expose teens to harmful content. Parents are urged to set boundaries, encourage open dialogue, and reinforce that AI is a tool, not a substitute for real relationships.

The teenage years have always been a search for connection, but in 2025, that search increasingly extends into conversations with machines. A new report from Common Sense Media, "Talk, Trust, and Trade-Offs: How and Why Teens Use AI Companions," reveals that 72% of U.S. teens have used AI companions such as Character.AI, Replika, or ChatGPT in a conversational role. More than half (52%) are regular users, and 13% chat with AI daily.

While many teens view AI companions as tools for curiosity or entertainment, one-third use them for emotional support, friendship, or even flirtation. The concern, experts say, is that these digital bonds can sometimes deepen into relationships that replace human connection. The report highlights that nearly one in three teens has chosen to share something serious with an AI instead of turning to friends or family. About a quarter admit to sharing personal details like their name or location. For adolescents still developing social and emotional skills, this shift raises red flags.

Despite this, most teens remain clear-eyed. Nearly 80% spend more time with real friends than with AI, and two-thirds say human conversations are more satisfying. But researchers caution that even a small number of harmful experiences, such as exposure to unsafe advice, offensive stereotypes, or dangerous suggestions, can have serious consequences when scaled to millions of young users.
NYU professor Jonathan Haidt, author of "The Anxious Generation," has been outspoken about the risks of extended AI use among teens. Speaking at the Fast Company Innovation Festival, as reported by CNBC Make It, Haidt warned that "no children should be having a relationship with AI" and that "the most dangerous thing seems to be the relationships, the long conversations." He recommends that parents set limits (no more than 30 rounds of back-and-forth with chatbots) to ensure teens use AI for information, not emotional dependency. OpenAI itself has acknowledged that its safety systems are more reliable in short exchanges than in lengthy conversations. Last week's testimony before Congress by parents whose children died after excessive chatbot use underscores the urgency of the issue.

Experts stress that parents do not need to be technology experts to make a difference. The Common Sense Media report urges families to:

"Teens may experiment with AI the way previous generations experimented with music or video games," the report notes. "But unlike those, AI companions are designed to mimic intimacy."

AI companions can help teens practice social skills, brainstorm ideas, or learn languages. In fact, nearly 40% of users report applying lessons from AI chats in real life, especially in starting conversations. But until tech companies introduce stricter safeguards, researchers recommend keeping AI companions firmly in the "tool" category rather than letting them drift into "friend." For parents, that means drawing a line between healthy exploration and risky reliance. As Haidt puts it, tech companies "have a long track record of harming children at an industrial scale," so the onus is on parents to enforce strict rules. The challenge is clear: teaching teens that while AI can talk like a friend, it cannot be one.


A new report reveals widespread use of AI chatbots among teens, raising concerns about the impact on mental health and social relationships. Experts urge parents to set boundaries and emphasize human connections.

The Rise of AI Companions Among Teens

A recent report by Common Sense Media has shed light on a growing trend among teenagers: the widespread use of AI companions. The study reveals that a staggering 72% of U.S. teens aged 13 to 17 have used AI companions at least once, with 52% interacting with these platforms at least a few times a month and 13% engaging daily [1][2].

The Appeal and Risks of AI Relationships

While many teens view AI companions as tools for curiosity or entertainment, a concerning trend is emerging: one-third of users are turning to these digital entities for emotional support, friendship, or even flirtation [2]. This shift raises red flags for experts who worry that artificial connections could replace human ones.

Jonathan Haidt, NYU Stern School of Business professor and author of "The Anxious Generation," warns that AI chatbots can be "incredibly dangerous" when relationships develop through long conversations [1]. The risk is particularly high for adolescents still developing social and emotional skills.

Tragic Consequences and Congressional Attention

The potential dangers of excessive AI chatbot use have recently come to the forefront of national attention. Last week, parents of teens who died by suicide after extensive chatbot use testified before Congress, highlighting the urgent need for action and regulation in this space [1].

Expert Recommendations for Parents

Experts are urging parents to take an active role in managing their children's interactions with AI companions. Professor Haidt advises setting strict limits on chatbot use, suggesting no more than 30 rounds of back-and-forth conversation [1]. Other recommendations include:

- Restricting AI use to shared devices like family computers [1]
- Encouraging open dialogue about AI interactions
- Reinforcing that AI is a tool, not a substitute for real relationships
- Setting clear boundaries on sharing personal information with AI [2]


The Dual Nature of AI Companions

Despite the concerns, AI companions are not without potential benefits. Nearly 40% of teen users report applying lessons from AI chats in real life, particularly in starting conversations and practicing social skills [2]. However, experts stress the importance of keeping AI firmly in the "tool" category rather than allowing it to drift into the realm of friendship.

Industry Response and Ongoing Challenges

OpenAI, the company behind ChatGPT, has acknowledged that its safeguards work more reliably in short exchanges than in long interactions [1]. This admission underscores the need for continued development of safety measures in AI technology, especially for vulnerable populations like teenagers.

As the landscape of AI companions continues to evolve, the challenge for parents, educators, and policymakers is clear: balancing the potential benefits of AI as a learning tool against the risks of over-reliance on digital relationships. The goal is to ensure that teens maintain healthy human connections while navigating an increasingly AI-integrated world.


Summarized by Navi
