AI Enhances Brain Tumor Detection with Camouflage-Inspired Transfer Learning

3 Sources

[1]

Camouflage detection boosts neural networks for brain tumor diagnosis

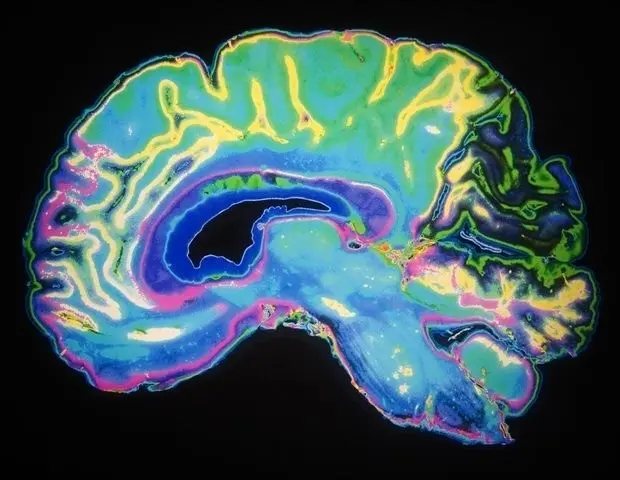

By Dr. Chinta Sidharthan. Reviewed by Lily Ramsey, LLM. Nov 21 2024

Neural networks trained with a camouflage detection step show enhanced accuracy and sensitivity in identifying brain tumors from MRI scans, mimicking expert radiologists.

Study: Deep learning and transfer learning for brain tumor detection and classification. Image Credit: Elif Bayraktar/Shutterstock.com

In a recent study published in Biology Methods and Protocols, researchers examined the use of convolutional neural networks (CNNs) and transfer learning to improve brain tumor detection in magnetic resonance imaging (MRI) scans. Using CNNs pre-trained on detecting animal camouflage for transfer learning, the study investigated whether this unconventional step could enhance the accuracy of CNNs in identifying gliomas and improve diagnostic support in medical imaging.

Background

Artificial intelligence (AI) and deep learning models, including CNNs, have made significant strides in medical imaging, especially in the detection and classification of complex patterns in tasks such as tumor detection. Furthermore, CNNs excel at learning and recognizing features from images, allowing them to categorize unseen data accurately. Additionally, transfer learning -- a process where pre-trained models are adapted to new but related tasks -- can enhance the effectiveness of CNNs, especially in image-based applications where data may be limited. While numerous CNNs have been trained on large datasets for brain tumor detection, the inherent similarities between normal and cancerous tissues continue to present challenges.

About the study

The present study used a combination of CNN-based models and transfer learning techniques to explore the classification of brain tumors using MRI scans. The researchers used a main dataset that consisted of T1-weighted and T2-weighted post-contrast MRI images showing three types of gliomas -- astrocytomas, oligodendrogliomas, and oligoastrocytomas -- as well as normal brain images. Data for glioma MRIs were obtained from online sources, while the Veterans Affairs Boston Healthcare System provided normal brain MRIs. While the researchers used manual image preprocessing, which involved cropping and resizing, no additional spatial normalization that could introduce bias was performed.

The study was unique in its use of a CNN pre-trained on detection of camouflaged animals, and the researchers hypothesized that training the CNN on patterns in camouflaged animals might enhance the network's sensitivity to subtle features in brain MRIs. They believed that parallels could be drawn between discriminating cancerous tissues and cells from the healthy tissue surrounding the tumor and detecting animals using natural camouflage to hide. This pre-trained model was used as a baseline for transfer learning in the neural networks used in the study, namely, T1Net and T2Net, to classify T1- and T2-weighted MRIs, respectively.

Furthermore, to analyze the performance of the CNN beyond parameters such as accuracy, the study employed explainable AI (XAI) techniques. The study mapped the feature spaces using principal component analysis to visualize data distribution. At the same time, DeepDreamImage provided visual interpretations of internal patterns, and Gradient-Weighted Class Activation Mapping (Grad-CAM) saliency maps highlighted the critical areas in MRI scans used by the network for classification. Cumulatively, these methods offered insights into the decision-making processes of the CNN and the impact of camouflage transfer learning on classification outcomes.

Results

The study showed that transfer learning from animal camouflage detection improved the performance of the CNNs in tasks involving the classification of brain tumors. Notably, transfer learning significantly boosted the accuracy for the T2-weighted MRI model, achieving 92.20% accuracy, which was a significant increase from the non-transfer model's 83.85% accuracy. This improvement was statistically significant with a p-value of 0.0035 and substantially enhanced the classification accuracy for astrocytomas. For T1-weighted MRI scans, the transfer-trained network showed an accuracy of 87.5%, though this improvement was not statistically significant.

Furthermore, the feature spaces generated from both models after transfer learning indicated improved generalization ability, particularly for T2Net. Compared to baseline models, the transfer-trained networks displayed a clearer separation between tumor categories, with the T2 transfer model showing enhanced distinction, especially for astrocytomas. The DeepDreamImage visualizations provided additional detail, showing more defined and distinct 'feature prints' for each glioma type in transfer-trained networks compared to baseline models. This distinction suggested that transfer learning from camouflage detection helped networks better identify key tumor characteristics, potentially by generalizing from subtle camouflage patterns.

Moreover, the Grad-CAM saliency maps revealed that both T1 and T2 networks focused on both tumor areas and surrounding tissues during classification. This was similar to the diagnostic process used by human radiologists for examining tissue distortion adjacent to tumors, indicating that the transfer-trained networks could detect more subtle, relevant features in MRI scans.

Conclusions

In summary, the study indicated that transfer learning from networks pre-trained on the detection of animal camouflage improved the performance of CNNs in classifying brain tumors in MRI scans, especially with T2-weighted images. This approach enhanced the networks' ability to detect subtle tumor features and increased classification accuracy. These findings support the potential for unconventional training sources to enhance neural network performance in complex medical imaging tasks, offering a promising direction for future AI-assisted diagnostic tools.

Journal reference: Rustom, F., Moroze, E., Parva, P., Ogmen, H., & Yazdanbakhsh, A. (2024). Deep learning and transfer learning for brain tumor detection and classification. Biology Methods and Protocols. 9(1). doi:10.1093/biomethods/bpae080. https://academic.oup.com/biomethods/article/9/1/bpae080/7903126

[2]

AI Improves Brain Tumor Detection - Neuroscience News

Summary: AI models trained on MRI data can now distinguish brain tumors from healthy tissue with high accuracy, nearing human performance. Using convolutional neural networks and transfer learning from tasks like camouflage detection, researchers improved the models' ability to recognize tumors. This study emphasizes explainability, enabling AI to highlight the areas it identifies as cancerous, fostering trust among radiologists and patients. While slightly less accurate than human detection, this method demonstrates promise for AI as a transparent tool in clinical radiology.

A new paper in Biology Methods and Protocols, published by Oxford University Press, shows that scientists can train artificial intelligence models to distinguish brain tumors from healthy tissue. AI models can already find brain tumors in MRI images almost as well as a human radiologist. Researchers have made sustained progress in artificial intelligence (AI) for use in medicine. AI is particularly promising in radiology, where waiting for technicians to process medical images can delay patient treatment. Convolutional neural networks are powerful tools that allow researchers to train AI models on large image datasets to recognize and classify images. In this way the networks can "learn" to distinguish between pictures. The networks also have the capacity for "transfer learning." Scientists can reuse a model trained on one task for a new, related project. Although detecting camouflaged animals and classifying brain tumors involve very different sorts of images, the researchers involved in this study believed that there was a parallel between an animal hiding through natural camouflage and a group of cancerous cells blending in with the surrounding healthy tissue. The learned process of generalization - the grouping of different things under the same object identity - is essential to understanding how a network can detect camouflaged objects. 
Such training could be particularly useful for detecting tumors. In this retrospective study of public domain MRI data, the researchers investigated how neural network models can be trained on brain cancer imaging data while introducing a unique camouflage animal detection transfer learning step to improve the networks' tumor detection skills. Using MRIs from public online repositories of cancerous and healthy control brains (from sources including Kaggle, the Cancer Imaging Archive of NIH National Cancer Institute, and VA Boston Healthcare System), the researchers trained the networks to distinguish healthy vs cancerous MRIs, the area affected by cancer, and the cancer appearance prototype (what type of cancer it looks like). The researchers found that the networks were almost perfect at detecting normal brain images, with only 1-2 false negatives, and distinguishing between cancerous and healthy brains. The first network had an average accuracy of 85.99% at detecting brain cancer, the other had an accuracy rate of 83.85%. A key feature of the network is the multitude of ways in which its decisions can be explained, allowing for increased trust in the models from medical professionals and patients alike. Deep models often lack transparency, and as the field grows the ability to explain how networks perform their decisions becomes important. Following this research, the network can generate images that show specific areas in its tumor-positive or negative classification. This would allow radiologists to cross-validate their own decisions with those of the network and add confidence, almost like a second robotic radiologist who can show the telltale area of an MRI that indicates a tumor. In the future, the researchers here believe it will be important to focus on creating deep network models whose decisions can be described in intuitive ways, so artificial intelligence can occupy a transparent supporting role in clinical environments. 
While the networks struggled more to distinguish between types of brain cancer in all cases, it was still clear they had distinct internal representations in the network. The accuracy and clarity improved as the researchers trained the networks in camouflage detection. Transfer learning led to an increase in accuracy for the networks. While the best performing proposed model was about 6% less accurate than standard human detection, the research successfully demonstrates the quantitative improvement brought on by this training paradigm. The researchers here believe that this paradigm, combined with the comprehensive application of explainability methods, promotes necessary transparency in future clinical AI research.

"Advances in AI permit more accurate detection and recognition of patterns," said the paper's lead author, Arash Yazdanbakhsh. "This consequently allows for better imaging-based diagnosis aid and screening, but also necessitate more explanation for how AI accomplishes the task. Aiming for AI explainability enhances communication between humans and AI in general.

"This is particularly important between medical professionals and AI designed for medical purposes. Clear and explainable models are better positioned to assist diagnosis, track disease progression, and monitor treatment."

Deep Learning and Transfer Learning for Brain Tumor Detection and Classification

Convolutional neural networks (CNNs) are powerful tools that can be trained on image classification tasks and share many structural and functional similarities with biological visual systems and mechanisms of learning. In addition to serving as a model of biological systems, CNNs possess the convenient feature of transfer learning where a network trained on one task may be repurposed for training on another, potentially unrelated, task. 
In this retrospective study of public domain MRI data, we investigate the ability of neural network models to be trained on brain cancer imaging data while introducing a unique camouflage animal detection transfer learning step as a means of enhancing the networks' tumor detection ability. Training on glioma and normal brain MRI data, post-contrast T1-weighted and T2-weighted, we demonstrate the potential success of this training strategy for improving neural network classification accuracy. Qualitative metrics such as feature space and DeepDreamImage analysis of the internal states of trained models were also employed, which showed improved generalization ability by the models following camouflage animal transfer learning. Image saliency maps further this investigation by allowing us to visualize the most important image regions from a network's perspective while learning. Such methods demonstrate that the networks not only 'look' at the tumor itself when deciding, but also at the impact on the surrounding tissue in terms of compressions and midline shifts. These results suggest an approach to brain tumor MRIs that is comparable to that of trained radiologists while also exhibiting a high sensitivity to subtle structural changes resulting from the presence of a tumor.

[3]

AI models can be trained to distinguish brain tumors from healthy tissue

Oxford University Press USA. Nov 20 2024

A new paper in Biology Methods and Protocols, published by Oxford University Press, shows that scientists can train artificial intelligence models to distinguish brain tumors from healthy tissue. AI models can already find brain tumors in MRI images almost as well as a human radiologist. Researchers have made sustained progress in artificial intelligence (AI) for use in medicine. AI is particularly promising in radiology, where waiting for technicians to process medical images can delay patient treatment. Convolutional neural networks are powerful tools that allow researchers to train AI models on large image datasets to recognize and classify images. In this way the networks can "learn" to distinguish between pictures. The networks also have the capacity for "transfer learning." Scientists can reuse a model trained on one task for a new, related project. Although detecting camouflaged animals and classifying brain tumors involve very different sorts of images, the researchers involved in this study believed that there was a parallel between an animal hiding through natural camouflage and a group of cancerous cells blending in with the surrounding healthy tissue. The learned process of generalization - the grouping of different things under the same object identity - is essential to understanding how a network can detect camouflaged objects. Such training could be particularly useful for detecting tumors. In this retrospective study of public domain MRI data, the researchers investigated how neural network models can be trained on brain cancer imaging data while introducing a unique camouflage animal detection transfer learning step to improve the networks' tumor detection skills. 
Using MRIs from public online repositories of cancerous and healthy control brains (from sources including Kaggle, the Cancer Imaging Archive of NIH National Cancer Institute, and VA Boston Healthcare System), the researchers trained the networks to distinguish healthy vs cancerous MRIs, the area affected by cancer, and the cancer appearance prototype (what type of cancer it looks like). The researchers found that the networks were almost perfect at detecting normal brain images, with only 1-2 false negatives, and distinguishing between cancerous and healthy brains. The first network had an average accuracy of 85.99% at detecting brain cancer, the other had an accuracy rate of 83.85%. A key feature of the network is the multitude of ways in which its decisions can be explained, allowing for increased trust in the models from medical professionals and patients alike. Deep models often lack transparency, and as the field grows the ability to explain how networks perform their decisions becomes important. Following this research, the network can generate images that show specific areas in its tumor-positive or negative classification. This would allow radiologists to cross-validate their own decisions with those of the network and add confidence, almost like a second robotic radiologist who can show the telltale area of an MRI that indicates a tumor. In the future, the researchers here believe it will be important to focus on creating deep network models whose decisions can be described in intuitive ways, so artificial intelligence can occupy a transparent supporting role in clinical environments. While the networks struggled more to distinguish between types of brain cancer in all cases, it was still clear they had distinct internal representation in the network. The accuracy and clarity improved as the researchers trained the networks in camouflage detection. Transfer learning led to an increase in accuracy for the networks. 
While the best performing proposed model was about 6% less accurate than standard human detection, the research successfully demonstrates the quantitative improvement brought on by this training paradigm. The researchers here believe that this paradigm, combined with the comprehensive application of explainability methods, promotes necessary transparency in future clinical AI research.

"Advances in AI permit more accurate detection and recognition of patterns. This consequently allows for better imaging-based diagnosis aid and screening, but also necessitate more explanation for how AI accomplishes the task. Aiming for AI explainability enhances communication between humans and AI in general. This is particularly important between medical professionals and AI designed for medical purposes. Clear and explainable models are better positioned to assist diagnosis, track disease progression, and monitor treatment."

Arash Yazdanbakhsh, paper's lead author

Oxford University Press USA

Journal reference: Rustom, F., et al. (2024). Deep learning and transfer learning for brain tumor detection and classification. Biology Methods and Protocols. doi.org/10.1093/biomethods/bpae080.

A new study shows that AI models using convolutional neural networks and transfer learning from camouflage detection can improve brain tumor identification in MRI scans, approaching human-level accuracy while offering explainable results.

AI Models Improve Brain Tumor Detection with Innovative Transfer Learning

A study published in Biology Methods and Protocols has demonstrated that artificial intelligence (AI) models can be trained to distinguish brain tumors from healthy tissue with accuracy approaching that of human radiologists [1]. The research, led by Arash Yazdanbakhsh, introduces an approach that uses convolutional neural networks (CNNs) and transfer learning from camouflage detection to improve brain tumor identification in magnetic resonance imaging (MRI) scans.

Innovative Approach: Camouflage Detection for Tumor Identification

The study's unique aspect lies in its use of CNNs pre-trained on detecting camouflaged animals. Researchers hypothesized that the skills learned in identifying hidden animals could translate to detecting subtle differences between cancerous and healthy brain tissues [2]. This unconventional approach aimed to improve the network's sensitivity to nuanced features in brain MRIs.

Methodology and Data Sources

The research team utilized a dataset comprising T1-weighted and T2-weighted post-contrast MRI images showing various types of gliomas and normal brain images. Data sources included public repositories such as Kaggle, the Cancer Imaging Archive of NIH National Cancer Institute, and the Veterans Affairs Boston Healthcare System [3].

Impressive Results and Accuracy

The study reported higher tumor classification accuracy after transfer learning:

- T2-weighted MRI model achieved 92.20% accuracy, up from 83.85% in the non-transfer model, a statistically significant gain (p = 0.0035).

- T1-weighted MRI model reached 87.5% accuracy after transfer learning, although this improvement was not statistically significant.

- Overall, the networks demonstrated near-perfect detection of normal brain images, with only 1-2 false negatives.

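To put the T2 figures in perspective, the gain can be expressed both in percentage points and as a relative reduction in misclassifications. The following is a minimal Python sketch that uses only the two accuracies reported in the source coverage (92.20% and 83.85%); the function and constant names are illustrative and do not come from the study.

```python
def percentage_point_gain(baseline_acc, new_acc):
    """Absolute improvement in accuracy, in percentage points."""
    return new_acc - baseline_acc


def relative_error_reduction(baseline_acc, new_acc):
    """Fraction of the baseline's errors that the new model eliminates."""
    baseline_err = 100.0 - baseline_acc
    new_err = 100.0 - new_acc
    return (baseline_err - new_err) / baseline_err


# T2-weighted model accuracies as reported in the source coverage.
T2_BASELINE = 83.85
T2_TRANSFER = 92.20

gain = percentage_point_gain(T2_BASELINE, T2_TRANSFER)
reduction = relative_error_reduction(T2_BASELINE, T2_TRANSFER)
print(f"gain: {gain:.2f} percentage points")
print(f"relative error reduction: {reduction:.1%}")
```

On these figures the gain is 8.35 percentage points, which corresponds to removing roughly half of the non-transfer model's misclassifications.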
Explainable AI: Enhancing Trust and Transparency

A key feature of this research is the focus on explainable AI (XAI) techniques:

- DeepDreamImage visualizations provided more defined 'feature prints' for each glioma type in transfer-trained networks.

- GradCAM saliency maps revealed that networks focused on both tumor areas and surrounding tissues, mimicking the diagnostic process of human radiologists.

- The network can generate images highlighting specific areas in its tumor classification, allowing radiologists to cross-validate their decisions [1].

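The core Grad-CAM computation behind such saliency maps is compact enough to sketch directly. The Python example below is illustrative only: the 2x2 feature maps and gradients are made-up numbers, not values from the study, and in practice both would be read from the last convolutional layer of a trained CNN. Each feature map is weighted by the spatial average of its gradient with respect to the class score, the weighted maps are summed, and negative values are clipped to zero.

```python
def grad_cam(activations, gradients):
    """Compute a Grad-CAM heatmap from per-channel feature maps.

    activations, gradients: lists of K channels, each an H x W nested list.
    gradients[k][i][j] is d(class score) / d(activations[k][i][j]).
    """
    height = len(activations[0])
    width = len(activations[0][0])

    # Channel weight = global average of that channel's gradient.
    weights = [
        sum(sum(row) for row in grad) / (height * width)
        for grad in gradients
    ]

    # Weighted sum of feature maps, followed by ReLU.
    heatmap = [[0.0] * width for _ in range(height)]
    for weight, fmap in zip(weights, activations):
        for i in range(height):
            for j in range(width):
                heatmap[i][j] += weight * fmap[i][j]
    return [[max(0.0, v) for v in row] for row in heatmap]


# Hypothetical values for two 2x2 feature maps (not from the study).
toy_activations = [
    [[1.0, 0.0], [0.0, 2.0]],
    [[0.0, 3.0], [1.0, 0.0]],
]
toy_gradients = [
    [[0.5, 0.5], [0.5, 0.5]],      # channel 0 supports the class
    [[-1.0, -1.0], [-1.0, -1.0]],  # channel 1 argues against it
]

toy_heatmap = grad_cam(toy_activations, toy_gradients)
print(toy_heatmap)
```

In a real pipeline the resulting heatmap would typically be upsampled to the resolution of the MRI slice and overlaid on it, so that the highlighted regions can be compared with the radiologist's own reading.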
Implications for Clinical Practice

While the best-performing model was about 6% less accurate than standard human detection, the research demonstrates significant potential for AI in clinical radiology:

- The AI models could serve as a "second robotic radiologist," providing additional confidence in diagnoses.

- The explainable nature of the AI decisions promotes transparency and trust among medical professionals and patients.

- This approach could lead to faster and more accurate imaging-based diagnoses, potentially reducing delays in patient treatment [2].

Summarized by Navi
