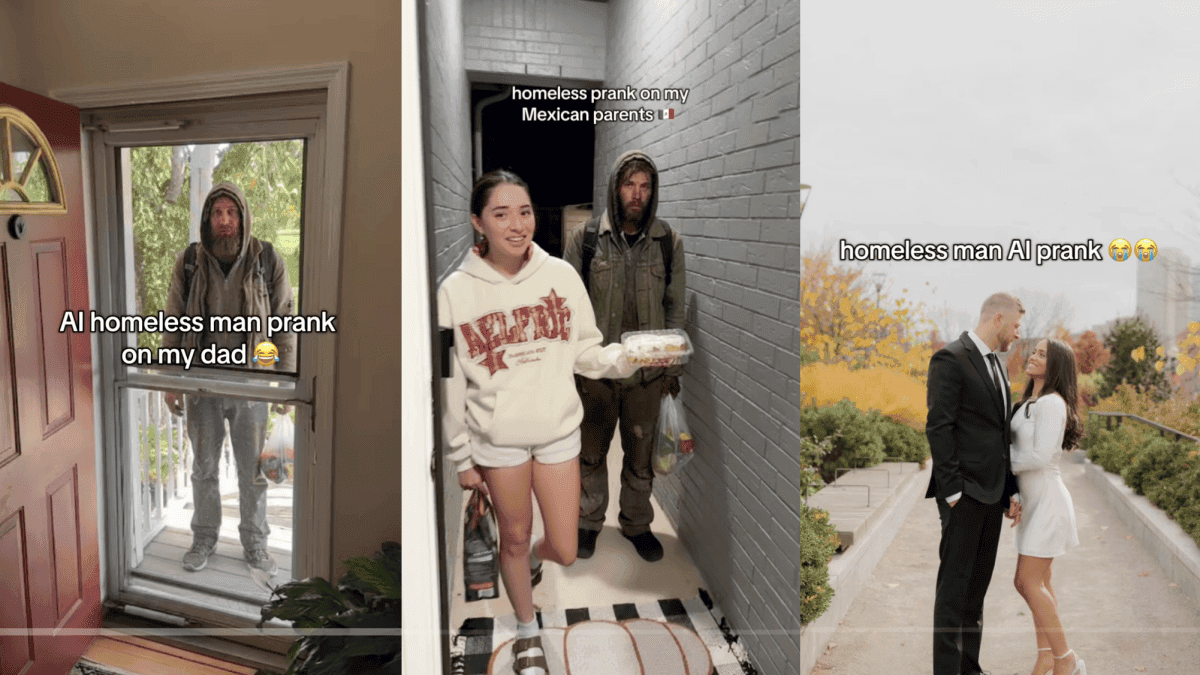

AI-Generated 'Homeless Intruder' Prank Sparks Police Warnings and Ethical Concerns

6 Sources


[1]

Police are asking kids to stop pulling AI homeless man prank

We've been so worried about deepfaked politicians, AI musicians, virtual actresses, and phony satellite imagery that we didn't even consider the dangers posed by precocious teenagers. Kids are using AI to create images of a disheveled, seemingly unhoused person in their home and sending them to their parents. Understandably, the parents are not thrilled, and in some instances they call the police. The prank has gone viral on TikTok and, in addition to giving parents agita, has become a headache for law enforcement.

The premise is simple enough: kids use Snapchat's AI tools to create images of a grimy man in their home and tell their parents they let him in to use the bathroom, take a nap, or just get a drink of water. Often they say the man claims to know the parents from work or college. And then, predictably, the parents lose their cool and demand that the kids kick the man out. The kids, of course, record the whole thing and post their parents' reactions to TikTok, where some of the clips have millions of views.

Things go from problematic to potentially dangerous when the prank carries on for too long and parents call the authorities. Police treat calls about a home invasion, especially ones involving children, as high priority, so pranks like this tie up valuable resources and could actually put the pranksters in danger. Round Rock Police Patrol Division Commander Andy McKinney told NBC that it could even "cause a SWAT response."

The Salem, MA police department summed it up best in a statement: "This prank dehumanizes the homeless, causes the distressed recipient to panic and wastes police resources. Police officers who are called upon to respond do not know this is a prank and treat the call as an actual burglary in progress thus creating a potentially dangerous situation." So, while we all love a good prank, maybe let this one go.

[2]

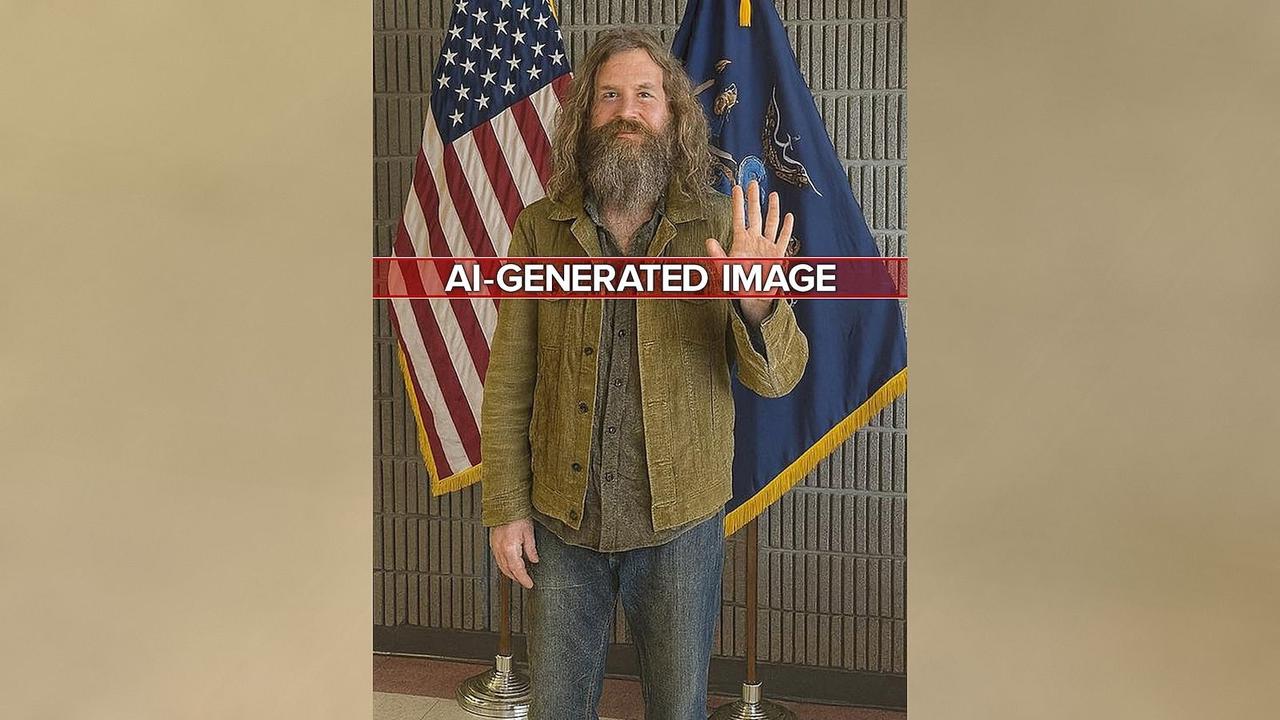

Police Warn Against Pranking People With A.I. Images of Homeless Intruder

Law enforcement departments in several states said that an online trend in which people are tricked into believing a homeless man is in their homes could have dangerous consequences.

Police departments in at least four states are warning about an online trend in which people are using A.I.-generated images to trick friends and relatives into believing that a homeless man had invaded their homes. They say that the "disturbing" and "dangerous" prank causes panic and wastes public resources, as it can result in police officers responding to nonexistent emergencies.

The stunt received attention after hundreds of videos with hashtags like #aihomelessprank and #homelessmanprank circulated on TikTok; at least one video raked in over a million views.

The prank works like this: A person texts a friend or family member an A.I.-generated picture of a homeless man knocking at the door, eating in the kitchen or lying in bed at the home. The sender often claims that the man had said he was an acquaintance and had asked to come inside or for food. Then, the person who receives the picture reacts, often with concern or panic. The prankster then includes the reaction in a video posted to social media.

The pranks can cause panic, waste resources and are dangerous, according to police agencies in Massachusetts, Michigan, New York and Wisconsin. The police said they had responded to multiple calls of home intrusions, only for officers dispatched to the scene to learn that the break-ins were fake.

"There are so many scenarios that could go wrong," said Capt. John Burke of the Salem Police Department in Massachusetts. If a worried family member calls to report an intruder with a weapon, armed officers dispatched to the scene could confront an unaware house occupant or neighbor, creating a potentially dangerous situation, he said. Causing panic and distress to an older person could prompt a medical complication, he added.

The Shawano County Sheriff's Office in Wisconsin, the Yonkers Police Department in New York and the West Bloomfield Police Department in Michigan all said in their statements that the prank "isn't funny" and could be dangerous for both the police and the public. "It's a real safety risk for officers who are responding and for the family members who are home if our officers get there before the prank is revealed," the Yonkers Police Department said in its statement.

The prank also "dehumanizes the homeless," the Salem Police Department said in its statement. It noted that someone who causes emergency services to be dispatched to a nonexistent emergency could face a prison term of up to two and a half years or a fine of up to $1,000.

TikTok did not comment directly on the homeless man prank but has said that creators are required to label content generated by A.I. and that the company was aggressive in removing content that violates guidelines.

A.I. has been used by women in a similar prank in which images of muscular, shirtless plumbers are generated in pictures of bathrooms and kitchens to elicit jealous reactions from boyfriends. Videos of the pranks involving homeless men and shirtless plumbers have been shared hundreds of times and have generated thousands of comments. Tutorials on how to use A.I. to create those images have also gained traction online.

As A.I. hoaxes become more common, law enforcement agencies will need to find a new way to classify and discipline people who generate false reports, the police say. "I look at it like a new version of swatting," Captain Burke of the Salem Police Department said. "It's a really, really terrible idea."

[3]

Creators use AI to prank family with fake 'homeless intruders'

TikTok's latest trend uses AI to fake home intrusions -- and it's dangerous. Credit: TikTok / mmmjoemele / julieandcorey

There's a new TikTok trend, and it's dangerous, manipulative, and feeds off the dehumanization of people facing housing insecurity. People are using AI to generate false images of "homeless" men entering their houses to trick their parents, roommates, or partners.

In one viral video, creator Joe Mele used AI to create an image of someone who looks unhoused standing on the other side of his screened front door. He sent the picture to his dad with the text: "Hey dad there's this guy at the front door, he says he knows you?"

"No I don't know him," his dad seemingly said. "What does he want?"

"He said you guys went to school together, I invited him in," Mele responded, along with another AI-generated photo of the man sitting on his couch. "JOE PICK UP THE PHONE," his dad responded. "I DON'T KNOW HIM!!!!!!!!" He followed up with "Hello???" and three missed calls.

"He said he's hungry, grabbing a quick snack," Mele sent next, with another AI-generated photo of the same AI-generated man taking food out of an open refrigerator. "PICK UP THE PHONE," his dad said. "Are you getting my calls?" he added, along with a screenshot of seven missed calls.

This goes on for some time, as Mele tells it. Mele sends AI-generated photos of the man using his dad's toothbrush and sleeping in his dad's bed. The video has racked up over 10.4 million views, and it's not the only one. There are dozens of videos with thousands of views following the same trend, many of which use Google Gemini AI, according to one user. Google recently added its new Nano Banana AI image tool to Gemini, which makes it easy to edit photos. Of course, Mele's entire video could be some kind of scripted skit, but Mele's hardly the only one making videos like this.

Not all parents, roommates, and partners respond with panicked texts and phone calls, as intended. Some respond with an immediate call to the police. The BBC reported that Dorset Police have received calls based on the prank and asked people to "please attempt to check it isn't a prank before [dialing] 999" if they "receive a message and pictures similar to the above antics from friends or family." The Salem Police Department in Massachusetts also posted a news release about the trend, calling the prank "stupid and potentially dangerous."

Not only does the prank involve manipulating loved ones, but it's also a pretty blatant dehumanization of people facing housing insecurity, depicting them as scary, dirty, or invasive -- all harmful stereotypes -- and using them as a prop for a joke. "This prank dehumanizes the homeless, causes the distressed recipient to panic and wastes police resources," the City of Salem Police Department wrote. "Police officers who are called upon to respond do not know this is a prank and treat the call as an actual burglary in progress thus creating a potentially dangerous situation."

[4]

Police issue warning about AI-assisted "homeless man" home invasion social media prank

Paula Wethington is a digital producer at CBS Detroit. She previously held digital content roles at NEWSnet, Gannett/USA Today network and The Monroe News in Michigan. She is a graduate of the University of South Carolina.

A trending social media prank that uses AI-altered photos to simulate a home invasion has police asking the community to be on the alert for such content.

The West Bloomfield Police Department explains that a trend nicknamed the "AI Homeless Man Prank" has been picking up in popularity on social media platforms such as TikTok. As part of that trend, someone uses artificial intelligence-based photo manipulation techniques to create fake images of an intruder inside a home, with the intent "to scare family or roommates," police said. Such an instance has been reported to West Bloomfield police, Monday's report said. Meanwhile, the Michigan officers learned that the Yonkers Police Department in New York State had reported similar pranks a few days earlier.

"While it may seem like a joke, this 'prank' isn't funny," West Bloomfield police said. The implications include causing panic among residents, the possibility of dangerous or violent reactions, and wasting the time and resources of emergency responders.

"Parents: Please talk to your kids about the real consequences of these trends. What seems funny online could have serious emotional and or legal consequences," West Bloomfield police said.

The Yonkers Police Department also urged people to ask roommates or family members questions before calling 911 to report such an image. "Make sure it's real. Make sure your family members know you're going to call 911 and to tell you THEN if it's a prank, before it's too late," they said.

[5]

'AI homeless man prank' on social media prompts concern from local authorities

He's gray-haired, bearded, wearing no shoes and standing at Rae Spencer's doorstep. "Babe," the content creator wrote in a text to her husband. "Do you know this man? He says he knows you?" Spencer's husband, who responded immediately with "no," appeared to express shock when she then sent more images of the man pictured inside their home, sitting on their couch and taking a nap on their bed. She said he FaceTimed her, "shaking" in fear. But the man wasn't real. Spencer, based in St. Augustine, Florida, had created the images using an artificial intelligence-based generator. She sent them to her husband and posted their exchange to TikTok as part of a viral trend that some online refer to as the "AI homeless man prank." Over 5 million people have liked Spencer's video on TikTok, where the hashtag #homelessmanprank populated more than 1,200 videos, most of them related to the recent trend. Some have also used the hashtag #homelessman to post their videos, all of which center on the idea of tricking people into believing that there is a stranger inside their home. Several people have also posted tutorials about how to make the images. The trend has also spread to other social media platforms, including Snapchat and Instagram. As the prank gains traction online, local authorities have started issuing warnings to participants -- who they say primarily are teens -- about the dangers of misusing AI to spread false information. "Besides being in bad taste, there are many reasons why this prank is, to put it bluntly, stupid and potentially dangerous," police officials in Salem, Massachusetts, wrote on their website this month. "This prank dehumanizes the homeless, causes the distressed recipient to panic and wastes police resources. Police officers who are called upon to respond do not know this is a prank and treat the call as an actual burglary in progress thus creating a potentially dangerous situation." 
Even overseas, some local officials have reported false home invasions tied to the trend. In the United Kingdom, Dorset Police issued a warning after the force deployed resources when it received a "call from an extremely concerned parent" last week, only to learn it was a prank, according to the BBC. An Garda Síochána, Ireland's national police department, also wrote a message on its Facebook and X pages, sharing two recent images authorities received that were made using generative AI tools. The prank is the latest example of AI's ability to deceive through fake imagery. The proliferation of photorealistic AI image and video generators in recent years has given rise to an internet full of AI-made "slop": media of fake people and scenarios that -- despite exhibiting telltale signs of AI -- fool many people online, especially older internet users. As the technologies grow more sophisticated, many find it even harder to distinguish between what's real and what's fake. Last year, Katy Perry shared that her own mother was tricked by an AI-generated image of her attending the Met Gala. Even if most such cases don't involve nefarious intent, the pranks underscore how easily AI can potentially manipulate real people. With the recent release of Sora 2, an OpenAI employee touted the video generator's ability to create realistic security video of CEO Sam Altman stealing from Target -- a clip that drew concern from some who worry about how AI might be used to carry out mass manipulation campaigns. AI image and video generators typically put watermarks on their outputs to indicate the use of AI. But users can easily crop them out. It's unclear which specific AI models were used in many of the video pranks. 
When NBC News asked OpenAI's ChatGPT to "generate an image of a homeless man in my home," the bot replied, "I can't create or edit an image like that -- it would involve depicting a real or implied person in a situation of homelessness, which could be considered exploitative or disrespectful." Asked the same question, Gemini, Google's AI assistant, replied: "Absolutely. Here is the image you requested." OpenAI and Google didn't immediately respond to requests for comment. Representatives for Snap and Meta (which owns Instagram) didn't provide comments. Reached for comment, TikTok said it added labels to videos that NBC News had flagged related to the trend to clarify that they are AI-generated content. TikTok also referred NBC News to its Community Guidelines, which require creators to label AI-generated or significantly edited content that shows realistic-looking scenes or people. Oak Harbor, Washington, police officials warned that "AI tools can create highly convincing images, and misinformation can spread quickly, causing unnecessary fear or diverting public safety resources." The police department issued a statement after a social media post appeared to show "a homeless individual was present on the Oak Harbor High School Campus." The claim turned out to be a hoax, officials said. The police department said it's working with the school district to investigate the incident and "address the dissemination of this fabricated content." No specific laws address that type of AI misuse directly. But in at least one instance, in Brown County, Ohio, charges were brought. "We want to be clear: this behavior is not a 'prank' -- it is a crime," the sheriff's department, which reported two separate recent incidents related to the trend this month, wrote recently on Facebook. "Both juveniles involved have been criminally charged for their roles in these incidents." The sheriff's department didn't say what the suspects were charged with. 
The Brown County sheriff's department also didn't respond to a request for comment.

In its message in Massachusetts, the Salem Police Department advised "pranksters" to "Think Of The Consequences Before You Prank," citing a state law that penalizes people who engage in "Willful and malicious communication of false information to public safety answering points."

In Round Rock, Texas, Andy McKinney, commander of the Patrol Division of the Round Rock Police Department, warned NBC News that such cases "could have consequences" in the future. The department recently responded to two home invasion calls in a single weekend, both of which stemmed from prank texts. One of the calls was from a mom who "believed it was real." "You know, pranks, even though they can be innocent, can have unintended consequences," he said. "And oftentimes young people don't think about those [unintended] consequences and the families they may impact, maybe their neighbors, and so a real-life incident could be happening with one of their neighbors, and they're draining resources, thinking this is going to be fun."

For now, Round Rock police are treating such incidents as educational opportunities. "We want to encourage parents and family members to have open conversations and talk about" these things with their kids, McKinney said. "Like: 'Hey, I know things are funny. I know that sometimes online trends are fun, but we need to think about the dangers before we can do them.'"

[6]

Police departments issue warnings on AI 'homeless man' prank

The viral TikTok trend has prompted police warnings over safety concerns.

A viral trend involving artificially generated images of a "homeless man" has prompted police warnings over safety concerns, with officials calling it "stupid and potentially dangerous." The trend uses AI image generators to create realistic photos depicting a disheveled man appearing to be at someone's door or inside their home. In the videos, pranksters send these AI-generated photos to friends or relatives. The recipients' panicked reactions, sometimes resulting in emergency calls, are then recorded and posted online for entertainment and "likes."

Police departments in Michigan, New York and Wisconsin have issued public warnings about the AI prank. The Yonkers Police Department in New York posted an AI-generated image demonstrating the prank on Facebook, noting that the prank has happened "a few times" already. "Here's the problem: officers are responding FAST using lights-and-sirens to what sounds like a call of a real intruder -- and only getting called off once everyone realizes it was a joke," the police department wrote on Facebook. "That's not just a waste of resources... it's a real safety risk for officers who are responding and for the family members who are home if our officers get there before the prank is revealed and rush into the home to apprehend this 'intruder' that doesn't exist."

The Salem Police Department in Massachusetts also issued an alert earlier this month after receiving multiple 911 calls linked to the prank. Salem Police Capt. John Burke told "Good Morning America" that the prank is "eliciting a lot of fear." He said the department has investigated three incidents so far, all of which involved people genuinely believing a break-in was happening. In one case, Burke said a person thought a relative had picked up a hitchhiker who was now threatening them, prompting a 911 call from someone who had no idea the situation was a prank.
Burke emphasized that the prank wastes emergency resources and can easily lead to dangerous misunderstandings. "You're causing your friends or your family to panic," he said. "You're tying up a police, public safety answering point, a 911, dispatch center. You're wasting the police resources." Burke added, of the police response, "There is the chance when you know we're responding to these incidents, we don't know at first if it's real, we have to handle every situation like it's a potential real situation." In its warning, the Salem Police Department also condemned the prank for dehumanizing the homeless and spreading fear for entertainment. The department warned that pranksters could face criminal charges under Massachusetts law for making false reports to emergency services, even if no one is hurt. Burke urged social media users to think twice before participating in trends that could endanger others. "If you're thinking this is funny, or you're thinking this is something you may want to try, please think twice, on behalf of friends and family," he said. "And then also, don't, don't, don't risk it, you getting yourself in trouble, or having to go to court, being charged with this ... it's easily preventable."


A viral TikTok trend using AI to create fake images of homeless intruders has led to police warnings and raised concerns about the misuse of AI technology, wasted resources, and the dehumanization of homeless individuals.

The Rise of the 'AI Homeless Man Prank'

A new TikTok trend has emerged, causing concern among law enforcement agencies and raising ethical questions about the use of artificial intelligence (AI). The 'AI Homeless Man Prank' involves using AI-generated images to trick friends and family members into believing that a homeless person has entered their home [1][2][3]. Pranksters create realistic images of a disheveled individual at the front door or inside the home, such as in the living room or even the bedroom. They then send these images to unsuspecting recipients, often claiming that they've let the person in or that the individual claims to know the recipient [1][2].

Source: Mashable

Police Warnings and Public Safety Concerns

Law enforcement agencies across multiple states have issued warnings about the potentially dangerous consequences of this prank [2][4][5]. Police departments in Massachusetts, Michigan, New York, and Wisconsin have reported responding to false home invasion calls, only to discover that they were the result of this AI-generated hoax [2].

Source: The Verge

Captain John Burke of the Salem Police Department in Massachusetts emphasized the risks: 'There are so many scenarios that could go wrong.' He explained that if a worried family member reports an intruder with a weapon, armed officers dispatched to the scene could confront an unaware house occupant or neighbor, creating a potentially dangerous situation [2].

Ethical Concerns and Social Media Response

The trend has sparked debates about the ethical implications of using AI for pranks and the dehumanization of homeless individuals. The Salem Police Department stated that the prank 'dehumanizes the homeless, causes the distressed recipient to panic and wastes police resources' [1][3].

TikTok, where many of these prank videos have gone viral, has begun to address the issue. After NBC News flagged videos related to the trend, the platform added labels clarifying that they contain AI-generated content. TikTok's Community Guidelines require creators to label AI-generated or significantly edited content that shows realistic-looking scenes or people [5].

AI Technology and Misinformation Concerns

This trend highlights the growing concern about the potential misuse of AI technology to create and spread misinformation. The ability of AI tools to generate highly convincing images has raised alarms about the ease with which false information can be created and disseminated [5].

Source: ABC News

AI tools differ in whether they will produce such images. When NBC News asked OpenAI's ChatGPT to generate an image of a homeless man in a home, it declined, saying the image could be considered exploitative or disrespectful. Google's Gemini, asked the same thing, produced the image [5].

Legal and Social Consequences

Authorities have warned that participating in this prank could lead to serious consequences. In Massachusetts, for example, causing emergency services to be dispatched to a nonexistent emergency could result in a prison term of up to two and a half years or a fine of up to $1,000 [2].

As AI-generated hoaxes become more common, law enforcement agencies are grappling with how to classify and discipline those who generate false reports. Captain Burke of the Salem Police Department compared it to a 'new version of swatting,' emphasizing the severity of the issue [2].

Summarized by Navi
