AI-Generated Passwords Are Fundamentally Weak and Vulnerable to Cracking Within Hours

2 Sources

[1]

LLM-generated passwords 'fundamentally weak,' experts say

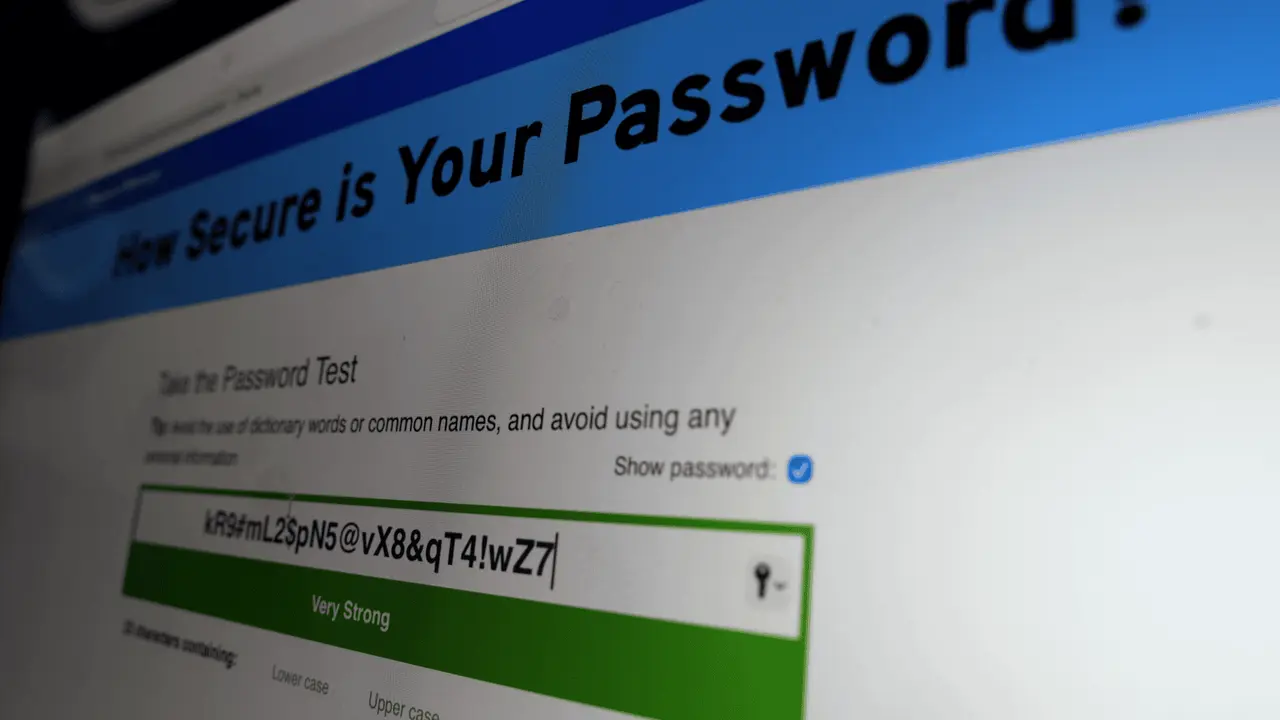

Seemingly complex strings are actually highly predictable, crackable within hours

Generative AI tools are surprisingly poor at suggesting strong passwords, experts say. AI security company Irregular looked at Claude, ChatGPT, and Gemini, and found all three GenAI tools put forward seemingly strong passwords that were, in fact, easily guessable.

Prompting each of them to generate 16-character passwords featuring special characters, numbers, and letters in different cases, produced what appeared to be complex passphrases. When submitted to various online password strength checkers, they returned strong results. Some said they would take centuries for standard PCs to crack.

The online password checkers passed these as strong options because they are not aware of the common patterns. In reality, the time it would take to crack them is much less than it would otherwise seem. Irregular found that all three AI chatbots produced passwords with common patterns, and if hackers understood them, they could use that knowledge to inform their brute-force strategies.

The researchers took to Claude, running the Opus 4.6 model, and prompted it 50 times, each in separate conversations and windows, to generate a password. Of the 50 returned, only 30 were unique (20 duplicates, 18 of which were the exact same string), and the vast majority started and ended with the same characters. Irregular also said there were no repeating characters in any of the 50 passwords, indicating they were not truly random.

Tests involving OpenAI's GPT-5.2 and Google's Gemini 3 Flash also revealed consistencies among all the returned passwords, especially at the beginning of the strings. The same results were seen when prompting Google's Nano Banana Pro image generation model. Irregular gave it the same prompt, but to return a random password written on a Post-It note, and found the same Gemini password patterns in the results.
The Register repeated the tests using Gemini 3 Pro, which returns three options (high complexity, symbol-heavy, and randomized alphanumeric), and the first two generally followed similar patterns, while option three appeared more random. Notably, Gemini 3 Pro returned passwords along with a security warning, suggesting the passwords should not be used for sensitive accounts, given that they were requested in a chat interface. It also offered to generate passphrases instead, which it claimed are easier to remember but just as secure, and recommended users opt for a third-party password manager such as 1Password, Bitwarden, or the iOS/Android native managers for mobile devices.

Irregular estimated the entropy of the LLM-generated passwords using the Shannon entropy formula and by understanding the probabilities of where characters are likely to appear, based on the patterns displayed by the 50-password outputs. The team used two methods of estimating entropy, character statistics and log probabilities. They found that 16-character entropies of LLM-generated passwords were around 27 bits and 20 bits respectively. For a truly random password, the character statistics method expects an entropy of 98 bits, while the method involving the log probabilities of the LLM itself expects an entropy of 120 bits. In real terms, this would mean that LLM-generated passwords could feasibly be brute-forced in a few hours, even on a decades-old computer, Irregular claimed.

Knowing the patterns also reveals how many times LLMs are used to create passwords in open source projects. The researchers showed that by searching common character sequences across GitHub and the wider web, queries return test code, setup instructions, technical documentation, and more. Ultimately, this finding may usher in a new era of password brute-forcing, Irregular said.
It also cited previous comments made by Dario Amodei, CEO at Anthropic, who said last year that AI will likely be writing the majority of all code, and if that's true, then the passwords it generates won't be as secure as expected.

"People and coding agents should not rely on LLMs to generate passwords," said Irregular. "Passwords generated through direct LLM output are fundamentally weak, and this is unfixable by prompting or temperature adjustments: LLMs are optimized to produce predictable, plausible outputs, which is incompatible with secure password generation."

The team also said that developers should review any passwords that were generated using LLMs and rotate them accordingly. It added that the "gap between capability and behavior likely won't be unique to passwords," and the industry should be aware of that as AI-assisted development and vibe coding continues to gather pace.

[2]

Are you using an AI-generated password? It might be time to change it

When you do, it quickly generates one, telling you confidently that the output is strong. In reality, it's anything but, according to research shared exclusively with Sky News by AI cybersecurity firm Irregular. The research, which has been verified by Sky News, found that all three major models - ChatGPT, Claude, and Gemini - produced highly predictable passwords, leading Irregular co-founder Dan Lahav to make a plea about using AI to make them.

"You should definitely not do that," he told Sky News. "And if you've done that, you should change your password immediately. And we don't think it's known enough that this is a problem."

Predictable patterns are the enemy of good cybersecurity, because they mean passwords can be guessed by automated tools used by cybercriminals. But because large language models (LLMs) do not actually generate passwords randomly and instead derive results based on patterns in their training data, they are not actually creating a strong password, only something that looks like a strong password - an impression of strength which is in fact highly predictable.

Some AI-made passwords need mathematical analysis to reveal their weakness, but many are so regular that they are clearly visible to the naked eye. A sample of 50 passwords generated by Irregular using Anthropic's Claude AI, for instance, produced only 23 unique passwords. One password - K9#mPx$vL2nQ8wR - was used 10 times. Others included K9#mP2$vL5nQ8@xR, K9$mP2vL#nX5qR@j and K9$mPx2vL#nQ8wFs. When Sky News tested Claude to check Irregular's research, the first password it spat out was K9#mPx@4vLp2Qn8R.

OpenAI's ChatGPT and Google's Gemini AI were slightly less regular with their outputs, but still produced repeated passwords and predictable patterns in password characters. Google's image generation system NanoBanana was also prone to the same error when it was asked to produce pictures of passwords on Post-its.
'Even old computers can crack them'

Online password checking tools say these passwords are extremely strong. They passed tests conducted by Sky News with flying colours: one password checker found that a Claude password wouldn't be cracked by a computer in 129 million trillion years. But that's only because the checkers are not aware of the pattern, which makes the passwords much weaker than they appear.

"Our best assessment is that currently, if you're using LLMs to generate your passwords, even old computers can crack them in a relatively short amount of time," says Mr Lahav.

This is not just a problem for unwitting AI users, but also for developers, who are increasingly using AI to write the majority of their code.

AI-generated passwords can already be found in code that is being used in apps, programmes and websites, according to a search on GitHub, the most widely-used code repository, for recognisable chunks of AI-made passwords. For example, searching for K9#mP (a common prefix used by Claude) yielded 113 results, and k9#vL (a substring used by Gemini) yielded 14 results. There were many other examples, often clearly intended to be passwords.

Most of the results are innocent, generated by AI coding agents for "security best practice" documents, password strength-testing code, or placeholder code. However, Irregular found some passwords in what it suspected were real servers or services and the firm was able to get coding agents to generate passwords in potentially significant areas of code.

"Some people may be exposed to this issue without even realising it just because they delegated a relatively complicated action to an AI," said Mr Lahav, who called on the AI companies to instruct their models to use a tool to generate truly random passwords, much like a human would use a calculator.

What should you do instead?
Graeme Stewart, head of public sector at cybersecurity firm Check Point, had some reassurance to offer. "The good news is it's one of the rare security issues with a simple fix," he said. "In terms of how big a deal it is, this sits in the 'avoidable, high-impact when it goes wrong' category, rather than 'everyone is about to be hacked'." Other experts observed that the problem was passwords themselves, which are notoriously leaky. "There are stronger and easier authentication methods," said Robert Hann, global VP of technical solutions at Entrust, who recommended people use passkeys such as face and fingerprint ID wherever possible. And if that's not an option? The universal advice: pick a long, memorable phrase, and don't ask an AI. Sky News has contacted OpenAI, while Google and Anthropic declined to comment.

AI cybersecurity firm Irregular reveals that ChatGPT, Claude, and Gemini produce passwords with predictable patterns despite appearing strong. Research shows these AI-generated passwords have entropy as low as 20-27 bits compared to 98-120 bits for truly random passwords, making them crackable within hours even on decades-old computers. Experts urge immediate password changes for anyone using LLM password generation.

Large Language Models Create Deceptively Weak Passwords

AI-generated passwords from leading chatbots appear secure but harbor dangerous vulnerabilities, according to research from AI cybersecurity firm Irregular [1][2]. The company tested ChatGPT, Claude, and Gemini and found that all three large language models produced fundamentally weak passwords with predictable patterns that attackers could exploit. When Irregular prompted Claude's Opus 4.6 model 50 times, each in a separate conversation, to generate 16-character passwords with special characters, numbers, and mixed-case letters, only 30 unique passwords emerged. Twenty were duplicates, 18 of them the exact same string [1]. Sky News, reporting on a 50-password Claude sample, cited K9#mPx$vL2nQ8wR as one frequently repeated string [2]. The vast majority started and ended with the same characters, and none contained a repeated character, which Irregular took as evidence that the output was not truly random [1].
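The kind of pattern check described above can be sketched in a few lines of Python. This is an illustration only, not Irregular's tooling: the sample is the handful of Claude strings quoted in the Sky News excerpt, the helper names are hypothetical, and the 94-character alphabet (printable ASCII without the space) is an assumption.

```python
# Claude-attributed strings quoted in the Sky News excerpt; a tiny
# illustrative sample, not Irregular's 50-password dataset.
SAMPLE = [
    "K9#mPx$vL2nQ8wR",
    "K9#mP2$vL5nQ8@xR",
    "K9$mP2vL#nX5qR@j",
    "K9$mPx2vL#nQ8wFs",
    "K9#mPx@4vLp2Qn8R",
]

def common_prefix(strings):
    """Longest prefix shared by every string in the list."""
    prefix = strings[0]
    for s in strings[1:]:
        while not s.startswith(prefix):
            prefix = prefix[:-1]
    return prefix

def has_repeated_char(password):
    """True if any character occurs more than once in the password."""
    return len(set(password)) < len(password)

def prob_no_repeat(length, alphabet_size):
    """Chance that a uniformly random string has no repeated character."""
    p = 1.0
    for i in range(length):
        p *= (alphabet_size - i) / alphabet_size
    return p

if __name__ == "__main__":
    print("shared prefix:", common_prefix(SAMPLE))
    print("any repeats:", any(has_repeated_char(p) for p in SAMPLE))
    # Assumed alphabet: 94 printable ASCII characters.
    print("P(no repeat, one random password):", prob_no_repeat(16, 94))
```

On this small sample every string starts with K9 and none repeats a character. Under the assumed alphabet, a single uniformly random 16-character password avoids repeats only about a quarter of the time, so 50 in a row with no repeats would be extremely unlikely by chance.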

Source: The Register

Password Security Illusion Masks Critical Flaws

Online password checkers rated these AI-generated passwords as extremely strong, with some estimating they would take centuries to crack [1] and one putting the figure at 129 million trillion years [2]. This creates a dangerous illusion. Password security depends on unpredictability, but because large language models derive their output from patterns in their training data rather than from true randomness, they create only the appearance of strength [2].
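A simplified model shows why a pattern-unaware checker can be fooled. The function below is a hypothetical sketch, not the algorithm of any particular checker: it assumes every character was drawn uniformly and independently from the character classes present, which is exactly the assumption that LLM output violates.

```python
import math
import string

def naive_strength_bits(password):
    """Entropy in bits that a pattern-unaware checker might assume.

    Assumption: each character is drawn uniformly and independently from
    the union of the character classes that appear in the password.
    """
    pool = 0
    if any(c in string.ascii_lowercase for c in password):
        pool += 26
    if any(c in string.ascii_uppercase for c in password):
        pool += 26
    if any(c in string.digits for c in password):
        pool += 10
    if any(c in string.punctuation for c in password):
        pool += len(string.punctuation)  # 32 ASCII symbols
    return len(password) * math.log2(pool) if pool else 0.0

if __name__ == "__main__":
    # One of the Claude strings quoted in the Sky News excerpt.
    print(naive_strength_bits("K9#mP2$vL5nQ8@xR"))
```

For a 16-character string using all four classes, this model credits roughly 105 bits regardless of how predictable the string actually is, which is far above the 20 to 27 bits Irregular estimated for LLM output.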

Source: Sky News

Asked about using AI to generate passwords, Irregular co-founder Dan Lahav told Sky News: "You should definitely not do that. And if you've done that, you should change your password immediately" [2]. Tests with OpenAI's GPT-5.2 and Google's Gemini 3 Flash revealed similar consistencies, particularly at the beginning of password strings [1].

Entropy Analysis Reveals Alarming Weakness

Irregular estimated password strength using the Shannon entropy formula, applying two methods: character statistics and the model's own log probabilities. The findings were stark: 16-character LLM-generated passwords showed entropy of around 27 bits by character statistics and around 20 bits by log probabilities [1]. For a truly random password, the two methods expect 98 bits and 120 bits respectively. According to Irregular, this gap means LLM-generated passwords could feasibly be brute-forced within a few hours, even on a decades-old computer [1]. "Our best assessment is that currently, if you're using LLMs to generate your passwords, even old computers can crack them in a relatively short amount of time," Lahav explained [2].
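To make the entropy figures concrete, here is a minimal sketch of a character-statistics estimate together with the brute-force arithmetic. It is not Irregular's code: treating positions as independent is a simplifying assumption, the function names are hypothetical, and the guess rate used in the example is an assumed value chosen purely for illustration.

```python
import math
from collections import Counter

def shannon_entropy(counts):
    """Shannon entropy in bits of an empirical distribution of counts."""
    total = sum(counts.values())
    return sum((n / total) * math.log2(total / n) for n in counts.values())

def positional_entropy_bits(passwords):
    """Sum of per-position entropies, treating positions as independent.

    Only the positions present in every password are counted.
    """
    length = min(len(p) for p in passwords)
    return sum(
        shannon_entropy(Counter(p[i] for p in passwords))
        for i in range(length)
    )

def worst_case_seconds(bits, guesses_per_second):
    """Time to try every candidate in a search space of 2**bits."""
    return 2 ** bits / guesses_per_second

if __name__ == "__main__":
    ASSUMED_RATE = 10_000  # guesses per second; an illustrative assumption
    for bits in (20, 27, 98):
        hours = worst_case_seconds(bits, ASSUMED_RATE) / 3600
        print(f"{bits} bits: {hours:.3g} hours")
```

At the assumed 10,000 guesses per second, a 27-bit space is exhausted in under four hours, whereas a 98-bit space is far beyond any practical search. Estimates drawn from a small sample are rough, since a sample of n passwords cannot show more than log2(n) bits per position.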

Developers Face Hidden AI Password Vulnerability Risks

The problem extends beyond individual users to developers who increasingly rely on AI coding assistants. Searching GitHub for K9#mP, a common prefix in Claude's output, returned 113 results, while k9#vL, a substring used by Gemini, yielded 14 [2]. Most of the results are innocent, such as test code, setup instructions, and technical documentation, but Irregular also found some passwords in what it suspected were real servers or services [1][2]. Anthropic CEO Dario Amodei has said AI will likely be writing the majority of all code [1], which would amplify this risk. Irregular warned that "passwords generated through direct LLM output are fundamentally weak, and this is unfixable by prompting or temperature adjustments: LLMs are optimized to produce predictable, plausible outputs, which is incompatible with secure password generation" [1].
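A team wanting to run a similar search over its own code could start from something like the sketch below. The two fragments are the ones reported in the Sky News excerpt. The function names and the walk over the whole tree are assumptions made for illustration, and a match is only a prompt for manual review, not proof that a credential is live.

```python
import re
from pathlib import Path

# Fragments reported in the Sky News excerpt; matching is case-sensitive.
SUSPECT_FRAGMENTS = ["K9#mP", "k9#vL"]
PATTERN = re.compile("|".join(re.escape(f) for f in SUSPECT_FRAGMENTS))

def scan_text(text):
    """Return (line_number, stripped_line) pairs containing a fragment."""
    return [
        (number, line.strip())
        for number, line in enumerate(text.splitlines(), start=1)
        if PATTERN.search(line)
    ]

def scan_tree(root):
    """Scan every readable file under root; returns {path: hits}."""
    results = {}
    for path in Path(root).rglob("*"):
        if not path.is_file():
            continue
        try:
            text = path.read_text(encoding="utf-8", errors="ignore")
        except OSError:
            continue  # unreadable file: skip rather than abort the scan
        hits = scan_text(text)
        if hits:
            results[str(path)] = hits
    return results

if __name__ == "__main__":
    for file_path, hits in scan_tree(".").items():
        for number, line in hits:
            print(f"{file_path}:{number}: {line}")
```

Any hit in configuration or deployment code is a candidate for rotation, while hits in documentation or tests are more likely to be placeholders.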

What Users Should Do About This Security Gap

Experts recommend immediate action for anyone who has used ChatGPT, Claude, Gemini, or other AI tools to create passwords. Graeme Stewart of Check Point placed the issue in the "avoidable, high-impact when it goes wrong" category and called it one of the rare security issues with a simple fix [2]; Lahav's advice is to change any such password immediately [2]. Developers should review and rotate any LLM-generated passwords in their code [1]. Looking ahead, Irregular said the "gap between capability and behavior likely won't be unique to passwords" as AI-assisted development accelerates [1]. Robert Hann of Entrust recommends passkeys, such as face and fingerprint ID, wherever possible [2]. When passwords remain necessary, the advice relayed by Sky News is to pick a long, memorable phrase and not to ask an AI [2]. In The Register's tests, Gemini 3 Pro itself recommended a third-party password manager such as 1Password or Bitwarden [1].
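Lahav's suggestion that models should call a tool for randomness, much as a person would reach for a calculator, corresponds to something like the following sketch. It uses Python's standard `secrets` module, which draws from the operating system's cryptographically secure random source. The function names, the choice of alphabet, and the caller-supplied word list are assumptions for illustration.

```python
import secrets
import string

# Assumed alphabet: ASCII letters, digits, and punctuation (94 characters).
ALPHABET = string.ascii_letters + string.digits + string.punctuation

def generate_password(length=16):
    """Password whose characters are drawn uniformly from ALPHABET."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))

def generate_passphrase(wordlist, words=6, separator="-"):
    """Passphrase of words drawn uniformly from a caller-supplied list."""
    return separator.join(secrets.choice(wordlist) for _ in range(words))

if __name__ == "__main__":
    print(generate_password())
    # Tiny placeholder list for demonstration only; a real list should
    # contain thousands of words.
    print(generate_passphrase(["maple", "orbit", "candle", "river"], words=4))
```

Because each character is chosen independently and uniformly, repeated characters and duplicate prefixes occur at their natural rates, unlike the LLM output described above. A dedicated password manager performs the same job without any code.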

Summarized by

Navi
