AI-Powered 'Digital Twin' of Mouse Visual Cortex Revolutionizes Brain Research

4 Sources

[1]

Scientists build 'digital twin' of mouse brain

The new model is an example of a foundation model, a relatively new class of AI models capable of learning from large datasets, then applying that knowledge to new tasks and new types of data - or what researchers call "generalizing outside the training distribution." (ChatGPT is a familiar example of a foundation model that can learn from vast amounts of text to then understand and generate new text.)

"In many ways, the seed of intelligence is the ability to generalize robustly," Tolias said. "The ultimate goal - the holy grail - is to generalize to scenarios outside your training distribution."

To train the new AI model, the researchers first recorded the brain activity of real mice as they watched movies - made-for-people movies. The films ideally would approximate what the mice might see in natural settings. "It's very hard to sample a realistic movie for mice, because nobody makes Hollywood movies for mice," Tolias said. But action movies came close enough.

Mice have low-resolution vision - similar to our peripheral vision - meaning they mainly see movement rather than details or color. "Mice like movement, which strongly activates their visual system, so we showed them movies that have a lot of action," Tolias said.

Over many short viewing sessions, the researchers recorded more than 900 minutes of brain activity from eight mice watching clips of action-packed movies, such as Mad Max. Cameras monitored their eye movements and behavior. The researchers used the aggregated data to train a core model, which could then be customized into a digital twin of any individual mouse with a bit of additional training.

These digital twins were able to closely simulate the neural activity of their biological counterparts in response to a variety of new visual stimuli, including videos and static images. The large quantity of aggregated training data was key to the digital twins' success, Tolias said. "They were impressively accurate because they were trained on such large datasets."

Though trained only on neural activity, the new models could generalize to other types of data. The digital twin of one particular mouse was able to predict the anatomical locations and cell type of thousands of neurons in the visual cortex as well as the connections between these neurons. The researchers verified these predictions against high-resolution, electron microscope imaging of that mouse's visual cortex, which was part of a larger project to map the structure and function of the mouse visual cortex in unprecedented detail. The results of that project, known as MICrONS, were published simultaneously in Nature.

Because a digital twin can function long past the lifespan of a mouse, scientists could perform a virtually unlimited number of experiments on essentially the same animal. Experiments that would take years could be completed in hours, and millions of experiments could run simultaneously, speeding up research into how the brain processes information and the principles of intelligence.

"We're trying to open the black box, so to speak, to understand the brain at the level of individual neurons or populations of neurons and how they work together to encode information," Tolias said.

In fact, the new models are already yielding new insights. In another related study, also simultaneously published in Nature, researchers used a digital twin to discover how neurons in the visual cortex choose other neurons with which to form connections. Scientists had known that similar neurons tend to form connections, like people forming friendships. The digital twin revealed which similarities mattered the most. Neurons prefer to connect with neurons that respond to the same stimulus - the color blue, for example - over neurons that respond to the same area of visual space.
"It's like someone selecting friends based on what they like and not where they are," Tolias said. "We learned this more precise rule of how the brain is organized." The researchers plan to extend their modeling into other brain areas and to animals, including primates, with more advanced cognitive capabilities. "Eventually, I believe it will be possible to build digital twins of at least parts of the human brain," Tolias said. "This is just the tip of the iceberg."

[2]

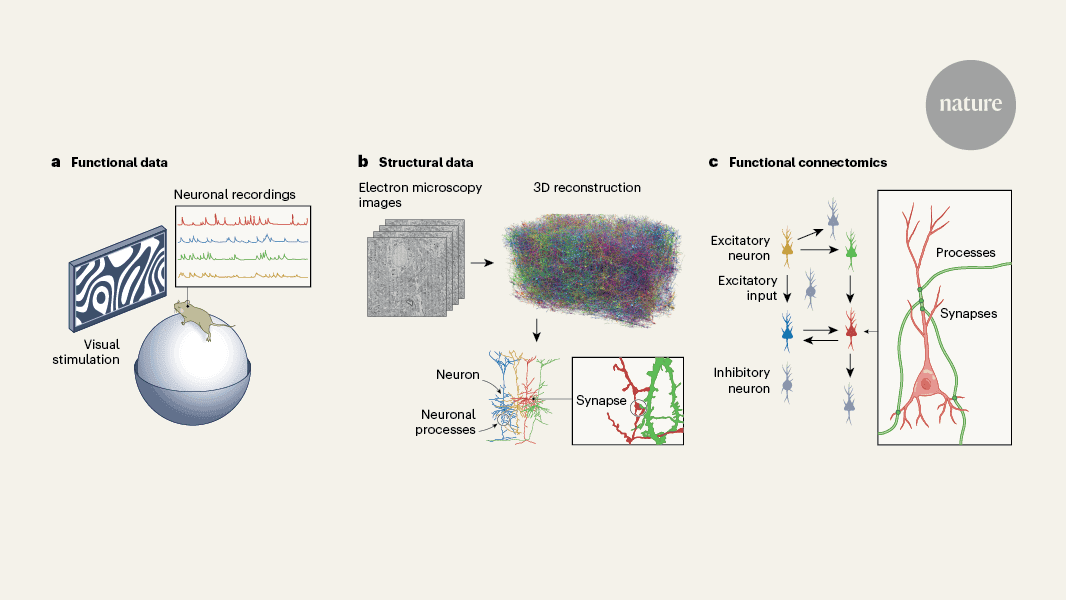

AI models of the brain could serve as 'digital twins' in research

Much as a pilot might practice maneuvers in a flight simulator, scientists might soon be able to perform experiments on a realistic simulation of the mouse brain. In a new study, Stanford Medicine researchers and collaborators used an artificial intelligence model to build a "digital twin" of the part of the mouse brain that processes visual information. The digital twin was trained on large datasets of brain activity collected from the visual cortex of real mice as they watched movie clips. It could then predict the response of tens of thousands of neurons to new videos and images. Digital twins could make studying the inner workings of the brain easier and more efficient.

"If you build a model of the brain and it's very accurate, that means you can do a lot more experiments," said Andreas Tolias, PhD, Stanford Medicine professor of ophthalmology and senior author of the study published April 10 in Nature. "The ones that are the most promising you can then test in the real brain."

The lead author of the study is Eric Wang, PhD, a medical student at Baylor College of Medicine.

Beyond the training distribution

Unlike previous AI models of the visual cortex, which could simulate the brain's response to only the type of stimuli they saw in the training data, the new model can predict the brain's response to a wide range of new visual input. It can even surmise anatomical features of each neuron.

The new model is an example of a foundation model, a relatively new class of AI models capable of learning from large datasets, then applying that knowledge to new tasks and new types of data -- or what researchers call "generalizing outside the training distribution." (ChatGPT is a familiar example of a foundation model that can learn from vast amounts of text to then understand and generate new text.)

"In many ways, the seed of intelligence is the ability to generalize robustly," Tolias said. "The ultimate goal -- the holy grail -- is to generalize to scenarios outside your training distribution."

Mouse movies

To train the new AI model, the researchers first recorded the brain activity of real mice as they watched movies -- made-for-people movies. The films ideally would approximate what the mice might see in natural settings. "It's very hard to sample a realistic movie for mice, because nobody makes Hollywood movies for mice," Tolias said. But action movies came close enough.

Mice have low-resolution vision -- similar to our peripheral vision -- meaning they mainly see movement rather than details or color. "Mice like movement, which strongly activates their visual system, so we showed them movies that have a lot of action," Tolias said.

Over many short viewing sessions, the researchers recorded more than 900 minutes of brain activity from eight mice watching clips of action-packed movies, such as Mad Max. Cameras monitored their eye movements and behavior. The researchers used the aggregated data to train a core model, which could then be customized into a digital twin of any individual mouse with a bit of additional training.

Accurate predictions

These digital twins were able to closely simulate the neural activity of their biological counterparts in response to a variety of new visual stimuli, including videos and static images. The large quantity of aggregated training data was key to the digital twins' success, Tolias said. "They were impressively accurate because they were trained on such large datasets."

Though trained only on neural activity, the new models could generalize to other types of data. The digital twin of one particular mouse was able to predict the anatomical locations and cell type of thousands of neurons in the visual cortex as well as the connections between these neurons. The researchers verified these predictions against high-resolution, electron microscope imaging of that mouse's visual cortex, which was part of a larger project to map the structure and function of the mouse visual cortex in unprecedented detail. The results of that project, known as MICrONS, were published simultaneously in Nature.

Opening the black box

Because a digital twin can function long past the lifespan of a mouse, scientists could perform a virtually unlimited number of experiments on essentially the same animal. Experiments that would take years could be completed in hours, and millions of experiments could run simultaneously, speeding up research into how the brain processes information and the principles of intelligence.

"We're trying to open the black box, so to speak, to understand the brain at the level of individual neurons or populations of neurons and how they work together to encode information," Tolias said.

In fact, the new models are already yielding new insights. In another related study, also simultaneously published in Nature, researchers used a digital twin to discover how neurons in the visual cortex choose other neurons with which to form connections. Scientists had known that similar neurons tend to form connections, like people forming friendships. The digital twin revealed which similarities mattered the most. Neurons prefer to connect with neurons that respond to the same stimulus -- the color blue, for example -- over neurons that respond to the same area of visual space.

"It's like someone selecting friends based on what they like and not where they are," Tolias said. "We learned this more precise rule of how the brain is organized."

The researchers plan to extend their modeling into other brain areas and to animals, including primates, with more advanced cognitive capabilities. "Eventually, I believe it will be possible to build digital twins of at least parts of the human brain," Tolias said. "This is just the tip of the iceberg."

Researchers from the University of Göttingen and the Allen Institute for Brain Science contributed to the work.

The study received funding from the Intelligence Advanced Research Projects Activity, a National Science Foundation NeuroNex grant, the National Institute of Mental Health, the National Institute of Neurological Disorders and Stroke (grant U19MH114830), the National Eye Institute (grant R01 EY026927 and Core Grant for Vision Research T32-EY-002520-37), the European Research Council and the Deutsche Forschungsgemeinschaft.

[3]

AI-powered digital twin of mouse visual cortex

Stanford Medicine, Apr 10 2025

Journal reference: Wang, E. Y., et al. (2025). Foundation model of neural activity predicts response to new stimulus types. Nature. doi.org/10.1038/s41586-025-08829-y

[4]

Digital Mouse Brain Twin Offers New Window Into Neural Function - Neuroscience News

Summary: Researchers have created an AI-powered "digital twin" of the mouse visual cortex that can accurately simulate neural responses to visual input, including movies. Unlike earlier models, this digital twin generalizes beyond its training data, predicting neuron behavior and structure with remarkable accuracy. Trained on 900 minutes of brain recordings, the model allows researchers to run limitless experiments quickly and efficiently. This advancement may revolutionize how we study intelligence, brain disorders, and eventually, the human brain.

Foundation model of neural activity predicts response to new stimulus types

The complexity of neural circuits makes it challenging to decipher the brain's algorithms of intelligence. Recent breakthroughs in deep learning have produced models that accurately simulate brain activity, enhancing our understanding of the brain's computational objectives and neural coding. However, it is difficult for such models to generalize beyond their training distribution, limiting their utility. The emergence of foundation models trained on vast datasets has introduced a new artificial intelligence paradigm with remarkable generalization capabilities. Here we collected large amounts of neural activity from visual cortices of multiple mice and trained a foundation model to accurately predict neuronal responses to arbitrary natural videos. This model generalized to new mice with minimal training and successfully predicted responses across various new stimulus domains, such as coherent motion and noise patterns. Beyond neural response prediction, the model also accurately predicted anatomical cell types, dendritic features and neuronal connectivity within the MICrONS functional connectomics dataset. Our work is a crucial step towards building foundation models of the brain. As neuroscience accumulates larger, multimodal datasets, foundation models will reveal statistical regularities, enable rapid adaptation to new tasks and accelerate research.

Stanford Medicine researchers create an AI model that accurately simulates the mouse visual cortex, opening new avenues for understanding brain function and potentially leading to human brain modeling.

Stanford Researchers Develop AI 'Digital Twin' of Mouse Visual Cortex

In a groundbreaking study published in Nature, Stanford Medicine researchers have created an artificial intelligence (AI) model that functions as a 'digital twin' of the mouse visual cortex. This innovative approach promises to revolutionize brain research by allowing scientists to conduct virtually unlimited experiments on a highly accurate simulation of the brain [1].

The Foundation Model Approach

The new AI model is classified as a foundation model, a relatively new class of AI models capable of learning from large datasets and applying that knowledge to new tasks and data types. This ability to "generalize outside the training distribution" is considered a crucial aspect of intelligence [2].

Training the Digital Twin

To create this digital twin, researchers recorded over 900 minutes of brain activity from eight mice as they watched action-packed movie clips. The mice, with their low-resolution vision similar to human peripheral vision, primarily respond to movement. The aggregated data was used to train a core model, which could then be customized for individual mice [3].
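The two-stage recipe described above (one shared core trained on pooled data, then a small amount of per-animal fitting) can be illustrated with a toy sketch. Everything here is an assumption made for illustration: `core_features`, `fit_readout`, `make_clip`, the hand-written features, and the synthetic "mouse" are hypothetical stand-ins, not the study's architecture or data. The sketch only shows the pattern of freezing a shared feature extractor and fitting a lightweight linear readout for one individual.

```python
import random


def core_features(clip):
    """Stand-in for the shared core (hypothetical, hand-written features).

    A clip is a list of frames; each frame is a list of pixel intensities.
    Returns [bias, mean luminance, mean frame-to-frame change].
    """
    n_pixels = len(clip[0])
    luminance = sum(sum(frame) for frame in clip) / (len(clip) * n_pixels)
    motion = sum(
        abs(a - b)
        for prev, cur in zip(clip, clip[1:])
        for a, b in zip(prev, cur)
    ) / ((len(clip) - 1) * n_pixels)
    return [1.0, luminance, motion]


def predict(weights, clip):
    """Predicted response of one neuron: a linear readout of core features."""
    return sum(w * x for w, x in zip(weights, core_features(clip)))


def mse(weights, clips, responses):
    """Mean squared error of the readout on a set of clips."""
    return sum((predict(weights, c) - r) ** 2
               for c, r in zip(clips, responses)) / len(clips)


def fit_readout(clips, responses, lr=0.1, epochs=2000):
    """Fit one animal's readout by gradient descent; the core stays frozen."""
    feats = [core_features(c) for c in clips]
    weights = [0.0] * len(feats[0])
    for _ in range(epochs):
        grad = [0.0] * len(weights)
        for x, y in zip(feats, responses):
            err = sum(w * xi for w, xi in zip(weights, x)) - y
            for j, xj in enumerate(x):
                grad[j] += 2.0 * err * xj / len(feats)
        weights = [w - lr * g for w, g in zip(weights, grad)]
    return weights


def make_clip(rng, n_frames=5, n_pixels=8):
    """Synthetic clip with a random brightness level and random flicker."""
    base, flicker = rng.random(), rng.random()
    return [
        [min(1.0, max(0.0, base + flicker * (rng.random() - 0.5)))
         for _ in range(n_pixels)]
        for _ in range(n_frames)
    ]


# Synthetic "mouse": responses come from made-up ground-truth weights.
rng = random.Random(0)
clips = [make_clip(rng) for _ in range(40)]
responses = [predict([0.5, 2.0, 3.0], c) for c in clips]
readout = fit_readout(clips, responses)
print("error before fit:", mse([0.0, 0.0, 0.0], clips, responses))
print("error after fit: ", mse(readout, clips, responses))
```

Only the three readout weights are fitted for the new animal, which is why this step needs far less data than building the core; in a real system the core would be a deep network learned from the pooled recordings rather than fixed by hand.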

Impressive Accuracy and Generalization

The digital twins demonstrated remarkable accuracy in simulating neural activity in response to new visual stimuli, including both videos and static images. Notably, the model could predict anatomical features of neurons and their connections, which were verified against high-resolution electron microscope imaging [4].

Accelerating Brain Research

This breakthrough has significant implications for brain research:

- Unlimited experiments: Scientists can perform countless experiments on the digital twin, far outlasting the lifespan of a real mouse.

- Time efficiency: Studies that would take years can now be completed in hours.

- Parallel processing: Millions of experiments can be run simultaneously.
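As a rough illustration of what running many experiments on a twin could look like in practice, the sketch below sweeps a stimulus parameter across a worker pool and collects the simulated responses. `twin_response` is a hypothetical stand-in (a single model neuron with Gaussian orientation tuning), not the published model, and the tuning parameters are made up. A thread pool is used only to show the fan-out pattern; a real deployment would more likely batch stimuli on accelerators.

```python
import math
from concurrent.futures import ThreadPoolExecutor


def twin_response(orientation_deg, preferred_deg=60.0, width_deg=20.0):
    """Hypothetical query to a digital twin: response of one model neuron
    to a grating at the given orientation (Gaussian tuning, period 180)."""
    delta = abs(orientation_deg - preferred_deg) % 180.0
    delta = min(delta, 180.0 - delta)  # orientation is circular
    return math.exp(-0.5 * (delta / width_deg) ** 2)


def run_sweep(orientations, max_workers=4):
    """Run one simulated experiment per orientation across a worker pool."""
    orientations = list(orientations)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        responses = list(pool.map(twin_response, orientations))
    return dict(zip(orientations, responses))


def preferred_orientation(results):
    """Orientation that evoked the largest simulated response."""
    return max(results, key=results.get)


results = run_sweep(range(0, 180, 15))
print("preferred orientation:", preferred_orientation(results))
```

Because each query is independent, the sweep can be widened to more stimuli or more model neurons without changing its structure; the most promising findings would still need to be checked in a real brain.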

New Insights into Neural Connections

The digital twin has already yielded new insights into how neurons in the visual cortex form connections. Researchers discovered that neurons prefer to connect with neurons that respond to the same stimulus rather than with neurons that respond to the same area of visual space, providing a more precise understanding of brain organization [1].
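One way to make this comparison concrete is to measure, for connected and unconnected pairs of neurons, how similar their stimulus tuning is and how far apart their receptive fields are. The toy sketch below does that on four hand-made neurons. The tuning vectors, receptive-field positions, and "synapses" are all invented for illustration and are constructed so that tuning similarity, not receptive-field location, separates connected pairs; this is not the analysis or the data used in the study.

```python
import math
from itertools import combinations

# Hypothetical toy neurons: a feature-tuning vector and a receptive-field
# centre (x, y) in visual space. All values are made up for illustration.
neurons = {
    "A": {"tuning": [1.0, 0.0, 0.0], "rf": (0.0, 0.0)},
    "B": {"tuning": [0.9, 0.1, 0.0], "rf": (5.0, 5.0)},
    "C": {"tuning": [0.0, 1.0, 0.0], "rf": (0.0, 1.0)},
    "D": {"tuning": [0.0, 0.9, 0.1], "rf": (5.0, 4.0)},
}
connected = {("A", "B"), ("C", "D")}  # made-up "synapses"


def cosine(u, v):
    """Cosine similarity between two tuning vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


def summarize(neurons, connected):
    """Mean tuning similarity and RF distance, split by connection status."""
    stats = {True: {"sim": [], "dist": []}, False: {"sim": [], "dist": []}}
    for a, b in combinations(sorted(neurons), 2):
        linked = (a, b) in connected
        stats[linked]["sim"].append(
            cosine(neurons[a]["tuning"], neurons[b]["tuning"]))
        stats[linked]["dist"].append(
            math.dist(neurons[a]["rf"], neurons[b]["rf"]))
    return {
        linked: {key: sum(vals) / len(vals) for key, vals in groups.items()}
        for linked, groups in stats.items()
    }


summary = summarize(neurons, connected)
print("connected:  ", summary[True])
print("unconnected:", summary[False])
```

In this constructed example the connected pairs have nearly identical tuning even though their receptive fields are farther apart than those of the unconnected pairs, which is the pattern the "friends based on what they like and not where they are" analogy describes.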

Future Prospects

While this study focused on the mouse visual cortex, researchers plan to extend their modeling to other brain areas and animals with more advanced cognitive capabilities. Dr. Andreas Tolias, the senior author of the study, believes that it may eventually be possible to build digital twins of parts of the human brain [2].

This breakthrough represents a significant step forward in neuroscience and AI research, potentially leading to deeper understanding of brain function, improved treatments for neurological disorders, and advancements in artificial intelligence.

Summarized by Navi
