AI's Growing Influence in Scientific Writing: Study Reveals Widespread Use Across Disciplines

4 Sources

[1]

One-fifth of computer science papers may include AI content

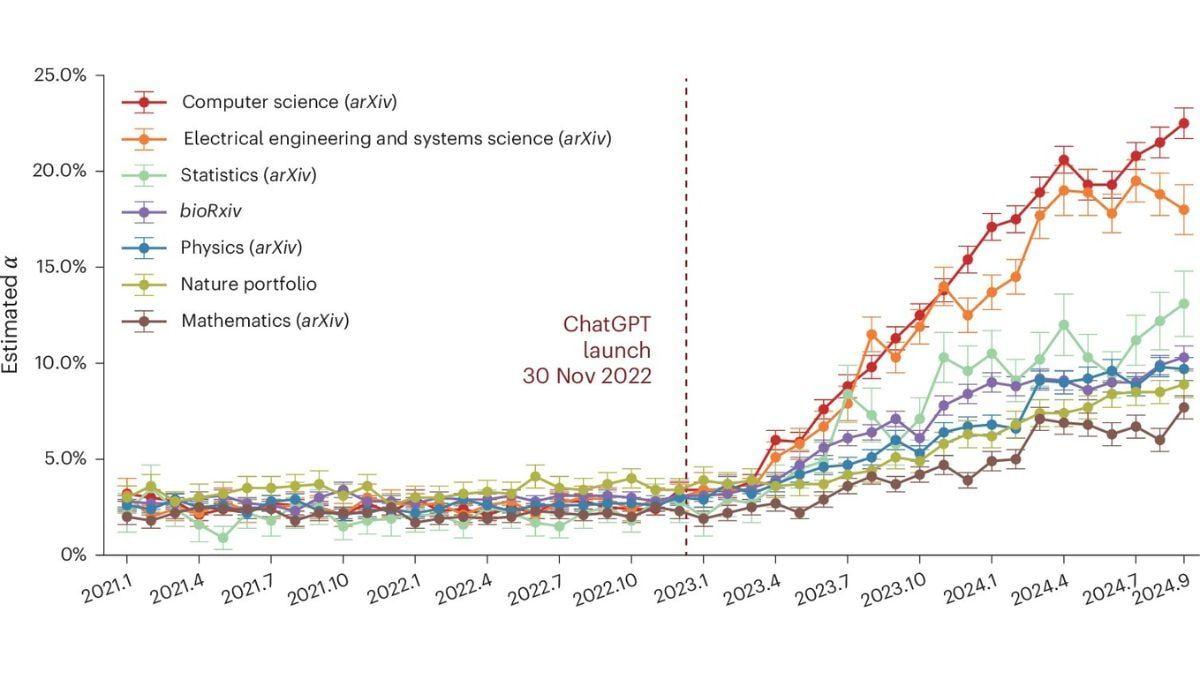

A massive, cross-disciplinary look at how often scientists turn to artificial intelligence (AI) to write their manuscripts has found steady increases since 2022, when OpenAI's text-generating chatbot ChatGPT burst onto the scene. In some fields, the use of such generative AI has become almost routine, with up to 22% of computer science papers showing signs of input from the large language models (LLMs) that underlie the computer programs. The study, which appears today in Nature Human Behaviour, analyzed more than 1 million scientific papers and preprints published between 2020 and 2024, primarily looking at abstracts and introductions for shifts in the frequency of telltale words that appear more often in AI-generated text.

"It's really impressive stuff," says Alex Glynn, a research literacy and communications instructor at the University of Louisville. The discovery that LLM-modified content is more prevalent in areas such as computer science could help guide efforts to detect and regulate the use of these tools, adds Glynn, who was not involved in the work. "Maybe this is a conversation that needs to be primarily focused on particular disciplines."

When ChatGPT was first released, many academic journals -- hoping to avoid a flood of papers written in whole or part by computer programs -- scrambled to create policies limiting the use of generative AI. Soon, however, researchers and online sleuths began to identify numerous scientific manuscripts and peer-review reports that showed blatant signs of being written with the help of LLMs, including anomalous phrases such as "regenerate response" or "my knowledge cutoff." Some investigators, such as University of Toulouse computer scientist Guillaume Cabanac, started putting together lists of papers that contained these "smoking guns."

Since March 2024, Glynn has been compiling Academ-AI, a database that documents suspected instances of AI use in scientific papers. "On the surface, it's quite amusing," Glynn says. "But the implications of it are quite troubling." In particularly obvious cases, the author of a dubious study will explicitly identify itself as an AI language model and recommend the reader search out more reliable sources of information. But LLMs are also notorious for "hallucinating" false or misleading information, Glynn explains, and when obviously AI-generated papers are published despite multiple rounds of peer review and editing, it raises concerns about journals' quality control.

Unfortunately, as AI technology has advanced and authors who use it have become more proficient at covering their tracks, spotting its handiwork has gotten harder. One study from 2023 found, for example, that researchers who read medical journal abstracts generated by ChatGPT failed to identify one-third of them as written by machine. Existing AI detection software tends to be unreliable as well, with some tools showing bias against nonnative English writers.

In response, scientists have looked for subtler signs of LLM use. A study published last month in Science Advances, for example, searched more than 15 million papers indexed by PubMed between 2010 and 2024 for "excess words" that began to appear more frequently than expected after ChatGPT was released. That research, led by University of Tübingen data scientist Dmitry Kobak, revealed that about one in seven biomedical research abstracts published in 2024 was probably written with the help of AI.

James Zou, a computational biologist at Stanford University and co-author of the new study, took a similar approach to survey multiple fields. He and his colleagues took paragraphs from papers written before ChatGPT was developed and used an LLM to summarize them. The team then prompted the LLM to generate a full paragraph based on that outline and used both texts to train a statistical model of word frequency.
It learned to pick up on likely signs of AI-written material based on a higher frequency of words such as "pivotal," "intricate," or "showcase," which are normally rare in scientific writing. The researchers applied the model to the abstracts and introductions of 1,121,912 preprints and journal-published papers from January 2020 to September 2024 on the preprint servers arXiv and bioRxiv and in 15 Nature portfolio journals.

The analysis revealed a sharp uptick in LLM-modified content just months after the release of ChatGPT in November 2022. "It takes people a while -- many months, sometimes years -- to write a paper," Zou says. The fact that this trend appeared so quickly "means that people were very quickly using it right from the start."

Certain disciplines exhibited faster growth than others, which Zou says may reflect differing levels of familiarity with AI technology. "We see the biggest increases in the areas that are actually closest to AI," he explains. By September 2024, 22.5% of computer science abstracts showed evidence of LLM modification, with electrical systems and engineering sciences coming in a close second -- compared with just 7.7% of math abstracts. Percentages were also comparatively smaller for disciplines such as biomedical science and physics, but Zou notes that LLM usage is increasing across all domains: "The large language model is really becoming, for good or for bad, an integral part of the scientific process itself."

"This is very solid statistical modeling," says Kobak, who notes that the true frequency of AI use may be even higher, because authors may have started to scrub "red flag" words from manuscripts to avoid detection. The word "delve," for example, started to appear much more frequently after the launch of ChatGPT, only to dwindle once it became a widely recognized hallmark of AI-generated text.
Although the new study primarily looked at abstracts and introductions, Kobak worries authors will increasingly rely on AI to write sections of scientific papers that reference related works. That could eventually cause these sections to become more similar to one another and create a "vicious cycle" in the future, in which new LLMs are trained on content generated by other LLMs.

Zou, for his part, is interested in learning how scientists might exploit AI beyond the actual writing process. He and his colleagues are currently planning a conference where the writers and reviewers are all AI, which they hope will demonstrate whether and how these technologies can independently generate new hypotheses, techniques, and insights. "I expect that there will be some quite interesting findings," he says. "I also expect that there will be a lot of interesting mistakes."
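The "excess words" idea mentioned in this article, flagging words that appear more often than expected after ChatGPT's release, can be illustrated with a small sketch. This is not the Tübingen team's code: the linear extrapolation, the `min_ratio` threshold, the function names, and every frequency below are hypothetical choices made only for illustration.

```python
def expected_frequency(history, target_year):
    """Extrapolate a word's frequency to target_year with a least-squares line.

    history maps year -> relative frequency in pre-ChatGPT years.
    """
    years = sorted(history)
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(history[y] for y in years) / n
    sxx = sum((y - mean_x) ** 2 for y in years)
    sxy = sum((y - mean_x) * (history[y] - mean_y) for y in years)
    slope = sxy / sxx
    return mean_y + slope * (target_year - mean_x)


def excess_words(pre_release, observed, target_year, min_ratio=2.0):
    """Return {word: observed/expected} for words at or above min_ratio."""
    flagged = {}
    for word, history in pre_release.items():
        expected = expected_frequency(history, target_year)
        if expected <= 0:
            continue  # no meaningful ratio when the trend extrapolates to zero
        ratio = observed[word] / expected
        if ratio >= min_ratio:
            flagged[word] = ratio
    return flagged


# Made-up relative frequencies, for illustration only.
PRE_RELEASE = {
    "delve": {2019: 0.0004, 2020: 0.0004, 2021: 0.0004},
    "pivotal": {2019: 0.0010, 2020: 0.0011, 2021: 0.0012},
    "patients": {2019: 0.20, 2020: 0.21, 2021: 0.22},
}
OBSERVED_2024 = {"delve": 0.0040, "pivotal": 0.0045, "patients": 0.25}

flagged = excess_words(PRE_RELEASE, OBSERVED_2024, target_year=2024)
for word, ratio in sorted(flagged.items()):
    print(f"{word}: {ratio:.1f}x the expected frequency")
```

In this toy data, the two style words stand well above their pre-release trend while the ordinary content word does not, which is the kind of gap such an analysis looks for.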

[2]

How scientists caught AI writing their colleagues' papers

A massive new study scanned more than one million scientific papers for signs of AI and the results were overwhelming: AI is everywhere. The study, published this week in Nature Human Behaviour, analyzed preprints and papers published from 2020 to 2024 by searching for some of the signature traces that generative AI tools leave behind. While some authors deceptively using AI might slip up and leave obvious clues in the text - chunks of a prompt or conspicuous phrases like "regenerate response," for instance - they have become savvier and more subtle over time. In the study, the researchers took snippets of abstracts and introductions written before the advent of ChatGPT, fed them through a large language model, and built a statistical model of word frequency from the human-written and AI-rewritten versions. By comparing the texts, the researchers found hidden patterns in the AI-written text to look for, including a high frequency of specific words like "pivotal," "intricate," and "showcase," which aren't common in human-authored science writing.
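One way to picture a population-level word-frequency estimate like the one described above is as a two-component mixture: observed word counts are treated as a blend of a "human" and an "LLM" word distribution, and the blend weight is chosen by maximum likelihood. The sketch below is a simplified token-level illustration under that assumption, not the study's actual model; the function name, the grid search, and all probabilities and counts are hypothetical.

```python
import math


def estimate_llm_fraction(word_counts, p_human, p_llm, steps=1000):
    """Grid-search the mixture weight alpha that maximizes the log-likelihood.

    Each observed token is modeled as drawn from
    (1 - alpha) * p_human + alpha * p_llm.
    """
    best_alpha, best_ll = 0.0, -math.inf
    for i in range(steps + 1):
        alpha = i / steps
        ll = 0.0
        for word, count in word_counts.items():
            p = (1 - alpha) * p_human[word] + alpha * p_llm[word]
            ll += count * math.log(p)
        if ll > best_ll:
            best_alpha, best_ll = alpha, ll
    return best_alpha


# Hypothetical word distributions; "<other>" lumps together all remaining words.
P_HUMAN = {"pivotal": 0.001, "intricate": 0.001, "showcase": 0.001, "<other>": 0.997}
P_LLM = {"pivotal": 0.010, "intricate": 0.010, "showcase": 0.010, "<other>": 0.970}

# Hypothetical counts from a corpus of 100,000 tokens.
OBSERVED_COUNTS = {"pivotal": 280, "intricate": 280, "showcase": 280, "<other>": 99160}

estimate = estimate_llm_fraction(OBSERVED_COUNTS, P_HUMAN, P_LLM)
print(f"Estimated LLM-modified fraction: {estimate:.3f}")
```

The estimate describes the corpus as a whole rather than labeling any individual paper as AI-written, in keeping with the population-level framing.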

[3]

Study Reveals Growing Use of ChatGPT in Scientific Papers Across Multiple Disciplines

Lower adoption in mathematics and Nature portfolio journals

Since the launch of ChatGPT in November 2022, industries from speechwriting to legal services have adopted it, sparking interest in large language models across the globe. Academics, keen to speed up writing and publishing, have naturally started to explore how such systems can lighten the scholarly load. To investigate the effects of ChatGPT on scientific writing, a research team analyzed over 1.12 million papers and preprints from arXiv, bioRxiv, and journals in the Nature portfolio. As per Phys.org, their findings, published in Nature Human Behaviour, proposed a novel population-level approach for estimating the increase of LLM-derived text from 2020 to 2024 by tracking minute changes in word frequencies. Their results showed that the most AI-influenced sections of text were abstracts and introductions, whereas descriptions of methods and experiments were less affected, perhaps because LLMs are stronger at summarizing than at detailing complex processes. Over time, the impact of AI extended into multiple fields of study, with the most notable growth occurring in computer science, the field most closely tied to AI. Further analysis of the data revealed some distinct trends. LLM usage was more pronounced among authors who posted preprints more often, likely due to the pressure to publish. Shorter papers, those below 5,000 words, as well as papers in fiercely contested fields, also showed more AI influence. The way science is written and shared is going to change for many years to come, and this change is driven by the development of AI. It puts issues that are crucial for the research community in the spotlight, such as originality, transparency, and the preservation of the publishing process in an AI-enhanced world.

[4]

Computer science research papers show fastest uptake of AI use in writing, analysis finds - The Economic Times

Researchers analysed the use of large language models in over a million pre-print and published scientific papers between 2020 and 2024, and found the largest, fastest growth in use of the AI systems in computer science papers -- of up to 22%. Powered by artificial intelligence (AI), large language models are trained on vast amounts of text and can therefore respond to human requests in natural language. Researchers from Stanford University and other institutes in the US looked at 1,121,912 pre-print papers in the archives 'arXiv' and 'bioRxiv', and published papers across Nature journals from January 2020 to September 2024. Focussing on how often words commonly used by AI systems appeared in the papers, the team estimated the involvement of a large language model -- ChatGPT in this study -- in modifying content in a research paper.

Results published in the journal Nature Human Behaviour "suggest a steady increase in LLM (large language model) usage, with the largest and fastest growth estimated for computer science papers (up to 22%)." The researchers also estimated a greater reliance on AI systems among pre-print papers in the archive 'bioRxiv' written by authors from regions known to have a lower number of English-language speakers, such as China and continental Europe. However, papers related to mathematics and those published across Nature journals showed less evidence of AI use in modifying content, according to the analysis. The study team said that shorter papers and authors posting pre-prints more often showed a higher rate of AI use in writing papers, suggesting that researchers trying to produce a higher quantity of writing are more likely to rely on LLMs. "These results may be an indicator of the competitive nature of certain research areas and the pressure to publish quickly," the team said.

The researchers also looked at a smaller number of papers to understand how scholars disclose use of AI in their writing. An inspection of 200 randomly selected computer science papers that were uploaded to the pre-print archive 'arXiv' in February 2024 revealed that "only two out of the 200 papers explicitly disclosed the use of LLMs during paper writing". Future studies looking at disclosure statements might help to understand researchers' motivation for using AI in writing papers. For example, policies around disclosing LLM usage in academic writing may still be unclear, or scholars may have other motivations for intentionally not disclosing their use of AI, the authors said.

A recent study, published in the journal Science Advances, estimated that at least 13% of research abstracts published in 2024 may have been written with help from a large language model, as they included more of the 'style' words seen to be favoured by these AI systems. Researchers from the University of Tübingen, Germany, who analysed more than 15 million biomedical papers published from 2010 to 2024, said that AI models have caused a drastic shift in the vocabulary used in academic writing.

A comprehensive study analyzing over 1 million scientific papers reveals a significant increase in AI-generated content, particularly in computer science, raising questions about academic integrity and the future of scientific publishing.

AI's Growing Influence in Scientific Writing

A groundbreaking study published in Nature Human Behaviour has revealed a significant increase in the use of artificial intelligence (AI) in scientific writing across various disciplines. The research, which analyzed over 1.1 million scientific papers and preprints from 2020 to 2024, found that up to 22% of computer science papers showed signs of AI-generated content [1].

Methodology and Key Findings

Researchers from Stanford University and other institutions developed a sophisticated statistical model to detect subtle signs of AI involvement in scientific papers. The model was trained to identify words and phrases more commonly used by large language models (LLMs) such as ChatGPT [2].

Key findings include:

- A sharp uptick in LLM-modified content shortly after ChatGPT's release in November 2022.

- Computer science papers showed the highest rate of AI use, with up to 22% of abstracts displaying evidence of LLM modification.

- Electrical systems and engineering sciences followed closely behind computer science in AI adoption.

- Mathematics and physics exhibited lower rates of AI use, but an increasing trend was observed across all disciplines [1].

Implications for Academic Publishing

The rapid adoption of AI in scientific writing raises several concerns:

- Academic Integrity: The study found that only two out of 200 randomly selected computer science papers explicitly disclosed the use of LLMs, highlighting potential issues with transparency [4].

- Quality Control: The publication of AI-generated content despite peer review and editing processes raises questions about journals' quality control measures [1].

- Pressure to Publish: Shorter papers and papers by authors who post preprints more often showed higher rates of AI use, suggesting that researchers under pressure to publish quickly may be more likely to rely on LLMs [4].

Global Trends and Disciplinary Differences

The study revealed interesting patterns in AI adoption across different regions and disciplines:

- Preprint papers from regions with fewer English-language speakers, such as China and continental Europe, showed greater reliance on AI systems [4].

- Abstracts and introductions were more likely to contain AI-generated content compared to methods and detailed experimental descriptions [3].

- Papers published in Nature portfolio journals showed lower evidence of AI use [4].

Future Implications and Challenges

The increasing use of AI in scientific writing presents both opportunities and challenges for the research community. James Zou, a co-author of the study, noted that "The large language model is really becoming, for good or for bad, an integral part of the scientific process itself" [1].

As AI continues to shape the landscape of scientific publishing, the academic community must grapple with issues of originality, transparency, and the preservation of the publishing process in an AI-enhanced world [3]. The development of clear policies for disclosing LLM usage and improving AI detection methods will be crucial in maintaining the integrity of scientific literature in the years to come.

Summarized by Navi
