AMD's 2027 Mega Pod: A Leap in AI Computing with 256 Instinct MI500 GPUs

2 Sources


[1]

AMD preps Mega Pod with 256 Instinct MI500 GPUs, Verano CPUs -- Leak suggests platform with better scalability than Nvidia will arrive in 2027

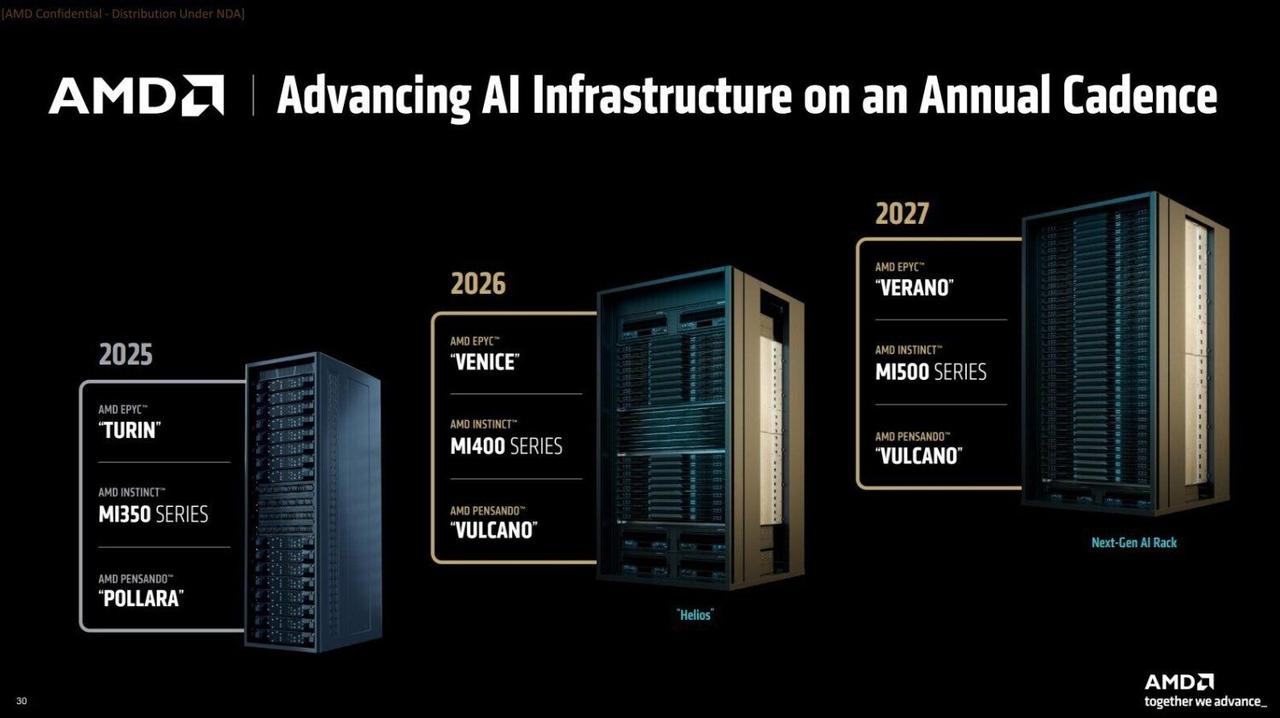

When AMD gave a glimpse of its 2027 rack-scale AI solution earlier this year, the company said only that it would use all-new EPYC 'Verano' CPUs and Instinct MI500-series GPU accelerators and would feature more compute blades than its 2026 Helios rack-scale offering. This week, SemiAnalysis shared additional information about the product and revealed that it will pack 256 Instinct MI500-series GPUs. Since these are early plans, however, details may change.

AMD's 2027 rack-scale 'MI500 Scale Up MegaPod' will pack 64 EPYC 'Verano' CPUs and 256 Instinct MI500-series GPU packages. That exceeds both the 72 GPUs inside AMD's Helios, due in 2026, and the 144 Rubin Ultra accelerators in Nvidia's Kyber-based NVL576, each of which packs four compute chiplets within a single GPU package. SemiAnalysis gives no performance estimates for the 'MI500 Scale Up MegaPod,' though it is reasonable to expect the new solution to be considerably more powerful than Helios, given its larger number of GPU packages and revamped microarchitecture.

The planned 'MI500 UAL256' architecture (the name is tentative, of course) spans three linked racks. Each of the two side racks is expected to hold 32 compute trays, each packing one EPYC 'Verano' CPU and four Instinct MI500-series accelerators, while the central rack houses 18 trays dedicated to UALink switches. In total, the system comprises 64 compute trays serving 256 GPU modules.

Compared to Nvidia's Kyber VR300 NVL576 pod with 144 GPUs, AMD's MI500 UAL256 configuration offers around 78% more GPU packages per system. However, it remains to be seen whether AMD's MI500 MegaPod will offer performance competitive with the mighty NVL576, which is set to feature 147 TB of HBM4 memory and 14,400 FP4 PFLOPS. AMD's MI500 UAL256 will use liquid cooling for both compute and networking trays, which is not surprising given that AI GPUs are becoming more power-hungry and generating more heat.
AMD's MI500 MegaPod is expected to become available in late 2027, which is around the time when Nvidia's VR300 NVL576 Kyber machines are likely to debut. If this is the case, then both AMD and Nvidia will ramp up production of their Instinct MI500-series and Rubin Ultra-based rack-scale solutions in 2028.

[2]

AMD Instinct MI500 UAL256 Mega Pod should scale up to 256 GPUs, 64 EPYC 'Verano' CPUs

TL;DR: AMD will launch its next-gen Instinct MI500 Scale Up Mega Pod in 2027, featuring 256 MI500 AI GPUs across three racks and next-gen EPYC "Verano" CPUs. The system surpasses NVIDIA's upcoming AI servers in GPU count and includes 800G networking built on TSMC 3nm "Vulcano" switches for high-performance AI workloads.

AMD will release its next-gen Instinct MI500 Scale Up Mega Pod with 256 MI500 AI chips spread across three interconnected racks, alongside next-gen AMD EPYC "Verano" CPUs. According to SemiAnalysis on X, each Mega Pod will feature 256 physical GPU packages, versus 144 physical GPU packages for NVIDIA's next-gen Kyber VR300 NVL576 AI server with new Vera Rubin AI GPUs inside. Each of the two outer racks would house 32 compute trays, with 18 switch trays in the middle rack, for a total of 64 compute trays per Mega Pod.

Instinct MI500 UAL256 will be AMD's second-gen rack-scale AI system, following the Instinct MI450X IF128, known as "Helios," which launches in 2H 2026. The Instinct MI500 UAL256 Scale Up Mega Pod is expected to launch in 2027, the same year the next-gen Instinct MI500 AI accelerator and the new EPYC "Verano" CPU arrive.

Each Mega Pod will split its compute across two cabinets of 32 compute trays each, with a central rack between them housing the 18 networking switch trays. Each networking tray features four 102.4T "Vulcano" switch ASICs, designed for 800G external throughput and fabbed on TSMC's 3nm process node. AMD detailed a few things at its recent Advancing AI event, but we still don't know whether EPYC "Verano" will raise the core count above the 256 cores per package of EPYC "Venice".

The new EPYC "Verano" will continue to use the Socket SP7 ecosystem, which means it will keep the same memory and I/O interfaces introduced in 2026, including PCIe Gen6, UALink, and Ultra Ethernet.


AMD plans to launch a powerful AI computing solution in 2027, featuring 256 Instinct MI500 GPUs and next-gen EPYC 'Verano' CPUs, potentially outscaling Nvidia's offerings.

AMD's Ambitious AI Computing Solution for 2027

AMD is gearing up to make a significant leap in the AI computing landscape with its upcoming 'MI500 Scale Up MegaPod,' set to launch in 2027. This ambitious project aims to push the boundaries of rack-scale AI processing, potentially outscaling competitors like Nvidia in GPU packages per system [1][2].

Architecture and Components

The 'MI500 Scale Up MegaPod' is designed as a three-rack system, showcasing AMD's commitment to high-density computing:

- GPUs: 256 Instinct MI500-series GPU packages

- CPUs: 64 EPYC 'Verano' processors

- Layout: Two side racks with 32 compute trays each, and a central rack with 18 trays for UALink switches [1]

Each compute tray will house one EPYC 'Verano' CPU and four Instinct MI500-series accelerators, for a total of 64 compute trays serving 256 GPU modules [1].
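As a quick sanity check, the reported tray layout multiplies out to the headline figures. The sketch below is a minimal tally assuming the leaked per-tray counts (one CPU and four GPU packages per tray, 32 trays per side rack) hold in the final design; the `MegaPodTopology` class is a hypothetical helper for illustration, not an AMD tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MegaPodTopology:
    """Hypothetical model of the reported (not final) MI500 UAL256 layout."""
    compute_racks: int = 2     # the two side racks
    trays_per_rack: int = 32   # compute trays in each side rack (reported)
    cpus_per_tray: int = 1     # one EPYC "Verano" per tray (reported)
    gpus_per_tray: int = 4     # four Instinct MI500-series packages per tray (reported)
    switch_trays: int = 18     # UALink switch trays in the central rack (reported)

    @property
    def compute_trays(self) -> int:
        return self.compute_racks * self.trays_per_rack

    @property
    def cpus(self) -> int:
        return self.compute_trays * self.cpus_per_tray

    @property
    def gpus(self) -> int:
        return self.compute_trays * self.gpus_per_tray

    @property
    def gpus_per_cpu(self) -> float:
        return self.gpus / self.cpus


pod = MegaPodTopology()
print(pod.compute_trays, pod.cpus, pod.gpus, pod.gpus_per_cpu)
```

Running it prints `64 64 256 4.0`: 64 compute trays, 64 CPUs, 256 GPU packages, and a 4:1 GPU-to-CPU ratio.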

Source: TweakTown

Networking and Cooling

AMD is not just focusing on raw computing power but also on the critical aspects of connectivity and thermal management:

- Networking: The central rack will feature 18 switching trays, each equipped with four 102.4T "Vulcano" switch ASICs

- Connectivity: Designed for 800G external throughput

- Manufacturing: Vulcano switches will be fabricated on TSMC's 3nm process node

- Cooling: Both compute and networking trays will utilize liquid cooling, addressing the increasing heat generation of AI GPUs [1][2]
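The switch figures can be put in perspective with some back-of-envelope arithmetic. This sketch assumes that "102.4T" denotes 102.4 Tb/s of switching capacity per ASIC and that all of that capacity is carved into 800 Gb/s ports; neither assumption is confirmed by the reports, and the constant names are illustrative only.

```python
# Back-of-envelope arithmetic for the reported central switch rack.
# ASSUMPTIONS (not confirmed): "102.4T" = 102.4 Tb/s per ASIC, and every
# ASIC's capacity is split entirely into 800 Gb/s ports.
SWITCH_TRAYS = 18            # reported switch trays in the central rack
ASICS_PER_TRAY = 4           # reported "Vulcano" ASICs per tray
ASIC_CAPACITY_GBPS = 102_400 # 102.4 Tb/s expressed in Gb/s
PORT_SPEED_GBPS = 800        # 800G ports

total_asics = SWITCH_TRAYS * ASICS_PER_TRAY
ports_per_asic = ASIC_CAPACITY_GBPS // PORT_SPEED_GBPS
aggregate_tbps = total_asics * ASIC_CAPACITY_GBPS / 1_000  # Gb/s -> Tb/s

print(f"{total_asics} ASICs, {ports_per_asic} x 800G ports each, "
      f"{aggregate_tbps:,.1f} Tb/s aggregate switching capacity")
```

Under those assumptions it prints `72 ASICs, 128 x 800G ports each, 7,372.8 Tb/s aggregate switching capacity`. Real port configurations may mix speeds or reserve capacity, so treat this as an upper bound rather than a specification.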

Comparison with Nvidia

AMD's MegaPod is positioning itself as a formidable competitor to Nvidia's offerings:

- AMD: 256 GPU packages per system

- Nvidia's Kyber VR300 NVL576: 144 Rubin Ultra accelerators
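Package counts alone can mislead, because a package is not a fixed unit of compute. The sketch below reproduces the roughly 78% figure and spreads the NVL576's reported totals (147 TB of HBM4 and 14,400 FP4 PFLOPS [1]) evenly across its 144 packages. It is a rough illustration only: it assumes an even split, and the MI500's chiplet count has not been reported, so no die-level comparison is attempted.

```python
# Rough comparison of reported package counts; all figures come from the
# leak/report and may change. Per-package values assume an even split.
AMD_PACKAGES = 256           # Instinct MI500 packages in the MI500 UAL256
NVIDIA_PACKAGES = 144        # Rubin Ultra packages in the Kyber VR300 NVL576
NVIDIA_DIES_PER_PACKAGE = 4  # compute chiplets per Rubin Ultra package

extra = AMD_PACKAGES / NVIDIA_PACKAGES - 1
nvidia_dies = NVIDIA_PACKAGES * NVIDIA_DIES_PER_PACKAGE

# Reported NVL576 system totals, divided evenly per package.
nvl576_hbm_tb = 147
nvl576_fp4_pflops = 14_400

print(f"AMD has {extra:.0%} more GPU packages")
print(f"Nvidia compute dies: {nvidia_dies}")
print(f"NVL576 per package: {nvl576_hbm_tb / NVIDIA_PACKAGES:.2f} TB HBM4, "
      f"{nvl576_fp4_pflops / NVIDIA_PACKAGES:.0f} FP4 PFLOPS")
```

It prints `AMD has 78% more GPU packages`, `Nvidia compute dies: 576`, and `NVL576 per package: 1.02 TB HBM4, 100 FP4 PFLOPS`. Until per-package MI500 figures are published, the comparison stops at counts.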

This configuration gives AMD approximately 78% more GPU packages per system than Nvidia's solution [1]. However, raw GPU count does not necessarily translate into superior performance: each Rubin Ultra package contains four compute chiplets, for 576 compute dies in total [1].

Timeline and Industry Impact

The MI500 MegaPod is expected to become available in late 2027, aligning with the probable debut of Nvidia's VR300 NVL576 Kyber machines [1]. This timing suggests that both AMD and Nvidia will likely ramp up production of their respective rack-scale solutions in 2028, potentially sparking intense competition in the high-performance AI computing market.

Source: Tom's Hardware


Technological Continuity

While pushing boundaries, AMD is also maintaining some consistency:

- The new EPYC "Verano" will continue to use the Socket SP7 ecosystem

- It will therefore retain the memory and I/O interfaces introduced in 2026, including PCIe Gen6, UALink, and Ultra Ethernet [2]

Uncertainties and Future Developments

Despite the exciting prospects, some details remain unclear:

- Performance estimates for the 'MI500 Scale Up MegaPod' are not yet available

- It is uncertain whether EPYC "Verano" will raise the core count above the 256 cores per package of EPYC "Venice" [2]

As the launch date approaches, more details are likely to emerge, providing a clearer picture of how AMD's solution will stack up against competitors in real-world AI workloads.

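References

[1] AMD preps Mega Pod with 256 Instinct MI500 GPUs, Verano CPUs

[2] AMD Instinct MI500 UAL256 Mega Pod should scale up to 256 GPUs, 64 EPYC 'Verano' CPUs

Summarized by Navi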
