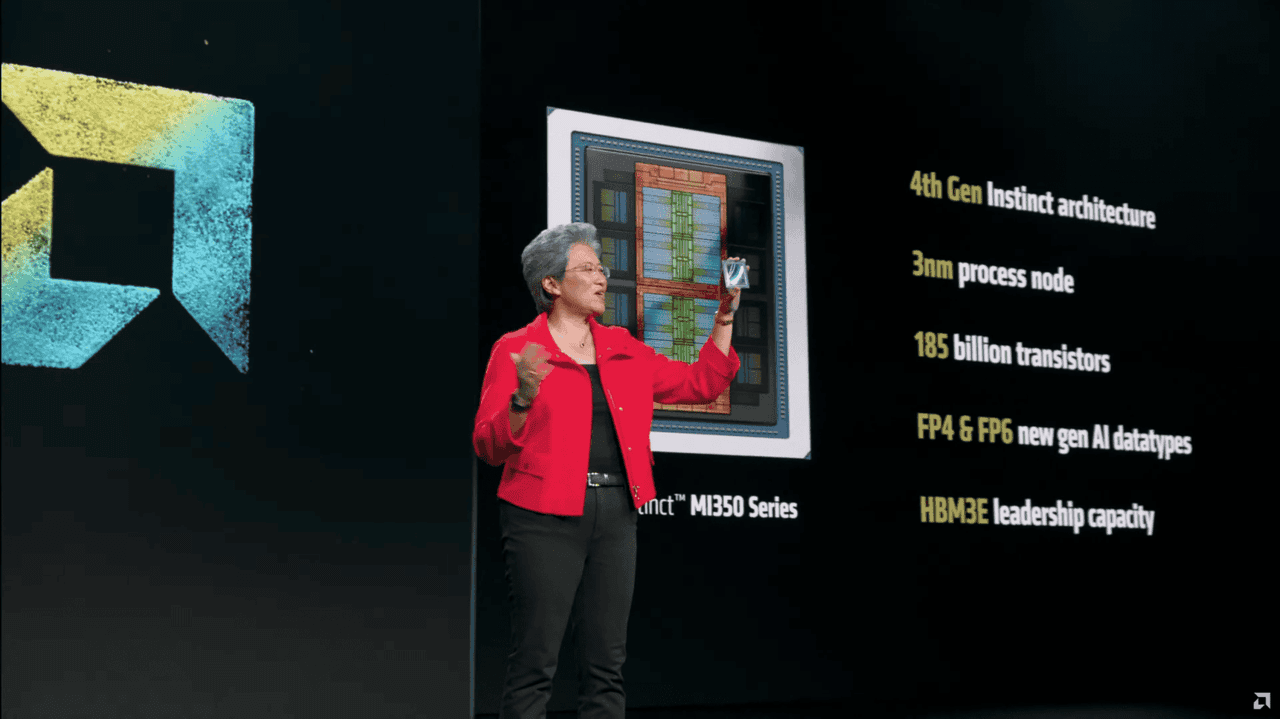

AMD Unveils Powerful MI350X and MI355X AI GPUs, Challenging Nvidia's Dominance

22 Sources

22 Sources

[1]

AMD announces MI350X and MI355X AI GPUs, claims up to 4X generational gain, up to 35X faster inference performance

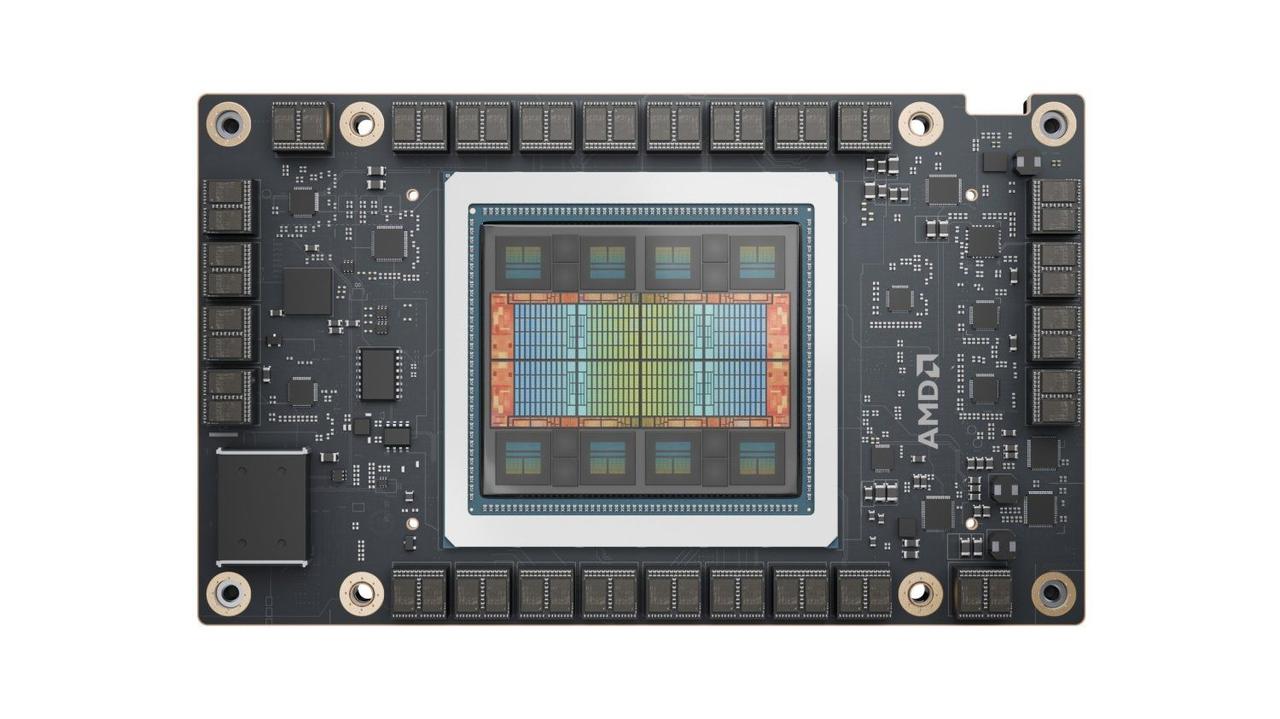

AMD unveiled its new MI350X and MI355X GPUs for AI workloads here at its Advancing AI 2025 event in San Jose, California, claiming the new accelerators offer a 3X performance boost over the prior-gen MI300X, positioning the company to improve its competitive footing against its market-leading rival, Nvidia. AMD claims a 4X increase in "AI compute performance" compared to prior-generation models and a 35X increase in inference performance, much of which it achieved by transitioning to the CDNA 4 architecture and utilizing a smaller, more advanced process node for the compute chiplets. These two MI300 Series AI GPUs will power AMD rack-level solutions for the remainder of the year and into 2026 as the company builds to its MI400 rollout. The MI350X and MI355X share an identical underlying design, featuring up to 288GB of HBM3E memory, up to 8 TB/s of memory bandwidth, and new support for the FP4 and FP6 data types. However, the MI350X is geared for air-cooled solutions with a lower Total Board Power (TBP), while the MI355X pushes power consumption up a notch for liquid-cooled systems geared for the highest performance possible. AMD will not release an APU version of this chip like it did with last generation's MI300A, which featured both CPU and GPU cores on a single die. In contrast, this generation will have GPU-only designs. AMD's MI355X comes with 1.6 times the HBM3E memory capacity of Nvidia's competing GB200 and B200 GPUs, but delivers the same 8TB/s of memory bandwidth. AMD claims a 2X advantage in peak FP64 / FP32 over Nvidia's chips, which isn't surprising given Nvidia's optimization focus on the more AI-friendly lower-precision formats. Notably, MI350's FP64 matrix performance has been halved compared to MI300X, though vector performance drops by roughly 4% gen-over-gen. As we move down to lower-precision formats, such as FP16, FP8, and FP4, you can see that AMD generally matches or slightly exceeds the Nvidia comparables. One notable standout is FP6 performance, which runs at FP4 rates, which AMD sees as a differentiating feature. As we've also seen with Nvidia's competing chips, the new design and heightened performance also come with increased power consumption, which tops out at a 1,400W Total Board Power (TBP) for the liquid-cooled high-performance MI355X model. That's a marked increase from the MI300X's 750W and the MI325X's 1,000W thermal envelopes. AMD says this increase in performance density allows its customers to cram more performance into a single rack, thereby decreasing the all-important performance-per-TCO (total cost of ownership) metric, which quantifies performance-per-dollar at the rack level. The new chips feature numerous advancements in performance, but the fundamental design principles of merging 3D and 2.5D packaging technology remain unchanged, with the former being used to fuse the Accelerator Compute Dies (XCD) with the I/O Dies (IOD), while the latter is used to connect the IOD to each other and the 12-Hi HBM3E stacks. The chip features eight total XCD chiplets, each with 32 compute units (CU) enabled, for a total of 256 CU (AMD has four CU in reserve per XCD to improve yield; these are disabled as needed). The XCD's transition from 5nm with the prior generation to dies fabbed on TSMC's N3P process node for the MI350 series. The total chip weilds a whopping 185 billion transistors, a 21% increase in the transistor budget over the prior generation's 153 billion. Additionally, while the I/O Die (IOD) remains on the N6 process node, AMD has reduced the IOD from four tiles to two to simplify the design. This reorganization enabled AMD to double the Infinity Fabric bus width, improving bi-sectional bandwidth to up to 5.5 TB/s, while also reducing power consumption by lowering the bus frequency and voltage. This reduces uncore power requirements, allowing more power to be spent on compute. As with the MI300 series, the Infinity Cache (memory-side cache) sits in front of the HBM3E (32MB of cache per HBM stack). The completed processor connects to the host via a PCIe 5.0 x16 interface and presents itself as a single logical device to the host. The GPU communicates with other chips via seven Infinity Fabric links, providing a total of 1,075 GB/s of throughput. Both the MI350X and MI355X come in an OAM form factor and drop into the standardized UBB form factor servers (OCP spec), the same as prior-gen MI300X. AMD says this speeds time to deployment. The chips communicate with one another via an all-to-all topology with eight accelerators per node communicating across 153.6 GB/s bi-directional Infinity Fabric links. Each node is powered by two of AMD's fifth-gen EPYC 'Turin' chips. AMD supports all forms of networking, but positions its new Pollara Ultra Ethernet Consortium-capable NICs (UEC) as an optimum scale-out solution, while the Ultra Accelerator Link (UAL) interconnect is employed for scale-up networking. AMD offers both Direct Liquid Cooling (DLC) and Air Cooled (AC) racks. The DLC racks feature 128 MI355X GPUs and 36TB of HBM3E, thanks to the increased density provided by the liquid cooling subsystem, which enables the use of smaller node form factors. The AC solutions top out at 64 GPUs and 18TB of HBM3E, utilizing larger nodes to dissipate the thermal load via air cooling. AMD has tremendously increased its focus on unleashing the power of rack-scale architectures, a glaring deficiency relative to Nvidia. AMD has undertaken a series of acquisitions and developed a strong and expanding roster of partner OEMs to further its goals. As one would expect, AMD shared some of its performance projections and benchmarks against not only its own prior-gen systems, but also against Nvidia comparables. As always, take vendor-provided benchmarks with a grain of salt. We have included the test notes below for your perusal. AMD claims an eight-GPU MI355X setup ranges from 1.3X faster with four MI355X vs four DGX GB200 in Llama 3.1 405B, to 1.2X faster with eight MI355X against an eight-GPU B200 HGX config in inference in DeepSeek R1, and equivalent preformance with in Llama 3.1 405B. AMD says the MI355X provides up to 4.2X more performance over the MI300X in AI Agent and Chatbot workloads, along with strong gains ranging from 2.6X to 3.8X in content generation, summarization, and conversational AI work. Other generational highlights include a 3X generational improvement in DeepSeek R1 and a 3.3X gain in Llama 4 Maverick. We will update this article as AMD shares more details during its keynote, which is occurring now.

[2]

AMD says Instinct MI400X GPU is 10X faster than MI300X, will power Helios rack-scale system with EPYC 'Venice' CPUs

Will at least double performance compared to existing MI355X-based solutions. AMD gave a preview of its first in-house designed rack-scale solution called Helios at its Advancing AI event on Thursday. The system is set to be based on the company's next-generation EPYC 'Venice' processors, will use its Instinct MI400-series accelerator, and will rely on network connections featuring the upcoming Pensando network cards. Overall, the company says that the flagship MI400X is 10 times more powerful than the MI300X, which is a remarkable progress given that the MI400X will be released about three years after the MI300X. When it comes to rack-scale solutions for AI, AMD clearly trails behind Nvidia. This is going to change a bit this year as cloud service providers (such as Oracle OCI), OEMs, and ODMs will build and deploy rack-scale solutions based on the Instinct MI350X-series GPUs, but those systems will not be designed by AMD, and they will have to interconnect each 8-way system using Ethernet, not low-latency high-bandwidth interconnects like NVLink. The real change will occur next year with the first AMD-designed rack-scale system called Helios, which will use Zen 6-powered EPYC 'Venice' CPUs, CDNA 'Next'-based Instinct MI400-series GPUs, and Pensando 'Vulcano' network interface cards (NICs) that are rumored to increase the maximum scale-up world size to beyond eight GPUs, which will greatly enhance their capabilities for training and inference. The system will adhere to OCP standards and enable next-generation interconnects such as Ultra Ethernet and Ultra Accelerator Link, supporting demanding AI workloads. "So let me introduce the Helios AI rack," said Andrew Dieckman, corporate VP and general manager of AMD's data center GPU business. "Helios is one of the system solutions that we are working on based on the Instinct MI400-series GPU, so it is a fully integrated AI rack with EPYC CPUs, Instinct MI400-series GPUs, Pensando NICs, and then our ROCm stack. It is a unified architecture designed for both frontier model training as well as massive scale inference [that] delivers leadership compute density, memory bandwidth, scale out interconnect, all built in an open OCP-compliant standard supporting Ultra Ethernet and UALink." From a performance point of view, AMD's flagship Instinct MI400-series AI GPU (we will refer to it as to Instinct MI400X, though this is not the official name, and we will also call the CDNA Next as CDNA 5) doubles performance from the Instinct MI355X and increases memory capacity by 50% and bandwidth by more than 100%. While the MI355X delivers 10 dense FP4 PFLOPS, the MI400X is projected to hit 20 dense FP4 PFLOPS. Overall, the company says that the flagship MI400X is 10 times more powerful than the MI300X, which is a remarkable progress given that the MI400X will be released about three years after the MI300X. "When you look at our product roadmap and how we continue to accelerate, with MI355X, we have taken a major leap forward [compared to the MI300X]: we are delivering 3X the amount of performance on a broad set of models and workloads, and that is a significant uptick from the previous trajectory we were on from the MI300X with the MI325X," said Dieckman. "Now, with the Instinct MI400X and Helios, we bend that curve even further, and Helios is designed to deliver up to 10X more AI performance on the the most advanced frontier models in the high end." The new MI400X accelerator will also surpass Nvidia's Blackwell Ultra, which is currently ramping up. However, when it comes to comparison with Nvidia's next-generation Rubin R200 that delivers 50 dense FP4 PFLOPS, AMD's MI400X will be around 2.5 times slower. Still, AMD will have an ace up its sleeve, which is memory bandwidth and capacity (see tables for details). Similarly, Helios will outperform Nvidia's Blackwell Ultra-based NVL72 and Rubin-based NVL144. However, it remains to be seen how Helios will stack against NVL144 in real-world applications. Also, it will be extremely hard to beat Nvidia's NVL576 both in terms of compute performance and memory bandwidth in 2027, though by that time, AMD will likely roll out something new. At least, this is what AMD communicated at its Advancing AI event this week: the company plans to continue evolving its integrated AI platforms with next-generation GPUs, CPUs, and networking technology, extending its roadmap well into 2027 and beyond.

[3]

AMD zeroes in on Nvidia's Blackwell with MI350-series GPUs

Nvidia's Blackwell accelerators have been on the market for just over six months, and AMD says it's already achieved performance parity with the launch of its MI350-series GPUs on Thursday. Based on all-new CDNA 4 and refined chiplet architectures, the GPUs seek to shake Nvidia's grip on the AI infrastructure market, boasting up to 10 petaFLOPS of sparse FP4 (double that if you manage to find a workload that can take advantage of sparsity) on the MI355X, 288 GB of HBM3E, and 8 TBps of memory bandwidth. For those keeping score, AMD's latest Instincts aim to match Nvidia's most powerful Blackwell GPUs on floating point performance and memory bandwidth - two of the most important metrics when it comes to AI training and inference. This bears out in AMD's benchmarks, which show a pair of MI355Xs going toe-to-toe with Nvidia's dual-GPU GB200 Superchip in Llama 3.1 405B. As with all vendor supplied benchmarks, take these with a grain of salt. In fact, at least on paper, AMD's latest chips aren't that far behind Nvidia's 288 GB Blackwell Ultra GPUs announced this spring. When the parts start shipping next quarter, they'll not only close the gap on memory capacity, but will offer up to 50 percent higher perf over AMD's MI350 series albeit only for dense FP4. At FP8, FP16, or BF16, AMD and Nvidia are in a dead heat. And speaking of heat, at 1.4 kW, you'll need a liquid-cooling loop to tame the MI355X's tensor cores and unleash its full potential. For those for whom liquid cooling isn't practical, AMD is also offering the MI350X, which trades about 8 percent of peak performance for a slightly more reasonable 1 kW TDP. However, in the real world, we're told that the performance delta is actually closer to 20 percent as the liquid-cooled part's larger power limit allows it to boost higher for longer. On that note, let's take a closer look at the silicon powering AMD's latest Instincts. Peel back the heat spreader of either chip and you'll find a familiar assortment of compute dies surrounded by high-bandwidth memory. To the untrained eye, the MI350 series' bare silicon looks a heck of a lot like Nvidia's Blackwell or even Intel's Gaudi3. This is just what AI accelerators look like in 2025. However, as is often the case, looks can be misleading, and that's certainly true of AMD's Instinct line. Rather than two reticle-sized compute dies as we see in the Intel and Nvidia's accelerators, AMD's Instinct accelerators use a combination of TSMC's 2.5D packaging and 3D hybrid bonding tech to stitch multiple smaller compute and I/O chiplets into one big silicon subsystem. In the case of the MI350 series, it is quite similar to what we saw with the original MI300X back in 2023. It features eight XCD GPU dies fabbed using TSMC's 3nm process tech that are vertically stacked on top of a pair of 6nm I/O dies. Each compute chiplet now features 36 CDNA 4 compute units (CUs), 32 of which are actually active, backed by 4 MB of shared L2 cache, for 256 CUs total across eight chiplets, while the chip's 288 GB of HBM3E memory is backed by 256 MB "Infinity" cache. Meanwhile, the Infinity Fabric-Advanced Package interconnect used to shuttle data between the I/O dies has been upgraded to 5.5 TBps of bisectional bandwidth, up from between 2.4 TBps and 3 TBps last gen. According to AMD Fellow and Instinct SoC Chief Architect Alan Smith, this wider interconnect reduced the amount of energy per bit required for chip-to-chip communications. While AMD's GPUs may have closed the gap in performance with Nvidia's Blackwell accelerators, the company still has a long way to go in system design. Unlike Nvidia's Blackwell accelerators - which can be bought in rackscale, HGX, and PCIe form factors - AMD's MI350-series will only be offered in an eight-GPU configuration. "We felt that this direct connected eight GPU architecture was still well positioned for a large set of the models that would be out there in the 2025 to 2026 time frame," Corporate Vice President Josh Friedrich told the press ahead of AMD's Advancing AI event on Thursday. "We felt introducing a more revolutionary change to a proprietary rack type architecture, and the challenges that can come from Introducing that prematurely was something that we wanted to avoid." As you can see from the graphic below, the design features eight MI350-series chips connected via AMD's Infinity Fabric in an all-to-all scale-up topology. The GPUs are then connected to a pair of x86 CPUs along with up to eight 400 Gbps NICs via PCIe 5.0 switches. Each system will offer up to 2.25 TB of HBM3E memory, and between 147 and 160 petaFLOPS of sparse FP4 compute depending on whether you opt for liquid or air cooling. Naturally, AMD would like to see its Instinct accelerators paired with its Epyc CPUs and Pensando Pollara 400 NICs, but there's nothing stopping vendors from building systems around an Intel processor or ConnectX InfiniBand networking. In fact, that's exactly the configuration Microsoft used for its ND-MI300X-v5 instances. With the launch of the MI350-series, AMD is moving toward denser rack deployments. As GPU power consumption has increased, we've seen a trend toward larger server chassis, with some as large as ten rack units. But, with the move to liquid cooling, AMD now anticipates densities as high as 16 nodes and 128 accelerators per rack. AMD didn't offer specifics as to system-level power consumption, but based on what we've seen of Nvidia's HGX systems, we anticipate both of them to draw somewhere between 14 and 18 kW. Even on the air-cooled side of things, AMD expects to see racks with as many as eight nodes and 64 accelerators, which will almost certainly require the use of rear-door heat exchangers. These higher rack densities set the tone for AMD's first rack-scale systems scheduled to launch alongside its MI400-series chips next year. AMD says its MI350-series accelerators are shipping to customers and expects to see wide-scale deployments in cloud and hyperscale datacenters, including an AI compute cluster in Oracle OCI containing 131,072 accelerators. By our estimate, the completed system will be capable of churning out more than 2.6 zettaFLOPS of the sparsest FP4 compute AMD's MI355Xs can muster. Meanwhile, for those looking to deploy on-prem, MI350-series systems will be offered by Dell, HPE, and Supermicro. ®

[4]

AMD shines a light on its Helios rack-scale compute platform

House of Zen's biggest iron yet boasts 72 MI400 GPUs, 260 TBps of UALink bandwidth, and 2.9 exaFLOPS of FP4 AMD offered its best look yet at the rack-scale architecture that'll underpin its MI400-series GPUs in 2026 at its Advancing AI event in San Jose on Thursday. Codenamed Helios, the massive double-wide rack system is designed for large-scale frontier model training and inference serving. It'll be powered by 72 accelerators that have been made to look like one great big GPU by using Ultra Accelerator Link (UALink) to pool their compute and memory resources. If you're not familiar, UALink is a relatively new interconnect tech designed to provide an alternative to NVLink - the technology that made Nvidia's own rack-scale systems like the GB200 and GB300 NVL72 possible. Helios's six dozen MI400s will come equipped with 432 GB of HBM4 memory good for 19.6 TBps of bandwidth and 40 petaFLOPS of 4-bit floating performance. The GPUs will be paired to AMD's sixth-gen Epyc Venice CPUs, though the exact ratio of CPUs to GPUs in Helios remains to be seen. Meanwhile, AMD's Pensando Vulcano superNICs are said to provide each accelerator with 300 GBps of scale-out bandwidth over what we assume are 12x 200 Gbps Ultra Ethernet links. As for who's going to supply the UALink switches necessary to make all this compute behave like one great big system, AMD isn't saying. But if we had to guess, it'll probably be Broadcom. You may recall a little over a year ago when AMD opened up its Infinity Fabric interconnect tech to partners - a technology that turned out to be foundational to the UALink standard - Broadcom was among the first to throw its weight behind it. With that said, we are told that it's going to be just as fast as NVLink, delivering 3.6 TBps of bandwidth per accelerator. Put together, AMD expects the system to deliver 2.9 exaFLOPS of FP4 performance (presumably with sparsity) for inference and up to 1.4 exaFLOPS of FP8 for training, putting it in direct contention with Nvidia's Vera Rubin NVL144 rack systems due out next year. Announced at GTC this spring, Nvidia's Vera Rubin NVL144 will be equipped with 72 dual GPU Rubin SXM modules with two GPUs per package along with an unspecified number of a custom 88-core CPU called Vera. Altogether, Nvidia claims it will deliver up to 3.6 exaFLOPS at FP4 and 1.2 exaFLOPS at FP8. While the two rack systems will deliver roughly the same level of performance, AMD claims Helios will offer 50 percent higher memory capacity and bandwidth at 31 TBps and 1.4 PBps versus 20 TBps and 936 TBps on the VR-NVL144. "These advantages translate directly into faster model training and better inferencing performance and hugely advantaged economics for our customers," Andrew Dieckmann, corporate VP and GM of AMD's datacenter GPU business, said during a press conference ahead of the Advancing AI keynote on Thursday. But as you might have noticed, that additional memory and bandwidth comes at the expense of the system being a whole lot fatter than Nvidia's NVL designs. Because AMD didn't share power targets for its MI400-series rack systems, it's hard to say how the machine will compare in terms of performance per watt. Given how power-constrained datacenters are already in the wake of the AI boom, energy efficiency could end up having an outsized influence on which systems bit barn operators deploy. Alongside Helios, AMD also teased an even denser rack-scale compute platform due out in 2027 that'll utilize its Verano Epyc CPUs, MI500 GPUs, and Vulcano NICs. While details on that system remain thin, we do know that it'll have to contend with Nvidia's 600 kW Kyber rack systems, which will feature 144 Rubin Ultra sockets and are expected to deliver 15 exaFLOPS of peak FP4 performance and 144 TB HBM4E, good for 4.6 PBps of bandwidth. ®

[5]

AMD says its wild new 1400W AI GPU can beat Nvidia's best Blackwell offering

Summary AMD's new AI GPUs can rival Nvidia's most powerful options, according to AMD's benchmarks. Software optimizations contribute significantly to performance improvements. Next-gen MI400X & MI500X GPUs are on the horizon, setting high expectations. For the first time, it looks like AMD is catching to Nvidia when it comes to AI GPUs. At its Advancing AI event, AMD launched the Instinct MI350X and MI355X, both of which are already shipping to customers. According to AMD, the 1400-watt MI355X can match and sometimes even exceed Nvidia's B200 -- currently the most powerful data center GPU that Nvidia produces -- depending on the data type. The MI350X and MI355X arrive less than a year after AMD launched the MI325X, and the company says it will continue to push out new Instinct GPUs for data center users each year. Related Intel vs. AMD: A GPU dilemma for price-conscious gamers in 2025 Intel vs. AMD will no longer be just about CPUs, as Battlemage goes head-to-head against RDNA 4 Posts 1 AMD says the MI355X can beat Blackwell A big chunk of performance comes from software Close AMD says its new Instinct GPUs deliver somewhere between a 2.4x and nearly a 4x improvement over the MI325X, but the vast majority of that improvement doesn't come from hardware. It comes from software. Simply through optimizing ROCm -- AMD's open-source software stack that's an answer to Nvidia CUDA -- AMD says it's delivered a 3x performance improvement in AI training and 3.5x improvement in AI inference on the same hardware. The MI350X and MI355X are obviously benefiting greatly from those software advancements. Make no mistake, though: these GPUs pack in the hardware. The MI350X and MI355X come with identical specs, but the MI355X runs at 1400W instead of 1000W like the MI350X. Both GPU models come with 288GB of HBM3 memory, and they're both built on a 3nm node with AMD's CDNA 4 architecture -- a seperate architecture from RDNA 4 that's available on GPUs like the RX 9070 XT. That power difference just means that the MI350X is available in both air and liquid-cooled deployments, while the MI355X is only available with liquid cooling. Close It doesn't sound like AMD is going to stop the momentum it has drummed up with its Instinct GPUs, either. It provided a preview of its next-gen MI400X that's set to launch next year, which AMD's Andrew Dieckmann says will be the top-performing AI GPU on the market. We'll see what Nvidia has to say about that, assuming it'll top the Blackwell B200 sometime next year. AMD says it'll offer MI400X racks with next-gen EPYC chips, codenamed Venice. Beyond that, AMD says its MI500X GPU is coming in 2027 alongside EPYC Verano. Although AMD is already shipping the MI350X and MI355X to customers now, systems using the GPU will be available broadly from partners starting in the third quarter, including Dell, HP Enterprise, Supermicro, Gigabyte, and ASRock Rack. These racks obviously cost hundreds of thousands -- if not millions -- of dollars, but they're still interesting to gawk at from afar. Now, if you'll excuse me, I'm going to boot up my 16GB RX 9060 XT and salivate over terabytes of HBM memory sitting somewhere in a data center.

[6]

AMD debuts AMD Instinct MI350 Series accelerator chips with 35X better inferencing

AMD unveiled its comprehensive end-to-end integrated AI platform vision and introduced its open, scalable rack-scale AI infrastructure built on industry standards at its annual Advancing AI event. The Santa Clara, California-based chip maker announced its new AMD Instinct MI350 Series accelerators, which are four times faster on AI compute and 35 times faster on inferencing than prior chips. AMD and its partners showcased AMD Instinct-based products and the continued growth of the AMD ROCm ecosystem. It also showed its powerful, new, open rack-scale designs and roadmap that bring leadership Rack Scale AI performance beyond 2027. "We can now say we are at the inference inflection point, and it will be the driver," said Lisa Su, CEO of AMD, in a keynote at the Advancing AI event. AMD unveiled the Instinct MI350 Series GPUs, setting a new benchmark for performance, efficiency and scalability in generative AI and high-performance computing. The MI350 Series, consisting of both Instinct MI350X and MI355X GPUs and platforms, delivers a four times generation-on-generation AI compute increase and a 35 times generational leap in inferencing, paving the way for transformative AI solutions across industries. AMD demonstrated end-to-end, open-standards rack-scale AI infrastructure -- already rolling out with AMD Instinct MI350 Series accelerators, 5th Gen AMD Epyc processors and AMD Pensando Pollara network interface cards (NICs) in hyperscaler deployments such as Oracle Cloud Infrastructure (OCI) and set for broad availability in 2H 2025. AMD also previewed its next generation AI rack called Helios. It will be built on the next-generation AMD Instinct MI400 Series GPUs, the Zen 6-based AMD Epyc Venice CPUs and AMD Pensando Vulcano NICs. "I think they are targeting a different type of customer than Nvidia," said Ben Bajarin, analyst at Creative Strategies, in a message to GamesBeat. "Specifically I think they see the neocloud opportunity and a whole host of tier two and tier three clouds and the on-premise enterprise deployments." Bajarin added, "We are bullish on the shift to full rack deployment systems and that is where Helios fits in which will align with Rubin timing. But as the market shifts to inference, which we are just at the start with, AMD is well positioned to compete to capture share. I also think, there are lots of customers out there who will value AMD's TCO where right now Nvidia may be overkill for their workloads. So that is area to watch, which again gets back to who the right customer is for AMD and it might be a very different customer profile than the customer for Nvidia." The latest version of the AMD open-source AI software stack, ROCm 7, is engineered to meet the growing demands of generative AI and high-performance computing workloads -- while dramatically improving developer experience across the board. (Radeon Open Compute is an open-source software platform that allows for GPU-accelerated computing on AMD GPUs, particularly for high-performance computing and AI workloads). ROCm 7 features improved support for industry-standard frameworks, expanded hardware compatibility, and new development tools, drivers, APIs and libraries to accelerate AI development and deployment. In her keynote, Su said, "Opennesss should be more than just a buzz word." The Instinct MI350 Series exceeded AMD's five-year goal to improve the energy efficiency of AI training and high-performance computing nodes by 30 times, ultimately delivering a 38 times improvement. AMD also unveiled a new 2030 goal to deliver a 20 times increase in rack-scale energy efficiency from a 2024 base year, enabling a typical AI model that today requires more than 275 racks to be trained in fewer than one fully utilized rack by 2030, using 95% less electricity. AMD also announced the broad availability of the AMD Developer Cloud for the global developer and open-source communities. Purpose-built for rapid, high-performance AI development, users will have access to a fully managed cloud environment with the tools and flexibility to get started with AI projects - and grow without limits. With ROCm 7 and the AMD Developer Cloud, AMD is lowering barriers and expanding access to next-gen compute. Strategic collaborations with leaders like Hugging Face, OpenAI and Grok are proving the power of co-developed, open solutions. The announcement got some cheers from folks in the audience, as the company said it would give attendees developer credits. Broad Partner Ecosystem Showcases AI Progress Powered by AMD AMD customers discussed how they are using AMD AI solutions to train today's leading AI models, power inference at scale and accelerate AI exploration and development. Meta detailed how it has leveraged multiple generations of AMD Instinct and Epyc solutions across its data center infrastructure, with Instinct MI300X broadly deployed for Llama 3 and Llama 4 inference. Meta continues to collaborate closely with AMD on AI roadmaps, including plans to leverage MI350 and MI400 Series GPUs and platforms. Oracle Cloud Infrastructure is among the first industry leaders to adopt the AMD open rack-scale AI infrastructure with AMD Instinct MI355X GPUs. OCI leverages AMD CPUs and GPUs to deliver balanced, scalable performance for AI clusters, and announced it will offer zettascale AI clusters accelerated by the latest AMD Instinct processors with up to 131,072 MI355X GPUs to enable customers to build, train, and inference AI at scale. Microsoft announced Instinct MI300X is now powering both proprietary and open-source models in production on Azure. HUMAIN discussed its landmark agreement with AMD to build open, scalable, resilient and cost-efficient AI infrastructure leveraging the full spectrum of computing platforms only AMD can provide.Cohere shared that its high-performance, scalable Command models are deployed on Instinct MI300X, powering enterprise-grade LLM inference with high throughput, efficiency and data privacy. In the keynote, Red Hat described how its expanded collaboration with AMD enables production-ready AI environments, with AMD Instinct GPUs on Red Hat OpenShift AI delivering powerful, efficient AI processing across hybrid cloud environments. "They can get the most out of the hardware they're using," said the Red Hat exec on stage. Astera Labs highlighted how the open UALink ecosystem accelerates innovation and delivers greater value to customers and shared plans to offer a comprehensive portfolio of UALink products to support next-generation AI infrastructure.Marvell joined AMD to share the UALink switch roadmap, the first truly open interconnect, bringing the ultimate flexibility for AI infrastructure.

[7]

AMD debuts new flagship MI350 data center graphics cards with 185B transistors - SiliconANGLE

AMD debuts new flagship MI350 data center graphics cards with 185B transistors Advanced Micro Devices Inc. today introduced a new line of artificial intelligence chips that it says can outperform Nvidia Corp.'s Blackwell B200 at some tasks. The Instinct MI350 series, as the product family is called, includes two graphics cards. There's the top-end MI355X, which relies on liquid cooling to dissipate heat. It's joined by a scaled-down chip called the Instinct MI350X that trades off some performance for lower operating temperatures. That allows it to use fans instead of liquid cooling, an often simpler arrangement for data center operators. "With flexible air-cooled and direct liquid-cooled configurations, the Instinct MI350 Series is optimized for seamless deployment, supporting up to 64 GPUs in an air-cooled rack and up to 128 in a direct liquid-cooled and scaling up to 2.6 exaFLOPS of FP4 performance," Vamsi Boppana, the senior vice president of AMD's Artificial Intelligence Group, detailed in a blog post. The MI350 series is based on a three-dimensional, 10-chiplet design. Eight of the chiplets contain compute circuits made using Taiwan Semiconductor Manufacturing Co.'s latest three-nanometer process. They sit atop two six-nanometer I/O chiplets that function as the MI350's base layer and also manage the flow of data inside the processor. Both the MI355X and the MI350X ship with 288 gigabytes of HBM3E memory. That's a variety of fast, high-capacity RAM widely used in AI chips. Like AMD's new graphics cards, HBM3E devices feature a three-dimensional design in which layers of circuits are stacked atop one another. HBM3E theoretically supports up to 16 vertically-layered RAM layers. Some memory devices based on the technology also include additional features. Micron Technology Inc.'s latest HBM3E chips, for example, ship with a so-called Memory Built-In Self-Test module. It reduces the amount of specialized equipment needed to develop AI chips that include HBM3E memory. According to AMD, the MI350 series features 60% more memory than Nvidia's flagship Blackwell B200 graphics cards. The company is also promising faster performance for some workloads. AMD says that MI350 chips can process 8-bit floating point numbers 10% faster than the B200 and 4-bit floating point numbers more than twice as fast. Floating point numbers are the basic units of data that AI models use to perform calculations. The largest such data units contain 64 bits, while the smallest have 4. The MI350's support for 4-bit floating point, or FP4, data is one of the improvements it introduces over earlier AMD graphics cards. The fewer bits there are in a floating point number, the quicker it can be processed. As a result, AI models often compress large floating points into smaller ones to speed up calculations. MI350's support for the smallest, 4-bit floating points will make it easier to perform compression in order to speed up AI workloads. In practice, the new speed optimizations allow a single chip from the MI350 series to run an AI mode with up to 520 billion parameters. AMD is also promising a 40% increase in tokens per dollar compared to competing products. AMD will make the MI350 available in 8-chip server configurations. According to the company, the machines will provide up to 160 petaflops of performance for some FP4 workloads. One petaflop corresponds to 1,000 trillion computations per second. Further down the line, AMD plans to launch a line of rack systems called Helios. The systems will combine chips from the upcoming Instinct MI400 chip series, the successor to the MI350, with the company's central processing units. AMD will also add in its Pensando data processing units, which offload infrastructure management tasks from an AI cluster's other chips. On the software side, Helios will ship with the company's ROCm platform. It's a collection of developer tools, application programming interfaces and other components that can be used to program AMD graphics cards. The company debuted a new version of ROCm in conjunction with the debut of the MI350 and Helios. ROCm 7.0, as the latest release is called, enables AI models to perform inference more than 3.5 times faster than before. It can also triple the performance of training workloads. According to AMD, the speedup is partly the fruit of optimizations that allow ROCm 7.0 to manage data movement more efficiently. The software is also better at distributed inference. That's the task of spreading an inference workload across multiple graphics cards to accelerate processing.

[8]

AMD Instinct MI350 GPUs with 288GB HBM3E and 1400W TDP Announced

AMD has unveiled its latest Instinct MI350 series GPUs designed specifically for data center AI tasks. These new GPUs use the CDNA 4 architecture and are built mostly on TSMC's cutting-edge 3nm process technology, combined with 6nm chips handling memory interfaces and cache. The MI350X and MI355X models each contain eight compute dies, with 32 compute units on each, adding up to 256 compute units in total. This is a big jump compared to AMD's consumer RX 9070 XT, which has only 64 compute units. To connect all these dies, AMD uses TSMC's CoWoS-S packaging, which stacks the compute and I/O dies efficiently. The main reason for the big performance gains with these GPUs is their support for new low-precision floating point formats called FP4 and FP6. These formats trade off some accuracy but let the GPU process data much faster, which is ideal for AI workloads that can tolerate reduced precision. AMD says these new GPUs are about four times faster in AI tasks compared to their previous MI325X models. They also upgraded the memory to 288GB of HBM3E, increasing bandwidth from 6TB/s to 8TB/s, helping feed the powerful compute units with data faster. The MI355X, which is the top-end model, has a power draw of up to 1400 watts and connects to systems using PCIe 5.0 x16. These GPUs are expected to be available starting in the third quarter of 2025. Alongside the new hardware, AMD is releasing ROCm 7, a software platform designed to improve AI training and inference workflows. ROCm 7 offers better support for popular AI frameworks like SGLang and vLLM and is optimized for enterprise environments. Later this year, ROCm will also support AI development on AMD's Ryzen and Radeon hardware running Windows, broadening the software's reach beyond data centers. Looking ahead, AMD plans to further develop its AI ecosystem by combining powerful EPYC CPUs, Instinct GPUs, and advanced network interface cards from Pensando that support 400 Gbps Ultra Ethernet. The 2026 roadmap includes the launch of EPYC Venice processors built on a 2nm process, along with the Instinct MI400 GPUs, which are expected to double the performance of the MI355X and offer up to 432GB of the newer HBM4 memory. Beyond that, AMD will introduce the EPYC Verano CPUs and Instinct MI500 GPUs in 2027, although detailed information on these models is not yet public.

[9]

AMD's new Instinct MI350 series AI chips launch this week: 30x energy effiiency goals in 2025

As an Amazon Associate, we earn from qualifying purchases. TweakTown may also earn commissions from other affiliate partners at no extra cost to you. AMD will be launching its new Instinct MI350 series AI accelerators this week during the ISC25 keynote, where AMD CTO Mark Papermaster will unveil the company's next-gen Instinct MI350 series AI chips offering up to 35x improvements in AI inferencing capabilities, and more. We know that the AMD Instinct MI350X AI accelerator will boast up to 288GB of HBM3E memory, and inside, be powered by AMD's next-gen "CDNA 4" architecture. The new Instinct MI350 series AI accelerators will better compete with NVIDIA's new Blackwell AI GPUs, bridging the gap of AI performance between AMD and NVIDIA later this year. AMD's new Instinct MI350 series AI accelerators should find a position in the market between NVIDIA's now-on-the-market Blackwell B200 AI GPUs, as well as its beefed-up Blackwell Ultra B300 AI GPUs. One of the biggest flexes from AMD and its new Instinct MI355X AI chips will be the 30x improvement in energy efficiency, which if its goals are met (the company says it'll hit that this year) then it would be a massive win against NVIDIA and could see AMD on top in the AI GPU game (that is, until NVIDIA launches its new HBM4-powered Rubin R100 AI GPU later this year, and into the market in 2026). We will know everything about AMD's new Instinct MI350 series AI accelerators later this week, and it'll be good to see some true competition in the AI GPU space as NVIDIA has been dominating for far too long on its own.

[10]

AMD's new Instinct MI355X AI GPU has up to 288GB HBM3E memory, 1400W peak board power

As an Amazon Associate, we earn from qualifying purchases. TweakTown may also earn commissions from other affiliate partners at no extra cost to you. AMD is gearing up to unleash its new CDNA 4 architecture inside of its new Instinct MI350 series AI accelerators, with its new flagship Instinct MI355X featuring 288GB of HBM3E memory and 1400W of peak board power. AMD CTO Mark Papermaster unveiled the company's new Instinct MI350 series and new MI355X for AI and HPC at the ISC 2025 event recently, with 1400W of peak board power being close to double what the company's previous-gen Instinct AI accelerator consumed, according to new reports from ComputerBase. We can expect the full unveiling of AMD's next-gen CDNA 4-based Instinct MI350 series AI accelerators during a livestream later this week on Thursday, with the new Instinct MI350 series AI chips based on the optimized CDNA 4 architecture. CDNA 4 is effective in supporting formats, something that was previously a downside for AMD. In addition to FP16, not only is FP8 supported, but also FP6 and FP4, which are gaining traction in the AI business, so we should expect the new CDNA 4-based Instinct MI350 series AI chips to boast twice the performance compared to FP8. AMD's new flagship Instinct MI355X AI accelerator will have 288GB of HBM3E memory and higher clock speeds than the MI350X, as well as up to 1400W of power consumption (instead of 1000W on the MI350). AMD's new CDNA 4-based Instinct MI350 series AI chips will compete with NVIDIA's current GB200 and new GB300 "Blackwell Ultra" AI GPUs later this year.

[11]

AMD launches Instinct MI350 series AI chips: 185 billion transistors, 288GB HBM3E memory

As an Amazon Associate, we earn from qualifying purchases. TweakTown may also earn commissions from other affiliate partners at no extra cost to you. AMD launched its new Instinct MI350 series AI accelerators today, rocking 185 billion transistors, up to 288GB of HBM3E memory, FB4 and FP6 support, and more. The new Instinct MI350 series AI chips were launched during AMD's huge Advancing AI event, where it also unveiled its new Zen 6-based EPYC "Venice" CPUs, a tease of its next-next-gen Zen 7-based EPYC "Verano" CPUs, as well as a tease of its new Instinct MI400 and even next-next-gen Instinct MI500 series AI accelerators. AMD's new Instinct MI350 series AI accelerators are based on the company's new CDNA 4 architecture, and fabbed on TSMC's 3nm process node. Inside, the chip itself features 185 billion transistors and comes in two different variants: the MI350X and MI355X, offered in both air-cooled, and liquid-cooled configurations. The new Instinct MI350 series features a total of 256 compute units with 128 stream processors making 16,384 cores. This means we have lower core counts than the Instinct MI325 and MI300 series AI chips, which feature 304 compute units and a max core clock of 19,456. The compute units inside of the Instinct MI350 series are split into 8 zones, each with its own XCD, and each XCD packing 32 compute units. The XCDs are based on TSMC's N3P process node, with the dual I/O dies based on TSMC's older 6nm process node. Inside of the IOD we have 128 HBM3E channels, the Infinity Cache, and 4th Gen Infinity Fabric Links. AMD says that its new Instinct MI350 series AI chips have 20 PFLOPs of FP4/FP6 compute performance, which is a huge 4x uplift over the previous-gen Instinct AI chips. HBM3E also provides faster transfer speeds with up to 288GB of HBM3E on both variants, with 256MB of new Infinity Cache on the new Instinct MI350 series AI chips. The new HBM3E memory is on 8 stacks, with each stack featuring 36GB of HBM3E in 12-Hi stacks, with the new chips also packing UBB8, which is a new Rapid AI infrastructure deployment standard, which allows for faster deployment of air- and liquid-cooled nodes. AMD shared some competitive metrics of its new Instinct MI355X, which offers 8 TB/s of aggregate memory bandwidth, 79 TFLOPs of FP64, 5 PFLOPs of FP16, 10 PFLOPs of FP8, and 20 PFLOPs of FP6/FP4 compute. AMD says that a full rack of liquid-cooling will pack 96-128 Instinct MI350 series AI GPUs with up to 36TB of HBM3E memory, 2.6 Exaflops of FP4 compute, 1.3 Exaflops of FP8 compute, and will power AMD's EPYC "Turin" CPUs based on the Zen 5 architecture, alongside the new Pollara 400 interconnect solution.

[12]

AMD confirms it's using Samsung's latest HBM3E 12-Hi memory for its new Instinct MI350 AI GPUs

As an Amazon Associate, we earn from qualifying purchases. TweakTown may also earn commissions from other affiliate partners at no extra cost to you. AMD has confirmed that its newly-announced Instinct MI350 series AI accelerators are using Samsung's latest HBM3E 12-Hi memory. Samsung Electronics has been almost famously tripping over trying to get HBM certification from NVIDIA for use in its AI GPUs, but now AMD's just-announced Instinct MI350 and MI355X AI accelerators are using Samsung and Micron's new 12-Hi HBM3E memory. Samsung has been supplying HBM to AMD for a while now, but this is the first time that AMD has confirmed it. AMD's new Instinct MI350 series AI accelerators boast 185 billion transistors and up to 288GB of HBM3E memory, but the company also teased its next-gen Instinct MI400 series AI chips that will feature up to 432GB of next-gen HBM4 memory. AMD said that its new Helios AI server racks will feature 72 x Instinct MI400 series GPUs with 31TB of HBM4 per rack, featuring 10x the AI computing power over its newly-announced Instinct MI355X-based server rack. In a new report from Business Korea and one of their insiders, who said: "If Samsung Electronics fails to make a comeback with HBM4, where basic specifications are changing, the gap between Samsung Electronics and SK hynix will only widen further. As AMD is also trying to narrow the gap with NVIDIA through its newly designed MI400 series, Samsung Electronics must solidify the partnership established with the MI350 series". Samsung has been hard at work on nailing its HBM memory as its South Korean memory rival SK hynix has been dominating for quite some time now, while US-based Micron has been steadily improving its flow of HBM memory to AMD and NVIDIA. The confirmation today from AMD that it's using Samsung HBM3E memory is definitely a positive step for Samsung moving forward.

[13]

AMD's Instinct MI350 Series AI Accelerator To Launch This Week; Team Red Targets a Whopping 30x Increase In Energy Efficiency By 2025

AMD plans to step up its AI game with a brand-new lineup, the Instinct MI350 series, which will offer customers significant performance upgrades. Team Red hasn't been very active in releasing new AI hardware, at least on a pace similar to NVIDIA, given that AMD's core focus has been building up a software ecosystem that rivals CUDA to some extent. AMD currently operates on a yearly product roadmap, and the last time we saw a release was the Instinct MI325X AI accelerator, which was the firm's response to NVIDIA's Blackwell. At the ISC25 keynote, AMD's CTO Mark Papermaster revealed that the next-gen Instinct MI350 AI lineup is said to launch this week, on Thursday, offering up to a 35x improvement in inferencing capabilities and much more. While AMD didn't disclose specifics about the MI350 AI accelerator, we know how it could pan out. The GPU is said to feature a 3nm process node, offer up to 288 GB HBM3E memory, and be a direct competitor to NVIDIA's Blackwell lineup. To top it all off, the MI350 is said to feature AMD's next-gen "CDNA 4" architecture, which will show us how far AMD has come along in terms of generational gaps between its AI architectures, likely giving us a better rundown on the future of AMD's plans. The MI350 would likely position itself somewhere between Blackwell and Blackwell Ultra, and given how far ROCm has evolved, AMD will definitely see adoption by partners. AMD's CTO also discussed how the firm has managed to scale up perf/watt figures with their AI offerings, revealing that with the next-gen MI355X AI accelerator, they expect up to a 30x increase in efficiency figures, successfully delivering on expectations and being ahead of its counterparts. AMD says that architectural and package innovation has led them to this stage, and the efficiency figures are expected to see an uptrend in the future. It would be interesting to see how the industry reacts to the MI350 AI accelerator, since AMD has shown huge anticipation of this release in the past. This would likely tell us where the company is heading in the AI hardware segment as well.

[14]

AMD: Instinct MI350 GPUs Use Memory Edge To Best Nvidia's Fastest AI Chips

At its Advancing AI event, the chip designer says the 288-GB HBM3e capacity of the forthcoming Instinct MI355X and MI350X data center GPUs help the processors provide better or similar AI performance compared to Nvidia's Blackwell-based AI chips. AMD said its forthcoming Instinct MI350 series GPUs provide greater memory capacity and better or similar AI performance compared to Nvidia's fastest Blackwell-based chips, which the company called "significantly more expensive." Set to launch in the third quarter, the MI355X and MI350X GPUs will be supported by two dozen OEM and cloud partners, including Dell Technologies, Hewlett Packard Enterprise, Cisco Systems, Oracle and Supermicro. [Related: 9 AMD Acquisitions Fueling Its AI Rivalry With Nvidia] The company said it plans to announce more OEM and cloud partners in the future. "The list continues to grow as we continue to bring on significant new partners," said Andrew Dieckmann, corporate vice president and general manager of data center GPU at AMD, in a Wednesday briefing with journalists and analysts. The chip designer revealed the MI350 series GPUs and its rack-scale solutions on Thursday at its Advancing AI 2025 event in San Jose, Calif. The company is also expected to provide the first details of the MI400 series that is set for release next year. The Santa Clara, Calif.-based company has made significant investments to develop data center GPUs and associated products that can go head-to-head with the fastest chips and systems from Nvidia, which earned more than double the revenue of AMD and Intel combined for the first quarter, according to a recent CRN analysis. "We are delivering on a relentless annual innovation cadence with these products," Dieckman said. Featuring 185 billion transistors, the MI350 series is built using TSMC's 3-nanometer manufacturing process. The MI355X and MI350X both feature 288 GB of HBM3e memory, which is higher than the 256-GB capacity of its MI325X and roughly 60 percent higher than the capacity of Nvidia's B200 GPU and GB200 Superchip, according to AMD. The company said this allows each GPU to support an AI model with up to 520 billion parameters on a single chip. The memory bandwidth for both GPUs is 8 TBps, which it said is the same as the B200 and GB200. The MI355X -- which has a thermal design power of up to 1,400 watts and is targeted for liquid-cooled servers -- can provide up to 20 petaflops of peak 6-bit floating point (FP6) and 4-bit floating point (FP4) performance. AMD claimed that the FP6 performance is two times higher than what is possible with the GB200 and more than double that of the B200. FP4 performance, on the other hand, is the same as the GB200 and 10 percent faster than the B200, according to the company. The MI355X can also perform 10 petaflops of peak 8-bit floating point (FP8), which AMD said is on par with the GB200 but 10 percent faster than the B200; five petaflops of peak 16-bit floating point (FP16), which it said is on par with the GB200 but 10 percent faster than the B200; and 79 teraflops of 64-bit floating point (FP64), which it said is double that of the GB200 and B200. The MI350X, on the other hand, can perform up to 18.4 petaflops of peak FP4 and FP6 math with a thermal design power of up to 1,000 watts, which the company said makes it suited for both air- and liquid-cooled servers. AMD said the MI355X "delivers the highest inference throughput" for large models, with the GPU providing roughly 20 percent better performance for the DeepSeek R1 model and approximately 30 percent better performance for a 405-billion-parameter Llama 3.1 model than the B200. Compared to the GB200, the company said the MI355X is on par when it comes to the same 405-billion-paramater Llama 3.1 model. The company did not provide performance comparisons to Nvidia's Blackwell Ultra-based B300 and GB300 chips, which are slated for release later this year. Dieckmann noted that AMD achieved the performance advantages using open-source frameworks like SGLang and vLLM in contrast to Nvidia's TensorRT-LLM framework, which he claimed to be proprietary even though the rival has published it on GitHub under the open-source Apache License 2.0. The MI355X's inference advantage over the B200 allows the GPU to provide up to 40 percent more tokens per dollar, which Dieckmann called a "key value proposition" against Nvidia. "We have very strong performance at economically advantaged pricing with our customers, which delivers a significantly cheaper cost of inferencing," he said. As for training, AMD said the MI355X is roughly on par with the B200 for 70-billion-parameter and 8-billion-parameter Llama 3 models. But for fine-tuning, the company said the GPU is roughly 10 percent faster than the B200 for the 70-billion-parameter Llama 2 model and approximately 13 percent faster than the GB200 for the same model. Compared to the MI300X that debuted in late 2023, the company said the MI355X has significantly higher performance across a broad range of AI inference use cases, running 4.2 times faster for an AI agent and chat bot, 2.9 times faster for content generation, 3.8 times faster for summarization and 2.6 times faster for conversational AI -- all based on a 405-billion-paramater Llama 3.1 model. AMD claimed that the MI355X is also roughly 3 times faster for the DeepSeek R1 model, 3.2 times faster for the 70-billion-parameter Llama 3.3 model and 3.3 times faster for the Llama 4 Maverick model -- all for inference. The company said the MI350 GPUs will be paired with its fifth-generation EPYC CPUs and Pollara NICs for rack-scale solutions. The highest-performance rack solution will require direct liquid cooling and consist of 128 MI355X GPUs and 36 TB of HBM3e memory to perform 2.6 exaflops of FP4. A second liquid-cooled option will consist of 96 GPUs and 27 TB of HBM3e to perform 2 exaflops of FP4. The air-cooled rack solution, on the other hand, will consist of 64 MI350X GPUs and 18 TB of HBM3e to perform 1.2 exaflops of FP4. AMD did not say during the Wednesday briefing how much power these rack solutions will require.

[15]

AMD's Next-Gen Instinct MI400 Accelerator Doubles The Compute To 40 PFLOPs, Equipped With 432 GB HBM4 Memory at 19.6 TB/s & Launches In 2026

In addition to its MI350 series, AMD is also giving us a glimpse of what to expect from its next-gen Instinct MI400 series, which launches in 2026. AMD Instinct MI400 Features 2x More AI Compute Than MI350 Series, 50% More Memory, Almost 2.5x Bandwidth Increase With HBM4 With new details shared for the Instinct MI400 accelerator, it looks like AMD is once again going to go big on the hardware side, essentially doubling the compute capability. The official metrics now list the MI400 as a 40 PFLOP (FP4) & 20 PFLOP (FP8) product, which doubles the compute capability of the MI350 series, which launched today. In addition to the compute capability, AMD is also going to leverage HBM4 memory for its Instinct MI400 series. The new chip will offer a 50% memory capacity uplift from 288GB HBM3e to 432GB HBM4. The HBM4 standard will offer a massive 19.6 TB/s bandwidth, more than double that of the 8 TB/s for the MI350 series. The GPU will also feature a 300 GB/s scale-out bandwidth/per GPU, so some big things are coming in the next generation of Instinct. As per previous details, the Instinct MI400 accelerator will feature up to four XCDs (Accelerated Compute Dies), increasing the count from two XCDs per AID on the MI300. That said, there will be two AIDs (Active Interposer Dies) on the MI400 accelerator, and this time, there will be separate Multimedia and I/O dies as well. For each AID, there will be a dedicated MID tile, and this will offer efficient communication between the compute units and the I/O interfaces compared to what we had in previous generations. Even on the MI350, AMD uses Infinity Fabric for inter-die communication. So, it's a big change to the MI400 accelerators, which are aimed at large-scale AI training and inference tasks and are going to be based on the CDNA-Next architecture, which is probably going to be rebranded to UDNA as part of the red team's unification strategy of the RDNA and CDNA architectures.

[16]

AMD Packs 432 GB Of HBM4 Into Instinct MI400 GPUs For Double-Wide AI Racks

Packing 432 GB of HBM4 memory, the Instinct MI400 series GPUs due next year will provide 50 percent more memory capacity and bandwidth than Nvidia's upcoming Vera Rubin platform. They will power rack-scale solutions that offers the same GPU density as Vera Rubin's rack. AMD revealed that its Instinct MI400-based, double-wide AI rack systems coming next year will provide 50 percent more memory capacity and bandwidth than Nvidia's upcoming Vera Rubin platform while offering roughly the same compute performance. The chip designer shared the first details Thursday during AMD CEO Lisa Su's keynote at its Advancing AI event in San Jose, Calif. The company also shared many more details for its MI350X GPUs and its corresponding products that will hit the market later this year. [Related: 9 AMD Acquisitions Fueling Its AI Rivalry With Nvidia] Andrew Dieckman, corporate vice president and general manager of data center GPU at AMD, said in a Wednesday briefing that the MI400 series "will be the highest-performing AI accelerator in 2026 for both large-scale training as well as inferencing." "It will bring leadership performance due to the memory capacity, memory bandwidth, scale-up bandwidth and scale up bandwidth advantages," he said of the MI400 series and the double-wide "Helios" AI server rack it will power. Set to launch in 2026, AMD said the MI400 GPU series will be capable of performing 40 petaflops of 4-bit floating point (FP4) and 20 petaflops of 8-bit floating point (FP8), double that of the flagship MI355X landing this year. Compared to the MI350 series, the MI400 series will increase memory capacity to 432 GB based on the HBM4 standard, which will give it a memory bandwidth of 19.6 TBps, more than double that of the previous generation. The MI400 series will also sport a scale-out bandwidth capacity of 300 GBps per GPU. AMD plans to pair the MI400 series with its next-generation EPYC "Venice" CPU and Pensando "Vulcano" NIC to power the Helios AI rack. The Helios rack will consist of 72 MI400 GPUs, giving it 31 TB of HBM4 memory capacity, 1.4 PBps of memory bandwidth and 260 TBps of scale-up bandwidth. This will make it capable of 2.9 exaflops of FP4 and 1.4 exaflops of FP8. The rack will also have a scale-out bandwidth of 43 TBps. Compared to Nvidia's Vera Rubin platform that is set to launch next year, AMD said the Helios rack will come with the same number of GPUs and scale-up bandwidth plus roughly the same FP4 and FP8 performance. At the same time, the company said Helios will offer 50 percent greater HBM4 memory capacity, memory bandwidth and scale-out bandwidth. In a rendering of the Helios, the rack appeared to be wider than Nvidia's rack-scale solutions such as the GB200 NVL72 platform. Dieckmann said Helio is a double-wide rack, which AMD and its key partners felt was the "right design point between complexity [and] reliability." The executive said the rack's wider size was a "trade-off" to achieve Helios' performance advantages, including the 50 percent greater memory bandwidth. "That trade-off was deemed to be a very acceptable trade-off because these large data centers tend not to be square footage constrained. They tend to be megawatts constrained. And so we think this is the right design point for the market based upon what we're delivering," Dieckmann said.

[17]

AMD Instinct MI350 Launched: 3nm, 185 Billion Transistors, 288 GB HBM3E Memory, FP4 & FP6 Support, MI355X 35x Faster Than MI300 & 2.2x Faster Than Blackwell B200

AMD has officially launched its next-gen Instinct MI350 series, which includes the MI350X and the flagship MI350X, with up to 185 billion transistors. AMD Instinct MI350 Powers Next-Gen AI With Brand New 3nm Process Node, 20 PFLOPs of AI Compute & New Format Support Today, AMD has officially launched its Instinct MI350 series of HPC / AI GPUs, which come equipped with a brand new CDNA 3 architecture based on TSMC's 3nm process node. The chip itself features 185 billion transistors and comes in two flavors, the MI350X and the faster MI355X, offered in both air and liquid-cooled configurations. The new chips support the latest FP6 and FP4 AI data types and are equipped with massive HBM3e memory capacities. Just talking about the AI compute uplift, AMD claims that the Instinct MI350 series offers 20 PFLOPs of FP4/FP6 compute, which is a 4x gen-on-gen performance uplift. With HBM3e, you get faster data transfer speeds with a super-high capacity of 288 GB on both variants. The chips are also equipped with UBB8, which is a new Rapid AI infrastructure deployment standard, allowing faster deployment of air and liquid-cooled nodes. Coming to the competitive metrics shared by AMD for its MI355X, the chip offers 8 TB/s of aggregate memory bandwidth, 79 TFLOPs of FP64, 5 PFLOPs of FP16, 10 PFLOPs of FP8, and 20 PFLOPs of FP6/FP4 compute. These numbers are for the flagship 1400W configuration of the Instinct MI355X chip. A thing to note is that both the MI350X and MI355X utilize the same die, but the 355X comes with a higher TDP rating. The following are the numbers compared against the competition: But how does Instinct MI355X compare to the last-gen MI300 series? Well, AMD just showed a massive 35x leap in Inference performance using Llama 3.1 405B (Throughput), and that's a huge increase. For the full MI350 series platform, the new Instinct ecosystem will offer up to 8x MI355 series GPUs with 2.3 TB of HBM3e memory, 64 TB/s of total bandwidth, 0.63 PFLOPs of FP64, 81 PFLOPs of FP8 & 161 PFLOPs of FP6/FP4 compute performance. With the official metrics out of the way, we can talk about the actual performance metrics in a range of AI tests presented by AMD. Once again, we start with the MI355X vs MI300X performance comparisons, and the new chips offer anywhere from a 2.8x to 4.2x increase in AI: There's also another metric that compares the AMD Instinct MI355X with various popular AI workloads, such as DeepSeek R1, Llama 4 and Llama 3.1 and the new chips simply decimate the MI300X series: The Instinct MI355X also is compared to the B200 and the GB200 servers from the competition and shows a 1.2-1.3x increase. In Llama 3.1 405B in FP4 mode, the new Instinct AI chips offer the same performance as the much more expensive Blackwell GB200 server from NVIDIA, which adds to AMD's pref/$ goals. AMD also confirmed that while the Instinct MI350 series launch today, the next-generation MI400 series is already in the works and is planned for launch in 2026.

[18]

AMD Introduces AI-Focused MI350 Series, Part of 'Vision for an Open AI' Ecosystem

Advanced Micro Devices announced several new artificial-intelligence-focused products including its MI350 Series accelerators. The chip maker said it partnered with prominent companies such as Meta Platforms, OpenAI, Oracle and Microsoft as it seeks an open AI ecosystem. At AMD's Advancing AI event, the Santa Clara, Calif. company said Meta "detailed how Instinct MI300X is broadly deployed for Llama 3 and Llama 4 inference," while OpenAI CEO Sam Altman discussed "OpenAI's close partnership with AMD on AI infrastructure, with research and GPT models on Azure in production on MI300X, as well as deep design engagements on MI400 Series platforms." The MI350 Series consists of Instinct MI350X and MI355X graphics-processing units and platforms, AMD said. Rival chip-maker Nvidia in March 2024 introduced its Blackwell platform to run generative AI. Write to Josh Beckerman at [email protected]

[19]

Micron HBM Designed into Leading AMD AI Platform

Micron high bandwidth memory (HBM3E 36GB 12-high) and the AMD Instinct™ MI350 Series GPUs and platforms support the pace of AI data center innovation and growth A Media Snippet accompanying this announcement is available in this link. BOISE, Idaho, June 12, 2025 (GLOBE NEWSWIRE) -- Micron Technology, Inc. (Nasdaq: MU) today announced the integration of its HBM3E 36GB 12-high offering into the upcoming AMD Instinct™ MI350 Series solutions. This collaboration highlights the critical role of power efficiency and performance in training large AI models, delivering high-throughput inference and handling complex HPC workloads such as data processing and computational modeling. Furthermore, it represents another significant milestone in HBM industry leadership for Micron, showcasing its robust execution and the value of its strong customer relationships. Micron HBM3E 36GB 12-high solution brings industry-leading memory technology to AMD Instinct™ MI350 Series GPU platforms, providing outstanding bandwidth and lower power consumption. The AMD Instinct MI350 Series GPU platforms, built on AMD advanced CDNA 4 architecture, integrate 288GB of high-bandwidth HBM3E memory capacity, delivering up to 8 TB/s bandwidth for exceptional throughput. This immense memory capacity allows Instinct MI350 series GPUs to efficiently support AI models with up to 520 billion parameters -- on a single GPU. In a full platform configuration, Instinct MI350 Series GPUs offers up to 2.3TB of HBM3E memory and achieves peak theoretical performance of up to 161 PFLOPS at FP4 precision, with leadership energy efficiency and scalability for high-density AI workloads. This tightly integrated architecture, combined with Micron's power-efficient HBM3E, enables exceptional throughput for large language model training, inference and scientific simulation tasks -- empowering data centers to scale seamlessly while maximizing compute performance per watt. This joint effort between Micron and AMD has enabled faster time to market for AI solutions. "Our close working relationship and joint engineering efforts with AMD optimize compatibility of the Micron HBM3E 36GB 12-high product with the Instinct MI350 Series GPUs and platforms. Micron's HBM3E industry leadership and technology innovations provide improved TCO benefits to end customers with high performance for demanding AI systems," said Praveen Vaidyanathan, vice president and general manager of Cloud Memory Products at Micron. "The Micron HBM3E 36GB 12-high product is instrumental in unlocking the performance and energy efficiency of AMD Instinct™ MI350 Series accelerators," said Josh Friedrich, corporate vice president of AMD Instinct Product Engineering at AMD. "Our continued collaboration with Micron advances low-power, high-bandwidth memory that helps customers train larger AI models, speed inference and tackle complex HPC workloads." Micron HBM3E 36GB 12-high product is now qualified on multiple leading AI platforms. For more information on Micron's HBM product portfolio, visit: High-bandwidth memory | Micron Technology Inc. Micron Technology, Inc. is an industry leader in innovative memory and storage solutions, transforming how the world uses information to enrich life for all. With a relentless focus on our customers, technology leadership, and manufacturing and operational excellence, Micron delivers a rich portfolio of high-performance DRAM, NAND, and NOR memory and storage products through our Micronand Crucialbrands. Every day, the innovations that our people create fuel the data economy, enabling advances in artificial intelligence (AI) and compute-intensive applications that unleash opportunities -- from the data center to the intelligent edge and across the client and mobile user experience. To learn more about Micron Technology, Inc. (Nasdaq: MU), visit micron.com. © 2025 Micron Technology, Inc. All rights reserved. Information, products, and/or specifications are subject to change without notice. Micron, the Micron logo, and all other Micron trademarks are the property of Micron Technology, Inc. All other trademarks are the property of their respective owners. ____________________________ Data rate testing estimates are based on shmoo plot of pin speed performed in a manufacturing test environment. Power and performance estimates are based on simulation results of workload uses cases.

[20]

AMD Introduces Its Open, Scalable Rack-Scale AI Infrastructure Built on Industry Standards At Its 2025 Advancing AI Event

AMD delivered its comprehensive, end-to-end integrated AI platform vision and introduced its open, scalable rack-scale AI infrastructure built on industry standards at its 2025 Advancing AI event. AMD and its partners showcased: How they are building the open AI ecosystem with the new AMD Instinct? MI350 Series accelerators. The continued growth of the AMD ROCm? ecosystem. The company?s powerful, new, open rack-scale designs and roadmap that bring leadership rack-scale AI performance beyond 2027. AMD Delivers Leadership Solutions to Accelerate an Open AI Ecosystem AMD announced a broad portfolio of hardware, software and solutions to power the full spectrum of AI: AMD unveiled the Instinct MI350 Series GPUs, setting a new benchmark for performance, efficiency and scalability in generative AI and high-performance computing. The MI350 Series, consisting of both Instinct MI350X and MI355X GPUs and platforms, delivers a 4x, generation-on-generation AI compute increase and a 35x generational leap in inferencing, paving the way for transformative AI solutions across industries. MI355X also delivers significant price-performance gains, generating up to 40% more tokens-per-dollar compared to competing solutions. AMD demonstrated end-to-end, open-standards rack-scale AI infrastructure?already rolling out with AMD Instinct MI350 Series accelerators, 5th Gen AMD EPYC? processors and AMD Pensando? Pollara NICs in hyperscaler deployments such as Oracle Cloud Infrastructure (OCI) and set for broad availability in 2H 2025. AMD also previewed its next generation AI rack called ?Helios. ? It will be built on the next-generation AMD Instinct MI400 Series GPUs ? which compared to the previous generation are expected to deliver up to 10x more performance running inference on Mixture of Experts models, the ?Zen 6?-based AMD EPYC ?Venice? CPUs and AMD Pensando ?Vulcano? NICs. More details are available in this blog post. The latest version of the AMD open-source AI software stack, ROCm 7, is engineered to meet the growing demands of generative AI and high-performance computing workloads?while dramatically improving developer experience across the board. ROCm 7 features improved support for industry-standard frameworks, expanded hardware compatibility and new development tools, drivers, APIs and libraries to accelerate AI development and deployment. More details are available in this blog post from Anush Elangovan, AMD CVP of AI Software Development. The Instinct MI350 Series exceeded AMD?s five-year goal to improve the energy efficiency of AI training and high-performance computing nodes by 30x, ultimately delivering a 38x improvement. AMD also unveiled a new 2030 goal to deliver a 20x increase in rack-scale energy efficiency from a 2024 base year, enabling a typical AI model that requires more than 275 racks to be trained in fewer than one fully utilized rack by 2030, using 95% less electricity. More details are available in this blog post from Sam Naffziger, AMD SVP and Corporate Fellow. AMD also announced the broad availability of the AMD Developer Cloud for the global developer and open-source communities. Purpose-built for rapid, high-performance AI development, users will have access to a fully managed cloud environment with the tools and flexibility to get started with AI projects ? and grow without limits. With ROCm 7 and the AMD Developer Cloud, AMD is lowering barriers and expanding access to next-gen compute. Strategic collaborations with leaders like Hugging Face, OpenAI and Grok are proving the power of co-developed, open solutions. Broad Partner Ecosystem Showcases AI Progress Powered by AMD: Seven of the 10 largest model builders and Al companies are running production workloads on Instinct accelerators. Among those companies are Meta, OpenAI, Microsoft and xAI, who joined AMD and other partners at Advancing AI, to discuss how they are working with AMD for AI solutions to train today?s leading AI models, power inference at scale and accelerate AI exploration and development: Meta detailed how Instinct MI300X is broadly deployed for Llama 3 and Llama 4 inference. Meta shared excitement for MI350 and its compute power, performance-per-TCO and next-generation memory. Meta continues to collaborate closely with AMD on AI roadmaps, including plans for the Instinct MI400 Series platform. OpenAI CEO Sam Altman discussed the importance of holistically optimized hardware, software and algorithms and OpenAI?s close partnership with AMD on AI infrastructure, with research and GPT models on Azure in production on MI300X, as well as deep design engagements on MI400 Series platforms. Oracle Cloud Infrastructure (OCI) is among the first industry leaders to adopt the AMD open rack-scale AI infrastructure with AMD Instinct MI355X GPUs. OCI leverages AMD CPUs and GPUs to deliver balanced, scalable performance for AI clusters, and announced it will offer zettascale AI clusters accelerated by the latest AMD Instinct processors with up to 131,072 MI355X GPUs to enable customers to build, train and inference AI at scale. HUMAIN discussed its landmark agreement with AMD to build open, scalable, resilient and cost-efficient AI infrastructure leveraging the full spectrum of computing platforms only AMD can provide. Microsoft announced Instinct MI300X is now powering both proprietary and open-source models in production on Azure. Cohere shared that its high-performance, scalable Command models are deployed on Instinct MI300X, powering enterprise-grade LLM inference with high throughput, efficiency and data privacy. Red Hat described how its expanded collaboration with AMD enables production-ready AI environments, with AMD Instinct GPUs on Red Hat OpenShift AI delivering powerful, efficient AI processing across hybrid cloud environments. Astera Labs highlighted how the open UALink ecosystem accelerates innovation and delivers greater value to customers and shared plans to offer a comprehensive portfolio of UALink products to support next-generation AI infrastructure. Marvell joined AMD to highlight its collaboration as part of the UALink Consortium developing an open interconnect, bringing the ultimate flexibility for AI infrastructure.

[21]

AMD Introduces AI-Focused MI350 Series, Part of 'Vision for an Open AI' Ecosystem -- Update

Advanced Micro Devices unveiled its MI350 Series accelerators and several other new artificial-intelligence-focused products as it looks to challenge industry rival Nvidia. The MI350 Series consists of Instinct MI350X and MI355X graphics-processing units and platforms, AMD said. Along with the debut of the new offerings, AMD said it partnered with prominent companies such as Meta Platforms, OpenAI, Oracle and Microsoft as it seeks an open AI ecosystem. At AMD's Advancing AI event, the Santa Clara, Calif., company said Meta "detailed how Instinct MI300X is broadly deployed for Llama 3 and Llama 4 inference," while OpenAI CEO Sam Altman discussed "OpenAI's close partnership with AMD on AI infrastructure, with research and GPT models on Azure in production on MI300X, as well as deep design engagements on MI400 Series platforms." Oracle said Thursday that it "will be among the first hyperscalers to offer an AI supercomputer with AMD Instinct MI355X GPUs," while Micron Technology and Supermicro also issued AMD-related updates. AMD said its Helios AI rack, scheduled for 2026, would be built on its next-generation Instinct MI400 Series GPUs. The company also said the AMD Developer Cloud would be broadly available for developer and open-source communities. Rival chip-maker Nvidia in March 2024 introduced its Blackwell platform to run generative AI. Nvidia announced various collaborations this week, including agreements with L'Oreal and Novo Nordisk. Write to Josh Beckerman at [email protected]

[22]

AMD Challenges NVIDIA with MI350 Series, ROCm 7, and Free Developer Cloud