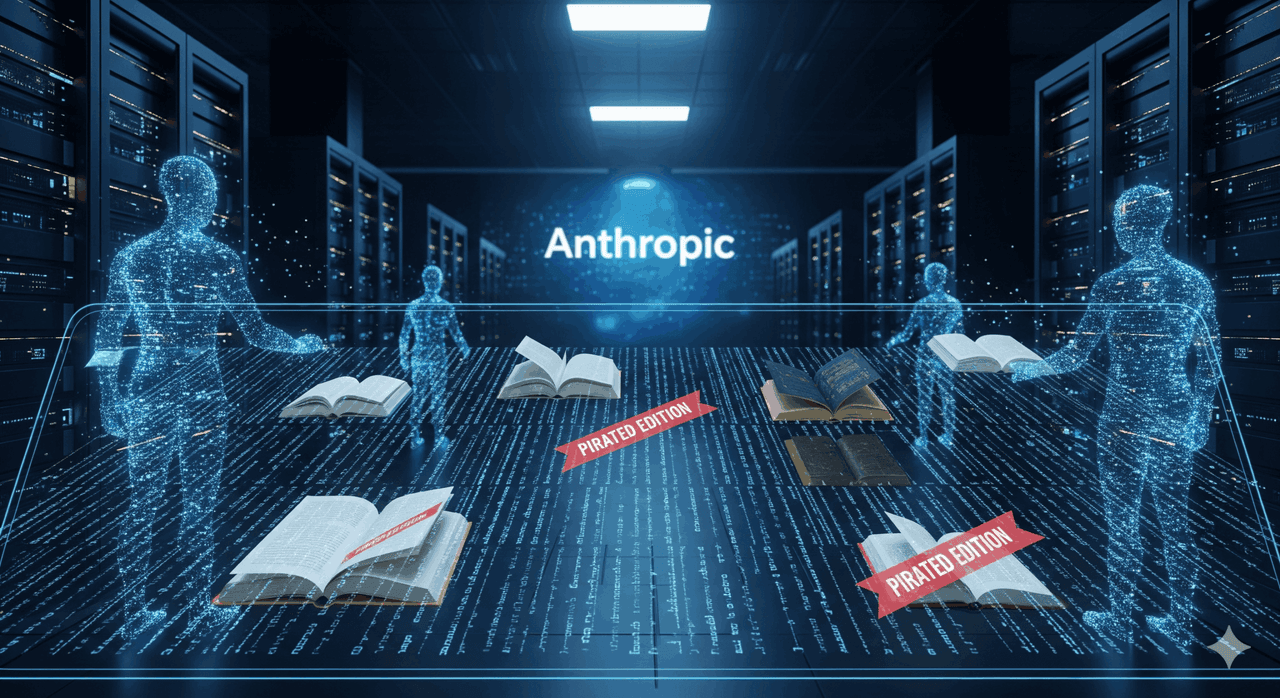

Anthropic's $1.5B AI Copyright Settlement: A Landmark Case with Mixed Reactions

60 Sources


[1]

Judge: Anthropic's $1.5B settlement is being shoved "down the throat of authors"

At a hearing Monday, US district judge William Alsup blasted a proposed $1.5 billion settlement over Anthropic's rampant piracy of books to train AI. The proposed settlement comes in a case where Anthropic could have owed more than $1 trillion in damages after Alsup certified a class that included up to 7 million claimants whose works were illegally downloaded by the AI company. Instead, critics fear Anthropic will get off cheaply, striking a deal with the suing authors that covers fewer than 500,000 works and paying a small fraction of its total valuation (currently $183 billion) to get away with the massive theft. Defector noted that the settlement doesn't even require Anthropic to admit wrongdoing, while the company continues raising billions based on models trained on authors' works. Most recently, after announcing the deal, Anthropic raised $13 billion in a funding round, nearly nine times the proposed settlement amount. Alsup expressed grave concerns that lawyers rushed the deal, which he said now risks being shoved "down the throat of authors," Bloomberg Law reported. In an order, Alsup explained why he thought the proposed settlement was a chaotic mess. The judge said he was "disappointed that counsel have left important questions to be answered in the future," noting that counsel sought approval even though the Works List, the Class List, the Claim Form, and the process for notification, allocation, and dispute resolution all remained unresolved. Denying preliminary approval of the settlement, Alsup suggested that the agreement is "nowhere close to complete," forcing Anthropic and authors' lawyers to "recalibrate" the largest publicly reported copyright class-action settlement ever inked, Bloomberg reported. Of particular concern, the settlement failed to outline how disbursements would be managed for works with multiple claimants, Alsup noted. Until all these details are ironed out, Alsup intends to withhold approval, the order said. 
One big change the judge wants to see is the addition of instructions requiring "anyone with copyright ownership" to opt in, with the consequence that the work won't be covered if even one rights holder opts out, Bloomberg reported. There should also be instructions that any disputes over ownership or submitted claims should be settled in state court, Alsup said. To Alsup, the settlement seemingly risks setting up a future where courts are bogged down for years over disputes linked to the class action. That's perhaps a bigger concern if many authors and publishers miss out on filing claims or receiving payments, since the judge noted that class members frequently "get the shaft" in class actions where attorneys stop caring after monetary relief is granted, Bloomberg reported. Further, Alsup is worried that an improper notification scheme could leave Anthropic in a vulnerable position, facing future claimants "coming out of the woodwork later," Bloomberg reported, despite doling out more than $1 billion. "When they pay that kind of money, they're going to get the relief in the form of a clean bill of health going forward," Alsup said at the hearing, suggesting that the settlement must get Anthropic completely off the hook for future legal claims over the AI training piracy. Warning class counsel that he felt "misled," the judge asked for more information about the claims process, noting, "I have an uneasy feeling about hangers-on with all this money on the table." Following the hearing, the judge set a schedule to ensure that lists of covered works and class members would be finalized by September 15, followed by the claims process finalized by September 25. That schedule would position the court to potentially preliminarily approve the settlement by October 10, Alsup suggested.

Why the deal only covers about 500,000 works

As of this writing, the list of covered works spans about 465,000 titles, Alsup said. 
That's a far cry from the 7 million works that he initially certified as covered in the class. A breakdown from the Authors Guild -- which consulted on the case and is part of a Working Group helping to allocate claims of $3,000 per work to authors and publishers -- explained that "after accounting for the many duplicates," foreign editions, unregistered works, and books missing class criteria, "only approximately 500,000 titles meet the definition required to be part of the class." But duplicate downloads and other missing criteria don't explain why the payout per work seems so small, and that's a problem for authors who want higher payouts since this settlement could become a template in other cases where AI companies are accused of pirating works for AI training. According to the Authors Guild, "the Copyright Act gives courts discretion to award statutory damages of at least $750 and no more than $150,000 per infringed work when the infringement is willful, as is the case here." However, "when there are a large number of works at issue," it's rare that courts award maximum damages, the group said. Hoping to avoid a dragged-out legal battle that "could tie up the case for years," authors suing Anthropic settled on "a strong payout without the risks of trial," the Authors Guild said. Going that route, they supposedly "avoided years of delay through appeals" and "achieved a certain, immediate result that sends a powerful signal to the industry that piracy will cost you a lot," the Authors Guild suggested. The settlement will also likely serve to push more AI companies to avoid piracy and actually pay to license content for training, the group said. The Authors Guild confirmed that once the list is finalized, likely by October 10, a searchable database will be created for authors to confirm if their works are covered. Until then, authors can submit contact information through a website set up to manage the settlement process. 
That will ensure that authors are notified when the claims process begins, which, if the settlement is ultimately approved, will likely happen this fall, the Authors Guild said. "If your book is included in the class list, you will receive a formal notice by mail or email from the settlement administrator," the group said. "The notice will explain the terms of the settlement, your rights, and next steps. The Authors Guild will also share information to help authors understand the process."
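The per-work and statutory-damages figures quoted above can be checked with simple arithmetic. The Python sketch below is illustrative only: it uses the round figures reported in these sources (a $1.5 billion fund, roughly 465,000 to 500,000 covered works, a certified class of up to 7 million works, and the $750 to $150,000 per-work statutory range the Authors Guild cites). It deliberately ignores attorneys' fees, administration costs, and any split between authors and publishers, which the sources do not quantify. The helper names `per_work_payout` and `statutory_range` are hypothetical, chosen for illustration.

```python
# Illustrative arithmetic only, using round figures reported in the sources.
# Assumption: fees, administration costs, and author/publisher splits are ignored.

SETTLEMENT_FUND = 1_500_000_000    # US$, minimum settlement fund
STATUTORY_MIN_PER_WORK = 750       # US$, statutory floor per infringed work
STATUTORY_MAX_PER_WORK = 150_000   # US$, ceiling per work for willful infringement


def per_work_payout(fund: int, works: int) -> float:
    """Hypothetical helper: gross amount per work if the fund were split evenly."""
    return fund / works


def statutory_range(works: int) -> tuple[int, int]:
    """Hypothetical helper: total statutory damages bounds for a number of works."""
    return works * STATUTORY_MIN_PER_WORK, works * STATUTORY_MAX_PER_WORK


if __name__ == "__main__":
    # Even split of the fund at the two work counts mentioned in the coverage.
    for works in (465_000, 500_000):
        payout = per_work_payout(SETTLEMENT_FUND, works)
        print(f"{works:,} works -> ${payout:,.0f} per work")

    # Statutory bounds for the roughly 500,000 works the settlement covers.
    low, high = statutory_range(500_000)
    print(f"Statutory range for 500,000 works: ${low:,} to ${high:,}")

    # Maximum exposure for the full 7 million-work certified class.
    _, class_max = statutory_range(7_000_000)
    print(f"Maximum for a 7 million-work class: ${class_max:,}")
```

The even split is a simplification: the settlement fund is described as non-reversionary, and payments for works with multiple rights holders still depend on the allocation process the judge asked the parties to define, so actual checks may differ.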

[2]

Screw the money -- Anthropic's $1.5B copyright settlement sucks for writers | TechCrunch

Writers of around half a million works will be eligible for a payday of at least $3,000 per work, thanks to a historic $1.5 billion settlement in a class action lawsuit that a group of authors brought against Anthropic. This landmark settlement marks the largest payout in the history of U.S. copyright law, but this isn't a victory for authors -- it's yet another win for tech companies. Tech giants are racing to amass as much written material as possible to train their LLMs, which power groundbreaking AI chat products like ChatGPT and Claude -- the same products that are endangering the creative industries, even if their outputs are milquetoast. These AIs can become more sophisticated when they ingest more data, but after scraping basically the entire internet, these companies are literally running out of new information. That's why Anthropic, the company behind Claude, pirated millions of books from "shadow libraries" and fed them into its AI. This particular lawsuit, Bartz v. Anthropic, is one of dozens filed against companies like Meta, Google, OpenAI, and Midjourney over the legality of training AI on copyrighted works. But writers aren't getting this settlement because their work was fed to an AI -- this is just a costly slap on the wrist for Anthropic, a company that just raised another $13B, because it illegally downloaded books instead of buying them. In June, federal judge William Alsup sided with Anthropic and ruled that it is, indeed, legal to train AI on copyrighted material. The judge argued that this use case is "transformative" enough to be protected by the fair use doctrine, a carve-out of copyright law that hasn't been updated since 1976. "Like any reader aspiring to be a writer, Anthropic's LLMs trained upon works not to race ahead and replicate or supplant them -- but to turn a hard corner and create something different," the judge said. 
It was the piracy -- not the AI training -- that moved Judge Alsup to bring the case to trial, but with Anthropic's settlement, a trial is no longer necessary. "Today's settlement, if approved, will resolve the plaintiffs' remaining legacy claims," said Aparna Sridhar, deputy general counsel at Anthropic, in a statement. "We remain committed to developing safe AI systems that help people and organizations extend their capabilities, advance scientific discovery, and solve complex problems." As dozens more cases over the relationship between AI and copyrighted works go to court, judges now have Bartz v. Anthropic to reference as a precedent. But given the ramifications of these decisions, maybe another judge will arrive at a different conclusion.

[3]

"First of its kind" AI settlement: Anthropic to pay authors $1.5 billion

Authors revealed today that Anthropic agreed to pay $1.5 billion and destroy all copies of the books the AI company pirated to train its artificial intelligence models. In a press release provided to Ars, the authors confirmed that the settlement is "believed to be the largest publicly reported recovery in the history of US copyright litigation." The settlement covers 500,000 works that Anthropic pirated for AI training; if a court approves it, rights holders will receive $3,000 for each work that Anthropic stole. "Depending on the number of claims submitted, the final figure per work could be higher," the press release noted. Anthropic has already agreed to the settlement terms, but a court must approve them before the settlement is finalized. Preliminary approval may be granted this week, while the ultimate decision may be delayed until 2026, the press release noted. Justin Nelson, a lawyer representing the three authors who initially sued to spark the class action -- Andrea Bartz, Kirk Wallace Johnson, and Charles Graeber -- confirmed that if the "first of its kind" settlement "in the AI era" is approved, the payouts will "far" surpass "any other known copyright recovery." "It will provide meaningful compensation for each class work and sets a precedent requiring AI companies to pay copyright owners," Nelson said. "This settlement sends a powerful message to AI companies and creators alike that taking copyrighted works from these pirate websites is wrong." Groups representing authors celebrated the settlement on Friday. The CEO of the Authors Guild, Mary Rasenberger, said it was "an excellent result for authors, publishers, and rightsholders generally." Perhaps most critically, the settlement shows "there are serious consequences when" companies "pirate authors' works to train their AI, robbing those least able to afford it," Rasenberger said.

[4]

I Wasn't Sure I Wanted Anthropic to Pay Me for My Books -- I Do Now

A billion dollars isn't what it used to be -- but it still focuses the mind. At least it did for me when I heard that the AI company Anthropic agreed to a settlement of at least $1.5 billion for authors and publishers whose books were used to train an early version of its large language model, Claude. This came after a judge issued a summary judgment that it had pirated the books it used. The proposed agreement -- which is still under scrutiny by the wary judge -- would reportedly grant authors a minimum $3,000 per book. I've written eight and my wife has notched five. We are talking bathroom-renovation dollars here! Since the settlement is based on pirated books, it doesn't really address the big issue of whether it's OK for AI companies to train their models on copyrighted works. But it's significant that real money is involved. Previously the argument over AI copyright was based on legal, moral, and even political hypotheticals. Now that things are getting real, it's time to tackle the fundamental issue: Since elite AI depends on book content, is it fair for companies to build trillion-dollar businesses without paying authors? Legalities aside, I have been struggling with the issue. But now that we're moving from the courthouse to the checkbook, the film has fallen from my eyes. I deserve those dollars! Paying authors feels like the right thing to do. Despite the powerful forces (including US president Donald Trump) arguing otherwise. Before I go further, let me drop a whopper of a disclaimer. As I mentioned, I'm an author myself, and stand to gain or lose from the outcome of this argument. I'm also on the council of the Authors Guild, which is a strong advocate for authors and is suing OpenAI and Microsoft for including authors' works in their training runs. (Because I cover tech companies, I abstain on votes involving litigation with those firms.) Obviously, I'm speaking for myself today. 
In the past, I've been a secret outlier on the council, genuinely torn on the issue of whether companies have the right to train their models on legally purchased books. The argument that humanity is building a vast compendium of human knowledge genuinely resonates with me. When I interviewed the artist Grimes in 2023, she expressed enthusiasm over being a contributor to this experiment: "Oh, sick, I might get to live forever!" she said. That vibed with me, too. Spreading my consciousness widely is a big reason I love what I do. But embedding a book inside a large language model built by a giant corporation is something different. Keep in mind that books are arguably the most valuable corpus that an AI model can ingest. Their length and coherency are unique tutors of human thought. The subjects they cover are vast and comprehensive. They are much more reliable than social media and provide a deeper understanding than news articles. I would venture to say that without books, large language models would be immeasurably weaker. So one might argue that OpenAI, Google, Meta, Anthropic and the rest should pay handsomely for access to books. Late last month, at that shameful White House tech dinner, CEOs took turns impressing Donald Trump with the insane sums they were allegedly investing in US-based data centers to meet AI's computation demands. Apple promised $600 billion, and Meta said it would match that amount. OpenAI is part of a $500 billion joint venture called Stargate. Compared to those numbers, the $1.5 billion that Anthropic agreed to distribute to authors and publishers as part of the infringement settlement doesn't sound so impressive. Nonetheless, it could well be that the law is on the side of those companies. 
Copyright law allows for something called "fair use," which permits the uncompensated exploitation of books and articles based on several criteria, one of which is whether the use is "transformative" -- meaning that it builds on the book's content in an innovative manner that doesn't compete with the original product. The judge in charge of the Anthropic infringement case has ruled that using legally obtained books in training is indeed protected by fair use. Determining this is an awkward exercise, since we are dealing with legal yardsticks drawn before the internet -- let alone AI. Obviously, there needs to be a solution based on contemporary circumstances. The White House's AI Action Plan, announced in July, didn't offer one. But in his remarks about the plan, Trump weighed in on the issue. In his view, authors shouldn't be paid -- because it's too hard to set up a system that would pay them fairly. "You can't be expected to have a successful AI program when every single article, book, or anything else that you've read or studied, you're supposed to pay for," Trump said. "We appreciate that, but just can't do it -- because it's not doable." (An administration source told me this week that the statement "sets the tone" for official policy.)

[5]

Judge in Anthropic AI Piracy Suit Worried Authors May 'Get the Shaft' in $1.5B Settlement

A federal judge on Monday moved to slow-roll a proposed $1.5 billion settlement to authors whose copyrighted works Anthropic pirated to train its Claude AI models. Judge William Alsup, of the US District Court for the Northern District of California, said the deal is "nowhere close to complete," and he will hold off on approving it until more questions are answered. Alsup's concerns center on making sure authors have enough notice to join the suit, according to Bloomberg. In class action settlements, members can "get the shaft" once the terms are announced, Alsup said, which is why he wants more information from the parties before approval. Alsup also called out the authors' attorneys for bringing additional lawyers (and their legal fees) onto the case, which the attorneys said in court was necessary to handle settlement claim submissions. Alsup set a deadline of Sept. 15 to submit a final list of works covered by the settlement. If you think your works may qualify as part of the lawsuit, you can learn more on the Bartz settlement website. The settlement terms were made public last week, but the agreement has to be approved by the court before any payments can be made. Lawyers for the plaintiffs told CNET at the time that they expected about 500,000 books or works to be included, with an estimated payout of $3,000 per work. This latest move may result in different settlement terms, but it will certainly drag out what has already been a long court case. The case originated from copyright concerns, an important legal issue for AI companies and creators. Alsup ruled in June that Anthropic's use of copyrighted material for training was fair use, meaning the training itself did not infringe copyright, but that the way the company obtained the books warranted further scrutiny. 
In the ruling, it was revealed that Anthropic used shadow libraries like LibGen and then systematically acquired and destroyed thousands of used books to scan into its own digital library. The proposed settlement stems from those piracy claims. Without significant legislation and regulation, court cases like these have become very important checks on AI companies' power. Each case influences the next. Two days after Anthropic's fair use victory, Meta won a similar case. While many AI copyright cases are still winding their way through the courts, Anthropic's rulings and settlement terms will become an important benchmark for future cases.

[6]

Anthropic Agrees to Pay Authors at Least $1.5 Billion in AI Copyright Settlement

Anthropic will pay at least $3,000 for each copyrighted work that it pirated. The company downloaded unauthorized copies of books in early efforts to gather training data for its AI tools. Anthropic has agreed to pay at least $1.5 billion to settle a lawsuit brought by a group of book authors alleging copyright infringement, an estimated $3,000 per work. The amount is well below what Anthropic may have had to pay if it had lost the case at trial. Experts said the plaintiffs could have been awarded billions of dollars in damages, with some estimates placing the total figure over $1 trillion. This is the first class action legal settlement centered on AI and copyright in the United States, and the outcome may shape how regulators and creative industries approach the legal debate over generative AI and intellectual property. "This landmark settlement far surpasses any other known copyright recovery. It is the first of its kind in the AI era. It will provide meaningful compensation for each class work and sets a precedent requiring AI companies to pay copyright owners. This settlement sends a powerful message to AI companies and creators alike that taking copyrighted works from these pirate websites is wrong," says co-lead plaintiffs' counsel Justin Nelson of Susman Godfrey LLP. Anthropic is not admitting any wrongdoing or liability. "Today's settlement, if approved, will resolve the plaintiffs' remaining legacy claims. We remain committed to developing safe AI systems that help people and organizations extend their capabilities, advance scientific discovery, and solve complex problems," Anthropic deputy general counsel Aparna Sridhar said in a statement. The lawsuit, which was originally filed in 2024 in the US District Court for the Northern District of California, was part of a larger ongoing wave of copyright litigation brought against tech companies over the data they used to train artificial intelligence programs. 
Authors Andrea Bartz, Kirk Wallace Johnson, and Charles Graeber alleged that Anthropic trained its large language models on their work without permission, violating copyright law. This June, senior district judge William Alsup ruled that Anthropic's AI training was shielded by the "fair use" doctrine, which allows unauthorized use of copyrighted works under certain conditions. It was a win for the tech company, but came with a major caveat. Anthropic had relied on a corpus of books pirated from so-called "shadow libraries," including the notorious site LibGen, and Alsup determined that the authors should still be able to bring Anthropic to trial in a class action over pirating their work. "Anthropic downloaded over seven million pirated copies of books, paid nothing, and kept these pirated copies in its library even after deciding it would not use them to train its AI (at all or ever again). Authors argue Anthropic should have paid for these pirated library copies. This order agrees," Alsup wrote in his summary judgment ruling. It's unclear how the literary world will respond to the terms of the settlement. Because this is an "opt-out" class action, authors who are eligible but dissatisfied with the terms will be able to request exclusion to file their own lawsuits. Notably, the plaintiffs filed a motion today to keep the "opt-out threshold" confidential, which means that the public will not have access to the exact number of class members who would need to opt out for the settlement to be terminated. This is not the end of Anthropic's copyright legal challenges. The company is also facing a lawsuit from a group of major record labels, including Universal Music Group, which alleges that the company used copyrighted lyrics to train its Claude chatbot. 
The plaintiffs are now attempting to amend their case to include allegations that Anthropic used the peer-to-peer file sharing service BitTorrent to illegally download songs, and their lawyers recently stated in court filings that they may file a new lawsuit about piracy if they are not permitted to amend the current complaint.

[7]

Judge puts Anthropic's $1.5 billion book piracy settlement on hold

Anthropic's $1.5 billion book piracy settlement has been put on pause after the federal judge overseeing the class action case raised concerns about the terms of the agreement. During a hearing this week, Judge William Alsup rejected the settlement over concerns that class action lawyers will create a deal behind closed doors that they will force "down the throats of authors," according to reports from Bloomberg Law and the Associated Press. Anthropic agreed to pay the landmark settlement last week, putting to rest a class action lawsuit from US authors that accused the AI company of training its models on hundreds of thousands of copyrighted books. Judge Alsup let the class action lawsuit move forward after ruling that Anthropic training its AI models on purchased books counts as fair use, but that it could be liable for training on illegally downloaded work. In addition to his concerns over authors being strong-armed into signing a deal, Alsup says he needs to review more information about the claims process outlined in the settlement. "I have an uneasy feeling about hangers-on with all this money on the table," Alsup said, as reported by Bloomberg Law. Under the settlement, authors and publishers would receive about $3,000 for covered works. As noted by AP, an attorney for the authors said there are around 465,000 books that would be covered by the settlement, but Judge Alsup asked for a solid number to ensure Anthropic doesn't get hit with other lawsuits "coming out of the woodwork." He added that class members will need to be given "very good notice" to make sure they're aware of the case. Maria Pallante, CEO of the Association of American Publishers, an industry group backing the authors' lawsuit, told AP that Alsup "demonstrated a lack of understanding of how the publishing industry works." Pallante said that "class actions are supposed to resolve cases, not create new disputes, and certainly not between the class members who were harmed in the first place." 
The authors' attorney, Justin Nelson, said in a statement to Bloomberg Law that the lawyers "care deeply that every single proper claim gets compensation." Judge Alsup will revisit the settlement during another hearing on September 25th. "We'll see if I can hold my nose and approve it," Alsup said, according to the AP.

[8]

Judge Halts Anthropic's $1.5 Billion Author Deal Over Transparency Concerns

Anthropic's $1.5 billion offer to settle a copyright lawsuit brought by authors has yet to be finalized. Judge William Alsup has denied the motion for preliminary approval of the deal after finding some important claim details missing. "The district judge is disappointed that counsel have left important questions to be answered in the future, including respecting the Works List, the Class List, the Claim Form, and, particularly for works with multiple claimants, the processes for notification... allocation, and dispute resolution," Alsup wrote in an order filed before Monday's hearing. As Bloomberg Law reports, Alsup is concerned that the attorneys will stop caring once the monetary relief is approved. Therefore, he has asked the authors' counsel to provide "very good notice" to the writers, give them the option to opt in or out, and ensure they know they can't go after Anthropic over the same issue again. In the class action filed last year, authors claimed Anthropic used pirated versions of their books, without permission or compensation, to train the company's AI models. Anthropic decided to settle the case last month, with the final amount revealed last week. According to Bloomberg Law, Alsup has given the involved parties until Sept. 15 to submit a full list of works eligible for the $1.5 billion payout. That could be around 465,000 works, with each yielding close to $3,000. In addition to the works list, attorneys have been asked to get the class list and claim form for authors approved by the court by Oct. 10. Only after that could the court grant preliminary approval of the settlement. At the hearing, Alsup also criticized the authors' counsel for assigning an "army" of lawyers to the case. The add-on lawyers won't get a piece of the settlement, and the attorneys' total payout will be decided based on the payout to class members, he added. Maria A. Pallante, president and CEO of the Association of American Publishers, says the court "demonstrated a lack of understanding of how the publishing industry works" and "seems to be envisioning a claims process that would be unworkable, and sees a world with collateral litigation between authors and publishers for years to come." A similar lawsuit alleging the use of pirated books for AI training was filed against Apple last week.

[9]

Anthropic to pay $1.5B over pirated books in Claude AI training -- payouts of roughly $3,000 per infringed work

A landmark settlement highlights the legal risks of using copyrighted materials without permission. Anthropic, the company behind Claude AI, has agreed to pay at least $1.5 billion to settle a class-action lawsuit brought by authors over the use of pirated books in training its large language models. The proposed settlement, filed September 5, comes after months of litigation that could change how AI companies acquire and manage data for model training. The class action was led by authors Andrea Bartz, Charles Graeber, and Kirk Wallace Johnson, and accused Anthropic of downloading hundreds of thousands of copyrighted books from torrent-based sources like Library Genesis, Pirate Library Mirror, and Books3. They claim that doing so allowed the company to build Claude's underlying dataset. In June, a federal judge had allowed the case to proceed on the narrow issue of unauthorized digital copying, setting the stage for a trial in December. Instead, Anthropic has agreed to a non-reversionary settlement fund starting at $1.5 billion, with payouts of approximately $3,000 per infringed work. The fund may grow if more titles are identified. Anthropic will also be required to delete the infringing data, though there is currently no indication that the court will force the company to delete or retrain its models -- a process known as model disgorgement -- under the current agreement. This marks the largest publicly disclosed AI copyright settlement to date. OpenAI has also settled with publishers in a separate matter, but the specific details of these deals are confidential. While Anthropic's settlement is not an admission that it has done anything wrong, the sheer scale of the payout sets a new benchmark for data liability in generative AI development. 
It's worth noting that this case doesn't challenge the broader legality of training AI on public or lawfully obtained content -- a separate issue is still working its way through the courts -- but it does highlight the legal risk and potential financial cost of using pirated material even if the intent is research and the content is later purchased. As Judge Alsup put it in his June ruling, "That Anthropic later bought a copy of a book it earlier stole off the internet will not absolve it of liability for the theft," adding that it might affect the extent of statutory damages owed to rights holders. If models trained on pirated data face lawsuits or potential forced retraining, developers may need to start over using clean, licensed datasets. That means redoing training runs that already consumed millions of GPU hours, lending a huge boost to compute demand. Nvidia's H100 and upcoming Blackwell GPUs, as well as AMD's MI300X and HBM3e providers, could all benefit as courts force labs to scramble and revalidate their models. That is just speculation for the moment, but it will be interesting to see how courts decide to rule on related matters in the future.

[10]

An AI startup has agreed to an AU$2.2 billion copyright settlement. But will Australian writers benefit?

Anthropic, an AI startup founded in 2021, has reached a groundbreaking US$1.5 billion settlement (AU$2.28 billion) in a class-action copyright lawsuit. The case was initiated in 2024 by novelist Andrea Bartz and non-fiction writers Charles Graeber and Kirk Wallace Johnson. If the settlement is approved by the presiding judge, the company will pay authors about US$3,000 for each of the estimated 500,000 books included in the agreement. It will destroy its copies of illegally downloaded books and refrain from using pirated books to train chatbots in the future. This is the largest copyright settlement in US history, establishing a crucial legal precedent for the evolving relationship between AI companies and content creators. It will have implications for numerous other copyright cases currently underway against AI companies OpenAI, Microsoft, Google, and most recently Apple. In June, Meta prevailed in a copyright case brought against it, though the ruling left open the possibility of other lawsuits. The settlement follows a landmark US ruling on AI development and copyright law, issued in June 2025, that separated legal AI training from illegal acquisition of content. Anthropic allegedly pirated over seven million books from two online "shadow libraries" in June 2021 and July 2022. The plaintiffs and Anthropic are expected to finalise a list of works to be compensated by September 15.

Cautious optimism

In Australia, the response to news of a potential settlement has been cautiously optimistic. Stuart Glover, head of policy at the Australian Publishers Association, told me: "We welcome these court-enforced steps towards accountability, but this settlement shows why AI companies must respect copyright and pay creators - not just see what they can get away with. And for the sake of Australian authors and publishers whose works have been unlawfully scraped without compensation under this action, it's a clear call for the Australian government to maintain copyright and mandate that AI companies pay for what they use." Lucy Hayward, CEO of the Australian Society of Authors, told me: "While all of the details are yet to be revealed, this settlement could represent a very welcome acknowledgement that AI companies cannot just steal authors' and artists' work to build their large language models." However, in the Anthropic case, authors will only be compensated if their publishers registered their work with the US Copyright Office within a certain timeframe. Hayward expressed concern about this, as the seven million works that are alleged to have been pirated were written by authors from around the world and "we suspect many international authors may miss out on settlement money." She has called on the Australian government to introduce new laws requiring tech companies to "pay ongoing compensation to creators where Australian books have been used to train models offshore".

Legal risks

In June, US judge William Alsup ruled that using books to train AI was not a violation of US copyright law. But he ruled Anthropic would still have to stand trial over its use of pirated copies to build its library of material. Judge Alsup has since criticised the settlement for its loopholes. He has scheduled another hearing for September 25. "We'll see if I can hold my nose and approve it," he said. If the settlement is not approved, Anthropic risks significantly greater financial repercussions. The trial is scheduled for December. If the company loses the case, US copyright law allows for statutory damages of up to $150,000 per infringed work in cases of wilful copyright infringement. 
William Long, a legal analyst at Wolters Kluwer, suggests potential damages in a trial could reach multiple billions of dollars, potentially jeopardising or even bankrupting the company. Anthropic recently secured US$13 billion in new funding at a valuation of US$183 billion. Keith Kupferschmid, president and CEO of the US-based Copyright Alliance, has argued that this is evidence "AI companies can afford to compensate copyright owners for their works without it undermining their ability to continue to innovate and compete". For Mary Rasenberger, CEO of the Authors Guild, the historic settlement is "an excellent result for authors, publishers, and rightsholders". Rasenberger expects "the settlement will lead to more licensing that gives author[s] both compensation and control over the use of their work by AI companies, as should be the case in a functioning free market society."

A step forward

While this particular settlement may offer little help to Australian writers and publishers whose works are not registered with the US Copyright Office, overall it is at least potentially good news for creators globally. It represents a step towards the establishment of a legitimate licensing scheme. Australian copyright law does not include a US-style "fair use" exception, which AI companies claim protects their training practices. There have been calls to change the law, with major AI players, including Google and Microsoft, lobbying the Australian government for copyright exemptions. The recent Productivity Commission interim report proposed a text and data mining exception to the Australian Copyright Act, which would allow AI training on copyrighted Australian work. The proposal faced strong opposition from the Australian Society of Authors and the publishing industry. As Arts Minister Tony Burke stated in August 2025, the government has "no plans, no intention, no appetite to be weakening" our copyright laws.
The Australian publishing industry is not entirely opposed to AI, but significant legal and ethical challenges remain. The Australian Publishers Association has advocated for government policies on AI that prioritise a clear ethical framework, transparency, appropriate incentives and protections for creators, and a balanced policy approach, so that "both AI development and cultural industries can flourish".
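A rough worked bound puts the statutory-damages figure in context. This is illustrative arithmetic only. It assumes every work drew the $150,000 maximum for wilful infringement, which a court is not required to award. It uses two work counts that appear in the coverage: the roughly seven million pirated books in Anthropic's library and the roughly 465,000 titles on the settlement's works list.

$$
7{,}000{,}000 \times \$150{,}000 = \$1{,}050{,}000{,}000{,}000 \approx \$1.05\ \text{trillion}
$$

$$
465{,}000 \times \$150{,}000 = \$69{,}750{,}000{,}000 \approx \$69.75\ \text{billion}
$$

Both numbers are ceilings, not predictions. They show why estimates of Anthropic's trial exposure ranged from billions of dollars to more than $1 trillion, against a negotiated fund of $1.5 billion.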

[11]

Anthropic to Pay $1.5 Billion to Settle Author Copyright Claims

By Shirin Ghaffary, Annelise Levy (Bloomberg Law) and Aruni Soni (Bloomberg Law) Anthropic PBC will pay $1.5 billion to resolve an authors' copyright lawsuit over the AI company's downloading of millions of pirated books, one of the largest settlements over artificial intelligence and intellectual property to date. A request for preliminary approval of the accord, involving one of the fastest-growing AI startups, was filed Friday with a San Francisco federal judge who had set the closely watched case for trial in December.

[12]

Anthropic coughs up $1.5B to authors whose work it stole

AI upstart Anthropic has agreed to create a $1.5 billion fund it will use to compensate authors whose works it used to train its models without seeking or securing permission. News of the settlement emerged late last week in a filing in the case brought by three authors - Andrea Bartz, Charles Graeber, and Kirk Wallace Johnson - who claimed that Anthropic illegally used their works. Anthropic admitted to having bought millions of physical books and then digitized them. The company also downloaded millions of pirated books from the notorious Library Genesis and Pirate Library Mirror troves of stolen material. The company nonetheless won part of the case, on the grounds that scanning books is fair use and that using them to create "transformative works" - the output of an LLM that doesn't necessarily include excerpts from the books - was also OK. But the decision also found Anthropic broke the law by knowingly ingesting pirated books. Plaintiffs intended to pursue court action over those pirated works, but the filing details a proposed settlement under which Anthropic will create a $1.5 billion fund that values each pirated book it used for training at $3,000. Anthropic also agreed to destroy the pirated works. In the filing, counsel observes that this is the largest copyright recovery claim ever to succeed in the USA and suggests it "will set a precedent of AI companies paying for their use of alleged pirated websites." This settlement is indeed significant given that several other major AI companies - among them Perplexity AI and OpenAI - face similar suits. It may also set a precedent that matters in Anthropic's dispute with Reddit over having scraped the forum site's content to feed into its training corpus. The filing asks the court to approve the settlement, a request judges rarely overrule.
"We're going to see a lot more of this," according to Daryl Plummer, a distinguished VP analyst at Gartner. Plummer predicted the AI industry will have to build "slush funds" to handle copyright and other legal claims. "Death by AI claims will rise one thousand percent," he told The Register, suggesting that as people turn to AI for advice and counsel, sometimes with disastrous outcomes, their loved ones will seek recompense. Anthropic appears not to have commented on the settlement. For what it's worth, it can cover the cost of the settlement with some of the $13 billion in funding it announced last week.

[13]

Anthropic Will Pay $1.5 Billion to Authors in Landmark AI Piracy Lawsuit

Anthropic will pay $1.5 billion to settle a lawsuit brought by a group of authors alleging that the AI company illegally pirated their copyrighted books to use in training its Claude AI models. The settlement was announced Aug. 29, as the parties in the lawsuit filed a motion with the 9th US Circuit Court of Appeals indicating they had reached an agreement. "This landmark settlement far surpasses any other known copyright recovery. It is the first of its kind in the AI era," Justin Nelson, lawyer for the authors, told CNET. "It will provide meaningful compensation for each class work and sets a precedent requiring AI companies to pay copyright owners. This settlement sends a powerful message to AI companies and creators alike that taking copyrighted works from these pirate websites is wrong." The settlement still needs to be approved by the court, which it could do at a hearing on Monday, Sept. 8. Authors in the class could receive approximately $3,000 per pirated work, according to their attorneys' estimates. They expect the case will include at least 500,000 works, with Anthropic paying an additional $3,000 for any materials added to the case. "In June, the District Court issued a landmark ruling on AI development and copyright law, finding that Anthropic's approach to training AI models constitutes fair use. Today's settlement, if approved, will resolve the plaintiffs' remaining legacy claims," Aparna Sridhar, Anthropic's deputy general counsel, told CNET. "We remain committed to developing safe AI systems that help people and organizations extend their capabilities, advance scientific discovery, and solve complex problems." This settlement is the latest update in a string of legal moves and rulings between the AI company and authors. 
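The attorneys' per-work estimates can be written as a simple formula. The sketch below assumes that $1.5 billion is a floor sized for 500,000 works, which matches the "at least" wording in the filing. It also assumes each work added beyond 500,000 adds another $3,000. The helper name `estimated_fund` is hypothetical. The sketch ignores attorneys' fees, interest, and how money is split between authors and publishers.

```python
# Approximate figures reported by the authors' attorneys.
BASE_FUND_USD = 1_500_000_000   # minimum settlement fund
BASE_WORKS = 500_000            # number of works the minimum fund is sized for
PER_WORK_USD = 3_000            # approximate payment per class work


def estimated_fund(num_works: int) -> int:
    """Hypothetical helper: $1.5B floor plus $3,000 per work beyond 500,000."""
    if num_works < 0:
        raise ValueError("num_works must be non-negative")
    extra_works = max(0, num_works - BASE_WORKS)
    return BASE_FUND_USD + PER_WORK_USD * extra_works


print(f"${estimated_fund(500_000):,}")  # $1,500,000,000
print(f"${estimated_fund(520_000):,}")  # $1,560,000,000
```

On this reading, a works list shorter than 500,000 titles would leave the fund at its $1.5 billion floor. The roughly 465,000 titles cited at the September hearing fall into that case.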
Earlier this summer, US Senior District Judge William Alsup ruled Anthropic's use of the copyrighted materials was justifiable as fair use -- a concept in copyright law that allows people to use copyrighted content without the rights holder's permission for specific purposes, like education. The ruling was the first time a court sided with an AI company and said its use of copyrighted material qualified as fair use, though Alsup said this may not always be true in future cases. Two days after Anthropic's victory, Meta won a similar case under fair use. Alsup's ruling also revealed that Anthropic systematically acquired and destroyed thousands of used books to scan them into a private, digitized library for AI training, a practice he found to be fair use. It was the separate claim over the pirated copies Anthropic downloaded for that library that was set for trial, and that claim is what Anthropic has now decided to settle out of court. In class action suits, the terms of a settlement need to be reviewed and approved by the court. The settlement means both groups "avoid the cost, delay and uncertainty associated with further litigating the case," Christian Mammen, an intellectual property lawyer and San Francisco office managing partner at Womble Bond Dickinson, told CNET. "Anthropic can move forward with its business without being the first major AI platform to have one of these copyright cases go to trial," Mammen said. "And the plaintiffs can likely receive the benefit of any financial or non-financial settlement terms sooner. If the case were litigated through trial and appeal, it could last another two years or more." Copyright cases like these highlight the tension between creators and AI developers. AI companies have been pushing hard for fair use exceptions as they gobble up huge swaths of data to train their models and don't want to pay or wait to license them.
Without legislation guiding how companies can develop and train AI, court cases like these have become important in shaping the future of the products people use daily. "The terms of this settlement will likely become a data point or benchmark for future negotiations and, possibly, settlements in other AI copyright cases," said Mammen. Every case is different and needs to be weighed on its merits, he added, but it still could be influential. There are still big questions about how copyright law should be applied in the age of AI. Just like how we saw Alsup's Anthropic analysis referenced in Meta's case, each case helps build precedent that guides the legal guardrails and green lights around this technology. The settlement will bring this specific case to an end, but it doesn't give any clarity to the underlying legal dilemmas that AI raises. "This remaining uncertainty in the law could open the door to a further round of litigation," Mammen said, "involving different plaintiffs and different defendants, with similar legal issues but different facts."

[14]

Anthropic's $1.5 billion copyright settlement faces judge's scrutiny

Sept 9 (Reuters) - A federal judge in San Francisco has declined, for now, to approve a landmark $1.5 billion settlement announced Friday between artificial intelligence company Anthropic and a class of authors suing it for copyright infringement. U.S. District Judge William Alsup during a hearing on Monday ordered both sides to provide more details, a court filing said. The judge in a Sunday order had said he was "disappointed" the parties had left important questions about the settlement unanswered. The settlement fund amounts to $3,000 for each of 500,000 downloaded books. The parties said this could grow if more works are identified. Alsup ordered the parties on Monday to provide fuller lists of the works and authors affected by the settlement by September 15 and other clarifications by September 22. Leaders of the Association of American Publishers and the Authors Guild attended the Monday hearing. AAP's president, Maria Pallante, said in a statement that Alsup had "demonstrated a lack of understanding of how the publishing industry works." "It's critical that the number of works included in the settlement is complete, and the Court's reluctance to give the parties time to do that -- without any explanation -- is troubling," Pallante said. "Similarly, the Court seems to be envisioning an administratively challenging claims process that would be unworkable for the class members, and lead authors and publishers into collateral litigation for years to come." Authors Guild CEO Mary Rasenberger said in a separate statement that she was "shocked by the court's offhand suggestion" during the hearing that the groups were "working behind the scenes in ways that could pressure authors, when that is precisely the opposite of our proposed role as informational advisors" representing author and publisher interests in the settlement.
Author Andi Bartz, one of the writers suing Anthropic, said in a statement on Tuesday that the settlement was "an initial, corrective step in a critical battle" against AI companies. Another plaintiff, Kirk Johnson, said the settlement marked "the beginning of a fight on behalf of humans that don't believe we have to sacrifice everything on the altar of AI." Spokespeople for Anthropic did not immediately respond to a request for comment on Tuesday. Bartz, Johnson and writer Charles Graeber filed the class action against Anthropic last year. They argued that the company, which is backed by Amazon (AMZN.O) and Alphabet (GOOGL.O), unlawfully used millions of pirated books to teach its AI assistant Claude to respond to human prompts. The proposed deal announced Friday marked the first settlement in a string of lawsuits targeting tech companies including OpenAI, Microsoft (MSFT.O) and Meta Platforms (META.O) over their alleged misuse of copyrighted material to train generative AI systems. Reporting by Blake Brittain in Washington. Blake Brittain reports on intellectual property law, including patents, trademarks, copyrights and trade secrets, for Reuters Legal. He has previously written for Bloomberg Law and Thomson Reuters Practical Law and practiced as an attorney.

[15]

AI start-up Anthropic settles landmark copyright suit for $1.5bn

AI start-up Anthropic has agreed to pay $1.5bn to settle a copyright lawsuit over its use of pirated texts, setting a precedent for tech companies facing intellectual property cases from authors and publishers. The settlement, which must be approved by the San Francisco federal judge overseeing the case, would be "the largest publicly reported copyright recovery in history", according to a court filing on Friday. The class action suit was brought by authors who claimed Anthropic had downloaded 465,000 books and other texts from "pirated websites" including Library Genesis and Pirate Library Mirror, which it then used to train its large language models. Failure to reach an agreement would have led to a trial, with the prospect of damages of up to $1tn. That would have bankrupted Anthropic, a four-year-old start-up backed by Amazon and Google that was recently valued at $170bn. The case is among several facing artificial intelligence companies, including OpenAI and Meta, alleging they have improperly used copyrighted works to train their models. The results will help determine how authors are compensated for the use of their works and could have significant ramifications for how AI companies train their models and the costs of developing them. Mary Rasenberger, chief executive of the Authors Guild, said Friday's settlement sent a "strong message to the AI industry that there are serious consequences when they pirate authors' works to train their AI, robbing those least able to afford it". Anthropic and other AI companies have claimed that training models on copyrighted books is fair use, arguing that their models transform the original work into something with a new meaning. In June, the California district court ruled that Anthropic's use of some copyrighted works in such a way was fair. But it determined that storing pirated works was "inherently, irredeemably infringing", teeing up Friday's settlement. 
Friday's settlement also means Anthropic will have to destroy the datasets it had downloaded from Library Genesis and Pirate Library Mirror. "In June, the district court issued a landmark ruling on AI development and copyright law, finding that Anthropic's approach to training AI models constitutes fair use. Today's settlement, if approved, will resolve the plaintiffs' remaining legacy claims," said Anthropic's deputy general counsel Aparna Sridhar in a statement. "We remain committed to developing safe AI systems that help people and organisations extend their capabilities, advance scientific discovery and solve complex problems," she added.

[16]

Claude Maker Anthropic Agrees to Pay $1.5 Billion to Authors

Anthropic, the company behind chatbot Claude, has agreed to pay "at least" $1.5 billion to settle a class-action lawsuit by authors that accused the AI company of downloading millions of pirated books to train its AI. Amid numerous lawsuits accusing the world's largest AI firms of improperly using copyrighted data for training -- including ChatGPT-maker OpenAI and AI search engine Perplexity -- this represents one of the largest AI copyright settlements yet. The settlement works out to close to $3,000 for each of the 500,000 works mentioned in the class action. According to the filing, the settlement, if approved, "will be the largest publicly reported copyright recovery in history, larger than any other copyright class-action settlement or any individual copyright case litigated to final judgment." In addition to providing monetary compensation, the Amazon-backed AI startup will be required to destroy all remaining copies of the pirated books within 30 days of the judgment. Though Anthropic has agreed to the settlement terms, the court still needs to approve them. The final decision may take until 2026, according to the filing. In the case filed last year, the plaintiffs had alleged that Anthropic committed large-scale copyright infringement by downloading and commercially exploiting books that it obtained from pirated datasets online, including an online library called Books3. Mary Rasenberger, CEO of the Authors Guild and Authors Guild Foundation, praised the results for "authors, publishers, and rightsholders generally," stating that the settlement is a "strong message to the AI industry that there are serious consequences when they pirate authors' works to train their AI, robbing those least able to afford it." OpenAI is currently facing its own class-action lawsuit from authors and novelists, including Game of Thrones author George R.R. Martin, who accuse it of "systematic theft on a mass scale" for using their written works to train its systems. Disclosure: Ziff Davis, PCMag's parent company, filed a lawsuit against OpenAI in April 2025, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.

[17]

Judge skewers $1.5 billion Anthropic settlement with authors in pirated books case over AI training

A federal judge on Monday skewered a $1.5 billion settlement between artificial intelligence company Anthropic and authors who allege nearly half a million books had been illegally pirated to train chatbots, raising the specter that the case could still end up going to trial. After spending nearly an hour mostly lambasting a settlement that he believes is full of pitfalls, U.S. District Judge William Alsup scheduled another hearing in San Francisco on September 25 to review whether his concerns had been addressed. "We'll see if I can hold my nose and approve it" then, Alsup said before adjourning Monday's hearing. The judge's misgivings emerged just a few days after Anthropic and attorneys who filed the class-action lawsuit announced a $1.5 billion settlement that is designed to resolve the pirating claims and avert a trial that had been scheduled to begin in December. Alsup had dealt the case a mixed ruling in June, finding that training AI chatbots on copyrighted books wasn't illegal but that Anthropic wrongfully acquired millions of books through pirate websites to help improve its Claude chatbot. The proposed settlement would pay authors and publishers about $3,000 for each of the books covered by the agreement. Justin Nelson, an attorney for the authors, told Alsup that about 465,000 books are on the list of works pirated by Anthropic. The judge said he needed more ironclad assurances that number won't swell to ensure the company doesn't get blindsided by more lawsuits "coming out of the woodwork." The judge set a September 15 deadline for a "drop-dead list" of the total books that were pirated. Alsup's main concern centered on how the claims process will be handled in an effort to ensure everyone eligible knows about it so the authors don't "get the shaft." He set a September 22 deadline for submitting a claims form for him to review before the Sept. 25 hearing to review the settlement again.
The judge also raised worries about two big groups connected to the case -- the Authors Guild and Association of American Publishers -- working "behind the scenes" in ways that could pressure some authors to accept the settlement without fully understanding it. Both Authors Guild CEO Mary Rasenberger and Association of American Publishers CEO Maria Pallante attended Monday's hearing, but didn't speak. The trio of authors -- thriller novelist Andrea Bartz and nonfiction writers Charles Graeber and Kirk Wallace Johnson -- who sued last year also sat in the front row of the court gallery, but didn't address Alsup. Before the hearing Johnson, author of "The Feather Thief" and other books, described the settlement as the "beginning of a fight on behalf of humans that don't believe we have to sacrifice everything on the altar of AI." Nelson, the lawyer for the authors, sought to assure Alsup that he and other lawyers in the case were confident the money will be fairly distributed because the case has been widely covered by the media, with some stories landing on the front pages of major newspapers. "This is not an under-the-radar warranty case," Nelson said. Alsup made it clear, though, that he was leery about the settlement and warned he may decide to let the case go to trial. "I have an uneasy feeling about all the hangers on in the shadows," the judge said.

[18]

Judge reviews $1.5B Anthropic settlement proposal with authors over pirated books for AI training

SAN FRANCISCO (AP) -- A federal judge has begun reviewing a landmark class-action settlement agreement between the artificial intelligence company Anthropic and book authors who say the company took pirated copies of their works to train its chatbot. The company has agreed to pay authors and publishers $1.5 billion, amounting to about $3,000 for each of an estimated 500,000 books covered by the settlement. But U.S. District Judge William Alsup has raised some questions about the details of the agreement and asked representatives of author and publisher groups to appear in court Monday to discuss. A trio of authors -- thriller novelist Andrea Bartz and nonfiction writers Charles Graeber and Kirk Wallace Johnson -- sued last year and now represent a broader group of writers and publishers whose books Anthropic downloaded to train its chatbot Claude. Johnson, author of "The Feather Thief" and other books, said he planned to attend the hearing on Monday and described the settlement as the "beginning of a fight on behalf of humans that don't believe we have to sacrifice everything on the altar of AI." Alsup dealt the case a mixed ruling in June, finding that training AI chatbots on copyrighted books wasn't illegal but that Anthropic wrongfully acquired millions of books through pirate websites. Had Anthropic and the authors not agreed to settle, the case would have gone to trial in December.

[19]

Anthropic will pay a record-breaking $1.5 billion to settle copyright lawsuit with authors

Writers involved in the case will reportedly receive $3,000 per work. Anthropic will pay a record-breaking $1.5 billion to settle a class-action piracy lawsuit brought by authors and publishers. The settlement is the largest-ever payout for a copyright case in the United States. The AI company behind the Claude chatbot reached a settlement in the case last week, but terms of the agreement weren't disclosed at the time. Now, The New York Times reports that authors of the roughly 500,000 works involved in the case will get $3,000 per work. "In June, the District Court issued a landmark ruling on AI development and copyright law, finding that Anthropic's approach to training AI models constitutes fair use," Anthropic's Deputy General Counsel Aparna Sridhar said in a statement. "Today's settlement, if approved, will resolve the plaintiffs' remaining legacy claims. We remain committed to developing safe AI systems that help people and organizations extend their capabilities, advance scientific discovery, and solve complex problems."

[20]

AI firm Anthropic agrees to pay authors $1.5bn for pirating work

Anthropic said on Friday that the settlement would "resolve the plaintiffs' remaining legacy claims." The settlement comes as other big tech companies including ChatGPT-maker OpenAI, Microsoft, and Instagram-parent Meta face lawsuits over similar alleged copyright violations. Anthropic, with its Claude chatbot, has long pitched itself as the ethical alternative among its competitors. "We remain committed to developing safe AI systems that help people and organisations extend their capabilities, advance scientific discovery, and solve complex problems," said Aparna Sridhar, Deputy General Counsel at Anthropic which is backed by both Amazon and Google-parent Alphabet. The lawsuit was filed against Anthropic last year by best-selling mystery thriller writer Andrea Bartz, whose novels include We Were Never Here, along with The Good Nurse author Charles Graeber and The Feather Thief author Kirk Wallace Johnson. They accused the company of stealing their work to train its Claude AI chatbot in order to build a multi-billion dollar business. The company held more than seven million pirated books in a central library, according to Judge Alsup's June decision, and faced up to $150,000 in damages per copyrighted work. His ruling was among the first to weigh in on how Large Language Models (LLMs) can legitimately learn from existing material. It found that Anthropic's use of the authors' books was "exceedingly transformative" and therefore allowed under US law. But he rejected Anthropic's request to dismiss the case. Anthropic was set to stand trial in December over its use of pirated copies to build its library of material. Plaintiffs' lawyers called the settlement announced Friday "the first of its kind in the AI era." "It will provide meaningful compensation for each class work and sets a precedent requiring AI companies to pay copyright owners," said lawyer Justin Nelson representing the authors.
"This settlement sends a powerful message to AI companies and creators alike that taking copyrighted works from these pirate websites is wrong." The settlement could encourage more cooperation between AI developers and creators, according to Alex Yang, Professor of Management Science and Operations at London Business School. "You need that fresh training data from human beings," Mr Yang said. "If you want to grant more copyright to AI-created content, you must also strengthen mechanisms that compensate humans for their original contributions."

[21]

Anthropic Agrees to $1.5 Billion Settlement for Downloading Pirated Books to Train AI

Authors sued after it was revealed Anthropic downloaded the books from Library Genesis. Anthropic has agreed to pay $1.5 billion to settle a lawsuit brought by authors and publishers over its use of millions of copyrighted books to train the models for its AI chatbot Claude, according to a legal filing posted online. A federal judge found in June that Anthropic's use of 7 million pirated books was protected under fair use but that holding the digital works in a "central library" violated copyright law. The judge ruled that executives at the company knew they were downloading pirated works, and a trial was scheduled for December. The settlement, which was presented to a federal judge on Friday, still needs final approval but would pay $3,000 per book to hundreds of thousands of authors, according to the New York Times. The $1.5 billion settlement would be the largest payout in the history of U.S. copyright law, though the amount paid per work has often been higher. For example, in 2012, a woman in Minnesota paid about $9,000 per song downloaded, a figure brought down after she was initially ordered to pay over $60,000 per song. In a statement to Gizmodo on Friday, Anthropic touted the earlier ruling from June that it was engaging in fair use by training models with millions of books. “In June, the District Court issued a landmark ruling on AI development and copyright law, finding that Anthropic's approach to training AI models constitutes fair use," Aparna Sridhar, deputy general counsel at Anthropic, said in a statement by email. "Today's settlement, if approved, will resolve the plaintiffs' remaining legacy claims. We remain committed to developing safe AI systems that help people and organizations extend their capabilities, advance scientific discovery, and solve complex problems," Sridhar continued. According to the legal filing, Anthropic says the payments will go out in four tranches tied to court-approved milestones. 
The first payment would be $300 million within five days after the court's preliminary approval of the settlement, and another $300 million within five days of the final approval order. Then $450 million would be due, with interest, within 12 months of the preliminary order. And finally $450 million within the year after that. Anthropic, which was recently valued at $183 billion, is still facing lawsuits from companies like Reddit, which struck a deal in early 2024 to let Google train its AI models on the platform's content. And authors still have active lawsuits against the other big tech firms like OpenAI, Microsoft, and Meta. The ruling from June explained that Anthropic's training of AI models with copyrighted books would be considered fair use under U.S. copyright law because theoretically someone could read "all the modern-day classics" and emulate them, which would be protected: "...not reproduced to the public a given work's creative elements, nor even one author's identifiable expressive style... Yes, Claude has outputted grammar, composition, and style that the underlying LLM distilled from thousands of works. But if someone were to read all the modern-day classics because of their exceptional expression, memorize them, and then emulate a blend of their best writing, would that violate the Copyright Act? Of course not." "Like any reader aspiring to be a writer, Anthropic's LLMs trained upon works not to race ahead and replicate or supplant them -- but to turn a hard corner and create something different," the ruling said. Under this legal theory, all the company needed to do was buy every book it pirated to lawfully train its models, something that certainly costs less than $3,000 per book. But as the New York Times notes, this settlement won't set any legal precedent that could determine future cases because it isn't going to trial.
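The four-tranche schedule can be checked with a few lines of arithmetic. This sketch uses only the principal amounts reported from the filing. The third and fourth tranches accrue interest, which is not modeled here. The milestone labels paraphrase the coverage rather than quote the filing.

```python
# Principal amounts (USD) of the four reported tranches; interest on the
# third and fourth tranches is deliberately left out of this sketch.
TRANCHES = [
    ("within 5 days of preliminary approval", 300_000_000),
    ("within 5 days of the final approval order", 300_000_000),
    ("within 12 months of preliminary approval (plus interest)", 450_000_000),
    ("within the following year (plus interest)", 450_000_000),
]


def cumulative_schedule(tranches):
    """Return (milestone, amount, running_total) for each tranche in order."""
    running_total = 0
    schedule = []
    for milestone, amount in tranches:
        running_total += amount
        schedule.append((milestone, amount, running_total))
    return schedule


for milestone, amount, running_total in cumulative_schedule(TRANCHES):
    print(f"{milestone}: ${amount:,} (cumulative ${running_total:,})")
```

The running total reaches $600 million once the court grants final approval. It reaches the full $1.5 billion only after the fourth tranche, roughly two years after preliminary approval.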

[22]

Anthropic agrees to pay $1.5 billion to settle author class action

Sept 5 (Reuters) - Anthropic told a San Francisco federal judge on Friday that it has agreed to pay $1.5 billion to settle a class-action lawsuit from a group of authors who accused the artificial intelligence company of using their books to train its AI chatbot Claude without permission. Anthropic and the plaintiffs in a court filing asked U.S. District Judge William Alsup to approve the settlement, after announcing the agreement in August without disclosing the terms or amount. "If approved, this landmark settlement will be the largest publicly reported copyright recovery in history, larger than any other copyright class action settlement or any individual copyright case litigated to final judgment," the plaintiffs said in the filing. The proposed deal marks the first settlement in a string of lawsuits against tech companies including OpenAI, Microsoft (MSFT.O) and Meta Platforms (META.O) over their use of copyrighted material to train generative AI systems. Anthropic as part of the settlement said it will destroy downloaded copies of books the authors accused it of pirating, and under the deal it could still face infringement claims related to material produced by the company's AI models. In a statement, Anthropic said the company is "committed to developing safe AI systems that help people and organizations extend their capabilities, advance scientific discovery, and solve complex problems." The agreement does not include an admission of liability. Writers Andrea Bartz, Charles Graeber and Kirk Wallace Johnson filed the class action against Anthropic last year. They argued that the company, which is backed by Amazon (AMZN.O) and Alphabet (GOOGL.O), unlawfully used millions of pirated books to teach its AI assistant Claude to respond to human prompts.
The writers' allegations echoed dozens of other lawsuits brought by authors, news outlets, visual artists and others who say that tech companies stole their work to use in AI training. The companies have argued their systems make fair use of copyrighted material to create new, transformative content. Alsup ruled in June that Anthropic made fair use of the authors' work to train Claude, but found that the company violated their rights by saving more than 7 million pirated books to a "central library" that would not necessarily be used for that purpose. A trial was scheduled to begin in December to determine how much Anthropic owed for the alleged piracy, with potential damages ranging into the hundreds of billions of dollars. The pivotal fair-use question is still being debated in other AI copyright cases. Another San Francisco judge hearing a similar ongoing lawsuit against Meta ruled shortly after Alsup's decision that using copyrighted work without permission to train AI would be unlawful in "many circumstances." Reporting by Blake Brittain and Mike Scarcella in Washington; Editing by David Bario, Lisa Shumaker and Matthew Lewis

[23]

Anthropic agrees to pay $1.5 billion to settle authors' copyright lawsuit

If Anthropic's settlement is approved, it will be the largest publicly reported copyright recovery in history, the filing said. Anthropic has agreed to pay at least $1.5 billion to settle a class action lawsuit with a group of authors, who claimed the artificial intelligence startup had illegally accessed their books. The company will pay roughly $3,000 per book plus interest, and agreed to destroy the datasets containing the allegedly pirated material, according to a filing on Friday. The lawsuit against Anthropic has been closely watched by AI startups and media companies that have been trying to determine what copyright infringement means in the AI era. "This settlement sends a powerful message to AI companies and creators alike that taking copyrighted works from these pirate websites is wrong," Justin Nelson, the attorney for the plaintiffs, told CNBC in a statement. Anthropic didn't immediately respond to CNBC's request for comment. The lawsuit, filed in the U.S. District Court for the Northern District of California, was brought last year by authors Andrea Bartz, Charles Graeber and Kirk Wallace Johnson. The suit alleged that Anthropic had carried out "largescale copyright infringement by downloading and commercially exploiting books that it obtained from allegedly pirated datasets," the filing said. In June, a judge ruled that Anthropic's use of books to train its AI models was "fair use," but ordered a trial to assess whether the company infringed on copyright by obtaining works from the databases Library Genesis and Pirate Library Mirror. The case was slated to proceed to trial in December, according to Friday's filing. Earlier this week, Anthropic said it closed a $13 billion funding round that valued the company at $183 billion. The financing was led by Iconiq, Fidelity Management and Lightspeed Venture Partners.

[24]

Anthropic to pay authors $1.5 billion to settle lawsuit over pirated chatbot training material

NEW YORK (AP) -- Artificial intelligence company Anthropic has agreed to pay $1.5 billion to settle a class-action lawsuit by book authors who say the company took pirated copies of their works to train its chatbot. The landmark settlement, if approved by a judge as soon as Monday, could mark a turning point in legal battles between AI companies and the writers, visual artists and other creative professionals who accuse them of copyright infringement. The company has agreed to pay authors about $3,000 for each of an estimated 500,000 books covered by the settlement. "As best as we can tell, it's the largest copyright recovery ever," said Justin Nelson, a lawyer for the authors. "It is the first of its kind in the AI era." A trio of authors -- thriller novelist Andrea Bartz and nonfiction writers Charles Graeber and Kirk Wallace Johnson -- sued last year and now represent a broader group of writers and publishers whose books Anthropic downloaded to train its chatbot Claude. A federal judge dealt the case a mixed ruling in June, finding that training AI chatbots on copyrighted books wasn't illegal but that Anthropic wrongfully acquired millions of books through pirate websites. If Anthropic had not settled, experts say losing the case after a scheduled December trial could have cost the San Francisco-based company even more money. "We were looking at a strong possibility of multiple billions of dollars, enough to potentially cripple or even put Anthropic out of business," said William Long, a legal analyst for Wolters Kluwer. U.S. District Judge William Alsup of San Francisco has scheduled a Monday hearing to review the settlement terms. Books are known to be important sources of data -- in essence, billions of words carefully strung together -- that are needed to build the AI large language models behind chatbots like Anthropic's Claude and its chief rival, OpenAI's ChatGPT. 
Alsup's June ruling found that Anthropic had downloaded more than 7 million digitized books that it "knew had been pirated." It started with nearly 200,000 from an online library called Books3, assembled by AI researchers outside of OpenAI to match the vast collections on which ChatGPT was trained. Debut thriller novel "The Lost Night" by Bartz, a lead plaintiff in the case, was among those found in the Books3 dataset. Anthropic later took at least 5 million copies from the pirate website Library Genesis, or LibGen, and at least 2 million copies from the Pirate Library Mirror, Alsup wrote. The Authors Guild told its thousands of members last month that it expected "damages will be minimally $750 per work and could be much higher" if Anthropic was found at trial to have willfully infringed their copyrights. The settlement's higher award -- approximately $3,000 per work -- likely reflects a smaller pool of affected books, after taking out duplicates and those without copyright. On Friday, Mary Rasenberger, CEO of the Authors Guild, called the settlement "an excellent result for authors, publishers, and rightsholders generally, sending a strong message to the AI industry that there are serious consequences when they pirate authors' works to train their AI, robbing those least able to afford it." The Danish Rights Alliance, which successfully fought to take down one of those shadow libraries, said Friday that the settlement would be of little help to European writers and publishers whose works aren't registered with the U.S. Copyright Office. "On the one hand, it's comforting to see that compiling AI training datasets by downloading millions of books from known illegal file-sharing sites comes at a price," said Thomas Heldrup, the group's head of content protection and enforcement. On the other hand, Heldrup said it fits a tech industry playbook to grow a business first and later pay a relatively small fine, compared to the size of the business, for breaking the rules. 
"It is my understanding that these companies see a settlement like the Anthropic one as a price of conducting business in a fiercely competitive space," Heldrup said. The privately held Anthropic, founded by ex-OpenAI leaders in 2021, said Tuesday that it had raised another $13 billion in investments, putting its value at $183 billion. Anthropic also said it expects to make $5 billion in sales this year, but, like OpenAI and many other AI startups, it has never reported making a profit, relying instead on investors to back the high costs of developing AI technology for the expectation of future payoffs.

[25]

Anthropic settles with authors in first-of-its-kind AI copyright infringement lawsuit