Anthropic's Claude AI Gains Memory and Incognito Features, Enhancing Workplace Productivity

5 Sources

[1]

Anthropic's Claude AI can now automatically 'remember' past chats

Anthropic will now let its Claude AI chatbot "remember" the details of previous conversations without prompting. The feature is only rolling out for Team and Enterprise users for now, allowing Claude to automatically incorporate someone's preferences, the context of the project they're working on, and their main priorities into each of its responses. Anthropic rolled out the ability for paid users to prompt Claude to remember past chats last month, but now the chatbot can pull up these details without Team and Enterprise users having to ask. Claude's memory also carries over to projects, a feature that lets Pro and Teams generate things like diagrams, website designs, graphics, and more, based on files they upload to the chatbot. It seems to be particularly focused on work-related details, like a "team's processes" and "client needs." Anthropic notes that memory is "fully optional." Anthropic says users can view and edit the memory Claude has stored from their settings menu. "Based on what you tell Claude to focus on or to ignore, Claude will adjust the memories it references," Anthropic says. Both OpenAI and Google have already launched cross-chat memories for their chatbots. Last month, a report from The New York Times linked ChatGPT's rollout of cross-chat memories to an increase in reports of "delusional" AI chats. Along with memories, Anthropic is rolling out incognito chats for all users, which Claude won't save to its chat history or refer to in future chats. Google similarly rolled out Private Chats for Gemini in August.

[2]

Anthropic (finally) rolls out Memory feature -- here's who can get it

Anthropic has introduced a major upgrade for Claude. You now get the much-anticipated Memory feature -- similar to ChatGPT's memory -- which is now available for Team and Enterprise customers. But, with so many rival chatbots already offering the important feature that makes the chatbot "remember," I have a feeling free users might be seeing it soon. Claude Team and Enterprise users will now automatically have their settings retained, which means the chatbot will remember everything from project details and preferences to the user's name. The feature is optional and can be fully controlled via settings. Users who want to view, edit or disable what the chatbot remembers can easily do so. Going completely "incognito" (similar to ChatGPT's Temporary chat) is also still an option. This much-anticipated memory feature comes at a cost. It's not even in Max yet, which is $100/month. The next cheapest tier is Pro, which is $17/month. Yet, I still think free users might see it sooner. Here are four reasons why I think this feature won't stay limited to paid tier users for long. Anthropic's competitors already offer memory for free. Everyone from OpenAI to Google Gemini and other top chatbots has some form of memory-like feature across more tiers. Free, Pro, or Max users often complain about having to constantly re-establish context. As memory becomes expected rather than exceptional, free users will push for access. The recently launched Max plan (April 2025) reinforces the idea that Anthropic is segmenting features by usage and need. In my mind, that means it's only a matter of time until features initially reserved for Enterprise trickle down, even if only partially, to lower tiers, especially if the infrastructure already supports it. Memory may be locked behind paid plans right now, but Anthropic made Incognito Chat available for everyone.
That shows the company believes features that touch privacy and memory are viable across tiers, even for non-paying users. In other words, with the appropriate controls, if Anthropic is confident in its privacy-first rollout, Memory is a natural next candidate for all users. Here's the thing. Anthropic's Memory is project-scoped, and Memory summaries are editable. Meaning, you can determine what things the chatbot remembers. These features suggest a solid groundwork for broader deployment. They give users great control, help set boundaries between projects and general use, and support safe scaling. Once internal performance, cost and privacy tests pass, expanding access becomes much more feasible. For all tiers to get the Memory option, Anthropic will need to ensure privacy controls are airtight so free-tier users feel safe enabling it. Other factors include performance costs (storage, retrieval, context management), which will need to be optimized so Memory for lower subscription levels doesn't degrade service. When Claude's Memory feature is rolled out for the free tier (I say "when" because I'm that confident), the strategy might start with limited Memory length or project-only memory for free/Max users before opening the full feature. I also imagine there will be clear opt-in settings, summaries and UI cues, which are essential for users to know what memory is active and how to manage, view, or delete it. Right now, Memory is a paid teams feature, but everything Anthropic is doing suggests that this isn't a permanent restriction. As user expectations rise and AI assistants become more central to both work and life, memory seems to me like a standard feature, rather than an extra. If you use Claude Pro or Free, keep an eye out -- this feature might be closer than it seems. Of course, when it does roll out, I'll be among the first to share the news.

[3]

You have to pay Claude to remember you, but the AI will forget your conversations for free

The new features arrive with Claude's newly upgraded memory system for subscribers to Claude Max, Team, and Enterprise. If you enjoy using Anthropic's Claude AI chatbot but don't really like the idea of your conversations lingering forever in the cloud, you're in luck. Claude can now go Incognito, meaning any interaction will be private and unsaved. You won't see it in your history or when you open the app. In an industry where AI privacy often comes with a monthly price tag, Anthropic's decision speaks to how explosively popular Incognito mode is among those leery of how much personal information digital tools absorb to be used at the whim of massive tech companies. Claude now offers this kind of ephemeral, memory-free mode to every user on every subscription tier, including the free level. Just click the little ghost icon when starting a new chat, and it's activated. The black border and label confirm your chat is incognito. When you close the window, it's gone. No history. No memory. No trace aside from a temporary 30-day retention period for safety. As with web browsers, Incognito mode is great if you want access to a digital toolkit without everything you look at being a potential news story. Maybe you are embarrassed by your personal, speculative, or just plain weird question. Now you can ask about it without the fear that Claude's going to bring it up later or incorporate it into a future response. It's not just about hiding embarrassing questions. It's about giving users a mental sandbox: a space to think out loud, test ideas, or learn something new without it becoming part of the chatbot's long-term memory. That long-term memory has just started rolling out now for Claude. Unlike Incognito mode, though, the memory features are only for Team and Enterprise subscribers at the moment.
Opting into the memory features allows Claude to recall context from conversations, remember previous projects in Projects Mode, store notes about your work preferences, and even help you pick up where you left off. Each project's memory is isolated, which means your work chats won't bleed into your personal writing. But here's where it gets interesting: incognito mode and memory don't compete. They complement each other. Use incognito when you want a clean slate, free from influence or history. Use memory when you want Claude to be a continuity machine, helping you carry long-term threads across chats and tasks. And if you're the kind of person who changes your mind a lot about what you want remembered, Claude's approach is refreshingly respectful. Nothing gets saved unless you opt in. And if you don't want memory at all? You don't have to use it. It also sets Claude apart from some of its biggest rivals. While OpenAI's ChatGPT and Google Gemini both offer their own versions of memory and private chats, they don't make those distinctions quite as clear or customizable. Claude's implementation feels unusually transparent thanks to its prominent labels and icons. Going without memory is appealing, but it also seems to negate some of the possibilities of an AI chatbot. Incognito chats are their own bubble and can't be converted into regular ones after the fact, so if you forget to copy something important before closing the window, it's gone. You also can't use incognito mode inside Claude's "Projects" feature. Still, the broader implication that people want to at least have the option for privacy in their AI chatbot conversations is obvious. Incognito mode lowers the barrier to entry for people who are curious about AI but wary of leaving a data trail. And, oddly enough, an AI that can also forget things or at least imitate the experience seems a lot more human than one with total recall.

[4]

Anthropic adds memory, incognito mode to Claude Team and Enterprise, including portability to other LLMs

San Francisco-based AI startup Anthropic has announced a new set of productivity-focused features for its Claude AI platform, bringing memory capabilities to teams using its Team ($30/$150 per person per month for standard or premium) and Enterprise plans (variable pricing). Starting today, users can enable Claude to remember project details, team preferences, and work processes -- aiming to reduce repetitive context-setting and streamline complex collaboration across chats. They can also download their memories on a project-by-project basis and move them to other chatbots such as OpenAI's ChatGPT and Google's Gemini, according to the company's documentation. While memory imports are currently experimental, the feature represents a step toward interoperability among AI systems. However, Claude prioritizes work-related context, so imported personal details that are unrelated to professional use may not be retained. To get started, users can enable memory in settings and choose to generate memory from past conversations. Claude can then respond to queries such as "what were we working on last week?" using saved memory and chat history.

Memory designed for workplaces

This new memory feature is designed specifically for professional settings. Claude can now retain information about ongoing projects, client needs, and team workflows. The system is structured around project-based memory, meaning users can maintain separate memory contexts for distinct initiatives. For example, a product team planning a launch can keep that context siloed from client service discussions or internal operations. Anthropic says this helps maintain boundaries between unrelated conversations and protects sensitive data from being inadvertently shared across contexts.

Building on individual user memory

This launch for workplace teams builds on an earlier version of memory introduced to individual users on Max, Team, and Enterprise plans in August 2025. As reported by TechRadar, that update enabled Claude to recall past conversations only when prompted, providing continuity without automatic personalization. Unlike competitors like ChatGPT and Google Gemini -- which proactively store and integrate past conversations -- Claude's memory was designed from the start as an opt-in, user-controlled tool. The same is true today: for organizations concerned about data control, Anthropic has made memory optional. Users have full control over whether memory is enabled, and enterprise administrators can disable the feature at the organizational level. This opt-in approach reflects Anthropic's stated commitment to rolling out memory features with a focus on safety and responsible use. At the time, Anthropic emphasized that memory would only be activated upon request, framing this approach as a privacy-focused alternative to persistent background tracking. Users could ask Claude to surface past discussions, but the AI would otherwise maintain a generic persona. This boundary-first philosophy continues in the workplace rollout, with added tools to view, edit, and control memory directly.

Transparency and user control

Users can view and manage what Claude remembers through a memory summary interface. This summary, available via settings, offers a transparent look into what the AI has retained from past chats. Users can make edits directly or update the summary by prompting Claude in a conversation. Claude adjusts what it remembers and references based on this feedback.

Introducing Incognito Chat for private, temporary conversations

The company has also introduced Incognito chat, a new mode available to all Claude users, regardless of plan. Incognito chat sessions do not appear in conversation history and do not contribute to Claude's memory. This mode is intended for situations where confidentiality or a fresh, context-free exchange is needed -- such as brainstorming sessions or sensitive strategic discussions. Conversations held in Incognito mode do not alter existing memory or history, and standard data retention settings continue to apply for Team and Enterprise users.

Building long-term, persistent context for teams

With this update, Anthropic positions Claude as a more context-aware collaborator for teams managing multiple projects and workflows. By combining memory management, privacy controls, and portability, the new capabilities aim to improve continuity and reduce friction in workplace AI interactions.

[5]

Anthropic lets Claude remember previous interactions to streamline work - SiliconANGLE

The premium versions of Anthropic PBC's Claude AI chatbot are getting a useful upgrade with the addition of "memory", which will enable it to remember earlier interactions with users without any prompting. The new feature, which aims to improve Claude AI's contextual understanding, is being launched for Team and Enterprise subscribers only, and will enable the chatbot to automatically remember each user's preferences, the projects they're working on, and other relevant aspects of their work. One month ago, Anthropic gave Claude the ability to remember past chats when prompted to do so, but now users won't need to ask. Claude's memory is also being extended to the projects feature that lets Teams and Enterprise users generate graphics, diagrams, websites and more based on files they upload to it. Anthropic said in a blog post that the memory feature is focused on remembering work-related details such as team processes and client needs, with the goal being to reduce the number of repetitive, context-setting interactions and streamline collaboration and productivity across chats. Users will be able to view and edit Claude's memory from the settings menu, and based on what they tell it to focus on or ignore, it will "adjust the memories it references", the company said. To make memory even more useful, Anthropic said users will be able to download Claude's memories for a specific project and move them to third-party chatbots such as Google LLC's Gemini Pro or OpenAI's ChatGPT. It's an interesting addition that speaks to the demand for more interoperability between AI systems, but Anthropic notes that its memories prioritize work-related context, and will not export unrelated details to those chatbots, enhancing privacy somewhat. Anthropic isn't the first to do this.
Both Google and OpenAI have added cross-chat memories to their respective chatbots, but these kinds of features are still experimental and may not always be reliable. Last month, the New York Times said in a report that ChatGPT's cross-chat memories were linked to an increase in the number of "delusional" responses from chatbots, so users can only be urged to proceed with caution. Anthropic asserted that Claude's memory abilities are entirely optional and are switched off by default. To enable it, users must go to Claude's settings and select the option to generate memory from previous interactions. Once done, Claude will then be able to respond immediately to queries such as, "what were we working on last week?", by going over its past memories. To reassure more cautious customers, Anthropic has also given enterprise administrators the option to disable memory at the organizational level. Together with memory, Claude AI is also getting an incognito chat experience, and it's being made available to all users, including those on its free tier. When chatting incognito, Claude will not remember a thing, and the chats will not appear in the conversation history once the user's session is closed. Anthropic said the mode is aimed at users who need confidentiality, or want to engage in a fresh, context-free conversation with Claude. The memory and incognito updates came just two days after Anthropic announced a new "agentic" feature that allows Claude to create Word, PDF, PowerPoint and Excel documents directly within its chat interface. This capability launched on Tuesday as a preview for Claude Max, Team and Enterprise subscribers, and will also come to Claude Pro users in a few weeks' time. With this update, Claude effectively becomes an AI agent, using its reasoning capabilities to break down multistep tasks and complete them in a logical way using third-party software and applications.
Anthropic said it's giving Claude access to a "private computer environment", where it can write code and run applications to create files and perform analyses when users request it to do so. It's not the first agentic feature of Claude. Last month, the company rolled out an experimental plugin that allows the chatbot to utilize the Chrome web browser. Anthropic's rivals are also experimenting with agentic features. For instance, ChatGPT has introduced agents that can browse the internet and compile reports by accessing a user's calendar and personal files, while Google has added advanced research capabilities to Gemini, enabling it to compile detailed research reports. This feature is also optional. To create PDFs and other documents with Claude, users will need to go to the settings, navigate to the "experimental" features option and switch on "Upgraded file creation and analysis".

Anthropic introduces memory capabilities for Claude AI's Team and Enterprise users, allowing the chatbot to remember past interactions and streamline work processes. The update also includes an incognito mode for all users, ensuring privacy in conversations.

Claude AI's Memory Upgrade

Anthropic has introduced a significant update to its Claude AI chatbot, focusing on enhancing workplace productivity and user privacy. The new features, primarily targeting Team and Enterprise subscribers, include an advanced memory system and an incognito mode for all users [1][2].

Source: VentureBeat

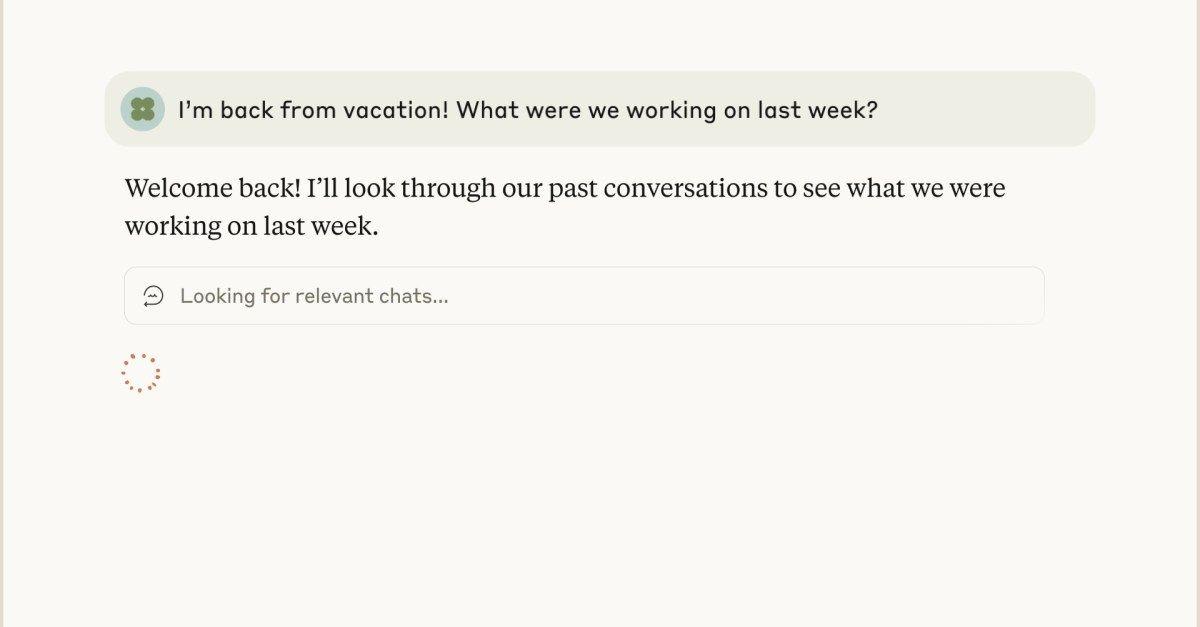

Automatic Memory for Enhanced Productivity

Claude's new memory feature allows the AI to automatically remember details from previous conversations without prompting. This capability is designed to streamline work processes by recalling user preferences, project contexts, and team priorities

1

. The memory function extends to Claude's Projects mode, enabling it to generate diagrams, website designs, and graphics based on uploaded files while maintaining context4

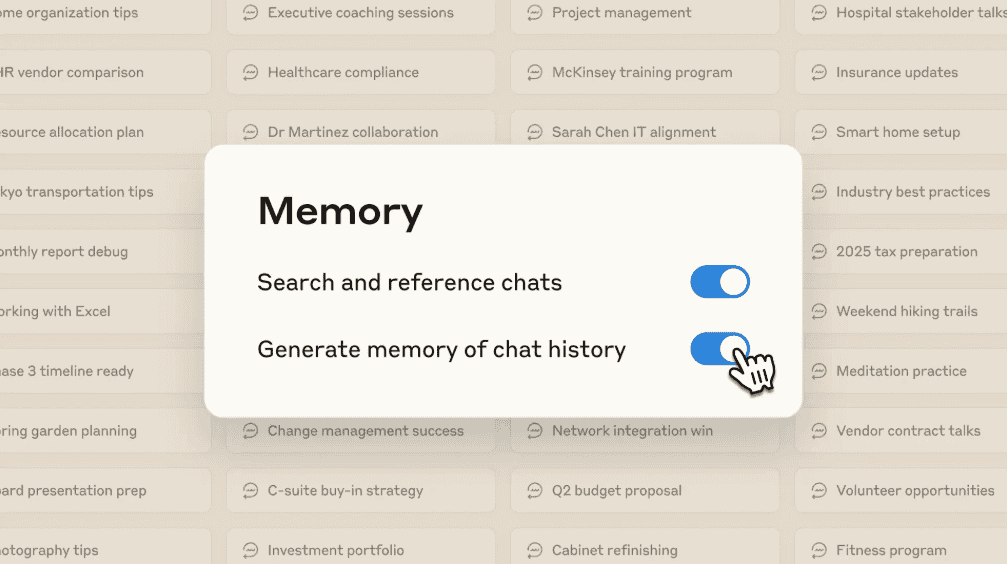

.User Control and Privacy

Anthropic has prioritized user control in implementing these features. Team and Enterprise users can view and edit Claude's stored memories from the settings menu, allowing them to adjust what the AI focuses on or ignores [1]. The memory feature is optional and can be disabled at the organizational level for enterprises concerned about data control [4].

Source: SiliconANGLE

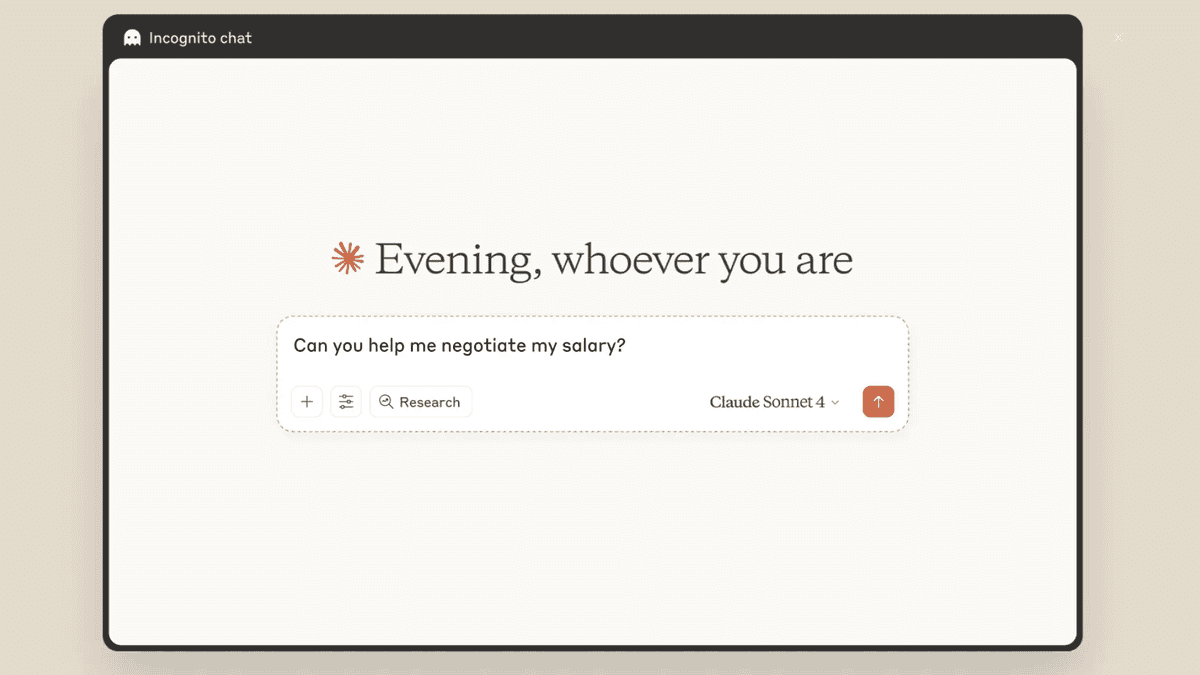

Incognito Mode for All Users

In a move towards enhanced privacy, Anthropic has introduced an incognito mode available to all Claude users, regardless of their subscription tier. This feature ensures that conversations are not saved to chat history and are not referenced in future interactions [3]. Incognito mode provides a clean slate for users who want to engage in private or exploratory conversations without leaving a data trail [3].

Source: TechRadar

Memory Portability and Interoperability

An intriguing aspect of Claude's memory upgrade is the ability to download memories on a project-by-project basis and potentially move them to other chatbots like OpenAI's ChatGPT or Google's Gemini [4]. While this feature is still experimental, it represents a step towards greater interoperability among AI systems [4].

Pricing and Availability

The memory feature is currently available for Team and Enterprise subscribers, with Team plans starting at $30 per person per month for standard use and $150 for premium [4]. Enterprise pricing is variable. The incognito mode, however, is available to all users, including those on the free tier [5].

Future Implications

As AI chatbots become more integrated into workplace processes, features like memory and privacy controls are likely to become standard. Anthropic's approach to balancing productivity enhancements with user privacy could set a precedent in the AI industry [2][3]. The potential for memory portability between different AI platforms also hints at a future where AI assistants may become more interoperable and user-centric [4].

Summarized by Navi
