Apple's iOS 26: A Leap Forward with AI-Powered Features and Liquid Glass Design

3 Sources

[1]

All the Important New iOS 26 Features, From Liquid Glass to Photos App Fixes

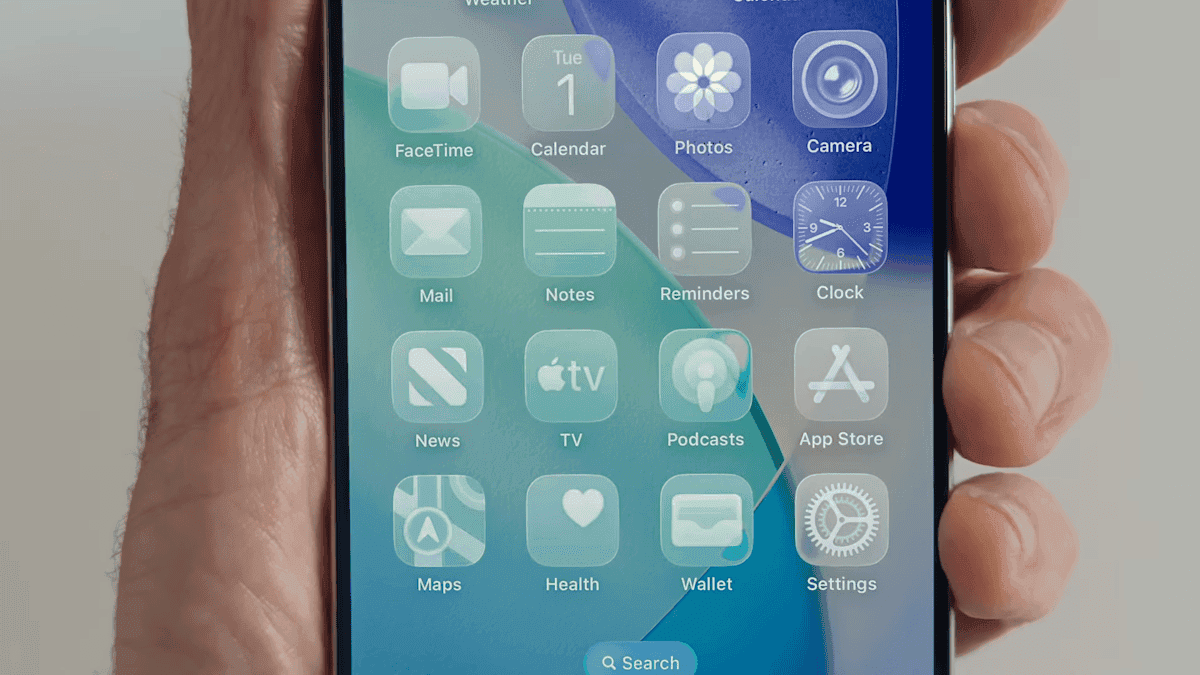

While we look ahead to what new iPhone 17 models might bring to the phone's hardware, we've already got a look at the future of iPhone software, iOS 26. The new Liquid Glass interface is a major design refresh that will make its way across all of Apple's product lines. The Camera and Photos apps are gaining long-awaited functional redesigns; the Messages and Phone apps are taking a firmer stand against unwanted texts and calls; and Apple Intelligence contributes some improvements in a year when Siri has been delayed. The next version of the operating system is due to ship in September or October (likely with new iPhone 17 models), but developer betas are available now, with a public beta expected this month. After more than a decade of a flat, clean user interface -- an overhaul introduced in iOS 7 when former Apple Chief Design Officer Jony Ive took over the design of software as well as hardware -- the iPhone is getting a new look. The new design extends throughout the Apple product lineup, from iOS to WatchOS, TVOS and iPadOS. The Liquid Glass interface also now enables a third way to view app icons on the iPhone home screen. Not content with Light and Dark modes, iOS 26 now features an All Clear look -- every icon is clear glass with no color. Lock screens can also have an enhanced 3D effect using spatial scenes, which use machine learning to give depth to your background photos. Translucency is the defining characteristic of Liquid Glass, behaving like glass in the real world in the way it deals with light and color of objects behind and near controls. But it's not just a glassy look: The "liquid" part of Liquid Glass refers to how controls can merge and adapt -- dynamically morphing, in Apple's words. In the example Apple showed, the glassy time numerals on an iPhone lock screen stretched to accommodate the image of a dog and even shrunk as the image shifted to accommodate incoming notifications. 
The dock and widgets are now rounded, glassy panels that float above the background. The Camera app is getting a new, simplified interface. You could argue that the current Camera app is pretty minimal, designed to make it quick to frame a shot and hit the big shutter button. But the moment you get into the periphery, it becomes a weird mix of hidden controls and unintuitive icons. Now, the Camera app in iOS 26 features a "new, more intuitive design" that takes minimalism to the extreme. The streamlined design shows just two modes: Video or Camera. Swipe left or right to choose additional modes, such as Pano or Cinematic. Swipe up for settings such as aspect ratio and timers, and tap for additional preferences. With the updated Photos app, viewing the pictures you capture should be a better experience -- a welcome change that customers have clamored for since iOS 18's cluttered attempt. Instead of a long, difficult-to-discover scrolling interface, Photos regains a Liquid Glass menu at the bottom of the screen. The Phone app has kept more closely to the look of its source than others: a sparse interface with large buttons as if you're holding an old-fashioned headset or pre-smartphone cellular phone. iOS 26 finally updates that look not just with the new overall interface but in a unified layout that takes advantage of the larger screen real estate on today's iPhone models. It's not just looks that are different, though. The Phone app is trying to be more useful for dealing with actual calls -- the ones you want to take. The Call Screening feature automatically answers calls from unknown numbers, and your phone rings only when the caller shares their name and reason for calling. Or what about all the time wasted on hold? Hold Assist automatically detects hold music and can mute the music but keep the call connected. Once a live agent becomes available, the phone rings and lets the agent know you'll be available shortly. 
The Messages app is probably one of the most used apps on the iPhone, and for iOS 26, Apple is making it a more colorful experience. You can add backgrounds to the chat window, including dynamic backgrounds that show off the new Liquid Glass interface. In addition to the new look, group texts in Messages can incorporate polls for everyone in the group to reply to -- no more scrolling back to find out which restaurant Brett suggested for lunch that you missed. Other members in the chat can also add their own items to a poll. A more useful addition is better detection of spam texts and screening of unknown numbers, so the messages you see in the app are the ones you want to see and not the ones that distract you. In the Safari app, the Liquid Glass design floats the tab bar above the web page (although that looks right where your thumb is going to be, so it will be interesting to see if you can move the bar to the top of the screen). As you scroll, the tab bar shrinks. FaceTime also gets the minimal look, with controls in the lower-right corner that disappear during the call to get out of the way. On the FaceTime landing page, posters of your contacts, including video clips of previous calls, are designed to make the app more appealing. Do you like the sound of that song your friend is playing but don't understand the language the lyrics are in? The Music app includes a new lyrics translation feature that displays along with the lyrics as the song plays. And for when you want to sing along with one of your friend's favorite K-pop songs, for example, but you don't speak or read Korean, a lyrics pronunciation feature spells out the right way to form the sounds. AutoMix blends songs like a DJ, matching the beat and time-stretching for a seamless transition. And if you find yourself obsessively listening to artists and albums again and again, you can pin them to the top of your music library for quick access.
The iPhone doesn't get the same kind of gaming affection as Nintendo's Switch or Valve's Steam Deck, but the truth is that the iPhone and Android phones are used extensively for gaming -- Apple says half a billion people play games on iPhone. Trying to capitalize on that, a new Games app acts as a specific portal to Apple Arcade and other games. Yes, you can get to those from the App Store app, but the Games app is designed to remove a layer of friction so you can get right to the gaming action. Although not specific to iOS, Apple's new live translation feature is ideal on the iPhone when you're communicating with others. It uses Apple Intelligence to dynamically enable you to talk to someone who speaks a different language in near-real time. It's available in the Messages, FaceTime and Phone apps and shows live translated captions during a conversation. Updates to the Maps app sometimes involve adding more detail to popular areas or restructuring the way you store locations. Now, the app takes note of routes you travel frequently and can alert you of any delays before you get on the road. It also includes a welcome feature for those of us who get our favorite restaurants mixed up: visited places. The app notes how many times you've been to a place, be that a local business, eatery or tourist destination. It organizes them in categories or other criteria such as by city to make them easier to find the next time. Liquid Glass also makes its way to CarPlay in your vehicle, with a more compact design when a call comes in that doesn't obscure other items, such as a directional map. In Messages, you can apply tapbacks and pin conversations for easy access. Widgets are now part of the CarPlay experience, so you can focus on just the data you want, like the current weather conditions. And Live Activities appear on the CarPlay screen, so you'll know when that coffee you ordered will be done or when a friend's flight is about to arrive. 
The Wallet app is already home for using Apple Card, Apple Pay, electronic car keys and for storing tickets and passes. In iOS 26, you can create a new Digital ID that acts like a passport for age and identity verification (though it does not replace a physical passport) for domestic travel for TSA screening at airports. The app can also let you use rewards and set up installment payments when you purchase items in a store, not just for online orders. And with the help of Apple Intelligence, the Wallet app can help you track product orders, even if you did not use Apple Pay to purchase them. It can pull details such as shipping numbers from emails and texts so that information is all in one place. Although last year's WWDC featured Apple Intelligence features heavily, improvements to the AI tech were less prominent this year, folded into the announcements during the WWDC keynote. As an alternative to creating Genmoji from scratch, you can combine existing emojis -- "like a sloth and a light bulb when you're the last one in the group chat to get the joke," to use Apple's example. You can also change expressions in Genmoji of people you know that you've used to create the image. Image Playground adds the ability to tap into ChatGPT's image generation tools to go beyond the app's animation or sketch styles. Visual Intelligence can already use the camera to try to decipher what's in front of the lens. Now the technology works on the content on the iPhone's screen, too. It does this by taking a screenshot (press the sleep and volume up buttons) and then including a new Image Search option in that interface to find results across the web or in other apps such as Etsy. This is also a way to add event details from images you come across, like posters for concerts or large gatherings. (Perhaps this could work for QR codes as well?) In the screenshot interface, Visual Intelligence can parse the text and create an event in the Calendar app. 
Not everything fits into a keynote presentation -- even, or maybe especially, when it's all pre-recorded -- but some of the more interesting new features in iOS 26 went unremarked during the big reveal. For instance: The finished version of iOS 26 will be released in September or October with new iPhone 17 models. In the meantime, developers can install the first developer betas now, with an initial public beta arriving this month. (Don't forget to go into any beta software with open eyes and clear expectations.) Follow the WWDC 2025 live blog for details about Apple's other announcements. iOS 26 will run on the iPhone 11 and later models, including the iPhone SE (2nd generation and later). That includes:

[2]

These Are the 6 Apple Intelligence Features I'm Actively Using

Current Apple conversations are currently more focused on what colors and features we'll see in upcoming iPhone 17 models instead of the state of Apple Intelligence -- which is probably a relief to people within Apple. But although the company's homegrown AI technology has had its setbacks, it's far from being a bust, and I realized it's been genuinely useful to me in several ways. So I sat down to figure out just which of the current Apple Intelligence features I regularly use. They aren't necessarily the showy ones, like Image Playground, but ones that help in small, significant ways. If you have a compatible iPhone -- an iPhone 15 Pro, iPhone 16E, iPhone 16 or iPhone 16 Pro (or their Plus and Max variants) -- I want to share six features that I'm turning to nearly every day. More features will be added as time goes on -- and keep in mind that Apple Intelligence is still officially beta software -- but this is where Apple is starting its AI age. On the other hand, maybe you're not impressed with Apple Intelligence, or want to wait until the tools evolve more before using them? You can easily turn off Apple Intelligence entirely or use a smaller subset of features. This feature arrived only recently, but it's become one of my favorites. When a notification arrives that seems like it could be more important than others, Prioritize Notifications pops it to the top of the notification list on the lock screen (with a colorful Apple Intelligence shimmer, of course). In my experience so far, those include weather alerts, texts from people I regularly communicate with and email messages that contain calls to action or impending deadlines. To enable it, go to Settings > Notifications > Prioritize Notifications and then turn the option on. You can also enable or disable priority alerts from individual apps from the same screen. You're relying on the AI algorithms to decide what gets elevated to a priority -- but it seems to be off to a good start. 
In an era with so many demands on our attention and seemingly less time to dig into longer topics ... Sorry, what was I saying? Oh, right: How often have you wanted a "too long; didn't read" version of not just long emails but the fire hose of communication that blasts your way? The ability to summarize notifications, Mail messages and web pages is perhaps the most pervasive and least intrusive feature of Apple Intelligence so far. When a notification arrives, such as a text from a friend or group in Messages, the iPhone creates a short, single-sentence summary. Sometimes summaries are vague and sometimes they're unintentionally funny but so far I've found them to be more helpful than not. Summaries can also be generated from alerts by third-party apps like news or social media apps -- although I suspect that my outdoor security camera is picking up multiple passersby over time and not telling me that 10 people are stacked by the door. That said, Apple Intelligence definitely doesn't understand sarcasm or colloquialisms -- you can turn summaries off if you prefer. You can also generate a longer summary of emails in the Mail app: Tap the Summarize button at the top of a message to view a rundown of the contents in a few dozen words. In Safari, when viewing a page where the Reader feature is available, tap the Page Menu button in the address bar, tap Show Reader and then tap the Summary button at the top of the page. I was amused during the iOS 18 and the iPhone 16 releases that the main visual indicator of Apple Intelligence -- the full-screen, color-at-the-edges Siri animation -- was noticeably missing. Apple even lit up the edges of the massive glass cube of its Apple Fifth Avenue Store in New York City like a Siri search. Instead, iOS 18 used the same-old Siri sphere. Now, the modern Siri look has arrived as of iOS 18.1, but only on devices that support Apple Intelligence. 
If you're wondering why you're still seeing the old interface, I can recommend some steps to turn on the new experience. With the new look are a few Siri interaction improvements: It's more forgiving if you stumble through a query, like saying the wrong word or interrupting yourself mid-thought. It's also better about listening after delivering results, so you can ask related followup questions. However, the ability to personalize answers based on what Apple Intelligence knows about you is still down the road. What did appear, as of iOS 18.2, was integration of ChatGPT, which you can now use as an alternate source of information. For some queries, if Siri doesn't have the answer right away, you're asked if you'd like to use ChatGPT instead. You don't need a ChatGPT account to take advantage of this (but if you do have one, you can sign in). Perhaps my favorite new Siri feature is the ability to bring up the assistant without saying the words "Hey Siri" out loud. In my house, where I have HomePods and my family members use their own iPhones and iPads, I never know which device is going to answer my call (even though they're supposed to be smart enough to work it out). Plus, honestly, even after all this time I'm not always comfortable talking to my phone -- especially in public. It's annoying enough when people carry on phone conversations on speaker, I don't want to add to the hubbub by making Siri requests. Instead, I turn to a new feature called Tap to Siri. Double-tap the bottom edge of the screen on the iPhone or iPad to bring up the Siri search bar and the onscreen keyboard. On a Mac, go to System Settings > Apple Intelligence & Siri and choose a key combination under Keyboard shortcut, such as Press Either Command Key Twice. Yes, this involves more typing work than just speaking conversationally, but I can enter more specific queries and not wonder if my robot friend is understanding what I'm saying. 
Until iOS 18.1, the Photos app on the iPhone and iPad lacked a simple retouch feature. Dust on the camera lens? Litter on the ground? Sorry, you need to deal with those and other distractions in the Photos app on MacOS or using a third-party app. Now Apple Intelligence includes Clean Up, an AI-enhanced removal tool, in the Photos app. When you edit an image and tap the Clean Up button, the iPhone analyzes the photo and suggests potential items to remove by highlighting them. Tap one or draw a circle around an area -- the app erases those areas and uses generative AI to fill in plausible pixels. In this first incarnation, Clean Up isn't perfect and you'll often get better results in other dedicated image editors. But for quickly removing annoyances from photos, it's fine. Focus modes on the iPhone can be enormously helpful, such as turning on Do Not Disturb to insulate yourself from outside distractions. You can also create personalized Focus modes. For example, my Podcast Recording mode blocks outside notifications except from a handful of people during scheduled recording times. With Apple Intelligence enabled, a new Reduce Interruptions Focus mode is available. When active, it becomes a smarter filter for what gets past the wall holding back superfluous notifications. Even things that are not specified in your criteria for allowed notifications, such as specific people, might pop up. On my iPhone, for instance, that can include weather alerts or texts from my bank when a large purchase or funds transfer has occurred.

[3]

7 AI features coming to iOS 26 that I can't wait to use (and how you can try them)

Apple's Worldwide Developers Conference (WWDC) was a little over a month ago -- meaning the iOS 26 public beta should be released any day now. Even though Apple has not yet launched the highly anticipated Siri upgrade -- the company said we will hear more about it in the coming year -- at WWDC, Apple unveiled a slew of AI features across its devices and operating systems, including iOS, MacOS, WatchOS, and iPadOS. While the features aren't the flashiest, many of them address issues that Apple users have long had with their devices or in their everyday workflows, while others are downright fun. I gathered the AI features announced and ranked them according to what I am most excited to use and what people on the web have been buzzing about.

Apple introduced Visual Intelligence last year with the launch of the iPhone 16. At the time, it allowed users to take photos of objects around them and then use the iPhone's AI capability to search for them and find more information. Last month, Apple upgraded the experience by adding Visual Intelligence to the iPhone screen. To use it, you just have to take a screenshot. Visual Intelligence can use Apple Intelligence to grab the details from your screenshot and suggest actions, such as adding an event to your calendar. You can also use the "ask button" to ask ChatGPT for help with a particular image. This is useful for tasks in which ChatGPT could provide assistance, such as solving a puzzle. You can also tap on "Search" to look on the web. Although Google already offered the same capability years ago with Circle to Search, this is a big win for Apple users, as it is functional and was executed well. It leverages ChatGPT's already capable models rather than trying to build an inferior one itself.
Since generative AI exploded in popularity, a useful application that has emerged is real-time translation. Because LLMs have a deep understanding of language and how people speak, they are able to translate speech not just literally but also accurately using additional context. Apple will roll out this powerful capability across its own devices with a new real-time translation feature. The feature can translate text in Messages and audio on FaceTime and phone calls. If you are using it for verbal conversations, you just tap a button on your call, which alerts the other person that the live translation is about to take place. After a speaker says something, there is a brief pause, and then you get audio feedback with a conversational version of what was said in your language of choice, with a transcript you can follow along. This feature is valuable because it can help people communicate with each other. It is also easy to access because it is being baked into communication platforms people already rely on every day.

The AutoMix feature uses AI to add seamless transitions from one song to another, mimicking what a DJ would do with time stretching and beat matching. In the settings app on the beta, Apple says, "Songs transition at the perfect moment, based on analysis of the key and tempo of the music." It works in tandem with the Autoplay feature, so if you are playing a song, like a DJ, it can play another song that matches the vibes while seamlessly transitioning to it. Many users are already trying it on their devices in the developer beta and are taking to social media to post the pretty impressive results.

The Shortcuts update was easy to miss during the keynote, but it is one of the best use cases for AI.
If you are like me, you typically avoid programming Shortcuts because they seem too complicated to create. This is where Apple Intelligence can help. With the new intelligent actions features, you can tap into Apple Intelligence models either on-device or in Private Cloud Compute within your Shortcut, unlocking a new, much more advanced set of capabilities. For example, you could set up a Shortcut that takes all the files you add to your home screen and then sorts them into files for you using Apple Intelligence. The process is meant to be as intuitive as possible, with dedicated actions and the ability to use Apple Intelligence models, either on-device or with Private Cloud Compute, to generate responses that feed into the rest of a shortcut, according to Apple. However, if even this seems too daunting, there is also a gallery feature available to try out some of the features and get inspiration for building.

The Hold Assist feature is a prime example of a feature that is not over the top but has the potential to save you a lot of time in your everyday life. The way it works is simple: if you're placed on hold and your phone detects hold music, it will ask if you want your spot held in line, then notify you when it's your turn to speak with someone and alert the person on the other end of the call that you will be right there. Imagine how much time you will get back from calls with customer service. If the feature seems familiar, it's because Google has a similar "Hold for me" feature, which uses Call Assist to wait on hold for you and notifies you when the other party is back. Having said this, even though it may not be an original feature, it is one Apple users will be happy to have.
The Apple Vision Pro introduced the idea of enjoying your precious memories in an immersive experience that places you in the scene. However, to take advantage of this feature, you had to take spatial photos and record spatial videos. Now, a similar feature is coming to iOS, allowing users to transform any picture they have into a 3D-like image that separates the foreground and background for a spatial effect. The best part is that you can add these photos to your lock screen, and as you move your phone, the 3D element looks like it moves with it. It may seem like there is no AI involved, but according to Craig Federighi, Apple's SVP of software engineering, it can transform your 2D photo into a 3D effect by using "advanced computer vision techniques running on the Neural Engine."

Using AI for workout insights isn't new, as most fitness wearable companies, including Whoop and Oura, have implemented a feature of that sort before. However, Workout Buddy is a unique feature and an application of AI I haven't seen before. Essentially, it uses your fitness data, such as history, paces, Activity Rings, and Training Load, to give you unique feedback as you are working out. Even though this feature is a part of the WatchOS upgrade -- and I don't happen to own an Apple Watch -- it does seem like a fun and original way to use AI. As someone who lacks all desire to work out, I can see that having a motivational reminder can have a positive impact on the longevity of my workout.

The list above is already pretty extensive, and yet, Apple unveiled a lot more AI features:

Apple has yet to publicly announce the official public beta release date, and the official page says "coming soon." Following prior year patterns and reports, it should happen any day now.
The final, most stable version will be released in the fall with the launch of its latest devices. To enroll in the public beta, visit the Apple Beta Software Program site and click the blue sign-up button. The program is free and open to anyone with an Apple Account who accepts the Apple Beta Software Program Agreement when signing on. Apple has also already released an iOS 26 beta geared toward developers. To get started, you need an iPhone 11 or newer running iOS 16.5 or later and an Apple ID used in the Apple Developer Program. ZDNET's guide provides step-by-step instructions on downloading the beta. With either beta, it is worth keeping in mind that you get access to all of the latest features available in iOS 26, but since the features are not fully developed, many are buggy. Since you won't get the best experience, a good practice is to use a secondary phone or make sure your data is properly backed up in case something goes wrong.

Apple's upcoming iOS 26 introduces a range of AI-powered features and a new Liquid Glass interface, enhancing user experience across various applications and system functions.

Liquid Glass: A New Era of iPhone Design

Apple's upcoming iOS 26 introduces a groundbreaking design overhaul called Liquid Glass, marking a significant departure from the flat interface that has defined iPhones for over a decade [1]. This new interface, characterized by translucency and dynamic morphing, will extend across Apple's entire product lineup. The design mimics real-world glass, adapting to light and color of surrounding elements, creating a more immersive and responsive user experience [1].

AI-Powered Enhancements

iOS 26 builds on Apple Intelligence, combining features that are already available with new ones:

- Prioritize Notifications: Already available, this feature uses AI algorithms to identify important notifications and move them to the top of the lock screen list [2].
- Intelligent Summaries: Also already available, the system provides concise summaries of notifications, emails, and web pages, helping users quickly grasp essential information [2].
- Visual Intelligence: New in iOS 26, Visual Intelligence now works on screenshots, letting users perform actions like adding events to the calendar or asking ChatGPT about an image [3].
- Real-Time Translation: New in iOS 26, live translation covers text in Messages and audio on FaceTime and phone calls [3].

App Redesigns and Functional Improvements

Several core apps receive significant updates in iOS 26:

- Camera and Photos: The Camera app gets a simplified, more intuitive interface, while the Photos app regains a user-friendly menu system [1].
- Phone App: Beyond aesthetic changes, new features like Call Screening and Hold Assist aim to improve call management and reduce time wasted on unwanted calls or hold music [1][3].
- Messages: The app now supports customizable backgrounds and includes features like group polls to enhance communication [1].
- Music: New AI-powered features include lyrics translation, pronunciation guides, and AutoMix for seamless song transitions [1][3].

Siri and Shortcuts Enhancements

While the major Siri upgrade has been delayed, Siri and Shortcuts still see improvements, some of which predate iOS 26:

- New Siri Interface: As of iOS 18.1, devices that support Apple Intelligence show the modern Siri look, and Siri is more forgiving of stumbled queries and better at follow-up questions [2].
- Tap to Siri: Double-tapping the bottom edge of the screen brings up a Siri search bar and keyboard, so users can type requests instead of speaking them [2].
- Intelligent Shortcuts: In iOS 26, the Shortcuts app can call Apple Intelligence models, on-device or in Private Cloud Compute, to build more advanced automations [3].

Availability and Compatibility

iOS 26 is expected to launch in September or October, likely coinciding with new iPhone 17 models. Developer betas are already available, with public betas anticipated soon. iOS 26 will run on the iPhone 11 and later, while features powered by Apple Intelligence require a compatible device: iPhone 15 Pro, iPhone 16E, iPhone 16, or iPhone 16 Pro (including Plus and Max variants) [1][2][3].

As Apple continues to integrate AI capabilities into its ecosystem, iOS 26 represents a significant step forward in both design and functionality. While some features may still be in beta or evolving, the update promises to enhance user experience across various aspects of iPhone usage, from visual interface to practical applications in communication, productivity, and entertainment.

Summarized by Navi
