Brain Implant Decodes Inner Speech with Password Protection, Advancing AI-Powered Communication

13 Sources

[1]

A mind-reading brain implant that comes with password protection

A brain implant can decode a person's internal chatter, but the device works only if the user thinks of a preset password. The mind-reading device, or brain-computer interface (BCI), accurately deciphered up to 74% of imagined sentences. The system began decoding users' internal speech (the silent dialogue in people's minds) only when they thought of a specific keyword. This ensured that the system did not accidentally translate sentences that users would rather keep to themselves. The study, published in Cell on 14 August, represents a "technically impressive and meaningful step" towards developing BCI devices that accurately decode internal speech, says Sarah Wandelt, a neural engineer at the Feinstein Institutes for Medical Research in Manhasset, New York, who was not involved in the work. The password mechanism also offers a straightforward way to protect users' privacy, a crucial feature for real-world use, adds Wandelt. BCI systems translate brain signals into text or audio and have become promising tools for restoring speech in people with paralysis or limited muscle control. Most devices require users to try to speak out loud, which can be exhausting and uncomfortable. Last year, Wandelt and her colleagues developed the first BCI for decoding internal speech, which relied on signals in the supramarginal gyrus, a brain region that plays a major part in speech and language. But there's a risk that these internal-speech BCIs could accidentally decode sentences users never intended to utter, says Erin Kunz, a neural engineer at Stanford University in California. "We wanted to investigate this robustly," says Kunz, who co-authored the new study. First, Kunz and her colleagues analysed brain signals collected by microelectrodes placed in the motor cortex (the region involved in voluntary movements) of four participants.
All four have trouble speaking, one because of a stroke and three because of motor neuron disease, a degeneration of the nerves that leads to loss of muscle control. The researchers instructed participants to either attempt to say a set of words or imagine saying them. Recordings of the participants' brain activity showed that attempted and internal speech originated in the same brain region and generated similar neural signals, but those associated with internal speech were weaker. Next, Kunz and her colleagues used this data to train artificial intelligence models to recognize phonemes, the smallest units of speech, in the neural recordings. The team used language models to stitch these phonemes together to form words and sentences in real time, drawn from a vocabulary of 125,000 words. The device correctly interpreted 74% of sentences imagined by two participants who were instructed to think of specific phrases. This level of accuracy is similar to that of the team's earlier BCI for attempted speech, says Kunz. In some cases, the device also decoded numbers that participants imagined when they silently counted pink rectangles shown on a screen, suggesting that the BCI can detect spontaneous self-talk. To address the risk of decoding sentences that users don't intend to say out loud, the researchers added a password to their system so that participants could control when it started decoding. When a participant imagined the password 'Chitty-Chitty-Bang-Bang' (the name of an English-language children's novel), the BCI recognized it with an accuracy of more than 98%. The study is exciting because it unravels the neural differences between internal and attempted speech, says Silvia Marchesotti, a neuroengineer at the University of Geneva, Switzerland. She adds that it will be important to explore speech signals in brain regions other than the motor cortex. The researchers are planning to do this, in addition to improving the system's speed and accuracy.
"If we look at other parts of the brain, perhaps we can also address more types of speech impairments," says Kunz.
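The article describes a two-stage pipeline. First, AI models label phonemes in the neural recordings. Then language models assemble those phonemes into words from a large vocabulary. The articles do not explain how the second stage works, so the sketch below is only a toy illustration of the general idea and not the team's decoder. It assumes a clean phoneme sequence has already been produced, whereas real systems work with uncertain, frame-by-frame phoneme probabilities. The tiny `LEXICON` and the `UNIGRAM` word probabilities are hypothetical stand-ins for a 125,000-word vocabulary and a real language model. Dynamic programming chooses the most probable way to split the phonemes into dictionary words.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

# Hypothetical toy lexicon: word -> phoneme sequence (ARPAbet-style symbols).
LEXICON: Dict[str, Tuple[str, ...]] = {
    "i": ("AY",),
    "want": ("W", "AA", "N", "T"),
    "to": ("T", "UW"),
    "two": ("T", "UW"),
    "drink": ("D", "R", "IH", "NG", "K"),
    "water": ("W", "AO", "T", "ER"),
}

# Hypothetical unigram "language model": made-up word probabilities.
UNIGRAM: Dict[str, float] = {
    "i": 0.30, "want": 0.15, "to": 0.25, "two": 0.05,
    "drink": 0.10, "water": 0.15,
}


def decode_words(phonemes: Sequence[str]) -> Optional[List[str]]:
    """Split a phoneme sequence into its most probable word sequence.

    best[k] is the highest log-probability of any word sequence that spells
    exactly phonemes[:k]; back[k] remembers the last word used to get there.
    Returns None if the phonemes cannot be covered by lexicon words.
    """
    n = len(phonemes)
    best: List[float] = [-math.inf] * (n + 1)
    back: List[Optional[Tuple[int, str]]] = [None] * (n + 1)
    best[0] = 0.0
    for end in range(1, n + 1):
        for word, pron in LEXICON.items():
            start = end - len(pron)
            if start < 0 or best[start] == -math.inf:
                continue  # word too long here, or prefix unreachable
            if tuple(phonemes[start:end]) == pron:
                score = best[start] + math.log(UNIGRAM[word])
                if score > best[end]:
                    best[end] = score
                    back[end] = (start, word)
    if best[n] == -math.inf:
        return None
    # Walk the back-pointers from the end to recover the words in order.
    words: List[str] = []
    k = n
    while k > 0:
        entry = back[k]
        assert entry is not None
        start, word = entry
        words.append(word)
        k = start
    return list(reversed(words))


print(decode_words("AY W AA N T T UW D R IH NG K W AO T ER".split()))
# prints ['i', 'want', 'to', 'drink', 'water']
```

"to" and "two" share the same phonemes, so only the word probabilities decide between them. That kind of ambiguity is one reason a language model is needed at all.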

[2]

Mind-reading AI can turn even imagined speech into spoken words

Scientists have decoded the brain activity of people as they merely imagined speaking, using implanted electrodes and AI. Brain-computer interfaces can already decode what people with paralysis are attempting to say by reading their neural activity. But Benyamin Meschede-Krasa at Stanford University says this in itself can require a fair amount of physical effort, so he and his colleagues sought a less energy-intensive approach. "We wanted to see whether there were similar patterns when someone was simply imagining speaking in their head," he says. "And we found that this could be an alternative, and indeed, a more comfortable way for people with paralysis to use that kind of system to restore their communication." Meschede-Krasa and his colleagues recruited four people who were severely paralysed as a result of either amyotrophic lateral sclerosis or brainstem stroke. All the participants had previously had microelectrodes implanted into their motor cortex, which is involved in speech, for research purposes. The researchers asked each person to attempt to say a list of words and sentences, and also to just imagine saying them. They found that brain activity was similar for both attempted and inner speech, but activation signals were generally weaker for the latter. The team trained an AI model to recognise those signals and decode them, using a vocabulary database of up to 125,000 words. To ensure the privacy of people's inner speech, the team programmed the AI to be unlocked when participants thought of the password Chitty Chitty Bang Bang, which it detected with 98 per cent accuracy. Through a series of experiments, the team found that just imagining speaking a word resulted in the model correctly decoding it up to 74 per cent of the time. This demonstrates a solid proof-of-principle for this approach, but it is less robust than interfaces that decode actual attempted speech, says team member Frank Willett, also at Stanford.
Ongoing improvements to both the sensors and AI over the next few years could make this more accurate, he says. The participants expressed a significant preference for this system, which was faster and less laborious than those based on attempted speech, says Meschede-Krasa. The concept takes "an interesting direction" for future brain-computer interfaces, says Mariska Vansteensel at UMC Utrecht in The Netherlands. But it lacks differentiation for the fine lines between attempted speech, what we want to be speech and the thoughts we want to keep to ourselves, she says. "I'm not sure if everyone was able to distinguish so precisely between these different concepts of imagined and attempted speeches." She also says the password would need to be turned on and off, in line with the user's decision of whether to say what they're thinking mid-conversation. "We really need to make sure that BCI [brain computer interface]-based utterances are the ones people intend to share with the world and not the ones they want to keep to themselves no matter what," she says. Benjamin Alderson-Day at Durham University in the UK says there's no reason to consider this system a mind reader. "It really only works with very simple examples of language," he says. "I mean if your thoughts are limited to single words like 'tree' or 'bird,' then you might be concerned, but we're still quite a way away from capturing people's free-form thoughts and most intimate ideas." Willett stresses that all brain-computer interfaces are regulated by federal agencies to ensure adherence to "the highest standards of medical ethics".

[3]

Scientists decode inner speech from brain activity with high accuracy

Cell Press, Aug 14 2025

Scientists have pinpointed brain activity related to inner speech (the silent monologue in people's heads) and successfully decoded it on command with up to 74% accuracy. Publishing August 14 in the Cell Press journal Cell, their findings could help people who are unable to audibly speak communicate more easily using brain-computer interface (BCI) technologies that begin translating inner thoughts when a participant says a password inside their head. "This is the first time we've managed to understand what brain activity looks like when you just think about speaking. For people with severe speech and motor impairments, BCIs capable of decoding inner speech could help them communicate much more easily and more naturally," says lead author Erin Kunz of Stanford University. BCIs have recently emerged as a tool to help people with disabilities. Using sensors implanted in brain regions that control movement, BCI systems can decode movement-related neural signals and translate them into actions, such as moving a prosthetic hand. Research has shown that BCIs can even decode attempted speech among people with paralysis. When users physically attempt to speak out loud by engaging the muscles related to making sounds, BCIs can interpret the resulting brain activity and type out what they are attempting to say, even if the speech itself is unintelligible. Although BCI-assisted communication is much faster than older technologies, including systems that track users' eye movements to type out words, attempting to speak can still be tiring and slow for people with limited muscle control. The team wondered if BCIs could decode inner speech instead. "If you just have to think about speech instead of actually trying to speak, it's potentially easier and faster for people," says Benyamin Meschede-Krasa, the paper's co-first author, of Stanford University.
The team recorded neural activity from microelectrodes implanted in the motor cortex (a brain region responsible for speaking) of four participants with severe paralysis from either amyotrophic lateral sclerosis (ALS) or a brainstem stroke. The researchers asked the participants to either attempt to speak or imagine saying a set of words. They found that attempted speech and inner speech activate overlapping regions in the brain and evoke similar patterns of neural activity, but inner speech tends to show a weaker magnitude of activation overall. Using the inner speech data, the team trained artificial intelligence models to interpret imagined words. In a proof-of-concept demonstration, the BCI could decode imagined sentences from a vocabulary of up to 125,000 words with an accuracy rate as high as 74%. The BCI was also able to pick up some inner speech that participants were never instructed to produce, such as numbers when the participants were asked to tally the pink circles on the screen. The team also found that while attempted speech and inner speech produce similar patterns of neural activity in the motor cortex, they were different enough to be reliably distinguished from each other. Senior author Frank Willett of Stanford University says researchers can use this distinction to train BCIs to ignore inner speech altogether. For users who may want to use inner speech as a method for faster or easier communication, the team also demonstrated a password-controlled mechanism that would prevent the BCI from decoding inner speech unless temporarily unlocked with a chosen keyword. In their experiment, users could think of the phrase "chitty chitty bang bang" to begin inner-speech decoding. The system recognized the password with more than 98% accuracy. While current BCI systems are unable to decode free-form inner speech without making substantial errors, the researchers say more advanced devices with more sensors and better algorithms may be able to do so in the future.
"The future of BCIs is bright," Willett says. "This work gives real hope that speech BCIs can one day restore communication that is as fluent, natural, and comfortable as conversational speech."

Source: Cell Press

Journal reference: Kunz, E. M., et al. (2025). Inner speech in motor cortex and implications for speech neuroprostheses. Cell. doi.org/10.1016/j.cell.2025.06.015.

[4]

"Mind-Reading" Tech Decodes Inner Speech With Up to 74% Accuracy - Neuroscience News

Summary: Scientists have, for the first time, decoded inner speech -- silent thoughts of words -- on command using brain-computer interface technology, achieving up to 74% accuracy. By recording neural activity from participants with severe paralysis, the team found that inner speech and attempted speech share overlapping brain activity patterns, though inner speech signals are weaker. Artificial intelligence models trained on these patterns could interpret imagined words from a vast vocabulary, and a password-based system ensured decoding only when desired. This breakthrough could pave the way for faster, more natural communication for people who cannot speak, with potential for even greater accuracy as technology advances. Scientists have pinpointed brain activity related to inner speech -- the silent monologue in people's heads -- and successfully decoded it on command with up to 74% accuracy. Publishing August 14 in the Cell Press journal Cell, their findings could help people who are unable to audibly speak communicate more easily using brain-computer interface (BCI) technologies that begin translating inner thoughts when a participant says a password inside their head. "This is the first time we've managed to understand what brain activity looks like when you just think about speaking," says lead author Erin Kunz of Stanford University. "For people with severe speech and motor impairments, BCIs capable of decoding inner speech could help them communicate much more easily and more naturally." BCIs have recently emerged as a tool to help people with disabilities. Using sensors implanted in brain regions that control movement, BCI systems can decode movement-related neural signals and translate them into actions, such as moving a prosthetic hand. Research has shown that BCIs can even decode attempted speech among people with paralysis. 
When users physically attempt to speak out loud by engaging the muscles related to making sounds, BCIs can interpret the resulting brain activity and type out what they are attempting to say, even if the speech itself is unintelligible. Although BCI-assisted communication is much faster than older technologies, including systems that track users' eye movements to type out words, attempting to speak can still be tiring and slow for people with limited muscle control. The team wondered if BCIs could decode inner speech instead. "If you just have to think about speech instead of actually trying to speak, it's potentially easier and faster for people," says Benyamin Meschede-Krasa, the paper's co-first author, of Stanford University. The team recorded neural activity from microelectrodes implanted in the motor cortex (a brain region responsible for speaking) of four participants with severe paralysis from either amyotrophic lateral sclerosis (ALS) or a brainstem stroke. The researchers asked the participants to either attempt to speak or imagine saying a set of words. They found that attempted speech and inner speech activate overlapping regions in the brain and evoke similar patterns of neural activity, but inner speech tends to show a weaker magnitude of activation overall. Using the inner speech data, the team trained artificial intelligence models to interpret imagined words. In a proof-of-concept demonstration, the BCI could decode imagined sentences from a vocabulary of up to 125,000 words with an accuracy rate as high as 74%. The BCI was also able to pick up some inner speech that participants were never instructed to produce, such as numbers when the participants were asked to tally the pink circles on the screen. The team also found that while attempted speech and inner speech produce similar patterns of neural activity in the motor cortex, they were different enough to be reliably distinguished from each other.
Senior author Frank Willett of Stanford University says researchers can use this distinction to train BCIs to ignore inner speech altogether. For users who may want to use inner speech as a method for faster or easier communication, the team also demonstrated a password-controlled mechanism that would prevent the BCI from decoding inner speech unless temporarily unlocked with a chosen keyword. In their experiment, users could think of the phrase "chitty chitty bang bang" to begin inner-speech decoding. The system recognized the password with more than 98% accuracy. While current BCI systems are unable to decode free-form inner speech without making substantial errors, the researchers say more advanced devices with more sensors and better algorithms may be able to do so in the future. "The future of BCIs is bright," Willett says. "This work gives real hope that speech BCIs can one day restore communication that is as fluent, natural, and comfortable as conversational speech."

Funding: This work was supported by the Assistant Secretary of Defense for Health Affairs, the National Institutes of Health, the Simons Collaboration for the Global Brain, the A.P. Giannini Foundation, Department of Veterans Affairs, the Wu Tsai Neurosciences Institute, the Howard Hughes Medical Institute, Larry and Pamela Garlick, the National Institute on Deafness and Other Communication Disorders, the National Institute of Neurological Disorders and Stroke, the Eunice Kennedy Shriver National Institute of Child Health and Human Development, the Blavatnik Family Foundation, and the National Science Foundation.

Inner speech in motor cortex and implications for speech neuroprostheses

Speech brain-computer interfaces (BCIs) show promise in restoring communication to people with paralysis but have also prompted discussions regarding their potential to decode private inner speech.
Separately, inner speech may be a way to bypass the current approach of requiring speech BCI users to physically attempt speech, which is fatiguing and can slow communication. Using multi-unit recordings from four participants, we found that inner speech is robustly represented in the motor cortex and that imagined sentences can be decoded in real time. The representation of inner speech was highly correlated with attempted speech, though we also identified a neural "motor-intent" dimension that differentiates the two. We investigated the possibility of decoding private inner speech and found that some aspects of free-form inner speech could be decoded during sequence recall and counting tasks. Finally, we demonstrate high-fidelity strategies that prevent speech BCIs from unintentionally decoding private inner speech.
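The abstract mentions a neural "motor-intent" dimension that separates attempted speech from inner speech. The sketch below shows, under simplifying assumptions, how a single linear direction can separate two classes of activity. It takes the difference between the average feature vectors of attempted and inner trials as the axis. It then classifies a new sample by which side of the two means' midpoint it falls on when projected onto that axis. This difference-of-means rule is a stand-in chosen for clarity, not the method reported in the paper. The three-channel feature vectors are hypothetical (for instance, per-electrode firing rates).

```python
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def mean_vector(samples: Sequence[Vector]) -> List[float]:
    """Average equal-length feature vectors, channel by channel."""
    return [sum(channel) / len(samples) for channel in zip(*samples)]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def fit_intent_axis(
    attempted: Sequence[Vector], inner: Sequence[Vector]
) -> Tuple[List[float], float]:
    """Return (axis, threshold) separating attempted from inner speech.

    The axis points from the inner-speech mean toward the attempted-speech
    mean; the threshold is the midpoint of the two means projected onto it.
    """
    mu_attempted = mean_vector(attempted)
    mu_inner = mean_vector(inner)
    axis = [a - b for a, b in zip(mu_attempted, mu_inner)]
    threshold = (dot(axis, mu_attempted) + dot(axis, mu_inner)) / 2.0
    return axis, threshold


def is_attempted(sample: Vector, axis: Vector, threshold: float) -> bool:
    """True if the sample projects on the attempted-speech side of the axis."""
    return dot(axis, sample) > threshold


# Hypothetical three-channel features; the inner-speech trials are weaker.
attempted_trials = [[4.0, 2.0, 3.0], [5.0, 3.0, 3.0]]
inner_trials = [[2.0, 1.0, 1.5], [2.0, 1.0, 2.5]]
axis, threshold = fit_intent_axis(attempted_trials, inner_trials)
print(axis, threshold)  # prints [2.5, 1.5, 1.0] 13.25
print(is_attempted([4.5, 2.5, 2.8], axis, threshold))  # prints True
print(is_attempted([2.1, 1.1, 2.0], axis, threshold))  # prints False
```

In this toy data the inner-speech trials are simply weaker, which echoes the reports above, so the axis mostly tracks overall activity. These articles do not establish whether the real motor-intent dimension behaves this way.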

[5]

New brain implant can decode a person's 'inner monologue'

A study participant uses the brain-computer interface to decode inner speech. (Image credit: Emory BrainGate Team)

Scientists have developed a brain-computer interface that can capture and decode a person's inner monologue. The results could help people who are unable to speak communicate more easily with others. Unlike some previous systems, the new brain-computer interface does not require people to attempt to physically speak. Instead, they just have to think what they want to say. "This is the first time we've managed to understand what brain activity looks like when you just think about speaking," study co-author Erin Kunz, an electrical engineer at Stanford University, said in a statement. "For people with severe speech and motor impairments, [brain-computer interfaces] capable of decoding inner speech could help them communicate much more easily and more naturally." Brain-computer interfaces (BCIs) allow people who are paralyzed to use their thoughts to control assistive devices, such as prosthetic hands, or to communicate with others. Some systems involve implanting electrodes in a person's brain, while others use MRI to observe brain activity and relate it to thoughts or actions. But many BCIs that help people communicate require a person to physically attempt to speak in order to interpret what they want to say. This process can be tiring for people who have limited muscle control. Researchers in the new study wondered if they could instead decode inner speech. In the new study, published Aug. 14 in the journal Cell, Kunz and her colleagues worked with four people who were paralyzed by either a stroke or amyotrophic lateral sclerosis (ALS), a degenerative disease that affects the nerve cells that help control muscles. The participants had electrodes implanted in their brains as part of a clinical trial for controlling assistive devices with thoughts.
The researchers trained artificial intelligence models to decode inner speech and attempted speech from electrical signals picked up by the electrodes in the participants' brains. The models decoded sentences that participants internally "spoke" in their minds with up to 74% accuracy, the team found. They also picked up on a person's natural inner speech during tasks that required it, such as remembering the order of a series of arrows pointing in different directions. Inner speech and attempted speech produced similar patterns of brain activity in the brain's motor cortex, which controls movement, but inner speech produced weaker activity overall. One ethical dilemma with BCIs is that they could potentially decode people's private thoughts rather than what they intended to say aloud. The differences in brain signals between attempted and inner speech suggest that future brain-computer interfaces could be trained to ignore inner speech entirely, study co-author Frank Willett, an assistant professor of neurosurgery at Stanford, said in the statement. As an additional safeguard against the current system unintentionally decoding a person's private inner speech, the team developed a password-protected BCI. Participants could use attempted speech to communicate at any time, but the interface started decoding inner speech only after they spoke the passphrase "chitty chitty bang bang" in their minds. Though the BCI wasn't able to decode complete sentences when a person wasn't explicitly thinking in words, advanced devices may be able to do so in the future, the researchers wrote in the study. "The future of BCIs is bright," Willett said in the statement. "This work gives real hope that speech BCIs can one day restore communication that is as fluent, natural, and comfortable as conversational speech."
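The unlocking rule described above can be sketched as a small state machine: attempted speech is always decoded, while inner speech is decoded only after the passphrase. This is a minimal illustration under stated assumptions. It assumes an upstream decoder already labels each utterance as "attempted" or "inner" and produces text for it. The study, by contrast, reportedly detected the password from neural activity with about 98% accuracy. The class name `GatedSpeechOutput` and its `lock()` method are hypothetical. The articles describe unlocking but do not say how decoding is switched back off.

```python
import re
from dataclasses import dataclass, field
from typing import List

PASSPHRASE = "chitty chitty bang bang"


def normalize(text: str) -> str:
    """Lower-case and keep only runs of letters, so that
    "Chitty-Chitty-Bang-Bang" and "chitty chitty bang bang" compare equal."""
    return " ".join(re.findall(r"[a-z]+", text.lower()))


@dataclass
class GatedSpeechOutput:
    """Hypothetical output gate: attempted speech always passes; inner speech
    passes only after the passphrase has been detected."""

    passphrase: str = PASSPHRASE
    unlocked: bool = False
    emitted: List[str] = field(default_factory=list)

    def handle(self, mode: str, text: str) -> None:
        if mode == "attempted":
            self.emitted.append(text)
        elif mode == "inner":
            if self.unlocked:
                self.emitted.append(text)
            elif normalize(text) == normalize(self.passphrase):
                self.unlocked = True  # the passphrase itself is not emitted
            # otherwise: locked inner speech is silently discarded
        else:
            raise ValueError(f"unknown mode: {mode!r}")

    def lock(self) -> None:
        """Return to the locked state (how a user triggers this is assumed)."""
        self.unlocked = False


gate = GatedSpeechOutput()
gate.handle("inner", "a private thought")        # discarded: still locked
gate.handle("attempted", "hello")                # attempted speech always passes
gate.handle("inner", "Chitty-Chitty-Bang-Bang")  # unlocks inner speech
gate.handle("inner", "I am thirsty")             # now passes
gate.lock()
gate.handle("inner", "another private thought")  # discarded again
print(gate.emitted)  # prints ['hello', 'I am thirsty']
```

Locked inner speech is discarded rather than buffered, so nothing decoded before the passphrase can reach the output later.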

[6]

For Some Patients, the 'Inner Voice' May Soon Be Audible

Carl Zimmer writes the "Origins" column for The New York Times. He has written about neuroengineering since 1993. For decades, neuroengineers have dreamed of helping people who have been cut off from the world of language. A disease like amyotrophic lateral sclerosis, or A.L.S., weakens the muscles in the airway. A stroke can kill neurons that normally relay commands for speaking. Perhaps, by implanting electrodes, scientists could instead record the brain's electric activity and translate that into spoken words. Now a team of researchers has made an important advance toward that goal. Previously they succeeded in decoding the signals produced when people tried to speak. In the new study, published on Thursday in the journal Cell, their computer often made correct guesses when the subjects simply imagined saying words. Christian Herff, a neuroscientist at Maastricht University in the Netherlands who was not involved in the research, said the result went beyond the merely technological and shed light on the mystery of language. "It's a fantastic advance," Dr. Herff said. The new study is the latest result in a long-running clinical trial, called BrainGate2, that has already seen some remarkable successes. One participant, Casey Harrell, now uses his brain-machine interface to hold conversations with his family and friends. In 2023, after A.L.S. had made his voice unintelligible, Mr. Harrell agreed to have electrodes implanted in his brain. Surgeons placed four arrays of tiny needles on the left side, in a patch of tissue called the motor cortex. The region becomes active when the brain creates commands for muscles to produce speech. A computer recorded the electrical activity from the implants as Mr. Harrell attempted to say different words. Over time, with the help of artificial intelligence, the computer accurately predicted almost 6,000 words, with an accuracy of 97.5 percent. It could then synthesize those words using Mr. 
Harrell's voice, based on recordings made before he developed A.L.S. But successes like this one raised a troubling question: Could a computer accidentally record more than patients actually wanted to say? Could it eavesdrop on their inner voice? "We wanted to investigate if there was a risk of the system decoding words that weren't meant to be said aloud," said Erin Kunz, a neuroscientist at Stanford University and an author of the new study. She and her colleagues also wondered if patients might actually prefer using inner speech. They noticed that Mr. Harrell and other participants became fatigued when they tried to speak; could simply imagining a sentence be easier for them, and allow the system to work faster? "If we could decode that, then that could bypass the physical effort," Dr. Kunz said. "It would be less tiring, so they could use the system for longer." But it wasn't clear if the researchers could actually decode inner speech. In fact, scientists don't even agree on what "inner speech" is. Our brains produce language, picking out words and organizing them into sentences, using a constellation of regions that, together, are the size of a large strawberry. We can use the signals from the language network to issue commands to our muscles to speak, or use sign language, or type a text message. But many people also have the feeling that they use language to perform the very act of thinking. After all, they can hear their thoughts as an inner voice. Some researchers have indeed argued that language is essential for thought. But others, pointing to recent studies, maintain that much of our thinking does not involve language at all, and that people who hear an inner voice are just perceiving a kind of sporadic commentary in their heads. "Many people have no idea what you're talking about when you say you have an inner voice," said Evelina Fedorenko, a cognitive neuroscientist at M.I.T. 
"They're like, 'You know, maybe you should go see a doctor if you're hearing words in your head.'" (Dr. Fedorenko said she has an inner voice, while her husband does not.) Dr. Kunz and her colleagues decided to investigate the mystery for themselves. The scientists gave participants seven different words, including "kite" and "day," then compared the brain signals when participants attempted to say the words and when they only imagined saying them. As it turned out, imagining a word produced a pattern of activity similar to that of trying to say it, but the signal was weaker. The computer did a pretty good job of predicting which of the seven words the participants were thinking. For Mr. Harrell, it didn't do much better than random guessing (which, with seven words, would be right about one time in seven), but for another participant it picked the right word more than 70 percent of the time. The researchers put the computer through more training, this time specifically on inner speech. Its performance improved significantly, including on Mr. Harrell. Now when the participants imagined saying entire sentences, such as "I don't know how long you've been here," the computer could accurately decode most or all of the words. Dr. Herff, who has done his own studies on inner speech, was surprised that the experiment succeeded. Before, he would have said that inner speech is fundamentally different from the motor cortex signals that produce actual speech. "But in this study, they show that, for some people, it really isn't that different," he said. Dr. Kunz emphasized that the computer's current performance involving inner speech would not be good enough to let people hold conversations. "The results are an initial proof of concept more than anything," she said. But she is optimistic that decoding inner speech could become the new standard for brain-computer interfaces. In more recent trials, the results of which have yet to be published, she and her colleagues have improved the computer's accuracy and speed.
"We haven't hit the ceiling yet," she said. As for mental privacy, Dr. Kunz and her colleagues found some reason for concern: On occasion, the researchers were able to detect words that the participants weren't deliberately imagining saying. In one trial, the participants were shown a screen full of 100 pink and green rectangles and circles. They then had to determine the number of shapes of one particular color: green circles, for instance. As the participants worked on the problem, the computer sometimes decoded the word for a number. In effect, the participants were silently counting the shapes, and the computer was hearing them. "These experiments are the most exciting to me," Dr. Herff said, because they suggest that language may play a role in many different forms of thought beyond just communicating. "Some people really seem to think this way," he said. Dr. Kunz and her colleagues explored ways to prevent the computer from eavesdropping on private thoughts. They came up with two possible solutions. One would be to only decode attempted speech, while blocking inner speech. The new study suggests this strategy could work. Even though the two kinds of thought are similar, they are different enough that a computer can learn to tell them apart. In one trial, the participants mentally mixed sentences of attempted speech with sentences of imagined speech. The computer was able to ignore the imagined speech. For people who would prefer to communicate with inner speech, Dr. Kunz and her colleagues came up with a second strategy: an inner password to turn the decoding on and off. The password would have to be a long, unusual phrase, they decided, so they chose "Chitty Chitty Bang Bang," the name of a 1964 novel by Ian Fleming as well as a 1968 movie starring Dick Van Dyke. One of the participants, a 68-year-old woman with A.L.S., imagined saying "Chitty Chitty Bang Bang" along with an assortment of other words.
The computer eventually learned to recognize the password with a 98.75 percent accuracy -- and decoded her inner speech only after detecting the password. "This study represents a step in the right direction, ethically speaking," said Cohen Marcus Lionel Brown, a bioethicist at the University of Wollongong in Australia. "If implemented faithfully, it would give patients even greater power to decide what information they share and when." Dr. Fedorenko, who was not involved in the new study, called it a "methodological tour de force." But she questioned whether an implant could eavesdrop on many of our thoughts. Unlike Dr. Herff, she doesn't see a role for language in much of our thinking. Although the BrainGate2 computer successfully decoded words that patients consciously imagined saying, Dr. Fedorenko noted, it performed much worse when people responded to open-ended commands. For example, in some trials, the participants were asked to think about their favorite hobby when they were children. "What they're recording is mostly garbage," she said. "I think a lot of spontaneous thought is just not well-formed linguistic sentences."

[7]

Brain-computer interface shows promise for decoding inner speech in real time

Scientists have pinpointed brain activity related to inner speech -- the silent monolog in people's heads -- and successfully decoded it on command with up to 74% accuracy. Published in the journal Cell, their findings could help people who are unable to audibly speak communicate more easily using brain-computer interface (BCI) technologies that begin translating inner thoughts when a participant says a password inside their head. "This is the first time we've managed to understand what brain activity looks like when you just think about speaking," says lead author Erin Kunz of Stanford University. "For people with severe speech and motor impairments, BCIs capable of decoding inner speech could help them communicate much more easily and more naturally." BCIs have recently emerged as a tool to help people with disabilities. Using sensors implanted in brain regions that control movement, BCI systems can decode movement-related neural signals and translate them into actions, such as moving a prosthetic hand. Research has shown that BCIs can even decode attempted speech among people with paralysis. When users physically attempt to speak out loud by engaging the muscles related to making sounds, BCIs can interpret the resulting brain activity and type out what they are attempting to say, even if the speech itself is unintelligible. Although BCI-assisted communication is much faster than older technologies, including systems that track users' eye movements to type out words, attempting to speak can still be tiring and slow for people with limited muscle control. The team wondered if BCIs could decode inner speech instead. "If you just have to think about speech instead of actually trying to speak, it's potentially easier and faster for people," says Benyamin Meschede-Krasa, the paper's co-first author, of Stanford University. 
The team recorded neural activity from microelectrodes implanted in the motor cortex -- a brain region responsible for speaking -- of four participants with severe paralysis from either amyotrophic lateral sclerosis (ALS) or a brainstem stroke. The researchers asked the participants to either attempt to speak or imagine saying a set of words. They found that attempted speech and inner speech activate overlapping regions in the brain and evoke similar patterns of neural activity, but inner speech tends to show a weaker magnitude of activation overall. Using the inner speech data, the team trained artificial intelligence models to interpret imagined words. In a proof-of-concept demonstration, the BCI could decode imagined sentences from a vocabulary of up to 125,000 words with an accuracy rate as high as 74%. The BCI was also able to pick up inner speech that some participants were never instructed to produce, such as numbers when the participants were asked to tally the pink circles on the screen. The team also found that while attempted speech and inner speech produce similar patterns of neural activity in the motor cortex, they were different enough to be reliably distinguished from each other. Senior author Frank Willett of Stanford University says researchers can use this distinction to train BCIs to ignore inner speech altogether. For users who may want to use inner speech as a method for faster or easier communication, the team also demonstrated a password-controlled mechanism that would prevent the BCI from decoding inner speech unless temporarily unlocked with a chosen keyword. In their experiment, users could think of the phrase "chitty chitty bang bang" to begin inner-speech decoding. The system recognized the password with more than 98% accuracy.
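The password scheme described above works like a gate placed in front of the decoder, much as a wake word gates a voice assistant. What follows is a minimal Python sketch of that idea, not the Stanford team's implementation. It assumes an upstream model (not shown) has already turned neural activity into candidate phrases, and it assumes that thinking the password toggles decoding on and off. The class name `GatedDecoder` and its `feed` method are hypothetical.

```python
from dataclasses import dataclass, field

# Unlock phrase used in the study; any uncommon phrase would serve.
UNLOCK_PHRASE = "chitty chitty bang bang"


@dataclass
class GatedDecoder:
    """Hypothetical gate that releases decoded inner speech only while unlocked.

    Assumes an upstream decoder (not shown) already emits candidate phrases,
    and assumes the password toggles the gate (one reading of the study).
    """

    unlock_phrase: str = UNLOCK_PHRASE
    unlocked: bool = False
    output: list = field(default_factory=list)

    def feed(self, phrase: str) -> None:
        # Normalize case and whitespace before comparing with the password.
        normalized = " ".join(phrase.lower().split())
        if normalized == self.unlock_phrase:
            self.unlocked = not self.unlocked  # toggle the gate
        elif self.unlocked:
            self.output.append(phrase)  # released to the user's screen
        # While locked, any other phrase is silently discarded.


decoder = GatedDecoder()
for phrase in ["seven", "chitty chitty bang bang", "I am thirsty",
               "Chitty Chitty Bang Bang", "a private thought"]:
    decoder.feed(phrase)

print(decoder.output)  # ['I am thirsty']
```

In a real system the password detector would itself be a classifier with an error rate (the study reports more than 98% recognition), so a missed or spurious detection could leave the gate in the wrong state.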
While current BCI systems are unable to decode free-form inner speech without making substantial errors, the researchers say more advanced devices with more sensors and better algorithms may be able to do so in the future. "The future of BCIs is bright," Willett says. "This work gives real hope that speech BCIs can one day restore communication that is as fluent, natural, and comfortable as conversational speech."

[8]

New Brain Interface Interprets Inner Monologues With Startling Accuracy

Scientists decoded the silent inner thoughts of four people with paralysis, a breakthrough that could transform assistive speech. Scientists can now decipher brain activity related to the silent inner monologue in people’s heads with up to 74% accuracy, according to a new study. In new research published today in Cell, scientists from Stanford University decoded imagined words from four participants with severe paralysis due to ALS or brainstem stroke. Aside from being absolutely wild, the findings could help people who are unable to speak communicate more easily using brain-computer interfaces (BCIs), the researchers say. “This is the first time we’ve managed to understand what brain activity looks like when you just think about speaking,” lead author Erin Kunz, a graduate student in electrical engineering at Stanford University, said in a statement. “For people with severe speech and motor impairments, BCIs capable of decoding inner speech could help them communicate much more easily and more naturally.” Scientists had previously managed to decode attempted speech using BCIs. When people physically attempt to speak out loud by engaging the muscles related to speech, these technologies can interpret the resulting brain activity and type out what they’re trying to say. But while effective, the current methods of BCI-assisted communication can still be exhausting for people with limited muscle control. The new study is the first to directly take on inner speech. To do so, the researchers recorded activity in the motor cortex (the region responsible for controlling voluntary movements, including speech) using microelectrodes implanted in the four participants. The researchers found that attempted and imagined speech activate similar, though not identical, patterns of brain activity. They trained an AI model to interpret these imagined speech signals, decoding sentences from a vocabulary of up to 125,000 words with as much as 74% accuracy.
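The coverage reports performance as "up to 74% accuracy" without defining the metric. Speech decoders are often scored by word error rate (WER): the fewest word substitutions, insertions, and deletions needed to turn the decoded sentence into the intended one, divided by the number of intended words. Assuming the 74% figure is word-level accuracy (that is, 1 minus WER), it would correspond to a WER of about 26%. The sketch below computes WER with a standard edit-distance table; the function name is hypothetical, and the decoded sentence is invented for illustration.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # dist[i][j] = fewest edits turning the first i reference words
    # into the first j hypothesis words.
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            sub = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,        # deletion
                dist[i][j - 1] + 1,        # insertion
                dist[i - 1][j - 1] + sub,  # substitution (or match)
            )
    return dist[len(ref)][len(hyp)] / len(ref)


intended = "i don't know how long you've been here"
decoded = "i don't know how long you have been here"
wer = word_error_rate(intended, decoded)
print(f"WER = {wer:.2f}, word accuracy = {1 - wer:.2f}")  # WER = 0.25, word accuracy = 0.75
```

Because insertions count as errors, WER can exceed 100%, so "1 minus WER" is only a loose notion of accuracy.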
In some cases, the system even picked up unprompted inner thoughts, like numbers participants silently counted during a task. For people who want to use the new technology but don’t always want their inner thoughts on full blast, the team added a password-controlled mechanism that prevented the BCI from decoding inner speech unless the participants thought of a password (“chitty chitty bang bang” in this case). The system recognized the password with more than 98% accuracy. While 74% accuracy is high, the current technology still makes a substantial number of errors. But the researchers are hopeful that more sensitive recording devices and better algorithms could soon boost its performance even further. “The future of BCIs is bright,” Frank Willett, assistant professor in the department of neurosurgery at Stanford and the study’s senior author, said in a statement. “This work gives real hope that speech BCIs can one day restore communication that is as fluent, natural, and comfortable as conversational speech.”

[9]

These brain implants speak your mind -- even when you don't want to

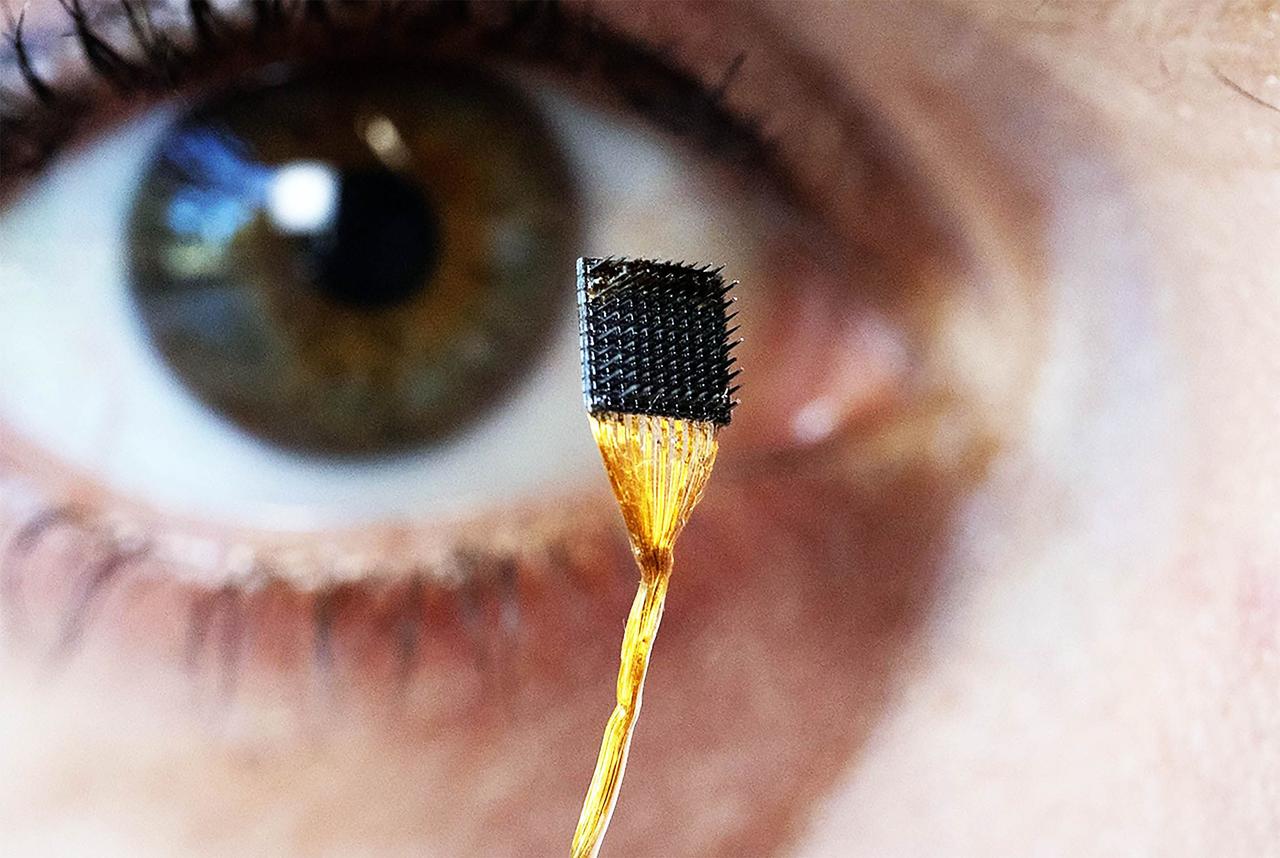

Postdoctoral researcher Erin Kunz holds up a microelectrode array that can be placed on the brain's surface as part of a brain-computer interface. Jim Gensheimer

Surgically implanted devices that allow paralyzed people to speak can also eavesdrop on their inner monologue. That's the conclusion of a study of brain-computer interfaces (BCIs) in the journal Cell. The finding could lead to BCIs that allow paralyzed users to produce synthesized speech more quickly and with less effort. But the idea that new technology can decode a person's inner voice is "unsettling," says Nita Farahany, a professor of law and philosophy at Duke University and author of the book The Battle for Your Brain. "The more we push this research forward, the more transparent our brains become," Farahany says, adding that measures to protect people's mental privacy are lagging behind technology that decodes signals in the brain. BCIs are able to decode speech using tiny electrode arrays that monitor activity in the brain's motor cortex, which controls the muscles involved in speaking. Until now, those devices have relied on signals produced when a paralyzed person is actively trying to speak a word or sentence. "We're recording the signals as they're attempting to speak and translating those neural signals into the words that they're trying to say," says Erin Kunz, a postdoctoral researcher at Stanford University's Neural Prosthetics Translational Laboratory. Relying on signals produced when a paralyzed person attempts speech makes it easy for that person to mentally zip their lip and avoid oversharing. But it also means they have to make a concerted effort to convey a word or sentence, which can be tiring and time consuming. So Kunz and a team of scientists set out to find a better way -- by studying the brain signals from four people who were already using BCIs to communicate.
The team wanted to know whether they could decode brain signals that are far more subtle than those produced by attempted speech. Specifically, they wanted to decode imagined speech. During attempted speech, a paralyzed person is doing their best to physically produce understandable spoken words, even though they no longer can. In imagined or inner speech, the individual merely thinks about a word or sentence -- perhaps by imagining what it would sound like. The team found that imagined speech produces signals in the motor cortex that are similar to, but fainter than, those of attempted speech. And with help from artificial intelligence, they were able to translate those fainter signals into words. "We were able to get up to a 74% accuracy decoding sentences from a 125,000-word vocabulary," Kunz says. Decoding a person's inner speech made communication faster and easier for the participants. But Kunz says the success raised an uncomfortable question: "If inner speech is similar enough to attempted speech, could it unintentionally leak out when someone is using a BCI?" Their research suggested it could, in certain circumstances, like when a person was silently recalling a sequence of directions. So the team tried two strategies to protect BCI users' privacy. First, they programmed the device to ignore inner speech signals. That worked, but took away the speed and ease associated with decoding inner speech. So Kunz says the team borrowed an approach used by virtual assistants like Alexa and Siri, which wake up only when they hear a specific phrase. "We picked Chitty Chitty Bang Bang, because it doesn't occur too frequently in conversations and it's highly identifiable," Kunz says. That allowed participants to control when their inner speech could be decoded. But the safeguards tried in the study "assume that we can control our thinking in ways that may not actually match how our minds work," Farahany says.
For example, Farahany says, participants in the study couldn't prevent the BCI from decoding the numbers they were thinking about, even though they did not intend to share them. That suggests "the boundary between public and private thought may be blurrier than we assume," Farahany says. Privacy concerns are less of an issue with surgically implanted BCIs, which are well understood by users and will be regulated by the Food and Drug Administration when they reach the market. But that sort of education and regulation may not extend to upcoming consumer BCIs, which will probably be worn as caps and used for activities like playing video games. Early consumer devices won't be sensitive enough to detect words the way implanted devices do, Farahany says. But the new study suggests that capability could be added someday. If so, Farahany says, companies like Apple, Amazon, Google and Meta might be able to find out what's going on in a consumer's mind, even if that person doesn't intend to share the information. "We have to recognize that this new era of brain transparency really is an entirely new frontier for us," Farahany says. But it's encouraging, she says, that scientists are already thinking about ways to help people keep their private thoughts private.

[10]

Brain-computer interface 'reads' and translates your inner thoughts - Earth.com

You read words with your eyes, but you also hear them with a voice in your head. That inner speech isn't just a feeling. It leaves a clear footprint in the brain that can be mapped to text on a screen. For people who can't speak because of paralysis or other conditions, that shift could open the door to faster, more natural conversation. Scientists have now identified brain activity patterns linked to inner speech and decoded them into text with a 74% accuracy rate. Published in the journal Cell, the work could enable brain-computer interface (BCI) systems to help people who cannot speak out loud communicate by silently thinking a password to activate the system. "This is the first time we've managed to understand what brain activity looks like when you just think about speaking," explains lead author Erin Kunz of Stanford University. "For people with severe speech and motor impairments, BCIs capable of decoding inner speech could help them communicate much more easily and more naturally." BCIs already help people with disabilities by interpreting brain signals that control movement. These systems operate prosthetic devices or type text by decoding attempted speech - when users engage speech muscles but produce unintelligible sounds. This is faster than eye-tracking but still demands effort, which can be tiring. The Stanford team explored decoding purely imagined speech. "If you just have to think about speech instead of actually trying to speak, it's potentially easier and faster for people," says co-first author Benyamin Meschede-Krasa. Four participants with severe paralysis from ALS or brainstem stroke took part. Researchers implanted microelectrodes into the motor cortex, a speech-related brain area. Participants either attempted speaking or imagined saying words. The two tasks activated overlapping regions, though inner speech produced weaker signals. 
The researchers also observed that imagined speech involved reduced activity in non-primary motor areas compared to attempted speech, suggesting more streamlined neural pathways. Their models could reliably distinguish between the two types of speech despite these similarities. Artificial intelligence models, trained specifically on data from participants' imagined speech, demonstrated the ability to decode complete sentences from a remarkably large vocabulary of up to 125,000 words, achieving an accuracy rate of up to 74%. In addition to decoding prompted sentences, the system was sensitive enough to pick up on unplanned, spontaneous mental activity. For example, when participants were asked to count the number of pink circles on a screen, the BCI detected the numbers they silently tallied, even though they were never instructed to think them. This finding indicates that imagined speech generates distinct and identifiable neural patterns, even without direct prompting or deliberate effort from the user. Furthermore, the research revealed that inner speech signals tend to follow a consistent temporal pattern across multiple trials. In other words, the timing of brain activity during silent speech remained stable each time the participants imagined speaking. This reliable internal rhythm could be an important factor in refining future decoding algorithms, as it provides a predictable framework for translating thought into text with greater accuracy and speed. While the brain activity patterns for attempted speech and inner speech showed many similarities, the researchers discovered that there were still consistent and measurable differences between them. These differences were significant enough for the system to accurately tell the two apart, even when the signals came from overlapping brain regions. According to Frank Willett, this distinction opens the door for developing BCIs that can selectively respond to one type of speech over the other.
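The point that the two kinds of speech can be told apart suggests a simple filtering design: classify each stretch of neural activity as attempted or inner speech, then decode only the class the user has enabled. The sketch below uses a nearest-centroid classifier, a deliberately simple stand-in chosen for illustration; the study's actual models are not described in this coverage. The three-number feature vectors are invented toy values, shaped so that "inner" trials are weaker versions of "attempted" ones, loosely echoing the reported weaker inner-speech signals. The function names and the `should_decode` policy are hypothetical.

```python
import math
from statistics import fmean


def centroid(vectors):
    """Per-feature mean across trials."""
    return [fmean(values) for values in zip(*vectors)]


def train(examples):
    """Map each label to the centroid of its training feature vectors."""
    return {label: centroid(vectors) for label, vectors in examples.items()}


def classify(centroids, vector):
    """Return the label whose centroid is nearest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], vector))


def should_decode(centroids, vector, allow_inner=False):
    """Hypothetical policy: always decode attempted speech, inner speech only if allowed."""
    return classify(centroids, vector) == "attempted" or allow_inner


# Invented toy features (e.g. activity levels on three recording channels).
training = {
    "attempted": [[8.0, 4.0, 6.0], [9.0, 5.0, 7.0]],
    "inner": [[3.0, 1.5, 2.5], [4.0, 2.0, 3.0]],
}
model = train(training)
print(classify(model, [8.5, 4.2, 6.1]))       # attempted
print(classify(model, [3.2, 1.6, 2.4]))       # inner
print(should_decode(model, [3.2, 1.6, 2.4]))  # False
```

Setting `allow_inner=True` models a user who has opted in to inner-speech decoding; with the default, inner-speech trials are simply never passed to the decoder.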
In practical terms, this means a device could be programmed to completely ignore inner speech unless the user chooses to activate it. To give users full control, the team designed a password-based activation method. In this setup, decoding of inner speech would remain locked until the person silently thought of a specific phrase - in this case, "chitty chitty bang bang." When tested, the system successfully recognized the password with more than 98% accuracy, ensuring both security and intentional use. Summing it all up, your inner voice leaves a measurable trace in the brain's speech-planning system. With thin sensors and well-trained models, that trace can be turned into text in real time, without any physical movement. Free-form inner speech decoding remains a challenge due to variability in brain activity and limited vocabulary coverage. However, the authors highlight that higher-density electrode arrays, advanced machine learning models, and integration of semantic context could improve accuracy. "The future of BCIs is bright," Willett says. "This work gives real hope that speech BCIs can one day restore communication that is as fluent, natural, and comfortable as conversational speech." It's not telepathy, and it's not flawless, but it points toward a faster, more humane way for people who can't speak to be heard - using the voice they already have, the one inside.

[11]

Scientists Say They've Found a Way to Vocalize the "Inner Voices" of People Who Can't Speak

New advances in brain-computer interface (BCI) technology may make speech for those who've lost the ability to do so easier than ever before. In a new, groundbreaking study published in the journal Cell, researchers from Stanford University claimed that they have found a way to decode the "inner speech" of those who can no longer vocalize, making it far less difficult to talk with friends and family than previous BCIs that required them to exert ample effort when trying to speak. Stanford neuroscientist and coauthor Erin Kunz told the New York Times that the idea of translating inner speech stemmed from care for subjects of BCI experiments, many of whom have diseases like amyotrophic lateral sclerosis (ALS) that weaken their airway muscles and eventually make speech all but impossible. Generally speaking, BCIs for people with ALS and other speech-inhibiting disorders require them to attempt to speak and let a computer do the rest of the work, but as Kunz and her colleagues noticed, they often seemed worn down under the strain of such attempts. What if, the scientists wondered, the BCIs could simply translate their thoughts to be said out loud directly? "If we could decode that, then that could bypass the physical effort," Kunz told the NYT. "It would be less tiring, so they could use the system for longer." Casey Harrell, an ALS patient and volunteer in the long-running BCI clinical trial, had already done the hard work of attempting speech while the electrodes in his brain recorded his neurological activity before becoming one of the four subjects in the inner speech portion of the study. Last summer, Harrell's journey back to speech made headlines after his experimental BCI gave him back the ability to talk using only his brainwaves, his attempts at speech, and old recordings from podcast interviews he coincidentally happened to have given before ALS made such a feat impossible. 
In the newer portion of the study, however, researchers found that their computers weren't great at decoding what words he was thinking. So they went back to the drawing board and began training their bespoke AI models to successfully link thoughts to words, enabling the computer to translate complex sentences such as "I don't know how long you've been here" with far more accuracy. As they began working with something as private as thoughts, however, the researchers discovered something unexpected: sometimes, the computer would pick up words the study subjects were not imagining saying aloud, essentially broadcasting their personal thoughts that were not meant to be shared. "We wanted to investigate if there was a risk of the system decoding words that weren't meant to be said aloud," Kunz told the NYT -- and it seems that they got their answer. To circumvent such an invasion of mental privacy -- one of the more dystopian outcomes people fear from technologies like BCIs -- the Stanford team selected a unique "inner password" that would turn the decoding on and off. This mental safe word would have to be unusual enough that the computer wouldn't erroneously pick up on it, so they went with "Chitty Chitty Bang Bang," the title of a 1964 fantasy novel by Ian Fleming. Incredibly, the password seemed to work, and when participants imagined it before and after whatever phrase they wanted to be played aloud, the computer complied 98.75 percent of the time. Though this small trial was meant, as Kunz said, to be "proof-of-concept," it's still a powerful step forward, while simultaneously ensuring the privacy of those who would like only for some of their thoughts to be said out loud.

[12]

How a brain-computer chip can read people's minds

Researchers said the technology could one day help people who cannot speak communicate more easily. An experimental brain implant can read people's minds, translating their inner thoughts into text. In an early test, scientists from Stanford University used a brain-computer interface (BCI) device to decipher sentences that were thought, but not spoken aloud. The implant was correct up to 74 per cent of the time. BCIs work by connecting a person's nervous system to devices that can interpret their brain activity, allowing them to take action - like using a computer or moving a prosthetic hand - with only their thoughts. They have emerged as a possible way for people with disabilities to regain some independence. Perhaps the most famous is Elon Musk's Neuralink implant, an experimental device that is in early trials testing its safety and functionality in people with specific medical conditions that limit their mobility. The latest findings, published in the journal Cell, could one day make it easier for people who cannot speak to communicate, the researchers said. "This is the first time we've managed to understand what brain activity looks like when you just think about speaking," said Erin Kunz, one of the study's authors and a researcher at Stanford University in the United States. Working with four study participants, the research team implanted microelectrodes - which record neural signals - into the motor cortex, which is the part of the brain responsible for speech. The researchers asked participants to either attempt to speak or to imagine saying a set of words. Both actions activated overlapping parts of the brain and elicited similar types of brain activity, though to different degrees. They then trained artificial intelligence (AI) models to interpret words that the participants thought but did not say aloud. In a demonstration, the brain chip could translate the imagined sentences with an accuracy rate of up to 74 per cent.
In another test, the researchers set a password to prevent the BCI from decoding people's inner speech unless they first thought of the code. The system recognised the password with around 99 per cent accuracy. The password? "Chitty chitty bang bang". For now, brain chips cannot interpret inner speech without significant guardrails. But the researchers said more advanced models may be able to do so in the future. Frank Willett, one of the study's authors and an assistant professor of neurosurgery at Stanford University, said in a statement that BCIs could also be trained to ignore inner speech. "This work gives real hope that speech BCIs can one day restore communication that is as fluent, natural, and comfortable as conversational speech," he said.

[13]

New Brain Implant Could Let People Speak Just by Thinking Words

FRIDAY, Aug. 15, 2025 (HealthDay News) -- For the first time, scientists have created a brain implant that can "hear" and vocalize words a person is only imagining in their head. The device, developed at Stanford University in California, could help people with severe paralysis communicate more easily, even if they can't move their mouth to try to speak. "This is the first time we've managed to understand what brain activity looks like when you just think about speaking," Erin Kunz, lead author of the study, published Aug. 14 in the journal Cell, told the Financial Times. "For people with severe speech and motor impairments, brain-computer interfaces [BCIs] capable of decoding inner speech could help them communicate much more easily and more naturally," said Kunz, a postdoctoral scholar in neurosurgery. Four people with paralysis from amyotrophic lateral sclerosis (ALS) or brainstem stroke volunteered for the study. One participant could only communicate by moving his eyes up and down for "yes" and side to side for "no." Electrode arrays from the BrainGate brain-computer interface (BCI) were implanted in the brain area that controls speech, called the motor cortex. Participants were then asked to try speaking or to silently imagine certain words. The device picked up brain activity linked to phonemes, the small units that make up speech patterns, and artificial intelligence (AI) software stitched them into sentences. Imagined speech signals were weaker than attempted speech but still accurate enough to reach up to 74% recognition in real time, the research shows. Senior author Frank Willett, an assistant professor of neurosurgery at Stanford, told the Financial Times the results show that "future systems could restore fluent, rapid and comfortable speech via inner speech alone," with better implants and decoding software. 
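The description above, in which brain activity linked to phonemes is stitched into sentences by AI software, can be illustrated with a toy pipeline. This sketch assumes a hypothetical upstream classifier that labels each short time frame with its most likely phoneme or a blank symbol. It then applies a greedy CTC-style collapse (merge repeated labels, then drop blanks) and a greedy longest-match lookup in a tiny made-up pronunciation lexicon. These techniques, the `BLANK` symbol, the `LEXICON` entries, and the function names are stand-ins chosen for illustration, not the study's decoder.

```python
from itertools import groupby

BLANK = "_"  # hypothetical "no new phoneme" frame label

# Hypothetical pronunciation lexicon (ARPAbet-style phoneme spellings).
LEXICON = {
    ("AY",): "i",
    ("W", "AA", "N", "T"): "want",
    ("W", "AO", "T", "ER"): "water",
}


def collapse(frames):
    """Greedy CTC-style collapse: merge repeated frame labels, then drop blanks."""
    return [label for label, _ in groupby(frames) if label != BLANK]


def phonemes_to_words(phonemes, lexicon=LEXICON):
    """Segment a phoneme sequence into lexicon words, preferring the longest match."""
    words, i = [], 0
    max_len = max(len(key) for key in lexicon)
    while i < len(phonemes):
        for n in range(min(max_len, len(phonemes) - i), 0, -1):
            word = lexicon.get(tuple(phonemes[i:i + n]))
            if word:
                words.append(word)
                i += n
                break
        else:
            i += 1  # no lexicon word starts here; skip this phoneme
    return " ".join(words)


# Invented per-frame labels, as a frame-level phoneme classifier might emit them.
frames = ["AY", "AY", "_", "W", "W", "AA", "N", "_", "T", "_",
          "W", "AO", "AO", "T", "ER", "_"]
print(phonemes_to_words(collapse(frames)))  # i want water
```

A greedy lookup like this breaks as soon as one phoneme is misread; a practical decoder would need something more forgiving, such as a language model that scores competing word sequences across a large vocabulary.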
"For people with paralysis, attempting to speak can be slow and fatiguing and, if the paralysis is partial, it can produce distracting sounds and breath-control difficulties," Willett said. The team also addressed privacy concerns. In one surprising finding, the BCI sometimes picked up words participants weren't told to imagine -- such as numbers they were silently counting. To protect privacy, researchers created a "password" system that blocks the device from decoding unless the user unlocks it. In the study, imagining the phrase "chitty chitty bang bang" worked 98% of the time to prevent unintended decoding. "This work gives real hope that speech BCIs can one day restore communication that is as fluent, natural and comfortable as conversational speech," Willett said. More information: Learn more about the technology by reading the full study in the journal Cell. SOURCE: Financial Times, Aug. 14, 2025


Scientists have developed a brain-computer interface that can decode a person's inner speech with up to 74% accuracy, using a password protection mechanism to ensure privacy.

Breakthrough in Brain-Computer Interface Technology

Scientists have achieved a significant milestone in brain-computer interface (BCI) technology by successfully decoding inner speech, the silent dialogue in people's minds, with up to 74% accuracy. This groundbreaking research, published in the journal Cell on August 14, 2025, represents a major step forward in developing BCIs that can accurately interpret internal speech [1].


The Inner Speech Decoding System

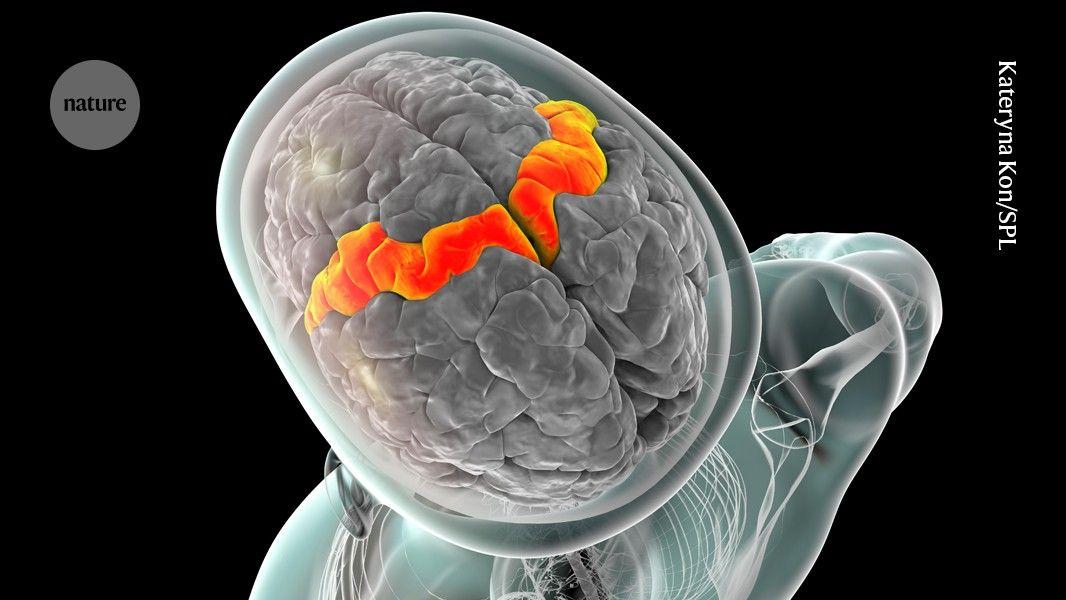

The study, led by researchers at Stanford University, involved four participants with severe paralysis due to either amyotrophic lateral sclerosis (ALS) or brainstem stroke. Microelectrodes were implanted in the participants' motor cortex, the brain region responsible for speech and voluntary movements [2].

Source: Earth.com

The research team discovered that attempted speech and inner speech activate overlapping regions in the brain and generate similar neural signals. However, the signals associated with inner speech were generally weaker [3].

AI-Powered Decoding and Vocabulary

Using the collected data, the researchers trained artificial intelligence models to recognize phonemes, the smallest units of speech, in the neural recordings. These AI models could then stitch the phonemes together to form words and sentences in real time, drawing from a vocabulary of 125,000 words [1].

The BCI system demonstrated impressive capabilities:

- It correctly interpreted up to 74% of sentences imagined by two participants who were instructed to think of specific phrases [1].
- The device could decode numbers that participants imagined when silently counting objects on a screen, suggesting it can detect spontaneous self-talk [1].


Privacy Protection Through Password Mechanism

To address concerns about unintentional decoding of private thoughts, the researchers implemented a password protection feature. Participants could control when the system started decoding by imagining a specific phrase: "Chitty-Chitty-Bang-Bang" [4]. This password mechanism was highly effective, with the BCI recognizing it with an accuracy of more than 98% [1]. This feature offers a straightforward way to protect users' privacy, a crucial aspect for real-world applications of such technology [1].

Implications and Future Directions


The development of this inner speech decoding system has significant implications for individuals with severe speech and motor impairments. It offers the potential for faster and less physically demanding communication compared to BCIs that rely on attempted speech [5]. While the current system shows promise, researchers acknowledge that more advanced devices with additional sensors and improved algorithms may be needed to decode free-form inner speech without substantial errors [4]. The study's findings also contribute to our understanding of the neural differences between internal and attempted speech, which could lead to further advancements in BCI technology and our knowledge of brain function [1]. As BCI technology continues to evolve, it holds the potential to restore more natural and fluid communication for individuals with paralysis or limited muscle control, bringing hope for improved quality of life and independence [5].

References

Summarized by

Navi

[2]

[4]

[5]
