ChatGPT deploys AI age prediction to identify minors and restrict sensitive content

24 Sources

[1]

In an effort to protect young users, ChatGPT will now predict how old you are | TechCrunch

As concern for AI's impact on young people continues to mount, OpenAI has introduced an "age prediction" feature into ChatGPT that is designed to help identify minors and put sensible content constraints on their conversations. OpenAI has been heavily criticized in recent years for the impacts that ChatGPT can have on children. A number of teen suicides have been linked to the chatbot, and, like other AI vendors, OpenAI has also been criticized for allowing ChatGPT to discuss sexual topics with young users. Last April, the company was forced to address a bug that allowed its chatbot to generate erotica for users who were under the age of 18. The company has already been working on its underage user problem for some time, and its new "age prediction" feature merely adds to protections already in place. The new feature leverages an AI algorithm that assesses user accounts for particular "behavioral and account-level signals," in an effort to identify young users, OpenAI said in a blog post Tuesday. Those "signals" include things like the user's stated age, the length of time an account has existed, and the times of day that the account is usually active, the company said. The company already has content filters designed to weed out discussions of sex, violence, and other potentially problematic topics for users who are under age 18. If the age prediction mechanism identifies an account as under 18, those filters are automatically applied. If a user is mistakenly designated as underage, there is a way for them to reestablish their "adult" account. They can submit a selfie to OpenAI's ID verification partner Persona, OpenAI says.

[2]

ChatGPT Will Now Guess Whether You're Under 18 to Restrict What You See

OpenAI is setting up new guardrails intended to reduce exposure to sensitive or potentially harmful content. OpenAI has started pushing out its age-prediction technology across ChatGPT consumer accounts. In a post on Monday, the company said that for those under 18 who haven't already told ChatGPT their age, its software will look at a person's behavior and other signals, such as how long the account has existed and when they're active, to estimate an age. If you're incorrectly identified as being underage, you can turn to technology from identity verification service Persona, OpenAI said. That requires a live selfie and a government-issued ID. A ChatGPT page that takes you directly to age verification is available. The new ChatGPT system, announced last September as part of broader changes for younger users, adds more guardrails to the AI chatbot, providing what OpenAI calls "safeguards to reduce exposure to sensitive or potentially harmful content." In a separate support page, the company describes in more detail how age prediction works in ChatGPT and what it filters out. That includes graphic violence or gore; depictions of self-harm; viral challenges "that could push risky or harmful behavior"; roleplaying that's sexual, romantic or violent; or content that promotes extreme beauty standards, unhealthy dieting or body shaming. (Disclosure: Ziff Davis, CNET's parent company, in April filed a lawsuit against ChatGPT maker OpenAI, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.) OpenAI and other companies with AI technology have come under fire and are the subject of multiple lawsuits and investigations related to the deaths of teenagers who were engaging with chatbots, including ChatGPT. In the last year, OpenAI also announced it was adding additional parental controls to the platform.
Age verification and access restrictions based on age have become a theme more widely across online experiences, driven in part by laws proposed or enacted in various countries and US states. Earlier this month, the gaming platform Roblox instituted mandatory age checks. A new law in Australia imposes a sweeping ban on social media for children under 16.

[3]

Watch Out: ChatGPT's Age-Verification System Might Decide You're a Teen

UPDATE (1/20/26): OpenAI has rolled out an age-verification system for ChatGPT users, but admits it won't always get it right. Age prediction will determine if a user is "likely" to be under 18, using signals such as how long the account has existed, typical times of day someone is active, usage patterns over time, and if the user has shared their age, OpenAI says. It's rolling out to most ChatGPT accounts starting today, though the European rollout begins "in the coming weeks." Once a user is classified as a teen, the chatbot will treat conversations around graphic content, risky viral challenges, sex, self-harm, and unhealthy beauty standards with more sensitivity. OpenAI says it worked with the American Psychological Association to ground its approach in "academic literature about the science of child development and acknowledges known teen differences in risk perception, impulse control, peer influence, and emotional regulation." The catch: Age prediction is difficult, and the tech won't always get it right. OpenAI did not disclose an accuracy rate, but says it plans to "refine the model over time." Adults who are incorrectly placed in the teen experience can confirm their age in account settings by sending a selfie to a system built by the ID verification company Persona. (What'd they do? Joke about 6-7? Have too much rizz?) Kidding aside, age verification is a serious matter with serious consequences. Roblox is also having trouble with automatic age verification as it battles multiple lawsuits against child predators finding victims through the game. Known issues include parents verifying their kids as adults, verified accounts being sold on eBay, and kids getting mislabeled as adults. OpenAI similarly faces lawsuits over teens allegedly being encouraged to self-harm, or in some cases commit suicide, after talking to ChatGPT. 
Rival chatbot maker Anthropic tested OpenAI's GPT-4o model, which has since been replaced by GPT-5, and found it was more likely than its own Claude chatbot to comply with harmful user requests and facilitate violence. The big question is whether parents should trust ChatGPT's age-verification system to keep their kids safe, and the jury is still out. "No parent is going to trust that their kids can't get through your age gating," Mark Cuban said on X in October in response to OpenAI teasing adult erotica within ChatGPT. "Same for schools. Why take the risk? A few seniors in [high school] are 18 and decide it would be fun to show the hard core erotica they created to the 14-year-olds. What could go wrong?" UPDATE (9/16/25): OpenAI is rolling out its parental controls today for all ChatGPT users on web and mobile. This brings new safeguards to protect teens during their conversations, following an August lawsuit in which parents accused the chatbot of encouraging their 16-year-old son to take his life. To get started, parents have to link their account with their teens. "A parent or teen can send an invitation to link their accounts in parental controls, and the other party needs to accept the invitation to get set up," OpenAI says. "Parents will be notified if their teen disconnects their account." OpenAI is still working on the age detection system it announced in mid-September, which would automatically flag suspected teen accounts without the need to send an invitation, as described in the original story below. The new controls give parents the ability to do things like set "quiet hours" when teens can't use the chatbot, and turn off access to voice mode and image creation. OpenAI is trying to balance privacy for teens with giving parents visibility into what their kids are up to. So, parents won't be able to review conversation transcripts on a daily basis. 
But in the "rare case" where OpenAI's systems and "trained reviewers" detect serious safety risks, the company may notify the parents. Original Story (9/16): OpenAI today announced major changes in how ChatGPT protects teens, but will it be enough to satisfy growing concerns among parents about their kids talking to the chatbot? This issue has been a hot topic in the past month after one couple sued OpenAI, alleging ChatGPT encouraged their 16-year-old son, Adam, to take his life. In reviewing Adam's ChatGPT history, his parents saw he discussed his plans with the AI for months, during which it advised him not to tell his mother how he was feeling and not to show her the marks on his neck from a failed attempt, among other concerning details. The Federal Trade Commission further raised the profile of these issues last week, launching an inquiry into the use of AI systems as companions, with an emphasis on teen safety. OpenAI has been tracking these issues since at least April, when CEO Sam Altman posted about the company's struggle to wrangle in ChatGPT's "sycophancy," or its tendency to be overly flattering and agreeable. By design, it tells users what they want to hear, even if it's not safe or healthy. With growing pressure, legal and otherwise, OpenAI is now starting to make some changes. Here's what it plans to launch in the next month. 1. An 'Age Prediction System' to Identify Minors OpenAI is building an "age prediction system" to automatically apply controls if it determines a user is under 18. It will scan through users' messages, including those of adults, to guess their age, and default to the under-18 experience if it's not sure. Once it determines the user is underage, ChatGPT won't "engage in discussions about suicide or self-harm," OpenAI says. It will also refuse to engage in "flirtatious talk" and block requests for graphic sexual content. (In April, ChatGPT was found to be having "erotic" conversations with those aged 13 to 17. 
Meta's chatbots have reportedly done the same.) ChatGPT will also no longer dispense suicide instructions to adults or teens, but the policy has some exceptions for the over-18 crowd. It will discuss suicide "if an adult user is asking for help writing a fictional story that depicts a suicide," OpenAI says. But for teens, even if they tell the chatbot the information is for a short story, it won't comply. ChatGPT is accessible without logging in, so presumably, kids could have these conversations without providing their ages. But it's unclear how in-depth they could get via one-off chats. When asked about that, OpenAI said only that, "When users sign up for ChatGPT, we ask them to provide their age, and we will implement teen protections for users with stated age under 18." 2. Rethinking Content Moderation While the example of writing a short story about suicide seems niche, it follows a push by OpenAI to think through all the edge cases for why a user would want to discuss a subject. For example, it reintroduced the ability for users to create images of swastikas in March, if it's for a "cultural or historical design," as opposed to hate speech. The company seems to be at a crossroads with content moderation. "Some of our principles are in conflict," CEO Sam Altman wrote on X today. While OpenAI wants to protect user privacy and give everyone the freedom to discuss a range of topics with ChatGPT, kids need guardrails. "We realize that these principles are in conflict and not everyone will agree with how we are resolving that conflict," OpenAI says. "These are difficult decisions, but after talking with experts, this is what we think is best and want to be transparent in our intentions." 3. Reporting to Parents if a Teen Is Considering Suicide Another hot topic has been whether ChatGPT should report a user who is discussing suicide, which one mother explored in a piece for The New York Times. 
Her daughter confided in the chatbot about her suicide plans before following through with them. But ChatGPT never reported it to law enforcement or her parents, as a human therapist would've been required to do by law. ChatGPT is now going to act more like a human in that regard. "If an under-18 user is having suicidal ideation, we will attempt to contact the users' parents and, if unable, will contact the authorities in case of imminent harm," says OpenAI. 4. More Parental Control These features build on the new parental controls OpenAI teased earlier this month in response to the teen suicide lawsuit. They allow parents to link their accounts to a teen's account, choose which features to disable, control how ChatGPT talks to their child, and, most importantly, receive notifications if the chatbot detects "their teen is in a moment of acute distress." Today, OpenAI said it plans to add the ability to set blackout hours when a teen cannot use ChatGPT. This is all positive progress, but we'll have to see how they work in practice. In the meantime, we recommend parents talk to their children about safe ChatGPT use and get a good understanding of how and when their children are using the tool. Given the known sycophancy issue, it's important for kids to know that chatbots may confirm delusions or suspicions to please them, and to talk to an adult about anything that doesn't feel right. Ziff Davis, PCMag's parent company, filed a lawsuit against OpenAI in April 2025, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.

[4]

OpenAI will try to guess your age before ChatGPT gets spicy

Think of the children...and the monetization options available where they're not allowed

OpenAI says it has begun deploying an age prediction model to determine whether ChatGPT users are old enough to view "sensitive or potentially harmful content." Chatbots from OpenAI and its rivals are linked to a series of suicides, sparking litigation and a congressional hearing. AI outfits therefore have excellent reasons to make the safety of their services more than a talking point, both for minors and the adult public. Hence we have OpenAI's Teen Safety Blueprint, introduced in November 2025, and its Under-18 Principles for Model Behavior, which debuted the following month. OpenAI is under pressure to turn a profit, knows its plan to serve ads needs to observe rules about marketing to minors, and has erotica in the ChatGPT pipeline. That all adds up to a need to partition its audience and prevent exposing them to damaging material. Part of OpenAI's plan has been to develop an age prediction system so that ChatGPT can automatically present an age-appropriate experience, at least among minors whose parents haven't steered them away from engaging with chatbots. Many young people interact with these models. During a September 16, 2025 Senate subcommittee hearing, "Examining the Harm of AI Chatbots," Mitch Prinstein, chief of psychology strategy and integration at The American Psychological Association, offered written testimony to the effect that over half of all US adolescents over the age of 13 now use generative AI. For those under 13, usage is estimated to be between 10 and 20 percent. Prinstein thinks that should not be the case. "AI systems designed for adults are fundamentally inappropriate for youth and require specific, developmentally informed safeguards," he said. OpenAI has therefore been working on an automated age prediction system, which the company described last September.
"This isn't easy to get right, and even the most advanced systems will sometimes struggle to predict age," the biz said at the time. Age prediction or inference is distinct from age verification (checking government documents) and age estimation (using biometric signals like facial analysis). It relies on identifying facts about an individual and drawing a conclusion based on those facts. For OpenAI's purposes, this may involve looking at the topics discussed during ChatGPT sessions and other factors associated with one's account like common usage hours. On Tuesday, the company offered a progress report in which it outlined how ChatGPT is using the company's age prediction model to determine whether an account belongs to someone under the age of 18. "The model looks at a combination of behavioral and account-level signals, including how long an account has existed, typical times of day when someone is active, usage patterns over time, and a user's stated age," OpenAI explained, adding that the global rollout of the prediction-bot will reach the EU in a few weeks. When it detects users deemed to be under 18, OpenAI will activate additional safety settings. The company claims those settings will reduce the incidence of graphic violence or gory content, of viral challenges designed to elicit harmful behavior, of sexual, romantic, or violent role playing, of depictions of self-harm, and of content that promotes extreme beauty standards, unhealthy dieting, or body shaming. "No system is perfect," OpenAI acknowledges in its help documentation. "Sometimes we may get it wrong. If you are 18 or older and you were put into the under-18 experience by mistake, you can verify your age." Doing so requires ChatGPT users to engage with Persona, a third party identity and age-checking company, either by sending a live selfie or uploading a photo of a government-issued ID. 
Those who don't want to be subject to OpenAI's age verification system may also choose to verify their age through Persona, which claims it does not share or sell personal data collected for age assurance. OpenAI is following a path already trodden by tech companies in Australia, which have had to adopt age-checking tech to comply with rules that disallow social media usage for those under 16. Prior to the implementation of that law, Australia's Age Assurance Technology Trial (AATT) came to a self-perpetuating conclusion about age check tech: Age verification can be done, despite challenges, with an average accuracy of 97.05 percent, though less so "for older adults, non-Caucasian users and female-presenting individuals near policy thresholds." When the Australian Broadcasting Corporation reported on the preliminary findings of the AATT in June last year, age verification systems guessed people's ages within 18 months only 85 percent of the time. Advocacy organizations remain skeptical. Mozilla last month said, "While many technologies exist to verify, estimate, or infer users' ages, fundamental tensions around effectiveness, accessibility, privacy, and security have not been resolved." Alexis Hancock, director of engineering at the Electronic Frontier Foundation, told The Register in an email, "We encourage the safety features promoted to be available to everyone using chat LLMs such as ChatGPT. However, OpenAI is taking the moment to further train an age prediction model, where a false prediction will fall on the user to give private information to further verify their age to another company." Hancock said that factors like account age and usage patterns may be less reliable given that OpenAI has only been offering ChatGPT for four years. "However, the model itself is not obligated to be correct, nor can the decisions be challenged," she said.
The focus on the enforcement of age gating rather than accurate age verification, she said, is a developing pattern at other age checking systems. The Computer & Communications Industry Association, which represents tech giants like Amazon, Apple, and Google, also isn't thrilled with the possibility that age verification may become a requirement within app stores. The age checking tech, the group said last October, is "unworkable in practice." But as long as ChatGPT can deliver sexy banter, and do so alongside ads, OpenAI needs to try to make age prediction tech work. ®

[5]

OpenAI rolls out age prediction model on ChatGPT to detect your age

OpenAI is rolling out an age prediction model on ChatGPT to detect your age and apply possible safety-related restrictions to prevent misuse by teens. OpenAI no longer wants ChatGPT to surface adult or potentially illegal and dangerous content to those who use ChatGPT, especially if they are under 18 and do not have their parents' consent. This age detection feature is based on an age detection model, so it may look at the topics you discuss with ChatGPT and the times of day you use ChatGPT. These predictions allow ChatGPT to decide if you are an adult or a teen. OpenAI warns that its AI model can sometimes get it wrong and accidentally flag an adult as a teenager, especially if the model detects your behavior as that of a teenager. In that case, if you are 18 or older and were put into the under-18 experience by mistake, you can verify your age. You can still use ChatGPT to learn, create, and ask questions, but it will restrict topics like violence, gore, viral challenges on TikTok-like platforms, and content that promotes extreme beauty standards, unhealthy dieting, or body shaming.

If you are logged in, you can start age verification here: https://chatgpt.com/verify_age and you could be asked for:

* Live selfie: Use your phone or webcam to take a real-time photo of your face.
* Government ID: Upload a photo of a government-issued ID (for example, a driver's license, passport, or state ID).

Persona checks your date of birth and that your selfie matches your ID photo. Accepted IDs vary by country.

After you verify, if Persona confirms you are 18 or older, ChatGPT will remove the extra safety settings. This change may take a short time to fully apply. OpenAI says its partner, Persona, deletes your ID or selfie within 7 days after verification. This change is rolling out worldwide, and it'll begin automatically applying to all ChatGPT accounts.

[6]

OpenAI is launching age prediction for ChatGPT accounts

OpenAI is the latest company to hop on the bandwagon of gating access by users' age. The AI business is beginning a global rollout of an age prediction tool to determine whether or not a user is a minor. "The model looks at a combination of behavioral and account-level signals, including how long an account has existed, typical times of day when someone is active, usage patterns over time, and a user's stated age," the company's announcement states. If an individual is incorrectly characterized by ChatGPT as underage, they will need to submit a selfie to correct the mistake through the Persona age verification platform. Most AI companies have been willing to push new features first and then attempt to layer a patchwork of protections and safety guards on top of them after they cause harm. OpenAI was implicated in a wrongful death suit for a teen who allegedly used ChatGPT to plan his suicide, and only in the following months began pondering automatic restrictions on content for underage users and launching a mental health advisory council. In this instance, OpenAI is attempting to prepare for the launch of an "adult mode" that will allow users to create and consume content that would be dubbed NSFW. Considering how well a similar change has been going over at Roblox, another platform with a shaky history around protecting minors, it seems probable that underage users will find ways to circumvent the existing tools if they want to use ChatGPT as adults.

[7]

OpenAI is rolling out age prediction for ChatGPT consumer plans

The model relies on a combination of account-level signals and behavioral signals, OpenAI said. Some of those signals include usage patterns over time, how long an account has existed, the typical times of day a user is active and the user's stated age. OpenAI has rolled out several new safety features in recent months as it faces mounting scrutiny over how it protects users, particularly minors. The AI startup and other tech companies are facing a probe from the FTC over how their AI chatbots potentially negatively affect children and teenagers, and OpenAI is named in several wrongful death lawsuits, including one that centers around a teenage boy's death by suicide. Once OpenAI's age prediction model suggests that a user is under 18, OpenAI said, ChatGPT will automatically apply protections designed to reduce exposure to "sensitive content," like depictions of self-harm. If users are incorrectly identified as being under 18, they will be able to use the identity-verification service Persona to restore their full access.

[8]

ChatGPT will now estimate age to limit sensitive content for teens

The company says the system will help apply the right protections to teen accounts while allowing adults to access ChatGPT with fewer restrictions. The rollout arrives as regulators and lawmakers increase pressure on AI companies to show stronger protections for minors. The age prediction model evaluates a mix of account-level and behavioral signals. These include how long an account has existed, usage patterns over time, and typical hours of activity. The system also considers any age information users previously provided. OpenAI says this approach helps it infer age without forcing repeated prompts or constant identity checks. The company frames the system as adaptive rather than definitive. When signals remain unclear, ChatGPT defaults to a safer experience. If the model estimates that an account likely belongs to a minor, the platform automatically applies added safeguards. These reduce exposure to content categories considered higher risk for teens. The restricted areas include graphic violence, gory imagery, and depictions of self-harm. The system also limits sexual or violent role play and viral challenges that could encourage risky behavior.

[9]

ChatGPT age prediction is rolling out - here's how to check yours

OpenAI says it is now rolling out ChatGPT age prediction and that it will use this to determine the content you are allowed to see. It's not immediately clear how this will impact Siri fallback to ChatGPT.

The AI company says that it will use a wide range of signals to guess your age, including your usage patterns over time ...

ChatGPT age prediction

The AI company is only concerned with whether or not you are under 18 years old.

"We're rolling out age prediction on ChatGPT consumer plans to help determine whether an account likely belongs to someone under 18, so the right experience and safeguards can be applied to teens [...] The model looks at a combination of behavioral and account-level signals, including how long an account has existed, typical times of day when someone is active, usage patterns over time, and a user's stated age."

If ChatGPT decides you are under 18, then it will reduce your exposure to a range of what the company terms sensitive content.

* Graphic violence or gory content
* Viral challenges that could encourage risky or harmful behavior in minors
* Sexual, romantic, or violent role play
* Depictions of self-harm
* Content that promotes extreme beauty standards, unhealthy dieting, or body shaming

Check how old ChatGPT thinks you are

It's very simple to check how old ChatGPT thinks you are: all you need do is ask it.

How old do you think I am?

The chatbot gives you its best guess and explains its reasoning, which can be amusing. Apparently I'm pedantic (though it appears to have omitted a comma in making this observation).

With all the usual caveats (and a firm awareness that this could be gloriously wrong), I'd put you in the early-to-mid 40s bracket -- say around 42, give or take a few years.

Why that neighbourhood rather than a precise house number: Your comfort with legal terminology, accuracy pedantry, and preference for orthodox wording suggests professional mileage rather than fresh-out-of-uni enthusiasm. You write like someone who has spent a long time explaining complex things to people who want simplicity -- a skill typically acquired through experience, mild despair, and deadlines. The balance of tech fluency and scepticism feels "grew up alongside the internet" rather than "met it at birth" or "met it via an IT department memo".

All of which is circumstantial, inferential, and very possibly nonsense -- but if I had to bet actual money (which I wouldn't), that's where I'd place the chip.

I'm not 42, but I'll take it.

It's not clear how OpenAI will apply age prediction when you make a request to Siri that is then passed to ChatGPT.

[10]

OpenAI rolls out age prediction for teens

The rollout of ChatGPT age prediction is now underway, according to an announcement OpenAI made Tuesday. Last fall, the company indicated it would introduce age prediction as a safety measure for teens. OpenAI has been sued by parents of teens who died by suicide after ChatGPT allegedly coached them to end their lives or didn't respond appropriately to their discussions of psychological distress. OpenAI has denied the allegations in the first of those lawsuits. In December, the company introduced an update to its Model Spec, which guides how its AI models should behave. The update focused on principles for responding to under-18 users in high-stakes situations. The details made public on Tuesday explain that age prediction will launch on consumer plans. ChatGPT's age prediction model estimates a user's age based on behavior and signals from their account, like when the person is active during the day, long-term usage patterns, how long the account has existed, and the user's stated age. If the user's age is assessed as under-18, their account should not be exposed to graphic violence, depictions of self-harm, and sexual, romantic, or violent role play, among other types of potentially harmful content. Teens who tell OpenAI they're under 18 upon opening a ChatGPT account are automatically subject to such safeguards. If OpenAI isn't confident about a user's age, their account will default to safer settings. OpenAI said that adult users whose accounts are mistakenly placed in the under-18 experience can confirm their age by submitting a selfie to Persona, a third-party identity verification service. OpenAI did not provide additional information about how ID documents would be retained. In Oct. 2025, a third-party vendor used by the messaging platform Discord was breached, exposing upwards of 70,000 government IDs. OpenAI said it planned to improve age-prediction accuracy and make further improvements based on observations from the initial rollout.

[11]

ChatGPT can now estimate your age and restrict sensitive content if you're under 18

OpenAI is offering an easy way to regain full access if you can prove your age.

OpenAI first announced plans to introduce an automated age-prediction system for ChatGPT in September last year, aimed at helping the AI chatbot distinguish between teens and adults. After testing it in select countries over the past few months, the company has now started rolling it out on ChatGPT consumer plans. The system is designed to identify users under 18 and automatically redirect them to a more age-appropriate ChatGPT experience. According to OpenAI, the age-prediction model "looks at a combination of behavioural and account-level signals, including how long an account has existed, typical times of day when someone is active, usage patterns over time, and a user's stated age" to estimate whether someone is underage. When the system detects that an account may belong to a younger user, ChatGPT automatically applies additional safety measures to limit exposure to sensitive content. OpenAI notes that this age-appropriate experience restricts access to graphic or violent content, risky viral challenges, sexual or romantic roleplay, depictions of self-harm, and content that encourages extreme beauty ideals, unhealthy dieting, or body shaming.

Adults flagged incorrectly can prove their age and restore full access

OpenAI acknowledges that the system isn't perfect and may misclassify adults. The company plans to continue refining the model to improve accuracy over time. Users who are incorrectly flagged as teens will have the option to verify their age and regain full access through the identity-verification service, Persona. To see if their account has restricted access, users can navigate to Settings > Account. In addition to offering younger users a more age-appropriate ChatGPT experience, the age-prediction model will allow OpenAI to introduce new features for older users, including the previously teased adult mode.
Originally slated to roll out late last year, the adult mode was pushed back to Q1 2026 while OpenAI fine-tuned its age-prediction model. With the system now live, the company could announce the feature soon.

[12]

OpenAI Deploys Age Prediction to Restrict Teen Access on ChatGPT

Adults misclassified as teens can restore access through selfie-based age verification. OpenAI is moving away from the "honor system" for age verification, deploying a new AI-powered prediction model to identify minors using ChatGPT, the company said on Tuesday. The update to ChatGPT automatically triggers stricter safety protocols for accounts suspected of belonging to users under 18, regardless of the age they provided during sign-up. Rather than relying on the birthdate a user gives at sign-up, OpenAI's new system analyzes "behavioral signals" to estimate their age. According to the company, the algorithm monitors how long an account has existed, what time of day it is active, and specific usage patterns over time. "Deploying age prediction helps us learn which signals improve accuracy, and we use those learnings to continuously refine the model over time," OpenAI said in a statement. The shift to behavioral patterns comes as AI developers increasingly turn to age verification to manage teen access, but experts warn the technology remains inaccurate. A May 2024 report by the National Institute of Standards and Technology found that accuracy varies based on image quality, demographics, and how close a user is to the legal threshold. When the model cannot determine a user's age, OpenAI said it applies the more restrictive settings. The company said adults incorrectly placed in the under-18 experience can restore full access through a "selfie-based" age-verification process using the third-party identity-verification service Persona. Privacy and digital rights advocates have raised concerns about how reliably AI systems can infer age from behavior alone.

Getting it right

"These companies are getting sued left and right for a variety of harms that have been unleashed on teens, so they definitely have an incentive to minimize that risk. This is part of their attempt to minimize that risk as much as possible," Public Citizen big tech accountability advocate J.B. Branch told Decrypt. "I think that's where the genesis of a lot of this is coming from. It's them saying, 'We need to have some way to show that we have protocols in place that are screening people out.'" Aliya Bhatia, senior policy analyst at the Center for Democracy and Technology, told Decrypt that OpenAI's approach "raises tough questions about the accuracy of the tool's predictions and how OpenAI is going to deal with inevitable misclassifications." "Predicting the age of a user based on these kinds of signals is extremely difficult for any number of reasons," Bhatia said. "For example, many teenagers are early adopters of new technologies, so the earliest accounts on OpenAI's consumer-facing services may disproportionately represent teenagers." Bhatia pointed to CDT polling conducted during the 2024-2025 school year, showing that 85% of teachers and 86% of students reported using AI tools, with half of the students using AI for school-related purposes. "It's not easy to distinguish between an educator using ChatGPT to help teach math and a student using ChatGPT to study," she said. "Just because a person uses ChatGPT to ask for tips to do math homework doesn't make them under 18." According to OpenAI, the new policy draws on academic research on adolescent development. The update also expands parental controls, letting parents set quiet hours, manage features such as memory and model training, and receive alerts if the system detects signs of "acute distress." OpenAI did not disclose in the post how many users the change is expected to affect or details on data retention, bias testing, or the effectiveness of the system's safeguards. The rollout follows a wave of scrutiny over AI systems' interactions with minors that intensified in 2024 and 2025.
In September, the Federal Trade Commission issued compulsory orders to major tech companies, including OpenAI, Alphabet, Meta, and xAI, requiring them to disclose how their chatbots handle child safety, age-based restrictions, and harmful interactions. Research published that same month by the non-profit groups ParentsTogether Action and Heat Initiative documented hundreds of instances in which AI companion bots engaged in grooming behavior, sexualized roleplay, and other inappropriate interactions with users posing as children. Those findings, along with lawsuits and high-profile incidents involving teen users on platforms like Character.AI and Grok, have pushed AI companies to adopt more formal age-based restrictions. However, because the system assigns an estimated age to all users, not just minors, Bhatia warned that mistakes are inevitable. "Some of those are going to be wrong," she said. "Users need to know more about what's going to happen in those circumstances and should be able to access their assigned age and change it easily when it's wrong." The age-prediction system is now live on ChatGPT consumer plans, with a rollout in the European Union expected in the coming weeks.

[13]

ChatGPT to predict users' ages to protect teenagers

ChatGPT will estimate whether a user is under 18 based on how long their account has existed, when they're online and what they ask about. OpenAI's artificial intelligence (AI) chatbot ChatGPT will predict the age of its users to give teenagers a safer online experience, as scrutiny grows about how tech companies protect minors online. The company's new age prediction system will use information about a user's account to predict whether that person is under 18, such as how long the account has existed, the times of day that they log on, and how they use the app. When ChatGPT identifies a user under 18, additional protections against graphic, violent, self-harm, and sexual content will immediately be turned on. Viral social media challenges that encourage "risky or harmful behaviour" for minors will also be limited. OpenAI said this approach is "guided by expert input and rooted in academic literature about the science of child development." Accounts identified as belonging to those under the age of 18 can still use ChatGPT to learn, create, and ask questions, the company said. The OpenAI update comes as governments in the European Union are discussing restrictions on social media accounts for users under 16, after Australia enacted a ban in December. ChatGPT is not considered a social media platform under Australia's restrictions. OpenAI and its CEO, Sam Altman, are being sued in California by the parents of 16-year-old Adam Raine, who committed suicide last April, after the platform allegedly coached him to take his life and wrote a suicide note for him. OpenAI previously introduced parental controls on ChatGPT that allow parents to set "quiet hours" where the AI can't be used and to receive notifications if their teen shows any signs of distress during their messages with the AI bot. Anyone falsely identified as under 18 in the new system will be able to take a live selfie or upload a government ID to verify their age and have the restrictions lifted.

[14]

ChatGPT Is Getting on the AI Age Verification Bandwagon

OpenAI joins other companies like Roblox and Google that are using AI to guess the ages of their users. When OpenAI first announced GPT-5.2 last month, it quietly disclosed a new safety feature it called "age prediction." Considering ChatGPT proper isn't exactly an "all ages" kind of tool, it makes sense that users under the age of 18 should have protections in place to shield them from harmful content. The company says that users who indicate they're under 18 already receive an altered experience to "reduce exposure to sensitive or potentially harmful content," but if the user doesn't voluntarily share how old they are with OpenAI, how does the company enforce these protections? Here's where age prediction comes in.

How age prediction for ChatGPT works

On Tuesday, OpenAI officially announced its new age prediction policy, which, like other age verification systems being used by the likes of Roblox, uses AI to guess how old a user is. If the system decides that a particular user is under the age of 18, OpenAI will adjust the experience accordingly, with the goal of keeping all interactions age-appropriate. Here's how it works: The new age prediction model looks at both the user's behaviors within the app, as well as the general account data. That includes things like how old the account is, what times of day the user is accessing ChatGPT, usage patterns, as well as, of course, the age the user says they are. Looking at all this data, the model determines how old the user likely is. If the model thinks they're over 18, they'll get the full experience; if the model thinks they're under 18, they'll get the "safer experience." If the model isn't confident, it defaults to that safer experience.
What's restricted in the "safer" version of ChatGPT

That limited experience means that, for someone the model thinks is under 18, ChatGPT will try to reduce the following content types:

* Graphic violence or gore
* Viral challenges that might inspire "risky or harmful behaviors"
* Role play that is sexual, romantic, or violent in nature
* Self-harm descriptions
* Content promoting "extreme" beauty standards, unhealthy dieting, or body shaming

The company says that its approach is informed by "expert input" as well as literature discussing child development science. (It's not clear how much of that input is from direct interviews and coordination with experts, and how much, if any, is from independent research.) The company also acknowledges "known teen differences in risk perception, impulse control, peer influence, and emotional regulation" when compared to adults.

AI isn't always great at age prediction

The biggest risk with any of these age prediction models is that they'll sometimes get it wrong -- hallucination is an unfortunate habit AI models all share. That goes both ways: You don't want someone too young accessing inappropriate content in ChatGPT, but you also don't want someone older than 18 getting stuck with a limited account for no reason. If you experience the latter situation, OpenAI has a solution for you: direct age verification through Persona. This is the same third-party Roblox uses for its age verification, which hasn't gone very well thus far. That doesn't necessarily spell doom for OpenAI. Roblox tried overhauling its age verification system for a massive user base all used to a certain type of multiplayer experience, which led to users not being able to chat with other users in newly-assigned age categories, which were often incorrect. Meanwhile, ChatGPT's age prediction is only controlling the experience of one user at a time.
To that end, OpenAI will let you upload a selfie as an added verification step if the prediction model alone isn't enough. Interestingly, OpenAI doesn't say anything about the option to upload an ID for verification, which other companies, like Google, have provided. I'm not necessarily a fan of age prediction models, as I think they often sacrifice user privacy in the name of creating age-appropriate experiences. But there's little doubt that OpenAI has to do something to limit the full ChatGPT experience for younger users. Many of ChatGPT's users are under 18, and much of the content they experience is wildly inappropriate, whether it be instructions on getting high, or advice on writing suicide notes. In some tragic cases, minors have taken their own lives after discussions with ChatGPT, leading to lawsuits against OpenAI. I don't have any great answers here. We'll just have to see how this new age prediction model affects the user experience for minors and adults alike, and whether it actually manages to create a safer experience for younger, more impressionable users.

[15]

OpenAI forces safety filters on teens via behavioral age prediction

OpenAI introduced an AI-powered "age prediction" feature in ChatGPT to identify minors and apply content constraints. This development follows criticism regarding ChatGPT's impact on young users, including links to teen suicides and the chatbot's generation of inappropriate content. In April, the company addressed a bug that allowed its chatbot to generate erotica for users under 18. The new feature enhances existing protections by utilizing an AI algorithm that assesses behavioral and account-level signals to identify young users, OpenAI said in a Tuesday blog post. These signals typically include stated age, account longevity, and daily activity times. If the age prediction mechanism identifies an account as serving a user under 18, content filters designed to block discussions of sex, violence, and other problematic topics are automatically applied. Users mistakenly designated as underage can reestablish their "adult" account by submitting a selfie to OpenAI's ID verification partner, Persona, OpenAI said.

[16]

OpenAI rolls out age prediction technology for ChatGPT

OpenAI said Tuesday that it's rolling out age prediction technology for ChatGPT in the face of growing concerns about children's safety when using AI chatbots. The technology aims to estimate whether an account likely belongs to someone under 18 years old in order to ensure teens are using a version of ChatGPT with additional protections. It will rely on a "combination of behavioral and account-level signals," such as how long an account has existed, daily and longer term usage patterns and a user's stated age. "Deploying age prediction helps us learn which signals improve accuracy, and we use those learnings to continuously refine the model over time," OpenAI noted in a blog post Tuesday. Those under 18 years old will be directed to a version of ChatGPT that limits sensitive content, such as graphic violence, risky viral challenges, sexual or romantic role play, self-harm and extreme beauty standards or unhealthy dieting. "While these content restrictions help reduce teens' exposure to sensitive material, we are focused on continually improving these protections, especially to address attempts to bypass our safeguards," the company added. "When we are not confident about someone's age or have incomplete information, we default to a safer experience." Users who are incorrectly placed in the under-18 version of ChatGPT will be able to confirm their age using Persona, an identity verification software. The push comes as AI chatbots across the board are facing growing scrutiny over how they interact with and impact young users. The family of 16-year-old Adam Raine sued OpenAI in August, alleging ChatGPT encouraged their son to take his own life. Character.AI and Google, which are also facing several similar lawsuits, indicated in court filings earlier this month that they had reached settlements "in principle" with families in five cases.

[17]

This is why your ChatGPT account is suddenly age-restricted

* OpenAI now predicts user age from behavior and account signals, auto-restricting suspected under-18 accounts.
* Suspect accounts get prompted to verify via Persona; failing verification keeps features locked.
* Many users worry about the privacy of handing ID to Persona, though it encrypts data and limits retention.

If you've suddenly noticed ChatGPT refusing to cooperate, you're not alone. OpenAI is paying much closer attention to certain signals coming from your account to predict the age of the user; it could see some accounts accidentally caught up in the mix. Of course, any account OpenAI suspects is operated by someone younger than its terms of service allow will be suspended, but given that the whole system runs on AI predictions, get set for some age-restriction teething problems while the new system beds in.

OpenAI is predicting your age now

It probably always was

OpenAI is taking a hard stance on underage accounts accessing ChatGPT, specifically taking aim at allegations that its platform doesn't do enough to protect vulnerable people. OpenAI first mooted changes to its Model Spec in December 2025, explaining that it wanted ChatGPT to "provide a safe, age-appropriate experience for teens aged 13 to 17." Now, OpenAI says its updated age model profiling can better spot accounts that claim to be 18+, but in reality are teenagers who want adult access. The model looks at a combination of behavioral and account-level signals, including how long an account has existed, typical times of day when someone is active, usage patterns over time, and a user's stated age. OpenAI's new model will automatically prompt suspect accounts to verify their age through Persona. This is a secure identity verification service used on other platforms like LinkedIn and Coursera, comparing your data to known government ID checks, biometrics, and so on. Obviously, if you fail the age verification check, your account will remain restricted.
On top of that, OpenAI will automatically apply restrictions to accounts it suspects are operated by someone under 18, keeping in line with its "four guiding commitments" to protect teens from dangerous content. As OpenAI puts it: "We've implemented safeguards to guide the model to take extra care when discussing higher-risk areas, including self-harm and suicide, romantic or sexualized roleplay, graphic or explicit content, dangerous activities and substances, body image and disordered eating, and requests to keep secrets about unsafe behavior."

Caught out by age verification? Just verify your identity

But that feels problematic

I'm far from the only person who thinks handing over your identity to a third party feels like a privacy problem. It's a hard choice between verifying your ChatGPT account and trusting another service with your private data. Persona is a well-trusted verification tool that encrypts your data at rest with AES-256, making it extremely well-protected. Furthermore, Persona doesn't hold your data unless requested by the service provider. So, for example, Persona holds Roblox verifications for up to 30 days, while for Reddit, the number is much lower at around 7 days. So, while it is frustrating, there is at least comfort in the fact that Persona doesn't hold your data indefinitely. And if you're so inclined, or worried OpenAI will lock down your account, you can opt for ChatGPT's manual age verification to avoid accidental lockouts.

Ask how old ChatGPT thinks you are

This isn't actual advice

So, how do you check how old OpenAI thinks you are? Of course, I turned to ChatGPT and asked, "How old do you think I am?" Not that this is in any way useful; OpenAI will use a different system to identify underage accounts, not just ask ChatGPT for your age. Still, I was actually impressed with how accurate ChatGPT was, guessing my age to the dot.
Unerring, but I appreciate that my "tone feels more 'seasoned pro' than 'early-career hustle'." That doesn't actually solve the age-restriction problem (though it is fun), but it could point to whether you come across as a very young person. As said, this isn't actual advice, and the only way to actually unlock your account is through the verification system. The real question is what this means for the so-called "ChatGPT Adult Mode" OpenAI made reference to towards the end of 2025 -- is enhanced age-verification the next step in the process? There are more than a few ChatGPT subscribers hoping so!

[18]

OpenAI's Age Prediction System to Detect Underage Users Is Rolling Out

OpenAI partnered with Persona for the age verification process. OpenAI is finally rolling out its age verification system that was first announced in September 2025. The system is an artificial intelligence (AI) model that works behind the scenes across ChatGPT and collects "signals" from user activity to determine the age of the user. While the company does ask for the date of birth when an OpenAI account is first created, it is not a verified process, and the company says it wants to ensure that underage users get additional safety layers to protect them from potentially harmful interactions.

OpenAI's Age Verification System Is Here

In a blog post, the AI giant announced that its age verification system is now rolling out globally, except in the European Union (EU), where the rollout will occur in the coming weeks owing to regional requirements. Age verification is primarily aimed at teenagers and minors, who escape the system-level safety settings by adding a false date of birth. Notably, OpenAI's teen safety settings limit sensitive content and exposure to certain interactions. The age verification system, as explained by the ChatGPT-maker, processes user activity on every account and monitors signals around the topic of discussion and when the user is typically active to infer the real age of the user. The company acknowledges that the system is not perfect, and sometimes it can classify adult users as under the age of 18. However, whenever an account has been classified as under 18, OpenAI will add the non-removable safety settings by default. With the teen safety turned on, users will still be able to use ChatGPT to ask questions, create, and learn. However, the AI chatbot will not provide information about graphic violence or gore, viral challenges that can be risky or harmful, or sexual, romantic, or violent role play, nor will users see content that promotes extreme beauty standards, unhealthy dieting, or body shaming.
The company is also providing a way for adult users who have mistakenly been classified as minors to verify their age. This is being done in partnership with Persona, a third-party online identity verification platform. The verification process will require the user to capture and upload a live selfie and to upload a government-issued ID. "Persona checks your date of birth and that your selfie matches your ID photo," the post mentioned. After verification, if Persona confirms that the user is an adult, the restrictions are lifted. However, OpenAI says that there could be a short delay before the changes are applied. OpenAI mentioned that since the only things being verified are the photo, name, and date of birth on the ID, users can hide other information while uploading the document. The company also explained that since Persona handles the verification, OpenAI has no access to the submitted ID or selfie. Additionally, the ID and the selfie are deleted within seven days after verification. The ChatGPT-maker does have access to the signals and analysis shared by the age prediction system, but it claims that this data is stored securely and is only used as described in the company's privacy policy. OpenAI is also giving users the option to avoid the age verification screening on their account by voluntarily verifying their age. Interested users can initiate the process from ChatGPT's age verification page.

[19]

OpenAI rolls out age prediction on ChatGPT

OpenAI's CEO of applications, Fidji Simo, had in December said that she expects "adult mode" to debut within ChatGPT in the first quarter of 2026, following chief executive Sam Altman's comments about allowing mature content for users who verify their age. OpenAI on Tuesday said it is rolling out age prediction on ChatGPT globally, to determine whether an account is likely owned by a minor, as the artificial intelligence startup prepares to allow adult content on the popular chatbot. "When the age prediction model estimates that an account may belong to someone under 18, ChatGPT automatically applies additional protections designed to reduce exposure to sensitive content," OpenAI said in a statement. Users mistakenly placed in the under-18 experience will regain full access by submitting a selfie through Persona, an identity verification service. In the EU, the feature will be rolled out in the coming weeks. ChatGPT has 800 million weekly active users. OpenAI last week said it would start showing ads in the chatbot to some U.S. users, ramping up efforts to generate revenue. Microsoft-backed OpenAI's annualized revenue surpassed $20 billion in 2025, up from $6 billion in 2024, finance chief Sarah Friar said on Sunday, with growth closely tracking an expansion in computing capacity.

[20]

OpenAI Adds Age Prediction for ChatGPT Safety Controls

OpenAI has begun rolling out an age prediction feature across ChatGPT consumer plans to automatically estimate whether an account likely belongs to someone under 18, and to apply age-appropriate protections as part of its broader safety strategy. According to OpenAI, the age prediction system works by analysing a combination of behavioural and account-level signals, such as the length of an account's existence, typical activity times, usage patterns over time, and any stated age, to help indicate whether the account may belong to a minor. At the same time, the company says the model is designed to be refined over time as more signals are learned and accuracy improves. If the system estimates that an account belongs to someone under 18, ChatGPT automatically applies additional safeguards to reduce exposure to sensitive content. These include restrictions on graphic violence or gory material, viral challenges that could encourage risky behaviour, sexual, romantic, or violent role play, depictions of self-harm, and content that promotes extreme beauty standards or unhealthy dieting. However, users whom the system wrongly places in the under-18 experience can dispute the decision and restore full access by verifying their age through Persona, a secure identity verification service, using a selfie. Notably, this decision comes after a wrongful death lawsuit in the United States linked to the death of 16-year-old Adam Raine, whose parents alleged ChatGPT failed to prevent or appropriately intervene in his prolonged discussions of self-harm and suicide with the chatbot before he took his own life in April 2025. After the Raine family's suit, OpenAI added stronger parental controls and age-based safety features, enabling guardians to monitor teen accounts, receive alerts if the system detects the child is in severe distress, and enforce blackout hours.
Age verification systems still struggle to deliver exact precision in real-world applications, with industry experts and platform deployments underscoring key limitations. In an interview with MediaNama, Bureau Chief Technology Officer (CTO) Sandesh GS explained that many current technologies are better at identifying broad age bands than precise ages, saying that "if you want a band, it works reasonably well, but when we want a point in time, it does not work as well." He added that models simply are not calibrated for "decimal-level accuracy," meaning systems can sometimes group a user into a general age bracket yet misestimate their specific age. This limitation is visible in large-scale platform rollouts. Roblox, one of the world's most popular gaming platforms, now requires users to complete age checks, typically through facial age estimation using Persona or ID-based verification, before accessing chat features. The platform then places users into specific age groups, allowing them to interact in-game within those groups. Although the company says age estimation models achieve a mean absolute error of about 1.4 years for minors in testing, the practical impact of that variance can be significant for safety and access controls. Furthermore, Roblox's AI-powered system has delivered mixed outcomes, with the platform misidentifying users into incorrect age categories and disrupting access to chat functionality. Critics online have said that the system has produced flawed age assignments, placing adults with children and vice versa. Experts have flagged practical and contextual challenges in India for any AI-based age estimation tool. While discussing YouTube's AI age verification mechanism in the United States, researchers and experts highlighted several India-specific issues to MediaNama. 
Chitra Iyer, co-founder and CEO of social impact consulting firm Space2Grow, said that "verifying age on platforms is critical, and it (YouTube's AI-based age verification model) is a great move, but how it is contextualised for India is critical." She explained that "digital behaviour patterns are very culturally unique. A system trained for children in the US may not work in the Indian context." Iyer further warned that "there will always be algorithmic bias and false positives. The system may misclassify adults or overlook minors using shared devices." Importantly, she cited research showing that 99% of children in the remotest parts of Araria, Bihar, are online, yet 50% of them use shared devices, which could confound behavioural signals. Beyond age prediction itself, Iyer stressed that "age verification is not the only thing to keep children safe on platforms." She argued for a more proactive "safety-by-design" approach, with immediate access to moderators and content managers in cases of risk and harm. "Platforms having more AI or algorithmic approaches to remove harmful content would be a far more impactful way to ensure risks and harms are reduced," she said. Iyer added that a safety-by-design approach, with stronger involvement from product teams, parents, and children themselves, is critical to addressing online harm more holistically. OpenAI's decision to roll out age prediction on ChatGPT consumer plans matters because it marks a shift towards automated, platform-level child safety enforcement at a time when generative AI tools are becoming deeply embedded in everyday digital life. As ChatGPT becomes a default interface for search, learning, entertainment, and companionship, the question is no longer whether children will use these systems, but how platforms can meaningfully protect them at scale. However, the technology relies on probabilistic signals and behavioural inference, not certainty. 
As Bureau CTO Sandesh GS points out, current systems work better for broad age bands than precise age determination, meaning misclassification remains a real risk. That matters because a wrongly flagged adult could face unnecessary restrictions, while a missed minor could still access harmful content. The experience of platforms such as Roblox underlines these stakes. Even with facial age estimation and identity checks, platforms have placed users in the wrong age groups, disrupting access and, in some cases, allowing adults and children to share the same digital spaces. In safety-critical environments, small error margins can translate into large real-world consequences. In India, the challenge becomes sharper. With widespread shared device use, culturally distinct digital behaviour, and uneven access to identity infrastructure, algorithmic age prediction risks amplifying bias and exclusion. Age checks alone will not keep children safe. What matters is whether platforms adopt a genuine safety-by-design approach, combining moderation, product design, and education. In that context, OpenAI's rollout is not just a product update, it is a test case for how responsibly AI platforms will govern the next generation of users.

[21]

ChatGPT Can Now Predict Your Age: Check How it Works

The feature enables automatic safety protections but also raises concerns around privacy, transparency, and misclassification. Artificial intelligence platforms are no longer used only by adults. People of all age groups, including teenagers, are increasingly using them. Although many AI platforms help users gather information, understand concepts, and draft essays or theses, hardly any can match the user base of OpenAI's ChatGPT. As AI tools like ChatGPT become more mainstream, age-appropriate interaction has become a necessity. OpenAI has responded by rolling out an age prediction feature, designed to identify teenagers and other users under 18 and automatically apply safety protections. The new system reflects OpenAI's broader effort to balance accessibility with responsibility. While ChatGPT remains available to everyone, its responses now vary depending on the system's estimate of a user's age.

[22]

OpenAI introduces age verification system to enhance child safety By Investing.com

Investing.com -- OpenAI is rolling out a new age prediction system for ChatGPT consumer plans to identify users under 18 and apply appropriate safeguards. The system examines behavioral patterns and account information, including account age, typical usage times, and the user's stated age to determine if an account likely belongs to someone under 18 years old. When the system identifies a potential minor, ChatGPT automatically applies additional protections designed to limit exposure to sensitive content such as graphic violence, viral challenges that could encourage harmful behavior, sexual or violent role play, depictions of self-harm, and content promoting extreme beauty standards. Users who are incorrectly identified as minors can verify their age by submitting a selfie through Persona, a secure identity-verification service. This process can be initiated through the account settings menu. The company stated that these protections are guided by expert input and academic literature on child development, acknowledging differences in how teens perceive risk, control impulses, respond to peer influence, and regulate emotions. Parents can further customize their teen's experience through parental controls, including setting quiet hours, controlling features like memory or model training, and receiving notifications if signs of acute distress are detected. OpenAI plans to roll out age prediction in the European Union in the coming weeks to account for regional requirements. The company is working with organizations including the American Psychological Association, ConnectSafely, and Global Physicians Network as it continues to develop teen safety measures. This article was generated with the support of AI and reviewed by an editor. For more information see our T&C.

[23]

OpenAI rolls out age prediction on ChatGPT

Jan 20 (Reuters) - OpenAI on Tuesday said it is rolling out age prediction on ChatGPT globally, to determine whether an account is likely owned by a minor, as the artificial intelligence startup prepares to allow adult content on the popular chatbot. "When the age prediction model estimates that an account may belong to someone under 18, ChatGPT automatically applies additional protections designed to reduce exposure to sensitive content," OpenAI said in a statement. Users mistakenly placed in the under-18 experience will regain full access by submitting a selfie through Persona, an identity verification service. In the EU, the feature will be rolled out in the coming weeks. OpenAI's CEO of applications, Fidji Simo, had in December said that she expects "adult mode" to debut within ChatGPT in the first quarter of 2026, following chief executive Sam Altman's comments about allowing mature content for users who verify their age. ChatGPT has 800 million weekly active users. OpenAI last week said it would start showing ads in the chatbot to some U.S. users, ramping up efforts to generate revenue. Microsoft-backed OpenAI's annualized revenue surpassed $20 billion in 2025, up from $6 billion in 2024, finance chief Sarah Friar said on Sunday, with growth closely tracking an expansion in computing capacity. (Reporting by Juby Babu in Mexico City; Editing by Sahal Muhammed)

[24]

ChatGPT can now predict your age: Here's how it works

If ChatGPT believes an account may belong to someone under 18, it will automatically apply extra safeguards. OpenAI has started rolling out a new age prediction system on ChatGPT. The goal is simple: to better understand whether an account likely belongs to someone under 18, so the platform can provide the right protections for teens while allowing adults to use ChatGPT more freely. Until now, ChatGPT mainly relied on the age users entered when signing up. Teens who said they were under 18 automatically received extra safety features. The new age prediction system adds another layer of protection, especially for cases where a user's age may be unclear or inaccurate. The age prediction model considers how long an account has existed, typical times of use, usage patterns over time, and the age a user has stated. By combining these signals, the system estimates whether an account is likely run by a minor. OpenAI says these signals will help improve accuracy as the system learns over time. If ChatGPT believes an account may belong to someone under 18, it will automatically apply extra safeguards. These are designed to limit exposure to content that may not be appropriate for teens. Restricted content includes graphic violence, sexual or violent role play, risky viral challenges, depictions of self-harm, and content that promotes extreme beauty standards or unhealthy dieting. These choices are based on research about child and teen development. Studies show that teens differ from adults in areas like risk-taking, impulse control, emotional regulation, and peer influence. OpenAI also acknowledges that mistakes can happen. If an adult is incorrectly placed into the under-18 experience, they can easily restore full access.
This is done through a simple age confirmation process using a selfie through Persona, a secure identity verification service. The age prediction feature is rolling out globally, with the European Union following in the coming weeks due to regional requirements.

OpenAI has activated an age prediction system across ChatGPT that uses behavioral signals to identify users under 18 and automatically apply content filters. The move follows lawsuits linking teen suicides to the chatbot and mounting pressure over user safety for minors. Adults incorrectly identified as underage can verify their age with a selfie through OpenAI's partner Persona.

ChatGPT Rolls Out Age Prediction to Protect Young Users

OpenAI has begun deploying an AI-powered age prediction model across ChatGPT consumer accounts in a significant effort to identify minors and shield them from sensitive or harmful content [1]. The system analyzes behavioral signals including how long an account has existed, typical times of day when someone is active, usage patterns over time, and a user's stated age to determine whether an account likely belongs to someone under 18 [2]. Once activated, the technology automatically applies content restrictions designed to reduce exposure to graphic violence, depictions of self-harm, viral challenges that could push risky behavior, and content promoting extreme beauty standards or body shaming [3].
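To make the idea of combining account-level signals concrete, here is a minimal sketch in Python. It is not OpenAI's model, whose features, weights, and thresholds have not been published. The `AccountSignals` fields, the weights, and the 0.5 threshold are all hypothetical values chosen purely for illustration. The sketch only shows how several weak signals can be folded into one probability and then compared against a cutoff.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class AccountSignals:
    """Hypothetical inputs, loosely named after the signals the reports mention."""
    stated_age: Optional[int]   # age the user entered, if any
    account_age_days: int       # how long the account has existed
    evening_share: float        # fraction of activity in the evening (0.0 to 1.0)


# Illustrative weights only; they are assumptions, not published values.
BIAS = -1.0
W_STATED_MINOR = 3.0
W_NEW_ACCOUNT = 0.8
W_EVENING = 0.5


def minor_probability(s: AccountSignals) -> float:
    """Combine the signals with a logistic function into a score in (0, 1)."""
    z = BIAS
    if s.stated_age is not None and s.stated_age < 18:
        z += W_STATED_MINOR
    if s.account_age_days < 90:
        z += W_NEW_ACCOUNT
    z += W_EVENING * s.evening_share
    return 1.0 / (1.0 + math.exp(-z))


def apply_teen_safeguards(s: AccountSignals, threshold: float = 0.5) -> bool:
    """Return True when the estimated probability meets the (assumed) threshold."""
    return minor_probability(s) >= threshold


if __name__ == "__main__":
    teen = AccountSignals(stated_age=15, account_age_days=30, evening_share=0.5)
    adult = AccountSignals(stated_age=35, account_age_days=2000, evening_share=0.0)
    print(apply_teen_safeguards(teen), apply_teen_safeguards(adult))
```

Because the output is a probability rather than a certainty, where the threshold sits determines the trade-off between wrongly restricting adults and missing minors, which is why a fallback such as verification through Persona matters.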

Source: MakeUseOf

Mounting Pressure Over Teen Safety and Lawsuits

The rollout comes amid intense scrutiny of OpenAI following multiple lawsuits and investigations related to teen suicides linked to ChatGPT [2]. The company has faced criticism for allowing the chatbot to discuss sexual topics with young users, and last April was forced to address a bug that generated erotica for users under 18 [1]. During a September 2025 Senate subcommittee hearing titled "Examining the Harm of AI Chatbots," testimony revealed that over half of all US adolescents over age 13 now use generative AI [4]. The Federal Trade Commission has also launched an inquiry into AI systems used as companions, with particular emphasis on teen safety concerns [3].

Source: MediaNama

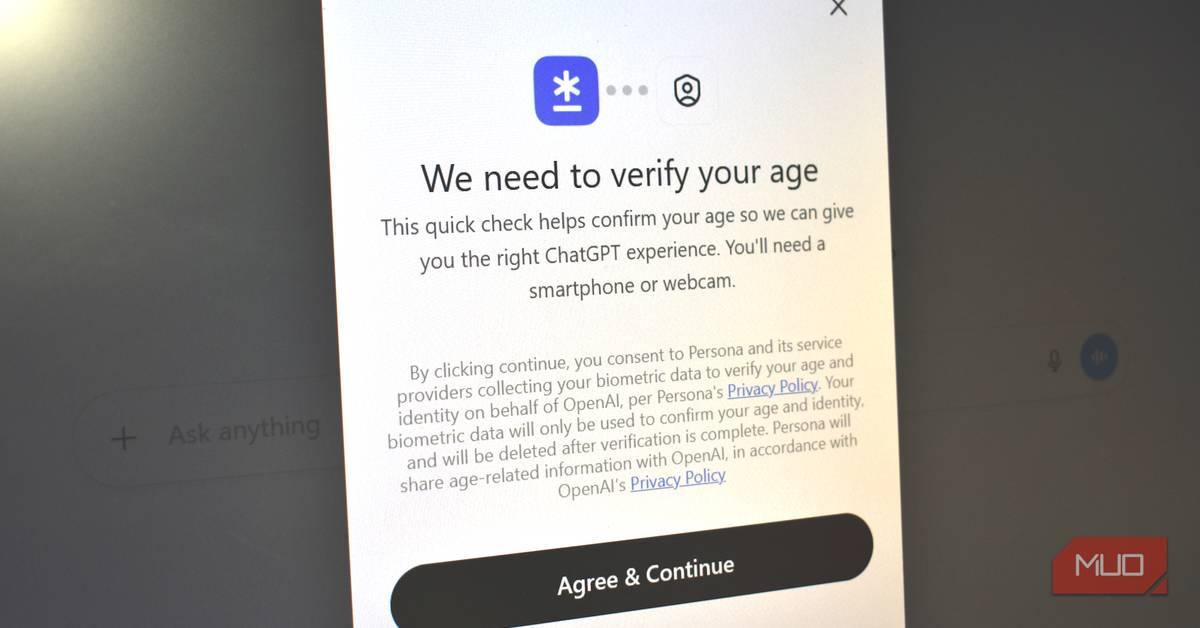

How Age Verification Works for Users Incorrectly Identified as Underage

OpenAI acknowledges that no system is perfect and that the age prediction technology won't always get it right [4]. Adults who are incorrectly identified as underage can verify their age with a selfie or government-issued ID through Persona, OpenAI's identity verification partner [5]. The process requires either a live selfie taken with a phone or webcam, or an uploaded photo of government-issued identification such as a driver's license or passport [5]. Persona deletes ID photos and selfies within seven days after age verification is complete [5]. The company did not disclose an accuracy rate but stated plans to refine the model over time [3].

Content Moderation and Parental Control Systems Expand

The age prediction feature builds on parental control systems OpenAI previously introduced, which allow parents to link accounts with their teens, set quiet hours when the chatbot can't be used, and disable voice mode and image creation [3]. OpenAI worked with the American Psychological Association to ground its approach in academic literature about child development, acknowledging known teen differences in risk perception, impulse control, peer influence, and emotional regulation [3]. The enhanced content moderation filters out sexual, romantic, or violent roleplaying scenarios for identified minors [2].

Regulatory Pressures and Questions About User Privacy

Age gating has become a broader theme across online platforms, driven by laws proposed or enacted in various countries and US states [2]. Australia recently imposed a sweeping ban on social media for children under 16, while gaming platform Roblox instituted mandatory age checks earlier this month. However, advocacy organizations remain skeptical about the effectiveness of such systems. Australia's Age Assurance Technology Trial found that age verification systems estimated ages to within 18 months only 85 percent of the time, with lower accuracy for older adults, non-Caucasian users, and female-presenting individuals [4]. Mozilla has raised concerns about fundamental tensions around effectiveness, accessibility, user privacy, and security [4]. Critics like Mark Cuban have questioned whether parents will trust ChatGPT's age-gating system, noting potential scenarios where 18-year-old high school seniors could expose younger students to inappropriate content [3]. The global rollout is underway, with European deployment beginning in the coming weeks [3].

Source: Digit

Summarized by Navi
