Suicide Pod Creator Philip Nitschke Adds AI Mental Fitness Test to Controversial Device

4 Sources

[1]

Controversial Swiss Suicide Pod Gets an AI-Powered Mental Fitness Upgrade

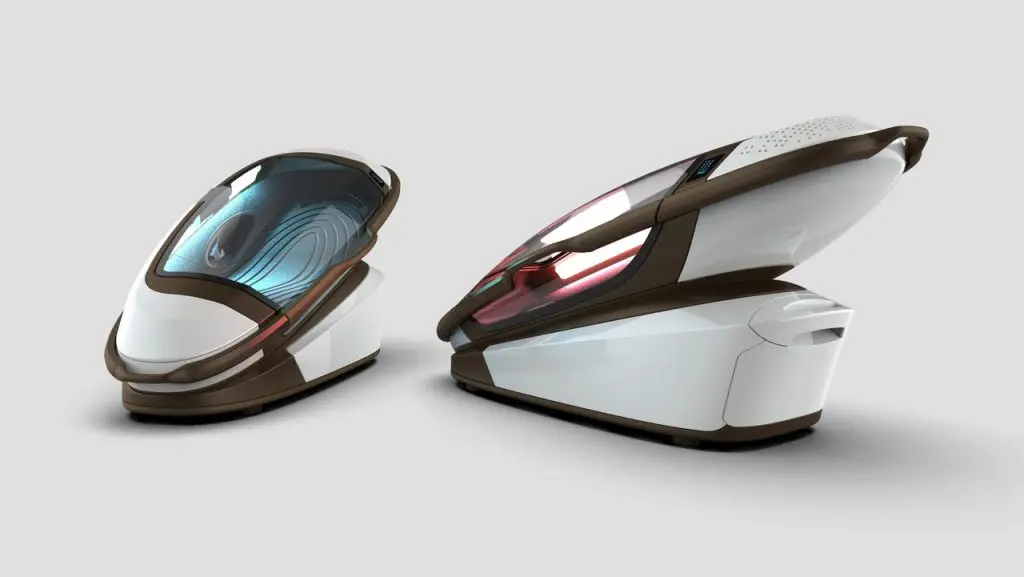

There have been many high-profile stories in which chatbots have effectively encouraged and enabled people experiencing mental health crises to kill themselves, which has resulted in several wrongful death lawsuits against the companies responsible for the AI models behind the bots. Now we've got the inverse: if you want to exercise your right to die, you have to convince an AI that you are mentally capable of making such a decision. According to Futurism, the creator of a controversial assisted-suicide device known as the Sarco has introduced a psychiatric test administered by AI to determine whether a person is of sound enough mind to decide to end their life. If the AI deems them of sound mind, the suicide pod will be powered on, and they will have up to 24 hours to decide whether to move forward to their final destination. If they miss the window, they'll have to start over. The Sarco had already stirred up quite a bit of controversy before the AI mental fitness test was introduced. Named after the sarcophagus by inventor Philip Nitschke, the Sarco was built in 2019 and used for the first time in 2024, when a 64-year-old American woman who had been suffering from complications associated with a severely compromised immune system underwent self-administered euthanasia in Switzerland, where assisted suicide is technically legal. Because the AI assessment wasn't ready at the time, she reportedly underwent a traditional psychiatric evaluation conducted by a Dutch psychiatrist before she pressed a button that released nitrogen into the capsule and ended her life. The use of the Sarco, however, resulted in the arrest of Dr. Florian Willet, a pro-assisted suicide advocate who was present for the woman's death. Swiss law enforcement arrested Willet on suspicion of aiding and abetting a suicide. 
Under the country's laws, assisted suicide is allowed as long as the person takes their own life with no "external assistance," and those who help the person die must not do so for "any self-serving motive." Dr. Willet would later die by assisted suicide in Germany in 2025, reportedly in part due to the psychological trauma he experienced following his arrest and detention. It's unclear whether Willet was evaluated using the new AI assessment, but Nitschke will apparently include the new test in his latest version of the Sarco, which he designed for couples, according to the Daily Mail. The "Double Dutch" model will evaluate both partners and allow them to enter a conjoined pod so they can pass on to the next life while lying next to each other. The whole thing does raise a question, though: why do you need AI for this? They were able to find a psychiatrist for the one use of the pod thus far, and it's not like they're doing this at such a volume that they need to pass the assessment off to AI to expedite the process. Whatever your stance on assisted suicide may be, choosing an AI test over a human assessment feels like it undermines the dignity of choosing to die. A person at the end of their life deserves to be taken seriously and receive human consideration, not pass a CAPTCHA.

[2]

Inventor Building AI-Powered Suicide Chamber

The inventor of a controversial suicide pod is making sure his device keeps up with the times by augmenting it with AI tech -- which, we regret to inform you, is not merely some sort of dark joke. "One of the parts to the device which hadn't been finished, but is now finished, is the artificial intelligence," the inventor, Philip Nitschke, told the Daily Mail in a new interview. Named the Sarco pod after the ancient sarcophagus, the euthanasia chamber, first built in 2019, has been championed by the pro-assisted dying organization The Last Resort. In 2024, it was used to facilitate the suicide of a 64-year-old woman in Switzerland. The 3D-printed pod is activated when the person seeking to take their own life presses a button, filling the sealed, futuristic-looking coffin with nitrogen that causes the user to lose consciousness and "peacefully" pass away within a few minutes. To date, the woman's death in Switzerland is the only case of the Sarco pod seeing real-world action. Soon after she died, Swiss authorities showed up at the sylvan cabin where the pod was located and arrested the late Florian Willet, then co-president of The Last Resort, who was supervising her death, on suspicion of aiding and abetting a suicide. He was ultimately released two months later. Assisted dying is technically legal in Switzerland, but only if the person seeking suicide is deemed to have the mental capacity to make the decision, and only if they carry out the suicide themselves, rather than a third party. That last bit is why the patient presses the button to activate the chamber, a workaround that stands on legally shaky ground as it is (hence Willet's arrest). Even more contestable is determining whether the patient is capable of making their mortal decision -- which, of course, is where AI enters the picture. 
As Nitschke was designing a "Double Dutch" version of the Sarco pod that would allow couples to die together, he stumbled on the idea of using AI to administer a psychiatric "test" to determine their mental capacity. If they pass the AI's judgment, it activates the "power to switch on the Sarco." "That part wasn't working when we first used the device," Nitschke told the Daily Mail, referring to the 64-year-old woman's death. "Traditionally, that's done by talking to a psychiatrist for five minutes, and we did that," he explained. "She had a rather traditional assessment of mental capacity through a Dutch psychiatrist." "But with the new Double Dutch, we'll have the software incorporated," Nitschke continued, "so you'll have to do your little test online with an avatar, and if you pass that test, then the avatar tells you you've got mental capacity." Passing the test will power on the Sarco for the next 24 hours, during which a person or couple can climb in and press the button to end it all. If they miss the day-long window, they'll have to take the AI-administered test all over again. How this will actually work in practice is as questionable as the promises of AI itself. AI models are prone to hallucinating, are alarmingly sycophantic, and have frequently failed when deployed in medical scenarios. Entrusting the tech to give the go-ahead to someone killing themselves -- especially as AI chatbots are under the microscope for seemingly encouraging many adults and teenagers to take their own lives in cases of so-called AI psychosis -- sounds like an ethical disaster waiting to happen.

[3]

Inventor of the 'suicide pod' says AI should decide who can end life

The inventor of the controversial Sarco suicide pod says AI software could one day replace psychiatrists in assessing mental capacity for those seeking assisted dying. Philip Nitschke has spent more than three decades arguing that the right to die should belong to people, not doctors. Now, the Australian euthanasia campaigner behind the controversial Sarco pod - a 3D-printed capsule designed to allow a person to end their own life using nitrogen gas - says he believes artificial intelligence should replace psychiatrists in deciding who has the "mental capacity" to end their life. "We don't think doctors should be running around giving you permission or not to die," Nitschke told Euronews Next. "It should be your decision if you're of sound mind." The proposal has reignited debate about assisted dying and whether AI should ever be trusted with decisions as significant as life and death.

'Suicide is a human right'

Nitschke, a physician and the founder of the euthanasia non-profit Exit International, first became involved in assisted dying in the mid-1990s, when Australia's Northern Territory briefly legalised voluntary euthanasia for terminally ill patients. "I got involved 30-odd years ago when the world's first law came in," he said. "I thought it was a good idea." He made history in 1996 as the first doctor to legally administer a voluntary lethal injection, using a self-built machine that enabled Bob Dent, a man dying of prostate cancer, to activate the drugs by pressing a button on a laptop beside his bed. However, the law was short-lived and was repealed amid opposition from medical bodies and religious groups. The backlash, Nitschke says, was formative for him. "It did occur to me that if I was sick - or for that matter, even if I wasn't sick - I should be the one who controls the time and manner of my death," he says. "I couldn't see why that should be restricted, and certainly why it should be illegal to receive assistance, given that suicide itself is not a crime." Over time, his position hardened. What began as support for physician-assisted dying evolved into a broader belief that "the end of one's life by oneself is a human right," regardless of illness or medical oversight.

From plastic bags to pods

The Sarco pod, named after the sarcophagus, grew out of Nitschke's work with people seeking to die in jurisdictions where assisted dying is illegal. Many, he says, were already using nitrogen gas - often with a plastic bag - to asphyxiate themselves. "That works very effectively," he said. "But people don't like it. They don't like the idea of a plastic bag. Many would say, 'I don't want to die looking like that.'" The Sarco pod was designed as a more dignified alternative: a 3D-printed capsule, shaped like a small futuristic vehicle, which floods with nitrogen when the user presses a button. Its spaceship-like appearance was an intentional design choice. "Let's make it look like a vehicle," he recalls telling the designer. "Like you're going somewhere. You're leaving this planet, or whatever." The decision to make Sarco 3D-printable, costing a reported $15,000 (€12,800) to manufacture, was also strategic. "If I actually give you something material, that's assisting suicide," he said. "But I can give away the program. That's information."

Legal trouble in Switzerland

Sarco's first and only use in Switzerland in September 2024 triggered an international outcry. Police arrested several people, including Florian Willet, CEO of the assisted dying organisation The Last Resort, and opened criminal proceedings for aiding and abetting suicide. Swiss authorities later said the pod was incompatible with Swiss law. Willet was released from custody in December. Soon after, in May 2025, he died by assisted suicide in Germany.
Swiss prosecutors have yet to determine whether charges will be laid over the Sarco case. The original device remains seized, though Nitschke says a new version - including a so-called "Double Dutch" pod designed for two people to die together - is already being built.

An AI assessment of mental capacity

Adding to the controversy is Nitschke's vision of incorporating artificial intelligence into the device. Under assisted dying laws worldwide, a person must be judged to have mental capacity - a determination typically made by psychiatrists. Nitschke believes that the process is deeply inconsistent. "I've seen plenty of cases where the same patient, seeing three different psychiatrists, gets four different answers," he said. "There is a real question about what this assessment of this nebulous quality actually is." His proposed alternative is an AI system which uses a conversational avatar to evaluate capacity. "You sit there and talk about the issues that the avatar wants to talk to you about," he said. "And the avatar will then decide whether or not it thinks you've got capacity." If the AI determines you are of sound mind, the suicide pod will be activated, giving you a 24-hour window to decide whether to proceed with the process. If that window expires, the AI test must begin again. Early versions of the software are already functioning, Nitschke says, though they have not been independently validated. For now, he hopes to run the AI assessments alongside psychiatric reviews. "Whether it's as good as a psychiatrist, whether it's got any biases built into it - we know AI assessments have involved bias," he says. "We can do what we can to eliminate that."

Can AI be trusted?

Psychiatrists remain sceptical. "I don't think I found a single one who thought it was a good idea," Nitschke added. 
Critics warn that these systems risk interpreting emotional distress as informed consent, and raise concerns about how transparent, accountable or ethical it is to hand life-and-death decisions to an algorithm. "This clearly ignores the fact that technology itself is never neutral: It is developed, tested, deployed, and used by human beings, and in the case of so-called Artificial Intelligence systems, typically relies on data of the past," said Angela Müller, policy and advocacy lead at Algorithmwatch, a non-profit organisation that researches the impact of automation technologies. "Relying on them, I fear, would rather undermine than enhance our autonomy, since the way they reach their decisions will not only be a black box to us but may also cement existing inequalities and biases," she told Euronews in 2021. These concerns are heightened by a growing number of high-profile cases involving AI chatbots and vulnerable users. For example, last year, the parents of 16-year-old Adam Raine filed a lawsuit against OpenAI following their son's death by suicide, alleging that he had spent months confiding in ChatGPT. According to the claim, the chatbot failed to intervene when he discussed self-harm, did not encourage him to seek help, and at times provided information related to suicide methods - even offering to help draft a suicide note. But Nitschke believes that in this context, AI could offer something closer to neutrality than a human psychiatrist. "Psychiatrists bring their own preconceived ideas," he said. "They convey that pretty well through their assessment of capacity." "If you're an adult, and you've got mental capacity, and you want to die, I would argue you've got every right to have the means for a peaceful and reliable elective death," he said. Whether regulators will ever accept such a system remains unclear. Even in Switzerland, one of the world's most permissive jurisdictions, authorities have pushed back hard against Sarco.

[4]

Controversial 'suicide pod' inventor reveals new AI-powered...

A year after a controversial assisted-dying device was used for the first time, its inventor says he is preparing a new version designed for two. The Sarco pod, a 3D-printed capsule that allows a user to trigger a nitrogen release, entered the global spotlight in 2024 after a 64-year-old American woman used it in Switzerland. The episode quickly spiraled into a police investigation, with authorities seizing the device and detaining those present before later ruling out intentional homicide. Now, Philip Nitschke, the Australian-born physician behind Sarco, says development is underway on a larger, AI-enabled model built specifically for couples who want to die together. "I'm not suggesting everyone's going to race forward and say: 'Boy, I really want to climb into one of those things,'" Nitschke told the Daily Mail. "But some people do." Nitschke said interest has already come from couples, including one from Britain who told him they wanted to "die in each other's arms." The new design, sometimes referred to as the "Double Dutch" Sarco, would be large enough for two people and require a synchronized decision: both occupants must press their buttons at the same time or the device will not activate. The original Sarco capsule works by flooding its chamber with nitrogen, rendering the occupant unconscious within seconds and causing death shortly afterward. Nitschke said the woman who used the pod last year pressed the button almost immediately, explaining that she "really wanted to die" in the device and had read about it beforehand. Beyond its size, the next iteration introduces a feature Nitschke says was unfinished during the first use: artificial intelligence designed to assess mental capacity. "One of the parts to the device which hadn't been finished, but is now finished, is the artificial intelligence," he said. Instead of a traditional psychiatric evaluation, future users would complete an online test administered by an AI avatar. 
"Traditionally, that's done by talking to a psychiatrist for five minutes, and we did that," Nitschke said of the first case. "But with the new Double Dutch, we'll have the software incorporated, so you'll have to do your little test online with an avatar, and if you pass that test, then the avatar tells you you've got mental capacity." Passing the test would power the pod for a 24-hour window. After that, the assessment would need to be taken again. Nitschke said most components of the dual pod have already been produced and that the device could be assembled within months. Even so, its future hinges on Swiss authorities, who have yet to approve the technology. On the day the Sarco was first used, Switzerland's interior minister, Elisabeth Baume-Schneider, said the device was "not legal," a statement that helped trigger the initial investigation. That inquiry ultimately ended without charges, but the debate over assisted dying, and the machines built to facilitate it, is far from settled. If you are struggling with suicidal thoughts or are experiencing a mental health crisis and live in New York City, you can call 1-888-NYC-WELL for free and confidential crisis counseling. If you live outside the five boroughs, you can dial the 24/7 National Suicide Prevention hotline at 988 or go to SuicidePreventionLifeline.org.

Philip Nitschke, inventor of the Sarco suicide pod, has introduced an AI-powered mental fitness test to determine whether users have the mental capacity to end their lives. In a new "Double Dutch" version of the controversial assisted-suicide device, designed for couples and now in development, users will have to pass an AI assessment administered by an avatar before the pod can be activated.

Philip Nitschke Introduces AI-Powered Mental Fitness Test to Suicide Pod

Philip Nitschke, the Australian physician behind the controversial Sarco suicide pod, says he has completed development of an AI-powered mental fitness test designed to assess whether individuals possess the mental capacity to end their lives[1]. The AI system uses a conversational avatar to evaluate users through an online assessment. Nitschke argues it could replace psychiatric evaluations, which he describes as inconsistent, though the software has not been independently validated and for now he hopes to run it alongside psychiatric reviews[3]. If users pass the test, the assisted-suicide device powers on for a 24-hour window, after which they must retake the assessment[2].

Source: Euronews

The Sarco pod, named after the ancient sarcophagus, is a 3D-printed capsule that releases nitrogen gas when the user presses a button[4]. The device saw its first real-world use in September 2024, when a 64-year-old American woman suffering from complications related to a severely compromised immune system died in Switzerland[1][3]. Because the AI assessment wasn't ready at the time, she underwent a traditional psychiatric evaluation conducted by a Dutch psychiatrist[2].

Legal Controversy Surrounds Assisted Dying Device in Switzerland

The first use of the Sarco triggered immediate legal controversy in Switzerland, where assisted dying is technically legal but subject to strict conditions[3]. Swiss authorities arrested Dr. Florian Willet, co-president of The Last Resort organization, on suspicion of aiding and abetting a suicide[2]. Under Swiss law, assisted suicide is permitted only if the person takes their own life with no external assistance and those who help do not act from any self-serving motive[1]. Willet was released about two months later[2]. Euronews reports that Swiss prosecutors have yet to determine whether charges will be filed[3], while another report says the inquiry ended without charges[4]. Tragically, Willet died by assisted suicide in Germany in May 2025, reportedly due in part to psychological trauma from his arrest and detention[1][3].

Double Dutch Sarco Designed for Couples Seeking Euthanasia Together

Nitschke is now developing a larger version called the Double Dutch Sarco, designed specifically for couples who want to die together[4]. The new model will incorporate the AI software and require both occupants to press their buttons simultaneously for the device to activate[4]. Nitschke told the Daily Mail that interest has already come from couples, including one from Britain who said they wanted to "die in each other's arms"[4]. Most components of the dual pod have already been produced, and the device could be assembled within months, though its future depends on approval from Swiss authorities[4].

Source: New York Post

Ethical Concerns About Using AI to Replace Psychiatrists in Life-or-Death Decisions

The decision to use AI for mental capacity assessment raises significant ethical concerns about entrusting artificial intelligence with life-or-death determinations[2]. AI models are prone to hallucinating, are alarmingly sycophantic, and have frequently failed when deployed in medical scenarios[2]. The timing is particularly controversial given recent cases in which AI chatbots have seemingly encouraged adults and teenagers to take their own lives, in instances of so-called AI psychosis that have resulted in several wrongful death lawsuits[1][2]. Nitschke defends the approach by arguing that psychiatric assessments are deeply inconsistent, claiming he has "seen plenty of cases where the same patient, seeing three different psychiatrists, gets four different answers"[3]. His broader philosophy holds that "the end of one's life by oneself is a human right" and that individuals, not doctors, should control the time and manner of their death[3]. The 3D-printed capsule, which reportedly costs about $15,000 to manufacture, was conceived as a more dignified alternative to nitrogen asphyxiation with a plastic bag and was intentionally designed to look like a futuristic vehicle[3].

Source: Futurism

Summarized by

Navi
