DataPelago Emerges from Stealth with Universal Data Processing Engine, Raising $47M

3 Sources

[1]

Startup DataPelago Exits Stealth, Debuts Universal Data Processing Engine For 'Accelerated Computing' Tasks

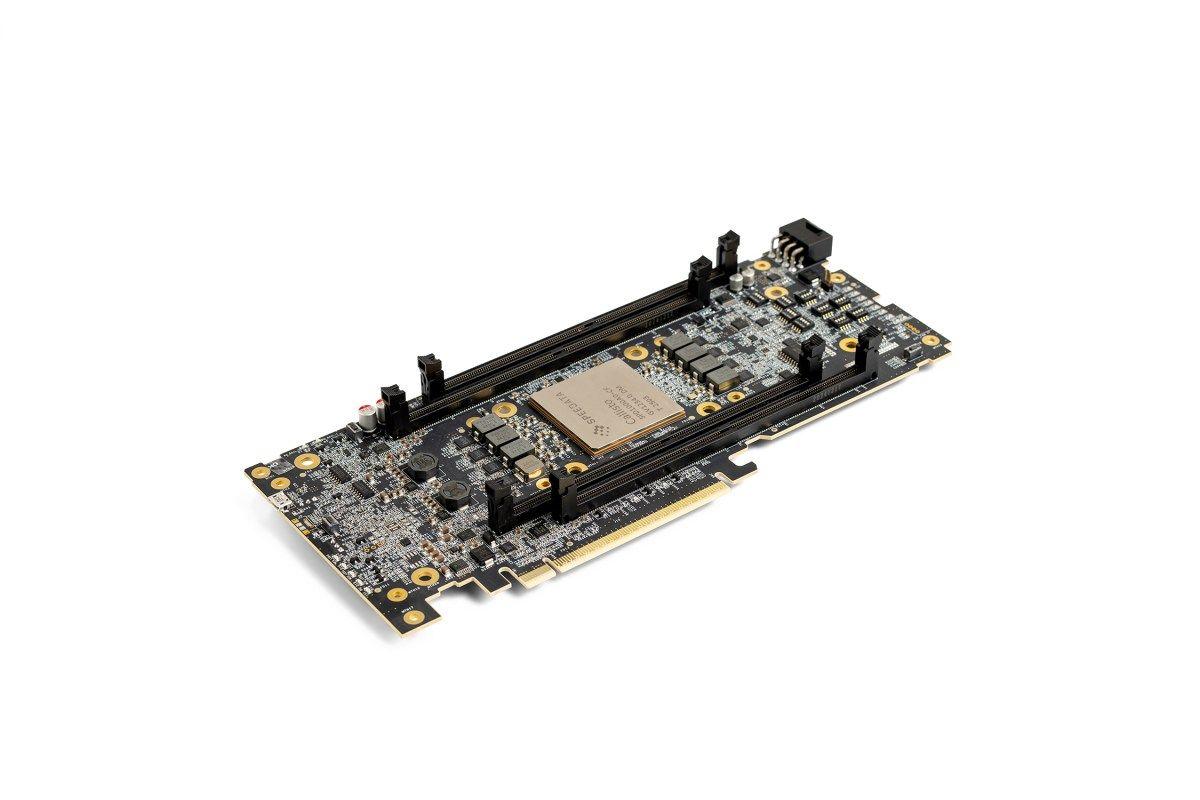

DataPelago says its new technology provides a data processing boost for advanced analytics and AI applications that require huge volumes of complex, structured and unstructured data.

Startup DataPelago exited stealth today, unveiling what the company describes as the world's first "universal data processing engine" that can handle the complexity and volume of today's data for "accelerated computing" analytical and artificial intelligence workloads.

DataPelago, founded in 2021 and based in Mountain View, Calif., also said it had cumulatively raised $47 million in seed and Series A funding from investors Eclipse, Taiwania Capital, Qualcomm Ventures, Alter Venture Partners, Nautilus Venture Partners and Silicon Valley Bank (a division of First Citizens Bank).

"Data is changing, the applications are changing and, most importantly, [IT] infrastructure is changing. When you have three different disruptive trends coming all together, it requires you to step back and see what the next world looks like and what should be the data processing platform," said Rajan Goyal, DataPelago co-founder and CEO, in an interview with CRN.

The exploding volume of digital data created each year is expected to reach 291 zettabytes by 2027, according to market researcher IDC, with much of it unstructured or semi-structured. At the same time, Goyal (pictured) says, traditional data processing systems based on CPUs and basic software architectures cannot handle the complexity and volume of today's data.

Such limitations weren't a problem when data analytics was largely focused on powering business intelligence dashboards. But businesses and organizations today are increasingly trying to extract more value and deeper analytical insights from data, including processing data for real-time analytical and generative AI-driven applications, Goyal said.
To tackle the problem, Goyal launched DataPelago in 2021 and assembled a "multi-disciplinary team" of people with expertise in system architecture, data analytics, cloud SaaS, open-source development and other technology areas. (Goyal himself has startup experience, having worked as an architecture engineer at semiconductor startup Cavium between 2005 and 2016 and as CTO at data center tech company Fungible between 2016 and 2021. Before that he held software engineering and development positions at Oracle and Cisco Systems.)

DataPelago's universal data processing engine, which some customers are using on a pilot/preview basis, is designed to overcome the performance, cost and scalability limitations of current-generation IT systems and meet the needs of what the company calls "the accelerated computing era."

"Some of our customers are spending hundreds of millions of dollars on these use cases, and even a $50 million savings in a year is a huge value that we bring to the customer," Goyal said.

The startup's universal engine was built from the ground up to support GenAI and data lakehouse analytics workloads using a hardware-software co-design approach, according to the company. The engine is designed to work with today's data stacks, including CPU-, GPU-, TPU- and FPGA-based hardware; data processing frameworks such as Spark, Trino and Apache Flink; multiple types of data stores; and data processing platforms such as Snowflake and data lakehouses like Databricks, Goyal said.

"We want to build an engine which can provide the benefits to all software frameworks out there," the CEO said.

The DataPelago engine leverages several open-source technologies, including Apache Gluten, Meta's Velox and Substrait, the latter a cross-language specification for data compute operations. The engine is built on a platform composed of three layers: a DataVM virtual machine, a DataOS and a DataApp.
The engine processes data in the most efficient way possible based on available hardware resources and the data being processed. The result, according to the company, is a unique architecture that enables the engine to process data one to two orders of magnitude faster than traditional query engines.

DataPelago says its processing engine is uniquely suited for resource-intensive use cases, such as analyzing billions of transactions while ensuring data freshness, supporting AI-driven models that detect threats at wire-line speeds across millions of consumer and data center endpoints, and "providing a scalable platform to facilitate the rapid deployment of training, fine-tuning and RAG inference pipelines," according to the company's announcement.

"When data can be extracted as quickly as it's generated, businesses can harness insights to make better decisions and operate more efficiently," said Lior Susan, CEO and founding partner at investor Eclipse and a DataPelago board member, in a statement. "DataPelago's universal data processing engine represents a paradigm shift that will unlock new possibilities in the worlds of engineering, supply chains, sustainable energy, the medical field, and beyond."

Goyal said the latest funding will be used to accelerate the company's development operations and grow its early sales and go-to-market operations, including developing a channel. The company is currently working with a channel partner overseas and expects to recruit solution providers and systems integrators as partners.

[2]

DataPelago aims to save enterprises significant $$$ with universal data processing

As data continues to be key to business success, enterprises are racing to drive maximum value from the information in hand. But the volume of enterprise data is growing so quickly (doubling every two years) that the computing power to process it in a timely and cost-efficient manner is hitting a ceiling.

California-based DataPelago aims to solve this with a "universal data processing engine" that allows enterprises to supercharge the performance of existing data query engines (including open-source ones) using the power of accelerated computing elements such as GPUs and FPGAs (field-programmable gate arrays). This enables the engines to process exponentially increasing volumes of complex data across varied formats.

The startup has just emerged from stealth but is already claiming to deliver a five-fold reduction in query/job latency while providing significant cost benefits. It has also raised $47 million in funding with the backing of multiple venture capital firms, including Eclipse, Taiwania Capital, Qualcomm Ventures, Alter Venture Partners, Nautilus Venture Partners and Silicon Valley Bank.

Addressing the data challenge

More than a decade ago, structured and semi-structured data analysis was the go-to option for data-driven growth, providing enterprises with a snapshot of how their business was performing and what needed to be fixed. The approach worked well, but the evolution of technology also led to the rise of unstructured data (images, PDFs, audio and video files) within enterprise systems. Initially, the volume of this data was small, but today it accounts for 90% of all information created (far more than structured/semi-structured data) and is critical for advanced enterprise applications like large language models.
Now, as enterprises look to mobilize all their data assets, including large volumes of unstructured data, for these use cases, they are running into performance bottlenecks and struggling to process that data in a timely and cost-effective way. The reason, according to DataPelago CEO Rajan Goyal, is the computing limitations of legacy platforms, which were originally designed for structured data and general-purpose computing (CPUs).

"Today, companies have two choices for accelerated data processing... Open-source systems offered as a managed service by cloud service providers have smaller licensing fees but require users to pay more for cloud infrastructure compute costs to reach an acceptable level of performance. On the other hand, proprietary services (built with open-source frameworks or otherwise) can be inherently more performant, but they have much higher licensing fees. Both choices result in higher total cost of ownership (TCO) for customers," he explained.

To address this performance and cost gap for next-gen data workloads, Goyal started building DataPelago, a unified platform that dynamically accelerates query engines with accelerated computing hardware like GPUs and FPGAs, enabling them to handle advanced processing needs for all types of data without a massive increase in TCO.

"Our engine accelerates open-source query engines like Apache Spark or Trino with the power of GPUs, resulting in a 10:1 reduction in the server count, which results in lower infrastructure cost and lower licensing cost in the same proportion. Customers see disruptive price/performance advantages, making it viable to leverage all the data they have at their disposal," the CEO noted.

At its core, DataPelago's offering uses three main components: DataApp, DataVM and DataOS. The DataApp is a pluggable layer that allows integration of DataPelago with open data processing frameworks like Apache Spark or Trino, extending them at the planner and executor node level.
Once the framework is deployed, users run their queries or data pipelines unmodified, with no change required in the user-facing application. On the backend, the framework's planner converts each query into a plan, which DataPelago then picks up. The engine uses an open-source library like Apache Gluten to convert the plan into an open-standard intermediate representation (IR) called Substrait. This IR is sent to the executor node, where DataOS converts it into an executable Data Flow Graph (DFG). Finally, DataVM evaluates the nodes of the DFG and dynamically maps them to the right computing element (CPU, FPGA, Nvidia GPU or AMD GPU) based on availability or cost/performance characteristics. This way, the system redirects the workload to the most suitable hardware available from hyperscalers or GPU cloud providers to maximize performance and cost benefits.

Significant savings for early DataPelago adopters

While the technology to dynamically accelerate query engines with accelerated computing is new, the company is already claiming it can deliver a five-fold reduction in query/job latency with a two-fold reduction in TCO compared to existing data processing engines.

"One company we're working with was spending $140M on one workload, with 90% of this cost going to compute. We are able to decrease their total spend to < $50M," Goyal said.

The CEO did not share the total number of companies working with DataPelago, but he did point out that the company is seeing significant traction from enterprises across verticals such as security, manufacturing, finance, telecommunications, SaaS and retail. The existing customer base includes notable names such as Samsung SDS, McAfee and insurance technology provider Akad Seguros, he added.
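VentureBeat's walkthrough ends with DataVM evaluating the nodes of the Data Flow Graph and mapping each one to a CPU, FPGA or GPU based on availability or cost/performance. The Python sketch below models only that final step, assuming the Substrait-to-DFG conversion has already happened. The `DfgNode` type, the operator and device labels, the `COST` table and the `place()` function are hypothetical illustrations, not DataPelago's API or measurements.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter

# Hypothetical relative cost of running each operator on each device type
# (lower is better). Illustrative numbers only, not DataPelago measurements.
COST = {
    ("scan", "cpu"): 1.0, ("scan", "gpu"): 0.8,
    ("filter", "cpu"): 1.0, ("filter", "gpu"): 0.3, ("filter", "fpga"): 0.4,
    ("aggregate", "cpu"): 1.0, ("aggregate", "gpu"): 0.2,
}


@dataclass
class DfgNode:
    name: str
    op: str
    inputs: list[str] = field(default_factory=list)  # names of upstream nodes


def place(dfg: list[DfgNode], available: set[str]) -> list[tuple[str, str]]:
    """Visit DFG nodes in dependency order and assign each one to the
    cheapest currently available device that can run its operator."""
    nodes = {n.name: n for n in dfg}
    graph = {n.name: set(n.inputs) for n in dfg}
    placement = []
    for name in TopologicalSorter(graph).static_order():
        op = nodes[name].op
        # Availability: only devices present right now and able to run `op`.
        candidates = sorted(d for d in available if (op, d) in COST)
        if not candidates:
            raise ValueError(f"no available device can run {op!r}")
        # Cost/performance: pick the lowest-cost candidate.
        placement.append((name, min(candidates, key=lambda d: COST[(op, d)])))
    return placement


if __name__ == "__main__":
    dfg = [
        DfgNode("scan_orders", "scan"),
        DfgNode("recent", "filter", ["scan_orders"]),
        DfgNode("totals", "aggregate", ["recent"]),
    ]
    print(place(dfg, {"cpu", "gpu"}))   # every node lands on the GPU
    print(place(dfg, {"cpu", "fpga"}))  # filter on the FPGA, the rest on the CPU
```

Visiting nodes in topological order means each operator is placed only after its inputs. In this toy version the choice is made per node; a real engine would presumably also account for the cost of moving intermediate results between devices.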
"DataPelago's engine allows us to unify our GenAI and data analytics pipelines by processing structured, semi-structured, and unstructured data on the same pipeline while reducing our costs by more than 50%," André Fichel, CTO at Akad Seguros, said in a statement. As the next step, Goyal plans to build on this work and take its solution to more enterprises looking to accelerate their data workloads while being cost-efficient at the same time. "The next phase of growth for DataPelago is building out our go-to-market team to help us manage the high number of customer conversations we're already engaging in, as well as continue to grow into a global service," he said.

[3]

DataPelago raises $47M to optimize hardware for analytical workloads - SiliconANGLE

DataPelago Inc. today unveiled what it calls a "universal data processing engine" that powers high-speed computing at massive scale by making better use of an organization's underlying infrastructure. The three-year-old startup also announced $47 million in new funding.

The company's cloud-based software framework is independent of the operating system and leverages all available central processing units, graphics processing units, floating point units and data processing units for generative artificial intelligence and "data lakehouse" analytics across large volumes of unstructured data.

The engine is based on two intelligent layers called the Accelerated Computing Virtual Machine and the DataOS. Together they process data in the most efficient way possible based on the characteristics of the data and the hardware resources available, the company said.

DataPelago said the Accelerated Computing Virtual Machine uses a domain-specific instruction set for data operators while dynamically abstracting accelerated computing elements. DataOS maps data operations to underlying processing resources to optimize performance. The result is an analytics engine that the company claims can process data up to 10 times faster than traditional computing platforms at one-half to one-third the cost.

"If you have a sea of GPU, FPGA or CPU servers available, the system will automatically decide where to run which workload," said co-founder and Chief Executive Rajan Goyal. "We wanted to build an engine that fits into the new ecosystem without disrupting it to deliver the promise of accelerated computing without even a single line of change in the application."

Goyal's previous startups include Cavium Inc., which built multicore processors for domain-specific use cases and sold to Marvell Technology Group Ltd. for $6 billion in 2018.
He was also chief technology officer at chipmaker Fungible Inc., whose data processing units are used in data centers to offload networking, storage and security tasks. Fungible was acquired by Microsoft Corp. in early 2023.

DataPelago's architecture is based on three technology pillars, Goyal said. The first is a virtual machine that abstracts underlying instruction sets so developers don't need to write compute kernels for different kinds of hardware. "It generates the code at runtime for the target CUDA kernels, or the ROCm kernels in AMD, or the field-programmable gate arrays," he said, referring to platforms from Nvidia Corp. and Advanced Micro Devices Inc.

The DataOS, "when given a query plan, decides at runtime and dynamically maps operators to run on CPUs, [single instruction, multiple thread] processors, vectorized machines, GPUs and FPGAs," he said. "We not only look at the data affinity to generate the tasks but also the capability of the computing element to map the task to the right source." DataOS derives workload statistics from metadata and sends a sideband signal to the runtime engine about the processing elements needed. "That's what we mean by reconfiguring the computing process," Goyal said.

The third piece is a composable architecture that can be integrated with query engines and analytic frameworks such as Trino and Apache Spark without changes to the upper processing layer.

Goyal said the company has been testing its technology with customers that are looking to control spiraling costs for analytics hardware or want to train artificial intelligence models more quickly. He claimed DataPelago's technology is able to achieve 10-fold performance improvements while reducing total cost of ownership by a factor of two to three.

The company raised $8 million in earlier seed funding.
The new round was led by Eclipse Ventures LLC, Taiwania Capital Management Corp., Qualcomm Inc.'s venture capital arm, Alter Venture Partners Management Inc., Nautilus Ventures Advisors US LLC and Silicon Valley Bank.
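SiliconANGLE's description of DataOS combines three signals: whether a computing element can run an operator (capability), where the data already sits (data affinity), and workload statistics derived from metadata. The sketch below shows one way such signals might be combined to place a single operator. The `Device` type, the `MIN_ROWS_FOR_ACCEL` threshold, the `choose_device()` function and the ordering of the criteria are all hypothetical choices made for illustration; none of them come from DataPelago.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    name: str
    kind: str                       # illustrative labels: "cpu", "gpu", "fpga"
    supported_ops: frozenset[str]   # capability: operators this device can run
    resident_data: frozenset[str]   # data affinity: datasets already on this device


# Hypothetical threshold: below this estimated row count, offload overhead is
# assumed to outweigh any accelerator speedup. Not a DataPelago figure.
MIN_ROWS_FOR_ACCEL = 1_000_000


def choose_device(op: str, dataset: str, est_rows: int, devices: list[Device]) -> Device:
    """Pick a device for one operator using capability, metadata-derived
    row estimates and data affinity (an illustrative ordering only)."""
    # 1. Capability: keep only devices that can execute this operator.
    capable = [d for d in devices if op in d.supported_ops]
    if not capable:
        raise ValueError(f"no device supports {op!r}")
    # 2. Metadata statistics: keep small inputs on a CPU when one is capable.
    if est_rows < MIN_ROWS_FOR_ACCEL:
        capable = [d for d in capable if d.kind == "cpu"] or capable
    # 3. Prefer devices already holding the input, then accelerators, then by name.
    return min(
        capable,
        key=lambda d: (dataset not in d.resident_data, d.kind == "cpu", d.name),
    )


if __name__ == "__main__":
    cpu0 = Device("cpu0", "cpu", frozenset({"scan", "filter", "aggregate", "udf"}), frozenset({"orders"}))
    gpu0 = Device("gpu0", "gpu", frozenset({"scan", "filter", "aggregate"}), frozenset())
    gpu1 = Device("gpu1", "gpu", frozenset({"filter", "aggregate"}), frozenset({"events"}))
    fleet = [cpu0, gpu0, gpu1]
    print(choose_device("filter", "events", 50_000_000, fleet).name)  # gpu1: holds the data
    print(choose_device("filter", "events", 10_000, fleet).name)      # cpu0: input too small
    print(choose_device("udf", "orders", 50_000_000, fleet).name)     # cpu0: only capable device
```

Ranking affinity ahead of accelerator preference reflects the intuition that moving a large input can cost more than a faster device saves; a production scheduler would more likely weigh these factors with a cost model than apply a fixed ordering.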

DataPelago, a startup focused on optimizing data processing for enterprises, has exited stealth mode with a $47 million funding round. The company introduces a universal data processing engine designed to accelerate computing tasks and reduce infrastructure costs.

DataPelago Unveils Universal Data Processing Engine

DataPelago, a startup specializing in data processing optimization, has emerged from stealth mode, introducing what it calls a universal data processing engine. The company aims to address the growing challenges enterprises face in managing and processing vast amounts of data efficiently and cost-effectively [1].

Significant Funding Secured

DataPelago has raised $47 million in funding. The investment will fuel the company's efforts to further develop and commercialize its data processing engine [3].

Accelerating Computing Tasks

DataPelago's universal data processing engine is designed to accelerate computing tasks across CPU-, GPU-, TPU- and FPGA-based hardware, and the company claims it can process data one to two orders of magnitude faster than traditional query engines. By optimizing data processing workflows, the company aims to reduce the time required for complex analytical operations [1].

Cost Savings for Enterprises

One of the key benefits DataPelago touts is substantial cost savings. The company claims its technology helps enterprises reduce infrastructure costs by optimizing hardware utilization, citing a two-fold reduction in total cost of ownership compared with existing data processing engines [2].

Addressing the Data Processing Challenge

As organizations grapple with ever-increasing volumes of data, the need for efficient processing solutions has become paramount. DataPelago's approach aims to tackle this challenge by providing a versatile engine that can adapt to various hardware configurations and workload types [1].

Industry Impact and Future Prospects

The emergence of DataPelago and its universal data processing engine could disrupt the data infrastructure landscape. As enterprises continue to invest heavily in data analytics and processing capabilities, solutions that offer both performance improvements and cost savings are likely to gain significant traction in the market [2].

Next Steps for DataPelago

With its funding round and public debut, DataPelago is now poised to expand its operations and customer base. The company will likely focus on refining its technology, building partnerships, and demonstrating the real-world benefits of its universal data processing engine to potential enterprise clients [3].

Summarized by Navi
