DeepMind's Genie 3: A Breakthrough in AI World Models with Potential for AGI

22 Sources

[1]

DeepMind reveals Genie 3 "world model" that creates real-time interactive simulations

While no one has figured out how to make money from generative artificial intelligence, that hasn't stopped Google DeepMind from pushing the boundaries of what's possible with a big pile of inference. The capabilities (and costs) of these models have been on an impressive upward trajectory, a trend exemplified by the reveal of Genie 3. A mere seven months after showing off the Genie 2 "foundational world model," which was itself a significant improvement over its predecessor, Google now has Genie 3. With Genie 3, all it takes is a prompt or image to create an interactive world. Since the environment is continuously generated, it can be changed on the fly. You can add or change objects, alter weather conditions, or insert new characters -- DeepMind calls these "promptable events." The ability to create alterable 3D environments could make games more dynamic for players and offer developers new ways to prove out concepts and level designs. However, many in the gaming industry have expressed doubt that such tools would help. It's tempting to think of Genie 3 simply as a way to create games, but DeepMind sees this as a research tool, too. Games play a significant role in the development of artificial intelligence because they provide challenging, interactive environments with measurable progress. That's why DeepMind previously turned to games like Go and StarCraft to expand the bounds of AI. World models take that to the next level, generating an interactive world frame by frame. This provides an opportunity to refine how AI models -- including so-called "embodied agents" -- behave when they encounter real-world situations. One of the primary limitations as companies work toward the goal of artificial general intelligence (AGI) is the scarcity of reliable training data. After piping basically every webpage and video on the planet into AI models, researchers are turning toward synthetic data for many applications. 
DeepMind believes world models could be a key part of this effort, as they can be used to train AI agents with essentially unlimited interactive worlds. DeepMind says Genie 3 is an important advancement because it offers much higher visual fidelity than Genie 2, and it's truly real-time. Using keyboard input, it's possible to navigate the simulated world in 720p resolution at 24 frames per second. Perhaps even more importantly, Genie 3 can remember the world it creates.
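If generation has to keep pace with the viewer, the reported 720p-at-24-fps figure implies a fixed time budget for every frame. The short Python sketch below works through that arithmetic. It assumes "real time" means each frame is produced within 1/24 of a second. The 3-minute session is an illustrative stand-in for "a few minutes", not a published figure, and both models are counted at 24 fps only for comparison, since Genie 2's frame rate isn't given here.

```python
# Back-of-the-envelope arithmetic for "720p at 24 frames per second, in real time".
FPS = 24  # reported Genie 3 frame rate


def frame_budget_ms(fps: int) -> float:
    """Milliseconds available per frame if generation keeps pace with playback."""
    return 1000.0 / fps


def frames_for(seconds: float, fps: int = FPS) -> int:
    """Frames a frame-by-frame generator must produce to cover `seconds` of footage."""
    return round(seconds * fps)


print(f"Per-frame budget at {FPS} fps: {frame_budget_ms(FPS):.1f} ms")  # 41.7 ms
# Genie 2's 10-20 s horizon vs. an assumed 3-minute Genie 3 session, all counted at 24 fps.
for label, secs in [("10 s", 10), ("20 s", 20), ("3 min (assumed)", 180)]:
    print(f"{label}: {frames_for(secs)} frames")
```

Under these assumptions, a few minutes of interaction means generating thousands of frames in sequence, each within roughly 42 ms.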

[2]

DeepMind thinks its new Genie 3 world model presents a stepping stone towards AGI | TechCrunch

Google DeepMind has revealed Genie 3, its latest foundation world model that can be used to train general-purpose AI agents, a capability that the AI lab says makes for a crucial stepping stone on the path to "artificial general intelligence," or human-like intelligence. "Genie 3 is the first real-time interactive general purpose world model," Shlomi Fruchter, a research director at DeepMind, said during a press briefing. "It goes beyond narrow world models that existed before. It's not specific to any particular environment. It can generate both photo-realistic and imaginary worlds, and everything in between." Still in research preview and not publicly available, Genie 3 builds on both its predecessor Genie 2 (which can generate new environments for agents) and DeepMind's latest video generation model Veo 3 (which is said to have a deep understanding of physics). With a simple text prompt, Genie 3 can generate multiple minutes of interactive 3D environments at 720p resolution and 24 frames per second -- a significant jump from the 10 to 20 seconds Genie 2 could produce. The model also features "promptable world events," or the ability to use a prompt to change the generated world. Perhaps most importantly, Genie 3's simulations stay physically consistent over time because the model can remember what it previously generated -- a capability that DeepMind says its researchers didn't explicitly program into the model. Fruchter said that while Genie 3 has implications for educational experiences, gaming or prototyping creative concepts, its real unlock will manifest in training agents for general purpose tasks, which he said is essential to reaching AGI. "We think world models are key on the path to AGI, specifically for embodied agents, where simulating real world scenarios is particularly challenging," Jack Parker-Holder, a research scientist on DeepMind's open-endedness team, said during the briefing. Genie 3 is supposedly designed to solve that bottleneck. 
Like Veo, it doesn't rely on a hard-coded physics engine; instead, DeepMind says, the model teaches itself how the world works - how objects move, fall, and interact - by remembering what it has generated and reasoning over long time horizons. "The model is auto-regressive, meaning it generates one frame at a time," Fruchter told TechCrunch in an interview. "It has to look back at what was generated before to decide what's going to happen next. That's a key part of the architecture." That memory, the company says, lends consistency to Genie 3's simulated worlds, which in turn allows it to develop a grasp of physics, similar to how humans understand that a glass teetering on the edge of a table is about to fall, or that they should duck to avoid a falling object. Notably, DeepMind says the model also has the potential to push AI agents to their limits -- forcing them to learn from their own experience, similar to how humans learn in the real world. As an example, DeepMind shared its test of Genie 3 with a recent version of its generalist Scalable Instructable Multiworld Agent (SIMA), instructing it to pursue a set of goals. In a warehouse setting, they asked the agent to perform tasks like "approach the bright green trash compactor" or "walk to the packed red forklift." "In all three cases, the SIMA agent is able to achieve the goal," Parker-Holder said. "It just receives the actions from the agent. So the agent takes the goal, sees the world simulated around it, and then takes the actions in the world. Genie 3 simulates forward, and the fact that it's able to achieve it is because Genie 3 remains consistent." That said, Genie 3 has its limitations. For example, while the researchers claim it can understand physics, the demo showing a skier barreling down a mountain didn't reflect how snow would move in relation to the skier. Additionally, the range of actions an agent can take is limited. 
For example, the promptable world events allow for a wide range of environmental interventions, but they're not necessarily performed by the agent itself. And it's still difficult to accurately model complex interactions between multiple independent agents in a shared environment. Genie 3 can also only support a few minutes of continuous interaction, when hours would be necessary for proper training. Still, the model presents a compelling step forward in teaching agents to go beyond reacting to inputs, letting them potentially plan, explore, seek out uncertainty, and improve through trial and error - the kind of self-driven, embodied learning that many say is key to moving towards general intelligence. "We haven't really had a Move 37 moment for embodied agents yet, where they can actually take novel actions in the real world," Parker-Holder said, referring to the legendary moment in the 2016 game of Go between DeepMind's AI agent AlphaGo and world champion Lee Sedol, in which AlphaGo played an unconventional and brilliant move that became symbolic of AI's ability to discover new strategies beyond human understanding. "But now, we can potentially usher in a new era," he said.
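Fruchter's description of an auto-regressive model and Parker-Holder's account of the SIMA test suggest a simple loop: the agent observes the latest frame and picks an action, then the world model generates the next frame with everything generated so far available as context. The Python sketch below is a toy illustration of that loop under loudly stated assumptions: `ToyWorldModel` and `toy_agent` are hypothetical, a "frame" is reduced to a position on a 1-D track, and nothing here reflects Genie 3's or SIMA's actual interfaces.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ToyWorldModel:
    """Hypothetical stand-in for an auto-regressive world model.

    A "frame" here is just the agent's position on a 1-D track. Every generated
    frame is kept in `history`, so each new frame is produced with the full
    record of what came before available as context.
    """

    history: List[int] = field(default_factory=lambda: [0])

    def step(self, action: int) -> int:
        # This toy only needs the most recent frame to decide what happens next;
        # a learned model could draw on the whole history.
        next_frame = self.history[-1] + action
        self.history.append(next_frame)
        return next_frame


def toy_agent(goal: int, observation: int) -> int:
    """Hypothetical goal-conditioned policy: take one step toward the goal."""
    if observation < goal:
        return 1
    if observation > goal:
        return -1
    return 0


def rollout(goal: int, max_steps: int = 20) -> List[int]:
    """Agent acts, world model simulates forward, repeat until the goal is reached."""
    world = ToyWorldModel()
    observation = world.history[-1]
    for _ in range(max_steps):
        if observation == goal:
            break
        action = toy_agent(goal, observation)  # the agent sees the world and acts
        observation = world.step(action)       # the world model generates the next frame
    return world.history


print(rollout(goal=5))  # [0, 1, 2, 3, 4, 5]
```

Because every frame is appended to `history`, the context grows with each step, loosely mirroring the "look back at what was generated before" behavior Fruchter describes.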

[3]

DeepMind reveals Genie 3, a world model that could be the key to reaching AGI | TechCrunch

Google DeepMind has revealed Genie 3, its latest foundation world model that the AI lab says presents a crucial stepping stone on the path to artificial general intelligence, or human-like intelligence. "Genie 3 is the first real-time interactive general purpose world model," Shlomi Fruchter, a research director at DeepMind, said during a press briefing. "It goes beyond narrow world models that existed before. It's not specific to any particular environment. It can generate both photo-realistic and imaginary worlds, and everything in between." Genie 3, which is still in research preview and not publicly available, builds on both its predecessor Genie 2 - which can generate new environments for agents - and DeepMind's latest video generation model Veo 3 - which exhibits a deep understanding of physics. With a simple text prompt, Genie 3 can generate multiple minutes - up from 10 to 20 seconds in Genie 2 - of diverse, interactive, 3D environments at 24 frames per second with a resolution of 720p. The model also features "promptable world events," or the ability to use a prompt to change the generated world. Perhaps most importantly, Genie 3's simulations stay physically consistent over time because the model is able to remember what it had previously generated - an emergent capability that DeepMind researchers didn't explicitly program into the model. Fruchter said that while Genie 3 clearly has implications for educational experiences and new generative media like gaming or prototyping creative concepts, its real unlock will manifest in training agents for general purpose tasks, which he said is essential to reaching AGI. "We think world models are key on the path to AGI, specifically for embodied agents, where simulating real world scenarios is particularly challenging," Jack Parker-Holder, a research scientist on DeepMind's open-endedness team, said during a briefing. Genie 3 is designed to solve that bottleneck. 
Like Veo, it doesn't rely on a hard-coded physics engine. Instead, it teaches itself how the world works - how objects move, fall, and interact - by remembering what it has generated and reasoning over long time horizons. "The model is auto-regressive, meaning it generates one frame at a time," Fruchter told TechCrunch in a separate interview. "It has to look back at what was generated before to decide what's going to happen next. That's a key part of the architecture." That memory creates consistency in its simulated worlds, and that consistency allows it to develop a kind of intuitive grasp of physics, similar to how humans understand that a glass teetering on the edge of a table is about to fall, or that they should duck to avoid a falling object. This ability to simulate coherent, physically plausible environments over time makes Genie 3 much more than a generative model. It becomes an ideal training ground for general-purpose agents. Not only can it generate endless, diverse worlds to explore, but it also has the potential to push agents to their limits - forcing them to adapt, struggle, and learn from their own experience in a way that mirrors how humans learn in the real world. Currently, the range of actions an agent can take is still limited. For example, the promptable world events allow for a wide range of environmental interventions, but they're not necessarily performed by the agent itself. Similarly, it's still difficult to accurately model complex interactions between multiple independent agents in a shared environment. Genie 3 can also only support a few minutes of continuous interaction, when hours would be necessary for proper training. Still, Genie 3 presents a compelling step forward in teaching agents to go beyond reacting to inputs so they can plan, explore, seek out uncertainty, and improve through trial and error - the kind of self-driven, embodied learning that's key in moving towards general intelligence. 
"We haven't really had a Move 37 moment for embodied agents yet, where they can actually take novel actions in the real world," Parker-Holder said, referring to the legendary moment in the 2016 game of Go between DeepMind's AI agent AlphaGo and world champion Lee Sedol, in which AlphaGo played an unconventional and brilliant move that became symbolic of AI's ability to discover new strategies beyond human understanding. "But now, we can potentially usher in a new era," he said.

[4]

Google's new AI model creates video game worlds in real time

Google DeepMind is releasing a new version of its AI "world" model, called Genie 3, capable of generating 3D environments that users and AI agents can interact with in real time. The company is also promising that users will be able to interact with the worlds for much longer than before and that the model will actually remember where things are when you look away from them. World models are a type of AI system that can simulate environments for purposes like education, entertainment, or to help train robots or AI agents. With world models, you give them a prompt and they generate a space that you can move around in like you would in a video game, but instead of the world being handcrafted with 3D assets, it's all being generated with AI. It's an area Google is putting a lot of effort into; the company showed off Genie 2 in December, which could create interactive worlds based off of an image, and it's building a world models team led by a former co-lead of OpenAI's Sora video generation tool. But the models currently have a lot of drawbacks. Genie 2 worlds were only playable up to a minute, for example. I recently tried "interactive video" from a company backed by Pixar's cofounder, and it felt like walking through a blurry version of Google Street View where things morphed and changed in ways that I didn't expect as I looked around. Genie 3 seems like it could be a notable step forward. Users will be able to generate worlds with a prompt that supports a "few" minutes of continuous interaction, which is up from the 10-20 seconds of interaction possible with Genie 2, according to a blog post. Google says that Genie 3 can keep spaces in visual memory for about a minute, meaning that if you turn away from something in a world and then turn back to it, things like paint on a wall or writing on a chalkboard will be in the same place. The worlds will also have a 720p resolution and run at 24fps. 
DeepMind is adding what it calls "promptable world events" into Genie 3, too. Using a prompt, you'll be able to do things like change weather conditions in a world or add new characters. However, this probably isn't a model you'll be able to try for yourself. It's launching as "a limited research preview" that will be available to "a small cohort of academics and creators" so its developers can better understand the risks and how to appropriately mitigate them, according to Google. There are also plenty of restrictions, like the limited ways users can interact with generated worlds and that legible text is "often only generated when provided in the input world description." Google says it's "exploring" how to bring Genie 3 to "additional testers" down the line.
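The promptable world events Google describes here, such as changing the weather or adding characters, can be pictured as prompts that condition generation from a given frame onward. The Python sketch below is a deliberately simple, hypothetical model of that idea: `generate` and the dict-of-attributes "frame" are invented for illustration, the prompts are assumed to have already been parsed into attribute changes, and a real world model would render such events as pixels rather than update a dictionary.

```python
from typing import Dict, List

Scene = Dict[str, str]


def generate(num_frames: int, events: Dict[int, Scene]) -> List[Scene]:
    """Toy rollout in which a text 'world event' can change the scene mid-stream.

    Hypothetical: each frame is a small dict of scene attributes carried over
    from the previous frame; an event (already parsed into attribute changes)
    overrides or adds attributes, and those changes then persist.
    """
    frames: List[Scene] = [{"setting": "mountain village", "weather": "clear"}]
    for t in range(1, num_frames):
        scene = dict(frames[-1])         # continue from what was generated so far
        scene.update(events.get(t, {}))  # condition this frame on any new event
        frames.append(scene)
    return frames


frames = generate(5, {2: {"weather": "heavy snow"}, 3: {"character": "man in a chicken suit"}})
print(frames[1])  # {'setting': 'mountain village', 'weather': 'clear'}
print(frames[4])  # {'setting': 'mountain village', 'weather': 'heavy snow', 'character': 'man in a chicken suit'}
```

Because each frame starts as a copy of its predecessor, an event persists once introduced, which is the kind of consistency the reports emphasize; whether an inserted element also behaves realistically is a separate question this toy cannot capture.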

[5]

How Google's Genie 3 could change AI video - and let you build your own interactive worlds

Google DeepMind says Genie 3 has an "understanding" of the world. Imagine exploring a virtual environment without boundaries, where everything you see looks and behaves just as it would in reality. This is precisely what many tech developers today are working to create through AI "world models," or algorithms that can build and act upon internal, representative models of the real world, imitating the human brain's ability to make predictions about the behavior of physical objects. World models like Google DeepMind's new Genie 3 could have huge ramifications for AI agents, robotics, entertainment, education, and many other fields. Here's a look at what AI world models are, how they work, and why they matter. Just as you're able to imagine sunlight illuminating the fixtures of your living room, or the effect that a stone dropped into a still pond will have on the water's surface, an AI "world model" can do more than just string words together or generate a lifelike image. It can make accurate predictions about the real world based on an ability to reason about how the basic physical mechanics of the world actually work. This has particularly important implications for the field of AI-generated video. It's one thing for a model to watch millions of videos of a glass falling to the floor and shattering, and use that as a basis to generate new videos of the same event. It's another for a model to intuitively grasp the physics of gravity, the distance that broken glass should scatter on carpet versus a tile floor, and the fact that a human hand carelessly touching one of those shards could lead to a wound and bleeding. The latter has become the goal of major AI developers: AI world models that don't just mimic scenarios, but can actually predict a virtually infinite number of new ones. 
OpenAI's Sora, for example, which was unveiled in February of last year and was an early example of a world model, shocked the AI community with its ability to simulate real-world physics, such as light reflecting off pools of water on a simulated street. Genie 3 is another illustrative example of the power of a world model. From a simple natural language prompt, Genie 3 can generate dynamic simulations of virtual environments that evolve and change in response to a user's actions. (Its predecessors, Genie and Genie 2, debuted last year in February and December, respectively.) Unlike classic video games, which come with clearly bounded virtual spaces, world models like Genie 3 are able to expand their simulated environments as users interact with them. "You're not walking through a pre-built simulation," a narrator says in a demo video introducing Genie 3. "Everything you see here is being generated live, as you explore it." Genie 3 comes with a feature Google DeepMind is calling "world memory," which allows the model to represent changes that persist across time in the simulated environments. In the demo video, for example, a user is shown painting a wall with a paint roller; when they turn away and then direct their gaze back at the wall, the marks they made with the roller are still visible. If you find yourself feeling bored while exploring a simulated environment, you can shake things up by prompting Genie 3 to cause an event. Something like: "A man on horseback carrying a bag full of money is being chased by Texas rangers, who are also riding horses. All of the hooves are kicking up huge plumes of dust." "We're excited to see how Genie 3 can be used for next-generation gaming and entertainment," the narrator says in the demo video, "and that's just the beginning." 
As the narrator in the Genie 3 demo video suggests, world models could have valuable applications beyond helping to generate more realistic, dynamic, and interactive forms of entertainment. For example, they could help the AI industry build embodied agents that can navigate and interact with the real world. (This has been the challenge that the autonomous vehicle industry has been trying to overcome since its inception, largely without success.) They could also be used to simulate what the Genie 3 demo describes as "dangerous scenarios," such as the scene of a recent natural disaster, to help first responders prepare for actual emergencies. Coupled with virtual reality headsets, immersion into world models could also help first responders to build muscle memory so that they can be better equipped to act calmly under duress. Education could also benefit from the use of world models, especially in the case of students who are more receptive to visual information. Trained on copious amounts of real-world data, algorithms gradually refine their ability to make predictions. Eventually -- in a process that researchers are still working to understand -- they can become so adept at this that, for all intents and purposes, we can say that they seem to "understand" some aspects of the world, such as the syntax of the English language or the physics of human body movement. In its blog post, Google DeepMind defined world models as "AI systems that can use their understanding of the world to simulate aspects of it, enabling agents to predict both how an environment will evolve and how their actions will affect it." 
The use of the word "understanding" in this context is controversial, however; some experts argue that AI can only reproduce patterns and, therefore, could never understand a concept in the way a human being can, while others take the opposite view, claiming that perhaps human understanding is nothing more than a sophisticated kind of pattern recognition. If you blindfolded yourself and tried to walk through every room in your house, you could probably do so without injuring yourself or breaking something (assuming you've lived there a while). Similarly, today's AI models are able to explore latent spaces of information in a manner that seems, at least to us humans, like they know the lay of the land.
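DeepMind's definition of a world model, a system that lets agents "predict both how an environment will evolve and how their actions will affect it," is the core idea behind model-based planning: imagine the outcome of each candidate action with the model, then act on the best one. The Python sketch below is a generic, textbook-style illustration of that idea, not Genie 3's method. The 1-D dynamics in `toy_world_model`, the goal at `x = 2`, and the three-step horizon are all made-up assumptions.

```python
from typing import Callable, Dict, List

State = Dict[str, float]


def toy_world_model(state: State, action: str) -> State:
    """Hypothetical 'learned' dynamics: predict the next state for an action.

    Hard-coded here for illustration; a real world model would learn this from data.
    """
    moves = {"left": -1.0, "stay": 0.0, "right": 1.0}
    return {"x": state["x"] + moves[action]}


def choose_action(
    state: State,
    actions: List[str],
    model: Callable[[State, str], State],
    score: Callable[[State], float],
    horizon: int = 3,
) -> str:
    """Imagine repeating each action for `horizon` steps, then pick the best outcome."""
    best_action, best_value = actions[0], float("-inf")
    for action in actions:
        imagined = state
        for _ in range(horizon):  # roll the model forward without touching the real world
            imagined = model(imagined, action)
        value = score(imagined)
        if value > best_value:
            best_action, best_value = action, value
    return best_action


GOAL = 2.0


def distance_score(s: State) -> float:
    return -abs(s["x"] - GOAL)  # closer to the goal scores higher


ACTIONS = ["left", "stay", "right"]
print(choose_action({"x": 0.0}, ACTIONS, toy_world_model, distance_score))  # right
print(choose_action({"x": 2.0}, ACTIONS, toy_world_model, distance_score))  # stay
```

The agent never acts in the "real" environment while deciding; all of the trial and error happens inside the model's predictions, which is why the quality of the world model bounds the quality of the plan.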

[6]

Google's Genie 3 Hints at a Future Where AI Builds the Video Games We Play

Using a text prompt, Google's latest Genie 3 model can create interactive 3D worlds that can be navigated with a mouse and keyboard. New advancements from Google suggest AI-generated video games might become a reality faster than previously thought. On Tuesday, the company's DeepMind research lab introduced Genie 3, an AI program that goes beyond image or video generation by letting you create 3D interactive worlds based on a text prompt. DeepMind introduced an earlier version, Genie 2, in December. But at the time, it could only create 3D worlds at 360p resolution, and you could only play with it for 10 to 20 seconds. In contrast, Genie 3 levels up the resolution to 720p. It can maintain visual consistency for a few minutes, letting the user navigate the 3D world for a longer time. The environment will also react to your actions. For example, you can paint a wall with a brush. In addition, the user can type in new prompts to change the 3D environment, or what's called a "promptable event." For instance, you can ask for a man wearing a chicken suit or a flying dragon to be added, and the program will do just that. The result is a bit like a Star Trek holodeck, but within your PC. "We're excited to see how Genie 3 can be used for next-generation gaming and entertainment," Google's DeepMind team adds in a video. "And that's just the beginning." Other applications could include using Genie 3 for education and for training workers, including robots. The technology is especially impressive since it's able to create a huge diversity of fictional and real-world 3D environments while faithfully rendering the physics within them. Google introduced the technology as other companies, including Microsoft, have been experimenting with using generative AI for video game creation. It's clear generative AI could take character interactions and procedural generation in video games to new levels. 
But the topic has also become controversial since some fear any adoption of AI will lead to layoffs and dilute game quality. In the meantime, Google's DeepMind still needs to resolve certain limitations facing Genie 3. This includes maintaining the 3D world's consistency beyond a few minutes and raising the visual quality. DeepMind itself notes: "Accurately modeling complex interactions between multiple independent agents in shared environments is still an ongoing research challenge." The research lab also didn't mention the hardware needed to run Genie 3 or how long it needs to generate a 3D world. For now, the technology remains in the testing phase. The DeepMind team has only given early access "to a small cohort of academics and creators," it said in the announcement. But the goal is to expand the number of testers over time.
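The jump from 360p to 720p is larger than the numbers suggest, because both the width and the height double. The Python sketch below works through the pixel arithmetic, assuming standard 16:9 frames of 640 × 360 and 1280 × 720 (an assumption, since the exact frame sizes aren't stated here).

```python
# Assumed 16:9 frame sizes for the two resolutions named in the article.
RESOLUTIONS = {"360p": (640, 360), "720p": (1280, 720)}


def pixels(name: str) -> int:
    """Pixels in one frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def pixels_per_second(name: str, fps: int) -> int:
    """Pixels that must be generated each second to sustain `fps`."""
    return pixels(name) * fps


print(pixels("360p"), pixels("720p"))   # 230400 921600
print(pixels("720p") / pixels("360p"))  # 4.0
print(pixels_per_second("720p", 24))    # 22118400
```

Under that assumption, each Genie 3 frame carries four times as many pixels as a Genie 2 frame, or roughly 22 million pixels per second at 24 fps. This says nothing about the compute required, which, as noted above, DeepMind did not disclose.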

[7]

Google DeepMind's Genie 3 can dynamically alter the state of its simulated worlds

Promptable world events will allow the lab to train AI agents in new, more sophisticated ways. At the start of December, Google DeepMind released Genie 2. The Genie family of AI systems belongs to a class known as world models. They're capable of generating images as the user -- either a human or, more likely, an automated AI agent -- moves through the world the software is simulating. The resulting video of the model in action may look like a video game, but DeepMind has always positioned Genie 2 as a way to train other AI systems to be better at what they're designed to accomplish. With its new Genie 3 model, which the lab announced on Tuesday, DeepMind believes it has made an even better system for training AI agents. At first glance, the jump between Genie 2 and 3 isn't as dramatic as the one the model made last year. With Genie 2, DeepMind's system became capable of generating 3D worlds, and could accurately reconstruct part of the environment even after the user or an AI agent left it to explore other parts of the generated scene. Environmental consistency was often a weakness of prior world models. For instance, Decart's Oasis system had trouble remembering the layout of the Minecraft levels it would generate. By comparison, the enhancements offered by Genie 3 seem more modest, but in a press briefing Google held ahead of today's official announcement, Shlomi Fruchter, research director at DeepMind, and Jack Parker-Holder, research scientist at DeepMind, argued they represent important stepping stones on the road toward artificial general intelligence. So what exactly does Genie 3 do better? To start, it outputs footage at 720p, instead of 360p like its predecessor. It's also capable of sustaining a "consistent" simulation for longer. Genie 2 had a theoretical limit of up to 60 seconds, but in practice the model would often start to hallucinate much earlier. 
By contrast, DeepMind says Genie 3 is capable of running for several minutes before it starts producing artifacts. Also new to the model is a capability DeepMind calls "promptable world events." Genie 2 was interactive insofar as the user or an AI agent was able to input movement commands and the model would respond after it had a few moments to generate the next frame. Genie 3 does this work in real-time. Moreover, it's possible to tweak the simulation with text prompts that instruct Genie to alter the state of the world it's generating. In a demo DeepMind showed, the model was told to insert a herd of deer into a scene of a person skiing down a mountain. The deer didn't move in the most realistic manner, but this is the killer feature of Genie 3, says DeepMind. As mentioned before, the lab primarily envisions the model as a tool for training and evaluating AI agents. DeepMind says Genie 3 could be used to teach AI systems to tackle "what if" scenarios that aren't covered by their pre-training. "There are a lot of things that have to happen before a model can be deployed in the real world, but we do see it as a way to more efficiently train models and increase their reliability," said Fruchter, pointing to, for example, a scenario where Genie 3 could be used to teach a self-driving car how to safely avoid a pedestrian that walks in front of it. Despite the improvements DeepMind has made to Genie, the lab acknowledges there's much work to be done. For instance, the model can't generate real-world locations with perfect accuracy, and it struggles with text rendering. Moreover, for Genie to be truly useful, DeepMind believes the model needs to be able to sustain a simulated world for hours, not minutes. Still, the lab feels Genie is ready to make a real-world impact. 
"We're already at the point where you wouldn't use [Genie] as your sole training environment, but you can certainly find things you wouldn't want agents to do because if they act unsafe in some settings, even if those settings aren't perfect, it's still good to know," said Parker-Holder. "You can already see where this is going. It will get increasingly useful as the models get better." For the time being, Genie 3 isn't available to the general public. However, DeepMind says it's working to make the model available to additional testers.

[8]

Genie 3 creates real-time AI worlds from simple text prompts

Google DeepMind has introduced Genie 3, its most advanced world simulation model to date. The AI system can generate interactive, dynamic environments in real time from text prompts. Users can explore these generated worlds at 720p resolution and 24 frames per second, with consistency sustained over a few minutes. This release builds on years of research at DeepMind, where AI agents have been trained in simulated environments for games, robotics, and open-ended learning. Genie 3 marks a significant leap from earlier iterations, Genie 1 and Genie 2, by supporting real-time navigation and improved realism.

[9]

Watch this: Google Genie 3 can create a 3D world, let you explore it, and interact with it in real time

This means you can generate an environment, explore it, and change it on the fly. Google's AI world model has just received a significant upgrade, as the technology giant, specifically Google DeepMind, is introducing Genie 3. This is the latest AI world model, and it kicks things into the proverbial high gear by letting the user generate a 3D world at 720p quality, explore it, and feed it new prompts to interact with or change the environment, all in real time. It's really neat, and I highly recommend you watch DeepMind's announcement video. Genie 3 is also notably different from, say, the still-impressive Veo 3, whose video-with-audio clips are capped at 8 seconds; Genie 3 goes well beyond that limit. Genie 3 offers multiple minutes of what Google calls the 'interaction horizon,' allowing you to interact with the environment in real time and make adjustments as needed. It's sort of like if AI and VR merged; it lets you build a world off a prompt, add new items in, and explore it all. Genie 3 appears to be an improvement over Genie 2, which was introduced in late 2024. In a chart shared within Google DeepMind's post, you can see the progression from GameNGen to Genie 2 to Genie 3, and even a comparison to Veo. Google's also shared a number of demos, including a few that you can try within the blog post, and they give off choose-your-own-adventure vibes. There are a few different scenes to try, such as a snowy hill, or a museum environment where you set a goal for the AI to achieve. Google sums it up: "Genie 3 is our first world model to allow interaction in real-time, while also improving consistency and realism compared to Genie 2." And while my mind, and my colleague Lance Ulanoff's, went to interacting in this environment in a VR headset to explore somewhere new or even as a big boon for game developers to test out environments and maybe even characters, Google views this as - no surprise - a step towards AGI. 
That's Artificial General Intelligence, and the view here from DeepMind is that it can train various AI agents in an unlimited number of deeply immersive environments within Genie 3. Another key improvement with Genie 3 is its ability to persist objects within the world - for instance, we observed a set of arms and hands using a paint roller to apply blue paint to a wall. In the clip, we saw a few wide stripes of rolled blue paint on the wall, then turned away and looked back to see the paint marks still in the correct spots. It's neat, and similar to some of the object permanence that Apple's set to achieve with visionOS 26 - of course, that's overlaying onto your real-world environment, so maybe not quite as impressive. DeepMind lays out the limitations of Genie 3, noting that in its current version, the world model cannot "simulate real-world locations with perfect geographic accuracy" and that it only supports a few minutes of interaction. Genie 3's minutes of capability are still a significant jump over Genie 2, but it's not enabling hours of use. You also can't jump into the world of Genie 3 right now. It's available to a small set of testers. Google does note it's hoping to make Genie 3 available to other testers, but it's figuring out the best way to do so. It's unclear what the interface to interact with Genie 3 looks like at this stage, but from the shared demos, it's pretty clear that this is some compelling tech. Whether Google restricts its use to AI research and training, or it explores generating media, I have no doubt we'll see Genie 4 here in short order ... or at least an expansion of Genie 3. For now, I'll go back to playing with Veo 3.
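The paint-roller demo, together with Google's reported figure of about a minute of visual memory, can be pictured as a rolling window: at 24 fps, one minute is 24 × 60 = 1,440 frames. The Python sketch below is a hypothetical illustration of that idea, not how Genie 3 actually implements memory. `WindowedMemory` and `revisit_after` are invented names, and each "frame" is reduced to a location label plus a description. A revisit within the window recalls the paint; a revisit after it has rolled off does not.

```python
from collections import deque
from typing import Deque, Optional, Tuple

FPS = 24
MEMORY_SECONDS = 60  # Google's reported "about a minute" of visual memory
Frame = Tuple[int, str, str]  # (frame index, location in view, what is visible there)


class WindowedMemory:
    """Hypothetical rolling memory that keeps only the most recent minute of frames."""

    def __init__(self, fps: int = FPS, seconds: int = MEMORY_SECONDS) -> None:
        # A deque with maxlen silently discards the oldest entry once full.
        self.frames: Deque[Frame] = deque(maxlen=fps * seconds)

    def record(self, index: int, location: str, content: str) -> None:
        self.frames.append((index, location, content))

    def recall(self, location: str) -> Optional[str]:
        # Search newest-first for the last time this location was in view.
        for _, loc, content in reversed(self.frames):
            if loc == location:
                return content
        return None


def revisit_after(seconds_away: int) -> Optional[str]:
    """Paint a wall, look elsewhere for `seconds_away`, then look back at the wall."""
    memory = WindowedMemory()
    memory.record(0, "wall", "blue paint stripes")
    for i in range(1, seconds_away * FPS + 1):
        memory.record(i, "window", "snowy hill")
    return memory.recall("wall")


print(revisit_after(30))  # blue paint stripes
print(revisit_after(90))  # None
```

A fixed window keeps memory cost bounded but forgets anything older than its length, which is one simple way to see why extending consistency from minutes to hours is hard.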

[10]

15 Mind-Blowing Interactive Worlds Google's Genie 3 AI Can Create

Google announced Genie 3, a new general-purpose AI-powered world model that can generate diverse, photorealistic interactive environments. When given a text prompt, Genie 3 can create dynamic worlds users can explore in real time at up to 720p resolution and 24 frames per second. While 720p24 exploration may not initially sound impressive, Genie 3 represents a significant step forward as Google's first world model that allows complex real-time interaction. In contrast, Genie 2's resolution topped out at 360p, and it offered users minimal movement inside AI-generated worlds. Users could perform a small set of actions in Genie 2 for about 10 to 20 seconds. In Genie 3, however, they can navigate a world for multiple minutes and even interact with in-world objects. It's also instructive to consider Google's latest AI video generator, Veo 3. This generative AI video model represents a significant advancement over Veo 2, capable of achieving 4K resolution output. However, it has a notable limitation: clips are short, under 10 seconds, and interaction is restricted to video output controls. It is also worth noting that Genie 1 launched less than a year and a half ago. The progress Google DeepMind's researchers have made is remarkable, if not a bit scary. Genie 3 delivers real-world physics modeling, including water and lighting, the ability to simulate plant and animal behavior, fully modeled characters, and the ability to recreate real-world locations and even past eras. "Achieving a high degree of controllability and real-time interactivity in Genie 3 required significant technical breakthroughs. During the auto-regressive generation of each frame, the model has to take into account the previously generated trajectory that grows with time," Google explains. "For example, if the user is revisiting a location after a minute, the model has to refer back to the relevant information from a minute ago.
To achieve real-time interactivity, this computation must happen multiple times per second in response to new user inputs as they arrive." Google notes that it is also highly challenging to maintain consistency over any extended period with a foundation world model, as seemingly minor inaccuracies quickly snowball. The system has a visual memory of about a minute: if a user navigates away from an object and then comes back, that object should remain in its original location. It is a significant accomplishment and a first for Google. Google admits there are limitations, including a limited action space, challenges with multi-agent interaction in generated worlds, text rendering (a common issue for generative AI), and occasionally inaccurate geographic modeling of real locations. Nonetheless, the foundational technology on display here is remarkable. Genie 3 is currently available to selected academics and researchers, but Google is investigating how to bring Genie 3 to additional testers soon.
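The auto-regressive loop Google describes can be illustrated with a toy model. This is a sketch under stated assumptions, not DeepMind's implementation: the class `ToyWorldModel`, its `step` and `can_recall` methods, and the fixed-size sliding window are all hypothetical. The window size simply multiplies the reported 24 frames per second by the roughly one-minute visual memory (1,440 frames), and the same figures imply a real-time budget of about 41.7 ms per frame.

```python
from collections import deque
from dataclasses import dataclass

FPS = 24                      # frame rate reported for Genie 3
MEMORY_SECONDS = 60           # roughly one minute of visual memory, as reported
FRAME_BUDGET_MS = 1000 / FPS  # time available per frame to stay real-time (~41.7 ms)


@dataclass
class Frame:
    index: int
    action: str
    context_size: int  # how many earlier frames this frame was conditioned on


class ToyWorldModel:
    """Hypothetical stand-in for an auto-regressive world model.

    Each new frame is conditioned on the latest user action plus the frames
    still inside a fixed-size memory window. Older frames fall out of the
    window, which bounds the otherwise ever-growing trajectory. This windowing
    scheme is an assumption for illustration only.
    """

    def __init__(self, prompt: str, fps: int = FPS, memory_seconds: int = MEMORY_SECONDS):
        self.prompt = prompt
        self.memory: deque = deque(maxlen=fps * memory_seconds)
        self.t = 0

    def step(self, action: str) -> Frame:
        # A real model would run a neural network over (prompt, memory, action);
        # here we only record how much context the new frame could draw on.
        frame = Frame(index=self.t, action=action, context_size=len(self.memory))
        self.memory.append(frame)  # deque drops the oldest frame once full
        self.t += 1
        return frame

    def can_recall(self, index: int) -> bool:
        """True if the frame with this index is still inside visual memory."""
        return bool(self.memory) and self.memory[0].index <= index < self.t


if __name__ == "__main__":
    world = ToyWorldModel("a snowy hill")
    for _ in range(FPS * 120):  # two minutes of "forward" inputs
        last = world.step("forward")
    print(last.context_size)     # 1440
    print(world.can_recall(0))   # False: the first frame is more than a minute old
```

Bounding the context this way keeps the per-frame work constant, which is one plausible reason a real-time system would cap its memory rather than attend to the whole trajectory; how Genie 3 actually manages this is not described in the sources.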

[11]

Genie 3: A New Frontier for World Models

Given a text prompt, Genie 3 can generate dynamic worlds that you can navigate in real time at 24 frames per second, retaining consistency for a few minutes at a resolution of 720p. At Google DeepMind, we have been pioneering research in simulated environments for over a decade, from training agents to master real-time strategy games to developing simulated environments for open-ended learning and robotics. This work motivated our development of world models, which are AI systems that can use their understanding of the world to simulate aspects of it, enabling agents to predict both how an environment will evolve and how their actions will affect it. World models are also a key stepping stone on the path to AGI, since they make it possible to train AI agents in an unlimited curriculum of rich simulation environments. Last year we introduced the first foundation world models with Genie 1 and Genie 2, which could generate new environments for agents. We have also continued to push the state of the art in video generation with our models Veo 2 and Veo 3, which exhibit a deep understanding of intuitive physics. Each of these models marks progress along different capabilities of world simulation. Genie 3 is our first world model to allow interaction in real-time, while also improving consistency and realism compared to Genie 2.

[12]

Google outlines latest step towards creating artificial general intelligence

Genie 3 world model's ability to simulate real environments means it can be used to train robots. Google has outlined its latest step towards artificial general intelligence (AGI) with a new model that allows AI systems to interact with a convincing simulation of the real world. The Genie 3 "world model" could be used to train robots and autonomous vehicles as they engage with realistic recreations of environments such as warehouses, according to Google. The US technology and search company's AI division, Google DeepMind, argues that world models are a key step to achieving AGI, a hypothetical level of AI where a system can carry out most tasks on a par with humans - not just individual tasks such as playing chess or translating languages - and potentially do someone's job. DeepMind said such models would play an important role in the development of AI agents, or systems that carry out tasks autonomously. "We expect this technology to play a critical role as we push toward AGI, and agents play a greater role in the world," DeepMind said. However, Google said Genie 3 is not yet ready for full public release and did not give a date for its launch, adding that the model had a range of limitations. The announcement comes amid ever-increasing competition in the AI market, with Sam Altman, chief executive of ChatGPT developer OpenAI, sharing a screenshot on Sunday of what appeared to be the company's latest AI model, GPT-5. Google said the model could also help humans experience a range of simulations for training or exploring, replicating experiences such as skiing or walking around a mountain lake. Genie 3 creates its scenarios immediately from text prompts, according to DeepMind, and the simulated environment can be altered quickly - by, for instance, introducing a herd of deer on to a ski slope - with further text prompts.
The tech company showed the Genie 3-created skiing and warehouse scenarios to journalists on Monday but is not yet releasing the model to the public. The quality of the simulations seen by the Guardian is on a par with Google's latest video creation model, Veo 3, but they last minutes rather than the eight seconds offered by Veo 3. While AGI has been viewed through the prism of potentially eliminating white collar jobs, as autonomous systems carry out an array of jobs from sales agent to lawyer or accountant, world models are viewed by Google as a key technology for developing robots and autonomous vehicles. For instance, a recreation of a warehouse with realistic physics and people could help train a robot, because what it "learned" from the simulation during training would help it achieve its goal. Google has also created a virtual agent, SIMA, that can carry out tasks in video game settings, although, like Genie 3, it is not publicly available. Andrew Rogoyski of the Institute for People-Centred AI at the University of Surrey in the UK said world models could also help large language models - the technology that underpins chatbots such as ChatGPT. "If you give a disembodied AI the ability to be embodied, albeit virtually, then the AI can explore the world, or a world - and grow in capabilities as a result," he said. "While AIs are trained on vast quantities of internet data, allowing an AI to explore the world physically will add an important dimension to the creation of more powerful and intelligent AIs." In a research note accompanying the SIMA announcement last year, Google researchers said world models are important because large language models are effective at tasks such as planning but not at taking action on a human's behalf.

[13]

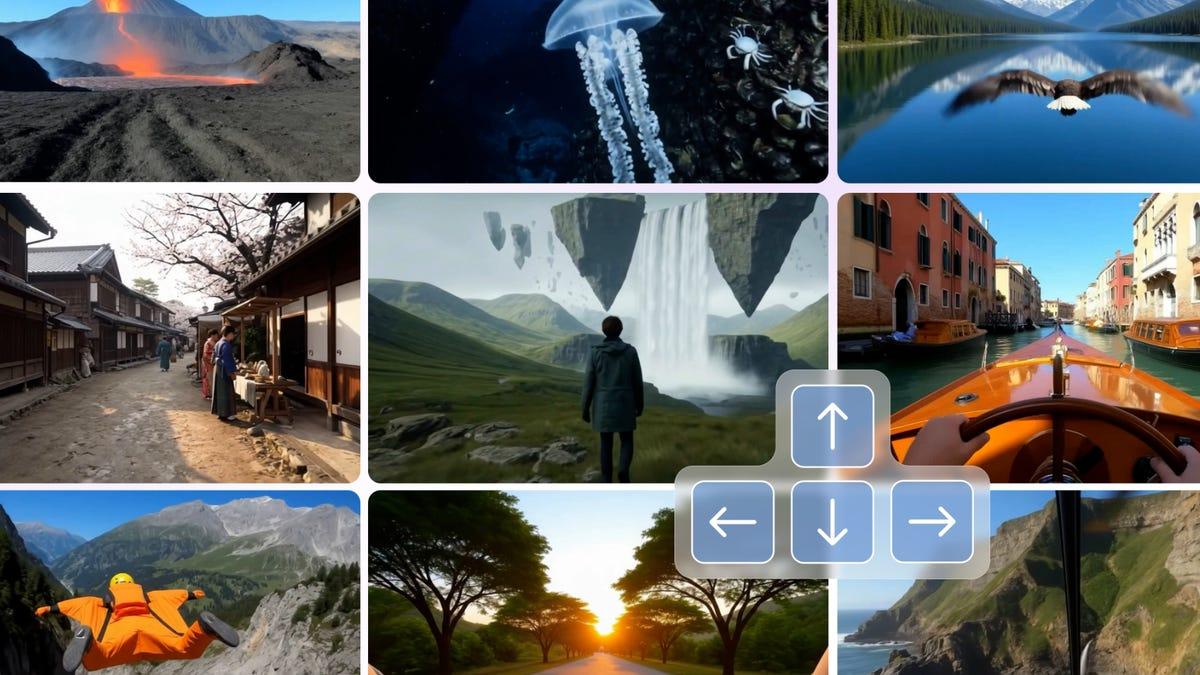

Google DeepMind debuts Genie 3 model for generating interactive virtual worlds - SiliconANGLE

Alphabet Inc.'s Google DeepMind lab today detailed Genie 3, an artificial intelligence model designed to generate virtual worlds. The AI is the third iteration of an algorithm series that the company introduced last February. According to DeepMind, it could help advance machine learning research by creating higher-quality training environments for AI models. Genie 3 generates virtual worlds based on natural language prompts. It can simulate forests, Alpine landscapes and other outdoor locations along with indoor spaces. In one internal test, DeepMind researchers had Genie 3 generate an elaborate underwater environment complete with a large jellyfish. Users can change a virtual world with prompts. Genie 3 may be instructed to modify the weather, adjust the camera angle or add objects to an environment. It's also possible to simulate interactions between those objects. The algorithm's predecessor, Genie 2, could render virtual environments for up to 20 seconds at a time. DeepMind says that Genie 3 can manage up to several minutes. The Alphabet unit's researchers also boosted rendering quality from 360p to 720p, which corresponds to a resolution of 1280 by 720 pixels. Rendering consistency is another area where Genie 3 offers improvements. During user sessions, the model analyzes past frames to determine how future frames should be generated. "Generating an environment auto-regressively is generally a harder technical problem than generating an entire video, since inaccuracies tend to accumulate over time," DeepMind researchers Jack Parker-Holder and Shlomi Fruchter explained in a blog post today. "Despite the challenge, Genie 3 environments remain largely consistent for several minutes, with visual memory extending as far back as one minute ago." DeepMind believes that Genie 3 could lend itself to training embodied agents.
Those are AI agents designed to power autonomous systems such as industrial robots. Often, such algorithms are trained in simulations of the real-world environments they will be expected to navigate. DeepMind put Genie 3's embodied agent training features to the test using an AI model called SIMA. The latter algorithm is designed to autonomously perform tasks in virtual environments. During the test, DeepMind researchers successfully instructed SIMA to perform a series of actions in environments generated by Genie 3. "In each world we instructed the agent to pursue a set of distinct goals, which it aims to achieve by sending navigation actions to Genie 3," Parker-Holder and Fruchter wrote. "Like any other environment, Genie 3 is not aware of the agent's goal, instead it simulates the future based on the agent's actions."
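The division of labor Parker-Holder and Fruchter describe, where the agent holds the goal and the world model only reacts to actions, is the standard agent-environment loop. A minimal sketch, assuming a toy grid in place of a generated world: `ToyGridWorld`, `GreedyAgent`, and `run_episode` are hypothetical names, and nothing here reflects how SIMA or Genie 3 work internally.

```python
from typing import List, Optional, Tuple

Position = Tuple[int, int]


class ToyGridWorld:
    """Hypothetical stand-in for a generated world.

    Like the Genie 3 environment described in the text, it never sees the
    agent's goal: it only receives navigation actions and returns the next
    observation.
    """

    MOVES = {"north": (0, 1), "south": (0, -1), "east": (1, 0), "west": (-1, 0)}

    def __init__(self) -> None:
        self.pos: Position = (0, 0)

    def reset(self) -> Position:
        self.pos = (0, 0)
        return self.pos

    def step(self, action: str) -> Position:
        dx, dy = self.MOVES[action]
        self.pos = (self.pos[0] + dx, self.pos[1] + dy)
        return self.pos


class GreedyAgent:
    """Hypothetical goal-directed agent; the goal lives here, not in the world."""

    def __init__(self, goal: Position) -> None:
        self.goal = goal

    def act(self, obs: Position) -> Optional[str]:
        x, y = obs
        gx, gy = self.goal
        if x < gx:
            return "east"
        if x > gx:
            return "west"
        if y < gy:
            return "north"
        if y > gy:
            return "south"
        return None  # goal reached, stop sending actions


def run_episode(world: ToyGridWorld, agent: GreedyAgent, max_steps: int = 100) -> List[Position]:
    """Agent observes and picks an action; the world simulates the result; repeat."""
    obs = world.reset()
    trajectory = [obs]
    for _ in range(max_steps):
        action = agent.act(obs)
        if action is None:
            break
        obs = world.step(action)
        trajectory.append(obs)
    return trajectory


if __name__ == "__main__":
    # Same world, different goals: only the agent changes.
    world = ToyGridWorld()
    print(run_episode(world, GreedyAgent(goal=(2, -1))))  # [(0, 0), (1, 0), (2, 0), (2, -1)]
    print(run_episode(world, GreedyAgent(goal=(-1, 1))))  # [(0, 0), (-1, 0), (-1, 1)]
```

Keeping the goal out of the environment is what lets one generated world serve many training tasks: only the agent's objective changes between episodes.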

[14]

Google DeepMind Unveils Genie 3 as Step Toward AGI With Real-Time 3D World Generation | AIM

Access to Genie 3 is currently limited to a small group of academic researchers and creative professionals. Google DeepMind has announced Genie 3, its latest AI world model capable of generating interactive 3D environments in real time while preserving visual consistency. "Given a text prompt, Genie 3 can generate dynamic worlds that you can navigate in real time at 24 frames per second, retaining consistency for a few minutes at a resolution of 720p," the company said in its blog post. Previous models, such as Genie 2, allowed only around 10-20 seconds of interaction. https://youtu.be/PDKhUknuQDg Unlike earlier versions, Genie 3 retains objects in place even when users move the camera away and return later. This ability to remember elements such as wall markings or object positions is being presented as a step forward in the development of AI-driven virtual environments.

[15]

Genie 3 lets users prompt AI-generated playable environments

Google DeepMind has introduced Genie 3, a new AI world model capable of generating 3D environments for real-time interaction by users and AI agents. This iteration enhances sustained user interaction and improves object memory within these simulated worlds. World models are AI systems designed to simulate environments for purposes including education, entertainment, and training for robots or AI agents. These systems generate interactive spaces from user prompts; unlike handcrafted 3D assets, the environments are created entirely by AI. Google has invested significantly in this area, previously demonstrating Genie 2 in December, which could generate interactive worlds from images. A dedicated world models team, co-led by a former lead of OpenAI's Sora video generation tool, leads these efforts. Previous models exhibited limitations. Genie 2 worlds, for instance, were playable for at most one minute, and earlier interactive video technologies have shown environments that distort when viewed or re-viewed. Genie 3 addresses some of these drawbacks. Users can generate worlds via prompts that support "a few" minutes of continuous interaction, an increase from the 10-20 seconds typically offered by Genie 2, as stated in a blog post by Google. Genie 3 can maintain spaces in visual memory for approximately one minute, ensuring elements like paint on a wall or writing on a chalkboard remain in place upon re-observation. The generated worlds will feature a 720p resolution and operate at 24 frames per second. DeepMind is incorporating "promptable world events" into Genie 3. Users will be able to alter weather conditions or introduce new characters within a generated world through prompts. Genie 3 is currently offered as "a limited research preview," accessible to "a small cohort of academics and creators." This controlled release is meant to help the developers assess and mitigate risks, according to Google.
Restrictions include limited methods of user interaction and the fact that legible text is "often only generated when provided in the input world description." Google has stated it is "exploring" broader dissemination to "additional testers" in the future.

[16]

Google DeepMind Unveils Genie 3, a Real-Time Virtual World Model for Advancing A.I.

Genie 3 marks a major step in A.I.-powered simulation, enabling agents to train in real time across diverse virtual scenarios. Biking on a narrow mountain road in India, driving through volcanic terrain and wingsuit flying over the Alps -- these are just a few of the experiences that can now be virtually simulated by Google's newest A.I. model, Genie 3. The so-called "world model" generates vast, interactive 3D environments for both humans and A.I. systems to explore, a development Google DeepMind describes as a "key stepping stone" toward advanced forms of A.I. "This line of work (and world models in general) is very close to my heart," said Demis Hassabis, CEO of Google DeepMind, in a post on X. "Back in the 90s when I was designing [simulation] games we could only dream of one day having tech like this," added Hassabis, who began his career as a video game programmer. Genie 3's uses extend far beyond gaming. With its ability to create unlimited environments, DeepMind says the model can train A.I. agents to navigate real-world scenarios. "We expect this technology to play a critical role as we push towards AGI, and agents play a greater role in the world," said the company. Across Silicon Valley, companies are racing toward artificial general intelligence (AGI) -- A.I. systems with human-level capabilities -- by releasing ever more powerful models. Earlier this week, OpenAI unveiled GPT-5, its fastest and most advanced model to date, which the company claims demonstrates "Ph.D.-level" performance in areas like writing and coding.

World models vs. large language models

Unlike large language models (LLMs) like GPT-5, Genie 3 doesn't generate text or code. Instead, it uses prompts to create virtual worlds that can train physical A.I. agents, such as robots and autonomous systems, for deployment in the real world. Its capabilities include simulating an industrial bakery, where an agent must learn how to approach an industrial mixer or move to cooling racks. Through text prompts, users can instantly alter aspects of the environment, such as weather conditions, and add "what if" scenarios, such as a herd of deer crossing a ski slope, to test how agents handle the unexpected. https://observer.com/wp-content/uploads/sites/2/2025/08/25.mp4 Genie 1 and Genie 2, released last year, could generate new environments for training agents. Genie 3 is the first version to allow real-time interaction and offers improved realism and consistency. Google isn't alone in advancing A.I.-powered simulations. Nvidia earlier this year launched its own world-model platform to train self-driving cars and robots. And World Labs, the startup founded by A.I. pioneer Fei-Fei Li, has raised about $230 million to fund technology that turns 2D images into interactive 3D worlds. Despite Genie 3's leap forward, DeepMind acknowledges current limitations. Its geographic accuracy isn't flawless, and it struggles with enabling multiple agents to interact in the same environment. Real-time responsiveness may also raise safety concerns. For now, Google will release Genie 3 only to a small group of academics and creators to study potential risks before a wider rollout.

[17]

Genie 3: Google DeepMind's New AI Turns Prompts into Living, Breathing 3D Worlds

Revealed in August 2025, Genie 3 takes a basic text or image prompt and instantly generates a playable 3D world, complete with objects that you can move, weather that shifts with commands, and environments that remember what you've done, even when you walk away. We're talking 720p visuals, 24 FPS performance, and consistency across several minutes of continuous exploration. Unlike Genie 2, which was impressive but limited to short, grainy video loops, Genie 3 is built for immersion. It supports real-time editing on the fly: just type in "spawn a storm" or "build a cave," and it happens instantly, with no reload required. This level of interactivity is powered by what DeepMind describes as auto-regressive world generation, which isn't hardcoded with rules. Instead, Genie 3 learns how the world works (gravity, water, and shadows) just by watching video data. That means the system doesn't fake physics; it internalizes them, leading to emergent, realistic behaviour without manual programming. What really elevates Genie 3 is its spatiotemporal consistency. If you paint a wall or drop a sword somewhere, leave the scene, and return within its roughly one-minute visual memory, the AI remembers the state as you left it. That's a massive step toward AI that understands continuity, something generative video models have long struggled with. DeepMind isn't pitching this as a toy; they see Genie 3 as a training ground for general-purpose intelligence. These hyper-realistic, memory-rich environments are where future AI agents can learn safely, without risking real-world consequences. Despite its potential, Genie 3 isn't open to the public yet. It's currently in limited research preview, accessible only to a small group of academics and creators while DeepMind fine-tunes its safety and governance protocols. Still, the implications are crystal clear.
Genie 3 is no longer just about creative play; it's a foundational step toward artificial general intelligence (AGI), offering a simulated world where machines can learn, adapt, and possibly outpace human intuition. Simply put, Genie 3 doesn't just build worlds; it builds the infrastructure for AI to truly live in them.

[18]

Google's New Genie 3 AI Lets You Create Interactive Worlds You Can Explore

Genie 3 maintains visual consistency over a long horizon, and its visual memory extends to one minute. Google DeepMind has announced Genie 3, a frontier world model that can generate interactive environments. Genie 3 is a general-purpose world model, designed to generate dynamic worlds from text prompts and allow users to navigate within the simulated world. It's a major breakthrough by the DeepMind team, as world models are a "key stepping stone on the path to AGI." Genie 3 can generate interactive environments in real time at 24 FPS with a resolution of 720p while maintaining consistency for a few minutes. Earlier, Genie 2 could only generate environments in 360p that lasted 10 to 20 seconds, and it was limited to 3D environments. Now, Genie 3 can generate any environment, and sessions span multiple minutes. Not only that, Genie 3 maintains environmental consistency for several minutes during the simulation: objects and locations remain the same even when users move away, navigate elsewhere, and come back into view. DeepMind says Genie 3's visual memory extends to one minute, which allows the model to reference visuals from up to one minute earlier. What is surprising is that this environmental consistency emerged naturally from training. No explicit 3D representations, such as NeRFs or Gaussian Splatting, are used to keep the environment consistent. Genie 3 generates the dynamic world frame by frame based on user descriptions and actions, making it far more dynamic and diverse. Users can also change the world with text-based instructions: you can alter the weather conditions, add new objects and characters, and change the location. In my review of Veo 2, I mentioned that Google's video generation model has far better visual coherence than other AI models out there. With the latest Veo 3, Google has made it even better, and now Genie 3 makes the world navigable.
If you are wondering what the use case for world models like Genie 3 is, well, Genie 3 can generate interactive games from simple descriptions. Simply by prompting, users can generate infinite game worlds and fully explore them. Microsoft already showcased its World and Human Action Model (WHAM), called Muse, which generated Quake II gameplay sequences using AI. Apart from that, Genie 3 can be helpful in robotics, where robots can be trained in unlimited simulated scenarios. In fact, Google is already testing its SIMA agent in worlds generated by Genie 3. This will allow AI labs to train robots to achieve goals in the real world.

[19]

Create Entire Worlds with Just Words: How Genie 3 is Changing Games

What if you could create an entire world -- complete with evolving landscapes, interactive objects, and persistent memories -- using nothing more than a few words? This isn't a scene from a sci-fi blockbuster; it's the promise of Genie 3, the latest innovation from Google DeepMind. By combining innovative AI with natural language processing, Genie 3 transforms the way we interact with virtual environments, making them not only immersive but also infinitely adaptable. Whether you're a gamer crafting a personalized adventure, a researcher simulating disaster scenarios, or an educator building hands-on learning experiences, Genie 3 offers a glimpse into a future where the boundaries between imagination and reality blur. But with such transformative potential come pressing questions: How far can this technology go? And what challenges must it overcome to truly transform how we engage with the digital world? In this exploration of Genie 3, AI Explained uncovers the new features that make this platform a compelling option in fields as diverse as gaming, robotics, and education. You'll discover how its real-time interactivity and persistent memory create dynamic, evolving environments that respond to your input in ways previously unimaginable. But it's not all smooth sailing -- Genie 3's limitations in physics accuracy and real-world fidelity raise important questions about its current capabilities and future trajectory. As we delve deeper, you'll see how this technology could redefine not just virtual worlds, but also the ethical and societal frameworks that govern them. Could Genie 3 be the key to unlocking the full potential of AI-driven creativity, or does its promise come with risks we've yet to fully grasp? Genie 3 functions by allowing users to generate interactive virtual environments through plain text descriptions. With just a few words, you can create a digital world tailored to your specifications.
This process is powered by advanced AI algorithms capable of interpreting natural language and translating it into a virtual environment. One of its standout features is real-time interactivity, allowing users to explore and manipulate these environments as they evolve dynamically. This means that changes made to the environment -- such as constructing buildings or altering terrain -- are immediately reflected, creating a seamless and engaging experience. Another key feature is persistent memory, which ensures that modifications to the virtual world remain intact when revisited. This continuity adds a layer of realism and immersion, making the platform particularly appealing for long-term projects or storytelling. Additionally, Genie 3 supports dynamic environment modification, allowing users to reshape the virtual world as needed. This adaptability is especially useful in scenarios like training simulations or creative projects. However, the platform's reliance on explicit text prompts to render legible text, and the fact that its worlds stay consistent for only a few minutes, highlight areas where further refinement is necessary. The versatility of Genie 3 positions it as a valuable tool across a variety of industries, including gaming, robotics, and education, and these applications demonstrate the platform's ability to address real-world challenges while fostering creativity and innovation. Despite its new capabilities, Genie 3 faces several challenges that limit its effectiveness in certain scenarios, including physics inaccuracy, oversimplified interactions, and a lack of real-world fidelity. These challenges underscore the need for ongoing refinement to enhance the platform's capabilities and bridge the gap between virtual and real-world performance. The future of Genie 3 is filled with potential, particularly in its ability to redefine how we interact with virtual environments.
In gaming, it could pave the way for entirely new genres of interactive entertainment, where players actively shape their experiences in real time. Progress toward broader AI goals, such as Artificial General Intelligence (AGI), could further enhance its adaptability and intelligence. When compared to existing simulation platforms like Unreal Engine or Isaac Lab, Genie 3 stands out for its user-driven world generation and interactivity. However, it lags behind in areas such as physics modeling and visual fidelity, which are critical for professional and industrial applications. Addressing these gaps will be essential for its widespread adoption. As with any transformative technology, Genie 3 raises important ethical and societal questions. The immersive nature of AI-driven virtual worlds could blur the line between reality and simulation, potentially affecting mental health and social behavior. For example, prolonged exposure to highly engaging virtual environments might lead to detachment from real-world experiences. Scalability is another significant challenge. Large-scale simulations may strain computational resources, leading to inconsistencies or errors. Ensuring reliability while maintaining performance will be crucial as the platform evolves. Additionally, the societal impact of AI-generated environments must be carefully considered. Questions about who controls these technologies and how they are deployed will play a key role in determining their influence on industries and individuals. Responsible development and governance will be essential to ensure that Genie 3 is used ethically and equitably. Genie 3 represents a significant advancement in AI-driven simulation, offering unprecedented opportunities for creativity, training, and problem-solving. Its ability to generate dynamic, interactive virtual worlds from natural language prompts sets it apart as a powerful tool for both entertainment and practical applications.
However, its current limitations -- such as physics inaccuracy, oversimplified interactions, and a lack of real-world fidelity -- highlight the challenges that must be addressed for broader adoption. By tackling these technical and ethical hurdles, Genie 3 has the potential to become a cornerstone of next-generation AI technologies, unlocking new possibilities for innovation, exploration, and societal progress.

[20]

How Google Genie 3 Brings Virtual Worlds to Life Using AI

What if you could step into a virtual world that not only looks real but behaves as if it were alive, responding to your every move and command? With the advent of Genie 3, this is no longer a distant dream but an unfolding reality. Imagine altering the weather with a single prompt, watching a serene forest transform into a stormy wilderness, or simulating the intricate flow of water down a rocky stream -- all in real time. This innovative AI model doesn't just create virtual environments; it breathes life into them, offering an unprecedented level of interactivity and realism. Whether you're a game developer, a scientist, or an artist, Genie 3's ability to simulate dynamic worlds with stunning accuracy is reshaping the boundaries of what's possible. In this exploration of Genie 3 by Olivio Sarikas, you'll uncover how it redefines the relationship between artificial intelligence and the environments it creates. From its remarkable physical simulations to its applications in gaming, research, and creative media, Genie 3 is more than just an upgrade -- it's a leap forward in AI's ability to model and understand the world. But what truly sets it apart? How does it overcome the challenges of its predecessors, and what limitations still remain? By the end, you'll not only grasp the fantastic potential of this technology but also see how it could shape the future of AI and its role in bridging the gap between the digital and physical realms. Genie 3 stands out due to its exceptional ability to simulate dynamic environments with remarkable responsiveness. Operating at 24 frames per second and 720p resolution, it allows you to interact with virtual worlds in real time. For example, you can adjust the time of day, modify weather conditions, or introduce new elements like characters or objects -- all through simple prompts. Imagine transforming a tranquil forest into a stormy landscape or creating a bustling cityscape from scratch. 
These capabilities make Genie 3 a powerful tool for crafting immersive and interactive experiences. The model's adaptability extends beyond visual changes. It can simulate complex physical phenomena, such as water flow, fire behavior, and object collisions, with a high degree of accuracy. This level of detail helps every interaction feel natural and believable, enhancing the overall user experience. Building on the foundation of its predecessor, Genie 2, Genie 3 introduces several notable advancements that elevate its performance and usability. These upgrades not only improve the realism of simulations but also broaden the scope of potential applications, making Genie 3 a versatile tool for various industries. Genie 3's versatility makes it a valuable asset across a wide range of fields. Its ability to create and adapt virtual environments in real time points to applications in gaming, research, and creative media, highlighting its potential to drive innovation and streamline workflows across multiple disciplines. One of Genie 3's most impressive features is its ability to model physical and behavioral interactions with exceptional accuracy. Whether simulating the movement of marine life in an underwater scene or the sway of trees in a rainforest, the model delivers consistent and detailed results. This precision is particularly valuable for scientific research and design, where accurate simulations are essential for testing hypotheses or visualizing complex systems. Additionally, Genie 3's ability to simulate interactions between objects and agents enhances its utility for creating realistic scenarios. For example, it can model how a group of animals might behave in a shared environment or how objects interact under various physical forces. These capabilities make it a powerful tool for both creative and analytical purposes.
Genie 3 plays a pivotal role in advancing artificial intelligence by improving AI's understanding of real-world physics and interactions. This improved comprehension is a critical step toward artificial general intelligence (AGI). By allowing AI systems to navigate and make decisions in complex environments, Genie 3 helps bridge the gap between virtual and physical worlds. The model's ability to simulate realistic scenarios also supports the development of AI technologies that can operate effectively in real-world settings. For instance, AI agents trained in Genie 3's environments could apply their skills to tasks such as autonomous navigation, disaster response, or industrial automation. These advancements position Genie 3 as a foundational tool for future AI-driven technologies. Despite its impressive capabilities, Genie 3 still has limitations, and they mark clear areas for improvement as the technology evolves. Addressing these challenges will be crucial for expanding the model's functionality and accessibility. As AI technology advances, the model is likely to overcome many of its current limitations, enabling more complex simulations and interactions. Improvements in accessibility and affordability could also bring the technology to a broader audience. Genie 3's ability to create immersive, interactive virtual worlds makes it a versatile tool across industries, and its potential to drive innovation in gaming, AI training, scientific research, and beyond could make it a cornerstone of AI development for years to come.

[21]

Google Genie 3 AI Creates Interactive Digital Worlds in Real-Time

What if you could create an entire world -- complete with lifelike physics, dynamic lighting, and seamless interactions -- at the speed of thought? With the unveiling of Google Genie 3, this once-futuristic vision is now a tangible reality. Unlike traditional tools that rely on painstakingly pre-designed models, Genie 3 uses advanced AI to generate immersive environments in real time, adapting instantly to user input. Whether you're designing a video game, simulating robotics tasks, or training AI systems, this new technology promises to redefine the boundaries of creativity and innovation. But as with any innovative leap, it raises intriguing questions: How far can AI go in mimicking reality? And what challenges lie ahead in perfecting such a powerful tool? In this exploration of Google's Genie 3, Matthew Berman uncovers the key features that set it apart, from its real-time generation capabilities to its seamless visual transitions. You'll discover how this innovative system is transforming industries like entertainment, AI training, and robotics by bridging the gap between virtual and physical realities. But Genie 3 isn't without its hurdles -- high computational demands and occasional visual inconsistencies hint at the complexities of pushing technology to its limits. As we delve deeper, consider this: Could Genie 3 be the stepping stone to Artificial General Intelligence, or is it merely a glimpse of what's to come? Genie 3 distinguishes itself by creating dynamic, interactive environments that adapt instantly to user input. Unlike traditional systems that depend on pre-designed 3D models, Genie 3 employs advanced AI algorithms to generate environments on demand. This innovative approach delivers unparalleled flexibility and scalability in simulation. 
Its standout features, including real-time generation and seamless visual transitions, let users interact with digital environments in unprecedented ways, whether designing a game, training AI systems, or simulating robotics tasks. The ability to create and modify environments in real time opens up new possibilities for innovation and creativity. This versatility creates opportunities across multiple sectors, including entertainment, AI training, and robotics, where Genie 3 helps bridge the gap between virtual and physical realities and drives innovation and efficiency. Building on the foundation of its predecessor, Genie 2, Genie 3 introduces several advancements that make it a powerful tool for creating rich, dynamic worlds and set a new benchmark for AI-driven simulations. Generating environments on demand significantly improves efficiency and adaptability, making it a valuable asset for professionals across many domains. Genie 3 still faces challenges that must be addressed before it can reach its full potential, notably high computational demands and occasional visual inconsistencies. Currently, Genie 3 is undergoing internal testing at Google, with no public release date announced. This testing phase provides an opportunity to optimize the system and address its limitations before it becomes widely available. Looking ahead, future developments could extend Genie 3 well beyond its current capabilities and redefine how we interact with digital environments, making it a cornerstone of future AI-driven technologies.

[22]

Genie 3 model from Google DeepMind lets you generate 3D worlds with a text prompt

Follow-up to Veo 3 introduces AI-powered playable simulations

When I first saw Google's Veo 3 in action earlier this year, I thought the bar had been raised for what AI creativity could mean. Being able to generate high-quality videos complete with realistic sound, motion, and atmosphere felt like peering into the future of filmmaking. But Veo still had its limits: the experience was beautiful, but passive. You couldn't move through the world. You couldn't interact. Now, with the announcement of Genie 3, that boundary between spectator and participant might be about to dissolve. Genie 3 is DeepMind's newest generative model, built not for video but for fully interactive 3D environments. Type in a prompt, and the model doesn't just create a clip; it builds a world you can walk through. A world with memory, physics, and responsiveness. It's not available yet. But even at the announcement stage, the implications are massive. And if Veo 3 hinted at where AI-driven media was headed, Genie 3 kicks down the next door entirely. At its heart, Genie 3 is a "world model": an architecture designed to generate coherent, interactive spaces from simple inputs like text or images. These aren't traditional 3D game engines filled with coded assets. Instead, Genie 3 learns from raw video data how the world should behave, and then simulates that behavior frame by frame. The results, according to DeepMind's demonstrations, are astonishing: dynamic 3D environments rendered at 720p and 24 fps that persist across space and time. When you move inside the generated world, objects maintain their place. Shadows stay consistent. Water continues flowing downstream. The environment holds together like a real space. It's a leap forward from Genie 2, which could produce only seconds of low-res interaction. Genie 3 supports several minutes of gameplay-like movement.
The model adapts to your input in real time, whether it's navigating, interacting with terrain, or triggering environmental changes. Think: "rain begins to fall," or "a portal appears behind the tree." These aren't edits to a static scene; they're changes to a living simulation. In many ways, Genie 3 feels like a sequel to Veo 3, but where Veo ends, Genie begins. Veo 3 introduced the world to highly detailed, audio-synced video generation. It could take prompts like "a surfer riding a wave at sunset" and return cinematic footage that felt almost real. Genie 3 takes the same storytelling instincts and injects interactivity into the mix. The viewer becomes the protagonist. Instead of watching a moment unfold, you explore it. Together, the models mark the beginnings of a creative stack where text becomes not just media, but space. Imagine using Veo 3 to generate a narrative intro scene, and Genie 3 to let the audience wander through that setting, controlling the camera, discovering new angles, even influencing the story. This is where storytelling becomes simulation. And the impact for creators could be huge. The animation pipeline today is complex and expensive. World-building requires entire teams: concept artists, 3D modelers, texture specialists, lighting experts, rigging technicians. Genie 3 proposes a radical alternative: describe your world, and let the model build it. Need a snowy mountain village at dusk? A neon-lit cyberpunk alley? A crumbling temple overtaken by vines? Genie 3 can conjure these settings with a prompt. And once inside them, creators could hypothetically move their camera, block scenes, or experiment with lighting without touching a single 3D tool. It's not just about saving time. It's about redefining how early-stage creative decisions are made. Genie 3 could act as a real-time previsualization engine.
Directors might "scout" locations inside AI-generated worlds before ever building sets. Indie filmmakers could prototype entire sequences. Animators could experiment with scene composition, framing, and mood, iterating in hours instead of weeks. While the model isn't public yet, the mere concept of a tool like Genie 3 is enough to make waves. It's not hard to imagine future animation workflows being built around generative backbones like this. Beyond media and entertainment, Genie 3 has another purpose: AI training. World models are essential for creating environments where digital agents can learn safely and efficiently. DeepMind has already begun using Genie 3 to simulate environments for agent tasks like navigating to an object, avoiding hazards, or understanding instructions. These simulations are realistic enough to mimic the sensory complexity of the real world, but controllable enough to accelerate learning. This capability, called "embodied learning," is crucial for the future of robotics and general-purpose AI. With Genie 3, DeepMind can create endless variations of a task environment. That means smarter agents, faster. Whether it's a digital assistant learning to follow multi-step commands or a robot navigating a warehouse, models like Genie 3 might become the sandbox in which AGI grows up. There are still limitations: scenes are short, actions are basic, and fidelity still trails behind what real-time game engines can achieve. But unlike game engines, Genie 3 doesn't require technical mastery. No coding. No modeling. Just intent. Just imagination. There's something poetic about where this is heading. With Veo 3, we told stories through visuals. With Genie 3, we might soon be simulating them as experiences: real, explorable, and endlessly mutable. For now, we wait. But the idea alone has already changed how I think about creativity.
It's no longer about producing a final output, but about shaping a living world that others can enter and interpret in their own way. The storyteller becomes the architect. The prompt becomes a portal. And if Genie 3 delivers on even half of what it promises, the way we create and experience worlds might never be the same again.


Google DeepMind unveils Genie 3, an advanced AI world model capable of generating interactive 3D environments in real-time, marking a significant step towards artificial general intelligence.

DeepMind Introduces Genie 3: A Leap in AI World Modeling

Google DeepMind has unveiled Genie 3, its latest foundation world model, marking a significant advancement in artificial intelligence (AI) technology. This new model represents a crucial step towards achieving artificial general intelligence (AGI), offering unprecedented capabilities in generating interactive, real-time 3D environments [1][2].


Key Features and Improvements

Genie 3 builds upon its predecessors, Genie and Genie 2, while incorporating elements from DeepMind's video generation model, Veo 3. The new model boasts several impressive features:

- Real-time Interaction: Genie 3 can generate multiple minutes of interactive 3D environments at 720p resolution and 24 frames per second, a substantial improvement over Genie 2's 10-20 second capability [2][3].
- Promptable World Events: Users can alter the generated world through text prompts, changing weather conditions or adding new characters [4].
- World Memory: The model maintains physical consistency over time by remembering previously generated elements, an emergent capability that was not explicitly programmed [2].
- Diverse Environment Generation: Genie 3 can create both photorealistic and imaginary worlds from simple text prompts [2][5].

Implications for AI Research and Development

DeepMind researchers view Genie 3 as more than just a generative model; it's a potential key to unlocking AGI, particularly for embodied agents [2][3].

-