EnCharge AI Unveils EN100: A Breakthrough in Analog AI Chip Technology

3 Sources

3 Sources

[1]

Is This Analog AI's Best Hope?

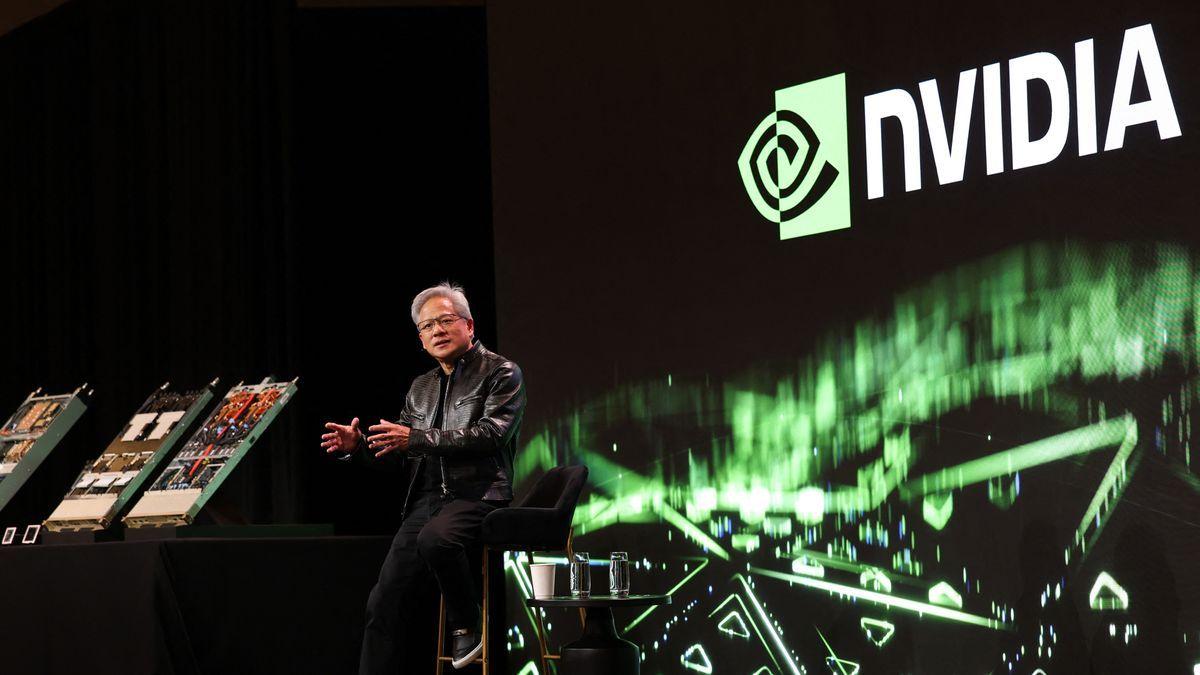

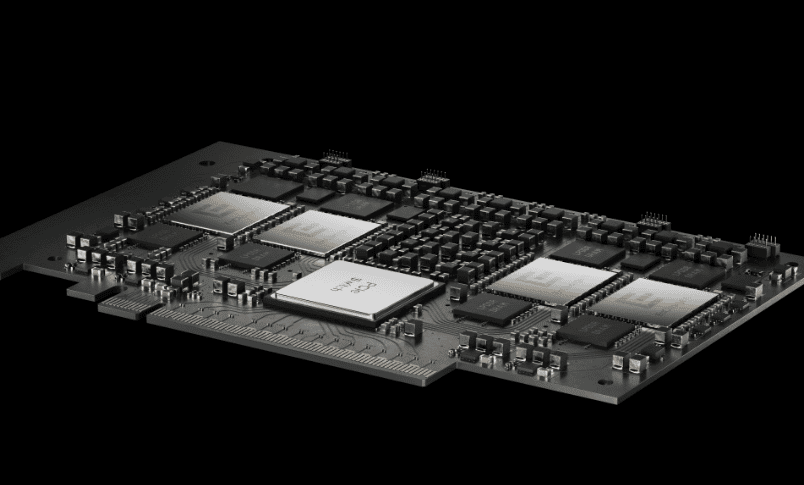

EnCharge AI's board for workstations and edge computers integrates four EN100 chips. Naveen Verma's lab at Princeton University is like a museum of all the ways engineers have tried to make AI ultra-efficient by using analog phenomena instead of digital computing. At one bench lies the most energy efficient magnetic-memory-based neural-network computer every made. At another you'll find a resistive-memory-based chip that can compute the largest matrix of numbers of any analog AI system yet. Neither has a commercial future, according to Verma. Less charitably, this part of his lab is a graveyard. Analog AI has captured chip architects' imagination for years. It combines two key concepts that should make machine learning massively less energy intensive. First, it limits the costly movement of bits between memory chips and processors. Second, instead of the 1s and 0s of logic, it uses the physics of the flow of current to efficiently do machine learning's key computation. As attractive as the idea has been, various analog AI schemes have not delivered in a way that could really take a bite out of AI's stupefying energy appetite. Verma would know. He's tried them all. But when IEEE Spectrum visited a year ago, there was a chip at the back of Verma's lab that represents some hope for analog AI and for the energy efficient computing needed to make AI useful and ubiquitous. Instead of calculating with current, the chip sums up charge. It might seem like an inconsequential difference, but it could be the key to overcoming the noise that hinders every other analog AI scheme. This week, Verma's startup EnCharge AI unveiled the first chip based on this new architecture, the EN100. The startups claims the chip tackles various AI work with performance per watt up to 20 times better than competing chips. It's designed into a single processor card that adds 200 trillion operations per second at 8.25 watts, aimed at conserving battery life in AI-capable laptops. On top of that, a 4-chip, 1000-trillion-operations-per-second card is targeted for AI workstations. In machine learning "it turns out, by dumb luck, the main operation we're doing is matrix multiplies," says Verma. That's basically taking an array of numbers, multiplying it by another array, and adding up the result of all those multiplications. Early on, engineers noticed a coincidence: Two fundamental rules of electrical engineering can do exactly that operation. Ohm's Law says that you get current by multiplying voltage and conductance. And Kirchoff's Current Law says that if you have a bunch of currents coming into a point from a bunch of wires, the sum of those currents is what leaves that point. So basically, each of a bunch of input voltages pushes current through a resistance (conductance is the inverse of resistance), multiplying the voltage value, and all those currents add up to produce a single value. Math, done. Sound good? Well, it gets better. Much of the data that makes up a neural network are the "weights," the things by which you multiply the input. And moving that data from memory into a processor's logic to do the work is responsible for a big fraction of the energy GPUs expend. Instead, in most analog AI schemes, the weights are stored in one of several types of nonvolatile memory as a conductance value (the resistances above). Because weight data is already where it needs to be to do the computation, it doesn't have to be moved as much, saving a pile of energy. The combination of free math and stationary data promises calculations that need just thousandths of a trillionth of joule of energy. Unfortunately, that's not nearly what analog AI efforts have been delivering. The fundamental problem with any kind of analog computing has always been the signal-to-noise ratio. Analog AI has it by the truckload. The signal, in this case the sum of all those multiplications, tends to be overwhelmed by the many possible sources of noise. "The problem is, semiconductor devices are messy things," says Verma. Say you've got an analog neural network where the weights are stored as conductances in individual RRAM cells. Such weight values are stored by setting a relatively high voltage across the RRAM cell for a defined period of time. The trouble is, you could set the exact same voltage on two cells for the same amount of time, and those two cells would wind up with slightly different conductance values. Worse still, those conductance values might change with temperature. The differences might be small, but recall that the operation is adding up many multiplications, so the noise gets magnified. Worse, the resulting current is then turned into a voltage that is the input of the next layer of neural networks, a step that adds to the noise even more. Researchers have attacked this problem from both a computer science perspective and a device physics one. In the hope of compensating for the noise, researchers have invented ways to bake some knowledge of the physical foibles of devices into their neural network models. Others have focused on making devices that behave as predictably as possible. IBM, which has done extensive research in this area, does both. Such techniques are competitive, if not yet commercially successful, in smaller-scale systems, chips meant to provide low-power machine learning to devices at the edges of IoT networks. Early entrant Mythic AI has produced more than one generation of its analog AI chip, but it's competing in a field where low-power digital chips are succeeding. EnCharge's solution strips out the noise by measuring the amount of charge instead of flow of charge in machine learning's multiply-and-accumulate mantra. In traditional analog AI, multiplication depending on the relationship among voltage, conductance, and current. In this new scheme, it depends on the relationship between voltage, capacitance, and charge -- where basically, charge equals capacitance times voltage. Why's that difference important? It comes down to the component that's doing the multiplication. Instead of using some finicky, vulnerable device like RRAM, EnCharge uses capacitors. A capacitor is basically two conductors sandwiching an insulator. A voltage difference between the conductors causes charge to accumulate on one of them. The thing that's key about them for the purpose of machine learning is that their value, the capacitance, is determined by their size. (More conductor area or less space between the conductors means more capacitance.) "The only thing they depend on is geometry, basically the space between wires," Verma says. "And that's the one thing you can control very, very well in CMOS technologies." EnCharge builds an array of precisely valued capacitors in the layers of copper interconnect above the silicon of its processors. The data that makes up most of a neural network model, the weights, are stored in an array of digital memory cells, each connected to a capacitor. The data the neural network is analyzing is then multiplied by the weight bits using simple logic built into the cell, and the results are stored as charge on the capacitors. Then the array switches into a mode where all the charges from the results of multiplications accumulate and the result is digitized. While the initial invention, which dates back to 2017, was a big moment for Verma's lab, he says the basic concept is quite old. "It's called switched capacitor operation; it turns out we've been doing it for decades," he says. It's used, for example, in commercial high-precision analog to digital converters. "Our innovation was figuring out how you can use it in an architecture that does in-memory computing." Verma's lab and EnCharge spent years proving that the technology was programmable and scalable and co-optimizing it with an architecture and software-stack that suits AI needs that are vastly different than they were in 2017. The resulting products are with early access developers now, and the company -- which recently raised US $100 million from Samsung Venture, Foxconn, and others -- plans another round of early access collaborations. But EnCharge is entering a competitive field, and among the competitors is the big kahuna, Nvidia. At its big developer event in March, GTC, Nvidia announced plans for a PC product built around its GB10 CPU-GPU combination and workstation built around the upcoming GB300. And there will be plenty of competition in the low-power space EnCharge is after. Some of them even use a form of computing-in-memory. D-Matrix and Axelera, for example, took part of analog AI's promise, embedding the memory in the computing, but do everything digitally. They each developed custom SRAM memory cells that both store and multiply and do the summation operation digitally, as well. There's even at least one more-traditional analog AI startup in the mix, Sagence. Verma is, unsurprisingly, optimistic. The new technology "means advanced, secure, and personalized AI can run locally, without relying on cloud infrastructure," he said in a statement. "We hope this will radically expand what you can do with AI."

[2]

Encharge AI unveils EN100 AI accelerator chip with analog memory

EnCharge AI, an AI chip startup that raised $144 million to date, announced the EnCharge EN100, an AI accelerator built on precise and scalable analog in-memory computing. Designed to bring advanced AI capabilities to laptops, workstations, and edge devices, EN100 leverages transformational efficiency to deliver 200-plus TOPS (a measure of AI performance) of total compute power within the power constraints of edge and client platforms such as laptops. The company spun out of Princeton University on the bet that its analog memory chips will speed up AI processing and cut costs too. "EN100 represents a fundamental shift in AI computing architecture, rooted in hardware and software innovations that have been de-risked through fundamental research spanning multiple generations of silicon development," said Naveen Verma, CEO at EnCharge AI, in a statement. "These innovations are now being made available as products for the industry to use, as scalable, programmable AI inference solutions that break through the energy efficient limits of today's digital solutions. This means advanced, secure, and personalized AI can run locally, without relying on cloud infrastructure. We hope this will radically expand what you can do with AI." Previously, models driving the next generation of AI economy -- multimodal and reasoning systems -- required massive data center processing power. Cloud dependency's cost, latency, and security drawbacks made countless AI applications impossible. EN100 shatters these limitations. By fundamentally reshaping where AI inference happens, developers can now deploy sophisticated, secure, personalized applications locally. This breakthrough enables organizations to rapidly integrate advanced capabilities into existing products -- democratizing powerful AI technologies and bringing high-performance inference directly to end-users, the company said. EN100, the first of the EnCharge EN series of chips, features an optimized architecture that efficiently processes AI tasks while minimizing energy. Available in two form factors - M.2 for laptops and PCIe for workstations - EN100 is engineered to transform on-device capabilities: ● M.2 for Laptops: Delivering up to 200+ TOPS of AI compute power in an 8.25W power envelope, EN100 M.2 enables sophisticated AI applications on laptops without compromising battery life or portability. ● PCIe for Workstations: Featuring four NPUs reaching approximately 1 PetaOPS, the EN100 PCIe card delivers GPU-level compute capacity at a fraction of the cost and power consumption, making it ideal for professional AI applications utilizing complex models and large datasets. EnCharge AI's comprehensive software suite delivers full platform support across the evolving model landscape with maximum efficiency. This purpose-built ecosystem combines specialized optimization tools, high-performance compilation, and extensive development resources -- all supporting popular frameworks like PyTorch and TensorFlow. Compared to competing solutions, EN100 demonstrates up to ~20x better performance per watt across various AI workloads. With up to 128GB of high-density LPDDR memory and bandwidth reaching 272 GB/s, EN100 efficiently handles sophisticated AI tasks, such as generative language models and real-time computer vision, that typically require specialized data center hardware. The programmability of EN100 ensures optimized performance of AI models today and the ability to adapt for the AI models of tomorrow. "The real magic of EN100 is that it makes transformative efficiency for AI inference easily accessible to our partners, which can be used to help them achieve their ambitious AI roadmaps," says Ram Rangarajan, Senior Vice President of Product and Strategy at EnCharge AI. "For client platforms, EN100 can bring sophisticated AI capabilities on device, enabling a new generation of intelligent applications that are not only faster and more responsive but also more secure and personalized." Early adoption partners have already begun working closely with EnCharge to map out how EN100 will deliver transformative AI experiences, such as always-on multimodal AI agents and enhanced gaming applications that render realistic environments in real-time. While the first round of EN100''s Early Access Program is currently full, interested developers and OEMs can sign up to learn more about the upcoming Round 2 Early Access Program, which provides a unique opportunity to gain a competitive advantage by being among the first to leverage EN100's capabilities for commercial applications at www.encharge.ai/en100. Competition EnCharge doesn't directly compete with many of the big players, as we have a slightly different focus and strategy. Our approach prioritizes the rapidly growing AI PC and edge device market, where our energy efficiency advantage is most compelling, rather than competing directly in data center markets. That said, EnCharge does have a few differentiators that make it uniquely competitive within the chip landscape. For one, EnCharge's chip has dramatically higher energy efficiency (approximately 20 times greater) than the leading players. The chip can run the most advanced AI models using about as much energy as a light bulb, making it an extremely competitive offering for any use case that can't be confined to a data center. Secondly, EnCharge's analog in-memory computing approach makes its chips far more compute dense than conventional digital architectures, with roughly 30 TOPS/mm2 versus 3. This allows customers to pack significantly more AI processing power into the same physical space, something that's particularly valuable for laptops, smartphones, and other portable devices where space is at a premium. OEMs can integrate powerful AI capabilities without compromising on device size, weight, or form factor, enabling them to create sleeker, more compact products while still delivering advanced AI features. Origins In March 2024, EnCharge partnered with Princeton University to secure an $18.6 million grant from DARPA Optimum Processing Technology Inside Memory Arrays (OPTIMA) program Optima is a $78 million effort to develop fast, power-efficient, and scalable compute-in-memory accelerators that can unlock new possibilities for commercial and defense-relevant AI workloads not achievable with current technology. EnCharge's inspiration came from addressing a critical challenge in AI: the inability of traditional computing architectures to meet the needs of AI. The company was founded to solve the problem that, as AI models grow exponentially in size and complexity, traditional chip architectures (like GPUs) struggle to keep pace, leading to both memory and processing bottlenecks, as well as associated skyrocketing energy demands. (For example, training a single large language model can consume as much electricity as 130 U.S. households use in a year.) The specific technical inspiration originated from the work of EnCharge 's founder, Naveen Verma, and his research at Princeton University in next generation computing architectures. He and his collaborators spent over seven years exploring a variety of innovative computing architectures, leading to a breakthrough in analog in-memory computing. This approach aimed to significantly enhance energy efficiency for AI workloads while mitigating the noise and other challenges that had hindered past analog computing efforts. This technical achievement, proven and de-risked over multiple generations of silicon, was the basis for founding EnCharge AI to commercialize analog in-memory computing solutions for AI inference. Encharge AI launched in 2022, led by a team with semiconductor and AI system experience. The team spun out of Princeton University, with a focus on a robust and scalable analog in-memory AI inference chip and accompanying software. The company was able to overcome previous hurdles to analog and in-memory chip architectures by leveraging precise metal-wire switch capacitors instead of noise-prone transistors. The result is a full-stack architecture that is up to 20 times more energy efficient than currently available or soon-to-be-available leading digital AI chip solutions. With this tech, EnCharge is fundamentally changing how and where AI computation happens. Their technology dramatically reduces the energy requirements for AI computation, bringing advanced AI workloads out of the data center and onto laptops, workstations, and edge devices. By moving AI inference closer to where data is generated and used, EnCharge enables a new generation of AI-enabled devices and applications that were previously impossible due to energy, weight, or size constraints while improving security, latency, and cost. Why it matters As AI models have grown exponentially in size and complexity, their chip and associated energy demands have skyrocketed. Today, the vast majority of AI inference computation is accomplished with massive clusters of energy-intensive chips warehoused in cloud data centers. This creates cost, latency, and security barriers for applying AI to use cases that require on-device computation. Only with transformative increases in compute efficiency will AI be able to break out of the data center and address on-device AI use-cases that are size, weight, and power constrained or have latency or privacy requirements that benefit from keeping data local. Lowering the cost and accessibility barriers of advanced AI can have dramatic downstream effects on a broad range of industries, from consumer electronics to aerospace and defense. The reliance on data centers also present supply chain bottleneck risks. The AI-driven surge in demand for high-end graphics processing units (GPUs) alone could increase total demand for certain upstream components by 30% or more by 2026. However, a demand increase of about 20% or more has a high likelihood of upsetting the equilibrium and causing a chip shortage. The company is already seeing this in the massive costs for the latest GPUs and years-long wait lists as a small number of dominant AI companies buy up all available stock. The environmental and energy demands of these data centers are also unsustainable with current technology. The energy use of a single Google search has increased over 20x from 0.3 watt-hours to 7.9 watt-hours with the addition of AI to power search. In aggregate, the International Energy Agency (IEA) projects that data centers' electricity consumption in 2026 will be double that of 2022 -- 1K terawatts, roughly equivalent to Japan's current total consumption. Investors include Tiger Global Management, Samsung Ventures, IQT, RTX Ventures, VentureTech Alliance, Anzu Partners, VentureTech Alliance, AlleyCorp and ACVC Partners. The company has 66 people.

[3]

EnCharge's EN100 accelerator chip sets the stage for more powerful on-device AI inference - SiliconANGLE

EnCharge's EN100 accelerator chip sets the stage for more powerful on-device AI inference EnCharge AI Inc. said today its highly efficient artificial intelligence accelerators for client computing devices are almost ready for prime time after more than eight years in development. The startup, which has raised more than $144 million in funding from a host of backers that include Tiger Global, Maverick Silicon and Samsung Ventures, has just lifted the lid on its powerful new EnCharge EN100 chip. It features a novel "charge-based memory" that uses about 20 times less energy than traditional graphics processing units. EnCharge said the EN100 can deliver more than 200 trillion operations per second of computing power within the constraints of client platforms such as laptops and workstations, eliminating the need for AI inference to be run in the cloud. Until today, the most powerful large language models that drive sophisticated multimodal and AI reasoning workloads have required so much computing power that it was only practical to run them in the cloud. But cloud platforms come at a cost, both in terms of dollars and also in latency and security, which makes them unsuitable for many types of applications. EnCharge believes it can change that with the EN100, as it enables on-device inference that's vastly more powerful than before. The company, which was founded by a team of engineering Ph.D.s and incubated at Princeton University, has created powerful analog in-memory-computing AI chips that dramatically reduce the energy requirements for many AI workloads. Its technology is based on a highly programmable application-specific integrated circuit that features a novel approach to memory management. The primary innovation is that the EN100 utilizes charge-based memory, which differs from traditional memory design in the way it reads data from the electrical current on a memory plane, as opposed to reading it from individual bit cells. It's an approach that enables the use of more precise capacitors, as opposed to less precise semiconductors. As a result, it can deliver enormous efficiency gains during data reduction operations that involve matrix multiplication. In essence, instead of communicating individual bits and bytes, the EN100 chip just communicates the result of its calculations. "You can do that by adding up the currents of all the bit cells, but that's noisy and messy," EnCharge co-founder and Chief Executive Naveen Verma told SiliconANGLE in a 2022 interview. "Or you can do that accumulation using the charge. That lets you move away from semiconductors to very robust and scalable capacitors. That operation can now be done very precisely." Not only does this mean the EN100 uses vastly less energy than traditional GPUs, but it also makes the chip extremely versatile. They can be highly optimized, either for efficiency, performance or fidelity. Moreover, the EN100 comes in a tiny form factor, mounted onto cards that can plug into a PCIe interface, so they can easily be hooked up to any laptop or personal computer. They can also be used in tandem with cloud-based GPUs. EnCharge said the EN100 is available in two flavors. The M.2 designed for laptops, offering up to 200 TOPS in an 8.25-watt power envelope, paving the way for high-performance AI applications on battery-powered devices. There's also an EN100 PCIe for workstations, which packs in four neural processing units to deliver up to 1 petaOPS of processing power, matching the capacity of Nvidia Corp.'s most advanced GPUs. According to EnCharge, it's aimed at "professional AI applications" that leverage more advanced LLMs and large datasets. The company said both form factors are capable of handling generative AI chatbots, real-time computer vision and image generation tasks that would previously have been viable only in the cloud. Verma said the launch of EN100 marks a fundamental shift in AI computing architecture. "It means advanced, secure and personalized AI can run locally, without relying on cloud infrastructure," he said. "We hope this will radically expand what you can do with AI." Alongside the chip, EnCharge offers a comprehensive software suite for developers to carry out whatever optimizations they deem necessary, so they can increase its processing power, or focus more on efficiency gains, depending on the application they have in mind. EnCharge said the first round of EN100's early-access program is already full, but it's inviting more developers to sign up for a second round that's slated to begin soon.

Share

Share

Copy Link

EnCharge AI introduces the EN100, an innovative analog AI accelerator chip that promises to revolutionize on-device AI capabilities with unprecedented energy efficiency and performance.

EnCharge AI Introduces Groundbreaking EN100 Chip

EnCharge AI, a startup spun out of Princeton University, has unveiled its revolutionary EN100 AI accelerator chip, marking a significant advancement in analog AI technology. The EN100 is built on precise and scalable analog in-memory computing, promising to bring advanced AI capabilities to laptops, workstations, and edge devices with unprecedented energy efficiency

2

.

Source: VentureBeat

Innovative Technology Behind EN100

At the heart of the EN100's innovation is its unique approach to analog AI computation. Unlike traditional analog AI schemes that rely on current flow, the EN100 utilizes charge-based memory. This fundamental shift allows the chip to overcome the noise issues that have plagued previous analog AI attempts

1

.Dr. Naveen Verma, CEO of EnCharge AI, explains: "You can do that by adding up the currents of all the bit cells, but that's noisy and messy. Or you can do that accumulation using the charge. That lets you move away from semiconductors to very robust and scalable capacitors. That operation can now be done very precisely"

3

.Performance and Specifications

The EN100 boasts impressive specifications:

- Performance: Delivers 200+ TOPS (Trillion Operations Per Second) of AI compute power

2

. - Energy Efficiency: Claims up to 20x better performance per watt compared to competing solutions

2

. - Memory: Features up to 128GB of high-density LPDDR memory with 272 GB/s bandwidth

2

.

The chip is available in two form factors:

- M.2 for Laptops: Offers 200+ TOPS within an 8.25-watt power envelope

2

3

. - PCIe for Workstations: Features four NPUs reaching approximately 1 PetaOPS

2

.

Source: SiliconANGLE

Implications for AI Applications

The EN100's efficiency and power open up new possibilities for on-device AI:

- Local Processing: Enables advanced, secure, and personalized AI to run locally without relying on cloud infrastructure

2

. - Complex AI Tasks: Efficiently handles sophisticated AI tasks like generative language models and real-time computer vision

2

. - Energy Conservation: Designed to conserve battery life in AI-capable laptops

1

.

Ram Rangarajan, Senior VP of Product and Strategy at EnCharge AI, highlights the chip's potential: "For client platforms, EN100 can bring sophisticated AI capabilities on device, enabling a new generation of intelligent applications that are not only faster and more responsive but also more secure and personalized"

2

.Related Stories

Market Position and Competition

While EnCharge AI doesn't directly compete with major players in the data center market, its focus on the AI PC and edge device market sets it apart. The company's analog in-memory computing approach allows for significantly higher compute density compared to conventional digital architectures, with approximately 30 TOPS/mm2 versus 3.5

2

.Future Prospects and Adoption

Source: IEEE

EnCharge AI has already begun working with early adoption partners to explore the EN100's potential in various applications, including always-on multimodal AI agents and enhanced gaming with real-time realistic environment rendering

2

.As the company prepares for wider adoption, it has opened sign-ups for the upcoming Round 2 Early Access Program, offering developers and OEMs the opportunity to leverage EN100's capabilities for commercial applications

2

.The introduction of the EN100 represents a significant step forward in analog AI technology, potentially reshaping the landscape of on-device AI capabilities and efficiency. As the chip moves closer to commercial availability, its impact on the AI industry and consumer devices will be closely watched by tech enthusiasts and industry professionals alike.

References

Summarized by

Navi

[1]

Related Stories

Recent Highlights

1

ByteDance's Seedance 2.0 AI video generator triggers copyright infringement battle with Hollywood

Policy and Regulation

2

Demis Hassabis predicts AGI in 5-8 years, sees new golden era transforming medicine and science

Technology

3

Nvidia and Meta forge massive chip deal as computing power demands reshape AI infrastructure

Technology