FuriosaAI Secures Major Partnership with LG After Rejecting Meta's $800M Acquisition Offer

6 Sources

6 Sources

[1]

Instead of selling to Meta, AI chip startup FuriosaAI signed a huge customer | TechCrunch

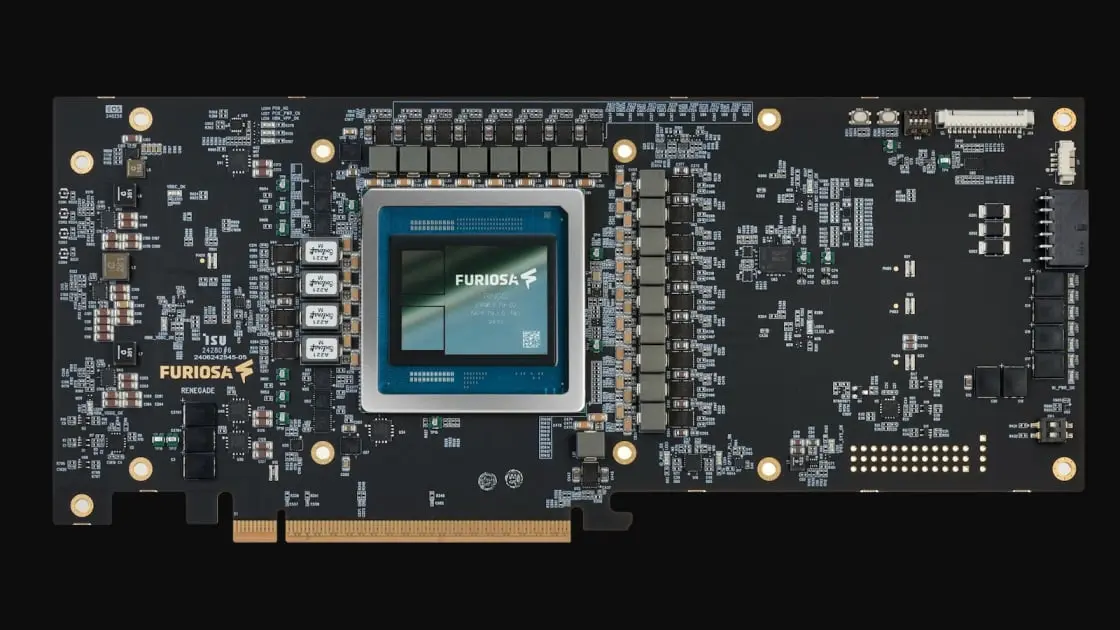

South Korean AI chip startup FuriosaAI announced a partnership on Tuesday to supply its AI chip, RNGD, to enterprises using LG AI Research's recently unveiled EXAONE platform. RNGD is optimized for running large language models (LLMs) and just last week, the Korean tech giant LG unveiled its next-generation hybrid AI model EXAONE 4.0. The collaboration targets key sectors, including electronics, finance, telecommunications, and biotechnology, for a range of diverse applications. This news comes roughly three months after FuriosaAI declined Meta's $800 million acquisition offer, opting to remain independent. The deal fell through due to disagreements over post-acquisition business strategy and organizational structure, rather than price issues, according to local media outlets. Meta's interest in acquiring AI chipmakers like FuriosaAI reflects its broader strategy to reduce its reliance on third-party suppliers, such as Nvidia. When asked why the deal with Meta fell through, CEO of FuriosaAI June Paik told TechCrunch: "We want to continue our mission, and I think it's an exciting opportunity at the same time. I believe it's a very impactful contribution, both personally and for the company, to make AI computing more sustainable." With M&A (at least from Meta) off the table, Paik declined to specify if the startup is now in pursuit of fresh funding. However, Paik says this new partnership will lead to business possibilities far beyond South Korea. "LG AI's EXAONE is regarded as the leading sovereign AI model in South Korea. It won't be used just within LG. It will be one of the main AI models used in the Korean AI ecosystem. We expect there will be many demands for this EXAONE, as well as for our chip solutions in South Korea, but not only in Korea. The LG team is also partnering with and doing business with global customers. So, we also expect this to be used by those customers, including global customers," Paik said. LG AI's decision to adopt Furiosa's AI chip and accelerator is notable for another reason: it's one of the few public endorsements of a rival to Nvidia by a major enterprise, Paik said. One major reason for the win is that the startup's hardware costs less. "We had to prove that our solution not only delivers strong performance but also lowers total cost of ownership," Paik said. FuriosaAI claims that its RNGD accelerator outperformed competitive GPUs with LG AI Research's EXAONE models, delivering 2.25 times better inference performance. Paik also says that LG found the FuriosaAI hardware was more energy efficiency. Furiosa's chip is not a general GPU but was built exclusively for AI. "We can support a wide variety of AI models efficiently. But unlike GPUs, which are still fundamentally general-purpose processors, our architecture is natively built for AI computing. We do not develop our chip for rendering or mining," Paik said. The Seoul-based startup, which also operates an office in Santa Clara, has a global team of just 15 employees.

[2]

How AI chip upstart FuriosaAI won over LG

Testing shows RNGD chips up to 2.25x higher performance per watt than.... five-year-old Nvidia silicon South Korean AI chip startup FuriosaAI scored a major customer win this week after LG's AI Research division tapped its AI accelerators to power servers running its Exaone family of large language models. But while floating point compute capability, memory capacity, and bandwidth all play a major role in AI performance, LG didn't choose Furiosa's RNGD -- pronounced "renegade" -- inference accelerators for speeds and feeds. Rather, it was power efficiency. "RNGD provides a compelling combination of benefits: excellent real-world performance, a dramatic reduction in our total cost of ownership, and a surprisingly straightforward integration," Kijeong Jeon, product unit leader at LG AI Research, said in a canned statement. A quick peek at RNGD's spec sheet reveals what appears to be a rather modest chip, with floating point performance coming in at between 256 and 512 teraFLOPS depending on whether you opt for 16- or 8-bit precision. Memory capacity is also rather meager, with 48GB across a pair of HBM3 stacks, that's good for about 1.5TB/s of bandwidth. Compared to AMD and Nvidia's latest crop of GPUs, RNGD doesn't look all that competitive until you consider the fact that Furiosa has managed to do all this using just 180 watts of power. In testing, LG research found the parts were as much as 2.25x more power efficient than GPUs for LLM inference on its homegrown family of Exaone models. Before you get too excited, the GPUs in question are Nvidia's A100s, which are getting rather long in the tooth -- they made their debut just as the pandemic was kicking off in 2020. But as FuriosaAI CEO June Paik tells El Reg, while Nvidia's GPUs have certainly gotten more powerful in the five years since the A100's debut, that performance has come at the expense of higher energy consumption and die area. While a single RNGD PCIe card can't compete with Nvidia's H100 or B200 accelerators on raw performance, in terms of efficiency -- the number of FLOPS you can squeeze from each watt -- the chips are more competitive than you might think. Paik credits much of the company's efficiency advantage here to RNGD's Tensor Contraction Processor architecture, which he says requires far fewer instructions to perform matrix multiplication than on a GPU and minimizes data movement. The chips also benefit from RNGD's use of HBM, which Paik says requires far less power than relying on GDDR, like we've seen with some of Nvidia's lower-end offers, like the L40S or RTX Pro 6000 Blackwell cards. At roughly 1.4 teraFLOPS per watt, RNGD is actually closer to Nvidia's Hopper generation than to the A100. RNGD's efficiency becomes even more apparent if we shift focus to memory bandwidth, which is arguably the more important factor when it comes to LLM inference. As a general rule, the more memory bandwidth you've got, the faster it'll spit out tokens. Here again, at 1.5TB/s, RNGD's memory isn't particularly fast. Nvidia's H100 offers both higher capacity at 80GB and between 3.35TB/s and 3.9TB/s of bandwidth. However, that chip uses anywhere from 2 to 3.9 times the power. For roughly the same wattage as an H100 SXM module, you could have four RNGD cards totaling 2 petaFLOPs of dense FP8, 192GB of HBM, and 6TB/s memory bandwidth. That's still a ways behind Nvidia's latest generation of Blackwell parts, but far closer than RNGD's raw speeds and feeds would have you believe. And, since RNGD is designed solely with inference in mind, models really can be spread across multiple accelerators using techniques like tensor parallelism, or even multiple systems using pipeline parallelism. LG AI actually used four RNGD PCIe cards in a tensor-parallel configuration to run its in-house Exaone 32B model at 16-bit precision. According to Paik, LG had very specific performance targets it was looking for when validating the chip for use. Notably, the constraints included a time-to-first token (TTFT), which measures the amount of time you have to wait before the LLM starts generating a response, of roughly 0.3 seconds for more modest 3,000 token prompts or 4.5 seconds for larger 30,000 token prompts. In case you're wondering, these tests are analogous to medium to large summarization tasks, which put more pressure on the chip's compute subsystem than a shorter prompt would have. LG found that it was able to achieve this level of performance while churning out about 50-60 tokens a second at a batch size of one. According to Paik, these tests were conducted using FP16, since the A100s LG compared against do not natively support 8-bit floating-point activations. Presumably dropping down to FP8 would essentially double the model's throughput and further reduce the TTFT. Using multiple cards does come with some inherent challenges. In particular, the tensor parallelism that allows both the model's weights and computation to be spread across four or more cards is rather network-intensive. Unlike Nvidia's GPUs, which often feature speedy proprietary NVLink interconnects that shuttle data between chips at more than a terabyte a second, Furiosa stuck with good old PCIe 5.0, which tops out at 128GB/s per card. In order to avoid interconnect bottlenecks and overheads, Furiosa says it optimized the chip's communication scheduling and compiler to overlap inter-chip direct memory access operations. But because RNGD hasn't shared figures for higher batch sizes, it's hard to say just how well this approach scales. At a batch of one, the number of tensor parallel operations is relatively few, he admitted. According to Paik, individual performance should only drop by 20-30 percent at batch 64. That suggests the same setup should be able to achieve close to 2,700 tokens a second of total throughput and support a fairly large number of concurrent users. But without hard details, we can only speculate. In any case, Furiosa's chips are good enough that LG's AI Research division now plans to offer servers powered by RNGD to enterprises utilizing its Exaone models. "After extensively testing a wide range of options, we found RNGD to be a highly effective solution for deploying Exaone models," Jeon said. Similar to Nvidia's RTX Pro Blackwell-based systems, LG's RNGD boxes will be available with up to eight PCIe accelerators. These systems will run what Furiosa describes as a highly mature software stack, which includes a version of vLLM, a popular model serving runtime. LG will also offer its agentic AI platform, called ChatExaone, which bundles up a bunch of frameworks for document analysis, deep research, data analysis, and retrieval augmented generation (RAG). Furiosa's powers of persuasion don't stop at LG, either. As you may recall, Meta reportedly made an $800 million bid to acquire the startup earlier this year, but ultimately failed to convince Furiosa's leaders to hand over the keys to the kingdom. Furiosa benefits from the growing demand for sovereign AI models, software, and infrastructure, designed and trained on homegrown hardware. However, to compete on a global scale, Furiosa faces some challenges. Most notably, Nvidia and AMD's latest crop of GPUs not only offer much higher performance, memory capacity, and bandwidth than RNGD, but by our estimate are a fair bit more energy-efficient. Nvidia's architectures also allow for greater degrees of parallelism thanks to its early investments in rack-scale architectures, a design point we're only now seeing chipmakers embrace. Having said that, it's worth noting that the design process for RNGD began in 2022, before OpenAI's ChatGPT kicked off the AI boom. At this time, models like Bert were mainstream with regard to language models. Paik, however, bet that GPT was going to take off and the underlying architecture was going to become the new norm, and that informed decisions like using HBM versus GDDR memory. "In retrospect I think I should have made an even more aggressive bet and had four HBM [stacks] and put more compute dies on a single package," Paik said. We've seen a number of chip companies, including Nvidia, AMD, SambaNova, and others, embrace this approach in order to scale their chips beyond the reticle limit. Hindsight being what it is, Paik says now that Furiosa has managed to prove out its tensor compression processor architecture, HBM integration, and software stack, the company simply needs to scale up its architecture. "We have a very solid building block," he said. "We're quite confident that when you scale up this chip architecture it will be quite competitive against all the latest GPU chips." ®

[3]

Nvidia Chip Challenger FuriosaAI Scores First Major Customer LG

FuriosaAI Inc., the Seoul-based startup seeking to design chips to compete with Nvidia Corp., has sealed its first major contract months after rejecting an $800 million acquisition offer from Meta Platforms Inc. The startup won final approval for its AI chip RNGD (pronounced "Renegade") from LG AI Research after seven months of rigorous evaluation spanning performance and efficiency. The larger Korean company will use the chip to power its Exaone large-language models, FuriosaAI Chief Executive Officer June Paik told Bloomberg News.

[4]

FuriosaAI chose a partnership with LG over a Meta buyout

FuriosaAI will supply its RNGD AI chip to enterprises using LG's next-generation EXAONE 4.0 AI platform FuriosaAI, a South Korean AI chip startup, announced a partnership with LG AI Research to supply its RNGD AI chip to enterprises utilizing the EXAONE platform, following its rejection of an $800 million acquisition offer from Meta. The RNGD chip is specifically engineered for optimal performance with large language models (LLMs). This collaboration follows LG's introduction of its next-generation hybrid AI model, EXAONE 4.0, which occurred the week prior to the partnership announcement. The strategic alliance aims to serve diverse applications across key sectors, including electronics, finance, telecommunications, and biotechnology. This development occurred approximately three months after FuriosaAI declined an $800 million acquisition proposal from Meta, opting to maintain its independent operational status. Local media outlets indicated that the acquisition talks failed due to fundamental disagreements concerning post-acquisition business strategy and organizational structure, rather than financial considerations. Meta's pursuit of AI chipmakers, such as FuriosaAI, aligns with its broader corporate objective of reducing its dependency on external suppliers like Nvidia. June Paik, CEO of FuriosaAI, addressed the termination of discussions with Meta, stating, "We want to continue our mission, and I think it's an exciting opportunity at the same time. I believe it's a very impactful contribution, both personally and for the company, to make AI computing more sustainable." Paik did not elaborate on whether the company is seeking new funding following the decision to forgo the acquisition. Meta offered AI expert a $1.25B contract, got denied Paik anticipates that the partnership with LG AI Research will generate business prospects extending beyond the South Korean market. Paik noted, "LG AI's EXAONE is regarded as the leading sovereign AI model in South Korea. It won't be used just within LG. It will be one of the main AI models used in the Korean AI ecosystem. We expect there will be many demands for this EXAONE, as well as for our chip solutions in South Korea, but not only in Korea. The LG team is also partnering with and doing business with global customers. So, we also expect this to be used by those customers, including global customers." LG AI's decision to integrate FuriosaAI's chip and accelerator represents a notable endorsement of an Nvidia competitor by a major enterprise, as highlighted by Paik. A significant factor in this adoption was the demonstrated cost-effectiveness of FuriosaAI's hardware. Paik affirmed, "We had to prove that our solution not only delivers strong performance but also lowers total cost of ownership." FuriosaAI asserts that its RNGD accelerator demonstrated superior performance compared to competitive GPUs when running LG AI Research's EXAONE models, achieving 2.25 times greater inference performance. Paik also confirmed that LG determined FuriosaAI's hardware offered enhanced energy efficiency. FuriosaAI's chip is designed exclusively for AI applications, distinguishing it from general-purpose GPUs. Paik explained, "We can support a wide variety of AI models efficiently. But unlike GPUs, which are still fundamentally general-purpose processors, our architecture is natively built for AI computing. We do not develop our chip for rendering or mining." The Seoul-based startup, which also maintains an office in Santa Clara, currently employs a global team of 15 individuals.

[5]

Nvidia chip challenger FuriosaAI scores first major customer LG - The Economic Times

FuriosaAI Inc., the Seoul-based startup seeking to design chips to compete with Nvidia Corp., has sealed its first major contract months after rejecting an $800 million acquisition offer from Meta Platforms Inc. The startup won final approval for its AI chip RNGD (pronounced "Renegade") from LG AI Research after seven months of rigorous evaluation spanning performance and efficiency. The larger Korean company will use the chip to power its Exaone large-language models, FuriosaAI Chief Executive Officer June Paik told Bloomberg News. LG's approval is a validation for FuriosaAI, one of a handful of Korean chip designers hoping to ride a post-ChatGPT boom in AI infrastructure. The RNGD chip was designed to challenge not just industry leader Nvidia but also fellow startups Groq Inc., SambaNova Systems Inc. and Cerebras Systems Inc. "For the last eight years, we worked very hard from R&D to product phases and finally this commercialisation phase," Paik said. "This signals that our product is ready for enterprise adoption." Founded in 2017 by Paik, who previously worked at Samsung Electronics Co. and Advanced Micro Devices Inc., FuriosaAI develops semiconductors for AI inferencing or services. It claims to deliver 2.25 times better inference performance per watt compared to graphics processing units. Like Korean peers Rebellions Inc. and Semifive Inc., FuriosaAI is trying to tap a giant semiconductor ecosystem of talent, suppliers and government incentives that've sprung up around Samsung and SK Hynix Inc. over the past decade. As part of their partnership, FuriosaAI and LG intend to deploy RNGD servers using Exaone across a range of industries from electronics to finance. They will also power LG's in-house enterprise AI agent, ChatExaone, which the company plans to expand to external clients. FuriosaAI is working to secure its next customers in the US, the Middle East and Southeast Asia. It expects to reach similar agreements in the second half of this year, Paik said. FuriosaAI attracted public attention when news emerged in March that it had rejected Meta's advances, opting for independence. It plans to raise capital before eventually pursuing an initial public offering, according to people familiar with the matter.

[6]

Jensen Huang may have met his match, and it's not AMD, but a stealthy South Korean challenger

FuriosaAI, a rising South Korean chipmaker, is quietly emerging as a serious Nvidia challenger in the AI hardware world. After turning down Meta's $800M offer, FuriosaAI partnered with LG to power its LLM platform, proving it can outperform even Nvidia's best chips in efficiency and cost. Their RNGD chip delivers faster AI inference while using far less power. This bold move signals a possible shift in global AI chip dominance. With Nvidia's grip on the AI industry being tested, FuriosaAI might just be the surprise competitor Jensen Huang never saw coming.

Share

Share

Copy Link

South Korean AI chip startup FuriosaAI partners with LG AI Research to supply its RNGD chip for the EXAONE platform, marking a significant milestone after declining Meta's acquisition bid.

FuriosaAI's Strategic Partnership with LG

South Korean AI chip startup FuriosaAI has announced a significant partnership with LG AI Research to supply its RNGD (pronounced "Renegade") AI chip for enterprises using LG's next-generation EXAONE 4.0 AI platform

1

2

. This collaboration comes approximately three months after FuriosaAI declined an $800 million acquisition offer from Meta Platforms Inc., choosing to remain independent3

.The RNGD Chip: A Challenger to Nvidia

Source: ET

FuriosaAI's RNGD chip is specifically designed for optimal performance with large language models (LLMs). The company claims that its RNGD accelerator outperformed competitive GPUs when running LG AI Research's EXAONE models, delivering 2.25 times better inference performance

1

.Key features of the RNGD chip include:

- Floating point performance between 256 and 512 teraFLOPS (16- or 8-bit precision)

- 48GB memory across two HBM3 stacks

- Power consumption of just 180 watts

4

LG's Adoption and Performance Evaluation

LG AI Research conducted a rigorous seven-month evaluation of the RNGD chip, focusing on performance and efficiency

5

. The tests revealed that RNGD provided:- Excellent real-world performance

- Significant reduction in total cost of ownership

- Straightforward integration

4

In specific tests, LG achieved:

- Time-to-first token (TTFT) of 0.5 seconds for 3,000 token prompts

- TTFT of 4.5 seconds for 30,000 token prompts

- Token generation rate of 50-60 tokens per second at batch size one

4

Related Stories

FuriosaAI's Market Position and Future Plans

Source: Bloomberg

FuriosaAI, founded in 2017 by June Paik, is positioning itself as a competitor to industry leader Nvidia and other AI chip startups like Groq Inc., SambaNova Systems Inc., and Cerebras Systems Inc.

5

. The company is leveraging South Korea's semiconductor ecosystem, which has developed around giants like Samsung and SK Hynix5

.CEO June Paik expressed optimism about the partnership's global potential: "We expect there will be many demands for this EXAONE, as well as for our chip solutions in South Korea, but not only in Korea. The LG team is also partnering with and doing business with global customers"

1

.Implications for the AI Chip Market

This partnership represents a notable endorsement of an Nvidia competitor by a major enterprise

1

. It also highlights the growing competition in the AI chip market, with startups like FuriosaAI challenging established players.

Source: The Register

FuriosaAI is now working to secure customers in the US, Middle East, and Southeast Asia, with expectations to reach similar agreements in the second half of this year

5

. The company plans to raise capital before eventually pursuing an initial public offering5

.As the AI industry continues to evolve rapidly, partnerships like this between chip manufacturers and major tech companies could reshape the landscape of AI computing infrastructure.

References

Summarized by

Navi

[1]

[2]

[4]

Related Stories

FuriosaAI launches RNGD chip in mass production to challenge Nvidia's AI inference dominance

06 Jan 2026•Technology

FuriosaAI targets $500M funding round as RNGD chip production begins, eyes 2027 IPO

20 Jan 2026•Startups

FuriosaAI Rejects Meta's $800 Million Acquisition Offer, Aims for Independent Growth in AI Chip Market

24 Mar 2025•Technology

Recent Highlights

1

ByteDance Faces Hollywood Backlash After Seedance 2.0 Creates Unauthorized Celebrity Deepfakes

Technology

2

Microsoft AI chief predicts artificial intelligence will automate most white-collar jobs in 18 months

Business and Economy

3

Google reports state-sponsored hackers exploit Gemini AI across all stages of cyberattacks

Technology