Google removes AI Overviews for medical queries after investigation finds dangerous health advice

14 Sources

[1]

Google removes some AI health summaries after investigation finds "dangerous" flaws

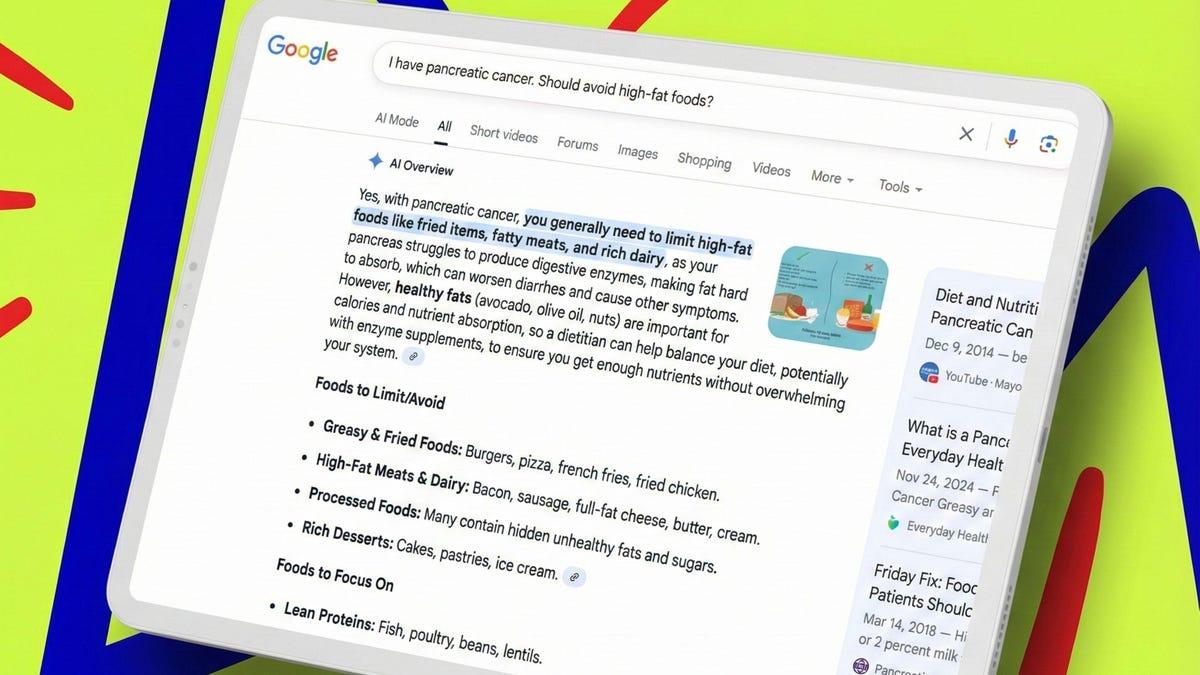

On Sunday, Google removed some of its AI Overviews health summaries after a Guardian investigation found people were being put at risk by false and misleading information. The removals came after the newspaper found that Google's generative AI feature delivered inaccurate health information at the top of search results, potentially leading seriously ill patients to mistakenly conclude they are in good health. Google disabled specific queries, such as "what is the normal range for liver blood tests," after experts contacted by The Guardian flagged the results as dangerous. The report also highlighted a critical error regarding pancreatic cancer: The AI suggested patients avoid high-fat foods, a recommendation that contradicts standard medical guidance to maintain weight and could jeopardize patient health. Despite these findings, Google only deactivated the summaries for the liver test queries, leaving other potentially harmful answers accessible. The investigation revealed that searching for liver test norms generated raw data tables (listing specific enzymes like ALT, AST, and alkaline phosphatase) that lacked essential context. The AI feature also failed to adjust these figures for patient demographics such as age, sex, and ethnicity. Experts warned that because the AI model's definition of "normal" often differed from actual medical standards, patients with serious liver conditions might mistakenly believe they are healthy and skip necessary follow-up care. Vanessa Hebditch, director of communications and policy at the British Liver Trust, told The Guardian that a liver function test is a collection of different blood tests and that understanding the results "is complex and involves a lot more than comparing a set of numbers." She added that the AI Overviews fail to warn that someone can get normal results for these tests when they have serious liver disease and need further medical care. "This false reassurance could be very harmful," she said. 
Google declined to comment on the specific removals to The Guardian. A company spokesperson told The Verge that Google invests in the quality of AI Overviews, particularly for health topics, and that "the vast majority provide accurate information." The spokesperson added that the company's internal team of clinicians reviewed what was shared and "found that in many instances, the information was not inaccurate and was also supported by high-quality websites."

Why AI Overviews produces errors

The recurring problems with AI Overviews stem from a design flaw in how the system works. As we reported in May 2024, Google built AI Overviews to show information backed up by top web results from its page ranking system. The company designed the feature this way based on the assumption that highly ranked pages contain accurate information. However, Google's page ranking algorithm has long struggled with SEO-gamed content and spam. The system now feeds these unreliable results to its AI model, which then summarizes them with an authoritative tone that can mislead users. Even when the AI draws from accurate sources, the language model can still draw incorrect conclusions from the data, producing flawed summaries of otherwise reliable information. The technology does not inherently provide factual accuracy. Instead, it reflects whatever inaccuracies exist on the websites Google's algorithm ranks highly, presenting the facts with an authority that makes errors appear trustworthy.

Other examples remain active

The Guardian found that typing slight variations of the original queries into Google, such as "lft reference range" or "lft test reference range," still prompted AI Overviews. Hebditch said this was a big worry and that the AI Overviews present a list of tests in bold, making it very easy for readers to miss that these numbers might not even be the right ones for their test. AI Overviews still appear for other examples that The Guardian originally highlighted to Google. 
When asked why these AI Overviews had not also been removed, Google said they linked to well-known and reputable sources and informed people when it was important to seek out expert advice. Google said AI Overviews only appear for queries where it has high confidence in the quality of the responses. The company constantly measures and reviews the quality of its summaries across many different categories of information, it added. This is not the first controversy for AI Overviews. The feature has previously told people to put glue on pizza and eat rocks. It has proven unpopular enough that users have discovered that inserting curse words into search queries disables AI Overviews entirely.

[2]

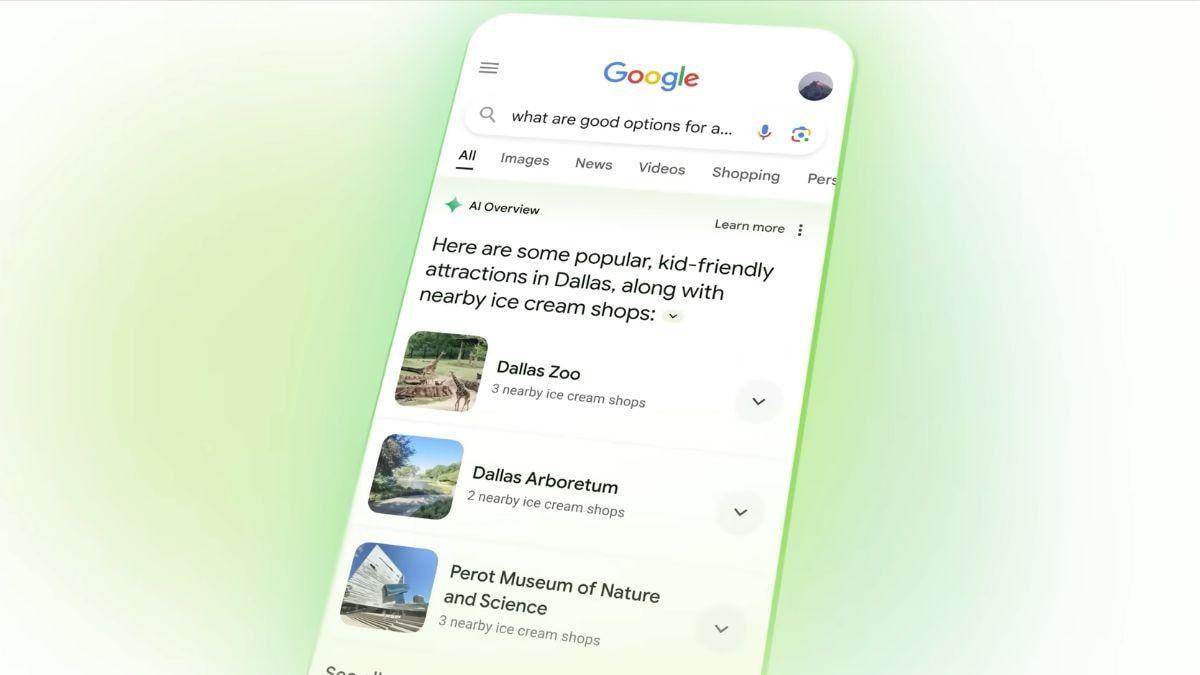

Google removes AI Overviews for certain medical queries

Following an investigation by the Guardian that found Google AI Overviews offering misleading information in response to certain health-related queries, the company appears to have removed the AI Overviews for some of those queries. For example, the Guardian initially reported that when users asked "what is the normal range for liver blood tests," they would be presented with numbers that did not account for factors such as nationality, sex, ethnicity, or age, potentially leading them to think their results were healthy when they were not. Now, the Guardian says AI Overviews have been removed from the results for "what is the normal range for liver blood tests" and "what is the normal range for liver function tests." However, it found that variations on those queries, such as "lft reference range" or "lft test reference range," could still lead to AI-generated summaries. When I tried those queries this morning -- several hours after the Guardian published its story -- none of them resulted in seeing AI Overviews, though Google still gave me the option to ask the same query in AI Mode. In several cases, the top result was actually the Guardian article about the removal. A Google spokesperson told the Guardian that the company does not "comment on individual removals within Search," but that it works to "make broad improvements." The spokesperson also said that an internal team of clinicians reviewed the queries highlighted by the Guardian and found "in many instances, the information was not inaccurate and was also supported by high quality websites." TechCrunch has reached out to Google for additional comment. Last year, the company announced new features aimed at improving Google Search for healthcare use cases, including improved overviews and health-focused AI models. 
Vanessa Hebditch, the director of communications and policy at the British Liver Trust, told the Guardian that the removal is "excellent news," but added, "Our bigger concern with all this is that it is nit-picking a single search result and Google can just shut off the AI Overviews for that but it's not tackling the bigger issue of AI Overviews for health."

[3]

Google pulls AI overviews for some medical searches

Earlier this month, The Guardian published an investigation that showed Google was serving up misleading and outright false information via its AI overviews in response to certain medical inquiries. Now those results appear to have been removed. According to the original report: In one case that experts described as "really dangerous", Google wrongly advised people with pancreatic cancer to avoid high-fat foods. Experts said this was the exact opposite of what should be recommended, and may increase the risk of patients dying from the disease. In another "alarming" example, the company provided bogus information about crucial liver function tests, which could leave people with serious liver disease wrongly thinking they are healthy. As of this morning, the AI overviews for questions like "what is the normal range for liver blood tests?" have been disabled entirely. Google declined to comment on the specific removal. But this is just one more controversy for a feature that has told people to put glue on pizza and eat rocks, and that has been the subject of multiple lawsuits.

[4]

Use Google AI Overview for health advice? It's 'really dangerous,' investigation finds

Don't rely solely on AI to research serious medical conditions. Turning to AI to answer health-related questions seems like an easy and convenient option that may spare you from a doctor's visit. That's particularly true with a tool like Google's AI Overviews, which summarizes the results that pop up during a regular Google Search. But that doesn't mean Google's AI is the best route to take, especially if you're researching a serious medical problem or condition. A recent investigation by British newspaper The Guardian concluded that Google's own AI Overviews put users at risk by providing false and misleading health information in its summaries. To conduct its tests, The Guardian used AI Overviews to research several health-related questions. The paper then asked different medical and health experts to review the responses. In one case described by experts as "really dangerous," Google's AI Overviews advised people with pancreatic cancer to avoid high-fat foods. But the experts said that this is the exact opposite of the correct advice. In another instance, the AI-generated information about women's cancer tests was "completely wrong," according to the experts. Here, a search for "vaginal cancer symptoms and tests" listed a pap test as a test for vaginal cancer, which the experts said was incorrect. In a third case, Google served up false information about critical liver function tests that could give people with serious liver disease the wrong impression. For example, searching for the phrase "what is the normal range for liver blood tests" resulted in misleading data with little context and no regard for nationality, sex, ethnicity, or age. Based on its investigation, The Guardian also said that AI Overviews offered inaccurate results on searches about mental health. 
In particular, some of the summaries for such conditions as psychosis and eating disorders displayed "very dangerous advice" and were "incorrect, harmful, or could lead people to avoid seeking help," Stephen Buckley, the head of information at mental health charity Mind, told Google. In response to a request for feedback on The Guardian's findings, a Google spokesperson sent ZDNET the following statement: "Many of the examples shared with us are incomplete screenshots, but from what our internal team of clinicians could assess, the responses link to well-known, reputable sources and recommend seeking out expert advice. We invest significantly in the quality of AI Overviews, particularly for topics like health, and the vast majority provide accurate information." Google also reviewed the specific questions posed by The Guardian and qualified some of the answers. For example, the AI Overview doesn't say that pap tests are meant for diagnosing vaginal cancer but says that vaginal cancer can be found incidentally on a pap test, according to Google. For the pancreatic cancer example, the overview cites Johns Hopkins University as one reputable source. For the mental health example, Google said the overview pointed to a source that links to a free, confidential, national crisis support line. For the liver test query, Google said that the AI Overview stated that normal ranges can vary from lab to lab and that a disclaimer advised people to consult a professional for medical advice or diagnosis. I tested AI Overviews by submitting some of the same questions that The Guardian asked. In one search, I told Google that I have pancreatic cancer and asked if I should avoid high-fat foods. The AI said that you do often need to limit high-fat foods with pancreatic cancer because your pancreas struggles to produce digestive enzymes. 
But you also need calories, so working with a registered dietitian to find the right balance of healthy fats and easy-to-digest options is crucial to avoid weight loss and malnutrition. Next, I searched for "vaginal cancer symptoms and tests." Here, AI Overviews did list a pap test as one of several diagnostic tests but qualified it by saying that this type of test checks for abnormal cells on the cervix, which can sometimes find vaginal cancer. Asking Google AI for the normal range for liver blood tests gave me general ranges but also offered specific numbers by gender and age. Based on my own limited testing, the answers provided by AI Overviews seemed less cut and dried than those obtained by The Guardian. But that points to another challenge with AI and search in general. The way you phrase your question influences the answer. I could ask the same question two different ways and get two different responses, one that's partially accurate and potentially useful and the other inaccurate and unhelpful. In defending its AI Overviews, Google said that the system uses web rankings to try to ensure that the information is reliable and relevant. If any content from the web is misinterpreted or is lacking context, the company will use those examples to try to improve the process. Still, The Guardian's investigation points out the pitfalls of relying on AI for any critical research, especially involving serious health conditions. The key takeaway here is "Don't." Don't risk your health by assuming that the information provided by an AI is going to be correct. If you do insist on using AI for this purpose, double-check and triple-check the responses. Run the same search across different AIs to see if they're in agreement. Better yet, investigate the sources consulted for the answers to see if the AI interpreted them correctly. 
Best yet, talk to your doctor. If you are experiencing a serious medical condition, your doctor's office should always be your primary point of contact. Don't be afraid to reach out. Many medical offices provide email and messaging for patient questions. While it may be tempting to turn to AI for quick and easy answers, you don't want to run the risk of getting the wrong information.

[5]

Google removes AI Overviews from some medical searches after experts warn of risks to users' health

What just happened? Don't be surprised to find some of Google's AI Overviews conspicuously absent from certain medical searches. The company has removed several of these AI summaries following a report that found inaccurate information was being shown to users that could put their health at risk. The Guardian reported at the start of January that AI Overviews, which are supposed to provide AI-generated summaries of search results, were putting people at risk by offering misleading health advice. One example was the AI Overviews advising people with pancreatic cancer to avoid high-fat foods. Experts say this is exactly the opposite of what should be recommended, and may increase the patient's risk of death. The summaries also showed incorrect information about crucial liver function tests, which could leave people with serious liver disease wrongly thinking they are healthy. Answers for women's cancer tests were also showing the wrong information, which experts say could cause people to dismiss serious symptoms. Google claimed that the incorrect examples they were shown linked to well-known, reputable sources. But the company has now removed AI Overviews for several health queries.

Don't put your faith in AI Overviews

Questions that no longer surface Google's AI feature include "what is the normal range for liver blood tests?" and "what is the normal range for liver function tests?" A Google spokesperson said: "We do not comment on individual removals within Search. In cases where AI Overviews miss some context, we work to make broad improvements, and we also take action under our policies where appropriate." While medical experts have welcomed the move, there are warnings that rephrasing these questions could still lead to misleading AI Overviews being generated. The Guardian found that only slight variations to the queries prompted the AI Overviews to appear. 
It's also noted that several incorrect or misleading AI Overviews that appeared in the original Guardian report have not been removed, including summaries related to cancer and mental health. Google reiterated that these had not been removed because they link to well-known and reputable sources and informed people when it was important to seek out expert advice. The AI Overviews feature has a history of generating information that ranges from false or misleading to utterly nonsensical. It famously told people to put glue on pizza to help the cheese stick, and to eat rocks, neither of which is particularly good for your health or teeth. A report from September found that over 10% of Google AI Overviews cite AI-generated content.

[6]

Google Pulls Some Health-Related AI Overviews as Industry Goes All-In on Healthcare

Google has quietly pulled back some AI-generated health summaries at the same time that the industry is seemingly racing to embed AI into healthcare. The move came after The Guardian found some of the information provided by the feature was misleading. The Guardian reported Sunday that Google had removed AI-generated search summaries for the queries "what is the normal range for liver blood tests" and "what is the normal range for liver function tests." As of Tuesday morning, those searches no longer provide an AI Overview at the top of the page. Instead, users are shown short excerpts pulled from traditional search results. According to The Guardian, the AI Overviews for those liver-related searches and others had "served up inaccurate health information and put people at risk of harm." For example, the summaries reportedly provided for the liver test searches included "masses of numbers, little context and no accounting for nationality, sex, ethnicity or age of patients." The Guardian reported that some experts said these results could be dangerous. For instance, someone with liver disease might delay follow-up care if they rely on an AI-generated definition of what's normal. "We invest significantly in the quality of AI Overviews, particularly for topics like health, and the vast majority provide accurate information," a Google spokesperson told Gizmodo in an emailed statement. "Our internal team of clinicians reviewed what's been shared with us and found that in many instances, the information was not inaccurate and was also supported by high quality websites. In cases where AI Overviews miss some context, we work to make broad improvements, and we also take action under our policies where appropriate." The removals come at a moment when more people than ever are turning to AI for answers about their health, and the industry is taking notice. 
OpenAI, the company behind ChatGPT, said last week that roughly a quarter of its 800 million regular users submit a healthcare-related prompt every week, with more than 40 million doing so daily. Days later, OpenAI launched ChatGPT Health, a health-focused experience that can connect with users' medical records, wellness apps, and wearable devices. And shortly after that, the company announced it had acquired the healthcare startup Torch, which tracks medical records including lab results, recordings from doctor visits, and medications. Meanwhile, rival AI company Anthropic is not far behind. On Monday, the company announced a new set of AI tools that allow healthcare providers, insurers, and patients to use its Claude chatbot for medical purposes. For hospitals and insurance companies, Anthropic says the tools can help streamline tasks such as prior authorization requests and patient communications. For patients, Claude can be given access to lab results and health records to generate summaries and explanations in plain language. As AI advances in healthcare, even minor errors or missing context can have significant consequences for patients. Whether these companies are ready for that remains to be seen.

[7]

Google pulls AI Overviews on some health queries after claims they mislead users

A number of health-related AI overviews have seemingly been removed

Google has removed several AI Overviews from searches relating to health information after experts deemed them misleading and potentially dangerous. An investigation by The Guardian found that certain queries, such as "what is the normal range for liver blood tests," would return misleading information. According to The Guardian's report, typing that into Google would return an AI Overview that didn't account for variants like age, sex, ethnicity or nationality. In another instance, the AI result seemingly told people with pancreatic cancer to avoid high-fat foods. The experts consulted by the site didn't pull their punches. Anna Jewell, the director of support, research and influencing at Pancreatic Cancer UK told the site the AI Overview was "completely incorrect" and that following its advice "could be really dangerous and jeopardise a person's chances of being well enough to have treatment." Other examples The Guardian provided include searching for "vaginal cancer symptoms and tests," which led to an incorrect AI Overview saying a pap test screens for vaginal cancer (it screens for cervical cancer), as well as searches around eating disorders. Google itself seems to have quietly acknowledged the investigation and has removed some of the AI Overviews, but not all. Searching for the above query on liver blood tests doesn't surface an AI Overview at all, while searching for vaginal cancer symptoms still provides an AI Overview -- but now makes the distinction on pap tests. Google told The Guardian: "We do not comment on individual removals within Search. In cases where AI Overviews miss some context, we work to make broad improvements, and we also take action under our policies where appropriate." I have contacted Google separately for additional comment on the allegations and will update this article if and when I receive a response. 
In March last year, Google announced it was rolling out new features to improve responses to healthcare queries. "People use Search and features like AI Overviews to find credible and relevant information about health, from common illnesses to rare conditions," the company wrote in a blog post. "Since AI Overviews launched last year, people are more satisfied with their search results, and they're asking longer, more complex questions. And with recent health-focused advancements on Gemini models, we continue to further improve AI Overviews on health topics so they're more relevant, comprehensive and continue to meet a high bar for clinical factuality." Picking apart the inclusion of AI into healthcare is extremely difficult and nuanced. Nobody would argue that showing incorrect health information in a common search result isn't bad. And studies have shown AI Overviews aren't as accurate as we'd like them to be. But what about AI-driven algorithms that support clinicians with faster and more accurate diagnoses? Or an easily-accessible AI chatbot working, not as a substitute for a medical professional, but as a first step for someone interested in collecting more information about a symptom or feeling. I've certainly asked ChatGPT a few health questions over the last few months to get a steer on whether or not I should seek further help. And, as it happens, OpenAI has just launched ChatGPT Health, an AI tool aiming to make medical information easier to understand. Would you trust an AI answer when it comes to aspects of your mental or physical health? Let me know in the comments below.

[8]

'Dangerous and alarming': Google removes some of its AI summaries after users' health put at risk

Exclusive: Guardian investigation finds AI Overviews provided inaccurate and false information when queried over blood tests

Google has removed some of its artificial intelligence health summaries after a Guardian investigation found people were being put at risk of harm by false and misleading information. The company has said its AI Overviews, which use generative AI to provide snapshots of essential information about a topic or question, are "helpful" and "reliable". But some of the summaries, which appear at the top of search results, served up inaccurate health information, putting users at risk of harm. In one case that experts described as "dangerous" and "alarming", Google provided bogus information about crucial liver function tests that could leave people with serious liver disease wrongly thinking they were healthy. Typing "what is the normal range for liver blood tests" served up masses of numbers, little context and no accounting for nationality, sex, ethnicity or age of patients, the Guardian found. What Google's AI Overviews said was normal may vary drastically from what was actually considered normal, experts said. The summaries could lead to seriously ill patients wrongly thinking they had a normal test result, and not bother to attend follow-up healthcare meetings. After the investigation, the company has removed AI Overviews for the search terms "what is the normal range for liver blood tests" and "what is the normal range for liver function tests". A Google spokesperson said: "We do not comment on individual removals within Search. In cases where AI Overviews miss some context, we work to make broad improvements, and we also take action under our policies where appropriate." Vanessa Hebditch, the director of communications and policy at the British Liver Trust, a liver health charity, said: "This is excellent news, and we're pleased to see the removal of the Google AI Overviews in these instances. 
"However, if the question is asked in a different way, a potentially misleading AI Overview may still be given and we remain concerned other AI‑produced health information can be inaccurate and confusing." The Guardian found that typing slight variations of the original queries into Google, such as "lft reference range" or "lft test reference range", prompted AI Overviews. That was a big worry, Hebditch said. "A liver function test or LFT is a collection of different blood tests. Understanding the results and what to do next is complex and involves a lot more than comparing a set of numbers. "But the AI Overviews present a list of tests in bold, making it very easy for readers to miss that these numbers might not even be the right ones for their test. "In addition, the AI Overviews fail to warn that someone can get normal results for these tests when they have serious liver disease and need further medical care. This false reassurance could be very harmful." Google, which has a 91% share of the global search engine market, said it was reviewing the new examples provided to it by the Guardian. Hebditch said: "Our bigger concern with all this is that it is nit-picking a single search result and Google can just shut off the AI Overviews for that but it's not tackling the bigger issue of AI Overviews for health." Sue Farrington, the chair of the Patient Information Forum, which promotes evidence-based health information to patients, the public and healthcare professionals, welcomed the removal of the summaries but said she still had concerns. "This is a good result but it is only the very first step in what is needed to maintain trust in Google's health-related search results. There are still too many examples out there of Google AI Overviews giving people inaccurate health information." Millions of adults worldwide already struggle to access trusted health information, Farrington said. 
"That's why it is so important that Google signposts people to robust, researched health information and offers of care from trusted health organisations." AI Overviews still pop up for other examples the Guardian originally highlighted to Google. They include summaries of information about cancer and mental health that experts described as "completely wrong" and "really dangerous". Asked why these AI Overviews had not also been removed, Google said they linked to well-known and reputable sources, and informed people when it was important to seek out expert advice. A spokesperson said: "Our internal team of clinicians reviewed what's been shared with us and found that in many instances, the information was not inaccurate and was also supported by high quality websites." Victor Tangermann, a senior editor at the technology website Futurism, said the results of the Guardian's investigation showed Google had work to do "to ensure that its AI tool isn't dispensing dangerous health misinformation". Google said AI Overviews only show up on queries where it has high confidence in the quality of the responses. The company constantly measures and reviews the quality of its summaries across many different categories of information, it added. In an article for Search Engine Journal, senior writer Matt Southern said: "AI Overviews appear above ranked results. When the topic is health, errors carry more weight."

[9]

Google Removes AI Health Summaries After Risky Medical Errors Exposed

We have all been there. You feel a weird pain in your side or get a confusing test result back from the doctor, and the first thing you do is open Google. You aren't looking for a medical degree; you just want a quick answer to "am I okay?" But recently, Google had to pump the brakes on its AI search summaries because, as it turns out, asking a robot for medical advice might actually be dangerous. Google quietly scrubbed a bunch of AI-generated health summaries from its search results after an investigation revealed they were giving out inaccurate and frankly scary information. This all started after a report by The Guardian pointed out that these "AI Overviews" -- the colorful boxes that pop up at the very top of your search -- were serving up incomplete data.

The most glaring example was about liver blood tests

If you asked the AI for "normal ranges," it would just spit out a list of numbers. It didn't ask if you were male or female. It didn't ask about your age, your ethnicity, or your medical history. It just gave a flat number. Medical experts looked at this and basically said, "This is dangerous." The problem here isn't just that the AI was wrong; it's that it was dangerously misleading. Imagine someone with early-stage liver disease looking up their test results. The AI tells them their numbers fall within the "normal" range it scraped from some random website. That person might think, "Oh, I'm fine then," and skip a follow-up appointment. In reality, a "normal" number for a 20-year-old might be a warning sign for a 50-year-old. The AI lacks the nuance to know that, and that gap in context can have serious, real-world consequences. Google's response was pretty standard -- they removed the specific queries that were flagged and insisted that their system is usually helpful. 
But here is the kicker: health organizations like the British Liver Trust found that if you just reworded the question slightly, the same bad information popped right back up. It's like a game of digital whack-a-mole. You fix one error, and the AI just generates a new one five seconds later.

The real issue here is trust

Because these AI summaries sit right at the top of the page, above the actual links to hospitals or medical journals, they carry an air of authority. We are trained to trust the top result. When Google presents an answer in a neat little box, our brains subconsciously treat it as the "correct" answer. But it's not. It's just a prediction engine trying to guess what words come next. For now, this is a massive wake-up call. AI is great for summarizing an email or planning a travel itinerary, but when it comes to your health, it clearly isn't ready for prime time. Until these systems can understand context -- or until Google puts stricter guardrails in place -- it is probably safer to scroll past the robot and click on an actual link from a real doctor. Speed is nice, but accuracy is the only thing that matters when you're talking about your health.

[10]

Google's AI Overviews Caught Giving Dangerous "Health" Advice

In May 2024, Google threw caution to the wind by rolling out its controversial AI Overviews feature in a purported effort to make information easier to find. But the AI hallucinations that followed -- like telling users to eat rocks and put glue on their pizzas -- ended up perfectly illustrating the persistent issues that plague large language model-based tools to this day. And while not being able to reliably tell what year it is or making up explanations for nonexistent idioms might sound like innocent gaffes that at most lead to user frustration, some advice Google's AI Overviews feature is offering up could have far more serious consequences. In a new investigation, The Guardian found that the tool's AI-powered summaries are loaded with inaccurate health information that could put people at risk. Experts warn that it's only a matter of time until the bad advice endangers users -- or, in a worst-case scenario, results in someone's death. The issue is severe. For instance, The Guardian found that it advised those with pancreatic cancer to avoid high-fat foods, despite doctors recommending the exact opposite. It also completely bungled information about women's cancer tests, which could lead to people ignoring real symptoms of the disease. It's a precarious situation as those who are vulnerable and suffering often turn to self-diagnosis on the internet for answers. "People turn to the internet in moments of worry and crisis," end-of-life charity Marie Curie director of digital Stephanie Parker told The Guardian. "If the information they receive is inaccurate or out of context, it can seriously harm their health." Others were alarmed by the feature turning up completely different responses to the same prompts, a well-documented shortcoming of large language model-based tools that can lead to confusion.
Mental health charity Mind's head of information, Stephen Buckle, told the newspaper that AI Overviews offered "very dangerous advice" about eating disorders and psychosis, summaries that were "incorrect, harmful or could lead people to avoid seeking help." A Google spokesperson told The Guardian in a statement that the tech giant invests "significantly in the quality of AI Overviews, particularly for topics like health, and the vast majority provide accurate information." But given the results of the newspaper's investigation, the company has a lot of work left to ensure that its AI tool isn't dispensing dangerous health misinformation. The risks could continue to grow. According to an April 2025 survey by the University of Pennsylvania's Annenberg Public Policy Center, nearly eight in ten adults said they're likely to go online for answers about health symptoms and conditions. Nearly two-thirds of them found AI-generated results to be "somewhat or very reliable," indicating a considerable -- and troubling -- level of trust. At the same time, just under half of respondents said they were uncomfortable with healthcare providers using AI to make decisions about their care. A separate MIT study found that participants deemed low-accuracy AI-generated responses "valid, trustworthy, and complete/satisfactory" and even "indicated a high tendency to follow the potentially harmful medical advice and incorrectly seek unnecessary medical attention as a result of the response provided." That's despite AI models continuing to prove themselves as strikingly poor replacements for human medical professionals. Meanwhile, doctors have the daunting task of dispelling myths and trying to keep patients from being led down the wrong path by a hallucinating AI. 
On its website, the Canadian Medical Association calls AI-generated health advice "dangerous," pointing out that hallucinations, as well as algorithmic biases and outdated facts, can "mislead you and potentially harm your health" if you choose to follow the generated advice. Experts continue to advise people to consult human doctors and other licensed healthcare professionals instead of AI, a tragically tall ask given the many barriers to adequate care around the world. At least AI Overviews sometimes appears to be aware of its own shortcomings. When queried if it should be trusted for health advice, the feature happily pointed us to The Guardian's investigation. "A Guardian investigation has found that Google's AI Overviews have displayed false and misleading health information that could put people at risk of harm," read the AI Overviews' reply.

[11]

Google removes AI Overviews for health queries after accuracy concerns

An investigation by the Guardian found that Google's AI-generated summaries gave out inaccurate health information. Google has reportedly removed some AI Overviews for health-related questions following an investigation that found that some of the information was misleading. AI Overviews are summaries generated by artificial intelligence that provide information in response to a topic or question at the top of the search results. An investigation by the Guardian newspaper found that some of the summaries contained inaccurate health information, putting people at risk of harm. The newspaper found that AI Overviews served up masses of numbers with little context in response to questions such as "what is the normal range for liver blood tests?" and "what is the normal range for liver function tests?" The responses also didn't take into account any variances that could come based on nationality, sex, ethnicity, or age. A Google search result for both questions came up with a featured snippet, a paragraph extracted from the most relevant website to the user's question at the top of the search results. For both questions, Google chose to extract the range of healthy levels of various types of substances found in the liver in its snippet, such as alanine transaminase (ALT), which regulates energy production, aspartate aminotransferase (AST), which detects damaged blood cells, and alkaline phosphatase (ALP), which helps break down proteins. Google extracted the numbers from Max Healthcare, an Indian for-profit hospital chain in New Delhi, the Guardian found. Featured snippets are different from AI Overviews because the text in the snippet is not generated by AI. The Guardian's investigation found that variations on those questions, such as "[liver function test] lft reference range" or "lft test reference range," would still generate AI summaries. Liver function tests are blood tests that measure proteins and enzymes to check how well the liver is working. 
Google reportedly advised people with pancreatic cancer to avoid high-fat foods, which experts told the Guardian is not a good recommendation because it could increase the risk of patients dying from the disease. The Guardian's investigation comes as experts sound the alarm on how AI chatbots "hallucinate," meaning they invent false answers when they don't have the correct information. Euronews Next reached out to Google to ask whether it had removed AI Overviews from some health queries, but did not receive an immediate reply. Google announced this weekend that it was adding AI Overviews to its email service, Gmail. The new feature lets users ask questions and receive an answer about their emails instead of searching through them with a keyword search.

[12]

Google's Recent Misstep Is a Warning for AI-Generated Medical Info

Google removed AI responses for some medical search queries after an investigation by The Guardian found out-of-context or even incorrect answers to questions about everything from pancreatic cancer to liver disease -- answers that could pose a threat to patients' wellbeing. The specific search terms for which Google removed AI Overviews were "what is the normal range for liver blood tests" and "what is the normal range for liver function tests," according to The Guardian. Other related searches, such as for "lft reference range" or "lft test reference range," could still prompt AI responses, however, TechCrunch found. (LFT stands for "liver function tests.") "Our bigger concern with all this is that it is nit-picking a single search result and Google can just shut off the AI Overviews for that but it's not tackling the bigger issue of AI Overviews for health," the director of communications and policy at British Liver Trust told The Guardian. The publication's original investigation, published in early January, found that responses to queries about liver tests returned many numbers without adequate context to take into account crucial factors like a patient's age, gender or ethnicity. Similarly, a search about pancreatic cancer advised patients to avoid fatty foods, which one professional told The Guardian was "completely incorrect," and could lead a patient to weight loss that could threaten their health during treatment. Another search incorrectly described a pap smear as a test for vaginal cancer. In fact, those tests screen for abnormalities that could indicate cervical cancer, according to the Mayo Clinic.

[13]

Google's AI Overviews Said to Provide Wrong Medical Advice

The extent of the incorrect information on AI Overviews is not clear.

Google's AI Overviews has again stirred a commotion for sharing incorrect medical advice. According to a report, the Mountain View-based tech giant's artificial intelligence (AI) search summary feature was spotted sharing incorrect information when it was asked a very specific medical question. After the error became known publicly, the company reportedly removed the response from the AI Overviews, and the feature is said not to show up when asking certain medical queries. The incident occurs at a time when companies like OpenAI and Anthropic are heavily pushing for AI adoption in healthcare.

AI Overviews Shares Erroneous Medical Information

According to an investigation conducted by The Guardian, AI Overviews is producing incorrect and harmful responses when users ask medical queries on Google Search. In one particular case, the publication noted that when asking about diet preference for a person with pancreatic cancer, the AI tool recommended avoiding high-fat food. Notably, professionals recommend eating high-fat food regularly, as not doing so can have lethal consequences. Similarly, the publication reported that asking about the normal range for liver blood tests would show numbers that do not factor in important metrics, such as the nationality, gender, age, or ethnicity of the individual. This can lead to an unsuspecting user believing their results were healthy, when in reality they were not. AI Overviews about women's cancer tests reportedly also showed incorrect information, "dismissing genuine symptoms." The Guardian also reached out to a Google spokesperson, who claimed that the examples shared with them came from incomplete screenshots, but added that the cited links belonged to well-known, reputable sources. Gadgets 360 staff members also tested the questions and found that the AI-generated summaries are not showing up, which was also reported by The Guardian separately.
But if the AI tool is indeed providing incorrect information, this can be a cause of concern since a large number of people rely on Google Search to find information. Since AI Overviews appear at the top of the search results, many users just refer to this information instead. Notably, the incident comes at a time when companies like OpenAI and Anthropic are pushing for the adoption of their healthcare-focused AI products. While these AI firms are using a custom model geared for medical queries, even the smallest error in this space could have dire consequences.

[14]

Google stops showing AI Overviews for certain health questions

Google has removed AI-generated summaries, known as AI Overviews, for certain health-related searches following a report by The Guardian. The investigation highlighted that some AI Overviews were providing misleading information that could affect users' understanding of their health. For example, the Guardian reported that when users asked, "what is the normal range for liver blood tests," the AI Overviews gave numbers that didn't consider important factors such as nationality, sex, ethnicity, or age. This could have led some people to incorrectly assume their test results were normal. After the report, Google has now removed AI Overviews for searches like "what is the normal range for liver blood tests" and "what is the normal range for liver function tests." However, variations of these searches, such as "lft reference range" or "lft test reference range," can still trigger AI-generated summaries. A Google spokesperson told the Guardian that the company does not "comment on individual removals within Search," but emphasised that it is working to "make broad improvements." The spokesperson also said that an internal team of clinicians reviewed the queries highlighted by the Guardian and found that "in many instances, the information was not inaccurate and was also supported by high quality websites." Vanessa Hebditch, director of communications and policy at the British Liver Trust, called the removal "excellent news," but added, "Our bigger concern with all this is that it is nit-picking a single search result and Google can just shut off the AI Overviews for that but it's not tackling the bigger issue of AI Overviews for health."
Last year, Google introduced new features to improve healthcare searches. Despite these updates, concerns remain about the reliability of AI-generated medical information.


Google has removed some AI Overviews from health-related searches following a Guardian investigation that exposed dangerous flaws in AI-generated health summaries. The investigation found that Google's generative AI feature delivered inaccurate medical information, including wrong advice about pancreatic cancer and misleading liver function test results that could lead seriously ill patients to mistakenly believe they are healthy.

Google AI Overviews Pulled After Guardian Investigation Exposes Dangerous Health Advice

Google has removed several AI Overviews from medical queries after a Guardian investigation revealed that the AI-generated health summaries were putting users at risk with inaccurate and misleading medical information [1]. The investigation, published in early January, found that Google's generative AI feature delivered false guidance at the top of search results, potentially leading seriously ill patients to dangerous conclusions about their health [2].


The Guardian documented multiple cases where Google AI Overviews provided dangerous health advice that contradicted established medical guidance. In one particularly alarming example, the AI advised people with pancreatic cancer to avoid high-fat foods, exactly the opposite of standard medical recommendations [3]. Medical experts warned this incorrect pancreatic cancer advice could increase the risk of patient death, as maintaining weight is critical for these patients [4].


Misleading Liver Function Tests Information Raises Alarm

Google disabled specific queries including "what is the normal range for liver blood tests" after experts flagged the results as dangerous [1]. The investigation revealed that searching for liver function tests generated raw data tables listing specific enzymes like ALT, AST, and alkaline phosphatase without essential context. The AI feature failed to adjust these figures for patient demographics such as age, sex, and ethnicity.

Vanessa Hebditch, director of communications and policy at the British Liver Trust, told The Guardian that understanding liver function test results "is complex and involves a lot more than comparing a set of numbers." [1] She warned that the AI Overviews fail to inform users that someone can receive normal results for these tests while having serious liver disease requiring further medical care. "This false reassurance could be very harmful," she emphasized.

Design Flaw in Page Ranking System Fuels Misinformation

The recurring problems with Google AI Overviews stem from a fundamental design flaw in how the system works [1]. Google built the feature to show information backed up by top search results from its page ranking system, based on the assumption that highly ranked pages contain accurate information. However, Google's algorithm has long struggled with SEO-gamed content and spam. The system now feeds these unreliable results to its language model, which then summarizes them with an authoritative tone that can mislead users seeking expert advice on patient health matters.


Even when the AI draws from accurate sources, the language model can still draw incorrect conclusions from the data, producing flawed summaries of otherwise reliable information. The technology does not inherently provide factual accuracy but reflects whatever inaccuracies exist on the websites Google's algorithm ranks highly, presenting facts with an authority that makes errors appear trustworthy [1].

Partial Removal Leaves Gaps in Safety Measures

While Google removed AI Overviews for specific queries like "what is the normal range for liver blood tests" and "what is the normal range for liver function tests," The Guardian found that slight variations such as "lft reference range" or "lft test reference range" still prompted AI Overviews to appear [2]. Hebditch called this a major concern, noting that the AI Overviews present lists of tests in bold, making it easy for readers to miss that these numbers might not be the right ones for their specific test [1].

Several other examples that The Guardian originally highlighted to Google remain active. When asked why these AI Overviews had not been removed, Google said they linked to well-known and reputable sources and informed people when it was important to seek expert advice. A Google spokesperson told The Verge that the company's internal team of clinicians reviewed what was shared and "found that in many instances, the information was not inaccurate and was also supported by high-quality websites." [1]

Ongoing Concerns About AI-Generated Medical Content

This is not the first controversy for AI Overviews. The feature has previously told people to put glue on pizza and eat rocks [3]. It has proven unpopular enough that users discovered inserting curse words into search queries disables AI Overviews entirely [1]. A report from September found that over 10% of Google AI Overviews cite AI-generated content.

Google declined to comment on the specific removals to The Guardian, with a spokesperson stating that the company does not "comment on individual removals within Search" but works to "make broad improvements." The company said AI Overviews only appear for queries where it has high confidence in the quality of responses and that it constantly measures and reviews the quality of its summaries across many different categories of information [1].

Hebditch welcomed the removal as "excellent news" but added, "Our bigger concern with all this is that it is nit-picking a single search result and Google can just shut off the AI Overviews for that but it's not tackling the bigger issue of AI Overviews for health." [2] The way users phrase medical queries influences the answer, with the same question asked two different ways potentially yielding one partially accurate response and another that is inaccurate and unhelpful [4].
