Google DeepMind's AlphaQubit: AI-Powered Quantum Error Correction Breakthrough

6 Sources


[1]

Google DeepMind develops an AI-based decoder that identifies quantum computing errors

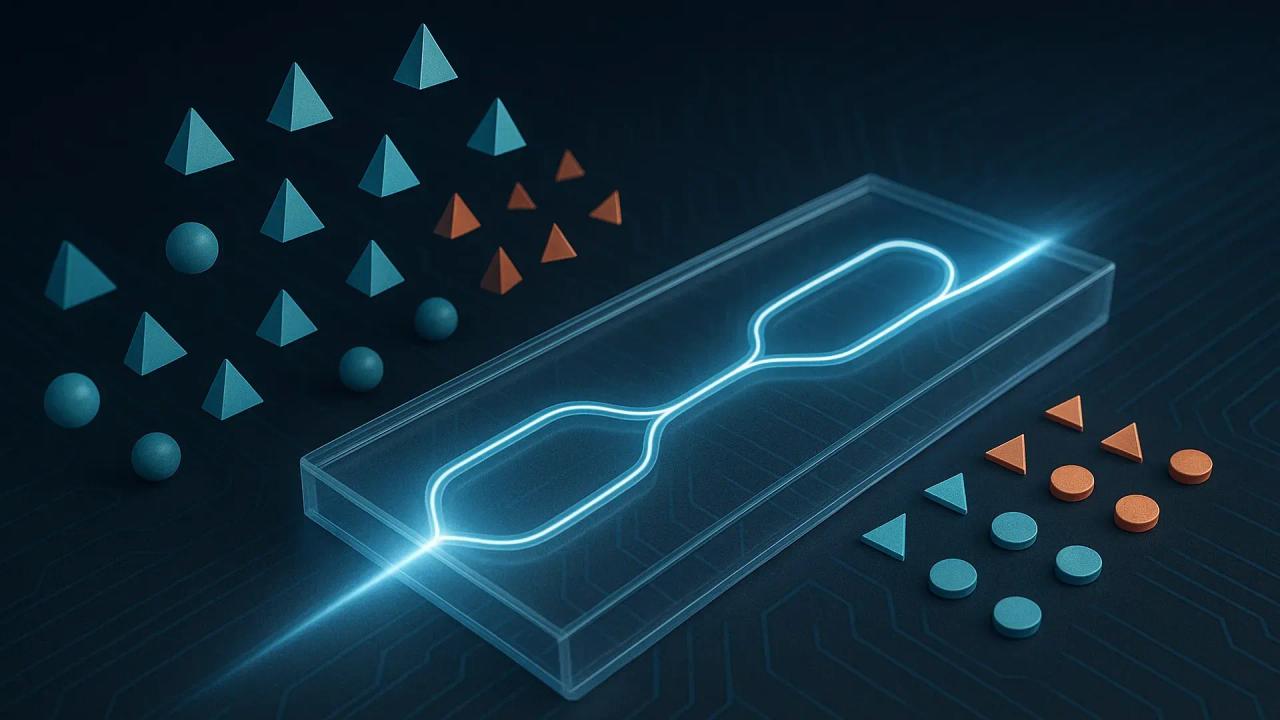

A team of AI researchers at Google DeepMind, working with a team of quantum researchers at Google Quantum AI, announced the development of an AI-based decoder that identifies quantum computing errors. In their paper published in the journal Nature, the group describes how they used machine learning to help find qubit errors more efficiently than other methods. Nadia Haider, of the Quantum Computing Division of QuTech and the Department of Microelectronics at Delft University of Technology, has published a News and Views piece in the same journal issue outlining the work done by the team at Google. One of the main sticking points preventing the development of a truly useful quantum computer is the issue of error correction. Qubits tend to be fragile, which means their quality can be less than desired, resulting in errors. In this new effort, the combined team of researchers at Google has taken a new approach to solving the problem -- they have developed an AI-based decoder to help identify such errors. Over the past several years, Google's Artificial Intelligence division has developed and worked on a quantum computer called Sycamore. To conduct quantum computing, it creates single logical qubits using multiple hardware qubits, which are used to run programs while also carrying out error correction. In this new effort, the team has developed a new way to find and correct such errors and has named it AlphaQubit. The new AI-based decoder is a type of deep learning neural network. The researchers first trained it to recognize errors using their Sycamore computer, running with 49 qubits, and a quantum simulator. Together, the two systems generated hundreds of millions of examples of quantum errors. They then reran the Sycamore, this time using AlphaQubit to identify any generated errors, all of which were then corrected.
In so doing, they found it resulted in a 6% improvement in error correction during highly accurate but slow tests and a 30% improvement when running less accurate but faster tests. They also tested it using up to 241 qubits and found it exceeded expectations. They suggest their findings indicate machine learning may be the solution to error correction on quantum computers, allowing for concentrating on other problems yet to be overcome.

[2]

Google DeepMind's AlphaQubit tackles quantum error detection with unprecedented accuracy - SiliconANGLE

Google DeepMind's quantum research team says it's using advanced artificial intelligence algorithms to solve one of the biggest challenges that prevents them from building a reliable quantum computer - error correction. In a paper published in the journal Nature, the Google DeepMind and Quantum AI teams introduced a new, AI-powered decoder system for quantum computers that can identify computing errors with unparalleled accuracy. Called AlphaQubit, it's the result of a collaboration that brings together Google DeepMind's expertise in machine learning with Google Quantum AI's proficiency in quantum machines. In the research paper, the authors explained that the ability to accurately detect quantum computing errors is critical for making reliable machines that can scale to tackle the world's biggest computational challenges. It's a key step that may one day pave the way to numerous scientific breakthroughs that classical computers will never be able to achieve. The team explains that quantum computers have the potential to solve problems in a matter of minutes or hours that would take conventional computers years to do. However, one of the major roadblocks in bringing these next-generation systems online is that qubits, the quantum equivalent of traditional "bits," are extremely unstable and prone to errors. What's needed is a way to detect these errors, so users can rely on the results generated by quantum machines. The instability of qubits stems from the way they rely on quantum properties such as superposition and entanglement to solve complex problems in fewer steps. Qubits can sift through vast numbers of possibilities using quantum interference to find solutions, but their natural state is extremely fragile.
They can be disrupted all too easily, by microscopic defects in hardware, or even the slightest variation in heat, minuscule vibrations, electromagnetic interference or even cosmic rays. That explains why existing quantum computers either run at temperatures close to absolute zero, or use novel technologies like lasers to manipulate ion-based qubits held in a vacuum, but while these techniques do help, they don't completely solve the problem of instability. By using quantum error correction systems, it's possible to get around the instability problem by grouping multiple qubits into a single, logical qubit and performing regular consistency checks on them. It then becomes possible to identify logical qubits that deviate from the norm, and correct them when it happens. The difficulty lies in spotting these errors, and that's where AlphaQubit can help - Google DeepMind explains that it's a neural network-based decoder that leverages the same transformer architecture that underpins many of today's large language models. The team trained AlphaQubit on hundreds of consistency checks, so that it can correctly predict when a logical qubit starts behaving incorrectly. As the researchers explained: "We began by training our model to decode the data from a set of 49 qubits inside a Sycamore quantum processor, the central computational unit of the quantum computer. To teach AlphaQubit the general decoding problem, we used a quantum simulator to generate hundreds of millions of examples across a variety of settings and error levels. Then we tuned AlphaQubit for a specific decoding task by giving it thousands of experimental samples from a particular Sycamore processor." In the researchers' tests, AlphaQubit displayed incredible accuracy compared to existing quantum decoders, making 6% fewer errors than tensor network methods in the largest Sycamore experiments. While tensor networks are quite accurate themselves, the problem is that they're extremely slow. 
AlphaQubit, on the other hand, can identify errors with more accuracy and at much greater speed, more than fast enough to scale up to handle real-world quantum computing operations. The researchers said that today's most powerful quantum computers can achieve only a small fraction of the computing power that the technology will eventually achieve, and so there's a need to show that AlphaQubit can scale up dramatically. To ensure it can, the researchers trained it on data from simulated quantum systems of up to 241 qubits, which vastly exceeds the number available on Sycamore. Once again, AlphaQubit vastly outperformed existing decoders, indicating it will be able to work with midsized quantum machines in the future. AlphaQubit has some other useful features too. For instance, it has the ability to report "confidence levels" on inputs and outputs, which means there's lots of potential to improve the performance of quantum processors in future. Moreover, when trained on samples of up to 25 rounds of error correction, the system maintained its high performance for up to 100,000 rounds, meaning it can generalize to scenarios far exceeding its training data. Although AlphaQubit can help improve the reliability of quantum computers, the researchers conceded that there's a lot of work ahead. For one thing, the system remains too slow to correct errors on a fast superconducting quantum processor in real time. And as quantum machines grow in size to exceed the millions of qubits necessary to achieve an advantage over classical computers, they'll need to come up with more efficient ways of training the decoder to handle such a large number.
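
The idea of grouping several physical qubits into one logical qubit and running consistency checks on them can be illustrated with a loose classical analogy. The sketch below uses a three-bit repetition code with a lookup-table decoder; it is not the surface code that AlphaQubit decodes, and every function name in it is hypothetical.

```python
def encode(bit):
    """Store one logical bit redundantly in three physical bits."""
    return [bit, bit, bit]


def consistency_checks(bits):
    """Parity of each neighbouring pair; 0 means the pair agrees."""
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])


# Lookup-table decoder (illustrative): for each pattern of failed checks,
# the index of the single bit most likely to have flipped.
SYNDROME_TO_FLIP = {
    (0, 0): None,  # every check passes, nothing to correct
    (1, 0): 0,
    (1, 1): 1,
    (0, 1): 2,
}


def decode(bits):
    """Return the corrected bits and the recovered logical bit."""
    flip = SYNDROME_TO_FLIP[consistency_checks(bits)]
    corrected = list(bits)
    if flip is not None:
        corrected[flip] ^= 1
    return corrected, corrected[0]


if __name__ == "__main__":
    word = encode(1)
    word[2] ^= 1  # inject a single physical error
    print(consistency_checks(word))
    print(decode(word))
```

A single flipped bit is always repaired here, but two simultaneous flips produce the same check pattern as one flip on the remaining bit, so the decoder guesses wrong. Deciding which underlying errors best explain a pattern of failed checks is the decoding problem that the excerpt above describes.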

[3]

Google's AI Breakthrough Brings Quantum Computing Closer to Real-World Applications - Decrypt

Google researchers have discovered a new technique that could finally make quantum computing practical in real life, using artificial intelligence to solve one of science's most persistent challenges: more stable states. In a research paper published in Nature, Google DeepMind scientists explain that their new AI system, AlphaQubit, has proven remarkably successful at correcting the persistent errors that have long plagued quantum computers. "Quantum computers have the potential to revolutionize drug discovery, material design, and fundamental physics -- that is, if we can get them to work reliably," Google's announcement reads. But nothing is perfect: quantum systems are extraordinarily fragile. Even the slightest environmental interference -- from heat, vibration, electromagnetic fields, or even cosmic rays -- can disrupt their delicate quantum states, leading to errors that make computations unreliable. A March research paper highlights the challenge: quantum computers need an error rate of just one in a trillion operations (10^-12) for practical use. However, current hardware has error rates between 10^-3 and 10^-2 per operation, making error correction crucial. "Certain problems, which would take a conventional computer billions of years to solve, would take a quantum computer just hours," Google states. "However, these new processors are more prone to noise than conventional ones." "If we want to make quantum computers more reliable, especially at scale, we need to accurately identify and correct these errors." Google's new AI system, AlphaQubit, wants to tackle this issue. The AI system employs a sophisticated neural network architecture that has demonstrated unprecedented accuracy in identifying and correcting quantum errors, showing 6% fewer errors than previous best methods in large-scale experiments and 30% fewer errors than traditional techniques.
It also maintained high accuracy across quantum systems ranging from 17 qubits to 241 qubits -- which suggests that the approach could scale to the larger systems needed for practical quantum computing. AlphaQubit employs a two-stage approach to achieve its high accuracy. The system first trains on simulated quantum noise data, learning general patterns of quantum errors, then adapts to real quantum hardware using a limited amount of experimental data. This approach allows AlphaQubit to handle complex real-world quantum noise effects, including cross-talk between qubits, leakage (when qubits exit their computational states), and subtle correlations between different types of errors. But don't get too excited; you won't have a quantum computer in your garage soon. Despite its accuracy, AlphaQubit still faces significant hurdles before practical implementation. "Each consistency check in a fast superconducting quantum processor is measured a million times every second," the researchers note. "While AlphaQubit is great at accurately identifying errors, it's still too slow to correct errors in a superconducting processor in real-time." "Training at larger code distances is more challenging because the examples are more complex, and sample efficiency appears lower at larger distances," a DeepMind spokesperson told Decrypt. "It's important because error rate scales exponentially with code distance, so we expect to need to solve larger distances to get the ultra-low error rates needed for fault-tolerant computation on large, deep quantum circuits." The researchers are focusing on speed optimization, scalability, and integration as critical areas for future development. AI and quantum computing form a synergistic relationship, enhancing the other's potential. "We expect AI/ML and quantum computing to remain complementary approaches to computation.
AI can be applied in other areas to support the development of fault-tolerant quantum computers, such as calibration and compilation or algorithm design," the spokesperson told Decrypt. "At the same time, people are looking into quantum ML applications for quantum data, and more speculatively, for quantum ML algorithms on classical data." This convergence might represent a crucial turning point in computational science. As quantum computers become more reliable through AI-assisted error correction, they could, in turn, help develop more sophisticated AI systems, creating a powerful feedback loop of technological advancement. The age of practical quantum computing, long promised but never delivered, might finally be closer -- though not quite close enough to start worrying about a cyborg apocalypse.
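
The spokesperson's remark that error rate scales exponentially with code distance can be made concrete with a small calculation. The sketch below assumes a heuristic often used for surface codes, in which the logical error rate at odd distance d is roughly C / Λ^((d+1)/2). The formula, the function names, and the values of Λ and C are illustrative assumptions, not figures from the article or the paper.

```python
def logical_error_rate(distance, suppression_factor, prefactor):
    """Heuristic logical error rate: prefactor / suppression_factor ** ((d + 1) / 2)."""
    return prefactor / suppression_factor ** ((distance + 1) / 2)


def smallest_distance_for(target, suppression_factor, prefactor, max_distance=999):
    """Smallest odd code distance whose heuristic error rate is at or below target.

    Returns None if no distance up to max_distance reaches the target,
    which happens whenever suppression_factor is 1 or less.
    """
    for distance in range(3, max_distance + 1, 2):
        if logical_error_rate(distance, suppression_factor, prefactor) <= target:
            return distance
    return None


if __name__ == "__main__":
    # Hypothetical values: each step of two in distance halves the error rate.
    assumed_suppression = 2.0
    assumed_prefactor = 0.1
    for d in (3, 5, 11):
        print(d, logical_error_rate(d, assumed_suppression, assumed_prefactor))
    print(smallest_distance_for(1e-12, assumed_suppression, assumed_prefactor))
```

Under these assumed values, reaching the one-in-a-trillion target takes a code distance far beyond the distances discussed in the coverage, which is one way to read the spokesperson's point that decoders will need to be trained at larger distances.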

[4]

Google's AlphaQubit to Make Quantum Computers More Reliable

During testing, AlphaQubit was shown to reduce errors by 6% compared to tensor network methods, and by 30% compared to correlated matching. Google DeepMind researchers released a paper introducing AlphaQubit, their new AI-based system that can accurately identify errors inside quantum computers. The paper, titled 'Learning high-accuracy error decoding for quantum processors', introduced the system as a neural network decoder designed to decode the surface code and establish state-of-the-art error suppression. The Google DeepMind team announced the technology on LinkedIn, saying that AlphaQubit helps make quantum computers more reliable. The system was trained on data from 49 qubits within the Sycamore quantum processor, a core component of quantum computing. AlphaQubit achieved groundbreaking results while testing on new Sycamore data. This initiative aims to address the inherent errors in quantum systems, thereby advancing the reliability and scalability of quantum computers. AlphaQubit is part of Google DeepMind's plan to combine AI with quantum computing. The company has already made breakthroughs in AI, like predicting protein structures and creating advanced game-playing systems. With AlphaQubit, they're applying this expertise to one of quantum computing's biggest challenges: fixing errors. Although AlphaQubit shows progress, there is still work to do. The system needs to handle more complex quantum problems and work seamlessly with existing quantum hardware. Even so, it's an encouraging step toward making quantum computing practical. To speed up the progress, Google DeepMind will team up with universities and industry partners. These collaborations will help refine AlphaQubit and explore its use on different quantum computing platforms, moving closer to solving problems that traditional computers can't handle.
When it comes to testing, the system was shown to reduce errors by 6% compared to tensor network methods, which are accurate but computationally slow, and by 30% compared to correlated matching, a faster but less precise approach. Quantum computers use qubits, which can exist in multiple states at once, giving them incredible computing power. This, however, also makes them prone to errors caused by environmental factors and hardware imperfections. Fixing these errors is crucial to making quantum computers useful. AlphaQubit uses machine learning to improve error detection and correction. It studies large amounts of data on quantum errors, finds patterns, and creates strategies to fix them more effectively, making quantum computing more reliable and practical. The researchers claim that the system maintained superior accuracy when scaled up. These results establish AlphaQubit as a new standard for decoding accuracy in quantum computing. Typically, error correction in quantum computing requires many physical qubits to protect a single logical qubit, which makes systems bulky and complex. AlphaQubit aims to simplify this by reducing the number of physical qubits needed, making the technology more efficient and easier to scale.

[5]

Quantum computing: physics-AI collaboration quashes quantum errors

Quantum computing is often touted as having the potential to solve problems that are beyond the capabilities of classical computers -- from simulating molecules for drug development to optimizing complex logistics. Yet, a major obstacle stands in the way: quantum processors are prone to errors caused by disturbances from their environment and other sources. Overcoming this challenge is crucial to building a practical quantum computer. Writing in Nature, Bausch et al. introduce AlphaQubit, an approach that uses artificial intelligence (AI) to make a huge leap in correcting these quantum errors, pushing researchers closer to achieving scalable quantum computing. Quantum processors use quantum bits, or qubits, which are the basic units of quantum information. These processors can perform complex calculations by leveraging quantum properties, allowing the devices to process information in ways that classical computers cannot. However, the same features that make qubits powerful also make them fragile. Qubits are highly sensitive, meaning they are vulnerable to even the slightest disturbances, such as temperature changes, electromagnetic interference, or simply interactions with other qubits. These disturbances can cause qubits to lose their quantum state, leading to errors that accumulate and compromise computations. To address this issue, researchers have developed strategies that use redundancy to correct quantum errors. The idea is that information is encoded in a 'logical' qubit, which comprises many more physical qubits than are required to perform a computation. This redundancy allows some physical qubits (known as ancilla qubits) to detect and correct errors while other qubits are processing information. This enables quantum computers to perform longer and more reliable computations than they could otherwise. 
One of the most promising techniques in quantum error correction is known as the surface code, which organizes qubits into a 2D grid and uses frequent measurements to identify and correct errors. The surface code is popular because of its high tolerance for errors and its compatibility with existing quantum hardware. A key challenge in implementing quantum error correction is how to decode the errors: information must be extracted from the qubits detecting the errors, and translated into corrective actions. This decoding process is crucial for determining how to fix errors without disturbing the remaining quantum information. Conventionally, human-designed algorithms have been used for decoding, including one known as minimum-weight perfect matching (MWPM). This algorithm is effective for certain types of error but, as the number of qubits increases and noise becomes more complex, such methods often struggle. Real-world quantum errors can include crosstalk (unwanted interactions between qubits that are physically close to each other) and leakage (in which a qubit takes on states other than those required for a specific computation). AlphaQubit is a quantum error decoder that uses machine learning to tackle quantum error correction in a way that differs fundamentally from human-led approaches. Instead of relying on predefined models of how errors occur, AlphaQubit uses data to learn directly from the quantum system, adapting to the complex and unpredictable noise found in real-world environments. By using machine learning, AlphaQubit can identify patterns and correlations in errors that conventional methods might overlook, making it a more versatile and powerful solution than existing approaches. AlphaQubit is based on a transformer neural network architecture, a type of machine-learning model that has been used successfully in a range of applications, from natural language processing to image recognition. Bausch et al. 
trained AlphaQubit in two stages: first on synthetic data, which allowed the model to learn the basic structure of quantum errors, and then on real experimental data from Google's Sycamore quantum processor. The second stage enabled the model to adjust to the specific noise encountered in real hardware, improving its overall accuracy. The authors' key innovation lies in their use of soft readout, which is a way of extracting analogue information from a quantum system without disturbing it too much. Whereas in conventional decoders, a measurement is either a 0 or a 1, soft readouts offer more nuanced information about the state of the qubit, which allows AlphaQubit to make more informed decisions about whether an error has occurred and how to correct it (Fig. 1). When tested on both real-world and simulated data, AlphaQubit demonstrated a clear advantage over existing methods. The model was evaluated using the Sycamore processor, and decoded errors in surface codes with distances (the minimum number of simultaneously occurring errors that are needed to make a logical qubit fail) of three and five. This makes it more accurate than existing decoders, such as MWPM. The larger the distance, the more complex the error correction, because there are more physical qubits involved in each logical qubit -- and this provides better protection against errors. Bausch et al. also showed that AlphaQubit could be effective in larger quantum systems, maintaining accuracy for code distances of up to 11 -- scalability that is crucial for future quantum computers. Even when faced with a lot of noise, including crosstalk and leakage, AlphaQubit outperformed the existing state-of-the-art methods. This result suggests that machine learning can handle the complexities of real-world quantum noise better than conventional human-designed algorithms can. AlphaQubit's success is a milestone on the path towards fault-tolerant quantum computing. 
Fault tolerance means that a quantum computer can continue to operate correctly even when some of its components fail or produce errors. By using machine learning to enhance error correction, AlphaQubit paves the way for quantum processors that can correct their own errors efficiently, making large-scale quantum computations more feasible. AlphaQubit's ability to adapt to new data and improve over time is particularly promising for the evolving landscape of quantum hardware. However, despite these impressive results, logical error rates need to be reduced still further. Ideally, to run complex quantum algorithms comprising thousands or millions of operations -- a requirement for practical quantum computing -- there should be no more than one error for every one trillion logical operations. AlphaQubit managed to limit error to around one in every 35 logical operations, so further improvements will be necessary to meet the demands of real-time quantum computations. One of AlphaQubit's strengths is its ability to learn from data, making it highly adaptable to various types of quantum hardware. This adaptability is important because quantum hardware is still in its early stages of development, and different quantum processors might have different noise characteristics. By learning directly from experimental data, AlphaQubit can optimize its performance for each device, providing a tailored solution for error correction. Bausch and colleagues' feat is not just about correcting errors; it represents a shift towards adaptive learning having a key role in managing quantum systems. The approach allows the model to learn from the nuances of each quantum device -- a feature that will be crucial as quantum hardware continues to evolve. 
This adaptability could help to bridge the gap between today's noisy, error-prone quantum devices and the fault-tolerant quantum computers of the future by improving the way that errors are controlled, and by helping quantum computers to function correctly as they grow in size and complexity. Moreover, the use of transformer neural networks for quantum error correction highlights the versatility of machine-learning models that were originally developed for entirely different applications. The success of AlphaQubit suggests that other types of machine-learning model could also be adapted to address specific challenges in quantum computing, from optimizing quantum circuits to developing quantum algorithms. This underlines the enormous potential for breakthroughs when ideas from different domains come together. Although there is still much to be done, Bausch and colleagues' work is a step towards the ultimate goal: developing quantum computers that can perform reliable, large-scale computations free from errors. The study not only shows the power of AI to enhance quantum technologies, but also opens fresh research avenues. By combining quantum physics and machine learning, these innovations could unlock the true potential of the quantum realm.
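
The contrast drawn above between a conventional 0-or-1 measurement and a soft readout can be sketched numerically. The example below assumes a deliberately simple model in which the analogue readout signal for each state is Gaussian with equal spread and both states are equally likely beforehand. The model, the parameter values, and the function names are hypothetical and are not taken from Bausch et al.

```python
import math


def hard_readout(signal, threshold=0.0):
    """Conventional readout: collapse the analogue signal to a single bit."""
    return 1 if signal > threshold else 0


def soft_readout(signal, mean0=-1.0, mean1=1.0, sigma=1.0):
    """Probability that the qubit was in state 1, given the analogue signal.

    Assumes equal priors and Gaussian noise of width sigma around
    mean0 (state 0) and mean1 (state 1).
    """
    log_likelihood_ratio = (
        (signal - mean0) ** 2 - (signal - mean1) ** 2
    ) / (2 * sigma ** 2)
    # Numerically stable logistic function.
    if log_likelihood_ratio >= 0:
        return 1.0 / (1.0 + math.exp(-log_likelihood_ratio))
    weight = math.exp(log_likelihood_ratio)
    return weight / (1.0 + weight)


if __name__ == "__main__":
    for signal in (0.05, 2.0):
        print(signal, hard_readout(signal), round(soft_readout(signal), 3))
```

Both example signals give the same hard bit, but the soft value for the signal near the threshold stays close to one half while the other is close to one. That extra confidence information is the kind of nuance the commentary says a decoder can exploit.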

[6]

Google DeepMind AI can expertly fix errors in quantum computers

Quantum computers could get a boost from artificial intelligence, thanks to a model created by Google DeepMind that cleans up quantum errors. Google DeepMind has developed an AI model that could improve the performance of quantum computers by correcting errors more effectively than any existing method, bringing these devices a step closer to broader use. Quantum computers perform calculations on quantum bits, or qubits, which are units of information that can store multiple values at the same time, unlike classical bits, which can hold either a 0 or 1. These qubits, however, are fragile and prone to mistakes when disturbed by factors like environmental heat or...


Google DeepMind and Quantum AI teams introduce AlphaQubit, an AI-based decoder that significantly improves quantum error detection and correction, potentially bringing practical quantum computing closer to reality.

Google DeepMind Unveils AlphaQubit for Quantum Error Correction

In a groundbreaking development, researchers at Google DeepMind and Google Quantum AI have introduced AlphaQubit, an artificial intelligence-based decoder designed to identify and correct errors in quantum computing systems. Published in the journal Nature, this innovation represents a significant step towards making quantum computers more reliable and practical for real-world applications [1][2].

The Challenge of Quantum Errors

Quantum computers, while promising revolutionary computational power, face a critical challenge: the instability of qubits. These quantum bits are extremely fragile and prone to errors caused by environmental factors such as heat, vibrations, electromagnetic interference, and even cosmic rays [2]. To achieve practical quantum computing, error rates need to be as low as one in a trillion operations (10^-12), a far cry from current error rates between 10^-3 and 10^-2 per operation [3].

AlphaQubit: An AI-Powered Solution

AlphaQubit employs a sophisticated neural network architecture based on the transformer model used in large language models. The system was trained in two stages:

- On hundreds of millions of simulated quantum error examples

- Fine-tuned on thousands of experimental samples from a Sycamore quantum processor [2]

This approach allows AlphaQubit to handle complex real-world quantum noise effects, including cross-talk between qubits, leakage, and subtle error correlations [3].

Impressive Performance and Scalability

In tests, AlphaQubit demonstrated remarkable accuracy:

- 6% fewer errors than tensor network methods in large Sycamore experiments

- 30% improvement over faster but less accurate traditional techniques [2][4]

Importantly, AlphaQubit maintained high accuracy across quantum systems ranging from 17 to 241 qubits, suggesting potential scalability to larger systems necessary for practical quantum computing [3].

The Road Ahead

While AlphaQubit represents a significant breakthrough, challenges remain:

- Speed optimization: The system is currently too slow for real-time error correction in fast superconducting quantum processors [3].

- Scalability: Training for larger code distances remains challenging due to increased complexity [3].

- Integration with existing quantum hardware [4].

Google DeepMind plans to collaborate with universities and industry partners to refine AlphaQubit and explore its applications across different quantum computing platforms [4].

Implications for the Future of Quantum Computing

AlphaQubit's success marks a crucial step towards fault-tolerant quantum computing. By significantly improving error correction, it brings us closer to realizing the potential of quantum computers in fields such as drug discovery, material design, and fundamental physics [3].

The synergy between AI and quantum computing demonstrated by AlphaQubit could create a powerful feedback loop of technological advancement. As quantum computers become more reliable through AI-assisted error correction, they could, in turn, help develop more sophisticated AI systems [3].

While practical quantum computing is not yet a reality, AlphaQubit's breakthrough suggests that the long-promised potential of quantum computers may be closer to fruition than ever before.


Summarized by Navi
