Google DeepMind's Gemini Robotics 1.5: A Leap Towards 'Thinking' AI-Powered Robots

11 Sources

11 Sources

[1]

Google DeepMind unveils its first "thinking" robotics AI

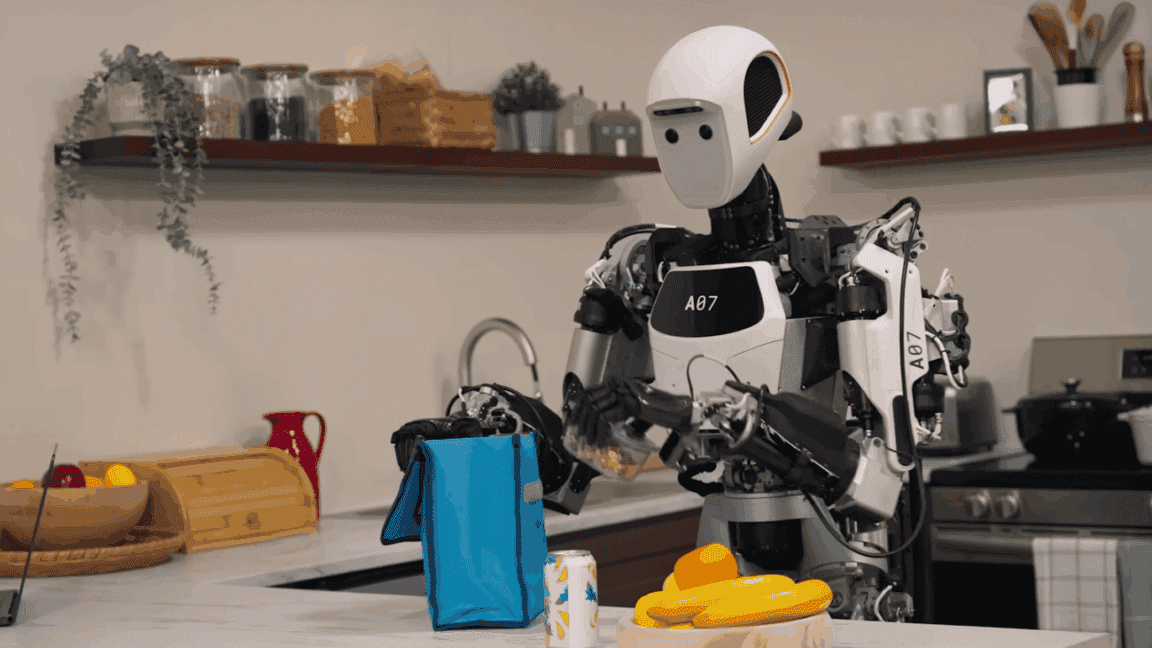

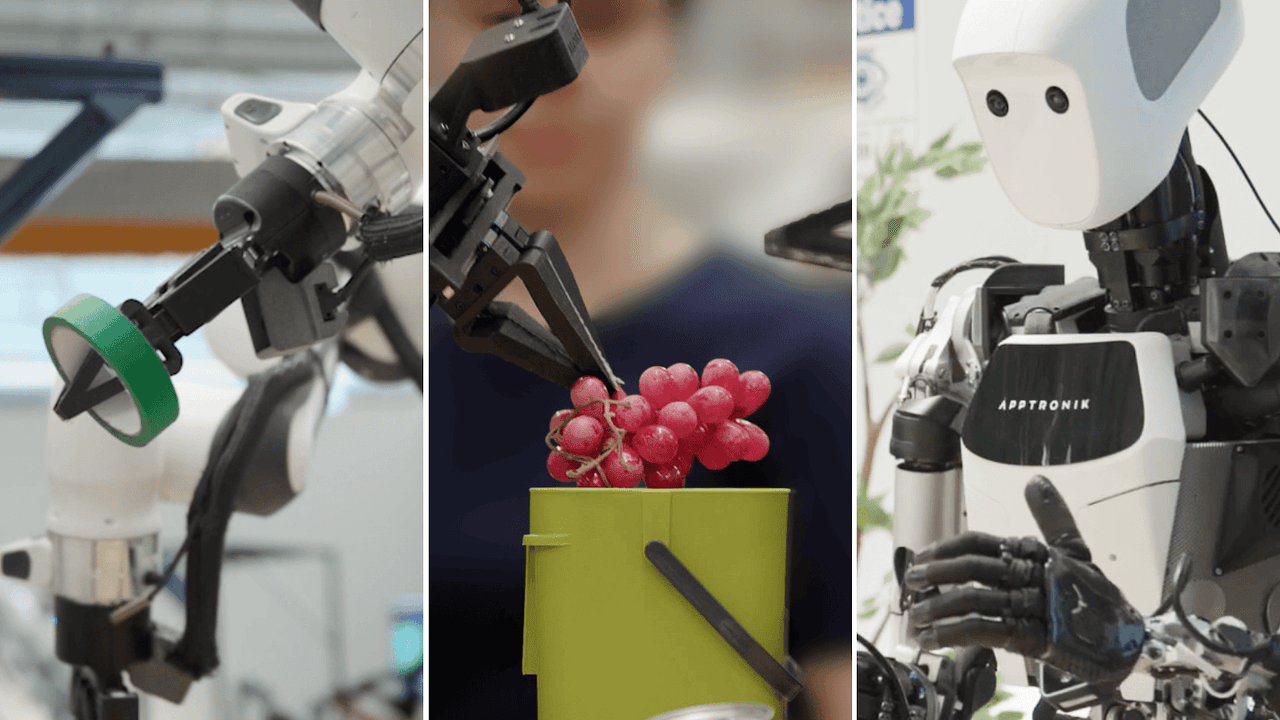

Generative AI systems that create text, images, audio, and even video are becoming commonplace. In the same way AI models output those data types, they can also be used to output robot actions. That's the foundation of Google DeepMind's Gemini Robotics project, which has announced a pair of new models that work together to create the first robots that "think" before acting. Traditional LLMs have their own set of problems, but the introduction of simulated reasoning did significantly upgrade their capabilities, and now the same could be happening with AI robotics. The team at DeepMind contends that generative AI is a uniquely important technology for robotics because it unlocks general functionality. Current robots have to be trained intensively on specific tasks, and they are typically bad at doing anything else. "Robots today are highly bespoke and difficult to deploy, often taking many months in order to install a single cell that can do a single task," said Carolina Parada, head of robotics at Google DeepMind. The fundamentals of generative systems make AI-powered robots more general. They can be presented with entirely new situations and workspaces without needing to be reprogrammed. DeepMind's current approach to robotics relies on two models: one that thinks and one that does. The two new models are known as Gemini Robotics 1.5 and Gemini Robotics-ER 1.5. The former is a vision-language-action (VLA) model, meaning it uses visual and text data to generate robot actions. The "ER" in the other model stands for embodied reasoning. This is a vision-language model (VLM) that takes visual and text input to generate the steps needed to complete a complex task. The thinking machines Gemini Robotics-ER 1.5 is the first robotics AI capable of simulated reasoning like modern text-based chatbots -- Google likes to call this "thinking," but that's a bit of a misnomer in the realm of generative AI. DeepMind says the ER model achieves top marks in both academic and internal benchmarks, which shows that it can make accurate decisions about how to interact with a physical space. It doesn't undertake any actions, though. That's where Gemini Robotics 1.5 comes in. Imagine that you want a robot to sort a pile of laundry into whites and colors. Gemini Robotics-ER 1.5 would process the request along with images of the physical environment (a pile of clothing). This AI can also call tools like Google search to gather more data. The ER model then generates natural language instructions, specific steps that the robot should follow to complete the given task. Gemini Robotics 1.5 (the action model) takes these instructions from the ER model and generates robot actions while using visual input to guide its movements. But it also goes through its own thinking process to consider how to approach each step. "There are all these kinds of intuitive thoughts that help [a person] guide this task, but robots don't have this intuition," said DeepMind's Kanishka Rao. "One of the major advancements that we've made with 1.5 in the VLA is its ability to think before it acts." Both of DeepMind's new robotic AIs are built on the Gemini foundation models but have been fine-tuned with data that adapts them to operating in a physical space. This approach, the team says, gives robots the ability to undertake more complex multi-stage tasks, bringing agentic capabilities to robotics. The DeepMind team tests Gemini robotics with a few different machines, like the two-armed Aloha 2 and the humanoid Apollo. In the past, AI researchers had to create customized models for each robot, but that's no longer necessary. DeepMind says that Gemini Robotics 1.5 can learn across different embodiments, transferring skills learned from Aloha 2's grippers to the more intricate hands on Apollo with no specialized tuning. All this talk of physical agents powered by AI is fun, but we're still a long way from a robot you can order to do your laundry. Gemini Robotics 1.5, the model that actually controls robots, is still only available to trusted testers. However, the thinking ER model is now rolling out in Google AI Studio, allowing developers to generate robotic instructions for their own physically embodied robotic experiments.

[2]

Google DeepMind's new AI models can search the web to help robots complete tasks

Google DeepMind says its upgraded AI models enable robots to complete more complex tasks -- and even tap into the web for help. During a press briefing, Google DeepMind's head of robotics, Carolina Parada, told reporters that the company's new AI models work in tandem to allow robots to "think multiple steps ahead" before taking action in the physical world. The system is powered by the newly launched Gemini Robotics 1.5 alongside the embodied reasoning model, Gemini Robotics-ER 1.5, which are updates to AI models that Google DeepMind introduced in March. Now robots can perform more than just singular tasks, such as folding a piece of paper or unzipping a bag. They can now do things like separate laundry by dark and light colors, pack a suitcase based on the current weather in London, as well as help someone sort trash, compost, and recyclables based on a web search tailored to a location's specific requirements. "The models up to now were able to do really well at doing one instruction at a time in a way that is very general," Parada said. "With this update, we're now moving from one instruction to actually genuine understanding and problem-solving for physical tasks." To do this, robots can use the upgraded Gemini Robotics-ER 1.5 model to form an understanding of their surroundings, and use digital tools like Google Search to find more information. Gemini Robotics-ER 1.5 then translates those findings into natural language instructions for Gemini Robotics 1.5, allowing the robot to use the model's vision and language understanding to carry out each step. Additionally, Google DeepMind announced that Gemini Robotics 1.5 can help robots "learn" from each other, even if they have different configurations. Google DeepMind found that tasks presented to the ALOHA2 robot, which consists of two mechanical arms, "just work" on the bi-arm Franka robot, as well as Apptronik's humanoid robot Apollo. "This enables two things for us: one is to control very different robots -- including a humanoid -- with a single model," Google DeepMind software engineer Kanishka Rao said during the briefing. "And secondly, skills that are learned on one robot can now be transferred to another robot." As part of the update, Google DeepMind is rolling out Gemini Robotics-ER 1.5 to developers through the Gemini API in Google AI Studio, while only select partners can access Gemini Robotics 1.5.

[3]

Google DeepMind unveils new robotics AI model that can sort laundry

Google DeepMind has unveiled artificial intelligence models that further advance reasoning capabilities in robotics, enabling them to solve harder problems and complete more complicated real world tasks like sorting laundry and recycling rubbish. The company's new robotics models, called Gemini Robotics 1.5 and Gemini Robotics-ER 1.5, are designed to help robots complete multi-step tasks by "thinking" before they act, as part of the tech industry's push to make the general-purpose machines more useful in the everyday world. According to Google DeepMind, a robot trained using its new model was able to plan how to complete tasks that might take several minutes, such as folding laundry into different baskets based on colour. The development comes as tech groups, including OpenAI and Tesla, are racing to integrate AI models into robots in the hope that they could transform a range of industries, from healthcare to manufacturing. "Models up to now were able to do really well at doing one instruction at a time," said Carolina Parada, senior director and head of robotics at Google DeepMind. "We're now moving from one instruction to actually genuine understanding and problem solving for physical tasks." In March, Google DeepMind unveiled the first iteration of these models, which took advantage of the company's Gemini 2.0 system to help robots adjust to different new situations, respond quickly to verbal instructions or changes in their environment, and be dexterous enough to manipulate objects. While that version was able to reason how to complete tasks, such as folding paper or unzipping a bag, the latest model can follow a series of instructions and also use tools such as Google search to help it solve problems. In one demonstration, a Google DeepMind researcher asked the robot to pack a beanie into her bag for a trip to London. The robot was also able to tell the researcher that it was going to rain for several days during the trip, and so the robot also packed an umbrella into the bag. The robot was also able to sort rubbish into appropriate recycling bins, by first using online tools to figure out it was based in San Francisco, and then searching the web for the city's recycling guidelines. The Gemini Robotics 1.5 is a vision-language-action model, which combines several different inputs and then translates them into action. These systems are able to learn about the world through data downloaded from the internet. Ingmar Posner, professor of applied artificial intelligence at the University of Oxford, said learning from this kind of internet scale data could help robotics reach a "ChatGPT moment". But Angelo Cangelosi, co-director of the Manchester Centre for Robotics and AI, cautioned against calling what these robots are doing as real thinking. "It's just discovering regularities between pixels, between images, between words, tokens, and so on," he said. Another development with Google DeepMind's new system is a technique called "motion transfer", which allows one AI model to use skills that were designed for a specific type of robot body, such as robotic arms, and transfer it to another, such as a humanoid robot. Traditionally, to get robots to move around in a space and take action requires plenty of meticulous planning and coding, and this training was often specific to a particular type of robot, such as robotic arms. This "motion transfer" breakthrough could help solve a major bottleneck in AI robotics development, which is the lack of enough training data. "Unlike large language models that can be trained on the entire vast internet of data, robotics has been limited by the painstaking process of collecting real [data for robots]," said Kanishka Rao, principal software engineer of robotics at Google DeepMind. The company said it still needed to overcome a number of hurdles in the technology. This included creating the ability for robots to learn skills by watching videos of humans doing tasks. It also said robots needed to become more dexterous as well as reliable and safe before they could be rolled out into environments where they interact with humans. "One of the major challenges of building general robots is that things that are intuitive for humans are actually quite difficult for robots," said Rao.

[4]

Gemini Robotics 1.5 brings AI agents into the physical world

We're powering an era of physical agents -- enabling robots to perceive, plan, think, use tools and act to better solve complex, multi-step tasks. Earlier this year, we made incredible progress bringing Gemini's multimodal understanding into the physical world, starting with the Gemini Robotics family of models. Today, we're taking another step towards advancing intelligent, truly general-purpose robots. We're introducing two models that unlock agentic experiences with advanced thinking: These advances will help developers build more capable and versatile robots that can actively understand their environment to complete complex, multi-step tasks in a general way. Starting today, we're making Gemini Robotics-ER 1.5 available to developers via the Gemini API in Google AI Studio. Gemini Robotics 1.5 is currently available to select partners. Read more about building with the next generation of physical agents on the Developer blog. Most daily tasks require contextual information and multiple steps to complete, making them notoriously challenging for robots today. For example, if a robot was asked, "Based on my location, can you sort these objects into the correct compost, recycling and trash bins?" it would need to search for relevant local recycling guidelines on the internet, look at the objects in front of it and figure out how to sort them based on those rules -- and then do all the steps needed to completely put them away. So, to help robots complete these types of complex, multi-step tasks, we designed two models that work together in an agentic framework. Our embodied reasoning model, Gemini Robotics-ER 1.5, orchestrates a robot's activities, like a high-level brain. This model excels at planning and making logical decisions within physical environments. It has state-of-the-art spatial understanding, interacts in natural language, estimates its success and progress, and can natively call tools like Google Search to look for information or use any third-party user-defined functions. Gemini Robotics-ER 1.5 then gives Gemini Robotics 1.5 natural language instructions for each step, which uses its vision and language understanding to directly perform the specific actions. Gemini Robotics 1.5 also helps the robot think about its actions to better solve semantically complex tasks, and can even explain its thinking processes in natural language -- making its decisions more transparent.

[5]

Google's Robots Can Now Think, Search the Web and Teach Themselves New Tricks - Decrypt

From packing suitcases to sorting trash, robots powered by Gemini-ER 1.5 showed early steps toward general-purpose intelligence. Google DeepMind rolled out two AI models this week that aim to make robots smarter than ever. Instead of focusing on following comments, the updated Gemini Robotics 1.5 and its companion Gemini Robotics-ER 1.5 make the robots think through problems, search the internet for information, and pass skills between different robot agents. According to Google, these models mark a "foundational step that can navigate the complexities of the physical world with intelligence and dexterity" "Gemini Robotics 1.5 marks an important milestone toward solving AGI in the physical world," Google said in the announcement. "By introducing agentic capabilities, we're moving beyond models that react to commands and creating systems that can truly reason, plan, actively use tools, and generalize." And this term "generalization" is important because models struggle with it. The robots powered by these models can now handle tasks like sorting laundry by color, packing a suitcase based on weather forecasts they find online, or checking local recycling rules to throw away trash correctly. Now, as a human, you may say, "Duh, so what?" But to do this, machines require a skill called generalization -- the ability to apply knowledge to new situations. Robots -- and algorithms in general -- usually struggle with this. For example, if you teach a model to fold a pair of pants, it will not be able to fold a t-shirt unless engineers programmed every step in advance. The new models change that. They can pick up on cues, read the environment, make reasonable assumptions, and carry out multi-step tasks that used to be out of reach -- or at least extremely hard -- for machines. But better doesn't mean perfect. For example, in one of the experiments, the team showed the robots a set of objects and asked them to send them into the correct trash. The robots used their camera to visually identify each item, pull up San Francisco's latest recycling guidelines online, and then place them where they should ideally go, all on its own, just as a local human would. This process combines online search, visual perception, and step-by-step planning -- making context-aware decisions that go beyond what older robots could achieve. The registered success rate was between 20% to 40% of the time; not ideal, but surprising for a model that was not able to understand those nuances ever before. The two models split the work. Gemini Robotics-ER 1.5 acts like the brain, figuring out what needs to happen and creating a step-by-step plan. It can call up Google Search when it needs information. Once it has a plan, it passes natural language instructions to Gemini Robotics 1.5, which handles the actual physical movements. More technically speaking, the new Gemini Robotics 1.5 is a vision-language-action (VLA) model that turns visual information and instructions into motor commands, while the new Gemini Robotics-ER 1.5 is a vision-language model (VLM) that creates multistep plans to complete a mission. When a robot sorts laundry, for instance, it internally reasons through the task using a chain of thought: understanding that "sort by color" means whites go in one bin and colors in another, then breaking down the specific motions needed to pick up each piece of clothing. The robot can explain its reasoning in plain English, making its decisions less of a black box. Google CEO Sundar Pichai chimed in on X, noting that the new models will enable robots to better reason, plan ahead, use digital tools like search, and transfer learning from one kind of robot to another. He called it Google's "next big step towards general-purpose robots that are truly helpful." The release puts Google in a spotlight shared with developers like Tesla, Figure AI and Boston Dynamics, though each company is taking different approaches. Tesla focuses on mass production for its factories, with Elon Musk promising thousands of units by 2026. Boston Dynamics continues pushing the boundaries of robot athleticism with its backflipping Atlas. Google, meanwhile, bets on AI that makes robots adaptable to any situation without specific programming. The timing matters. American robotics companies are pushing for a national robotics strategy, including establishing a federal office focused on promoting the industry at a time when China is making AI and intelligent robots a national priority. China is the world's largest market for robots that work in factories and other industrial environments, with about 1.8 million robots operating in 2023, according to the Germany-based International Federation of Robotics. DeepMind's approach differs from traditional robotics programming, where engineers meticulously code every movement. Instead, these models learn from demonstration and can adapt on the fly. If an object slips from a robot's grasp or someone moves something mid-task, the robot adjusts without missing a beat. The models build on DeepMind's earlier work from March, when robots could only handle single tasks like unzipping a bag or folding paper. Now they're tackling sequences that would challenge many humans -- like packing appropriately for a trip after checking the weather forecast. For developers wanting to experiment, there's a split approach to availability. Gemini Robotics-ER 1.5 launched Thursday through the Gemini API in Google AI Studio, meaning any developer can start building with the reasoning model. The action model, Gemini Robotics 1.5, remains exclusive to "select" (meaning "rich," probably) partners.

[6]

Google DeepMind adds agentic AI models to robots

'This is a foundational step toward building robots that can navigate the complexities of the physical world with intelligence and dexterity,' said DeepMind's Carolina Parada. Google DeepMind has revealed two new robotics AI models that add agentic capabilities such as multi-step processing to robots. The models - Gemini Robotics 1.5 and Gemini Robotics-ER 1.5 - were introduced yesterday (25 September) in a blogpost where DeepMind's senior director and head of robotics Carolina Parada described their functionalities. Gemini Robotics 1.5 is a vision-language-action (VLA) model that turns visual information and instructions into motor commands for a robot to perform a task, while Gemini Robotics-ER 1.5 is a vision-language model (VLM) that specialises in understanding physical spaces and can create multi-step processes to complete a task. The VLM model can also natively call tools such as Google Search to look for information or use any third-party user-defined functions. The Gemini Robotics-ER 1.5 model is now available to developers through the Gemini API in Google AI Studio, while the Gemini Robotics 1.5 model is currently available to select partners. The two models are designed to work together to ensure a robot can complete an objective with multiple parameters or steps. The VLM model basically acts as the orchestrator for the robot, giving the VLA model natural language instructions. The VLA model then uses its vision and language understanding to directly perform the specific actions and adapt to environmental parameters if necessary. "Both of these models are built on the core Gemini family of models and have been fine-tuned with different datasets to specialise in their respective roles," said Parada. "When combined, they increase the robot's ability to generalise to longer tasks and more diverse environments." The DeepMind team demonstrated the models' capabilities in a YouTube video by instructing a robot to sort laundry into different bins according to colour, with the robot separating white clothes from coloured clothes and placing the clothes into the allocated bin. A major talking point of the VLA model is its ability to learn across different "embodiments". According to Parada, the model can transfer motions learned from one robot to another, without needing to specialise the model to each new embodiment. "This breakthrough accelerates learning new behaviours, helping robots become smarter and more useful," she said. Parada claimed that the release of Gemini Robotics 1.5 marks an "important milestone" towards artificial general intelligence - also referred to as human‑level intelligence AI - in the physical world. "By introducing agentic capabilities, we're moving beyond models that react to commands and creating systems that can truly reason, plan, actively use tools and generalise," she said. "This is a foundational step toward building robots that can navigate the complexities of the physical world with intelligence and dexterity, and ultimately, become more helpful and integrated into our lives." Google DeepMind first revealed its robotics projects at the start of this year, and has been steadily revealing new milestones in the months since. In March, the company first unveiled its Gemini Robotics project. At the time of the announcement, the company wrote about its belief that AI models for robotics need three principal qualities: they have to be general (meaning adaptive), interactive and dexterous. Don't miss out on the knowledge you need to succeed. Sign up for the Daily Brief, Silicon Republic's digest of need-to-know sci-tech news.

[7]

Google's newest AI models make robots more intelligent and capable than ever before - SiliconANGLE

Google's newest AI models make robots more intelligent and capable than ever before Google LLC's DeepMind research unit today announced a major update to a couple of its artificial intelligence models, which are designed to make robots more intelligent. With the update, intelligent robots can now perform more complex, multistep tasks and even search the web for information to aid in their endeavors. The newly released models include Gemini Robotics 1.5, which drives the robots, and Gemini Robotics-ER 1.5, which is an embodied reasoning model that helps them to think. DeepMind originally released these models in March, but at the time they were only capable of performing singular tasks, such as unzipping a bag or folding a piece of paper. But now they can do a lot more. For instance, they can separate clothes in a laundry basket by light and dark colors, and they can pack a suitcase for someone, choosing clothes that are suitable for the predicted weather conditions in London or New York, DeepMind said. For the latter task they'll need to search the web for the latest weather forecast. They can also search the web for information they need to perform other tasks, such as sorting out the recyclables in a trash basket, based on the guidelines of whatever location they're in. In a blog post, DeepMind Head of Robotics Carolina Parada said the models will help developers to build "more capable and versatile robots" that actively understand their environments. In a press briefing, Parada added that the two models work in tandem with each other, giving robots the ability to think multiple steps ahead before they start taking actions. "The models up to now were able to do really well at doing one instruction at a time in a way that is very general," she said. "With this update, we're now moving from one instruction to actually genuine understanding and problem-solving for physical tasks." Gemini Robotics 1.5 and Gemini Robotics-ER 1.5 are known as "vision-language-action" or VLA models, but they're designed to do different things. The former transforms visual information and instructions into motor commands that enable robots to perform tasks. It thinks before it takes actions, and shows this thinking process, which helps robots to assess and complete complex tasks in the most efficient way. As for Gemini Robotics-ER 1.5, this is designed to reason about the physical environment it's operating in. It has the ability to use digital tools such as a web browser, and then create detailed, multi-step plans on how to fulfill a specific task or mission. Once it has a plan ready, it passes it on to Gemini Robotics 1.5 to carry it out. Parada said the models can also "learn" from each other, even if they're configured to run on different robots. In tests, DeepMind found that tasks only assigned to the ALOHA2 robot, which has two mechanical arms, could later be performed just as well by the bi-arm Franka robot and Apptronik's humanoid robot Apollo. "This enables two things for us," Parada said. "One is to control very different robots, including a humanoid, with a single model. And secondly, skills that are learned on one robot can now be transferred to another robot." Google said Gemini Robotics-ER 1.5 is being made available to any developer that wants to experiment with it through the Gemini application programming interface in Google AI Studio, which is a platform for building and fine-tuning AI models and integrating them with applications. Developers can read this resource to get started with building robotic AI applications. Gemini Robotics 1.5 is more exclusive, and is only being made available to "select partners" at this time, Parada said.

[8]

Google New AI Models Let a Robot Think Before It Acts

Gemini Robotics-ER 1.5 is capable of reasoning and acts as orchestrator Google DeepMind on Thursday unveiled two new artificial intelligence (AI) models in the Gemini Robotics family. Dubbed Gemini Robotics-ER 1.5 and Gemini Robotics 1.5, the two models work in tandem to power general-purpose robots. Compared to any embodied AI models created by the Mountain View-based tech giant, these models offer higher reasoning, vision, and action capabilities across various real-world scenarios. The ER 1.5 model is designed to be the planner or orchestrator, whereas the 1.5 model can perform tasks based on natural language instructions. Google DeepMind's Gemini AI Models Can Act as the Brain of a Robot In a blog post, DeepMind introduced and detailed the two new Gemini Robotics models that are designed for general-purpose robots operating in the physical world. Generative AI technology has brought about a major breakthrough in robotics, replacing the traditional interface to communicate with a robot with natural language instructions. However, when it comes to implementing AI models as the brain of a robot, many challenges remain. For instance, the large language models themselves struggle to understand the spatial and temporal dimensions or make precise movements for different object shapes. This issue existed because a single AI model was both thinking up the plan and executing the plan, making the process error-prone and laggy. Google's solution to this problem is a two-model setup. Here, the Gemini Robotics-ER 1.5, a vision-language model (VLM), comes with advanced reasoning and tool-calling capabilities. It can create multi-step plans for a task. The company says the model excels in making logical decisions within physical environments, and can natively call tools like Google Search to search for information. It is also said to achieve state-of-the-art (SOTA) performance on various spatial understanding benchmarks. After the plan has been created, the Gemini Robotics 1.5 springs into action. The vision-language-action (VLA) model can turn visual information and instructions into motor commands, enabling a robot to perform tasks. The model first thinks and creates the most efficient path towards completing an action, and then executes it. It can also explain its thinking process in natural language to bring more transparency. Google claims this system will allow robots to better understand complex and multi-step commands and then execute them in a single flow. For instance, if a user asks a robot to sort multiple objects into the correct compost, recycling and trash bins, the AI system can first search for local recycling guidelines on the Internet, analyse the objects in front, make a plan to sort them, and execute the action. Notably, the tech giant says that the AI models were designed to work in robots of any shape and size due to their high spatial understanding and wider adaptability. Currently, the orchestrator Gemini Robotics-ER 1.5 is available to developers via the Gemini application programming interface (API) in Google AI Studio. The VLA model, on the other hand, is only available to select partners.

[9]

Google DeepMind Unveils Gemini Robotics 1.5 And ER 1.5 To Help Robots Reason, Plan, And Learn Across Different Tasks

Robots have traditionally been preprogrammed machines that carry out strictly the instructions given to them. But it seems like that is about to change with the launch of Gemini Robotics 1.5 and Gemini Robotics-ER 1.5. Google DeepMind seems to be taking the leap and pushing for a new era by working on more adaptive robots that can reason, learn, and even solve real-world problems. Tech giants seem to be constantly looking for ways to explore the potential of technology by bringing in more advanced AI models and solutions. While robots have had limited application in terms of performing repetitive tasks under controlled situations, such as moving boxes or assembling car parts, Google DeepMind is determined to equip its latest models to handle complex tasks or even look up information online if there is a need. Google introduced two new AI models, Gemini Robotics 1.5 and Gemini Robotics-ER 1.5, during a recent update on its robotic direction. The ER model is meant to focus on reasoning and breaking down tasks by finding more information from the web, while the robotics model is meant to carry out actions. Carolina Parada, the lead robotics head at Google DeepMind, detailed this approach and explained how, due to this pairing, the robots are able to think multiple steps ahead and not focus on single steps alone. So now, with the updated Gemini Robotics models, you could not only get help with loading suitcases for vacations, but also get assistance with packing choices, checking the weather, and basically planning the trip better. Since two-model systems are at work, it allows for a human way of operating that first plans and then acts. The major upgrade is that knowledge transfer, meaning skills developed on one robot, can be transferred to another, even if it is built or designed differently. The implications of this new Gemini-powered robot can be huge, especially in the healthcare sector, where assistive robots can help according to different patient needs. Even for personal use, it can end up being a useful assistant. However, with technological leaps, challenges do remain, especially with AI models rapidly progressing, and this would not be free from complications either. Data privacy, reliability, and safety questions arise, so Google would need to rigorously conduct tests before enabling large-scale deployment. One thing is for certain: Google DeepMind is determined to transform robots from tools into assistants that can work alongside humans by teaching them how to think and act.

[10]

Google DeepMind unveils new AI models that use the web to aid robots (GOOG:NASDAQ)

Google DeepMind (NASDAQ:GOOG) (NASDAQ:GOOGL) unveiled two new artificial intelligence models on Thursday that the tech company says will allow robots to utilize the internet to perform certain tasks. The models, known as Gemini Robotics 1.5 and Gemini Robotics-ER 1.5, will allow the Gemini Robotics models are designed to help robots think, plan, and complete complex physical tasks more transparently and effectively, enhancing their general problem-solving capabilities. Gemini Robotics-ER 1.5 allows developers to build robots that reason, access digital tools for information, and execute detailed multi-step tasks using internet resources. Alphabet aims to lead in the development of versatile, general-purpose robots that can transfer learning between platforms, thereby expanding market opportunities and technological leadership.

[11]

Gemini Robotics 1.5: DeepMind's step toward robots that think, plan & act like humans

Robots reason, strategize, and act safely with Gemini Robotics When robots learn to reason before moving, the line between artificial intelligence and human-like agency begins to blur. With the launch of Gemini Robotics 1.5, DeepMind is positioning its latest AI systems as a bridge between language models and machines that can physically act in the real world. The release introduces two complementary models: Gemini Robotics 1.5, a vision-language-action system, and Gemini Robotics-ER 1.5, an embodied reasoning model. Together, they embody DeepMind's ambition to move from digital chatbots to agents that can think, plan, and manipulate objects in physical environments. Also read: OpenAI's GPT-5 matches human performance in jobs: What it means for work and AI Unlike many robotics models that map instructions directly to motions, Gemini Robotics 1.5 is designed to pause and plan. It generates natural-language reasoning chains before choosing its next move, breaking down complex instructions into smaller, safer substeps. This makes its decision-making process more transparent and, crucially, easier for developers and operators to trust. That reasoning capability is strengthened by Gemini Robotics-ER 1.5, which focuses on high-level planning. It can map spaces, weigh alternatives, call external tools like search, and orchestrate the VLA model's movements. In essence, one model acts as the "mind," while the other executes as the "body." A longstanding problem in robotics is that machines differ widely in shape and mechanics - an arm designed for factory assembly is nothing like a humanoid assistant. DeepMind says Gemini Robotics 1.5 can generalize across different robot embodiments, applying what it learns on one platform to another without needing to be retrained from scratch. Demonstrations include transferring skills from research robots like ALOHA 2 to humanoid prototypes and commercial robotic arms. This adaptability could lower the barrier to deploying AI-driven robots in industries where diversity of hardware has slowed progress. In testing, Gemini Robotics-ER 1.5 set state-of-the-art results across 15 embodied reasoning benchmarks, covering spatial understanding, planning, and interactive problem-solving. While benchmarks are only a proxy for real-world reliability, they suggest DeepMind's models are advancing faster than earlier embodied AI systems. Also read: Unitree R1: The $5,900 humanoid robot that may change everything Bringing AI into the physical world raises risks far beyond the digital domain. DeepMind highlights safety as a core feature: Gemini Robotics systems are trained to reason about potential hazards before acting, while also constrained by low-level safeguards such as collision avoidance. To push this further, DeepMind has expanded its ASIMOV benchmark, a test suite for evaluating semantic safety in robotics. The goal is to ensure that robots not only perform tasks correctly but also align with human values and physical safety standards. Access to the models will be staged. Gemini Robotics-ER 1.5 is being rolled out via the Gemini API in Google AI Studio, allowing developers to start building embodied AI applications. The action-focused Gemini Robotics 1.5 is initially available to select partners, reflecting both its experimental status and the sensitivity of giving machines the power to act in the world. The launch of Gemini Robotics 1.5 marks an inflection point in AI research. For years, large language models have dazzled in digital domains - answering questions, generating code, writing stories. DeepMind's new effort shows the next frontier: merging those reasoning abilities with machines capable of interacting with the messy, unpredictable real world. If successful, the technology could reshape industries from logistics to home assistance, and bring society closer to robots that can learn, adapt, and help in everyday life. But with greater agency comes greater responsibility, a fact DeepMind seems keenly aware of. As the company frames it, Gemini Robotics isn't just about smarter machines. It's about laying the foundations for robots that can think, plan, and act like humans safely.

Share

Share

Copy Link

Google DeepMind unveils new AI models that enable robots to 'think' before acting, complete complex tasks, and use web searches for problem-solving. This advancement marks a significant step towards general-purpose robots with potential applications across various industries.

Google DeepMind's AI Breakthrough in Robotics

Google DeepMind has unveiled a pair of groundbreaking AI models that promise to revolutionize the field of robotics. The new models, Gemini Robotics 1.5 and Gemini Robotics-ER 1.5, work in tandem to create robots that can 'think' before acting, marking a significant leap towards general-purpose intelligent machines

1

.

Source: Wccftech

The 'Thinking' and 'Doing' Models

Gemini Robotics-ER 1.5 serves as the 'brain' of the system, capable of simulated reasoning similar to modern text-based chatbots. This model processes requests, analyzes the physical environment, and generates natural language instructions for complex tasks

1

.Gemini Robotics 1.5, on the other hand, is a vision-language-action (VLA) model that translates these instructions into physical actions. It uses visual input to guide its movements and goes through its own thinking process to approach each step

1

2

.Advanced Capabilities and Real-World Applications

The new models enable robots to complete more complex, multi-step tasks that were previously challenging for machines. Examples include:

- Sorting laundry by color

- Packing a suitcase based on weather forecasts

- Sorting trash, compost, and recyclables according to local guidelines

2

3

Source: DeepMind

Web Integration and Tool Usage

A key feature of the new system is its ability to use digital tools like Google Search to gather information for problem-solving. This allows robots to adapt to new situations and environments without requiring reprogramming

2

4

.

Source: The Verge

Skill Transfer and Generalization

The Gemini Robotics 1.5 model introduces a technique called 'motion transfer,' allowing skills learned on one robot to be transferred to another with different physical configurations. This breakthrough could help solve a major bottleneck in AI robotics development by reducing the need for extensive training data for each robot type

3

5

.Related Stories

Industry Impact and Future Prospects

This development puts Google in the spotlight alongside other robotics innovators like Tesla, Figure AI, and Boston Dynamics. While success rates for complex tasks are currently between 20% to 40%, the potential for improvement is significant

5

.Challenges and Limitations

Despite these advancements, several hurdles remain. The technology needs to become more dexterous, reliable, and safe before widespread deployment in human-interactive environments. Additionally, creating robots that can learn skills by watching human demonstrations is still a work in progress

3

.As the race to integrate AI models into robots intensifies, Google DeepMind's latest innovations represent a significant step towards creating truly intelligent, general-purpose robots that could transform various industries, from healthcare to manufacturing.

References

Summarized by

Navi

[1]

Related Stories

Recent Highlights

1

ByteDance Faces Hollywood Backlash After Seedance 2.0 Creates Unauthorized Celebrity Deepfakes

Technology

2

Microsoft AI chief predicts artificial intelligence will automate most white-collar jobs in 18 months

Business and Economy

3

Google reports state-sponsored hackers exploit Gemini AI across all stages of cyberattacks

Technology