Google Enhances Android's Live Captions with AI-Powered 'Expressive Captions'

6 Sources

[1]

Google Is Making Live Captions on Android More Expressive Using AI

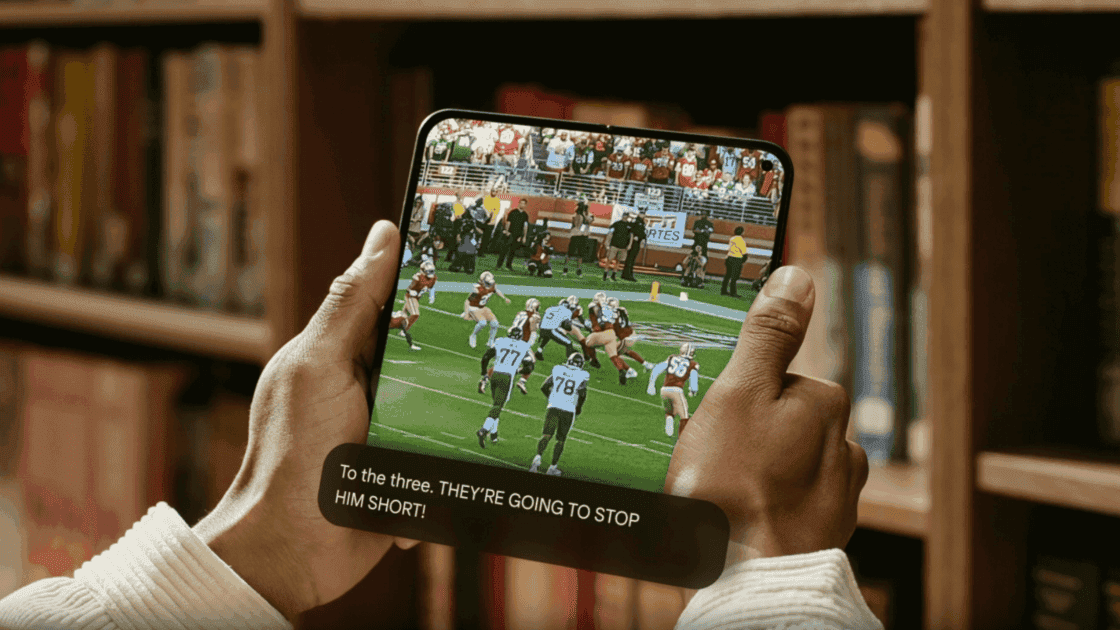

Google says AI processing for Live Captions occurs on-device

Google unveiled a new artificial intelligence (AI) upgrade called 'Expressive Captions' on Thursday. The feature is rolling out to Live Captions on Android. With it, users will see live captions of videos played anywhere on the device in a new format that better conveys the context behind the sounds. The AI feature conveys excitement, shouting, and loudness by showing text in all caps. Currently, Expressive Captions is available in English on Android 14 and Android 15 devices in the US.

The search giant shared details of the new AI feature, which is being added to Android's Live Captions, and said that while captions were first popularised in the 1970s as an accessibility tool for the deaf and hard-of-hearing community, their presentation has not changed in the last 50 years. Many people today use captions while streaming content in loud public spaces, to better understand what's being said, or while consuming content in a foreign language.

Noting the popularity of captions among Android users, Google said it is now using AI to expand the information that captions convey. With Expressive Captions, the live subtitles will be able to communicate things like tone, volume, and environmental cues, as well as human noises. "These small things make a huge difference in conveying what goes beyond words, especially for live and social content that doesn't have preloaded or high-quality captions," Google said.

One way Expressive Captions changes captions is by showing text in all capital letters to indicate the intensity of speech, be it excitement, loudness, or anger. The captions will also identify sounds such as sighing, grunting, and gasping, helping users better understand the nuances of speech. Further, they will capture ambient sounds in the foreground and background, such as applause and cheers.
Google says that Expressive Captions are part of Live Captions, which is built into the operating system and works across the device, no matter which app or interface the user is in. As a result, users can get real-time AI captions while watching live streams, social media posts, and memories in Google Photos, as well as videos shared on messaging platforms. Notably, the AI processing for Expressive Captions is done on-device, meaning users will see them even when the device is not connected to the Internet or is in airplane mode.

[2]

Android's Live Captions Can Now Understand Expressions

Android's Live Captions feature is a boon for some content, but because the captions are generated on the fly, the feature only goes as far as transcribing speech as-is. Now, Expressive Captions are here to give them a little more pop.

Google has introduced a new feature called Expressive Captions for Android. This new feature, powered by AI, acts as an extension to Live Captions and goes beyond traditional captions by conveying not just the words spoken but also the tone, volume, and even ambient sounds. The AI models developed by Google and DeepMind analyze audio in real time, translating it into stylized captions that reflect the speaker's emotions and the surrounding environment. Imagine seeing captions that not only show someone shouting "HAPPY BIRTHDAY!" in all caps but also indicate laughter, applause, or even a sigh. This added layer of information can be crucial for understanding the nuances of live and social content.

These kinds of expressions are common in manually transcribed captions, but having them in AI-generated captions will come in very handy, since you can pick up social cues that would otherwise only be available by listening to the audio. Being an extension of Live Captions, Expressive Captions are available at the system level for whatever you watch or listen to on your phone, such as a live social media stream or video messages sent through IM services.

Since it's AI, the captions will not be 100% perfect. It might capture a cue that's not there, or fail to capture others that are. It will likely need fine-tuning once the feature is available for everyone. Still, if you'd like to give it a shot, make sure to try it out now. Google says that the feature is rolling out now to any Android 14 and above smartphone that has Live Captions, but it might take a few weeks to land for everyone. Source: Google

[3]

Google's Live Captions can now use AI [gasp] to make 'Expressive Captions'

Google's Live Captions are becoming richer with new AI-driven "Expressive Captions" that convey more than basic language, including sounds and actions. Google is also bringing Gemini 1.5 to Image Q&A in the Lookout app.

Live Caption has been a staple of Google's Pixel lineup since 2019. The feature allows users to insert captions where there are normally none using the phone's Tensor SoC and onboard processing. When a voice is heard through a video or other media playing audio, the Pixel phone will pick up on that speech and display it as it hears it. It's useful for a variety of users, especially those who are deaf or hard of hearing.

Live Captions are getting an overhauled mode for processing audio more dynamically. Google announced that Expressive Captions would allow users to see the nuanced speech and actions in media through Live Captions using on-device AI. That includes decoding tone, volume, and environmental cues. The change will dynamically reflect the way speech is presented.

Google gives a couple of examples of how this will work. When someone yells something, that intensity is translated to captions in all caps, so the caption reflects the volume. Google's Expressive Captions can also decode vocal bursts, such as sighs and groans, detailing the little sounds in between words. Even ambient sounds are represented to fill in the blanks around speech.

In addition, Google announced that image descriptions can now be read aloud. With that, the company is bringing Gemini 1.5 Pro to the Lookout app, an app that aids the vision-impaired. The Q&A feature, which allows users to ask questions about an image, will now be a little more capable. An image can be described in a more natural voice via the Gemini model, with more surrounding information beyond a simple description.
It's noted that Google's expressive AI captions are a part of Live Caption, so there is no restriction to which Pixel devices can utilize it. If Live Caption is available, this upgrade will be reflected. Google does note that the feature will not be compatible with phone calls, though that might change over time.

[4]

Android's Expressive Captions Aim to Give You a Better Idea of What's Happening Onscreen

Google on Thursday debuted a new feature that makes captions more true to life. Called Expressive Captions, it not only relays what someone is saying in a video or livestream but can also convey how someone is saying it. For instance, if someone excitedly wishes you a "HAPPY BIRTHDAY!" the captions will appear in all-caps. You'll also see descriptions of ambient sounds like applause or music to get a fuller picture of the environment. Other expressions such as sighing, groaning or gasping will also be relayed via Expressive Captions. The new feature is part of Live Caption, which automatically generates real-time captions across media like videos, phone calls and audio messages. The feature is built into Android's operating system and works across your phone's apps, which means Expressive Captions can work with most things you watch like social media livestreams and video messages. And because captions are generated on-device, they're also available when you're on airplane mode or don't have an internet connection. Captions have traditionally been used by people who are deaf or hard of hearing to follow TV content. But in recent years, the use of captions has expanded across demographics as people opt to watch videos without sound on the subway, for instance, or seek to better understand what's being said in a movie or TV show. In fact, 70% of Gen Z users regularly watch TV with subtitles, according to online language tutor site Preply. But oftentimes, livestreams, social content and videos from friends and family don't include pre-loaded captions. 
Android and Google DeepMind teams came together to build Expressive Captions, which uses multiple AI models to create stylized captions that can label a wider range of sounds. The goal is to emulate how dynamic listening to audio can be. "It's just one way we're building for the real lived experiences of people with disabilities and using AI to build for everyone," Angana Ghosh, director of product management on Android, said in a blog post. Expressive Captions is available starting Thursday in the US in English on any Android device running Android 14 and above that supports Live Caption. It's just one of several updates Google is announcing for Android and Pixel devices. Google is also adding updates to its Lookout app, which can help blind and low-vision users identify objects and get more information about their surroundings. Lookout now adds Arabic to the dozens of languages it already supports and will tap Gemini AI models to power image descriptions and its Q&A mode that lets people ask follow-up questions about an image. It also includes auto-language detection and more natural-sounding voices on the app. The company is also adding more Gemini extensions to Android for apps like Utilities, Spotify, Messaging and Calling, making them easier to access through Google's virtual assistant. Those with a Pixel device get additional new features, like a Gemini Saved Info feature that lets you ask Gemini to remember your interests and preferences, so it can surface more helpful and relevant responses. There's also an update that lets you save content to Pixel Screenshots when using Circle to Search with a quick tap, making it easier to find later. You can also add credit cards or tickets you've screenshotted to your wallet, and Pixel Screenshots will automatically categorize your screenshots to keep things more organized. 
And finally, Simple View on Pixel makes seeing and navigating controls, apps and widgets easier by increasing your phone's font size and touch sensitivity. It also shows a simplified home screen layout with a preselected set of essential apps and increases the app grid to a four-by-four display. Simple View is available on Pixel 6 phones and newer. The addition of Expressive Captions is likely to make watching content without audio more engaging both for users who are deaf or hard of hearing, as well as anyone who chooses not to watch something with the volume up.

[5]

Connect and share in more ways with new Android features

Starting today, new AI features and more from Android can help you express yourself authentically and connect your digital life with real-world experiences. From audio captions that capture intensity and emotion, to image descriptions enhanced with Gemini, to clearer scans in Google Drive, check out the latest updates: Expressive Captions automatically capture the intensity and emotion of how someone is speaking -- from volume and tone to sounds that say more than words. You'll see things like the [whispering] of a juicy secret, the [cheers and applause] of a big win and the [groaning] after a dad joke. These will appear across your phone's apps on everything from streaming to social to video messages, only on Android [APPLAUSE]. Read more about Expressive Captions in our blog post.

[6]

Live Captions on Android can now help you read between the lines

The feature is available in the US for English on devices running Android 14 and above.

Google introduced Live Caption at Google I/O 2019. This underrated accessibility feature can create captions of any speech coming out of your device. While the implementation is quite good by itself, plain text on your screen does a poor job of capturing the intensity and emotions of the scene's audio. Google is now upgrading Live Caption with Expressive Captions, using AI to capture the intensity and emotion of words and sounds and display them through text.

Google introduces 'Expressive Captions', an AI-driven upgrade to Android's Live Captions feature, enhancing the captioning experience by conveying tone, volume, and environmental cues.

Google Introduces AI-Powered 'Expressive Captions' for Android

Google has unveiled a significant upgrade to its Live Captions feature on Android, introducing 'Expressive Captions' powered by artificial intelligence. This new feature aims to revolutionize the way captions convey information, going beyond mere transcription to capture the nuances of speech and ambient sounds [1].

Enhanced Caption Functionality

Expressive Captions utilize AI to interpret and represent various aspects of audio:

- Tone and Volume: The feature uses capitalization to indicate intensity, excitement, or anger in speech [2].

- Vocal Expressions: It identifies and describes non-verbal sounds such as sighing, grunting, and gasping [3].

- Ambient Sounds: Background noises like applause, cheers, or music are captured and described [4].
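The three caption conventions listed above can be illustrated with a minimal Python sketch. It is not Google's implementation: the `Segment` type, its `kind` and `intense` fields, and the `render_caption` helper are all hypothetical names, and the sketch assumes that some upstream audio model has already labelled each segment.

```python
from dataclasses import dataclass


# Hypothetical data model: an upstream audio model is assumed to have
# already classified each segment. Nothing here reflects Android's real API.
@dataclass
class Segment:
    kind: str              # "speech" or "sound"
    text: str              # spoken words, or a sound label such as "applause"
    intense: bool = False  # assumed flag for loud or excited speech


def render_caption(segments):
    """Join segments into one caption line using the conventions above."""
    parts = []
    for seg in segments:
        if seg.kind == "sound":
            # Vocal bursts and ambient sounds appear as bracketed labels.
            parts.append(f"[{seg.text}]")
        elif seg.intense:
            # Intense speech is shown in all caps.
            parts.append(seg.text.upper())
        else:
            parts.append(seg.text)
    return " ".join(parts)


example = [
    Segment("speech", "Happy birthday!", intense=True),
    Segment("sound", "cheers and applause"),
    Segment("speech", "Thanks, everyone."),
    Segment("sound", "sighing"),
]
caption = render_caption(example)
print(caption)
```

Keeping the rendering step separate from the (assumed) classification step mirrors the description in the sources, where AI models analyze the audio and the captions merely display the result.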

Technical Implementation and Availability

The AI processing for Expressive Captions occurs on-device, ensuring functionality even without an internet connection [1]. This feature is currently available in English on Android 14 and Android 15 devices in the US, integrated into the operating system's Live Captions functionality [5].

Broader Implications and User Benefits

While initially developed as an accessibility tool for the deaf and hard-of-hearing community, captions have gained widespread popularity among various user groups. Expressive Captions aim to enhance the viewing experience for:

- Users in noisy environments

- Those learning foreign languages

- Viewers of live and social content without pre-loaded captions [4]

AI Collaboration and Future Developments

The development of Expressive Captions involved collaboration between Android and Google DeepMind teams, utilizing multiple AI models to create dynamic, stylized captions [4]. While the feature is not yet perfect and may require fine-tuning, it represents a significant step forward in caption technology [2].

Additional Android Accessibility Updates

Alongside Expressive Captions, Google has announced other AI-driven accessibility features:

- Lookout App Enhancements: Integration of Gemini 1.5 Pro for improved image descriptions and Q&A capabilities [3].

- Simple View: A new feature for Pixel devices that simplifies screen layout and increases touch sensitivity [4].

These updates reflect Google's commitment to leveraging AI for improved accessibility and user experience across its Android ecosystem.

Summarized by Navi
