Google Gemini Introduces AI Image Detection Tool, But Coverage Remains Limited

8 Sources

[1]

Gemini's AI Image Detector Only Scratches the Surface. That's Not Good Enough

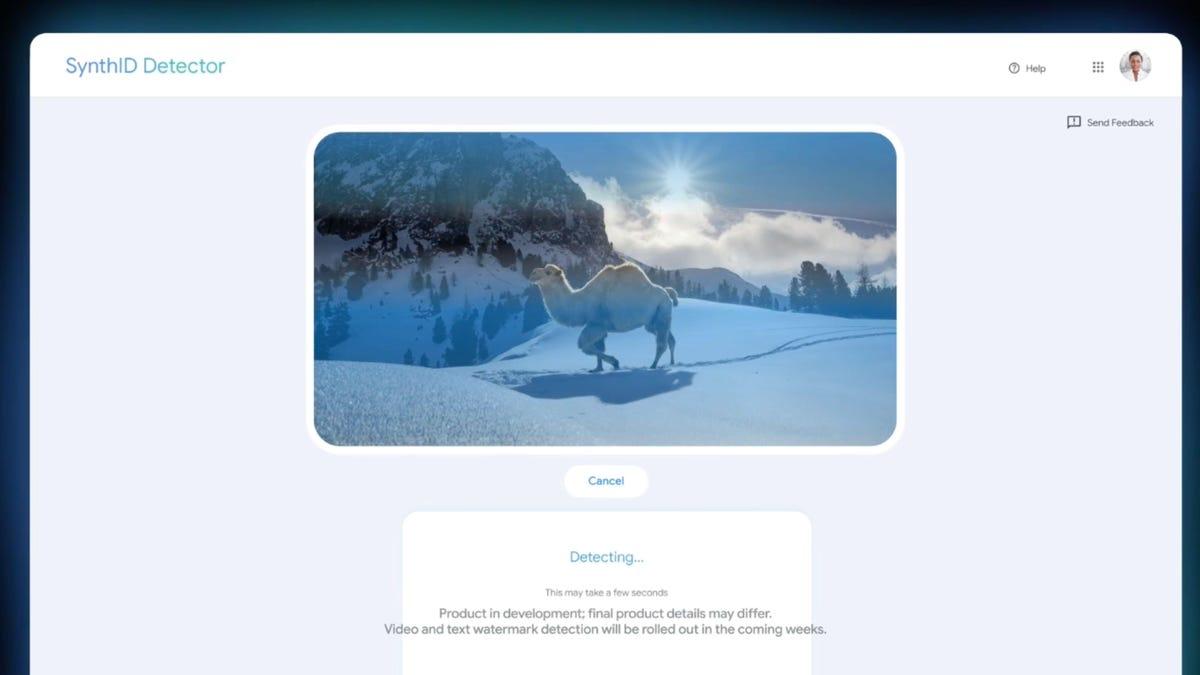

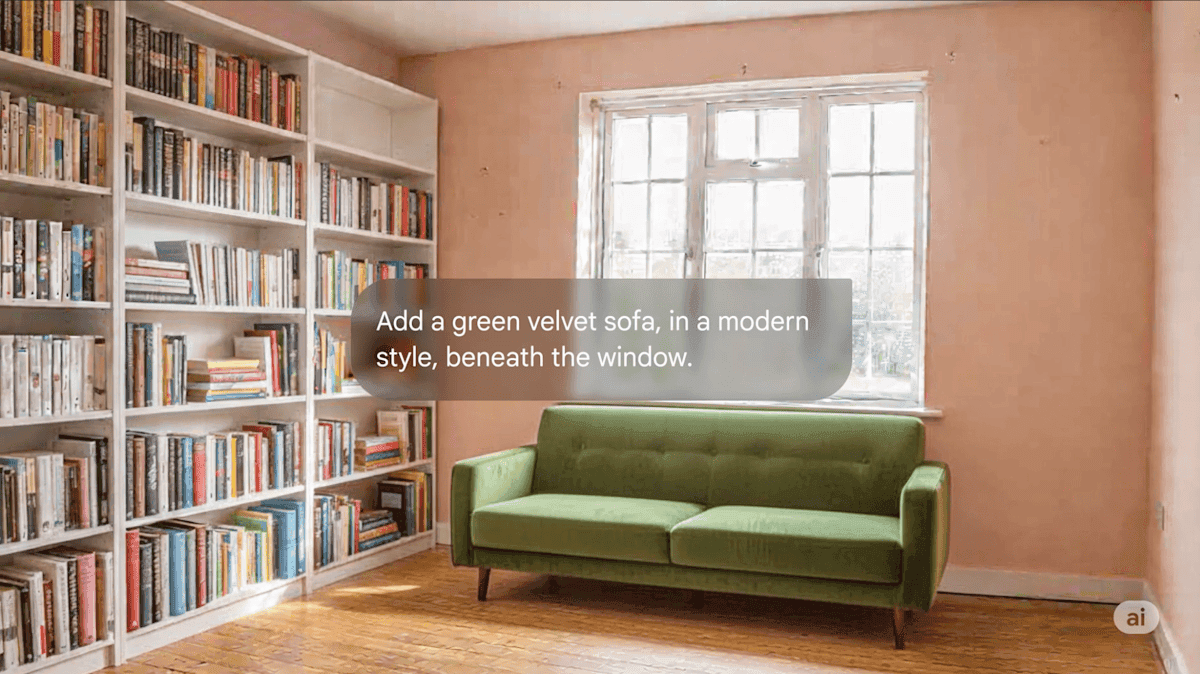

When you ask AI to look in the mirror, it doesn't always see itself. That's the sense you get when you ask it to determine whether an image is genuine or AI-generated.

Google last week took a stab at helping us distinguish real from deepfake, albeit an extremely limited one. In the Gemini app, you can share an image and ask if it's real, and Gemini will check for a SynthID -- a digital watermark -- to tell you whether or not it was made by Google's AI tools. (On the other hand, Google last week also rolled out Nano Banana Pro, its new image model, which makes it even harder to spot a fake with the naked eye.)

Within this limited scope, Google's reality check functions pretty well. Gemini works quickly and will tell you if something was made by Google's AI. In my testing, it even worked on a screenshot of an image. And the answer is quick and to the point -- yes, this image, or more than half of it at least, is fake.

But ask it about an image made by literally any other image generator and you won't get that smoking gun answer. What you get is a review of the evidence: The model looks for all of the typical tells of something being artificial. In this case, it's basically doing what we do with our own eyes, but we still can't totally trust its results.

As reliable and necessary as Google's SynthID check is, asking a chatbot to evaluate something that lacks a watermark is almost worthless. Google has provided a useful tool for checking the provenance of an image, but if we're going to be able to trust our own eyes on the internet again, every AI interface we use should be able to check images from every kind of AI model.

I hope that soon we'll be able to just drop an image into, say, Google Search and find out if it's fake. The deepfakes are getting too good not to have that reality check.

Checking images with chatbots is a mixed bag

There's very little to say about Google's SynthID check. When you ask Gemini (on the app) to evaluate a Google-generated image, it knows what it's looking at. It works. I'd like to see it rolled out across all the places Gemini appears -- like the browser version and Google Search -- and according to Google's blog post on the feature, that's already in the works.

The fact that Gemini in the browser doesn't have this functionality yet means we can see how the model itself (without SynthID) responds when asked if an AI-generated image is real.

I asked the browser version of Gemini to evaluate an infographic Google provided reporters as a handout showing its new Nano Banana Pro model in action. This was AI-generated -- and even said so in its metadata. Gemini in the app used SynthID to suss it out. Gemini in the browser was wishy-washy: It said the design could be from AI or a human designer. It even said its SynthID tool didn't find anything indicating AI. (Although when I asked it to try again, it said it encountered an error with the tool.) The bottom line? It couldn't tell.

What about other chatbots? I had Nano Banana Pro generate an image of a tuxedo cat lying on a Monopoly board. The image, at a glance, was plausibly realistic. Unsuspecting coworkers I sent it to thought it was my cat. But if you look more closely, you'll see the errors: For example, the Monopoly set makes no sense -- Park Place is in multiple wrong places and the colors are off.

I asked a variety of AI chatbots and models if the image was AI-generated and the answers were all over the place. Gemini on my phone figured it out instantly using the SynthID checker. Gemini 3, the higher-level reasoning model released this week, offered a detailed analysis showing why it was AI-generated. Gemini 2.5 Flash (the default model you get by picking "Fast") guessed it was a real photograph based on the level of detail and realism.

I tried ChatGPT twice on two different days and it gave me two different answers, one with an extensive explanation of how it's obviously real, and another with an equally long dissertation on why it's a fake. Claude, using the Haiku 4.5 and Sonnet 4.5 models, said it looked real.

When I tested images generated by non-Google AI tools, chatbots made their assessments based on the quality of the generation. Images with more obvious tells -- for instance, mismatched lighting and poorly rendered text -- were more reliably spotted as AI. But the theme was inconsistency. Really, the chatbots weren't any more accurate than just giving the image a deep, critical look with my own eyes. That's not good enough.

The future of AI detection

Google's newest tool charts one potential path forward, even if it only goes so far. Yes, one solution to the growing problem of deepfakes is having the ability to check an image in a chatbot app. But it needs to work for more images and more apps.

It should not require special knowledge to spot a fake. You shouldn't have to find a bespoke app, parse metadata or know offhand what errors might indicate an AI-generated image. As we've seen from the dramatic improvement in image and video models just in the past few months, those tells may be foolproof today and useless tomorrow.

If you run across an image on the internet and you have doubts about it, you should be able to go to Gemini, or Google Search, or ChatGPT, or Claude, or whatever tool you choose, and have it do a scan for a universal, hard-to-remove digital watermark. Work toward this is happening through the Coalition for Content Provenance and Authenticity, or C2PA. The result should be something that makes it easy for ordinary people to check without needing a special app or expertise. It should be available in something you use every day. And when you ask AI, it should know where to look.

We shouldn't have to guess what's real and what isn't. AI companies have a responsibility to give us a foolproof, universal reality check. Maybe this is a way forward.

[2]

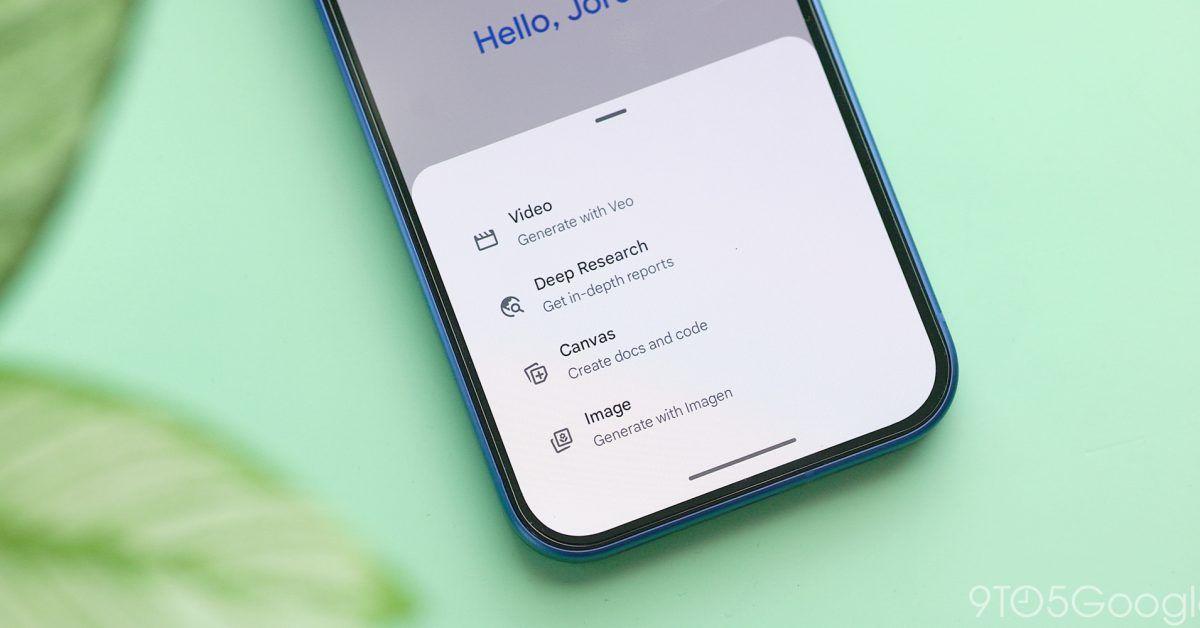

Google Gemini is getting better at identifying AI fakes

Google is making it easier for Gemini users to detect at least some AI-generated content. From today, you'll be able to use the Gemini app to determine if an image was either created or edited by a Google AI tool, simply by asking Gemini "Is this AI-generated?" While the initial launch is limited to images, Google says verification of video and audio will come "soon," and it also intends to expand the functionality beyond the Gemini app, including into Search.

The more important expansion will come further down the line, when Google extends verification to support industry-wide C2PA content credentials. The initial image verification is based only on SynthID, Google's own invisible AI watermarking, but an expansion to C2PA would make it possible to detect the source of content generated by a wider variety of AI tools and creative software, including OpenAI's Sora.

Google also announced that images generated by its Nano Banana Pro model, also revealed today, will have C2PA metadata embedded. It's the second bit of good news for C2PA this week, after TikTok confirmed it would use C2PA metadata as part of its own invisible watermarking for AI-generated content.

Manual content verification in Gemini is a useful step, but C2PA credentials and other watermarks like SynthID won't be truly useful until social media platforms get better at flagging AI-generated content automatically, rather than putting the onus on users to confirm for themselves.

[3]

Google Says Gemini Will Now Be Able to Identify AI Images, but There's a Big Catch

Google's betting invisible AI watermarks will be just as good as visible ones. The company is continuing its week of Gemini 3 news with an announcement that it's bringing its AI content detector, SynthID Detector, out of a private beta for everyone to use. This news comes in tandem with the release of Nano Banana Pro, Google's ultrapopular AI image editor. The new Pro model comes with a lot of upgrades, including the ability to create legible text and upscale your images to 4K. That's great for creators who use AI, but it also means it will be harder than ever to identify AI-generated content.

We've had deepfakes since long before generative AI. But AI tools, like the ones Google and OpenAI develop, let anyone create convincing fake content quicker and cheaper than ever before. That's led to a massive influx of AI content online, everything from low-quality AI slop to realistic-looking deepfakes. OpenAI's viral AI video app, Sora, was another major tool that showed us how easily these AI tools can be abused. It's not a new problem, but AI has led to a dramatic escalation of the deepfake crisis.

That's why SynthID was created. Google introduced SynthID in 2023, and every AI model it has released since then has attached these invisible watermarks to AI content. Google adds a small, visible, sparkle-shaped watermark, too, but neither really helps when you're quickly scrolling your social media feed and not vigorously analyzing each post.

To help prevent the deepfake crisis (that the company helped create) from getting worse, Google is introducing a new tool for identifying AI content. SynthID Detector does exactly what its name implies: it analyzes images and can pick up on the invisible SynthID watermark. So in theory, you can upload an image to Gemini and ask the chatbot whether it was created with AI.

But there's a huge catch -- Gemini can only confirm if an image was made with Google's AI, not any other company's. Because there are so many AI image and video models available, that means Gemini likely isn't able to tell you if an image was generated with a non-Google program. Right now, you can only ask about images, but Google said in a blog post that it plans to expand the capabilities to video and audio.

No matter how limited, tools like these are still a step in the right direction. There are a number of AI detection tools, but none of them are perfect. Generative media models are improving quickly, sometimes too quickly for detection tools to keep up. That's why it's incredibly important to label any AI content you're sharing online and to remain dubious of any suspicious images or videos you see in your feeds.

[4]

How to use Google Gemini's built-in AI image detection tool

Tides have changed with the Gemini 3 update, bringing Nano Banana Pro image generation to a wider range of users. The images are so realistic that Google provides an extension that detects images with hidden watermarks. Here's how to check if an image is AI-generated.

In recent iterations of Google's Nano Banana, AI-generated images come with invisible watermarks that indicate the photo was created using a generation engine. The latest update means images are even more realistic, which makes Google's AI detection tool all the more useful.

According to Google, every image generated by Gemini 3 Pro carries SynthID markers. Gemini's models can detect them, but the markers won't be apparent to people who simply view the image on their devices. The marker is detectable using the SynthID extension in the Gemini app. If any portion of an image contains those markers, Gemini will be able to report back with confidence that the image was created using AI.

Using Gemini, users can ask whether an image has been created with Nano Banana or generated with other models in general. Note: You can also type "@synthid" after attaching an image.

Gemini can only give a confident answer regarding images generated using Nano Banana Pro. If asked whether an image is real when presented with a photo generated by another AI model, like ChatGPT, Gemini will not be able to tell with 100% certainty. That's because other generation tools don't use the same identifying watermark.

Gemini will still be able to examine the image in shockingly granular detail, pointing out nuances of the image that act as red flags. It will point out visual inconsistencies like hair blending and symmetry, and it also takes the aesthetic of the image into account. Still, without a SynthID marker, it can't identify an AI-generated image with absolute certainty.
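The two-tier behavior described above (a confident answer when a SynthID marker is present, a hedged visual review when it is not) can be sketched as a small decision function. This is only an illustration of the reported behavior, not Google's implementation: `ImageEvidence`, `Verdict` and `assess` are hypothetical names, and the watermark check itself is assumed to have happened elsewhere.

```python
from dataclasses import dataclass, field
from enum import Enum


class Verdict(Enum):
    GOOGLE_AI = "made or edited with Google AI"
    POSSIBLY_AI = "possibly AI-generated (unconfirmed)"
    UNKNOWN = "cannot tell"


@dataclass
class ImageEvidence:
    # Hypothetical inputs: these fields do not correspond to any real Gemini API.
    synthid_detected: bool
    visual_red_flags: list[str] = field(default_factory=list)


def assess(evidence: ImageEvidence) -> tuple[Verdict, bool]:
    """Return (verdict, is_definitive) for one image."""
    # A detected watermark is the only signal treated as definitive.
    if evidence.synthid_detected:
        return Verdict.GOOGLE_AI, True
    # Without a watermark, visual tells can only suggest, never confirm.
    if evidence.visual_red_flags:
        return Verdict.POSSIBLY_AI, False
    # No watermark and no tells: the image may be real or a well-made fake.
    return Verdict.UNKNOWN, False
```

For example, `assess(ImageEvidence(False, ["hair blending"]))` yields a non-definitive `POSSIBLY_AI` verdict, which mirrors the point that an image without a SynthID marker can never be identified with absolute certainty.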

[5]

You Can Now Ask Google Gemini Whether an Image is AI-Generated or Not

Google has a new feature that allows users to find out whether an image is AI-generated or not -- a much-needed tool in a world of AI slop. The new feature is available via Google Gemini 3, the latest installment of the company's LLM and multi-modal AI.

To ascertain whether an image is AI-generated, simply open the Gemini app, upload the image, and ask something like: "Is this image AI-generated?" Gemini will give an answer, but it is predicated on whether that image contains SynthID, Google's digital watermarking technology that "embeds imperceptible signals into AI-generated content." Images that have been generated on one of Google's models, like Nano Banana, for example, will be flagged by Gemini as AI.

"We introduced SynthID in 2023," Google says in a blog post. "Since then, over 20 billion AI-generated pieces of content have been watermarked using SynthID, and we have been testing our SynthID Detector, a verification portal, with journalists and media professionals."

While SynthID is Google's technology, the company says that it will "continue to invest in more ways to empower you to determine the origin and history of content online." It plans to incorporate the Coalition for Content Provenance and Authenticity (C2PA) standard so users will be able to check the provenance of an image created by AI models outside of Google's ecosystem.

"As part of this, rolling out this week, images generated by Nano Banana Pro (Gemini 3 Pro Image) in the Gemini app, Vertex AI, and Google Ads will have C2PA metadata embedded, providing further transparency into how these images were created," Google adds. "We look forward to expanding this capability to more products and surfaces in the coming months."

I put Gemini's latest model to the test to see whether it can accurately spot an AI-generated image. Results below. So far, so good -- and once C2PA is added, the system will feel much more complete.

The best part is that it offers a relatively simple way to check whether an image was generated by AI. Photographers should consider adding a C2PA signature to their own photos, which can be done easily in Lightroom or Photoshop.

[6]

How we're bringing AI image verification to the Gemini app

At Google, we've long invested in ways to provide you with helpful context about information you see online. Now, as generative media becomes increasingly prevalent and high-fidelity, we are deploying tools to help you more easily determine whether the content you're interacting with was created or edited using AI. Starting today, we're making it easier for everyone to verify if an image was generated with or edited by Google AI right in the Gemini app, using SynthID, our digital watermarking technology that embeds imperceptible signals into AI-generated content. We introduced SynthID in 2023. Since then, over 20 billion AI-generated pieces of content have been watermarked using SynthID, and we have been testing our SynthID Detector, a verification portal, with journalists and media professionals. If you see an image and want to confirm it has been made by Google AI, upload it to the Gemini app and ask a question such as: "Was this created with Google AI?" or "Is this AI-generated?" Gemini will check for the SynthID watermark and use its own reasoning to return a response that gives you more context about the content you encounter online.

[7]

Google Now Lets You Ask Gemini if an Image Was Created Using AI

Google on Thursday announced the rollout of a new feature in the Gemini app, which allows users to verify if an image was generated or edited using the company's artificial intelligence (AI) tools. The Mountain View-based tech giant said this move is aimed at increasing content transparency using SynthID, its digital watermarking technology. This capability will also be expanded to support additional formats beyond images, such as video and audio clips.

SynthID

According to Google, SynthID is embedded in all images generated by its tools. It now provides a verification feature in the Gemini app to check whether an image was generated by Google AI. The company said that visible watermarks are used for free and Pro users, while Ultra subscribers and enterprise tools will have the option of removing visible marks for professional work. Google is also testing a verification portal called SynthID Detector with journalists and media professionals.

How to Verify if an Image Was Generated or Edited Using Gemini

As per Google, SynthID verification is valid for images generated using its proprietary AI tools and won't work with non-Google AI products. The company, notably, recently expanded this feature to academia, researchers, and several media publishers.

Google said that it will expand SynthID verification to support additional formats in the future. Currently limited to images, this capability will be extended to video and audio clips, too. Further, the company also has plans to add SynthID verification to more surfaces, such as Search. SynthID, notably, was first unveiled by Google DeepMind in August 2023 as a beta project aimed at correctly labelling AI-generated content.

[8]

Google adds SynthID-based AI image verification to the Gemini app

Google is expanding its tools for identifying AI-generated content by bringing SynthID-based image verification directly to the Gemini app. The update is designed to give users clear context about whether an image was created or edited using Google's AI models. Users can upload an image into the Gemini app and ask questions such as "Was this created with Google AI?" or "Is this AI-generated?" Gemini will then analyze the image, check for the SynthID watermark, and provide contextual information based on the findings. SynthID, introduced in 2023, embeds imperceptible signals into AI-generated or AI-edited content. According to Pushmeet Kohli, VP of Science and Strategic Initiatives at Google DeepMind, more than 20 billion AI-generated items have been watermarked with SynthID to date. Google has also been testing the SynthID Detector portal with journalists and media professionals. Google states that this rollout builds on ongoing work to provide more context for images in Search and on research initiatives such as Backstory from Google DeepMind. The company plans to extend SynthID verification to additional formats including video and audio, and bring it to more Google surfaces like Search. Google is also collaborating with industry partners through the Coalition for Content Provenance and Authenticity (C2PA). Beginning this week, images generated by Nano Banana Pro (Gemini 3 Pro Image) in the Gemini app, Vertex AI and Google Ads will include C2PA metadata. Google says this capability will expand to more products and surfaces in the coming months. Over time, the company will extend verification to support C2PA content credentials, allowing users to check the origins of content produced by models outside of Google's ecosystem. Speaking on the development, Laurie Richardson, Vice President of Trust and Safety at Google, said:

Google launches SynthID detector in Gemini app to identify AI-generated images, but the tool only works with Google's own AI models. The company plans to expand support to industry-wide C2PA standards and other content types.

Google Launches Limited AI Image Detection in Gemini

Google has introduced a new feature in its Gemini app that allows users to determine whether an image was generated by artificial intelligence, marking a significant step in combating the growing challenge of AI-generated content online. The feature, which became available this week alongside the release of Gemini 3, enables users to simply ask "Is this AI-generated?" after uploading an image [1][2].

The detection capability relies on SynthID, Google's invisible digital watermarking technology that has been embedded in AI-generated content since 2023. According to Google, over 20 billion AI-generated pieces of content have been watermarked using SynthID, and the company has been testing its SynthID Detector with journalists and media professionals [5].

Significant Limitations in Current Implementation

While the tool represents progress in AI content detection, it comes with substantial limitations that restrict its effectiveness. The SynthID detector can only identify images created by Google's own AI tools, including those generated by the company's Nano Banana Pro model [3][4].

When presented with images generated by other AI platforms like ChatGPT, DALL-E, or Midjourney, Gemini cannot provide definitive answers about their AI origins. Instead, the system falls back on analyzing visual inconsistencies and typical AI generation artifacts, similar to what humans might notice through careful examination [1].

Testing revealed inconsistent results when evaluating non-Google AI content. Different AI chatbots provided contradictory assessments of the same images, with some confidently declaring obviously AI-generated images as real photographs, while others correctly identified the artificial nature of the content [1].

Future Expansion Plans and Industry Standards

Google has outlined ambitious plans to expand the detection capabilities beyond their current limitations. The company intends to incorporate support for the Coalition for Content Provenance and Authenticity (C2PA) standard, which would enable detection of content created by a wider variety of AI tools and creative software, including OpenAI's Sora video generator [2].

Images generated by Google's new Nano Banana Pro model will include embedded C2PA metadata, providing additional transparency about their creation process. This represents the second major endorsement of C2PA standards this week, following TikTok's announcement that it would use C2PA metadata as part of its own invisible watermarking system for AI-generated content [2].

Google also plans to extend the verification capabilities to video and audio content "soon" and expand the functionality beyond the Gemini app to include Google Search and other platforms [2][4].

Growing Need for Comprehensive Detection Tools

The launch comes at a critical time as AI-generated content becomes increasingly sophisticated and difficult to distinguish from authentic material. Google's own Nano Banana Pro model, released alongside the detection tool, can now create legible text and upscale images to 4K resolution, making AI-generated content more convincing than ever [3].

The proliferation of AI tools has led to what experts describe as a "deepfake crisis," with realistic fake content appearing across social media platforms at unprecedented scales. This has created an urgent need for reliable detection methods that don't require specialized knowledge or technical expertise from users [1].

Summarized by

Navi
