Google Unveils Gemini 3 AI Model with Record-Breaking Performance and New Coding IDE

79 Sources

[1]

Google unveils Gemini 3 AI model and AI-first IDE called Antigravity

Google has kicked its Gemini rollout into high gear over the past year, releasing the much-improved Gemini 2.5 family and cramming various flavors of the model into Search, Gmail, and just about everything else the company makes. Now, Google's increasingly unavoidable AI is getting an upgrade. Gemini 3 Pro is available in a limited form today, featuring more immersive, visual outputs and fewer lies, Google says. The company also says Gemini 3 sets a new high-water mark for vibe coding, and Google is announcing a new AI-first integrated development environment (IDE) called Antigravity, which is also available today. Google says the release of Gemini 3 is yet another step toward artificial general intelligence (AGI). The new version of Google's flagship AI model has expanded simulated reasoning abilities and shows improved understanding of text, images, and video. So far, testers like it -- Google's latest LLM is once again atop the LMArena leaderboard with an ELO score of 1,501, besting Gemini 2.5 Pro by 50 points. Factuality has been a problem for all gen AI models, but Google says Gemini 3 is a big step in the right direction, and there are myriad benchmarks to tell the story. In the 1,000-question SimpleQA Verified test, Gemini 3 scored a record 72.1 percent. Yes, that means the state-of-the-art LLM still screws up almost 30 percent of general knowledge questions, but Google says this still shows substantial progress. On the much more difficult Humanity's Last Exam, which tests PhD-level knowledge and reasoning, Gemini set another record, scoring 37.5 percent without tool use. Math and coding are also a focus of Gemini 3. The model set new records in MathArena Apex (23.4 percent) and WebDev Arena (1487 ELO). In the SWE-bench Verified, which tests a model's ability to generate code, Gemini 3 hit an impressive 76.2 percent.

[2]

Google launches Gemini 3 with new coding app and record benchmark scores | TechCrunch

On Tuesday, Google released Gemini 3, its latest and most advanced foundation model, which is now immediately available through the Gemini app and AI search interface. Coming just seven months after the Gemini 2.5 release, the new model is Google's most capable LLM yet, and an immediate contender for the most capable AI tool on the market. The release also comes less than a week after OpenAI released GPT 5.1, and a mere two months after Anthropic released Sonnet 4.5 -- a reminder of the blistering pace of frontier model development. A more research-intensive version of the model, called Gemini 3 Deep Think, will also be made available to Google AI Ultra subscribers in the coming weeks, once it passes further rounds of safety testing. "With Gemini 3, we're seeing this massive jump in reasoning," said Tulsee Doshi, Google's head of product for the Gemini model. "It's responding with a level of depth and nuance that we haven't seen before." Some of that reasoning power is already registering on independent benchmarks. With a score of 37.4, the model marked the highest score on record on the Humanity's Last Exam benchmark, meant to capture general reasoning and expertise. The previous high score, held by GPT-5 Pro, was 31.64. Gemini 3 also topped the leaderboard on LMArena, a human-led benchmark that measures user satisfaction. According to Google, the Gemini app currently has more than 650 million monthly active users, and 13 million software developers have used the model as part of their workflow. Alongside the base model, Google also released a Gemini-powered coding interface called Google Antigravity, allowing for multi-pane agentic coding similar to agentic IDEs like Warp or Cursor 2.0. Specifically, Antigravity combines a ChatGPT-style prompt window with a command-line interface and a browser window that can show the impact of the changes made by the coding agent.
"The agent can work with your editor, across your terminal, across your browser to make sure that it helps you build that application in the best way possible," said DeepMind CTO Koray Kavukcuoglu.

[3]

Google's new Gemini 3 vibe-codes its responses and comes with its own agent

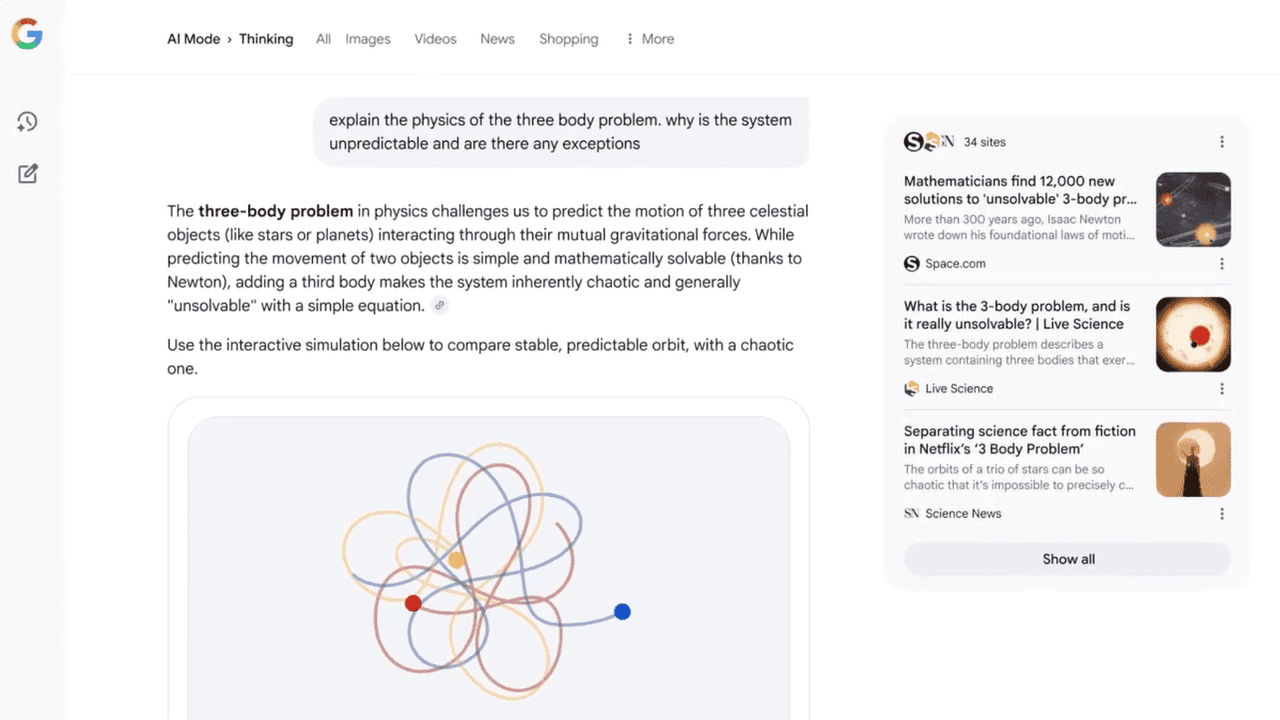

When asked to explain a concept, Gemini 3 may sketch a diagram or generate a simple animation on its own if it believes a visual is more effective. "Visual layout generates an immersive, magazine-style view complete with photos and modules," says Josh Woodward, VP of Google Labs, Gemini, and AI Studio. "These elements don't just look good but invite your input to further tailor the results." With Gemini 3, Google is also introducing Gemini Agent, an experimental feature designed to handle multi-step tasks directly inside the app. The agent can connect to services such as Google Calendar, Gmail, and Reminders. Once granted access, it can execute tasks like organizing an inbox or managing schedules. Similar to other agents, it breaks tasks into discrete steps, displays its progress in real time, and pauses for approval from the user before continuing. Google describes the feature as a step toward "a true generalist agent." It will be available on the web for Google AI Ultra subscribers in the US starting November 18. The overall approach can seem a lot like "vibe coding," where users describe an end goal in plain language and let the model assemble the interface or code needed to get there. The update also ties Gemini more deeply into Google's existing products. In Search, a limited group of Google AI Pro and Ultra subscribers can now switch to Gemini 3 Pro, the reasoning variation of the new model, to receive deeper, more thorough AI-generated summaries that rely on the model's reasoning rather than the existing AI Mode.

[4]

Google's Gemini 3 model keeps the AI hype train going - for now

Google's latest model reportedly beats its rivals in several benchmark tests, but issues with reliability mean concerns remain over a possible AI bubble

Google's latest chatbot, Gemini 3, has made significant leaps on a raft of benchmarks designed to measure AI progress, according to the company. These achievements may be enough to allay fears of an AI bubble bursting for the moment, but it is unclear how well these scores translate to real-world capabilities. What's more, persistent factual inaccuracies and hallucinations that have become a hallmark of all large language models show no signs of being ironed out, which could prove problematic for any uses where reliability is vital. In a blog post announcing the new model, Google bosses Sundar Pichai, Demis Hassabis and Koray Kavukcuoglu write that Gemini 3 has "PhD-level reasoning", a phrase that competitor OpenAI also used when it announced its GPT-5 model. As evidence for this, they list scores on several tests designed to test "graduate-level" knowledge, such as Humanity's Last Exam, a set of 2500 research-level questions from maths, science and the humanities. Gemini 3 scored 37.5 per cent on this test, outclassing the previous record holder, a version of OpenAI's GPT-5, which scored 26.5 per cent. Jumps like this can indicate that a model has become more capable in certain respects, says Luc Rocher at the University of Oxford, but we need to be careful about how we interpret these results. "If a model goes from 80 per cent to 90 per cent on a benchmark, what does it mean? Does it mean that a model was 80 per cent PhD level and now is 90 per cent PhD level? I think it's quite difficult to understand," they say. "There is no number that we can put on whether an AI model has reasoning, because this is a very subjective notion." Benchmark tests have many limitations, such as requiring a single answer or multiple choice answers for which models don't need to show their working.
"It's very easy to use multiple choice questions to grade [the models]," says Rocher, "but if you go to a doctor, the doctor will not assess you with a multiple choice. If you ask a lawyer, a lawyer will not give you legal advice with multiple choice answers." There is also a risk that the answers to such tests were hoovered up in the training data of the AI models being tested, effectively letting them cheat. The real test for Gemini 3 and the most advanced AI models - and whether their performance will be enough to justify the trillions of dollars that companies like Google and OpenAI are spending on AI data centres - will be in how people use the model and how reliable they find it, says Rocher. Google says the model's improved capabilities will make it better at producing software, organising email and analysing documents. The firm also says it will improve Google search by supplementing AI-generated results with graphics and simulations. Initial reactions online have included people praising Gemini's coding capabilities and ability to reason, but as with all new model releases, there have also been posts highlighting failures to do apparently simple tasks, such as tracing hand-drawn arrows pointing to different people, or simple visual reasoning tests. Google admits, in Gemini 3's technical specifications, that the model will continue to hallucinate and produce factual inaccuracies some of the time, at a rate that is roughly comparable with other leading AI models. The lack of improvement in this area is a big concern, says Artur d'Avila Garcez at City St George's, University of London. "The problem is that all AI companies have been trying to reduce hallucinations for more than two years, but you only need one very bad hallucination to destroy trust in the system for good," he says.

[5]

Gemini 3 Is Here -- and Google Says It Will Make Search Smarter

Google has introduced Gemini 3, its smartest artificial intelligence model to date, with cutting-edge reasoning, multimedia, and coding skills. As talk of an AI bubble grows, the company is keen to stress that its latest release is more than just a clever model and chatbot -- it's a way of improving Google's existing products, including its lucrative search business, starting today. "We are the engine room of Google, and we're plugging in AI everywhere now," Demis Hassabis, CEO of Google DeepMind, an AI-focused subsidiary of Google's parent company, Alphabet, told WIRED in an interview ahead of the announcement. Hassabis admits that the AI market appears inflated, with a number of unproven startups receiving multibillion-dollar valuations. Google and other AI firms are also investing billions in building out new data centers to train and run AI models, sparking fears of a potential crash. But even if the AI bubble bursts, Hassabis thinks Google is insulated. The company is already using AI to enhance products like Google Maps, Gmail, and Search. "In the downside scenario, we will lean more on that," Hassabis says. "In the upside scenario, I think we've got the broadest portfolio and the most pioneering research." Google is also using AI to build popular new tools like NotebookLM, which can auto-generate podcasts from written materials, and AI Studio which can prototype applications with AI. It's even exploring embedding the technology into areas like gaming and robotics, which Hassabis says could pay huge dividends in years to come, regardless of what happens in the wider market. Google is making Gemini 3 available today through the Gemini app and in AI Overviews, a Google Search feature that synthesizes information alongside regular search results. 
In demos, the company showed that some Google queries, like a request for information about the three-body problem in physics, will prompt Gemini 3 to automatically generate a custom interactive visualization on the fly. Robby Stein, vice president of product for Google Search, said at a briefing ahead of the launch that the company has seen "double-digit" increases in queries phrased in natural language, which are most likely targeted at AI Overviews, year over year. The company has also seen a 70 percent spike in visual search, which relies on Gemini's ability to analyze photos. Despite investing heavily in AI and making key breakthroughs, including inventing the transformer model that powers most large language models, Google was shaken by the sudden rise of ChatGPT in 2022. The chatbot not only vaulted OpenAI to center stage when it came to AI research; it also challenged Google's core business by offering a new and potentially easier way to search the web.

[6]

Google Says New Gemini 3 AI Model Is Its Most Capable Yet

Imad is a senior reporter covering Google and internet culture. Hailing from Texas, Imad started his journalism career in 2013 and has amassed bylines with The New York Times, The Washington Post, ESPN, Tom's Guide and Wired, among others. Gemini 3, the latest AI model from Google, is the company's most intelligent model to date, with more advanced multimodal and vibe coding capabilities, the company said in a blog post on Tuesday. It's available now. Google says Gemini 3 is "built to grasp depth and nuance" and is better at understanding the intent behind a user's request. The company also touted Gemini 3's multimodal capabilities, such as its ability to turn a long video lecture into interactive flash cards or to analyze a person's pickleball match and find areas for improvement. Gemini 3 isn't limited to the app. It'll also be available in AI Mode in Search and, for Pro and Ultra subscribers, in AI Overviews. In AI Overviews, Gemini 3 can generate interactive elements. Google DeepMind's Demis Hassabis and Koray Kavukcuoglu said in a blog post that Gemini 3 Pro is less sycophantic, a problem that's been plaguing AIs and leading to AI psychosis in some. It's also more secure against prompt injection attacks, a type of attack in which bad actors try to make an AI ignore its original instructions and perform unintended actions. The company also unveiled Google Antigravity, a new agentic development platform. Google says Antigravity is like having an active partner while making tools or working on projects, autonomously planning and executing complex software tasks while validating its own code. It works in tandem with Gemini 2.5's Computer Use model for browser control and works with Nano Banana, Gemini 2.5's image model. Gemini 3's new, more powerful, agentic capabilities will only be available to $250/month Google AI Ultra subscribers at first. This will allow the Gemini Agent to do multi-step workflows, like planning a travel itinerary.
Google's release of Gemini 3 comes as an AI war is heating up between it, OpenAI, Anthropic and xAI. Google's consistently been leading AI leaderboards, although other AI models haven't been far behind, sometimes trading spots at the top. With the release of Gemini 3, Google seems to be trying to solve for some of AI's more annoying problems, like hallucinations or sycophancy. It's also trying to prove that AIs can be truly agentic, being able to accomplish tasks on the user's behalf. Other agentic models have proven to be problematic in real-world usage and run into various security concerns, especially in web browsers. The latest AI release from Google also comes at a time when there are fears of an AI bubble forming in the stock market. AI companies, including Nvidia, Google, Meta and Microsoft, account for 30% of the S&P 500. Google currently has a valuation of $3.4 trillion. Even Google CEO Sundar Pichai says the trillion-dollar AI investment boom has "elements of irrationality" and that a burst would affect every AI company, in an interview with the BBC. Still, if Google is to keep its stock price moving upward, it needs to demonstrate that its AI models beat the competition.

[7]

Want to ditch ChatGPT? Gemini 3 shows early signs of winning the AI race

ChatGPT is still the most popular AI, but Gemini is catching up. Watch out, ChatGPT. Another AI is aiming to take over the top spot in the AI world. And that's Google's Gemini. The latest Gemini 3 flavor is already earning kudos for being faster, smarter, and more adept at specialized tasks, leading several tech execs to crown it as the new AI king. Released last week, Gemini 3 is being touted as not only smarter and faster than its predecessors and rival AIs but also better at coding and multimodality. With multimodality as part of its skillset, Gemini can more easily and effectively work with different types of content, such as text, images, audio, video, and computer code. Plus, the new AI model is more skilled with Ph.D.-level reasoning, helping it better solve complex problems in science, math, and other technical areas. With its superior skills, Gemini 3 Pro has already landed on the LMArena leaderboard with the top score in virtually every category, based on anonymous voting that pitted it against models from Anthropic, Meta, xAI, DeepSeek, and others. Google's latest AI also grabbed a 91% score on the GPQA Diamond, a benchmark that evaluates Ph.D.-level reasoning. Best of all, Gemini 3 is available to Google users across the Gemini website, the Gemini mobile app, Google Search, AI Studio, Vertex AI, and a new agentic development platform called Google Antigravity. The basic version of Gemini 3 is free to all users. Packed with more advanced features, the Pro flavor is accessible only to Google AI subscribers and certain programs. Those are notable credentials for an AI model that just debuted a week ago. Tech leaders are also singing its praises, including Salesforce CEO Marc Benioff, former Tesla AI director Andrej Karpathy, and Stripe CEO Patrick Collison. As spotted by Business Insider, Benioff is promising to dump ChatGPT for Gemini.
On Sunday, the Salesforce CEO proclaimed on X: "I've used ChatGPT every day for 3 years. Just spent 2 hours on Gemini 3. I'm not going back. The leap is insane -- reasoning, speed, images, video... everything is sharper and faster. It feels like the world just changed, again." In his X post, Karpathy wrote: "I had a positive early impression yesterday across personality, writing, vibe, coding, humor, etc., very solid daily driver potential, clearly a tier 1 LLM, congrats to the team!" Collison said in his own post that he asked Gemini 3 to make an interactive web page summarizing 10 breakthroughs in genetics over the past 15 years, and that he found the result pretty cool. Even OpenAI CEO Sam Altman chimed in last week, saying that Gemini 3 "looks like a great model." (Disclosure: Ziff Davis, ZDNET's parent company, filed an April 2025 lawsuit against OpenAI, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.) Based on use, ChatGPT is still the king of the AI castle. At the top of Apple's charts for most popular free iPhone apps, ChatGPT has more than 800 million weekly active users, double the 400 million count from February 2025, according to Altman and data reporting platform Demand Sage. But Gemini has been catching up. Google's AI is the second most popular free iPhone app. Gemini sees around 650 million monthly users, up from around 450 million at the start of 2025, Demand Sage reports. More noteworthy than popularity, though, is performance. That's where Gemini 3 is already making a name for itself. But even there, Gemini's new success is not just about raw power but better usability, according to industry analysts as reported in a Monday story by the Wall Street Journal (subscription required).
All the power and AI benchmarks in the world wouldn't mean much if the average person couldn't use the AI easily, effectively, and reliably. Those are three areas where an AI often falls down on the job. But here, Gemini 3 seems to be earning kudos. The model has been able to better hold coherent conversations while reducing errors, the Journal said. That has raised expectations for AI in both the business and consumer markets. "This rollout reflects a shift in AI development where innovation focuses on practical deployment and user-centric design," the Journal said. "By closing gaps in understanding and interaction, Gemini makes conversational AI more reliable and applicable." Of course, there's still the race with ChatGPT to see which AI can win over more people. "We have to recognize that Gemini's the biggest threat to ChatGPT we've seen so far," investment guru and media personality Jim Cramer said on his CNBC show. "There's simply no two ways about it -- Gemini's existential for OpenAI," he said. Though Cramer claims that any business reliant on ChatGPT just became more precarious, he said he certainly wouldn't write off ChatGPT. That's because OpenAI may have a "revolutionary version of its own product" in the works. In the midst of Cramer's usual high-octane commentary, he makes a valid point. The battle for AI supremacy is far from over. I wouldn't be surprised if OpenAI does have an innovative new version of ChatGPT waiting in the wings. For now, though, Gemini is enjoying waves of acclaim and excitement. If Google wants to hang onto that buzz, then it also needs to be working on the next iteration of its AI to show that it aims to stay in the game.

[8]

'Holy shit': Gemini 3 is winning the AI race -- for now

When an AI model release immediately spawns memes and treatises declaring the rest of the industry cooked, you know you've got something worth dissecting. Google's Gemini 3 was released Tuesday to widespread fanfare. The company called the model a "new era of intelligence," integrating it into Google Search on day one for the first time. It's blown past OpenAI and other competitors' products on a range of benchmarks and is topping the charts on LMArena, a crowdsourced AI evaluation platform that's essentially the Billboard Hot 100 of AI model ranking. Within 24 hours of its launch, more than one million users tried Gemini 3 in Google AI Studio and the Gemini API, per Google. "From a day one adoption standpoint, [it's] the best we've seen from any of our model releases," Google DeepMind's Logan Kilpatrick, who is product lead for Google's AI Studio and the Gemini API, told The Verge. Even OpenAI CEO Sam Altman and xAI CEO Elon Musk publicly congratulated the Gemini team on a job well done. And Salesforce CEO Marc Benioff wrote that after using ChatGPT every day for three years, spending two hours on Gemini 3 changed everything: "Holy shit ... I'm not going back. The leap is insane -- reasoning, speed, images, video... everything is sharper and faster. It feels like the world just changed, again." "This is more than a leaderboard shuffle," said Wei-Lin Chiang, cofounder and CTO of LMArena. Chiang told The Verge that Gemini 3 Pro holds a "clear lead" in occupational categories including coding, math, and creative writing, and its agentic coding abilities "in many cases now surpass top coding models like Claude 4.5 and GPT-5.1." It also got the top spot on visual comprehension and was the first model to surpass a ~1500 score on the platform's text leaderboard.
The new model's performance, Chiang said, "illustrates that the AI arms race is being shaped by models that can reason more abstractly, generalize more consistently, and deliver dependable results across an increasingly diverse set of real-world evaluations." Alex Conway, principal software engineer at DataRobot, told The Verge that one of Gemini 3's most notable advancements was on a specific reasoning benchmark called ARC-AGI-2. Gemini scored almost twice as high as OpenAI's GPT-5 Pro while running at one-tenth of the cost per task, he said, which is "really challenging the notion that these models are plateauing." And on the SimpleQA benchmark -- which involves simple questions and answers on a broad range of topics, and requires a lot of niche knowledge -- Gemini 3 Pro scored more than twice as high as OpenAI's GPT-5.1, Conway flagged. "Use case-wise, it'll be great for a lot more niche topics and diving deep into state-of-the-art research and scientific fields," he said. But leaderboards aren't everything. It's possible -- and in the high-pressure AI world, tempting -- to train a model for narrow benchmarks rather than general-purpose success. So to really know how well a system is doing, you have to rely on real-world testing, anecdotal experience, and complex use cases in the wild. The Verge spoke with professionals across disciplines who use AI every day for work. The consensus: Gemini 3 looks impressive, and it does a great job on a wide breadth of tasks -- but when it comes to edge cases and niche aspects of certain industries, many professionals won't be replacing their current models with it anytime soon. The majority of people The Verge spoke with plan to continue to use Anthropic's Claude for their coding needs, despite Gemini 3's advancements in that space. Some also said that Gemini 3 isn't optimal on the user interaction front. 
Tim Dettmers, assistant professor at Carnegie Mellon University and a research scientist at Ai2, said that though it's a "great model," it's a bit raw when it comes to UX, meaning "it doesn't follow instructions precisely." Tulsee Doshi, Google DeepMind's senior director of product management for Gemini and Gen Media, told The Verge that the company prioritized bringing Gemini 3 to a variety of Google products in a "very real way." When asked about the instruction-following concerns, she said it's been helpful to see "where folks are hitting some of the sticking points." She also said that since the Pro model is the first release in the Gemini 3 suite, later models will help "round out that concern." Joel Hron, CTO of Thomson Reuters, said that the company has its own internal benchmarks it's developed to rank both its internal models and public ones on the areas that are most relevant to their work -- like comparing two documents up to several hundreds of pages in length, interpreting a long document, understanding legal contracts, and reasoning in the legal and tax spaces. He said that so far, Gemini 3 has performed strongly across all of them and is "a significant jump up from where Gemini 2.5 was." It also outperforms several of Anthropic's and OpenAI's models right now in some of those areas. Louis Blankemeier, cofounder and CEO of Cognita, a radiology AI startup, said that in terms of "pure numbers" Gemini 3 is "super exciting." But, he said, "we still need some time to figure out what the real-world utility of this model is." For more general domains, Blankemeier said, Gemini 3 is a star, but when he played around with it for radiology, it struggled with correctly identifying subtle rib fractures on chest X-rays, as well as uncommon or rare conditions. 
He calls radiology akin to self-driving cars in many ways, with a lot of edge cases -- so a newer, more powerful model may still not be as effective as an older one that's been refined and trained on custom data over time. "The real world is just so much more difficult," he said. Similarly, Matt Hoffman, head of AI at Longeye, a company providing AI tools for law enforcement investigations, sees promise in the Gemini 3 Pro-powered Nano Banana Pro image generator. Image generators allow Longeye to create convincing synthetic datasets for testing, letting it keep real, sensitive investigation data secure. But although the benchmarks are impressive, they may not map to the company's actual use cases. "I'm not confident Longeye could swap out a model we're using in production for Gemini 3 and see immediate improvements," he said. Other companies also say they're excited about Gemini -- but not necessarily using it to replace everything else. Built, a construction lending startup, currently uses a mix of foundational models from Google, Anthropic, OpenAI, and others to analyze construction draw requests -- a package of documents often sent to a construction lender, like invoices and proof of work done, requesting that funds be paid. This requires multimodal analysis of text and images, plus a large context window for the main agent delegating tasks to the others, VP of engineering Thomas Schlegel told The Verge. That's part of what Google promises with Gemini 3, so the company is currently exploring switching it out for 2.5. "In the past we've found Gemini to be the best at all-purpose tasks, and 3 looks to be a big step forward along those same lines," Schlegel said. "It's everything we love about Gemini on steroids." But he doesn't yet think it will replace all the other models, including Claude for coding tasks and OpenAI products for business reasoning. 
For Tanmai Gopal, cofounder and CEO of AI agent platform PromptQL, the stir Gemini 3 has caused is valid, but "it's definitely not the end of anything" for Google's competitors. AI models are becoming better and cheaper, and since they're on such quick release cycles, "one is always ahead of the pack for a period of time." (For instance, the day after Gemini 3 came out, OpenAI released GPT-5.1-Codex-Max, an update to a week-old model, ostensibly to challenge Gemini 3 on a few coding benchmarks.) Gopal said PromptQL is still working on internal evaluations to decide how, if at all, the team's model choices will change, but "initial results aren't necessarily showing something drastically better" than their current lineup. He said his current preference is Claude for code generation, ChatGPT for web search, and GPT-5 Pro for "deep brainstorming," but he may incorporate Gemini 3 as a default model, since it's "probably best-in-class for consumer tasks across creative, text, [and] image." And like virtually every model, Gemini 3 has had moments of what I'll dub "robotic hand syndrome" -- when an AI system does something complex with flying colors but gets gobsmacked by the simplest query, akin to the robotic hands of yesteryear having trouble gripping a soda can. Famed researcher Andrej Karpathy, who was a founding member of OpenAI and former director of AI at Tesla, wrote on X after testing Gemini 3 that he "had a positive early impression yesterday across personality, writing, vibe coding, humor, etc., very solid daily driver potential, clearly a tier 1 LLM," but he noted that the model refused to believe him when he said it was 2025 and later said it had forgotten to turn on Google Search. (He ascertained that in early testing, he may have been given a model with a stale system prompt.) In The Verge's own experience testing Gemini 3, we found it "delivers reasonably well -- with caveats." 
It likely won't stay on top forever, but it's an unmistakable step up for the company. "You're sort of in this leapfrog game from model to model, month to month, when a new one drops," Hron said. "But what stuck to me about Google's release is it makes substantial improvements across many dimensions of models -- so it's not like it just got better at coding or it just got better at reasoning ... It really, across the board, got a good bit better."

[9]

ChatGPT Who? Google Releases Gemini 3, Now in AI Mode

Days after OpenAI released GPT 5.1, Google is introducing its own, potentially more powerful AI model with Gemini 3, which starts rolling out to users today. The company says Gemini 3 is Google's most intelligent AI model. It hyped up Gemini 2.5 with the same language back in March, but this time, Google is confident enough to release Gemini 3 immediately to everyone. "This is the first time we are shipping Gemini in Search on day one," says CEO Sundar Pichai. Gemini 3 is rolling out via AI Mode, Google's ChatGPT-like interface on the Google search engine. One standout feature is how Gemini 3 can display "immersive visual layouts and interactive tools and simulations, all generated completely on the fly based on your query." The new model is also launching for all users in the Gemini app. And Google promises a boost for regular searches, too. For each query, the company plans to use Gemini 3 to perform "even more searches to uncover relevant web content" that may have been missed previously. In addition, the company is signaling that Gemini 3 will eventually power AI Overviews, the Google Search function that automatically summarizes the answer to your query (for better or worse) at the top of the results page. "In the coming weeks, we're also enhancing our automatic model selection in Search with Gemini 3. This means Search will intelligently route your most challenging questions in AI Mode and AI Overviews to this frontier model -- while continuing to use faster models for simpler tasks," the company wrote in a separate blog post. That said, the Gemini 3 integration with AI Overviews will initially be limited to paid subscribers of Google's AI plans. In the US, they can also use a more powerful Gemini 3 Pro model starting today via AI Mode by selecting the "Thinking" option from the model drop-down menu. 
According to Google, the Gemini 3 model outperforms GPT 5.1 and Anthropic's Claude Sonnet 4.5 across a wide range of AI-related benchmarks, including math, scientific reasoning, and multilingual questions and answers. "It's state-of-the-art in reasoning, built to grasp depth and nuance -- whether it's perceiving the subtle clues in a creative idea, or peeling apart the overlapping layers of a difficult problem," Pichai added. Ironically, though, the same benchmarks also indicate Gemini 3 struggles with "visual reasoning puzzles" and "academic reasoning." However, the Google model still beats the competition in these tests. The company also says Gemini 3 has been built to resist "sycophancy" and "prompt injection" attacks that can manipulate the AI into executing malicious instructions. To attract software developers, the company also announced "Google Antigravity," a suite of AI-powered tools for computer programming, an apparent response to rival coding programs from OpenAI and Anthropic. "Using Gemini 3's advanced reasoning, tool use, and agentic coding capabilities, Google Antigravity transforms AI assistance from a tool in a developer's toolkit into an active partner," Google's CEO said. "Now, agents can autonomously plan and execute complex, end-to-end software tasks simultaneously on your behalf while validating their own code." In addition, Google has developed an even smarter "Gemini 3 Deep Think mode." But the company wants to be careful with its release. "We're taking extra time for safety evaluations and input from safety testers before making it available to Google AI Ultra subscribers in the coming weeks," the company explained.

[10]

Google releases Gemini 3 with new reasoning and automation features

The model is now embedded across Google's core products, introducing new agent features and automation tools that raise strategic questions for enterprise IT leaders. Google has launched Gemini 3 and integrated the new AI model into its search engine immediately, aiming to push advanced AI features into consumer and enterprise products faster as competition in the AI market intensifies. The release brings new agentic capabilities for coding, workflow automation, and search, raising questions about how quickly businesses can adopt these tools and what impact they may have on existing IT operations. Google also introduced new agent features, including Gemini Agent and the Antigravity development platform, designed to automate multi-step tasks and support software teams.

[11]

Google just rolled out Gemini 3 to Search - here's what it can do and how to try it

The new Search is available to Google AI Pro and Ultra subscribers. Google just made the biggest change to its search engine since the company introduced AI Overviews last year. The company officially debuted Gemini 3, its latest AI model, on Tuesday morning, and has already integrated it with Search, which Google says will enable deeper contextual awareness, more sophisticated reasoning capabilities, and multimedia responses to help users unlock more useful information from the web. This marks the first time that a new AI model from Google has been fused with its search engine from the jump, which signals a growing level of confidence from the company as it races against OpenAI, Microsoft, Meta, and Amazon -- both to deploy new models and make them accessible through existing consumer-facing tools. The newly upgraded Google search is designed to simultaneously optimize for both range and specificity: it covers a wider portion of the web to search for all relevant results to a given query, and is also engineered to read between the lines of that query, as it were, to get a clear sense of the user's true intent. "Gemini 3 brings incredible reasoning power to Search because it's built to grasp unprecedented depth for your hardest questions," Elizabeth Reid, VP and Head of Search at Google, wrote in a company blog post. Google has growing competition in this regard. AI startups like OpenAI and Perplexity have launched their own AI-powered web browsers with an eye toward stealing a slice of the pie that's long been hoarded by Google with its undisputed dominance over online search.
But Google has an obvious advantage: millions of people already rely on its browser and search engine, which means that it can easily embed its new AI model into those people's daily online routines, just as it did -- like it or not -- with AI Overviews. Reid added in the blog post that, in the coming weeks, Google will also update its automatic model selection feature in Search, so that the most challenging queries automatically get funneled to Gemini 3 while older and faster models tackle easier tasks. Google Search is also getting a multimodal upgrade thanks to the newly released Gemini 3. Rather than just responding with text, web links, and images, Gemini 3 in AI Mode can automatically generate visual aids to help users gain a more thorough understanding of the information they're seeking. "When the model detects that an interactive tool will help you better understand the topic, it uses its generative capabilities to code a custom simulation or tool in real-time and adds it into your response," Reid wrote in the blog post. If you're trying to wrap your mind around the infamous double-slit experiment, for example -- which is foundational to quantum mechanics and shows that subatomic particles can act as both particles and waves -- the newly upgraded, multimodal Google search might provide you with an interactive simulation so that, rather than just reading about the experiment, you can directly engage with it. Welcome news, no doubt, to the many people out there who consider themselves visual and/or hands-on learners. Gemini 3 Pro, the first of the new Gemini 3 family of models, is available now for Google AI Pro and Ultra subscribers. To take the new model's search capabilities for a spin, just select "Thinking" from the drop-down menu in AI Mode.
The company plans to release the new model via AI mode to all US users soon, with higher limits for Google AI Pro and Ultra subscribers.

[12]

Gemini 3 is almost as good as Google says it is

Google set the bar high for Gemini 3. It's promising a bunch of upgraded features in its shiny new AI model, from generating code that produces interactive 3D visualizations to "agentic" capabilities that complete tasks. But as we've seen in the past, what's advertised doesn't always match up to reality. So we put some of Google's claims to the test and found that Gemini 3 delivers reasonably well -- with caveats. Google announced the Gemini 3 family of models earlier this week, with the flagship Gemini 3 Pro rolling out to users first. Gemini 3 Pro is supposed to come with big upgrades to reasoning, along with the ability to provide more concise and direct responses compared to Google's previous models. Some of the biggest promised improvements are to Canvas, the built-in workspace inside the Gemini app, where you can ask the AI chatbot to generate code, as well as preview the output. When building in Canvas, Google says Gemini 3 can interpret material from different kinds of sources at the same time, like text, images, and videos. The model can handle more complex prompts as well, allowing it to generate richer, more interactive user interfaces, models, and simulations, according to Google. The company says Gemini 3 is "exceptional" at zero-shot generation, too, which means it's better at completing tasks that it hasn't been trained on. For my first test, I tried out one of the more complex requests that Google showed off in one of its demos: I asked Gemini 3 to create a 3D visualization of the difference in scale between a subatomic particle, an atom, a DNA strand, a beach ball, the Earth, the Sun, and the galaxy, as shown here. Gemini 3 created an interactive visual similar to what Google demonstrated, allowing me to scroll through and compare the size of different elements, which appeared to correctly list each one from small to large, starting at the proton and maxing out at the cosmic web. 
(To be fair, I'd hope Gemini could figure out that a beach ball is much smaller than the Sun.) It included almost everything shown in the demo, but its image quality fell short in a couple of areas, as the 3D models of the strand of DNA and beach ball were quite dim compared to what Google showed. I saw much of the same when feeding Google's other demos into Gemini. The model spit out the correct concept, but it was always a little shoddier, whether it had lower resolution or was just a little more disorganized. Gemini 3's output didn't quite stack up to Google's demo when I tried something a little simpler, either. I asked it to re-create a model of a voxel-art eagle sitting on a tree branch, and while my results were quite similar to the demo, I couldn't help but notice that the eagle didn't have any eyes, and the trees were trunkless. Branching out from Google's example, a voxel-style panda came out alright, but standard 3D models of a penguin and turtle came out quite primitive, with little to no detail. But Gemini 3 isn't just built for prototyping and modeling; Google is testing a new "generative UI" feature for Pro subscribers that packages its responses inside a "visual" magazine-style interface, or in the form of a "dynamic" interactive webpage. I only got access to Gemini 3's visual layout, which Google showed off as a way to envision your travel plans, like a three-day trip to Rome. When I tried out the Rome trip prompt, Gemini 3 presented me with what looked like a personalized webpage featuring an itinerary, along with options to customize it further, such as whether I'd prefer a relaxed or fast-paced vacation or if I prioritize certain dining styles. Once you submit your preferences, Gemini 3 will redesign the layout to match your selections. I found that this feature can provide interactive guides on other topics, too, like how to build a computer or set up an aquarium. 
Next up, I did a little experimenting with Gemini Agent, a feature Google is testing for Ultra subscribers inside the Gemini app. Like other agentic features, Gemini Agent aims to perform tasks on your behalf, such as adding reminders to your calendar and creating reservations. One example shared by Google shows Gemini Agent organizing a Gmail inbox, so I asked the tool to do the same -- and, well, it followed my orders. It found 99 unread emails from the last week and displayed them inside an interactive chart. Gemini provided options to set up reminders for what appeared to be the most important ones, such as RSVPs and a bill, while offering buttons to archive emails it identified as promotions. I asked Google Gemini to schedule a reminder to pay my bill, and the AI assistant put it inside Google Tasks with the correct due date. When I asked it to pay the bill, it navigated the billing interface and came close to asking me to enter my payment details, but (given the security concerns around agentic AI) I stopped short of letting it proceed. While you could just organize your inbox manually, I found Gemini 3's assistance somewhat helpful, as it dug up a few forgotten emails that I might've missed. You can also ask Gemini to find and unsubscribe from spammy email providers in bulk, which is nice. Between Perplexity's AI assistant, ChatGPT, and Gemini, Google's AI chatbot (predictably) offers the richest integration with Gmail. Perplexity will pull up emails listed in your inbox, but you'll need to tell it which ones to keep, archive, or delete, instead of just hitting a button like you can with Gemini. For some reason, ChatGPT refused to organize my inbox, claiming its integration with Gmail is in a "read-only" mode despite readily sending an email through the app on my behalf. But while Gemini is directly connected to Gmail, it was still far slower at sending emails in the app when compared to Perplexity. 
Gemini almost managed to book a restaurant reservation without intervention, only to falsely tell me that there's a "cost" associated with making the booking right before finalizing it. When I asked about the charge, Gemini 3 backpedaled and said it "likely referred to" the restaurant's 16 percent service charge. It then proceeded to ask me to confirm my reservation three times and then told me there was a financial transaction involved again. Sigh. Again, I felt that I could complete these tasks far faster myself. Despite the hiccups with completing tasks, Gemini 3 Pro's interactive visualization features were impressive, and I could see how interactive models or visual layouts could be useful in some scenarios -- though I can't see myself using them on a daily basis, and Gemini's text-based answers are usually informative enough for me. For now, I think I'll just keep using Gemini like I always do: for questions I might not immediately find by browsing the web.

[13]

Google announces Gemini 3 as battle with OpenAI intensifies

Google is debuting its latest artificial intelligence model, Gemini 3, as the search giant races to keep pace with ChatGPT creator OpenAI. The new AI model will allow users to get better answers to more complex questions, "so you get what you need with less prompting," Alphabet CEO Sundar Pichai said in one of several blog posts Google published Tuesday. Gemini 3 will be integrated into the Gemini app, Google's AI search products AI Mode and AI Overviews, as well as its enterprise products. The rollout begins Tuesday for select subscribers and will go out more broadly in the coming weeks. The announcement comes about eight months after Google introduced Gemini 2.5 and 11 months after Gemini 2.0. OpenAI, which kicked off the generative AI boom in late 2022 with the public launch of ChatGPT, introduced GPT-5 in August. "It's amazing to think that in just two years, AI has evolved from simply reading text and images to reading the room," Pichai wrote in one of Tuesday's posts. "Starting today, we're shipping Gemini at the scale of Google." The Gemini app now has 650 million monthly active users and AI Overviews has 2 billion monthly users, the company said. OpenAI said in August that ChatGPT hit 700 million weekly users. Pichai added that the newest model is "built to grasp depth and nuance," and said Gemini 3 is also "much better at figuring out the context and intent behind your request, so you get what you need with less prompting." Google's other AI models may still be used for simpler tasks, the company said. Alphabet and its megacap rivals are spending heavily to build out the infrastructure for AI development and to rapidly create more services for consumers and businesses. In their earnings reports last month, Alphabet, Meta, Microsoft and Amazon each lifted their guidance for capital expenditures, and collectively expect that number to reach more than $380 billion this year.
Google said AI responses powered by Gemini 3 will be "trading cliché and flattery for genuine insight -- telling you what you need to hear, not what you want to hear," according to a statement from Demis Hassabis, CEO of Google's AI unit DeepMind. Industry critics have said today's AI chatbots are too sycophantic. Last week, OpenAI issued two updates to GPT-5. One is "warmer, more intelligent, and better at following your instructions," the company said, and the other is "faster on simple tasks, more persistent on complex ones."

[14]

Google's new Gemini 3 model arrives in AI Mode and the Gemini app

A few weeks short of the first anniversary of Gemini 2.0, Google has released Gemini 3. Naturally, the company claims the new system is its most intelligent AI model yet, offering state-of-the-art reasoning, class-leading vibe coding performance and more. The good news is you can put those claims to the test today, with Google making Gemini 3 Pro available across many of its products and services. Google is highlighting a couple of benchmarks to tout Gemini 3 Pro's performance. In Humanity's Last Exam, widely considered one of the toughest tests AI labs can put their systems through, the model delivered a new top accuracy score of 37.5 percent, beating the previous leader, Grok 4, by an impressive 12.1 percentage points. Notably, it achieved its score without turning to tools like web search. On LMArena, meanwhile, Gemini 3 Pro is now on top of the site's leaderboards with a score of 1,501 points. Okay, but what about the practical benefits of Gemini 3 Pro? In the Gemini app, the new model will translate to answers that are more concise and better formatted. It also enables a new feature Google calls Gemini Agent. The tool builds on Project Mariner, the web-surfing Chrome AI the company debuted at the end of last year. It allows users to ask Gemini to complete tasks for them. For example, say you want help managing your email inbox. In the past, Gemini would have offered some general tips. Now, it can do that work for you. To try Gemini 3 Pro inside of the Gemini app, select "Thinking" from the model picker. The new model is available to everyone, though AI Plus, Pro and Ultra subscribers can use it more often before hitting their rate limit. To make the most of Gemini Agent, you'll need to grant the tool access to your Google apps. In Search, meanwhile, Gemini 3 Pro will debut inside of AI Mode, with availability of the new model first rolling out to AI Pro and Ultra subscribers. Google will also bring the model to AI Overviews, where it will be used to answer the most difficult questions people ask of its search engine.
In the coming weeks, Google plans to roll out a new routing algorithm for both AI Mode and AI Overviews that will know when to put questions through Gemini 3 Pro. In the meantime, subscribers can try the new model inside of AI Mode by selecting "Thinking" from the dropdown menu. In practice, Google says Gemini 3 Pro will result in AI Mode finding more credible and relevant content related to your questions. This is thanks to how the new model augments the fan-out technique that powers AI Mode. The tool will perform even more searches than before and with its new intelligence, Google suggests it may even uncover content previous models may have missed. At the same time, Gemini 3's better multi-modal understanding will translate to AI Mode generating more dynamic and interactive interfaces to answer your questions. For example, if you're researching mortgage loans, the tool can create a loan calculator directly inside of its response. For developers and its enterprise customers, Google is bringing Gemini 3 to all the usual places one can find its models, including inside of the Gemini API, AI Studio and Vertex AI. The company is also releasing a new agentic coding app called Antigravity. It can autonomously program while creating tasks for itself and providing progress reports. Alongside Gemini 3 Pro, Google is introducing Gemini 3 Deep Think. The enhanced reasoning mode will be available to safety testers before it rolls out to AI Ultra subscribers.

[15]

Marc Benioff Joins the Chorus, Says Google Gemini Is Eating ChatGPT's Lunch

Despite its excessive spending on data centers with no clear path to revenue generation in front of it, it seemed that if OpenAI had just one thing it could count on, it was audience capture. ChatGPT seemed like it would get the brand verbification treatment, being the term people used to reference AI. Now it seems like that might be slipping away. Since the release of Google's Gemini 3 model, it's like all anyone on the AI-obsessed corners of the web can talk about is how much better it is than ChatGPT. Marc Benioff, the CEO of Salesforce and longtime ChatGPT fanboy, is perhaps the loudest convert out there. On X, the exec said, "Holy shit. I've used ChatGPT every day for 3 years. Just spent 2 hours on Gemini 3. I'm not going back." He called the improvement of the model over past versions "insane," claiming that "everything is sharper and faster." He's not alone in that assessment. Exited OpenAI co-founder Andrej Karpathy called Gemini 3 "clearly a tier 1 LLM" with "very solid daily driver potential." Stripe CEO Patrick Collison went out of his way to praise Google's latest release, too, which is noteworthy given Stripe's partnership with OpenAI to build AI-driven transactions. Apparently, what he saw with Gemini was too hard not to comment on. The feedback from the C-suites around the tech world follows weeks of buzz over on AI Twitter that Gemini was going to be a game-changer. It certainly got presented as such right out of the gate, as Google made a point to highlight how its latest model topped just about every benchmarking test that was thrown at it (though your mileage may vary on just how meaningful any of those are). But even the folks behind the benchmark measures appear to be impressed.
According to The Verge, the cofounder and CTO of AI benchmarking firm LMArena, Wei-Lin Chiang, said that the release of Gemini 3 represents "more than a leaderboard shuffle" and "illustrates that the AI arms race is being shaped by models that can reason more abstractly, generalize more consistently, and deliver dependable results across an increasingly diverse set of real-world evaluations." The timing of Google's resurgence in the AI space could not come at a worse time for OpenAI, which currently cannot shake questions from skeptics who are unclear on how the company is ever going to make good on its multi-billion-dollar financial commitments. The company has been viewed as a linchpin of the AI industry, and that industry has increasingly received scrutiny for what seems to be some circular investments that may be artificially propping up the entire economy. Now it seems that even its image as the ultimate innovator in that space is in question, and it has a new problem: the fact that Google can definitely outspend it without worrying nearly as much about profitability problems.

[16]

Gemini 3 is here: Google's most advanced model promises better reasoning, coding, and more

Gemini 3 is Google's most intelligent AI model to date, promising improvements in reasoning, coding, and multimodal capabilities. Users will be able to ask even more difficult and puzzling questions to Gemini (including logic puzzles and math problems), and find that it aces them with ease. The AI model will also be able to execute even more complex coding tasks with this update. Furthermore, it also promises to excel in multimodal analysis, enabling users to combine media, text, and other formats within the same prompt for a seamless, all-around experience. All of this comes with a better grasp of the context and intent behind your prompt.

[17]

Google unveils Gemini 3 AI model, Antigravity agentic development tool

Gemini 3 excels at coding, agentic workflows, and complex zero-shot tasks, while Antigravity shifts AI-assisted coding from agents embedded within tools to an AI agent as the primary interface, Google said. Google has introduced the Gemini 3 AI model, an update of Gemini with improved visual reasoning, and Google Antigravity, an agentic development platform for AI-assisted software development. Both were announced on November 18. Gemini 3 is positioned as offering reasoning capabilities with robust function calling and instruction adherence to build sophisticated agents. Agentic capabilities in Gemini 3 Pro are integrated into agent experiences in tools such as Google AI Studio, Gemini CLI, Android Studio, and third-party tools. Reasoning and multimodal generation enable developers to go from concept to a working app, making Gemini 3 Pro suitable for developers at any experience level, Google said. Gemini 3 surpasses Gemini 2.5 Pro at coding, mastering both agentic workflows and complex zero-shot tasks, according to the company. Gemini 3 is available in preview at $2 per million input tokens and $12 per million output tokens for prompts of 200K tokens or less through the Gemini API in Google AI Studio and Vertex AI for enterprises. Google Antigravity, meanwhile, shifts from agents embedded within tools to an AI agent as the primary interface. It manages surfaces such as the browser, editor, and the terminal to complete development tasks. While the core is a familiar AI-powered IDE experience, Antigravity is evolving the IDE toward an agent-first future with browser control capabilities, asynchronous interaction patterns, and an agent-first product form factor, according to Google. Together, these enable agents to autonomously plan and execute complex, end-to-end software tasks. Available in public preview, Antigravity is compatible with Windows, Linux, and macOS.
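To make the quoted preview pricing concrete, here is a minimal sketch of the per-request arithmetic. It assumes only the two rates stated above ($2 per million input tokens, $12 per million output tokens, for prompts of 200K tokens or less). The helper name `estimate_cost_usd` is hypothetical and is not part of any Google SDK, and the sketch refuses larger prompts because no rate for them is given here.

```python
# Preview rates quoted above, in USD per million tokens.
# They are stated only for prompts of 200K tokens or less.
INPUT_USD_PER_MILLION = 2.00
OUTPUT_USD_PER_MILLION = 12.00
MAX_PROMPT_TOKENS = 200_000


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Hypothetical helper: estimate one request's cost at the quoted rates."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    if input_tokens > MAX_PROMPT_TOKENS:
        # No rate is quoted for longer prompts, so do not guess one.
        raise ValueError("no quoted rate for prompts above 200K tokens")
    total = (input_tokens * INPUT_USD_PER_MILLION
             + output_tokens * OUTPUT_USD_PER_MILLION)
    return total / 1_000_000


if __name__ == "__main__":
    # 100K input tokens and 10K output tokens: $0.20 + $0.12
    print(f"${estimate_cost_usd(100_000, 10_000):.2f}")  # prints $0.32
```

Output tokens cost six times as much as input tokens at these rates, so response length tends to dominate the bill for short prompts.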

[18]

Google's latest AI model invades Search on day one

For the past week now, the tech (and gambling) sphere has been buzzing with anticipation about Google's latest Gemini model. The speculation surrounding Gemini 3, however, finally ends now. The tech giant, in a new keyword blog post, just made its most intelligent large language model official, paired with 'Generative Interfaces,' and an all-new 'Gemini Agent.'

Gemini 3 is official

There's a lot that's new with Gemini 3, but more importantly, this marks the first time Google has brought its new flagship AI model straight to Google Search (starting with AI Mode) on day one. According to the tech giant, the new model will set a new bar for AI model performance. "You'll notice the responses are more helpful, better formatted and more concise," wrote Google, adding that Gemini 3 is the best model in the world for multimodal tasks. This means that for tasks like analyzing photos, reasoning, document analysis, transcribing lecture notes, and more, you'll notice better performance from Gemini 3 than its predecessors (and potentially even competitors). On paper, Gemini 3 Pro boasts a score of 1501 on LMArena, ranking higher than Gemini 2.5 Pro's 1451 score. Google AI Pro and Ultra subscribers in the US can start experimenting with Gemini 3 Pro starting today. To do so, head to Google Search > AI Mode > select 'Thinking' from the model drop-down. The model will expand to everyone in the US "soon," with AI Pro and Ultra plan holders retaining higher usage limits.

Generative interfaces end Gemini's static UI

Think of generative interfaces as dynamic prompt-based UIs that change depending on your specific requests. The new feature is powered by two experiments, namely visual layout and dynamic view. The former kicks in when manually selected. Instead of answering your queries in a plain text-based format, visual layout triggers an immersive, magazine-style view, complete with photos and modules.
For reference, prompts like "plan a 3-day trip to Rome next summer" will produce a visual itinerary. Dynamic view, on the other hand, changes the entire Gemini user interface. Leveraging Gemini 3's agentic coding capabilities, the feature essentially designs and codes a custom UI in real-time. The UI it designs is suited to your prompt, such as "explain the Van Gogh Gallery with life context for each piece." Dynamic view and visual layout are rolling out now.

Gemini Agent arrives

Likely the most ambitious of the bunch, Gemini Agent, as Google describes it, is "an experimental feature that handles multi-step tasks directly inside Gemini." The agent, which can connect to your Google apps like Calendar, reminders, and more, can do a lot. For example, you can simply ask it to "organize my inbox," and it will go through your to-dos and even draft replies to emails for your approval. Alternatively, you can give the agent complex multi-command tasks to fulfill. Think something like "Research and help me book a mid-size SUV for my trip next week under $80/day using details from my email." The agent would locate flight information from Gmail, compare rentals within budget, and prepare the booking for you. Powered by Gemini 3, the agent, which needs to be manually selected from the Gemini app's 'tools,' can take action across other Gemini tools like Deep Research, Canvas, connected Workspace apps, live browsing, and more. Gemini Agent is available to try out starting today, but only for US-based Google AI Ultra subscribers on the web. The tech giant did not hint at the feature expanding to more users anytime soon.

[19]

Google launches Gemini 3 with major boost in AI reasoning power

Google just fired the next shot in the AI arms race, and it's a big one. The company has unveiled Gemini 3, calling it its most powerful reasoning and multimodal model yet, and positioning it as the centerpiece of a new era of agentic, deeply interactive AI. The new release marks a leap in performance across nearly every major benchmark, from mathematics to multimodal analysis. Google says Gemini 3 Pro, rolling out today in preview, is now baked across a suite of its consumer and developer products.

[20]

Google's Gemini 3 is finally here and it's smarter, faster, and free to access

The model is faster, smarter, and better at coding and multimodality. With its Gemini AI models, Google has integrated its AI assistant across several of its most popular platforms, including Search, Google Workspace, and Android devices. Now, the company is releasing its latest and greatest model to upgrade user experiences throughout its ecosystem. On Tuesday, Google finally launched Gemini 3, which the company claims is the "best model in the world for multimodal understanding and our most powerful agentic and vibe coding model yet." The claim is supported by benchmark data, crowd-sourced Arena results, and more advanced use cases that the chatbot has not previously been able to tackle. The best part? Everyone can try it across Google's suite of tools, including Search, the Gemini App, AI Studio, and Vertex AI, as well as a brand-new agentic development platform, Google Antigravity. To learn about what's new and what you can expect, keep reading below. Google is kicking off the Gemini 3 era with the launch of Gemini 3 Pro. Gemini 3 Pro outperforms its predecessor, Gemini 2.5 Pro, across all major AI benchmarks -- but before I get into the data, here's what it practically means for you. Gemini 3 Pro has been designed to provide users with better quality answers that have a new "level of depth and nuance," according to Google. This includes smarter, more concise, and more direct answers that focus on helpfulness rather than flattery. Of course, as the benchmarks support, the model is designed to be smarter and reason more effectively, demonstrating improved performance in Ph.D.-level reasoning that enables it to better tackle complex problems, such as those in science and mathematics.
Google said the model also pushes the frontiers of multimodal reasoning by being able to synthesize information about a topic across multiple modalities, including text and images, video, audio, and code. For everyday help, this means you can input information in more ways, have the model understand, and output responses in whichever multimodal medium is best fit for your response. Lastly, for developers, the model reflects a major win, as it's the best vibe coding and agentic coding model Google has released, climbing to the top of the WebDev Arena, a leaderboard compiled through user votes after comparing the anonymous models' ability to build a site and voting for the best one. Developers can leverage coding capabilities through Google AI Studio, Vertex AI, Gemini CLI, and third-party platforms such as Cursor, GitHub, JetBrains, and others. The company also launched Google Antigravity, a brand-new agent development platform that functions as an active coding partner, allowing agents to plan and execute complex, end-to-end software tasks and even validate their own code. The agentic capabilities don't end there, with Google sharing that the model will be able to take action on your behalf with more complex workflows, such as reorganizing your Gmail inbox. However, these more advanced capabilities are made accessible through the Gemini Agent in the Gemini App for Google AI Ultra subscribers. Gemini 3 Pro skyrocketed to the top of the LMArena Leaderboard with a score of 1,501 points, a noteworthy feat as that score is compiled through anonymous voting against top models from Anthropic, Meta, xAI, DeepSeek, and more.
Reflecting its advanced reasoning, the model scored 91.9% on GPQA Diamond, a benchmark used to evaluate Ph.D.-level reasoning; a top score of 37.5% (without tool use) on Humanity's Last Exam, a multimodal exam that tests a variety of fields; and 81% on MMMU-Pro and 87.6% on Video MMMU, benchmarks used to test multimodal reasoning. The advanced reasoning capabilities also translate to higher scores on math benchmarks, such as MathArena Apex, where the model scored a state-of-the-art 23.4%, according to Google. The company also launched Gemini 3 Deep Think, which has deeper intelligence, reasoning, and understanding capabilities. The Deep Think version outperformed Gemini 3 Pro on Humanity's Last Exam (41% without using tools) and GPQA Diamond (93.8%). Everyone can access Gemini 3 in the Gemini App. To access Gemini 3 in AI Mode in Search, users must have a Google AI Pro or Ultra subscription. Developers can access it in the Gemini API in AI Studio, Antigravity, and Gemini CLI. Lastly, enterprises can access it in Vertex AI and Gemini Enterprise.

[21]

Google is launching Gemini 3, its 'most intelligent' AI model yet

Google is beginning to launch Gemini 3 today, a new series of models the company says is its "most intelligent" and "factually accurate" AI systems yet. They're also a chance for Google to leap ahead of OpenAI following the rocky launch of GPT-5, potentially putting the company at the forefront of consumer-focused AI models. For the first time, Google is giving everyone access to its new flagship AI model -- Gemini 3 Pro -- in the Gemini app on day one. It's also rolling out Gemini 3 Pro to subscribers inside Search. Tulsee Doshi, Google DeepMind's senior director and head of product, says the new model will bring the company closer to making information "universally accessible and useful" as its search engine continues to evolve. "I think the one really big step in that direction is to step out of the paradigm of just text responses and to give you a much richer, more complete view of what you can actually see." Gemini 3 Pro is "natively multimodal," meaning it can process text, images, and audio all at once, rather than handling them separately. As an example, Google says Gemini 3 Pro could be used to translate photos of recipes and then transform them into a cookbook, or it could create interactive flashcards based on a series of video lectures. You'll spot some of these improvements across Google's suite of products, including the Gemini app, where you can build more "full-featured" programs inside the built-in workspace, Canvas. The upgraded AI model will also enable "generative interfaces," a tool Google is testing in Gemini Labs that allows Gemini 3 Pro to create a visual, magazine-style format with pictures you can browse through, or a dynamic layout with a custom user interface tailored to your prompt. Gemini 3 Pro in AI Mode -- the AI-powered Google Search feature -- will similarly present you with visual elements, like images, tables, grids, and simulations based on your query. 
It's also capable of performing more searches using an upgraded version of Google's "query fan-out technique," which now not only breaks down questions into bits it can search for on your behalf, but is better at understanding intent to help "find new content that it may have previously missed," according to Google's announcement. Google is also not so subtly jabbing at OpenAI, describing Gemini 3 Pro as less prone to the type of empty flattery espoused by ChatGPT. Doshi says you'll see "noticeable" changes to Gemini 3 Pro's responses, which Google describes as "smart, concise and direct, trading cliche and flattery for genuine insight -- telling you what you need to hear, not just what you want to hear." The company says it also shows "reduced sycophancy," an issue OpenAI had to address with ChatGPT earlier this year. Along with these improvements, Gemini 3 Pro comes with better reasoning and agentic capabilities, allowing it to complete more complex tasks and "reliably plan ahead over longer horizons," according to Google. The AI model is powering an experimental Gemini Agent feature that can perform tasks on your behalf inside the Gemini app, such as reviewing and organizing emails, or researching and booking travel. Gemini 3 Pro now sits at the top of LMArena's leaderboard, a popular platform used for benchmarking AI models. A Deep Think mode enhances the model's reasoning capabilities even further, though it's currently only available to safety testers. Gemini 3 Pro is available inside the Gemini app for everyone starting today, while Google AI Pro and Ultra subscribers in the US can try out Gemini Agent in the Gemini app, along with Gemini 3 Pro inside AI Mode by selecting "Thinking" from the model dropdown.

[22]

Google enters 'new era' of AI with its advanced Gemini 3 model

Google is launching Gemini 3, its most intelligent AI model yet. From the outset, users will have access to the flagship Gemini 3 Pro model, which is multimodal from the ground up and can process text, image, audio, and video in the same flow. This model is said to top several AI benchmark tests in logic, math, and fact checking. It's also said to provide shorter, more direct, and less biased responses. One new feature is that Gemini 3 can generate interfaces in the form of presentations or web page layouts based on the user's instructions. There is also an improved search feature with extended "query fan-out" that will allow the model to better understand the intent behind a question. In both the Gemini app and Google Search's AI Mode, answers are now also displayed in a way that combines visual elements such as images, tables, grids, and even interactive simulations. On top of that, Gemini 3 Pro has gained sharper reasoning and planning abilities, allowing it to handle more long-term and complex tasks. It powers Google's new experimental tool Gemini Agent, which can, for example, organize emails or book travel for users. Gemini 3 Pro is now available in the Gemini app in preview form. In the US, Google AI Pro and Ultra subscribers can also test the Agent feature as well as the new Advanced AI mode.

[23]

Gemini app rolling out Gemini 3 Pro as 'Gemini Agent' comes to AI Ultra

Google today is introducing new features for the Gemini app, led by Gemini Agent, made possible by Gemini 3 Pro. For starters, responses in the Gemini app are "more helpful, better formatted, and more concise." In Canvas, apps are "more full-featured," with Google calling Gemini 3 its "best vibe coding model ever." Gemini 3 Pro is available for all users starting today. From the model picker, select "Thinking," a new option also shared by AI Mode. Google AI Plus, Pro, and Ultra subscribers will get higher limits. The "Labs" concept is coming to the Gemini app. Visual layout is the first experiment, creating an "immersive, magazine-style view complete with photos and modules" to answer your prompt. Gemini will show sliders, checkboxes, and other UI to further customize the result. For example, when trip planning, you might get a slider to set the pace, while filters let you select activity type. Dynamic view sees Gemini 3 design and code a "fully customized interactive response for each prompt." At launch, you might only see one of these experiments as Google gathers feedback. Gemini Agent brings what Google has learned with Project Mariner to the Gemini app. This experimental feature "handles multi-step tasks directly inside Gemini." This is made possible by Gemini 3's advanced reasoning, live web browsing, and tool use, including Canvas, Deep Research, Gmail, and Google Calendar. Gemini will have you confirm before sending emails, making purchases, and other critical actions, with users able to take over at any time. In Google's example, the prompt is "organize my inbox," with the user selecting "Agent" from the Tools menu. Gemini Agent then groups together related emails in a table that lets you quickly archive emails or mark them as read by tapping the checkmark. It can also create new Google Tasks reminders. Meanwhile, Google can now generate an email reply and send it directly from the Gemini app. 
The inline email interface lets you add recipients and change the body as needed. Another example is: "Book a mid-size SUV for my trip next week under $80/day using details from my email." Gemini will "locate your flight information, research rentals within budget and prepare the booking." This is available today for Google AI Ultra subscribers ($249.99 per month) in the US.

[24]

Gemini 3 is here -- 9 prompts to use that make the most of its new features