Google Rolls Out Experimental Gemini 2.0 Advanced: A Leap in AI Capabilities

11 Sources

[1]

Gemini's latest AI feature could be the future of web browsing

Google's new agentic AI feature could be your new research assistant. The web is filled with resources, which makes it possible to find all the answers you need in one place, but this can also make finding exactly what you need a long and overwhelming process. Google's new Deep Research offering aims to help you manage that process.

Recently, Google announced several AI advancements and releases. One of the biggest standouts for everyday users is Deep Research, an agentic feature for Gemini Advanced that can conduct thorough research on your behalf.

With Deep Research, you can pose a question about the topic you want to learn more about. The agentic feature will create a multi-step research plan, which you can approve or disapprove. If the plan is approved, it will browse the web for you for a few minutes. The Deep Research process mimics what humans do when researching: browsing the web, making new searches based on the findings, and repeating the process until enough information has been collected to answer your question.

Those findings are then used to create a comprehensive report, organized with links to the original sources so that you can dive deeper into a particular area of interest. That report can be refined with follow-up questions, and once you are happy with the result, you can export it into Google Docs.

The experience is now live for all Gemini Advanced users on the desktop and mobile web, with plans to roll out to the app in early 2025. To use it, just select "1.5 Pro with Deep Research" from the model toggle picker under the Gemini Advanced logo in the upper left-hand corner.

Gemini Advanced is part of the Google One AI Premium plan, costing users $20 per month. Other perks of the plan include 2TB of storage, Google Photos editing features, 10% back in Google Store rewards, Google Meet premium video calling features, and Gemini for Workspace. Google also offers users a two-month free trial as part of the new plan.

[2]

Gemini's Deep Research Capability Now Rolling Out to Paid Subscribers

The Deep Research capability can be selected from Gemini's model switcher.

Google has expanded Gemini's agentic function, Deep Research, to more than 150 countries and 45 languages. The feature was unveiled alongside the Gemini 2.0 family of AI models earlier this month. However, its capabilities are currently tied to the 1.5 Pro artificial intelligence (AI) model. The tech giant said that Deep Research can create multi-step research plans, run web searches, and prepare a detailed report on complex topics. Currently, Deep Research is only available in the web version of Gemini when accessed from a desktop device.

In a post on X (formerly known as Twitter), the official handle of Google Gemini App announced that the Deep Research feature is now available in more than 150 countries in 45 languages for paid subscribers. Notably, this means Gemini 1.5 Pro with Deep Research will be available everywhere Gemini works. It will be available in Arabic, Bengali, Chinese (Simplified / Traditional), Dutch, English, French, German, Gujarati, Hindi, Italian, Japanese, Korean, Latvian, Malayalam, Norwegian, Portuguese, Russian, Spanish, Swahili, Swedish, Tamil, Urdu, Vietnamese, and other languages.

The Mountain View-based tech giant stated that the Deep Research feature will only be available with the Google One AI Premium Plan subscription, which gives access to Gemini Advanced. In India, a monthly subscription costs Rs. 1,950. The subscription is only available to those above the age of 18. Additionally, the company added that the availability of the feature may vary by device, country, and language.

As explained at the time of launch, Deep Research is an agentic feature. The user only has to add a query that requires technical and niche information or is complex and needs to be broken down into easier parts. Once the prompt is fed, the AI agent creates a multi-step research plan. Then, based on the plan, it finds relevant research papers and articles, looks into recent developments in the field, and more. Based on its learnings, the agent can also run several new web searches to gain a deeper understanding of the topic. Once the agent has enough information, it generates a detailed report. The user has the option to intervene during any step and either edit the research plan or add a follow-up prompt to modify the output.

[3]

Google Gemini -- everything you need to know

Google launched the first Gemini model in December 2023 when its chatbot was still named Bard. Since then, the search giant has gradually adopted the name Gemini for almost everything it does related to AI. The Bard chatbot was the first to fall, becoming simply Gemini earlier this year. This was soon followed by the Gemini Assistant largely replacing the previous assistant on Android. The company also uses Gemini in Docs and for developers. After the initial flurry of activity things seemed to slow down for Google. Rather than a new name, as they'd done previously, the company doubled down on Gemini, adding it to ever more products and services. Then, in December Google released Gemini 2.0. CEO Sundar Pichai described its release as the start of the Agent Era. This is where AI models perform tasks on your behalf based on an initial set of instructions. The Gemini model has been trained not just on text, but as a multimodal model which can process images, video, audio and even computer code. This is similar to OpenAI's GPT-4o and as of Gemini 2 it can also output those modalities. In line with Google's typical mode of operation, the latest version of the model has been quietly developed over the past months and offers some features that more hyped products like ChatGPT have overlooked. For example, there are now over 50,000 variations of Gemini on Hugging Face, covering a multitude of languages and uses. Unfortunately, this variety has generated quite a bit of confusion. The latest flurry of Gemini launches has made things even worse, and so we thought it was time to lay out a clear map of the Gemini universe to make things easier to understand. The first thing to realize is Google likes to mix and match model technology and applications, with variations of the same name. Once you get that clear, everything else starts to slot into place. In the beginning was DeepMind, the AI lab launched in London in 2010. 
This foundation stone of the whole AI industry delivered the LaMDA, PaLM, and Gato AI models to the world. Gemini is the latest iteration of this generational family. Version 1.0 of the Gemini model was launched in three flavors, Ultra, Pro and Nano. As the names suggest, the models ranged from high power down to petite versions designed to run on phones and other small devices. Note that much of the confusion from the subsequent launches has come about because of Google's philosophical tussle between its search and AI businesses. AI cannibalism of search has always been a sword hanging above the company's head, and has contributed mightily to its 'will they, won't they' attitude towards releasing AI products. Gemini 1.5, released ten months ago, was an incremental improvement of the original model, incorporating mixture of experts (MoE) tech, a one million token context window and new architecture. Since that time we've seen the launch of Gemini 1.5 Flash, Gemini 1.5 Pro-002 and Gemini 1.5 Flash-002 - the latter released just three months ago. At the same time the company also made a surprising foray into open model territory, with the launch of the free Gemma product. These 2B and 7B parameter models were seen as a direct response to Meta's release of the Llama model family. Gemma 2.0 was released five months later. Gemini 2.0 launched in December 2024, and is billed as a model for the agentic era. The first version to be released was Gemini 2.0 Flash Experimental, a high performance multimodal model, which supports tool use like Google search, and function calling for code generation. Within weeks the company launched Gemini 2.0 Experimental Advanced, apparently the full version of the current generation. We say apparently because at this point in time nobody's really sure what's full and what's early code. What can be said with certainty is that Gemini 2.0 Flash Experimental is an extremely capable and performant AI model all round. 
Google is both a research and a product company. DeepMind and Google AI lead the research and release the models. The other side of Google takes those models and puts them into products. This includes hardware, software and services. Chatbots lead the charge in terms of Google applications, as they do for so many other foundation model suppliers. Again, this being Google, things get a little bit fuzzy in terms of names and functions. Gemini chatbot. This used to be called Bard, and is completely separate to the Gemini model. Ten months ago Bard and Duet AI, another Google product, were merged together under the Gemini brand with the launch of an Android app. Subsequent to that action, Gemini chat has now been integrated into more Google products, including Android Assistant, the Chrome browser, Google Photos and Google Workspace. At the time of writing the Gemini Chatbot and legacy Android Assistant are offered as dual options on the latest versions of the Android phone operating system. Gemini Live is seen as the Google alternative to OpenAI's low latency, high speed Advanced Voice Mode, and is expected to roll out across Google Pixel smartphones in the near future. While Gemini as a chatbot might get most of the new models and attention from AI aficionados, most of the eyes on AI will be going to Gemini on mobile. This comes in two forms, first through the Gemini App on iPhone and Android, and then through its deep integration into the Android operating system. On Android developers can even use the Gemini Nano model in their own apps without having to use a cloud-based, or costly model to perform basic tasks. The deep integration allows for system functions to be triggered from Gemini, as well as the use of Gemini Live -- the AI voice assistant -- to play songs and more. The latest Gemini model launch has been accompanied by a series of major Google application releases or previews tied into the new model. The list is long and impressive. 
Some of them include:

Outside of the mobile and web-based versions of Gemini, there are some premium and developer-focused products. These usually offer the most advanced models and features, such as Deep Research in Gemini Advanced.

[4]

Gemini 2.0 'Experimental Advanced' is now available to paying subscribers

Google has dropped a preview of what 'might' be Gemini 2.0 Pro inside the web version of its popular chatbot -- dubbed Gemini 2.0 'Experimental Advanced'. The move comes less than a week after the company unveiled the Gemini 2.0 Flash Experimental model for free users of the AI platform. This new release caused quite a stir in the market because of its speed and accuracy. You need to subscribe to the $19.99 a month Gemini Advanced plan to use the new 'Experimental Advanced' model. It isn't clear what improvements it offers over Flash 2.0 but Google says it is better at math and coding. Both products are very much in early development, with reports that they can suffer from unexpected rate limits and other glitches. Even Google admitted that these new models "might not work as expected". It is not clear from the company's press release exactly what the difference is between Gemini 2.0 Experimental Advanced (aka gemini-exp-1206) and Gemini 2.0 Flash Experimental under the skin. The main distinction seems to be that the Flash version is very fast in operation and supports real-time tool use, while the latest experiment does not. However, that is common for experimental releases from all AI companies and it will get those features once it becomes a commercial release. It probably doesn't help that the Google product teams seemed to think it was a good idea to adopt a naming convention that is guaranteed to confuse everyone. There are now so many different varieties of Gemini on the AI menu lists, that we're in danger of losing sight of the fact that this is all one major AI model advance. I don't have Gemini Advanced but I was able to test the new Advanced model in the online Google AI Studio platform and found it offers some impressive features. I was able to upload a video, get the AI to accurately describe the content and write some captions. I also uploaded images and received similarly accurate descriptions of the animals they contained. 
The lack of hallucination was reassuring. There is currently something of a release war going on between the major AI model providers, with both OpenAI and Google releasing products almost every day over the recent weeks. The dust and confusion surrounding these launches is still far from settled, so it's too early to say right now what the eventual result will be, and who will end up the king of the mountain. So far as features go, Google has impressed with its ability to handle video and audio manipulation, as well as real world situations on smartphones using the stunning Gemini vision technology. However OpenAI still retains significant market respect with its o1 and GPT range of AI models, and o1 currently remains the champion of 'reasoning' models across the world. The first quarter of 2025 is likely to be the time when we see these AI goliaths battle it out in earnest, but until then we're all going to have to rely on more than a little conjecture and guesswork.

[5]

Google's experimental Gemini 2.0 Advanced model is here, but not for everyone

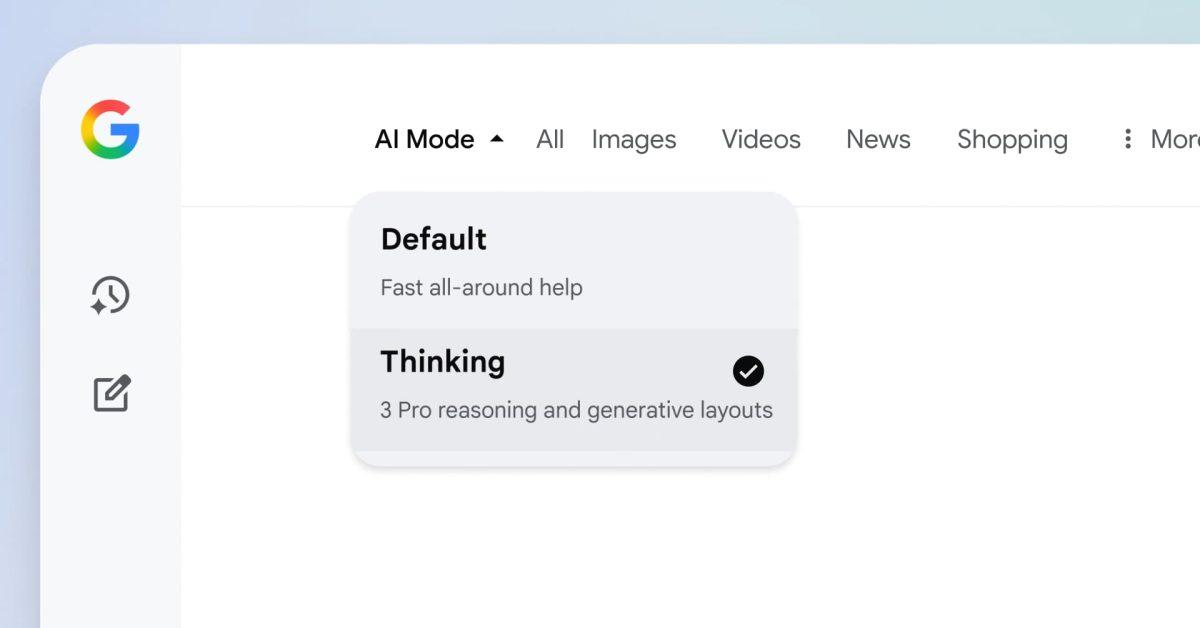

Summary: Google has released an experimental version of Gemini 2.0 Advanced with 'significantly improved performance' in areas like math, coding, reasoning, and following instructions. The new model is exclusively available to Gemini Advanced subscribers. Gemini 2.0 Advanced is currently limited to Gemini on desktop and mobile web, and it should land on the Gemini Android app soon.

Google announced its latest AI breakthrough in the form of Gemini 2.0 last week. For reference, Google released Gemini 1.0 late last year, back when the AI chatbot was still known as Bard. Fast-forward to May this year, and we got access to Gemini 1.5 Pro, featuring a massive 1 million-token context window. The context window was expanded to 2 million tokens later in June, followed by Gemini being updated to Gemini 1.5 Flash (free) in July.

Earlier this week, the tech giant's Gemini 2.0 Flash began rolling out on the web and subsequently made its way to the Gemini Android app, complete with a new drop-down model selector. Now, the top-of-the-line Gemini-Exp-1206, which we'll refer to as 'Gemini 2.0 Advanced' for simplicity's sake, is beginning its web rollout too, which means it should start landing on Android devices late this week or early next.

The rollout was announced by Google in a blog post, highlighting the new model's "significantly improved performance" when it comes to solving math problems, coding, reasoning, and instruction following. However, the tech giant also cautioned users ready to pounce that the new model is still in testing, which means that it will continue to lack access to real-time information and compatibility with some Gemini features until it leaves the early experimental phase.

For what it's worth, the new model, even in its experimental phase, has climbed its way to the #1 spot on the Chatbot Arena LLM Leaderboard, which is essentially an open, community-driven platform for crowdsourced AI benchmarking. Elsewhere, Google has also introduced a new 'FACTS Grounding' benchmark, which essentially evaluates specific models' accuracy and ability to avoid hallucinations. The benchmarking tool is currently topped by Gemini 2.0 Flash and is yet to be updated with data from Gemini 2.0 Advanced.

Exclusive to Gemini Advanced users, at least for now

Unlike Gemini 2.0 Flash, access to the top-of-the-line model is strictly limited to Gemini Advanced, which was handed out for free with the purchase of all new Pixel 9 Pro series devices (including the Pixel 9 Pro Fold). If you don't own a Pixel 9 Pro device, Gemini Advanced is available via the $20/mo Google One AI Premium plan.

If you're already a subscriber, toggling to Gemini 2.0 Advanced is as simple as tapping on the model drop-down on the top left and selecting '2.0 Experimental Advanced.' The new experimental model is available to try out on desktop and mobile web. If it follows Gemini 2.0 Flash's pattern, it should land on the Gemini Android app later this week or early next.

[6]

Gemini Advanced Users Can Now Access a New Gemini 2.0 AI Model

The new model is available on the web version of Gemini. It is listed in the model switcher as Gemini-Exp-1206. Google said the new AI model is available in early preview.

Google is rolling out a new artificial intelligence (AI) model from the Gemini 2.0 family. Dubbed Gemini 2.0 Experimental Advanced, the large language model (LLM) will only be available to the paid subscribers of Gemini. The new model release comes just days after the Mountain View-based tech giant released the Gemini 2.0 Flash model to Gemini's Android app. Notably, the 2.0 Experimental Advanced model can currently only be accessed on the web version of the AI chatbot.

In a blog post, the tech giant announced the release of the second Gemini 2.0 AI model. The official codename of the model is Gemini-Exp-1206 and it can be selected from the model switcher option placed at the top of the chatbot's web interface. Notably, only Gemini Advanced subscribers will be able to select this model currently.

Although Google has just announced the new AI model, the name Gemini-Exp-1206 was first seen last week when the LLM appeared on the Chatbot Arena (formerly known as LMSYS) LLM Leaderboard at the top position with a score of 1374. It currently outranks the latest version of OpenAI's GPT-4o, Gemini 2.0 Flash, and the o1 series models.

Available only on the web version of Gemini, the new AI model is said to offer significant improvements in performance across complex tasks such as coding, mathematics, reasoning, and instruction following. Google said it can provide detailed multi-step instructions for do-it-yourself (DIY) projects, something the previous models have struggled with.

However, the tech giant warns that the 2.0 Experimental Advanced model is available in early preview and may sometimes not work as expected. Additionally, the AI model will currently not have access to real-time information and will be incompatible with some Gemini features. The company did not mention the features that will not work with the new LLM. Notably, Gemini Live is part of the Gemini Advanced subscription. The subscription is available via the Google One AI Premium plan, which costs Rs. 1,950 a month after a one-month free trial.

[7]

You can now try Gemini 2.0 in 'Experimental Advanced' mode if you're a Gemini Advanced subscriber

Gemini 2.0 launched last week with the release of 2.0 Flash Experimental, but now the full version is finally available to the public via the Gemini home page, so long as you are a subscriber to Gemini Advanced, Google's AI subscription service. Both of the Gemini 2.0 LLMs you can choose are still in beta.

The new Gemini 2.0 LLMs available are 2.0 Flash Experimental, the new lightweight LLM designed for everyday help, and 2.0 Experimental Advanced, designed for tackling complex tasks. When Gemini Advanced customers go to the home page, they'll now get the options for 2.0 Flash Experimental and 2.0 Experimental Advanced from the drop-down menu at the top of the screen. Options to use the older 1.5 Pro, 1.5 Flash, and 1.5 Pro with Deep Research still exist.

If you choose either Gemini 2.0 Flash Experimental or Gemini 2.0 Experimental Advanced, you'll continually get warnings that the AI "might not work as expected" before every answer, indicating that this is still very much a beta version. Mobile users of the Gemini app are currently still on the 1.5 Flash LLM.

I've tried the new 2.0 Experimental Advanced and it seems to work as well as the older LLMs for most things, although 2.0 Flash Experimental did keep trying to generate an image of whatever I'd asked it, even though I hadn't asked it for an image. The new Gemini 2.0 LLM is described by Google as having "significantly improved performance on complex tasks such as coding, math, reasoning and instruction following." Gemini Advanced costs $19.99 (£18.99/AU$32.99) per month and comes as part of the Google One AI Premium subscription.

[8]

Gemini App on Android Will Now Let You Switch Between AI Models

Google said Gemini 2.0 Flash outperforms 1.5 Pro in several benchmarks.

Google has started rolling out the new Gemini 2.0 Flash artificial intelligence (AI) model to the Android app of the chatbot. The Mountain View-based tech giant released the first model in the Gemini 2.0 family on December 12. While the model was added to the web version of Gemini on the same day, the mobile apps did not get access to it immediately. However, it has now started rolling out to users, along with a new model switcher feature that lets users pick and choose the AI model.

The next generation of Gemini AI models was announced nine months after the arrival of the Gemini 1.5 series. Google said that the new family of models offers improved capabilities, including native support for image and audio generation. Currently, only the Flash variant is available, which is the series' smallest and fastest model. It is currently available in an experimental preview.

Those on the Google app for Android version 15.50 beta will soon see two changes in the Gemini app. First, the model information added at the top of the screen is now tappable. A downward arrow now appears between 'Gemini' and '1.5 Flash' for free users. This can be used as the model switcher. Gadgets 360 staff members were able to verify the new feature.

The second change is the addition of the new Gemini 2.0 Flash experimental model. Once a user taps on the model switcher, a bottom sheet appears listing the available AI models to choose from. While free users will only see 1.5 Flash and 2.0 Flash, those subscribed to Gemini Advanced will also see the 1.5 Pro model.

Notably, Google had highlighted that Gemini 2.0 Flash is available as an early preview and might not work as expected. Additionally, some Gemini features might not be compatible with the AI model until a full version is released. At launch, Google claimed that Gemini 2.0 Flash outperformed the 1.5 Pro model in several benchmarks during internal testing. Some of the benchmarks include the Massive Multitask Language Understanding (MMLU), Natural2Code, MATH, and Graduate-Level Google-Proof Q&A (GPQA).

[9]

Gemini 2.0 Flash lands on Android with a new model selector in tow

Summary: Google's latest AI model, Gemini 2.0 Flash, is now available on Android devices, offering advanced image and audio capabilities. A new drop-down menu in the Gemini app allows users to easily switch between different AI models, including the experimental Gemini 2.0 Flash. The new model is available without a Gemini Advanced subscription. It is currently rolling out in beta with Google app version 15.50.39.

Last week, Google announced Gemini 2.0, its novel AI model designed with native image and audio abilities in mind. The AI model, which began rolling out on the web version of Gemini on December 11, was slated to arrive on the Gemini mobile app at a later date. Well, it's here now, and it brings a new and convenient model switcher drop-down with it.

If you frequent Gemini on the web, the new model switcher on Android will give you a sense of familiarity, appearing top and center on the mobile app's home screen. For reference, previously, Gemini app users only had the option to switch between Gemini and Gemini Advanced, with more granular model control exclusive to the web app. With the new update, users on Android can toggle between three models, namely Gemini 1.5 Pro, Gemini 1.5 Flash, and the all-new Gemini 2.0 Flash (currently in experimental phase).

It's worth noting that the dropdown with Gemini 2.0 Flash is available across tiers, which means you'll gain access to the new model even if you don't pay for Gemini Advanced. However, unlike what is seen in the screenshot below, free users won't have access to Gemini 1.5 Pro. That shouldn't really make a big difference, considering that Gemini 2.0 Flash already outperforms 1.5 Pro in several key metrics. The only benchmarks where it lags behind are a novel, diagnostic long-context understanding evaluation and automatic speech translation.

You can try out the new model now

The dropdown menu and access to the new model are still not available in the latest Google app stable build (version 15.39.32), which was also highlighted by credible leaker AssembleDebug in their GApps Flags & Leaks Telegram group. This essentially means that you'll have to sideload Google App version 15.50.39 to get early access to the new features.

Alternatively, you can just wait until the new model rolls out in stable. If you do go the sideload route, post-update, you'll likely have to force stop both the Google app and Gemini for the drop-down and new model to show up. Do so by heading into Settings → Apps → See all apps → Google → Force stop (and then repeat for the Gemini app).

Thanks: Moshe

[10]

Updated Gemini app on iOS just ran laps around Apple Intelligence

Three models are available on iOS for Gemini Advanced users, which requires a Google One AI Premium subscription. With the latest 2.0 Flash model still in testing, you'll probably want to stick to one of the other two models for most inquiries. Here's a quick guide to each model:

The update also adds Google Home integration to control your smart home devices. It can also now access your Google Photos directly within the Gemini app, so you can edit your images without having to upload them separately.

[11]

You can now try out the latest Gemini 2.0 Flash model on your phone

This feature gives users more control over their AI experience on their smartphones. Google Gemini is shaping up to be a great AI assistant, especially when you see how little others have progressed. While ChatGPT has an edge in a few areas, Gemini's deeper integration within Android flagships and tie-in with Google Search makes it almost second nature. Google recently announced the new Gemini 2.0 Flash model, which is said to outperform 1.5 Pro on key benchmarks. You can try this out on the web, but if you want to try it on your phone, you can now do so with the latest Google app beta.

Google has released an experimental version of Gemini 2.0 Advanced, offering improved performance in math, coding, and reasoning. The new model is available to Gemini Advanced subscribers and represents a significant step in AI development.

Google Unveils Experimental Gemini 2.0 Advanced

Google has taken a significant leap in artificial intelligence with the release of its experimental Gemini 2.0 Advanced model. This latest iteration of the Gemini AI family promises enhanced capabilities and performance across various domains, marking a new chapter in Google's AI development [1][2].

Key Features and Improvements

Gemini 2.0 Advanced boasts "significantly improved performance" in critical areas such as mathematics, coding, reasoning, and following instructions [5]. This experimental version builds upon the foundation laid by its predecessors, including Gemini 1.0 and 1.5, which were introduced over the past year [5].

The new model has already made waves in the AI community, claiming the top spot on the Chatbot Arena LLM Leaderboard, a community-driven platform for AI benchmarking [5]. Additionally, Google has introduced a new 'FACTS Grounding' benchmark to evaluate model accuracy and reduce hallucinations, further demonstrating its commitment to AI reliability [5].

Availability and Access

Currently, Gemini 2.0 Advanced is exclusively available to Gemini Advanced subscribers, who can access it through the $20/month Google One AI Premium plan [5]. Pixel 9 Pro series device owners receive complimentary access to Gemini Advanced [5]. The model is accessible on desktop and mobile web platforms, with an Android app release expected soon [5].

Multimodal Capabilities

One of the standout features of the Gemini model family is its multimodal training, allowing it to process and generate various types of content including text, images, video, audio, and even computer code [3]. This versatility positions Gemini as a powerful tool for a wide range of applications.

Deep Research and Web Browsing

Google has also introduced "Deep Research," an agentic feature for Gemini Advanced that can conduct thorough research on behalf of users [1]. This feature creates multi-step research plans, browses the web, and compiles comprehensive reports with source links, mimicking human research processes [1][2].

Global Expansion

The Deep Research capability has been expanded to more than 150 countries and is available in 45 languages, making it accessible to a global audience [2]. This expansion underscores Google's commitment to making advanced AI tools widely available.

Ongoing Development and Caution

While the release of Gemini 2.0 Advanced is exciting, Google has cautioned users that the model is still in testing. As a result, it currently lacks access to real-time information and compatibility with some Gemini features [5]. This transparency highlights the ongoing nature of AI development and the need for continued refinement.

Competition in the AI Landscape

The release of Gemini 2.0 Advanced comes amidst intense competition in the AI sector, with companies like OpenAI also frequently releasing new products and updates [4]. This rapid pace of innovation underscores the dynamic nature of the AI industry and the race to develop more capable and reliable AI models.

As the AI landscape continues to evolve, Gemini 2.0 Advanced represents a significant step forward in Google's AI capabilities. Its improved performance, multimodal abilities, and expanding global reach position it as a formidable player in the ongoing development of artificial intelligence technologies.

Summarized by Navi
