Google Unveils Ironwood: A Powerful AI Chip Focused on Inference

19 Sources

19 Sources

[1]

Google unveils Ironwood, its most powerful AI processor yet

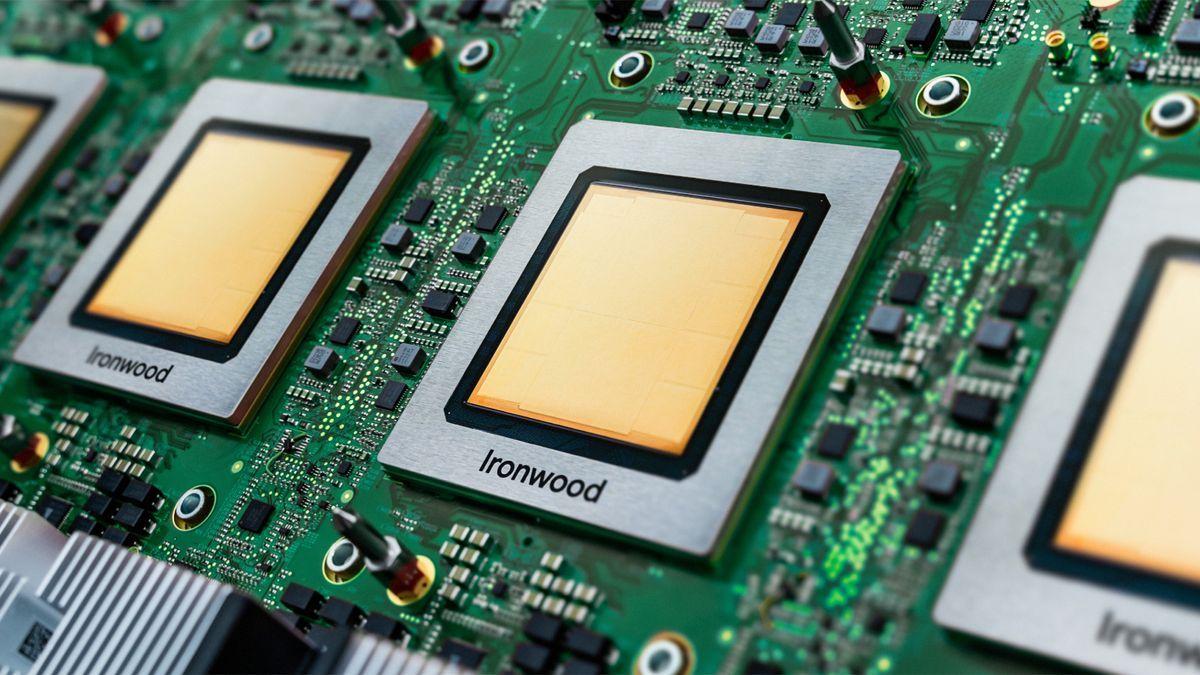

Google has unveiled a new AI processor, the seventh generation of its custom TPU architecture. The chip, known as Ironwood, was reportedly designed for the emerging needs of Google's most powerful Gemini models, like simulated reasoning, which Google prefers to call "thinking." The company claims this chip represents a major shift that will unlock more powerful agentic AI capabilities. Google calls this the "age of inference." Whenever Google talks about the capabilities of a new Gemini version, it notes that the model's capabilities are tied not only to the code but to Google's infrastructure. Its custom AI hardware is a key element of accelerating inference and expanding context windows. With Ironwood, Google says it has its most scalable and powerful TPU yet, which will allow AI to act on behalf of a user to proactively gather data and generate outputs. This is what Google means when it talks about agentic AI. Ironwood delivers higher throughput compared to previous Google Tensor Processing Units (TPUs), and Google really plans to pack these chips in. Ironwood is designed to operate in clusters of up to 9,216 liquid-cooled chips, which will communicate directly with each other through a newly enhanced Inter-Chip Interconnect (ICI). Google says this design will be a boon not only for its own Gemini models but also to developers looking to run AI projects in the cloud. Developers will be able to leverage Ironwood in two different configurations: a 256-chip server or the full-size 9,216-chip cluster.

[2]

Ironwood is Google's newest AI accelerator chip | TechCrunch

During its Cloud Next conference this week, Google unveiled the latest generation of its TPU AI accelerator chip. The new chip, called Ironwood, is Google's seventh-generation TPU and is the first optimized for inference -- that is, running AI models. Scheduled to launch sometime later this year for Google Cloud customers, Ironwood will come in two configurations: a 256-chip cluster and a 9,216-chip cluster. "Ironwood is our most powerful, capable, and energy-efficient TPU yet," Google Cloud VP Amin Vahdat wrote in a blog post provided to TechCrunch. "And it's purpose-built to power thinking, inferential AI models at scale." Ironwood arrives as competition in the AI accelerator space heats up. Nvidia may have the lead, but tech giants including Amazon and Microsoft are pushing their own in-house solutions. Amazon has its Trainium, Inferentia, and Graviton processors, available through AWS, and Microsoft hosts Azure instances for its Cobalt 100 AI chip. Ironwood can deliver 4,614 TFLOPs of computing power at peak, according to Google's internal benchmarking. Each chip has 192GB of dedicated RAM with bandwidth approaching 7.4 Tbps. Ironwood has an enhanced specialized core, SparseCore, for processing the types of data common in "advanced ranking" and "recommendation" workloads (e.g. an algorithm that suggests apparel you might like). The TPU's architecture was designed to minimize data movement and latency on-chip, resulting in power savings, Google says. Google plans to integrate Ironwood with its AI Hypercomputer, a modular computing cluster in Google Cloud, in the near future, Vahdat added. "Ironwood represents a unique breakthrough in the age of inference," Vahdat said, "with increased computation power, memory capacity, [...] networking advancements, and reliability."

[3]

Google's latest chip is all about reducing one huge hidden cost in AI

During its Google Cloud Next 25 event Wednesday, the search giant unveiled the latest version of its Tensor Processing Unit (TPU), the custom chip built to run artificial intelligence -- with a twist. Also: Why Google Code Assist may finally be the programming power tool you need For the first time, Google is positioning the chip for inference, the making of predictions for live requests from millions or even billions of users, as opposed to training, the development of neural networks carried out by teams of AI specialists and data scientists. The Ironwood TPU, as the new chip is called, arrives at an economic inflection point in AI. The industry clearly expects AI moving forward to be less about science projects and more about the actual use of AI models by companies. And the rise of DeepSeek AI has focused Wall Street more than ever on the enormous cost of building AI for Google and its competitors. The rise of "reasoning" AI models, such as Google's Gemini, which dramatically increases the number of statements generated by a large language model, creates a sudden surge in the total computing needed to make predictions. As Google put it in describing Ironwood, "reasoning and multi-step inference is shifting the incremental demand for compute -- and therefore cost -- from training to inference time (test-time scaling)." Also: Think DeepSeek has cut AI spending? Think again Thus, Ironwood is a statement by Google that its focus on performance and efficiency is shifting to reflect the rising cost of inference versus the research domain of training. Google has been developing its TPU family of chips for over a decade through six prior generations. However, training chips are generally considered a much lower-volume chip market than inference. That is because training demands rise only as each new, gigantic GenAI research project is inaugurated, which is generally once a year or so. In contrast, inference is expected to meet the needs of thousands or millions of customers who want day-to-day predictions from the trained neural network. Inference is considered a high-volume market in the chip world. Google had previously made the case that the sixth-generation Trillium TPU, introduced last year, which became generally available in December, could serve as both a training and an inference chip in one part, emphasizing its ability to speed up the serving of predictions. In fact, as far back as the TPU version two, in 2017, Google had talked of a combined ability for training and inference. Also: Google reveals new Kubernetes and GKE enhancements for AI innovation The positioning of Ironwood as mainly an inference chip, first and foremost, is a departure. It's a shift that may also mark a change in Google's willingness to rely on Intel, Advanced Micro Devices, and Nvidia as the workhorse of its AI computing fleet. In the past, Google had described the TPU as a necessary investment to achieve cutting-edge research results but not an alternative to its vendors. In Google's cloud computing operations, based on the number of "instances" run by customers, Intel, AMD, and Nvidia chips make up a combined 99% of processors used, versus less than a percent for the TPU, according to research by KeyBanc Capital Markets. That reliance on three dominant vendors has economic implications for Google and the other giants, Microsoft and Amazon. Also: 10 key reasons AI went mainstream overnight - and what happens next Wall Street stock analysts, who compile measures of Google's individual lines of business, have, from time to time, calculated the economic value of the TPU. For example, in January, stock analyst Gil Luria of the boutique research firm DA Davidson wrote that "Google would have generated as much as $24 billion of revenue last year if it was selling TPUs as hardware to NVDA [Nvidia] customers," meaning in competition with Nvidia. Conversely, at a time when the cost of AI escalates into multi-hundred-billion-dollar projects such as Stargate, Wall Street analysts believe that Google's TPU could offer the company a way to save money on the cost of AI infrastructure. Also: DeepSeek's new open-source AI model can outperform o1 for a fraction of the cost Although Google has paid chip-maker Broadcom to help it take each new TPU into commercial production, Google might still save money using more TPUs versus the price it has to pay to Intel, AMD, and Nvidia to consume ever-larger fleets of chips for inference. To make the case for Ironwood, Google on Wednesday emphasized the technical advances of Ironwood versus Trillium. Google said Ironwood gets twice the "performance per watt" of Trillium, as measured by 29.3 trillion floating-point math operations per second. The Ironwood part has 192GB of DRAM memory, dubbed HBM, or high-bandwidth memory, six times as much as Trillium. The memory bandwidth transmitted is 4.5 times as much, 7.2 terabits per second. Also: Nvidia dominates in gen AI benchmarks, clobbering 2 rival AI chips Google said those enhancements are supposed to support much greater movement of data in and out of the chip and between systems. "Ironwood is designed to minimize data movement and latency on chip while carrying out massive tensor manipulations," said Google. The memory and bandwidth advances are all part of Google's emphasis on "scaling" its AI infrastructure. The meaning of scaling is to be able to fully use each chip when grouping together hundreds or thousands of chips to work on a problem in parallel. More chips dedicated to the same problem should lead to a concomitant speed-up in performance. Again, scaling has an economic component. By effectively grouping chips, the TPUs can achieve greater "utilization," the amount of a given resource that is actually used versus the amount being left idle. Successful scaling means higher utilization of chips, which is good because it means less waste of an expensive resource. Also: 5 reasons why Google's Trillium could transform AI and cloud computing - and 2 obstacles That's why, in the past, Google has emphasized Trillium's ability to "scale to hundreds of thousands of chips" in a collection of machines. While Google didn't give explicit details on Ironwood's scaling performance on inference tasks, it once again emphasized on Wednesday the ability of "hundreds of thousands of Ironwood chips to be composed together to rapidly advance the frontiers of GenAI computation." Also: Intel's new CEO vows to run chipmaker 'as a startup, on day one' Google's announcement came with a significant software announcement as well, Pathways on Cloud. The Pathways software is code that distributes parts of the AI computing work to different computers. It had been used internally by Google and is now being made available to the public. Get the morning's top stories in your inbox each day with our Tech Today newsletter.

[4]

Google's 'Ironwood' TPU aims squarely at the high cost of inference

During its Google Cloud Next 25 event Wednesday, the search giant unveiled the latest version of its Tensor Processing Unit (TPU), the custom chip built to run artificial intelligence--with a twist. For the first time, Google is positioning the chip for inference, the making of predictions for live requests to millions or even billions of users, as opposed to training, the development of neural networks carried out by teams of AI specialists and data scientists. Also: Why Google Code Assist may finally be the programming power tool you need The Ironwood TPU, as the new chip is called, arrives at an economic inflection point in AI. The industry clearly expects AI moving forward to be less about science projects and more about the actual use of AI models by companies. And the rise of DeepSeek AI has focused Wall Street more than ever on the enormous cost of building AI for Google and its competitors. The rise of "reasoning" AI models, such as Google's Gemini, which dramatically increases the number of statements generated by a large language model, creates a sudden surge in the total computing needed to make predictions. As Google put it in describing Ironwood, "reasoning and multi-step inference is shifting the incremental demand for compute --and therefore cost--from training to inference time (test-time scaling.) Thus, Ironwood is a statement by Google that its focus on performance and efficiency is shifting to reflect the rising cost of inference versus the research domain of training. Also: Think DeepSeek has cut AI spending? Think again Google has been developing its TPU family of chips for over a decade through six prior generations. However, training chips are generally considered a much lower-volume chip market than inference. That is because training demands rise only as each new, gigantic GenAI research project is inaugurated, which is generally once a year or so. In contrast, inference is expected to meet the needs of thousands or millions of customers who want day-to-day predictions from the trained neural network. Inference is considered a high-volume market in the chip world. Google had previously made the case that the sixth-generation Trillium TPU, introduced last year, which became generally available in December, could serve as both a training and an inference chip in one part, emphasizing its ability to speed up the serving of predictions. In fact, as far back as the TPU version two, in 2017, Google had talked of a combined ability for training and inference. The positioning of Ironwood as mainly an inference chip, first and foremost, is a departure. Also: Google reveals new Kubernetes and GKE enhancements for AI innovation It's a shift that may also mark a change in Google's willingness to rely on Intel, Advanced Micro Devices, and Nvidia as the workhorse of its AI computing fleet. In the past, Google had described the TPU as a necessary investment to achieve cutting-edge research results but not an alternative to its vendors. In Google's cloud computing operations, based on the number of "instances" run by customers, Intel, AMD and Nvidia chips make up a combined 99% of processors used, versus less than a percent for the TPU, according to research by KeyBanc Capital Markets. That reliance on three dominant vendors has economic implications for Google and the other giants, Microsoft and Amazon. Wall Street stock analysts, who compile measures of Google's individual lines of business, have, from time to time, calculated the economic value of the TPU. For example, in January, stock analyst Gil Luria of the boutique research firm DA Davidson wrote that "Google would have generated as much as $24 billion of revenue last year if it was selling TPUs as hardware to NVDA [Nvidia] customers," meaning in competition with Nvidia. Conversely, at a time when the cost of AI escalates into multi-hundred-billion-dollar projects such as Stargate, Wall Street analysts believe that Google's TPU could offer the company a way to save money on the cost of AI infrastructure. Although Google has paid chip-maker Broadcom to help it take each new TPU into commercial production, Google might still save money using more TPUs versus the price it has to pay to Intel, AMD, and Nvidia to consumer ever-larger fleets of chips for inference. Also: DeepSeek's new open-source AI model can outperform o1 for a fraction of the cost To make the case for Ironwood, Google on Wednesday emphasized the technical advances of Ironwood versus Trillium. Google said Ironwood gets twice the "performance per watt" of Trillium, as measured by 29.3 trillion floating-point math operations per second. The Ironwood part has 192 gigabytes of DRAM memory, dubbed HBM, or high-bandwidth memory, six times as much as Trillium. The memory bandwidth transmitted is 4.5 times as much, 7.2 terabits per second. Google said those enhancements are supposed to support much greater movement of data in and out of the chip and between systems. "Ironwood is designed to minimize data movement and latency on chip while carrying out massive tensor manipulations," said Google. The memory and bandwidth advances are all part of Google's emphasis on "scaling" its AI infrastructure. The meaning of scaling is to be able to fully use each chip when grouping together hundreds or thousands of chips to work on a problem in parallel. More chips dedicated to the same problem should lead to a concomitant speed-up in performance. Again, scaling has an economic component. By effectively grouping chips, the TPUs can achieve greater "utilization," the amount of a given resource that is actually used versus the amount being left idle. Successful scaling means higher utilization of chips, which is good because it means less waste of an expensive resource. Also: 5 reasons why Google's Trillium could transform AI and cloud computing - and 2 obstacles That's why, in the past, Google has emphasized Trillium's ability to "scale to hundreds of thousands of chips" in a collection of machines. While Google didn't give explicit details on Ironwood's scaling performance on inference tasks, it once again emphasized on Wednesday the ability of "hundreds of thousands of Ironwood chips to be composed together to rapidly advance the frontiers of GenAI computation." Google's announcement came with a significant software announcement as well, Pathways on Cloud. The Pathways software is code that distributes parts of the AI computing work to different computers. It had been used internally by Google and is now being made available to the public.

[5]

Google launches new Ironwood chip to speed AI applications

SAN FRANCISCO, April 9 (Reuters) - Alphabet's (GOOGL.O), opens new tab on Wednesday unveiled its seventh-generation artificial intelligence chip named Ironwood, which the company said is designed to speed the performance of AI applications. The Ironwood processor is geared toward the type of data crunching needed when users query software such as OpenAI's ChatGPT. Known in the tech industry as "inference" computing, the chips perform rapid calculations to render answers in a chatbot or generate other types of responses. The search giant's multi-billion dollar, roughly decade-long effort represents one of the few viable alternative chips to Nvidia's (NVDA.O), opens new tab powerful AI processors. Google's tensor processing units (TPUs) can only be used by the company's own engineers or through its cloud service and have given its internal AI effort an edge over some rivals. For at least one generation Google split its TPU family of chips into a version that's tuned for building large AI models from scratch. Its engineers have made a second line of chips that strips out some of the model building features in favor of a chip that shaves costs of running AI applications. The Ironwood chip is a model designed for running AI applications, or inference, and is designed to work in groups of as many as 9,216 chips, said Amind Vahdat, a Google vice president. The new chip, unveiled at a cloud conference, brings functions from earlier split designs together and increases the available memory, which makes it better suited for serving AI applications. "It's just that the relative importance of inference is going up significantly," Vahdat said. The Ironwood chips boast double the performance for the amount of energy needed compared with Google's Trillium chip it announced last year, Vahdat said. The company builds and deploys its Gemini AI models with its own chips. The company did not disclose which chip manufacturer is producing the Google design. Reporting by Max A. Cherney in San Francisco; Editing by Sonali Paul Our Standards: The Thomson Reuters Trust Principles., opens new tab Suggested Topics:Disrupted Max A. Cherney Thomson Reuters Max A. Cherney is a correspondent for Reuters based in San Francisco, where he reports on the semiconductor industry and artificial intelligence. He joined Reuters in 2023 and has previously worked for Barron's magazine and its sister publication, MarketWatch. Cherney graduated from Trent University with a degree in history.

[6]

Ironwood: Google's new AI chip is 24x faster than top supercomputers

Meet the chip that's 24 times faster than the world's fastest super computer. AI is the latest hot technology taking over the world with a storm. That being said, it's natural to see the largest of tech giants tinkering around with this technology and uncovering new inventions as the AI era takes shape. The latest amongst these tech giants is Google, who unveiled its most efficient Tensor Processing Unit(TPU) called Ironwood, designed specifically for AI models. This TPU is built to help AI models work faster and smarter, especially when it's working on tasks that require reasoning or making predictions. This particular activity is known as 'inferencing.' Ironwood is specifically built for such tasks, unlike its predecessors that were designed for training AI models from scratch.

[7]

Google announces 7th-gen Ironwood TPU, Lyria text-to-music model, more

In addition to the latest in Workspace at Cloud Next 2025, Google today announced Ironwood, its 7th-generation Tensor Processing Unit (TPU) and the latest generative models. The Ironwood TPU is Google's "most performant and scalable custom AI accelerator to date," as well as energy efficient, and the "first designed specifically for inference." Specifically: Ironwood represents a significant shift in the development of AI and the infrastructure that powers its progress. It's a move from responsive AI models that provide real-time information for people to interpret, to models that provide the proactive generation of insights and interpretation. This is what we call the "age of inference" where AI agents will proactively retrieve and generate data to collaboratively deliver insights and answers, not just data. Ironwood is designed to manage the demands of thinking models, which "encompass Large Language Models (LLMs), Mixture of Experts (MoEs) and advanced reasoning tasks," that require "massive" parallel processing and efficient memory access. The latter is achieved by minimizing "data movement and latency on chip while carrying out massive tensor manipulations." At the frontier, the computation demands of thinking models extend well beyond the capacity of any single chip. We designed Ironwood TPUs with a low-latency, high bandwidth ICI network to support coordinated, synchronous communication at full TPU pod scale. Google Cloud customers can access a 256 or 9,216-chip -- each individual chip offers peak compute of 4,614 TFLOPs -- configuration. The latter is a pod that has a total of 42.5 Exaflops or: "more than 24x the computer power of the world's largest supercomputer - El Capitan - which offers just 1.7 Exaflops per pod." Ironwood offers performance per watt that is 2x relative to the 6th-gen Trillium announced in 2024, as well as 192 GB of High Bandwidth Memory per chip (6x Trillium). Pathways is Google's distributed runtime that powers internal large-scale training and inference infrastructure. It's now available for Google Cloud customers. Gemini 2.5 Flash is Google's "workhorse model" where low latency and cost are prioritized. Coming soon to Vertex AI, it features "dynamic and controllable reasoning." The model automatically adjusts processing time ('thinking budget') based on query complexity, enabling faster answers for simple requests. You also gain granular control over this budget, allowing explicit tuning of the speed, accuracy, and cost balance for your specific needs. This flexibility is key to optimizing Flash performance in high-volume, cost-sensitive applications Example high-volume use cases include customer service and real-time information processing. Google is now making its Lyria text-to-music model available for enterprise customers "in preview with allowlist" on Vertex AI. This model can generate high-fidelity audio across a range of genres. Companies can use it to quickly create soundtracks that are tailored to a "brand's unique identity." Another use is for video production and podcasting: Lyria eliminates these hurdles, allowing you to generate custom music tracks in minutes, directly aligning with your content's mood, pacing, and narrative. This can help accelerate production workflows and reduce licensing costs. The following is an example prompt: "Craft a high-octane bebop tune. Prioritize dizzying saxophone and trumpet solos, trading complex phrases at lightning speed. The piano should provide percussive, chordal accompaniment, with walking bass and rapid-fire drums driving the frenetic energy. The tone should be exhilarating, and intense. Capture the feeling of a late-night, smoky jazz club, showcasing virtuosity and improvisation. The listener should not be able to sit still." Meanwhile, Veo 2 is getting editing capabilities that let you alter existing footage: Similarly, Imagen 3 Editing features improvements to inpainting "for reconstructing missing or damaged portions of an image," as well as objects removal. Chirp 3 is Google's audio understanding and generation model. It offers "HD voices" with natural and realistic speech in 35+ languages with eight speaker options. The understanding aspect powers a new feature that "accurately separates and identifies individual speakers in multi-speaker recordings" for better transcription. Another new feature lets Chirp 3 "generate realistic custom voices from 10 seconds of audio input." This enables enterprises to personalize call centers, develop accessible content, and establish unique brand voices -- all while maintaining a consistent brand identity. To ensure responsible use, Instant Custom Voice includes built-in safety features, and our allowlisting process involves rigorous diligence to verify proper voice usage permissions. On the safety front, "DeepMind's SynthID embeds invisible watermarks into every image, video and audio frame that Imagen, Veo, and Lyria produce."

[8]

Google's new Ironwood chip is 24x more powerful than the world's fastest supercomputer

Join our daily and weekly newsletters for the latest updates and exclusive content on industry-leading AI coverage. Learn More Google Cloud unveiled its seventh-generation Tensor Processing Unit (TPU) called Ironwood on Wednesday, a custom AI accelerator that the company claims delivers more than 24 times the computing power of the world's fastest supercomputer when deployed at scale. The new chip, announced at Google Cloud Next '25, represents a significant pivot in Google's decade-long AI chip development strategy. While previous generations of TPUs were designed primarily for both training and inference workloads, Ironwood is the first purpose-built specifically for inference -- the process of deploying trained AI models to make predictions or generate responses. "Ironwood is built to support this next phase of generative AI and its tremendous computational and communication requirements," said Amin Vahdat, Google's Vice President and General Manager of ML, Systems, and Cloud AI, in a virtual press conference ahead of the event. "This is what we call the 'age of inference' where AI agents will proactively retrieve and generate data to collaboratively deliver insights and answers, not just data." Shattering computational barriers: Inside Ironwood's 42.5 exaflops of AI muscle The technical specifications of Ironwood are striking. When scaled to 9,216 chips per pod, Ironwood delivers 42.5 exaflops of computing power -- dwarfing El Capitan's 1.7 exaflops, currently the world's fastest supercomputer. Each individual Ironwood chip delivers peak compute of 4,614 teraflops. Ironwood also features significant memory and bandwidth improvements. Each chip comes with 192GB of High Bandwidth Memory (HBM), six times more than Trillium, Google's previous-generation TPU announced last year. Memory bandwidth reaches 7.2 terabits per second per chip, a 4.5x improvement over Trillium. Perhaps most importantly in an era of power-constrained data centers, Ironwood delivers twice the performance per watt compared to Trillium, and is nearly 30 times more power efficient than Google's first Cloud TPU from 2018. "At a time when available power is one of the constraints for delivering AI capabilities, we deliver significantly more capacity per watt for customer workloads," Vahdat explained. From model building to 'thinking machines': Why Google's inference focus matters now The emphasis on inference rather than training represents a significant inflection point in the AI timeline. For years, the industry has been fixated on building increasingly massive foundation models, with companies competing primarily on parameter size and training capabilities. Google's pivot to inference optimization suggests we're entering a new phase where deployment efficiency and reasoning capabilities take center stage. This transition makes sense. Training happens once, but inference operations occur billions of times daily as users interact with AI systems. The economics of AI are increasingly tied to inference costs, especially as models grow more complex and computationally intensive. During the press conference, Vahdat revealed that Google has observed a 10x year-over-year increase in demand for AI compute over the past eight years -- a staggering factor of 100 million overall. No amount of Moore's Law progression could satisfy this growth curve without specialized architectures like Ironwood. What's particularly notable is the focus on "thinking models" that perform complex reasoning tasks rather than simple pattern recognition. This suggests Google sees the future of AI not just in larger models, but in models that can break down problems, reason through multiple steps, and essentially simulate human-like thought processes. Gemini's thinking engine: How Google's next-gen models leverage advanced hardware Google is positioning Ironwood as the foundation for its most advanced AI models, including Gemini 2.5, which the company describes as having "thinking capabilities natively built in." At the conference, Google also announced Gemini 2.5 Flash, a more cost-effective version of its flagship model that "adjusts the depth of reasoning based on a prompt's complexity." While Gemini 2.5 Pro is designed for complex use cases like drug discovery and financial modeling, Gemini 2.5 Flash is positioned for everyday applications where responsiveness is critical. The company also demonstrated its full suite of generative media models, including text-to-image, text-to-video, and a newly announced text-to-music capability called Lyria. A demonstration showed how these tools could be used together to create a complete promotional video for a concert. Beyond silicon: Google's comprehensive infrastructure strategy includes network and software Ironwood is just one part of Google's broader AI infrastructure strategy. The company also announced Cloud WAN, a managed wide-area network service that gives businesses access to Google's planet-scale private network infrastructure. "Cloud WAN is a fully managed, viable and secure enterprise networking backbone that provides up to 40% improved network performance, while also reducing total cost of ownership by that same 40%," Vahdat said. Google is also expanding its software offerings for AI workloads, including Pathways, its machine learning runtime developed by Google DeepMind. Pathways on Google Cloud allows customers to scale out model serving across hundreds of TPUs. AI economics: How Google's $12 billion cloud business plans to win the efficiency war These hardware and software announcements come at a crucial time for Google Cloud, which reported $12 billion in Q4 2024 revenue, up 30% year over year, in its latest earnings report. The economics of AI deployment are increasingly becoming a differentiating factor in the cloud wars. Google faces intense competition from Microsoft Azure, which has leveraged its OpenAI partnership into a formidable market position, and Amazon Web Services, which continues to expand its Trainium and Inferentia chip offerings. What separates Google's approach is its vertical integration. While rivals have partnerships with chip manufacturers or acquired startups, Google has been developing TPUs in-house for over a decade. This gives the company unparalleled control over its AI stack, from silicon to software to services. By bringing this technology to enterprise customers, Google is betting that its hard-won experience building chips for Search, Gmail, and YouTube will translate into competitive advantages in the enterprise market. The strategy is clear: offer the same infrastructure that powers Google's own AI, at scale, to anyone willing to pay for it. The multi-agent ecosystem: Google's audacious plan for AI systems that work together Beyond hardware, Google outlined a vision for AI centered around multi-agent systems. The company announced an Agent Development Kit (ADK) that allows developers to build systems where multiple AI agents can work together. Perhaps most significantly, Google announced an "agent-to-agent interoperability protocol" (A2A) that enables AI agents built on different frameworks and by different vendors to communicate with each other. "2025 will be a transition year where generative AI shifts from answering single questions to solving complex problems through agented systems," Vahdat predicted. Google is partnering with more than 50 industry leaders, including Salesforce, ServiceNow, and SAP, to advance this interoperability standard. Enterprise reality check: What Ironwood's power and efficiency mean for your AI strategy For enterprises deploying AI, these announcements could significantly reduce the cost and complexity of running sophisticated AI models. Ironwood's improved efficiency could make running advanced reasoning models more economical, while the agent interoperability protocol could help businesses avoid vendor lock-in. The real-world impact of these advancements shouldn't be underestimated. Many organizations have been reluctant to deploy advanced AI models due to prohibitive infrastructure costs and energy consumption. If Google can deliver on its performance-per-watt promises, we could see a new wave of AI adoption in industries that have thus far remained on the sidelines. The multi-agent approach is equally significant for enterprises overwhelmed by the complexity of deploying AI across different systems and vendors. By standardizing how AI systems communicate, Google is attempting to break down the silos that have limited AI's enterprise impact. During the press conference, Google emphasized that over 400 customer stories would be shared at Next '25, showcasing real business impact from its AI innovations. The silicon arms race: Will Google's custom chips and open standards reshape AI's future? As AI continues to advance, the infrastructure powering it will become increasingly critical. Google's investments in specialized hardware like Ironwood, combined with its agent interoperability initiatives, suggest the company is positioning itself for a future where AI becomes more distributed, more complex, and more deeply integrated into business operations. "Leading thinking models like Gemini 2.5 and the Nobel Prize winning AlphaFold all run on TPUs today," Vahdat noted. "With Ironwood we can't wait to see what AI breakthroughs are sparked by our own developers and Google Cloud customers when it becomes available later this year." The strategic implications extend beyond Google's own business. By pushing for open standards in agent communication while maintaining proprietary advantages in hardware, Google is attempting a delicate balancing act. The company wants the broader ecosystem to flourish (with Google infrastructure underneath), while still maintaining competitive differentiation. How quickly competitors respond to Google's hardware advancements and whether the industry coalesces around the proposed agent interoperability standards will be key factors to watch in the months ahead. If history is any guide, we can expect Microsoft and Amazon to counter with their own inference optimization strategies, potentially setting up a three-way race to build the most efficient AI infrastructure stack.

[9]

Google Cloud Next '25: New AI chips and agent ecosystem challenge Microsoft and Amazon

Join our daily and weekly newsletters for the latest updates and exclusive content on industry-leading AI coverage. Learn More Google Cloud is making an aggressive play to solidify its position in the increasingly competitive artificial intelligence landscape, announcing a sweeping array of new technologies focused on "thinking models," agent ecosystems, and specialized infrastructure designed specifically for large-scale AI deployments. At its annual Cloud Next conference in Las Vegas today, Google revealed its seventh-generation Tensor Processing Unit (TPU) called Ironwood, which the company claims delivers more than 42 exaflops of computing power per pod -- a staggering 24 times more powerful than the world's leading supercomputer, El Capitan. "The opportunity with AI is as big as it gets," said Amin Vahdat, Google's vice president and general manager of ML systems and cloud AI, during a press conference ahead of the event. "Together with our customers, we're powering a new golden age of innovation." The conference comes at a pivotal moment for Google, which has seen considerable momentum in its cloud business. In January, the company reported that its Q4 2024 cloud revenue reached $12 billion, a 30% increase year over year. Google executives say active users in AI Studio and the Gemini API have increased by 80% in just the past month. How Google's new Ironwood TPUs are transforming AI computing with power efficiency Google is positioning itself as the only major cloud provider with a "fully AI-optimized platform" built from the ground up for what it calls "the age of inference" -- where the focus shifts from model training to actually using AI systems to solve real-world problems. The star of Google's infrastructure announcements is Ironwood, which represents a fundamental shift in chip design philosophy. Unlike previous generations that balanced training and inference, Ironwood was built specifically to run complex AI models after they've been trained. "It's no longer about the data put into the model, but what the model can do with data after it's been trained," Vahdat explained. Each Ironwood pod contains more than 9,000 chips and delivers two times better power efficiency than the previous generation. This focus on efficiency addresses one of the most pressing concerns about generative AI: its enormous energy consumption. In addition to the new chips, Google is opening up its massive global network infrastructure to enterprise customers through Cloud WAN (Wide Area Network). This service makes Google's 2-million-mile fiber network -- the same one that powers consumer services like YouTube and Gmail -- available to businesses. According to Google, Cloud WAN improves network performance by up to 40% while simultaneously reducing total cost of ownership by the same percentage compared to customer-managed networks. This represents an unusual step for a hyperscaler, essentially turning its internal infrastructure into a product. Inside Gemini 2.5: How Google's 'thinking models' improve enterprise AI applications On the software side, Google is expanding its Gemini model family with Gemini 2.5 Flash, a cost-effective version of its flagship AI system that includes what the company describes as "thinking capabilities." Unlike traditional large language models that generate responses directly, these "thinking models" break down complex problems through multi-step reasoning and even self-reflection. Gemini 2.5 Pro, which launched two weeks ago, is positioned for high-complexity use cases like drug discovery and financial modeling, while the newly announced Flash variant adjusts its reasoning depth based on prompt complexity to balance performance and cost. Google is also significantly expanding its generative media capabilities with updates to Imagen (for image generation), Veo (video), Chirp (audio), and the introduction of Lyria, a text-to-music model. During a demonstration during the press conference, Nenshad Bardoliwalla, Director of Product Management for Vertex AI, showed how these tools could work together to create a promotional video for a concert, complete with custom music and sophisticated editing capabilities like removing unwanted elements from video clips. "Only Vertex AI brings together all of these models, along with third-party models onto a single platform," Bardoliwalla said. Beyond single AI systems: How Google's multi-agent ecosystem aims to enhance enterprise workflows Perhaps the most forward-looking announcements focused on creating what Google calls a "multi-agent ecosystem" -- an environment where multiple AI systems can work together across different platforms and vendors. Google is introducing an Agent Development Kit (ADK) that allows developers to build multi-agent systems with less than 100 lines of code. The company is also proposing a new open protocol called Agent2Agent (A2A) that would allow AI agents from different vendors to communicate with each other. "2025 will be a transition year where generative AI shifts from answering single questions to solving complex problems through agented systems," Vahdat predicted. More than 50 partners have signed on to support this protocol, including major enterprise software providers like Salesforce, ServiceNow, and SAP, suggesting a potential industry shift toward interoperable AI systems. For non-technical users, Google is enhancing its Agent Space platform with features like Agent Gallery (providing a single view of available agents) and Agent Designer (a no-code interface for creating custom agents). During a demonstration, Google showed how a banking account manager could use these tools to analyze client portfolios, forecast cash flow issues, and automatically draft communications to clients -- all without writing any code. From document summaries to drive-thru orders: How Google's specialized AI agents are affecting industries Google is also deeply integrating AI across its Workspace productivity suite, with new features like "Help me Analyze" in Sheets, which automatically identifies insights from data without explicit formulas or pivot tables, and Audio Overviews in Docs, which creates human-like audio versions of documents. The company highlighted five categories of specialized agents where it's seeing significant adoption: customer service, creative work, data analysis, coding, and security. In the customer service realm, Google pointed to Wendy's AI drive-through system, which now handles 60,000 orders daily, and The Home Depot's "Magic Apron" agent that offers home improvement guidance. For creative teams, companies like WPP are using Google's AI to conceptualize and produce marketing campaigns at scale. Cloud AI competition intensifies: How Google's comprehensive approach challenges Microsoft and Amazon Google's announcements come amid intensifying competition in the cloud AI space. Microsoft has deeply integrated OpenAI's technology across its Azure platform, while Amazon has been building out its own Anthropic-powered offerings and specialized chips. Thomas Kurian, CEO of Google Cloud, emphasized the company's "commitment to delivering world-class infrastructure, models, platforms, and agents; offering an open, multi-cloud platform that provides flexibility and choice; and building for interoperability." This multi-pronged approach appears designed to differentiate Google from competitors who may have strengths in specific areas but not the full stack from chips to applications. The future of enterprise AI: Why Google's 'thinking models' and interoperability matter for business technology What makes Google's announcements particularly significant is the comprehensive nature of its AI strategy, spanning custom silicon, global networking, model development, agent frameworks, and application integration. The focus on inference optimization rather than just training capabilities reflects a maturing AI market. While training ever-larger models has dominated headlines, the ability to deploy these models efficiently at scale is becoming the more pressing challenge for enterprises. Google's emphasis on interoperability -- allowing systems from different vendors to work together -- may also signal a shift away from the walled garden approaches that have characterized earlier phases of cloud computing. By proposing open protocols like Agent2Agent, Google is positioning itself as the connective tissue in a heterogeneous AI ecosystem rather than demanding all-or-nothing adoption. For enterprise technical decision makers, these announcements present both opportunities and challenges. The efficiency gains promised by specialized infrastructure like Ironwood TPUs and Cloud WAN could significantly reduce the costs of deploying AI at scale. However, navigating the rapidly evolving landscape of models, agents, and tools will require careful strategic planning. As these more sophisticated AI systems continue to develop, the ability to orchestrate multiple specialized AI agents working in concert may become the key differentiator for enterprise AI implementations. In building both the components and the connections between them, Google is betting that the future of AI isn't just about smarter machines, but about machines that can effectively talk to each other.

[10]

Google Cloud unveils Ironwood, its 7th Gen TPU to help boost AI performance and inference

It offers huge advances in power and efficiency, and even outperforms El Capitain supercomputer Google has revealed its most powerful AI training hardware to date as it looks to take another major step forward in inference. Ironwood is the company's 7th-generation Tensor Processing Unit (TPU) - the hardware powering both Google Cloud and its customers AI training and workload handling. The hardware was revealed at the company's Google Cloud Next 25 event in Las Vegas, where it was keen to highlight the great strides forward in efficiency which should also mean workloads can run more cost-effectively. The company says Ironwood marks "a significant shift" in the development of AI, making part of the move from responsive AI models which simply present real-time information for the users to process, towards proactive models which can interpret and infer by themselves. This is essentially the next generation of AI computing, Google Cloud believes, allowing its most demanding customers to set up and establish ever greater workloads. At its top-end Ironwood can scale up to 9,216 chips per pod, for a total of 42.5 exaflops - more than 24x the compute power of El Capitan, the world's current largest supercomputer. Each individual chip offers peak compute of 4,614 TFLOPs, what the company says is a huge leap forward in capacity and capability - even at its slightly less grand configuration of "only" 256 chips. However the scale can get even greater, as Ironwood allows developers to utilize the company's DeepMind-designed Pathways software stack to harness the combined computing power of tens of thousands of Ironwood TPUs. Ironwood also offers a major increase in high bandwidth memory capacity (192GB per chip, up to 6x greater than the previous Trillium sixth-generation TPU) and bandwidth - able to reach 7.2TBps, 4.5x greater than Trillium. "For more than a decade, TPUs have powered Google's most demanding AI training and serving workloads, and have enabled our Cloud customers to do the same," noted Amin Vahdat, VP/GM, ML, Systems & Cloud AI. "Ironwood is our most powerful, capable and energy efficient TPU yet. And it's purpose-built to power thinking, inferential AI models at scale."

[11]

Ironwood: The first Google TPU for the age of inference

Sorry, your browser doesn't support embedded videos, but don't worry, you can download it and watch it with your favorite video player! Today at Next 25, we're introducing Ironwood, our seventh-generation Tensor Processing Unit (TPU) -- our most performant and scalable custom AI accelerator to date, and the first designed specifically for inference. For more than a decade, TPUs have powered Google's most demanding AI training and serving workloads, and have enabled our Cloud customers to do the same. Ironwood is our most powerful, capable and energy efficient TPU yet. And it's purpose-built to power thinking, inferential AI models at scale. Ironwood represents a significant shift in the development of AI and the infrastructure that powers its progress. It's a move from responsive AI models that provide real-time information for people to interpret, to models that provide the proactive generation of insights and interpretation. This is what we call the "age of inference" where AI agents will proactively retrieve and generate data to collaboratively deliver insights and answers, not just data. Ironwood is built to support this next phase of generative AI and its tremendous computational and communication requirements. It scales up to 9,216 liquid cooled chips linked with breakthrough Inter-Chip Interconnect (ICI) networking spanning nearly 10 MW. It is one of several new components of Google Cloud AI Hypercomputer architecture, which optimizes hardware and software together for the most demanding AI workloads. With Ironwood, developers can also leverage Google's own Pathways software stack to reliably and easily harness the combined computing power of tens of thousands of Ironwood TPUs. Here's a closer look at how these innovations work together to take on the most demanding training and serving workloads with unparalleled performance, cost and power efficiency. Ironwood is designed to gracefully manage the complex computation and communication demands of "thinking models," which encompass Large Language Models (LLMs), Mixture of Experts (MoEs) and advanced reasoning tasks. These models require massive parallel processing and efficient memory access. In particular, Ironwood is designed to minimize data movement and latency on chip while carrying out massive tensor manipulations. At the frontier, the computation demands of thinking models extend well beyond the capacity of any single chip. We designed Ironwood TPUs with a low-latency, high bandwidth ICI network to support coordinated, synchronous communication at full TPU pod scale. For Google Cloud customers, Ironwood comes in two sizes based on AI workload demands: a 256 chip configuration and a 9,216 chip configuration.

[12]

Ironwood is Google's Answer to the GPU Crunch

The latest TPUs will be available to Google Cloud customers later this year, the tech giant said. It's no secret anymore that AI is GPU hungry -- and OpenAI's Sam Altman keeps stressing just how urgently they need more. "Working as fast as we can to really get stuff humming; if anyone has GPU capacity in 100k chunks we can get ASAP, please call," he posted on X recently. The demand surged even further when users flooded ChatGPT with Ghibli-style image requests, prompting Altman to ask people to slow down. This is where Google holds a distinct advantage. Unlike OpenAI, it isn't fully dependent on third-party hardware providers. At Google Cloud Next 2025, the company unveiled Ironwood, its seventh-generation tensor processing unit (TPU), designed specifically for inference. It's a key part of Google's broader AI Hypercomputer architecture. "Ironwood is our most powerful, capable and energy-efficient TPU yet. And it's purpose-built to power thinking, inferential AI models at scale," Google said. The tech giant said that today, we live in the "age of inference", where AI agents actively search, interpret, and generate insights instead of just responding with raw data. The company further said that Ironwood is built to manage the complex computation and communication demands of thinking models, such as large language models and mixture-of-experts systems. It added that with Ironwood, customers no longer have to choose between compute scale and performance. Ironwood will be available to Google Cloud customers later this year, the tech giant said.It currently supports advanced models, including Gemini 2.5 Pro and AlphaFold. The company also recently announced that the Deep Research feature in the Gemini app is now powered by Gemini 2.5 Pro. Google stated that over 60% of funded generative AI startups and nearly 90% of generative AI unicorns (startups valued at $1 billion or more) are Google Cloud customers. In 2024, Apple revealed it used 8,192 TPU v4 chips in Google Cloud to train its 'Apple Foundation Model', a large language model powering its AI initiatives. This was one of the first high-profile adoptions outside Google's ecosystem. Ironwood is specifically optimised to reduce data movement and on-chip latency during large-scale tensor operations. As the scale of these models exceeds the capacity of a single chip, Ironwood TPUs are equipped with a low-latency, high-bandwidth Interconnect (ICI) network, enabling tightly coordinated, synchronous communication across the entire TPU pod. The TPU supports two configurations, one with 256 chips and another with 9,216 chips. The full-scale version delivers 42.5 exaflops of compute, over 24 times the performance of the El Capitan supercomputer, which offers 1.7 exaflops per pod. Each Ironwood chip provides 4,614 TFLOPs of peak compute. According to Google, Ironwood is nearly twice as power-efficient as Trillium and almost 30 times more efficient than its first Cloud TPU launched in 2018. Liquid cooling enables consistent performance under sustained load, addressing the energy constraints associated with large-scale AI. It's unfortunate that Google doesn't offer TPUs as a standalone product. "Google should spin out its TPU team into a separate business, retain a big stake, and have it go public. Easy peasy way to make a bazillion dollars," said Erik Bernhardsson, founder of Modal Labs. If Google starts selling TPUs, it will definitely see strong market demand. These chips are capable of training models, too. For instance, Google used Trillium TPUs to train Gemini 2.0, and now, both enterprises and startups can take advantage of the same powerful and efficient infrastructure. Interestingly, TPUs were originally developed for Google's own AI-driven services, including Google Search, Google Translate, Google Photos, and YouTube. A recent report says Google might team up with MediaTek to build its next-gen TPUs. One reason behind this move could be MediaTek's close ties with TSMC, which offers Google lower chip costs than Broadcom. Notably, earlier this year, Google announced an investment of $75 billion in capital expenditures for 2025. In the latest earnings call, Google's CFO Anat Ashkenazi admitted to benefitting from having TPUs when they invest capital in building data centres. "Our strategy is to lean mostly on our own data centers, which means they are more customised to our needs. Our TPUs are customised for our workloads and needs. So, it does allow us to be more efficient and productive with that investment and spend," she said. Google reportedly spent between $6 billion and $9 billion on TPUs in the past year, based on estimates from research firm Omdia. Despite its investment in custom chips, Google remains a major NVIDIA customer. According to a recent report, the search giant is in advanced discussions to lease NVIDIA's Blackwell chips from CoreWeave, a rising player in the cloud computing space. This proves that even top NVIDIA clients like Google are facing difficulty in securing enough chips to satisfy the growing demand from their users. Moreover, integrating GPUs from others like NVIDIA isn't easy either -- cloud providers have to rework their infrastructure. In a recent interaction with AIM, Karan Batta, senior vice president, Oracle Cloud Infrastructure (OCI), said that most centres are not ready for liquid cooling, acknowledging the complexity of managing the heat produced by the new generation of NVIDIA Blackwell GPUs. He added that cloud providers must choose between passive or active cooling, full-loop systems, or sidecar approaches to integrate liquid cooling effectively. Batta further noted that while server racks follow a standard design (and can be copied from NVIDIA's setup), the real complexity lies in data centre design and networking. Not to forget, Oracle is under pressure to finish building a data center in Abilene, Texas -- roughly the size of 17 football fields -- for OpenAI. Right now, the facility is incomplete and sitting empty. If delays continue, OpenAI could walk away from the deal, potentially costing Oracle billions. Much like Google, AWS is building its own chips too. At AWS re:Invent in Las Vegas, the cloud giant announced several new chips, including Trainium2, Graviton4, and Inferentia. Last year, AWS invested $4 billion in Anthropic, becoming its primary cloud provider and training partner. The company also introduced Trn2 UltraServers and its next-generation Trainium3 AI training chip. AWS is now working with Anthropic on Project Rainier -- a large AI compute cluster powered by thousands of Trainium2 chips. This setup will help Anthropic develop its models and optimise its flagship product, Claude, to run efficiently on Trainium2 hardware. Ironwood isn't the only player in the inference space. A number of companies are now competing for NVIDIA's chip market share, including AI chip startups like Groq, Cerebras Systems, and SambaNova Systems. At the same time, OpenAI is progressing in its plan to develop custom AI chips to reduce its reliance on NVIDIA. According to a report, the company is preparing to finalise the design of its first in-house chip in the coming months and intends to send it for fabrication at TSMC.

[13]

Google's cloud-based AI Hypercomputer gets new workhorse with Ironwood TPU - SiliconANGLE

Google's cloud-based AI Hypercomputer gets new workhorse with Ironwood TPU Google Cloud is revving up its AI Hypercomputer infrastructure stack for the next generation of artificial intelligence workloads with its most advanced tensor processing unit chipset. The new Ironwood TPU was announced today at Google Cloud Next 2025, alongside dozens of other hardware and software enhancements designed to accelerate AI training and inference and simplify AI model development. Google's AI Hypercomputer is an integrated, cloud-hosted supercomputer platform that's designed to run the most advanced AI workloads. It's used by the company to power virtually all of its AI services, including Gemini models and its generative AI assistant and search tools. The company claims that its highly integrated approach allows it to squeeze dramatically higher performance out of almost any large language model, especially its Gemini models, which are optimized to run on the AI Hypercomputer infrastructure. The driving force of Google's AI Hypercomputer has always been its TPUs, which are AI accelerators similar to the graphics processing units designed by Nvidia Corp. Launching later this year, Ironwood will be Google's seventh-generation TPU, designed specifically for a new generation of more capable AI models, including "AI agents" that can proactively retrieve and generate data and take actions on behalf of their human users, said Amin Vahdat, Google's vice president and general manager of machine learning, systems and cloud AI. "Only Google Cloud has an AI-optimized platform," he said in a press briefing Monday. It's the most powerful TPU accelerator the company has ever built, and it can scale to a megacluster of 9,216 liquid-cooled chips linked together by its advanced Inter-Chip Interconnect technology. In this way, users can combine the power of many thousands of Ironwood TPUs to tackle the most demanding AI workloads. In a blog post, Vahdat said Ironwood was built specifically to meet the demands of new "thinking models" that use "mixture-of-experts" techniques to perform advanced reasoning. Powering these models requires massive amounts of parallel processing and highly efficient memory access, he explained. Ironwood caters to these needs by minimizing data movement and latency on chip, while performing massive tensor manipulations. "We designed Ironwood TPUs with a low-latency, high bandwidth ICI network to support coordinated, synchronous communication at full TPU pod scale," Vahdat said. Google Cloud customers will be able to choose from two configurations initially, including a 256-chip or 9,216-chip cluster. When Ironwood is scaled to 9,216 chips, it delivers a total performance of 42.5 exaflops, which is more than 24 times the computing power of El Capitan, officially recognized as the world's largest supercomputer, at just 1.7 exaflops per pod. Each individual chip provides a peak compute performance of 4,614 teraflops, and they're supported by a streamlined networking architecture that ensures they always have access to the data they need. Each Ironwood chip sports 192 gigabytes of high-bandwidth memory, representing a six-times gain on Google's previous best TPU, the sixth-generation Trillium, paving the way for much faster processing and data transfers. HBM bandwidth also gets a significant boost at 7.2 terabytes per second, per chip, which is 4.5 times faster than Trillium, ensuring rapid data access for memory-intensive workloads. In addition, Google has introduced an "enhanced" Inter-Chip Interconnect that increases the bidirectional bandwidth to 1.2 Tbps to support 1.5-times faster chip-to-chip communications. Ironwood also has an enhanced "SparseCore," or specialized accelerator that allows it to process the ultra-large embeddings found in advanced ranking and recommendation workloads, making it ideal for real-time financial and scientific applications, Vahdat said. For the most powerful AI applications, customers will be able to leverage Google's Pathways runtime software to dramatically increase the size of their AI compute clusters. With Pathways, customers aren't limited to a single Ironwood pod, but can instead create enormous clusters spanning "hundreds of thousands" of Ironwood TPUs. Vahdat said these improvements enable Ironwood to deliver twice the overall performance per watt compared with the sixth-generation TPU Trillium, and a 30-times gain over its first-generation TPU from 2018. Ironwood is the most significant upgrade in the AI Hypercomputer stack, but it's far from the only thing that's new. In fact, almost every aspect of its infrastructure has been improved, said Mark Lohmeyer, vice president of compute and AI infrastructure at Google Cloud. Besides Ironwood, customers will also be able to access a selection of Nvidia's most advanced AI accelerators, including its B200 and GB200 NVL72 GPUs, which are based on that company's latest-generation Blackwell architecture. Customers will be able to create enormous clusters of those GPUs in various configurations, utilizing Google's new 400G Cloud Interconnect and Cross-Cloud Interconnect networking technologies, which provide up to four times more bandwidth than its predecessor, the 100G Cloud Interconnect and Cross-Cloud Interconnect. Other new hardware improvements include higher-performance block storage, and a new Cloud Storage zonal bucket that makes it possible to colocate TPU and GPU-based clusters to optimize performance. Lohmeyer also discussed a range of software updates that he said will enable developers and engineers to take full advantage of this speedier, more performant AI architecture. For instance, the Pathways runtime gets new features such as disaggregated serving to support more dynamic and elastic scaling of inference and training workloads. The updated Cluster Director for Google Kubernetes Engine tool, formerly known as Hypercompute Cluster, will make it possible for developers to deploy and manage groups of TPU or GPU clusters as a single unit with physically colocated virtual machines to support targeted workload placement and further performance optimizations. Cluster Director for Slurm, meanwhile, is a new tool for simplified provisioning and operating Slurm clusters with blueprints for common AI workloads. Moreover, observability is being improved with newly designed dashboards that provide an overview of cluster utilization, health and performance, plus features such as AI Health Predictor and Straggler Detection for detecting and solving problems at the individual node level. Finally, Lohmeyer talked about some of the expanded inference capabilities in AI Hypercomputer that are designed to support AI reasoning. Available in preview now, they include the new Inference Gateway in Google Kubernetes Engine, which is designed to simplify infrastructure management by automating request scheduling and routing tasks. According to Google, this can help to reduce model serving costs by up to 30%, tail latency by 60%, and increased throughput of 40%. There's also a new GKE Inference Recommendations tool, which simplifies the initial setup in AI Hypercomputer. Users simply choose their underlying AI model and specify the performance level they need, and Gemini Cloud Assist will automatically configure the most suitable infrastructure, accelerators and Kubernetes resources to achieve that. Other capabilities include support for the highly optimized inference engine vLLM on TPUs, plus an updated Dynamic Workload Scheduler that helps customers get affordable access to TPUs and other AI accelerators on an on-demand basis. The Dynamic Workload Scheduler now supports additional virtual machines including TPU v5e, which is powered by Google's Trillium TPUs, A3 Ultra, powered by the Nvidia H200 GPUs, and A4, powered by Nvidia's B200 GPUs.

[14]

Google Unveils Ironwood, Its Most Powerful Chipset for AI Workflows

Ironwood is designed to handle LLMs, MoEs, and reasoning AI models Google introduced the seventh generation of its Tensor Processing Unit (TPU), Ironwood, last week. Unveiled at Google Cloud Next 25, it is said to be the company's most powerful and scalable custom artificial intelligence (AI) accelerator. The Mountain View-based tech giant said the chipset was specifically designed for AI inference -- the compute used by an AI model to process a query and generate a response. The company will soon make its Ironwood TPUs available to developers via the Google Cloud platform. In a blog post, the tech giant introduced its seventh-generation AI accelerator chipset. Google stated that Ironwood TPUs will enable the company to move from a response-based AI system to a proactive AI system, which is focused on dense large language models (LLMs), mixture-of-expert (MoE) models, and agentic AI systems that "retrieve and generate data to collaboratively deliver insights and answers." Notably, TPUs are custom-built chipsets aimed at AI and machine learning (ML) workflows. These accelerators offer extremely high parallel processing, especially for deep learning-related tasks, as well as significantly high power efficiency. Google said each Ironwood chip comes with peak compute of 4,614 teraflop (TFLOP), which is a considerably higher throughput compared to its predecessor Trillium, which was unveiled in May 2024. The tech giant also plans to make these chipsets available as clusters to maximise the processing power for higher-end AI workflows. Ironwood can be scaled up to a cluster of 9,216 liquid-cooled chips linked with an Inter-Chip Interconnect (ICI) network. The chipset is also one of the new components of Google Cloud AI Hypercomputer architecture. Developers on Google Cloud can access Ironwood in two sizes -- a 256 chip configuration and a 9,216 chip configuration. At its most expansive cluster, Ironwood chipsets can generate up to 42.5 Exaflops of computing power. Google claimed that its throughput is more than 24X of the compute generated by the world's largest supercomputer El Capitan, which offers 1.7 Exaflops per pod. Ironwood TPUs also come with expanded memory, with each chipset offering 192GB, sextuple of what Trillium was equipped with. The memory bandwidth has also been increased to 7.2Tbps. Notably, Ironwood is currently not available to Google Cloud developers. Just like the previous chipset, the tech giant will likely first transition its internal systems to the new TPUs, including the company's Gemini models, before expanding its access to developers.

[15]

Google launches new Ironwood chip to speed AI applications

Google's tensor processing units (TPUs) can only be used by the company's own engineers or through its cloud service and have given its internal AI effort an edge over some rivals. For at least one generation Google split its TPU family of chips into a version that's tuned for building large AI models from scratch. Alphabet's on Wednesday unveiled its seventh-generation artificial intelligence chip named Ironwood, which the company said is designed to speed the performance of AI applications. The Ironwood processor is geared toward the type of data crunching needed when users query software such as OpenAI's ChatGPT. Known in the tech industry as "inference" computing, the chips perform rapid calculations to render answers in a chatbot or generate other types of responses. The search giant's multi-billion dollar, roughly decade-long effort represents one of the few viable alternative chips to Nvidia's powerful AI processors. Google's tensor processing units (TPUs) can only be used by the company's own engineers or through its cloud service and have given its internal AI effort an edge over some rivals. For at least one generation Google split its TPU family of chips into a version that's tuned for building large AI models from scratch. Its engineers have made a second line of chips that strips out some of the model building features in favor of a chip that shaves costs of running AI applications. The Ironwood chip is a model designed for running AI applications, or inference, and is designed to work in groups of as many as 9,216 chips, said Amind Vahdat, a Google vice president. The new chip, unveiled at a cloud conference, brings functions from earlier split designs together and increases the available memory, which makes it better suited for serving AI applications. "It's just that the relative importance of inference is going up significantly," Vahdat said. The Ironwood chips boast double the performance for the amount of energy needed compared with Google's Trillium chip it announced last year, Vahdat said. The company builds and deploys its Gemini AI models with its own chips. The company did not disclose which chip manufacturer is producing the Google design.

[16]

Google Unveils Its "7th-Generation" Ironwood AI Accelerator; Chip Cluster Is Said To Be 24 Times Faster Than World's Most Powerful Supercomputer

Google has unveiled its "7th-generation" custom AI accelerator, the Ironwood, which is the first in-house chip to be specifically designed for inference workloads. Announced at Google Cloud Next 25, Ironwood is the firm's most powerful and efficient accelerator to date. It comes with several improvements in generational capabilities, which make it an ideal candidate for inference workloads, an area that Google believes to be the next "phase of AI." The accelerator is set to be offered to Google Cloud customers, reportedly in two different configurations: a 256-chip configuration and a 9,216-chip configuration, which are to be chosen to depend upon the workload and inference power needed. The next part is what makes Google's Ironwood a revolution for modern-day AI markets. It is claimed that under the 9,216-chip configuration, the firm achieves 24 times the computing power of the world's largest supercomputer, El Capitan, achieving 42.5 Exaflops. Apart from this, Ironwood is said to run 2x higher in perf/watt relative to the previous-gen Trillium TPU, which shows that the scaling of performance with each generation has been tremendous. Here are some other interesting facts about Ironwood: Ironwood and Google's achievements show how far custom in-house AI solutions have grown, and it is safe to say that this does challenge NVIDIA's monopoly over the markets, which Jensen already knows. Such performance figures clearly indicate that there's always room to grow, and with solutions popping up from the likes of Microsoft with Maia 100, and Amazon with Graviton chips, it is clear that firms have recognized the opportunities presented by in-house solutions.

[17]

Top Tech News: Google, Nuro Raises, RRB NTPC 2025 Exam and More

Google has unveiled Ironwood, its seventh-generation TPU AI accelerator chip, at the Cloud Next conference. Designed for running AI models, it will be available to Google Cloud customers later this year. Ironwood comes in 256-chip and 9,216-chip clusters, delivering up to 4,614 TFLOPs, 192GB RAM per chip, and 7.4 Tbps bandwidth. It features an enhanced SparseCore for improved efficiency in tasks like recommendations. Integrated with Google's AI Hypercomputer, Ironwood is part of Google's broader cloud strategy. While no specific launch date has been provided, Google has confirmed its release later this year.

[18]

Top Tech News: Google Unveils, AI Chip Startup, ISRO and More

Alphabet unveiled its seventh-generation AI chip, Ironwood, designed to enhance AI application performance. The chip specializes in inference computing, enabling faster responses in chatbots like ChatGPT. Unlike Nvidia's AI chips, Google's tensor processing units (TPUs) are used internally or via Google Cloud. Ironwood, optimized for AI applications, can function in clusters of up to 9,216 chips and offers double the energy efficiency of its predecessor, Trillium. Unveiled at a cloud conference, it integrates earlier designs with increased memory. Alphabet's stock surged 9.7% after a tariff reversal announcement by President Donald Trump.

[19]

Google launches new Ironwood chip to speed AI applications

SAN FRANCISCO (Reuters) - Alphabet's on Wednesday unveiled its seventh-generation artificial intelligence chip named Ironwood, which the company said is designed to speed the performance of AI applications. The Ironwood processor is geared toward the type of data crunching needed when users query software such as OpenAI's ChatGPT. Known in the tech industry as "inference" computing, the chips perform rapid calculations to render answers in a chatbot or generate other types of responses. The search giant's multi-billion dollar, roughly decade-long effort represents one of the few viable alternative chips to Nvidia's powerful AI processors. Google's tensor processing units (TPUs) can only be used by the company's own engineers or through its cloud service and have given its internal AI effort an edge over some rivals. For at least one generation Google split its TPU family of chips into a version that's tuned for building large AI models from scratch. Its engineers have made a second line of chips that strips out some of the model building features in favor of a chip that shaves costs of running AI applications. The Ironwood chip is a model designed for running AI applications, or inference, and is designed to work in groups of as many as 9,216 chips, said Amind Vahdat, a Google vice president. The new chip, unveiled at a cloud conference, brings functions from earlier split designs together and increases the available memory, which makes it better suited for serving AI applications. "It's just that the relative importance of inference is going up significantly," Vahdat said. The Ironwood chips boast double the performance for the amount of energy needed compared with Google's Trillium chip it announced last year, Vahdat said. The company builds and deploys its Gemini AI models with its own chips. The company did not disclose which chip manufacturer is producing the Google design. (Reporting by Max A. Cherney in San Francisco; Editing by Sonali Paul)

Share

Share

Copy Link

Google has introduced its seventh-generation AI chip, Ironwood, designed to enhance AI inference capabilities and reduce costs. This marks a shift in Google's AI hardware strategy, focusing on the growing demands of inference computing.

Google Introduces Ironwood: A New Era in AI Inference

Google has unveiled its latest AI chip, Ironwood, marking a significant shift in the company's approach to artificial intelligence hardware. This seventh-generation Tensor Processing Unit (TPU) is specifically designed to address the growing demands of AI inference, signaling a new focus on the operational aspects of AI deployment

1

.Technical Specifications and Capabilities

Ironwood boasts impressive technical specifications that set it apart from its predecessors:

- 4,614 TFLOPs of peak computing power

- 192GB of dedicated RAM with bandwidth approaching 7.4 Tbps

- Enhanced SparseCore for processing data in advanced ranking and recommendation workloads

- Designed to operate in clusters of up to 9,216 liquid-cooled chips

- Twice the performance per watt compared to the previous generation, Trillium

2

Shift Towards Inference Computing

Google's focus on inference with Ironwood represents a strategic pivot in the AI hardware landscape:

- Inference is the process of running AI models to generate predictions or responses for user queries

- The rise of "reasoning" AI models, like Google's Gemini, has increased the computational demands for inference

- This shift reflects the growing importance of practical AI applications over research-oriented tasks

3

Economic Implications

The introduction of Ironwood has significant economic implications for Google and the AI industry:

- Potential cost savings for Google by reducing reliance on third-party chips from Intel, AMD, and Nvidia

- Addressing the rising costs associated with AI infrastructure, particularly for inference tasks

- Positioning Google to compete more effectively in the high-volume inference chip market

4

Related Stories

Availability and Deployment

Google plans to make Ironwood available to developers and businesses through its cloud services:

- Two configurations: a 256-chip server and a full-size 9,216-chip cluster

- Integration with Google's AI Hypercomputer, a modular computing cluster in Google Cloud

- Scheduled for launch later this year for Google Cloud customers

5

Industry Impact and Competition

Ironwood's release comes amid intensifying competition in the AI accelerator space:

- Nvidia currently leads the market, but tech giants like Amazon and Microsoft are developing their own solutions

- Google's TPUs represent one of the few viable alternatives to Nvidia's AI processors

- The focus on inference could reshape the AI hardware landscape, potentially influencing other companies' strategies

As AI continues to evolve, Google's Ironwood chip represents a significant step towards more efficient and cost-effective AI operations, particularly in the realm of inference computing. This development could have far-reaching implications for the future of AI applications and the broader technology industry.

References

Summarized by

Navi

[1]

Related Stories

Google Unleashes Ironwood TPU v7: Seventh-Generation AI Chips Challenge Nvidia's Dominance

06 Nov 2025•Technology

Google's Trillium AI Chip: A Game-Changer for AI and Cloud Computing

12 Dec 2024•Technology

Intel Challenges AI Cloud Market with Gaudi 3-Powered Tiber AI Cloud and Inflection AI Partnership

08 Oct 2024•Technology

Recent Highlights

1