Google Unveils SynthID Detector: A New Tool to Identify AI-Generated Content

5 Sources


[1]

Google made an AI content detector - join the waitlist to try it

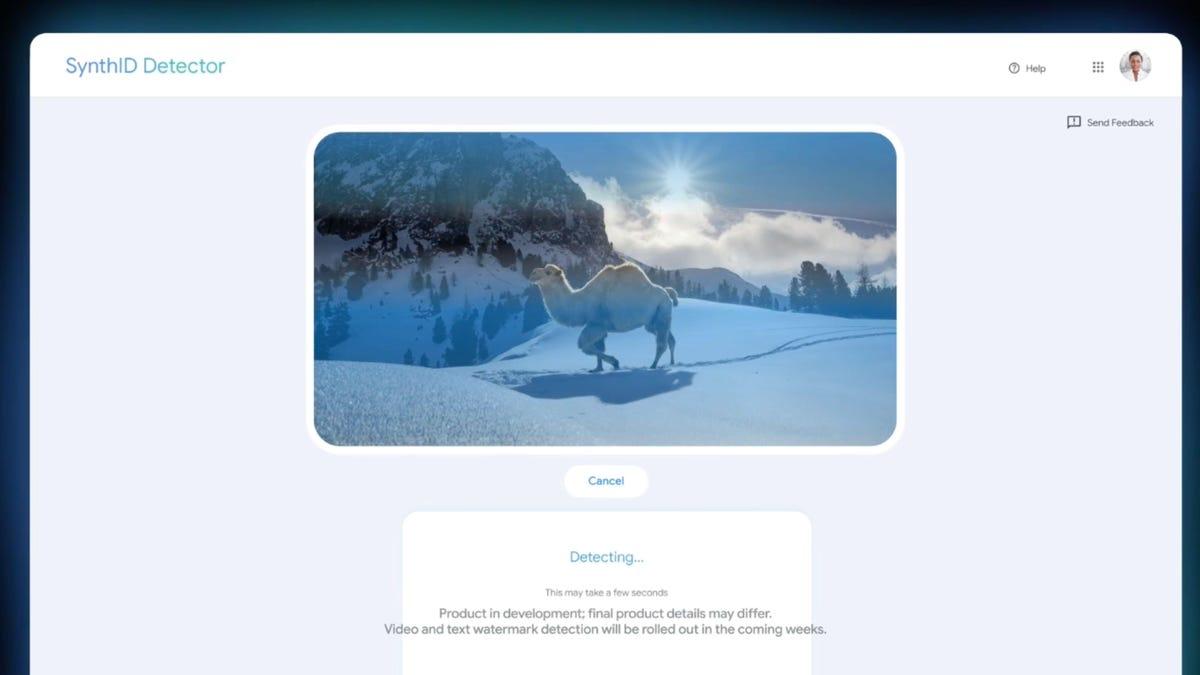

Fierce competition among some of the world's biggest tech companies has led to a profusion of AI tools that can generate humanlike prose and uncannily realistic images, audio, and video. While those companies promise productivity gains and an AI-powered creativity revolution, fears have also started to swirl around the possibility of an internet that's so thoroughly muddled by AI-generated content and misinformation that it's impossible to tell the real from the fake. Many leading AI developers have, in response, ramped up their efforts to promote AI transparency and detectability.

Most recently, Google announced the launch of its SynthID Detector, a platform that can quickly spot AI-generated content created by one of the company's generative models: Gemini, Imagen, Lyria, and Veo. Originally released in 2023, SynthID is a technology that embeds invisible watermarks -- a kind of digital fingerprint that can be detected by machines but not by the human eye -- into AI-generated images. SynthID watermarks are designed to remain in place even when images have been cropped, filtered, or otherwise modified.

Since then, AI models have grown increasingly multimodal, or able to interact with multiple forms of content. Gemini, for example -- Google's chatbot that was launched in response to the viral success of ChatGPT -- can respond to a text prompt by generating an image, or to an uploaded image with a text response. Developments in AI-generated audio and video, meanwhile, have also been moving along quickly. Google has therefore expanded SynthID so that it can watermark not only AI-generated images, but also text, audio, and video.

SynthID Detector is an online portal that makes it easy to detect a SynthID watermark in media generated by one of Google's generative AI tools. Users simply upload a file, and Google's detection system scans it for a watermark. It reports back that a watermark was detected, not detected, or that the results are inconclusive. Images generated by Gemini automatically come with embedded watermarks. SynthID Detector is designed to add another layer of transparency, however, by making it easier to verify the presence of those invisible marks.

Google announced Tuesday that it's rolling out SynthID Detector initially to a group of early testers before a broader public launch. It's also offering access to a waitlist for journalists, media professionals, and AI researchers.

"To continue to inform and empower people engaging with AI-generated content, we believe it's vital to continue collaborating with the AI community and broaden access to transparency tools," Pushmeet Kohli, vice president of Science and Strategic Initiatives at Google DeepMind, wrote in a company blog post published Tuesday. The company also announced on Tuesday a new partnership with GetReal Security, a leading cybersecurity firm specializing in detecting digital misinformation.

Facing pressure from regulators in Europe and the US who are calling for greater accountability in the AI sector, as well as a growing chorus of public voices warning of the dangers of AI deepfakes, big tech companies are no longer only racing to build the most powerful models -- they're also competing to make it easier to identify AI-generated media. Tools like Google's SynthID Detector, in other words, are part of an ongoing push among AI developers to bolster their reputations as leaders in safety and transparency.

While Europe's General Data Protection Regulation (GDPR) and AI Act require AI companies to have some transparency mechanisms in place, no comprehensive federal regulation of the industry currently exists in the US. Companies operating here have therefore largely had to take it on themselves to introduce AI detectability measures. For those building generative models, like Google, those measures have often come in the form of watermark technologies. Social media platforms like TikTok and Instagram, meanwhile, have started to require labels and other disclosures for AI-generated content shared on their platforms.

[2]

Google Made an AI Detector, but You Can't Use It

Summary

- AI detectors are currently unreliable due to false positives and negatives.

- Google's SynthID Detector scans for digital watermarks in AI-generated content.

- SynthID Detector might not be a 100% reliable silver bullet, but it's probably as reliable as a detector can get for AI.

AI detectors, while a potentially useful feature, are currently a mess. Tools like ZeroGPT produce a lot of false positives and false negatives. Still, the need for an actually reliable AI detector is real, and that's why efforts continue to be made in that direction. Google has its own, though you can't use it yet.

Google has just announced SynthID Detector, a new verification portal designed to identify content created using its artificial intelligence tools, such as Gemini, the Imagen image generation model, or the Veo video generation model. The SynthID Detector works by scanning uploaded media for an imperceptible digital watermark, also named SynthID. Google has been developing this watermarking technology to embed directly into content generated by its AI models, including Gemini (text and multimodal), Imagen (images), Lyria (audio), and Veo (video). According to the company, over 10 billion pieces of content have already been watermarked using this system.

This is, then, a Google-made tool that looks for that watermark and tells you whether something is AI-generated or not. When you upload a file -- be it an image, audio track, video, or text document -- to the SynthID Detector portal, it checks whether this embedded watermark is present. If it is, the portal will indicate that the content is likely AI-generated and, in some cases, highlight specific portions where the watermark is most prominently detected. For example, in audio files, it can point out segments containing the watermark, and for images, it can indicate areas where the digital signature is most likely present.

What I don't love about this is that it still seems to involve a lot of guesswork. The detector can be "unsure" about certain parts, which is not a good omen for a supposedly reliable watermarking method that can withstand alterations and modifications. Just like it can be unsure about some bits, it could detect a watermark where there isn't one, or it could fail to detect something AI-generated. I'd say it would be more prone to false positives than false negatives, but false positives can still be a problem. I'm sure it will continue to be improved upon, though.

A first-party tool like this might be the most reliable way right now to find out if something was AI-generated, but I wouldn't say there's a 100% reliable, bulletproof method to catch them all yet. This detector is currently rolling out to a few people in early access, and it will be followed by a limited rollout for journalists, media professionals, and researchers via a waitlist. Source: Google

[3]

Google Launches SynthID Detector to Catch Cheaters in the Act - Decrypt

It also helps identify AI-made text and video as concerns over cheating grow.

With deepfakes, misinformation, and AI-assisted cheating spreading online and in classrooms, Google DeepMind unveiled SynthID Detector on Tuesday. This new tool scans images, audio, video, and text for invisible watermarks embedded by Google's growing suite of AI models. Designed to work across multiple formats in one place, SynthID Detector aims to bring greater transparency by identifying AI-generated content created by Google's AI, including the audio AIs NotebookLM, Lyria, and image generator Imagen, and highlighting the portions most likely to be watermarked.

"For text, SynthID looks at which words are going to be generated next, and changes the probability for suitable word choices that wouldn't affect the overall text quality and utility," Google said in a demo presentation. "If a passage contains more instances of preferred word choices, SynthID will detect that it's watermarked," it added. SynthID adjusts the probability scores of word choices during text generation, embedding an invisible watermark that doesn't affect the meaning or readability of the output. This watermark can later be used to identify content produced by Google's Gemini app or web tools.

Google first introduced SynthID watermarking in August 2023 as a tool to detect AI-generated images. With the launch of SynthID Detector, Google expanded this functionality to include audio, video, and text. Currently, SynthID Detector is available in limited release and has a waitlist for journalists, educators, designers, and researchers to try out the program.

As generative AI tools become more widespread, educators are finding it increasingly difficult to determine whether a student's work is original, even in assignments meant to reflect personal experiences. A technology ethics professor at Santa Clara University assigned a personal reflection essay, only to find that one student had used ChatGPT to complete it.
At the University of Arkansas at Little Rock, another professor discovered students relying on AI to write their course introduction essays and class goals.

Despite an increase in students using its AI model to cheat in class, OpenAI shut down its AI detection software in 2023, citing a low rate of accuracy. "We recognize that identifying AI-written text has been an important point of discussion among educators, and equally important is recognizing the limits and impacts of AI-generated text classifiers in the classroom," OpenAI said at the time.

Compounding the issue of AI cheating are new tools like Cluely, an application designed to bypass AI detection software. Developed by former Columbia University student Roy Lee, Cluely circumvents AI detection at the desktop level. Lee raised $5.3 million to build out the application, which is promoted as a way to cheat on exams and interviews. "It blew up after I posted a video of myself using it during an Amazon interview," Lee previously told Decrypt. "While using it, I realized the user experience was really interesting -- no one had explored this idea of a translucent screen overlay that sees your screen, hears your audio, and acts like a player two for your computer."

Despite the promise of tools like SynthID, many current AI detection methods remain unreliable. In October, a test by Decrypt found that only two of four leading AI detectors (Grammarly, Quillbot, GPTZero, and ZeroGPT) could correctly determine whether humans or AI wrote the U.S. Declaration of Independence.

[4]

Google Unveils SynthID Detector Verification Portal to Combat Deepfakes

- SynthID Detector is currently rolling out to early testers

- Google has open-sourced SynthID text watermarking

- The company first unveiled SynthID technology in 2023

The Google I/O 2025 keynote session on Tuesday focused on new artificial intelligence (AI) updates and features. Alongside these, the Mountain View-based tech giant also introduced a new tool to bring more transparency to AI-generated content. Dubbed SynthID Detector, it is a verification portal, currently in testing, that can detect and identify multimodal AI content made using the company's AI models. The technology can identify audio, video, image, and text, allowing individuals to easily assess if a piece of content is human-made or synthetic.

The company first unveiled SynthID in 2023 as a technology that can add an imperceptible watermark into content that cannot be removed or tampered with. In 2024, the company open-sourced the text watermarking technology to businesses and developers. The invisible watermark shows up when analysed using special software.

Google is now testing a verification portal dubbed SynthID Detector that will allow individuals to quickly check whether a piece of media was generated using AI. In a blog post, the tech giant said the portal provides transparency "in a rapidly evolving landscape of generative media."

With Veo 3 and Imagen 4 AI models that can generate hyperrealistic images and videos, the risk of deepfakes has also increased significantly. While measures such as the Coalition for Content Provenance and Authenticity (C2PA) standard have offered a way for AI companies to highlight AI-generated content, they are not completely tamper-proof. Advanced watermarking technologies enable users and institutions to protect themselves from misinformation and synthetic abusive content.

The portal is straightforward to use, Google explains.
Users can upload media they suspect to have been generated using Google's AI tool and SynthID Detector then scans the uploaded media and detects any SynthID watermark. Afterwards, it shares the results, and if a watermark is detected, it highlights which part of the content is likely to be AI-generated. Notably, the tool does not work with non-Google AI products. One of the biggest advantages of SynthID is that the imperceptible watermark does not compromise the quality of the media, and at the same time, it is not possible to remove or alter it. Currently, Google is rolling out the portal to early testers, and it plans to make it available more broadly later this year. Journalists, media professionals and researchers can join the waitlist to gain early access to the SynthID Detector.

[5]

Google unveils SynthID Detector to identify AI-generated content

At Google I/O 2025, Google introduced SynthID Detector, a new tool designed to identify AI-generated content quickly and clearly. This portal aims to help verify digital media created using Google's AI systems, addressing growing concerns about authenticity and trust in AI-created content. Pushmeet Kohli, VP of Science and Strategic Initiatives at Google DeepMind, explained that advances in generative AI have enabled the creation of new types of content. This development brings key questions about authenticity and verification, which Google aims to address with the SynthID Detector -- a tool designed to rapidly identify AI-generated content created with Google's AI systems. SynthID Detector combines detection for various media types in one portal. It can identify parts of an image, audio, video, or text that likely contain a hidden SynthID watermark. In audio files, the portal detects and highlights specific segments where watermarks are found. For images and videos, it shows the regions most likely to contain embedded watermarks. Google has open-sourced the SynthID watermarking for text, allowing developers to integrate this technology into their own AI models. In early 2025, Google partnered with NVIDIA to add SynthID watermarks to videos made with NVIDIA's Cosmos preview microservice, extending SynthID beyond Google's own content, and also teamed up with content verification platform GetReal Security to support wider detection of SynthID watermarked material online. Pushmeet Kohli noted that SynthID aims to reduce misinformation and misattribution by providing reliable technical solutions. He emphasized the importance of transparency in AI-generated media and highlighted ongoing efforts to build partnerships and expand access to detection tools. The SynthID Detector began rolling out to early testers on Tuesday, May 20, 2025.


Google has launched SynthID Detector, a verification portal designed to identify AI-generated content across multiple media formats, addressing concerns about misinformation and deepfakes in the digital age.

Google Introduces SynthID Detector to Combat AI-Generated Content

In a significant move to address the growing concerns surrounding AI-generated content, Google has unveiled SynthID Detector, a new verification portal designed to identify media created by its artificial intelligence tools [1]. This development comes as tech giants race to establish themselves as leaders in AI safety and transparency.

How SynthID Detector Works

SynthID Detector is built upon Google's SynthID technology, which embeds invisible watermarks into AI-generated content [2]. The portal can scan for these watermarks in various media types:

- Images: Detects watermarks in pictures created by tools like Imagen.

- Audio: Identifies marked segments in audio files.

- Video: Highlights regions likely to contain embedded watermarks.

- Text: Analyzes word choice probabilities to detect watermarked content.
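
The text case in the list above can be illustrated with a toy model. The sketch below is not Google's SynthID algorithm; it swaps in a simplified keyed "preferred word" scheme in which a hash secretly marks roughly half the vocabulary as preferred after each word, a generator nudges sampling toward those words, and a detector counts how often they occur. The vocabulary, key, bias, and thresholds are all hypothetical, and the three verdicts only mirror the detected / not detected / inconclusive outcomes the portal is reported to return.

```python
import hashlib
import math
import random

VOCAB = [f"w{i}" for i in range(50)]  # hypothetical toy vocabulary
KEY = "demo-key"                      # hypothetical secret watermark key


def is_preferred(prev_word: str, word: str) -> bool:
    """Keyed pseudo-random split: about half of all words are 'preferred' after prev_word."""
    digest = hashlib.sha256(f"{KEY}|{prev_word}|{word}".encode()).digest()
    return digest[0] % 2 == 0


def generate(n_words: int, bias: float, rng: random.Random) -> list[str]:
    """Sample words with extra weight `bias` on preferred words (bias 0 means no watermark)."""
    words = ["<start>"]
    for _ in range(n_words):
        weights = [1.0 + bias if is_preferred(words[-1], w) else 1.0 for w in VOCAB]
        words.append(rng.choices(VOCAB, weights=weights, k=1)[0])
    return words[1:]


def z_score(words: list[str]) -> float:
    """How far the preferred-word count sits above the roughly 50% expected by chance."""
    n = len(words)
    if n == 0:
        return 0.0
    prev = ["<start>"] + words[:-1]
    hits = sum(is_preferred(p, w) for p, w in zip(prev, words))
    return (hits - 0.5 * n) / math.sqrt(0.25 * n)


def verdict(z: float, low: float = 2.0, high: float = 4.0) -> str:
    """Map the score to three outcomes; both thresholds are illustrative assumptions."""
    if z >= high:
        return "detected"
    if z < low:
        return "not detected"
    return "inconclusive"


if __name__ == "__main__":
    rng = random.Random(0)
    for label, bias in (("watermarked", 4.0), ("unwatermarked", 0.0)):
        z = z_score(generate(400, bias, rng))
        print(label, round(z, 2), verdict(z))
```

Under these assumptions the score grows with passage length, so a short or heavily edited text is more likely to fall into the inconclusive band than a long, untouched one.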

Users can simply upload a file to the portal, which then scans for the presence of a SynthID watermark and reports whether it was detected, not detected, or if the results are inconclusive [1].

Expanding Scope and Partnerships

Google has expanded SynthID's capabilities beyond its initial focus on images. The technology now works across multiple AI models, including Gemini, Imagen, Lyria, and Veo [3]. Additionally, Google has:

- Open-sourced SynthID text watermarking for developers [4]

- Partnered with NVIDIA to add SynthID watermarks to videos made with their Cosmos preview microservice [5]

- Collaborated with GetReal Security, a cybersecurity firm specializing in detecting digital misinformation [1]

Addressing Concerns and Limitations


While SynthID Detector represents a significant step forward in AI content detection, some limitations and concerns remain:

- The tool only works with Google's AI products, not those from other companies [4]

- There's potential for false positives and negatives, as the detector can be "unsure" about certain parts of content [2]

- That uncertainty raises questions about how well the watermark withstands alterations and modifications [2]

Implications and Future Rollout

The introduction of SynthID Detector comes at a crucial time when concerns about deepfakes, misinformation, and AI-assisted cheating are on the rise [3]. It's particularly relevant in educational settings, where distinguishing between AI-generated and human-created content has become increasingly challenging.

Currently, SynthID Detector is being rolled out to early testers, with plans for a limited release to journalists, media professionals, and researchers via a waitlist [1]. Google aims to broaden access to these transparency tools, emphasizing the importance of collaboration within the AI community to empower users engaging with AI-generated content [5].

Summarized by Navi
