Google opens Gemini Deep Research AI agent to developers with new Interactions API

10 Sources


[1]

Google launched its deepest AI research agent yet -- on the same day OpenAI dropped GPT-5.2 | TechCrunch

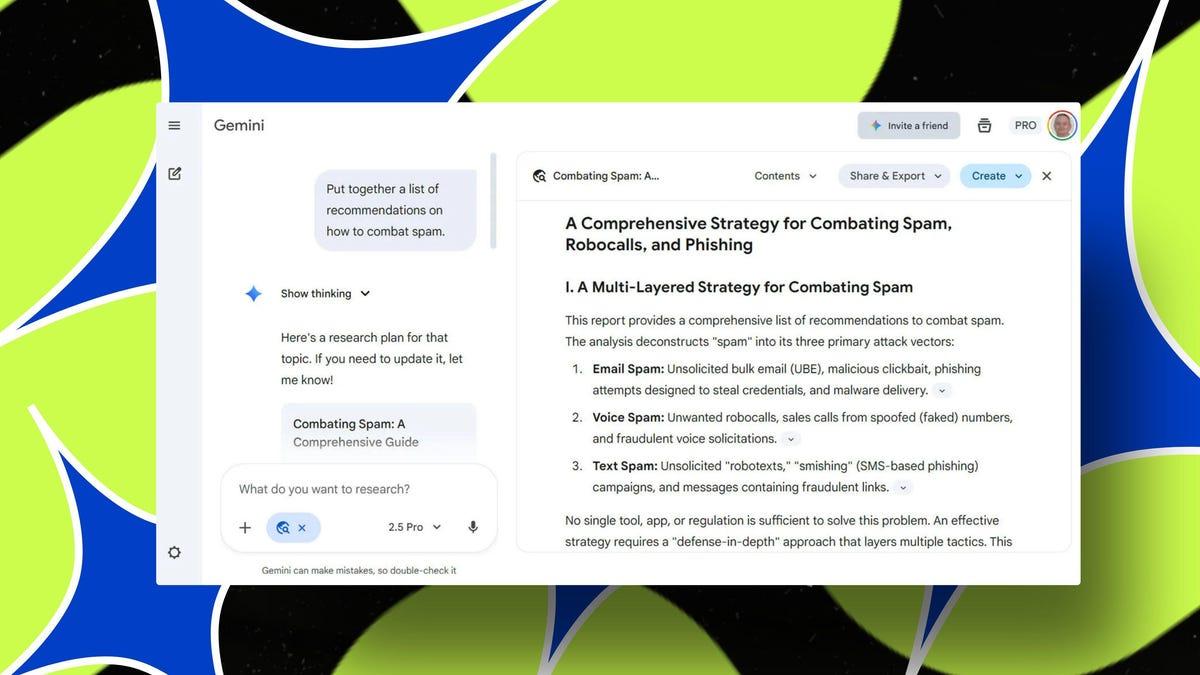

On Thursday, Google released a "reimagined" version of its research agent, Gemini Deep Research, built on its much-ballyhooed state-of-the-art foundation model, Gemini 3 Pro. This new agent isn't just designed to produce research reports, although it can still do that. It now allows developers to embed Google's state-of-the-art (SOTA) research capabilities into their own apps. That capability is made possible through Google's new Interactions API, which is designed to give devs more control in the coming agentic AI era. The new Gemini Deep Research tool is an agent equipped to synthesize mountains of information and handle a large context dump in the prompt. Google says customers use it for tasks ranging from due diligence to drug toxicity safety research. Google also says it will soon integrate the new deep research agent into services including Google Search, Google Finance, its Gemini App and its popular NotebookLM. This is another step toward preparing for a world where humans don't Google anything anymore; their AI agents do. The tech giant says that Deep Research benefits from Gemini 3 Pro's status as its "most factual" model, trained to minimize hallucinations during complex tasks. AI hallucinations (where the LLM just makes stuff up) are an especially crucial issue for long-running, deep reasoning agentic tasks, in which many autonomous decisions are made over minutes, hours, or longer. The more choices an LLM has to make, the greater the chance that even one hallucinated choice will invalidate the entire output. To back up its progress claims, Google has also created yet another benchmark (as if the AI world needs another one). The new benchmark, unimaginatively named DeepSearchQA, is intended to test agents on complex, multi-step information-seeking tasks. Google has open sourced this benchmark.
It also tested Deep Research on Humanity's Last Exam, a much more interestingly named, independent benchmark of general knowledge filled with impossibly niche tasks, and on BrowseComp, a benchmark for browser-based agentic tasks. As you might expect, Google's new agent bested the competition on its own benchmark and on Humanity's Last Exam. However, OpenAI's GPT-5 Pro was a surprisingly close second across the board and slightly bested Google on BrowseComp. But those benchmark comparisons were obsolete almost the moment Google published them, because on the same day OpenAI launched its highly anticipated GPT-5.2, codenamed Garlic. OpenAI says its newest model bests its rivals, especially Google, on a suite of the usual benchmarks, including OpenAI's homegrown one. Perhaps one of the most interesting parts of this announcement was the timing: knowing that the world was awaiting the release of Garlic, Google dropped some AI news of its own.

[2]

Upgraded Deep Research coming to Gemini app, agent now available for devs

Google today announced a "significantly more powerful Gemini Deep Research agent" that will soon be available in consumer apps and is available now for developers. Today's announcement is focused on developers. Google's new Interactions API serves as its "unified interface for interacting" with models (like Gemini 3 Pro) and agents, and it reflects the latest model capabilities, like "thinking" and advanced tool use, that go beyond text generation. Google says it "will expand built-in agents and introduce the ability to build and bring your own agents," which will let developers connect Gemini models, Google's built-in agents, and their own custom agents through one API. The first built-in agent is Gemini Deep Research (Preview), which lets third-party developers add "advanced autonomous research capabilities" to their applications. "Optimized for long-running context gathering and synthesis tasks," the Gemini Deep Research agent uses Gemini 3 Pro. Google touts how it is "specifically trained to reduce hallucinations and maximize report quality during complex tasks." In response to your prompt, it "formulates queries, reads results, identifies knowledge gaps, and searches again." There's also "vastly improved web search, allowing it to navigate deep into sites for specific data." According to Google, by scaling multi-step reinforcement learning for search, the agent autonomously navigates complex information landscapes with high accuracy. On benchmarks, Google reports state-of-the-art results on Humanity's Last Exam (reasoning and knowledge) and DeepSearchQA (comprehensive web research), plus its best result yet on BrowseComp (locating hard-to-find facts), all surpassing Gemini 3 Pro.
Gemini Deep Research achieves 46.4% (versus Gemini 3 Pro's 43.2%) on the full HLE set, 66.1% (versus 56.6%) on DeepSearchQA, and a high 59.2% (versus 49.4%) on BrowseComp. All these improvements, which developers can start previewing today in Google AI Studio, will "soon" be available in Google's consumer apps, including Gemini, Google Search, and NotebookLM.
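To put the figures above side by side, this short Python sketch computes the gain in percentage points over Gemini 3 Pro on each benchmark. It uses only the scores quoted in this article; the `SCORES` layout and the `point_gains` helper are illustrative choices, not anything Google publishes.

```python
# Benchmark scores (percent) quoted above: (Gemini Deep Research, Gemini 3 Pro).
SCORES: dict[str, tuple[float, float]] = {
    "HLE (full set)": (46.4, 43.2),
    "DeepSearchQA": (66.1, 56.6),
    "BrowseComp": (59.2, 49.4),
}


def point_gains(scores: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Absolute improvement in percentage points, rounded to one decimal place."""
    return {name: round(agent - base, 1) for name, (agent, base) in scores.items()}


if __name__ == "__main__":
    for name, gain in point_gains(SCORES).items():
        print(f"{name}: +{gain:.1f} points")
```

Measured this way, the largest jumps are on DeepSearchQA and BrowseComp (9.5 and 9.8 points), while the HLE gain is a more modest 3.2 points.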

[3]

Google's best Gemini AI feature could soon appear in your everyday apps

Deep Research goes beyond Google apps with the new Interactions API

Google just upgraded Gemini Deep Research, its most advanced AI research agent, and this time the upgrade is not limited to Google's own products. Google says its AI capabilities can soon appear inside the everyday apps you already use. With the launch of the new Interactions API, Deep Research is no longer a Google-exclusive feature. It can now be built directly into third-party apps, opening the door for a wave of tools on your phone to quietly gain far more powerful AI. Gemini Deep Research is built for long, complex tasks that many chatbots tend to struggle with. Instead of answering a single question, it works like a real researcher. It plans what information it needs, searches the web, reads through results, identifies gaps, and then continues searching until it builds a complete, well-sourced answer. It uses Gemini 3 Pro as its reasoning engine, which Google says is its most factual model yet, trained to reduce hallucinations during long, multi-step tasks.

How this upgrade reaches your apps

With the Interactions API, any developer can now plug this research agent into their own apps. Deep Research can read uploaded documents, combine them with public web data, and produce structured reports with citations. Developers can also control the structure of the final output, request tables or formatted sections, or receive results in JSON for automation. Such capabilities make the AI suitable for automated analysis tools, finance workflows, scientific research aides, or knowledge apps. Because this feature is now developer-accessible, your favorite apps like finance, study, and productivity platforms can start using Deep Research behind the scenes. Since Google is also integrating Deep Research into apps like Search and Gemini, you may soon see richer, deeper answers without doing the work yourself.
Instead of manually checking multiple sources or switching between tabs, your apps could soon pull together verified information automatically. Google is also preparing to ship it directly into products like Google Search, NotebookLM, Google Finance, and the Gemini app. As this tech quietly slips into the apps you already rely on, the idea of research may soon feel less like a chore and more like something your phone simply handles for you.

[4]

Google's New Deep Research Agent Scores SoTA Results on Benchmarks | AIM

Google has announced the Gemini Deep Research agent, which is based on the Gemini 3 Pro model and offered through the Interactions API. This helps developers integrate autonomous research capabilities into their applications. "By scaling multi-step reinforcement learning for search, the agent autonomously navigates complex information landscapes with high accuracy," the company said. The tool plans its research iteratively: it formulates queries, reads sources, identifies gaps and searches again to fill them. "This release features vastly improved web search, allowing it to navigate deep into sites for specific data," Google added. On the Humanity's Last Exam benchmark, which tests AI models on expert-level reasoning and problem-solving across a broad range of academic subjects, the Deep Research agent scored 46.4%, outperforming OpenAI's GPT-5 Pro (38.9%). On the BrowseComp benchmark, which evaluates LLMs on locating 'hard-to-find' facts, Google's Deep Research agent scored 59.2%, only slightly below GPT-5 Pro (59.5%). Alongside the announcement, Google also introduced a new benchmark called DeepSearchQA, designed to test agent comprehensiveness on web research tasks. The Gemini Deep Research agent scored 66.1%, outperforming GPT-5 Pro (65.2%). DeepSearchQA features 900 hand-crafted tasks across 17 fields, where each step depends on prior analysis. "Unlike traditional fact-based tests, DeepSearchQA measures comprehensiveness, requiring agents to generate exhaustive answer sets. This assesses both research precision and retrieval recall," Google said. Google said the agent will soon be available in Google Search, NotebookLM, Google Finance and the Gemini app.
The API pricing matches the Gemini 3 Pro model: it costs $2 per million input tokens, while output tokens are priced at $12 per million for prompts up to 200,000 tokens and $18 per million for prompts that exceed that length.
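The tiered pricing above is easy to misread, so here is a minimal Python sketch of the arithmetic. It assumes exactly the rates reported in this article (a flat $2 per million input tokens, and $12 or $18 per million output tokens depending on whether the prompt exceeds 200,000 tokens); the constant names and `estimate_cost` are hypothetical, and the rates should be checked against Google's current price list.

```python
# Rates as reported in this article (USD per million tokens).
INPUT_RATE = 2.00
OUTPUT_RATE_STANDARD = 12.00   # prompts up to 200,000 tokens
OUTPUT_RATE_LONG = 18.00       # prompts longer than 200,000 tokens
LONG_PROMPT_THRESHOLD = 200_000


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one request; the prompt length selects the output tier."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    output_rate = OUTPUT_RATE_LONG if input_tokens > LONG_PROMPT_THRESHOLD else OUTPUT_RATE_STANDARD
    cost = input_tokens / 1_000_000 * INPUT_RATE + output_tokens / 1_000_000 * output_rate
    return round(cost, 4)


if __name__ == "__main__":
    print(estimate_cost(150_000, 20_000))
    print(estimate_cost(250_000, 20_000))
```

Under these rates, a 150,000-token prompt with a 20,000-token report comes to about $0.54, while a 250,000-token prompt with the same output costs about $0.86, because the longer prompt moves the output into the $18 tier.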

[5]

Google upgrades Gemini Deep Research's search and problem-solving capabilities - SiliconANGLE

Google LLC today released a new version of Gemini Deep Research, an artificial intelligence agent designed to automate complex tasks such as crafting financial reports. The company first introduced the tool last December. The initial version used Gemini 1.5 Pro, which at the time was Google's flagship large language model. The new version that debuted today is based on Gemini 3 Pro, a significantly more capable model released last month. One of the areas where Gemini 3 Pro performs better than its predecessors is visual reasoning. According to Google, it can perform tasks such as planning the travel paths of a warehouse robot. When the LLM is applied to document processing use cases, it can extract information from handwritten text, charts and mathematical notation. The new release of Gemini Deep Research uses Gemini 3 Pro's visual reasoning features to automate data retrieval tasks. Users can upload documents and have the agent scan them to find a specific piece of information. Alternatively, Gemini Deep Research can be instructed to condense the documents into a report or enrich them with information from the web. Google says that the agent's new release introduces significantly improved web search capabilities. "Deep Research iteratively plans its investigation - it formulates queries, reads results, identifies knowledge gaps, and searches again," Google DeepMind product manager Lukas Haas and group product manager Shrestha Basu Mallick explained in a blog post. Gemini Deep Research is available through a new application programming interface called the Interactions API, which debuted alongside it. It enables developers to access the agent and the Gemini model series through a single access point. In the future, Google will add more pre-packaged agents to the Interactions API along with support for custom agent development.
Besides providing centralized access to multiple AI offerings, the API also eases certain programming tasks. It automates some of the work involved in managing the data that users upload to an AI application for processing. Additionally, there's a Model Context Protocol (MCP) tool for connecting AI models to third-party systems. Google evaluated Gemini Deep Research's capabilities using two benchmarks called HLE and DeepSearchQA. According to the company, it achieved record performance on both tests. HLE is a particularly difficult AI benchmark that comprises over 2,500 questions. More than half of the questions relate to math, physics and programming. Google says that Gemini Deep Research solved 46.4% of the problems in HLE correctly. The other benchmark that Google used in the evaluation, DeepSearchQA, is an internally developed dataset it open-sourced today. It comprises 900 multi-step tasks in which each step "depends on prior analysis." Google says that the benchmark measures the precision and comprehensiveness of AI models' research.

[6]

Google releases reimagined Gemini Deep Research on Gemini 3 Pro

Google released a reimagined version of its research agent, Gemini Deep Research, on Thursday, coinciding with OpenAI's announcement of GPT-5.2. The update builds on the Gemini 3 Pro foundation model to enhance factual accuracy and enable developer integration for advanced AI applications. The new Gemini Deep Research agent retains its ability to generate research reports while introducing expanded functionalities. Developers can now embed Google's state-of-the-art (SOTA) research capabilities directly into their own applications. This integration occurs through the newly launched Interactions API, which provides developers with increased control over AI operations as agentic systems become more prevalent in software development. At its core, the agent processes and synthesizes vast amounts of information efficiently. It manages large context dumps within prompts, allowing it to handle complex data sets without losing coherence. Customers already employ the tool for practical applications, including due diligence processes in business and drug-toxicity safety research in pharmaceuticals, demonstrating its utility in real-world scenarios requiring precise information handling. Google intends to incorporate the deep-research agent into several of its existing services to broaden accessibility. These include Google Search for improved query resolution, Google Finance for detailed market analysis, the Gemini App for user interactions, and NotebookLM for note-taking and knowledge organization. Such integrations aim to leverage the agent's strengths across Google's ecosystem. The agent's performance relies heavily on the Gemini 3 Pro model's design as Google's most factual foundation model. This model undergoes training specifically to minimize hallucinations, instances where large language models generate inaccurate information.
Hallucinations pose a significant risk in long-running, deep-reasoning tasks, where agents make numerous autonomous decisions over extended periods such as minutes or hours. A single hallucinated choice in these sequences can compromise the validity of the entire output, making reduced hallucination rates essential for reliable operation. To substantiate its advancements, Google developed a new evaluation benchmark named DeepSearchQA. This benchmark assesses AI agents on complex, multi-step information-seeking tasks that mimic real research challenges. Google has made DeepSearchQA available as an open-source resource, enabling the broader AI community to test and compare agent capabilities using standardized metrics.
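The compounding argument above (one hallucinated step can invalidate a long run) can be made concrete with a simple probability model. This Python sketch assumes, purely for illustration, that every step hallucinates independently with the same probability p, so a run of n steps stays error-free with probability (1 - p)^n. Real agent errors are neither independent nor uniform, and the 1% rate used below is a made-up number, not a measured figure for any Gemini model.

```python
def prob_error_free(p_step: float, n_steps: int) -> float:
    """Chance that n independent steps all avoid a hallucination, given a per-step rate p_step."""
    if not 0.0 <= p_step <= 1.0:
        raise ValueError("p_step must be a probability between 0 and 1")
    if n_steps < 0:
        raise ValueError("n_steps must be non-negative")
    return (1.0 - p_step) ** n_steps


if __name__ == "__main__":
    # Hypothetical 1% per-step hallucination rate, chosen only for illustration.
    for steps in (1, 10, 100):
        print(f"{steps:>3} steps: {prob_error_free(0.01, steps):.3f}")
```

With that hypothetical 1% rate, the chance of a clean run falls to about 0.90 after 10 steps and about 0.37 after 100, which is why long-horizon agents put so much weight on per-step accuracy.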

[7]

Google just opened up its most powerful AI research agent - and this quietly changes how developers build 'thinking' apps

Deep Research opens up to developers

Gemini 3 Pro powers reasoning core

New benchmark tests multi-step AI thinking

Google is making a decisive push toward more capable, more autonomous AI, and this time it's putting serious tools directly into developers' hands. The company has rolled out an upgraded version of its Deep Research agent, opening it up to developers for the first time. At its core is Gemini 3 Pro, Google's most advanced multimodal model yet, designed to handle long, complex research workflows rather than quick, one-off answers. Deep Research isn't about surfacing links or summarising a single webpage. It works more like a human researcher: forming queries, scanning results, identifying gaps, and iterating until it reaches a well-reasoned conclusion. Google says this looped, self-checking approach helps reduce hallucinations and improves the quality of long-form insights. Originally launched inside the Gemini app in late 2024, Deep Research is now stepping beyond Google's own products. Developers can embed it into their apps, workflows, and internal tools, effectively turning it into a persistent research assistant rather than a simple chatbot. Under the hood, Gemini 3 Pro acts as the agent's reasoning engine. In Google's internal tests, the Deep Research agent reportedly outperformed standard web search modes, even those powered by the same model, on multi-step, cross-domain queries. The company is careful to add that the system isn't infallible, but positions it as a strong exploratory tool, especially for unfamiliar or layered topics. Alongside the agent, Google is also releasing DeepSearchQA, an open-source benchmark aimed at testing how well AI systems sustain reasoning across multiple steps. Unlike traditional benchmarks that focus on isolated facts, DeepSearchQA is built around 900 "causal chain" tasks spanning 17 domains, including policy, history, climate science, and health.
The focus here is completeness and reasoning continuity, not just correct answers. For developers, the Deep Research API brings practical tools: PDF and CSV parsing, structured report templates, granular source citations, and JSON-based outputs for smoother integration. Google says upcoming updates will add native chart generation and broader support for the Model Context Protocol (MCP), allowing developers to plug in their own data sources. The company is also introducing a new Interactions API, replacing the older request-response style interface with something more stateful and session-based. Available in public beta through Google AI Studio, it supports long-running tasks, background execution, and persistent context: key building blocks for more autonomous AI agents. Taken together, these updates signal a shift in Google's AI strategy. The focus is no longer just on generating text, but on building systems that can reason, research, and operate with a degree of independence. With Deep Research now open to developers and a benchmark designed to keep models honest, Google is clearly betting that the future of AI lies in asking better questions, not just answering them.
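The stateful, session-based style described above can be illustrated without touching any real endpoint. In this Python sketch, `ResearchSession`, `start` and `wait_for` are hypothetical names, not the Interactions API's actual methods; a one-worker thread pool stands in for background execution on Google's side, `time.sleep` stands in for a long research job, and a plain list plays the role of persistent context.

```python
import time
from concurrent.futures import Future, ThreadPoolExecutor
from dataclasses import dataclass, field


@dataclass
class ResearchSession:
    """Hypothetical stateful session: context persists across turns, work runs in the background."""

    history: list[str] = field(default_factory=list)
    pool: ThreadPoolExecutor = field(default_factory=lambda: ThreadPoolExecutor(max_workers=1))

    def start(self, prompt: str) -> Future:
        # Record the request so later turns can build on earlier ones (persistent context).
        self.history.append(f"user: {prompt}")
        # Hand the job to a background worker and return immediately.
        return self.pool.submit(self._run, prompt)

    def _run(self, prompt: str) -> str:
        time.sleep(0.1)  # stand-in for a long-running research task
        report = f"report on {prompt!r} (context entries seen: {len(self.history)})"
        self.history.append(f"agent: {report}")
        return report

    def close(self) -> None:
        self.pool.shutdown(wait=True)


def wait_for(job: Future, poll_seconds: float = 0.05, timeout: float = 5.0) -> str:
    """Poll a background job until it finishes or the timeout expires."""
    deadline = time.monotonic() + timeout
    while not job.done():
        if time.monotonic() > deadline:
            raise TimeoutError("research job did not finish in time")
        time.sleep(poll_seconds)
    return job.result()


if __name__ == "__main__":
    session = ResearchSession()
    print(wait_for(session.start("EV battery supply chains")))
    print(wait_for(session.start("Compare with last year's figures")))
    session.close()
```

The caller starts a job, keeps control, and polls for completion. Because the session keeps its history, the second request sees the earlier exchange instead of starting from scratch.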

[8]

Google Unveils Gemini Deep Research The Same Day As OpenAI's GPT-5.2 Launch, Intensifying AI Face-Off - Alphabet (NASDAQ:GOOG), Alphabet (NASDAQ:GOOGL)

Alphabet's Google (NASDAQ:GOOGL) (NASDAQ:GOOG) has launched an enhanced version of its research agent, Gemini Deep Research, which is designed to revolutionize the way AI is used for research and development.

New Gemini Agent Powers AI Research

On Thursday, Google unveiled a new and improved version of its research agent, Gemini Deep Research, which is based on the advanced Gemini 3 Pro model. Besides producing research reports, it also allows developers to integrate Google's state-of-the-art (SOTA) research capabilities into their own applications. The Gemini Deep Research tool is equipped to synthesize vast amounts of information and manage large context dumps in the prompt. Google states that its customers use this tool for various tasks, from due diligence to drug toxicity safety research. Google will embed its new deep research agent across products like Search, Finance, the Gemini app, and NotebookLM, marking a shift toward AI-driven information retrieval. The tool is powered by Gemini 3 Pro, which Google describes as its most factual model, designed to reduce hallucinations during complex research tasks.

OpenAI, Google Intensify AI Competition

The launch of the new Gemini Deep Research tool came on the same day OpenAI introduced its most advanced AI model, GPT-5.2, which it claimed to be its best offering yet for everyday professional use. The company also stated that the update improves spreadsheet creation, presentation building, image understanding, coding, and long-context comprehension. After Anthropic and Google rolled out new models last month, OpenAI reportedly declared a "code red," shifting resources toward upgrading ChatGPT and putting other projects on hold. This move by Google is likely to intensify the competition between the two tech giants in the AI space.
Earlier in December, CNBC commentator Jim Cramer predicted that OpenAI could fall behind due to the recent advancements in AI technology, particularly the introduction of Google's Gemini 3 AI model. Cramer suggested that this could lead to a surge of tens of millions of users to the Gemini 3 platform.

Disclaimer: This content was partially produced with the help of AI tools and was reviewed and published by Benzinga editors.

[9]

Google Takes the Battle for AI Supremacy Back to OpenAI with New Gemini Deep Research

The latest effort would likely help Google integrate its AI apps more tightly with its workplace offerings.

Barely hours after OpenAI came out with its latest frontier model, GPT-5.2, to take on Google's Gemini 3, the search giant pushed right back with a "reimagined" version of its research agent, Gemini Deep Research, based on its Gemini 3 Pro foundation model. The new agent is not only designed to generate research reports; it also allows developers to embed Google's state-of-the-art (SOTA) research capabilities into their own apps. This has been made possible through Google's Interactions API, which gives developers more control while creating new agentic-era solutions. According to a blog post, the Interactions API introduces a native interface specifically designed to handle complex context management when building agentic applications with interleaved messages, thoughts, tool calls and their state. "Alongside our suite of Gemini models, the Interactions API provides access to our first built-in agent: Gemini Deep Research (Preview), a state-of-the-art agent capable of executing long-horizon research tasks and synthesizing findings into comprehensive reports," Google says. The new Gemini Deep Research tool comes equipped with the capability to synthesise massive amounts of data and the ability to handle an equally voluminous context in a prompt, making it well suited to tasks ranging from due diligence testing to safety research around drugs. Google also plans to integrate the research agent into its services such as Google Search, Google Finance, the Gemini App and NotebookLM. "Gemini Deep Research is an agent optimized for long-running context gathering and synthesis tasks. The agent's reasoning core uses Gemini 3 Pro, our most factual model yet, and is specifically trained to reduce hallucinations and maximize report quality during complex tasks.
By scaling multi-step reinforcement learning for search, the agent autonomously navigates complex information landscapes with high accuracy," Google says in another blog post. This puts Google once again a step ahead in the integration race, as the company wants AI agents to do the work that humans do in Google's work suite products. The company claims that Gemini 3 Pro, the model behind Deep Research, is its most factual model yet, trained to minimise hallucinations while attempting complex tasks. To prove its claims, the company says it has created another benchmark, named DeepSearchQA, to test agents on complex, multi-step, information-heavy tasks. Google has open-sourced this benchmark, though most of us watching the number of benchmarks grow would wonder what another one can add to this already crowded arena. "Deep Research iteratively plans its investigation - it formulates queries, reads results, identifies knowledge gaps, and searches again. This release features vastly improved web search, allowing it to navigate deep into sites for specific data," Google says. DeepSearchQA features 900 hand-crafted "causal chain" tasks across 17 fields, where each step depends on prior analysis. Unlike traditional fact-based tests, DeepSearchQA measures comprehensiveness, requiring agents to generate exhaustive answer sets. This assesses both research precision and retrieval recall, Google claims. The company has provided developer documentation and invites developers to start building, while promising that future updates will deliver richer outputs such as native chart generation for visual analytical reports, and better connectivity via Model Context Protocol (MCP) support for easier access to custom data sources.

[10]

Google launches upgraded Gemini Deep Research agent: Here's what it can do

Google has rolled out a major upgrade to its Gemini Deep Research agent, giving developers access to a far more powerful system for long-form research, analysis and information gathering. Interestingly, Google's announcement arrived on the same day OpenAI launched GPT-5.2. Instead of returning quick answers, the agent plans its work carefully: it creates search queries, reads through results, identifies what it still doesn't know, and searches again. This process helps it gather deeper, more accurate information from across the web. At the core of the upgraded agent is Gemini 3 Pro, Google's most factual model yet. It has been trained specifically to reduce hallucinations and to produce clearer, more reliable reports. According to the tech giant, the upgraded Gemini Deep Research agent achieves "state-of-the-art results on Humanity's Last Exam (HLE) and DeepSearchQA, and is our best on BrowseComp." "Deep Research is now more useful and intelligent than ever, and will soon be available in Google Search, NotebookLM, Google Finance and upgraded in the Gemini App." Developers can use the Deep Research agent to analyse uploaded documents, combine them with web findings, and generate structured reports. It also supports custom formatting, which means you can control the output via prompting. Google says future updates will bring built-in chart generation, better connections to custom data sources through MCP, and availability through Vertex AI for enterprise use. The tech giant is also open-sourcing DeepSearchQA, a benchmark built to test how well research agents handle long, multi-step tasks. The benchmark includes 900 carefully designed tasks across 17 fields.
Unlike simple fact-checking datasets, DeepSearchQA "measures comprehensiveness, requiring agents to generate exhaustive answer sets. This assesses both research precision and retrieval recall," Google explains.
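Google describes DeepSearchQA as scoring exhaustive answer sets for both precision and recall. The sources here do not give the exact formula, so this Python sketch assumes a plain set comparison: precision is the share of returned answers that are correct, recall is the share of reference answers that were found, and F1 combines the two. The normalization step and the function names are illustrative assumptions, not the benchmark's official scorer.

```python
def normalize(answers: set[str]) -> set[str]:
    """Case- and whitespace-insensitive matching (an illustrative choice)."""
    return {a.strip().lower() for a in answers if a.strip()}


def set_scores(predicted: set[str], reference: set[str]) -> tuple[float, float, float]:
    """Return (precision, recall, F1) for a predicted answer set against a reference set."""
    pred, ref = normalize(predicted), normalize(reference)
    hits = len(pred & ref)
    precision = hits / len(pred) if pred else 0.0
    recall = hits / len(ref) if ref else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    reference = {"France", "Germany", "Italy", "Spain"}
    predicted = {"france", "Germany ", "Portugal"}
    print(set_scores(predicted, reference))
```

An agent that returns only a couple of safe answers can keep precision high while recall drops; the comprehensiveness Google emphasizes corresponds to pushing recall up without flooding the answer set with wrong entries.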


Google upgraded its Gemini Deep Research AI agent with Gemini 3 Pro and launched the Interactions API, enabling developers to embed advanced research capabilities into their apps. The release came the same day OpenAI launched GPT-5.2, intensifying competition in the AI agent space. Deep Research will soon integrate into Google Search, NotebookLM, and other consumer apps.

Google Unveils Upgraded Gemini Deep Research Powered by Gemini 3 Pro

Google released a reimagined version of Gemini Deep Research on Thursday, transforming its AI agent from a standalone research tool into a developer-accessible platform that can be embedded into third-party applications [1]. The upgraded agent runs on Gemini 3 Pro, Google's most factual foundation model, designed to minimize hallucinations during complex tasks [2]. This release marks a strategic shift for Google, positioning its advanced research capabilities as infrastructure that developers can integrate into everyday apps rather than keeping them confined to Google's ecosystem.


The timing of the announcement proved notable, arriving on the same day OpenAI launched its highly anticipated GPT-5.2 model, codenamed Garlic [1]. The simultaneous releases underscore the intensifying competition between tech giants racing to dominate the emerging agentic AI era, where autonomous agents handle complex, multi-step tasks without constant human supervision.


Interactions API Opens Advanced Research Capabilities to Third-Party Developers

The launch centers on Google's new Interactions API, which serves as a unified interface for interacting with models and agents [2]. The API reflects the latest model capabilities, including thinking and advanced tool use that extend beyond simple text generation. Developers can now add autonomous research capabilities to their applications, enabling finance platforms, productivity tools, and scientific research aides to tap the same agent Google is building into its own products [3].

The Interactions API provides centralized access to multiple AI offerings, automates some of the work of managing data that users upload, and supports connections to third-party systems through a Model Context Protocol (MCP) tool [5]. Because the agent is available to developers now through Google AI Studio, users may soon see richer, deeper answers in their favorite apps without manually checking multiple sources [3]. The API pricing matches Gemini 3 Pro model rates: $2 per million input tokens, while output tokens cost $12 per million for prompts up to 200,000 tokens and $18 per million for longer prompts [4].

How Gemini Deep Research Works Through Iterative Planning and Improved Web Search

Gemini Deep Research operates like a real researcher rather than a simple chatbot. The AI agent formulates queries, reads results, identifies knowledge gaps, and searches again until it builds a complete, well-sourced answer [2]. This release features vastly improved web search capabilities, allowing the agent to navigate deep into sites for specific data [4]. By scaling multi-step reinforcement learning for search, the agent autonomously navigates complex information landscapes with high accuracy [2].
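The plan, search, read, find-gaps, search-again cycle described above can be sketched as a small loop. Everything here is a stand-in: facts are plain strings, search is a dictionary lookup over a toy `CORPUS`, and each remaining gap is reused directly as the next query, whereas the real agent uses Gemini 3 Pro to plan queries and read live web pages. The names `deep_research`, `CORPUS` and `stub_search` are hypothetical.

```python
from typing import Callable

# Toy "web": each query maps to the facts its results would contain (hypothetical data).
CORPUS: dict[str, set[str]] = {
    "ev batteries": {"lithium demand", "cobalt sourcing", "battery recycling"},
    "cobalt mining share": {"cobalt mining share"},
}


def stub_search(query: str) -> set[str]:
    return CORPUS.get(query, set())


def deep_research(
    needed: set[str],
    search: Callable[[str], set[str]],
    seed_queries: list[str],
    max_rounds: int = 5,
) -> tuple[set[str], int]:
    """Search, keep relevant facts, find gaps, and search again until done or out of budget."""
    known: set[str] = set()
    tried: set[str] = set()
    queries = list(seed_queries)
    rounds = 0
    while queries and rounds < max_rounds:
        rounds += 1
        for query in queries:
            tried.add(query)
            known |= search(query) & needed  # "read" results, keeping only relevant facts
        gaps = needed - known  # identify what is still missing
        # Turn each untried gap into a follow-up query.
        queries = [gap for gap in sorted(gaps) if gap not in tried]
    return known, rounds


if __name__ == "__main__":
    needed = {"lithium demand", "cobalt sourcing", "cobalt mining share"}
    print(deep_research(needed, stub_search, ["ev batteries"]))
```

In the toy run, the first round surfaces two of the three needed facts, the remaining gap becomes a follow-up query, and the second round closes it. The `tried` set stops the loop from re-issuing a query that already failed, and `max_rounds` caps the total budget.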


The agent uses Gemini 3 Pro's visual reasoning features to automate data retrieval tasks, letting users upload documents and have them scanned for specific information, condensed into reports, or enriched with information from the web [5]. Developers can control the structure of final outputs, request tables or formatted sections, or receive results in JSON for automation [3]. Google says customers already use the agent for tasks ranging from due diligence to drug toxicity safety research, where they need to synthesize mountains of information [1].
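For the JSON-for-automation case mentioned above, a downstream app would typically validate a report before acting on it. The schema in this Python sketch (a title plus findings, each with a claim and a list of sources) is a hypothetical example invented for illustration, not Gemini Deep Research's actual output format; only the standard-library `json` module is used.

```python
import json


def parse_report(raw: str) -> dict:
    """Parse a JSON report and enforce a hypothetical schema before automation uses it."""
    report = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on malformed input
    if not isinstance(report, dict) or not isinstance(report.get("title"), str):
        raise ValueError("report must be an object with a string 'title'")
    findings = report.get("findings")
    if not isinstance(findings, list):
        raise ValueError("report must contain a 'findings' list")
    for i, item in enumerate(findings):
        if not isinstance(item, dict) or not isinstance(item.get("claim"), str):
            raise ValueError(f"finding {i} needs a string 'claim'")
        sources = item.get("sources")
        # Reject uncited claims so they never reach downstream automation.
        if not isinstance(sources, list) or not sources:
            raise ValueError(f"finding {i} has no sources")
    return report


if __name__ == "__main__":
    raw = json.dumps({
        "title": "Supplier due diligence",
        "findings": [{"claim": "Supplier X filed for restructuring", "sources": ["https://example.com/filing"]}],
    })
    print(len(parse_report(raw)["findings"]))
```

Rejecting uncited findings up front matches the emphasis on well-sourced output: automation only ever sees claims that carry at least one citation.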

Reduced Hallucinations Critical for Long-Running Agentic Tasks

Google emphasizes that the agent's Gemini 3 Pro reasoning core is specifically trained to reduce hallucinations and maximize report quality during complex tasks [2]. AI hallucinations, where the LLM fabricates information, pose an especially crucial issue for long-running, deep reasoning agentic tasks in which many autonomous decisions unfold over minutes, hours, or longer [1]. The more choices an LLM makes, the greater the chance that even one hallucinated choice will invalidate the entire output.

This focus on factual accuracy becomes critical as Google prepares to integrate Deep Research into consumer apps including Google Search, Google Finance, the Gemini App, and NotebookLM [1]. The move represents another step toward a world where humans don't search for information themselves; their AI agents handle context gathering and information synthesis autonomously.

AI Benchmarks Show State-of-the-Art Performance Against OpenAI Competition

Google tested Gemini Deep Research on multiple AI benchmarks to demonstrate its capabilities. On Humanity's Last Exam, which tests expert-level reasoning across academic subjects, the agent scored 46.4% compared to OpenAI's GPT-5 Pro at 38.9% [4]. The benchmark comprises over 2,500 questions, with more than half relating to math, physics, and programming [5].

Google also introduced DeepSearchQA, a new open-source benchmark featuring 900 hand-crafted tasks across 17 fields where each step depends on prior analysis [4]. Unlike traditional fact-based tests, DeepSearchQA measures comprehensiveness, requiring agents to generate exhaustive answer sets that assess both research precision and retrieval recall [4]. Gemini Deep Research achieved 66.1% on DeepSearchQA versus GPT-5 Pro's 65.2%, and scored 59.2% on BrowseComp, which evaluates locating hard-to-find facts, just below GPT-5 Pro's 59.5% [4].

However, these benchmark comparisons became obsolete almost immediately, as OpenAI launched GPT-5.2 the same day, claiming it bests its rivals on a suite of benchmarks, including OpenAI's own [1]. The rapid-fire releases signal that competition in agentic AI will likely accelerate, with both companies pushing to establish their platforms as the foundation for the next generation of intelligent applications.

Summarized by Navi
