HarmonyCloak: A New Tool to Protect Musicians from AI Copyright Infringement

3 Sources


[1]

This app is saving musicians by poisoning the AI so it stops stealing music - Softonic

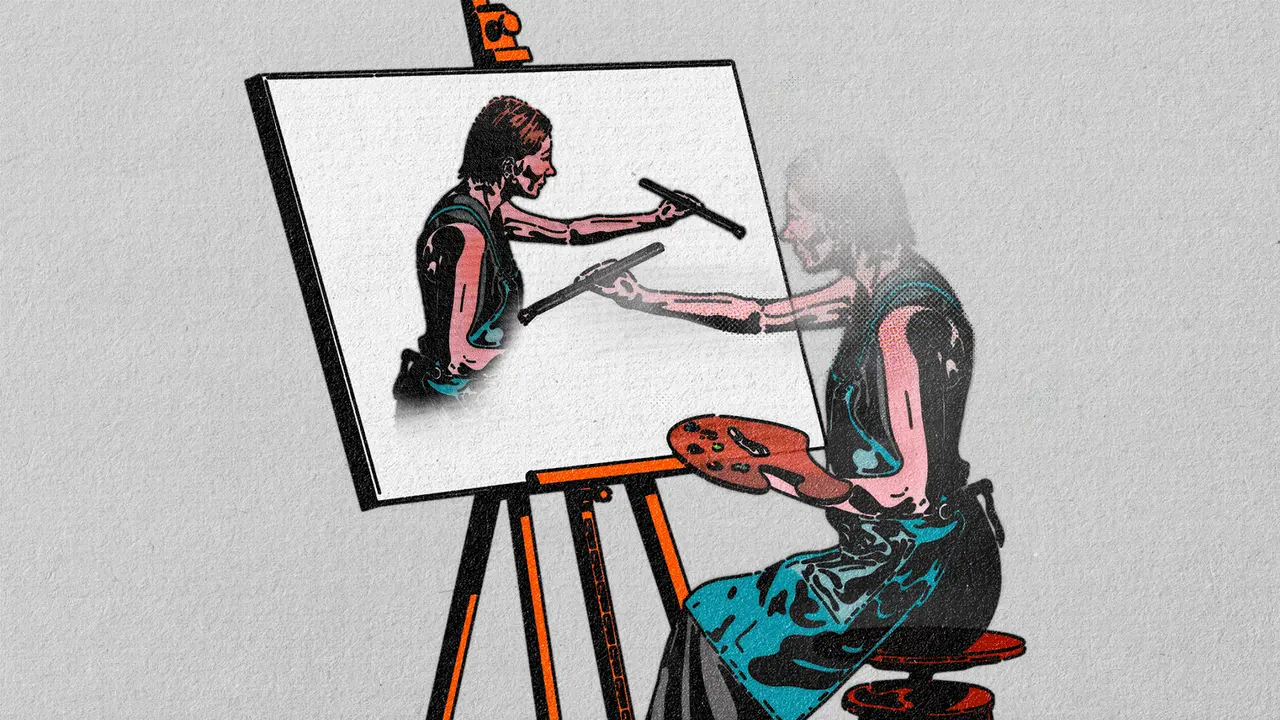

Fighting against AI by poisoning our music so that current models can't copy it

We have all heard songs and music created with artificial intelligence this past year. Apps like Suno have changed the musical landscape, and millions of artists fear for their future. Against this backdrop, an initiative has emerged that poisons the music repositories that AI steals from to create artificial music.

As you well know, artificial intelligence systems need to be fed large amounts of data, which often include copyrighted material. Musicians now have a way to defend themselves with HarmonyCloak, a system that embeds data into songs that the human ear cannot detect but that will corrupt any AI that tries to reproduce them.

Researchers from the University of Tennessee, Knoxville, and Lehigh University have developed a new tool that could help musicians protect their work from being fed into the machine. It's called HarmonyCloak, and it works by incorporating a new layer of noise into the music that human ears cannot detect but AI "ears" cannot ignore. This additional noise is dynamically created to blend with the specific features of any musical piece, remaining below the human auditory threshold. AI models that scrape the music don't know which parts to ignore, so the noise poisons the well and ruins their attempts at recreation.

The idea is that creators can use the tool to add a layer of protection to their music before uploading it to websites or streaming services, where it could get caught in the AI's nets. Similar tools are already in use for images.

HarmonyCloak can be used in two different configurations. It can be adjusted to apply noise targeted at a specific AI model, with better results that continue to apply even after the track is processed, for example by compression to MP3. Or it can generate noise that affects a range of models, so that the original creation is protected against any AI that tries to replicate it, including those that have not yet been developed.

[2]

HarmonyCloak slips silent poison into music to corrupt AI copies

A new tool called HarmonyCloak can corrupt AI's attempts to reproduce music, without affecting the quality of human listening

Generative AI systems need to be fed huge amounts of data, with copyrighted materials often on the menu. Musicians may now have a way to fight back with HarmonyCloak, a system that embeds data into songs that can't be picked up by human ears but will scramble AI trying to reproduce it.

In order to generate Facebook images that trick your Nan into praising a fake kid who made a giant Jesus out of eggs, AI systems first need to be trained on eye-watering amounts of data. The more content that's poured into these models, the more detailed, accurate and diverse the end results can be. So companies like OpenAI and Anthropic just scrape the entirety of human creative output (in other words, the internet).

But a little thing called copyright keeps cropping up as a thorn in their sides. Nintendo wasn't too pleased that Meta AI allowed users to generate emoji stickers depicting Mario characters brandishing rifles, for instance. But while the big content owners have the resources to either sue AI companies or strike up lucrative licensing deals, small creators often get shafted.

Researchers at the University of Tennessee, Knoxville and Lehigh University have now developed a new tool that could help musicians protect their work from being fed into the machine. It's called HarmonyCloak, and it works by effectively embedding a new layer of noise into music that human ears can't detect but AI 'ears' can't tune out. This extra noise is dynamically created to blend into the specific characteristics of any given piece of music, remaining below the human hearing threshold. But any errant AI models that scrape the music can't figure out which bits to ignore, so the noise kind of poisons the well and ruins their attempts at recreation.

The idea is that creators could use the tool to add a layer of protection to their music before uploading it to websites or streaming services, where it might get caught up in AI dragnets. Similar tools are already in use for images.

In one demonstration, two audio clips were generated by a model called MusicLM, based on the same prompt ("generate indie rock track") and trained on the same music. The difference is that one source is clean, while the other uses HarmonyCloak. When trained on the clean music, the AI farts out a serviceable but soulless track. It wouldn't sound out of place in the background of a car insurance commercial produced by an agency that doesn't want to pay artists. But then comes the AI-generated track trained on music protected by HarmonyCloak. The difference is stark: this mess of random noise is genuinely uncomfortable to listen to, and sounds like it was recorded by a three-legged cat hopping across a keyboard.

HarmonyCloak can be used in two different settings. It can be tuned to apply noise that's targeted at a specific AI model, with better results that still apply even after the track's been processed, such as compression to MP3. Or, it can generate noise that affects a range of models, so the original creation is protected against whichever AI attempts to replicate it, including ones that haven't been developed yet.

Normally these kinds of protections would create an arms race, where AI models just adapt to get around barriers like this. But in this case, the team says HarmonyCloak operates differently with every song, so AI would need to know the specific parameters used for each individual track before it could crack the code. It could still be done, but at least it wouldn't be easy to do en masse.

HarmonyCloak and other tools might end up helping artists survive until AI either completely destroys the value of human expression, or it chokes to death on its own regurgitations of regurgitations, whichever comes first. The researchers will present their work at the IEEE Symposium on Security and Privacy in May 2025.

[3]

New tool makes songs unlearnable to generative AI

Nearly 200 years after Beethoven's death, a team of musicians and computer scientists created a generative artificial intelligence (AI) that completed his Tenth Symphony so convincingly that music scholars could not differentiate the music originating from the AI or from the composer's handwritten notes.

Before such AI tools can generate new types of data, including songs, they need to be trained on huge libraries of that same kind of data. Companies that create generative AI models typically gather this training data from across the internet, often from websites where artists themselves have made their art available.

"Most of the high-quality artworks online are copyrighted, but these companies can get the copyrighted versions very easily," said Jian Liu, an assistant professor in the Min H. Kao Department of Electrical Engineering and Computer Science (EECS) who specializes in cybersecurity and machine learning. "Maybe they pay $5 for a song, like a normal user, and they have the full version. But that purchase only gives them a personal license; they are not authorized to use the song for commercialization."

Companies will often ignore that restriction and train their AI models on the copyrighted work. Unsuspecting users paying for the generative tool may then generate new songs that sound suspiciously similar to the human-made, copyrighted originals.

This summer, Tennessee became the first state in the US to legally protect musical artists' voices from unauthorized generative AI use. While he applauded that first step, Liu saw the need to go further, protecting not just vocal tracks but entire songs.

In collaboration with his Ph.D. student Syed Irfan Ali Meerza and Lehigh University's Lichao Sun, Liu has developed HarmonyCloak, a new program that makes musical files essentially unlearnable to generative AI models without changing how they sound to human listeners. They will present their research at the 46th IEEE Symposium on Security and Privacy (S&P) in May 2025.

"Our research not only addresses the pressing concerns of the creative community but also presents a tangible solution to preserving the integrity of artistic expression in the age of AI," he said.

Giving AIs deja vu

Liu, Meerza, and Sun were committed to protecting music without compromising listeners' experiences. They decided to find a way to trick generative AIs using their own core learning systems.

Like humans, generative AI models can tell whether a piece of data they encounter is new information or something that matches with their existing knowledge. Generative AIs are programmed to minimize that knowledge gap by learning as much as possible from each new piece of data.

"Our idea is to minimize the knowledge gap ourselves so that the model mistakenly recognizes a new song as something it has already learned," Liu explained. "That way, even if an AI company can still feed your music into their model, the AI 'thinks' there is nothing to learn from it."

Liu's team also had to contend with the dynamic nature of music. Songs often mix multiple instrumental channels with human voices, each channel spanning its own frequency spectrum, and channels can fade from the foreground to the background and change tempo as time goes on. Fortunately, just as there are ways to trick an AI model, there are ways to trick the human ear.

Undetectable perturbations

Human perception of sounds is dependent on a number of factors. Humans are unable to hear sounds that are very quiet (like music being played a mile away) or outside certain frequencies (like the pitch of a dog whistle). There are also ways to trick the ear into ignoring a sound that is technically audible. For example, a quiet noise played immediately after a louder one will go unnoticed, especially if the notes have similar frequencies.

Liu's team built HarmonyCloak to introduce new notes, or perturbations, that can trick AI models but are masked enough by the song's original notes that they evade human detection.

"Our system preserves the quality of music because we only add imperceptible noises," Liu said. "We want humans to be unable to tell the difference between this perturbed music and the original."

To test HarmonyCloak's effectiveness, Liu, Meerza, and Sun recruited 31 human volunteers along with three state-of-the-art music-generative AI models. The human volunteers gave the original and unlearnable songs similarly high ratings for pleasantness. (They can be compared at the team's website.)

Meanwhile, the AI models' outputs rapidly deteriorated, earning far worse scores from both humans and statistical metrics as more songs in their training libraries were protected by HarmonyCloak.

"These findings underscore the substantial impact of unlearnable music on the quality and perception of AI-generated music," Liu said. "From the music composer's perspective, this is the perfect solution; AI models can't be trained on their work, but they can still make their music available to the public."
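The "minimize the knowledge gap ourselves" idea quoted above can be illustrated with a toy example. The sketch below is not the researchers' algorithm: it assumes a stand-in linear "model" with a squared-error loss, and the helper names (`loss`, `error_minimizing_noise`) and the budget `eps` are hypothetical. It only shows the general principle of searching for a small, bounded perturbation that makes a training example look already learned, in the sense that the model's loss on it drops.

```python
import numpy as np

def loss(x, y, w):
    """Squared error of a toy linear 'model' w on one example (x, y)."""
    return float((x @ w - y) ** 2)

def error_minimizing_noise(x, y, w, eps=0.05, steps=20):
    """Hypothetical helper: find delta with |delta_i| <= eps that lowers the loss on (x, y)."""
    delta = np.zeros_like(x, dtype=float)
    lr = 1.0 / (2.0 * float(w @ w))  # step size matched to this quadratic loss
    for _ in range(steps):
        residual = (x + delta) @ w - y              # model error on the perturbed input
        grad = 2.0 * residual * w                   # gradient of the loss with respect to delta
        delta = np.clip(delta - lr * grad, -eps, eps)  # descend, then stay inside the budget
    return delta

rng = np.random.default_rng(0)
w = rng.normal(size=16)   # assumed stand-in for model parameters
x = rng.normal(size=16)   # assumed stand-in for one frame of audio features
y = 0.0                   # assumed stand-in training target

delta = error_minimizing_noise(x, y, w)
print("loss on original :", loss(x, y, w))
print("loss on perturbed:", loss(x + delta, y, w))
print("largest change   :", np.max(np.abs(delta)))
```

A lower loss on the perturbed example means a smaller training signal, which is the sense in which the model "thinks" there is nothing to learn. A real system would work against actual music-generation models and would bound the perturbation by audibility rather than by a fixed `eps`.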


Researchers have developed HarmonyCloak, an innovative tool that embeds imperceptible noise into music, making it unlearnable for AI models while preserving the listening experience for humans. This technology aims to protect musicians' copyrighted work from unauthorized use in AI training.

HarmonyCloak: A Shield Against AI Music Theft

In an era where artificial intelligence (AI) is rapidly transforming the music industry, a new tool called HarmonyCloak has emerged as a potential safeguard for musicians concerned about AI copyright infringement. Developed by researchers from the University of Tennessee, Knoxville, and Lehigh University, HarmonyCloak offers a novel approach to protecting musical creations from being exploited by AI systems [1][2][3].

How HarmonyCloak Works

HarmonyCloak embeds an additional layer of noise into music that is imperceptible to human ears but confounds AI models attempting to learn or reproduce the protected tracks. The noise is generated dynamically to blend with the specific features of each musical piece while remaining below the human auditory threshold [1][2].

The tool can be used in two configurations:

- Targeted protection against specific AI models

- Broad-spectrum protection against a range of current and future AI systems
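To make the "below the human auditory threshold" constraint concrete, here is a deliberately crude sketch. It is an assumption-laden stand-in, not HarmonyCloak's method: real psychoacoustic masking depends on frequency and timing, whereas this hypothetical helper (`scale_noise_below_signal`, with made-up `frame` and `margin_db` parameters) simply keeps the added noise a fixed number of decibels quieter than the music in every frame.

```python
import numpy as np

def scale_noise_below_signal(signal, noise, frame=1024, margin_db=30.0):
    """Scale noise frame by frame so its RMS sits margin_db below the signal's RMS."""
    signal = np.asarray(signal, dtype=float)
    noise = np.asarray(noise, dtype=float)
    out = np.zeros_like(signal)
    gain = 10.0 ** (-margin_db / 20.0)      # amplitude ratio for the chosen margin
    for start in range(0, len(signal), frame):
        s = signal[start:start + frame]
        n = noise[start:start + frame]
        s_rms = np.sqrt(np.mean(s ** 2))
        n_rms = np.sqrt(np.mean(n ** 2))
        if n_rms > 0:                        # silent music frames end up with silent noise
            out[start:start + frame] = n * (gain * s_rms / n_rms)
    return out

sample_rate = 16000                                   # assumed sample rate
t = np.arange(4096) / sample_rate
music = 0.5 * np.sin(2 * np.pi * 440.0 * t)           # stand-in "music": a 440 Hz tone
raw_noise = np.random.default_rng(0).normal(size=t.size)

quiet_noise = scale_noise_below_signal(music, raw_noise)
protected = music + quiet_noise                       # what would be published
```

Tying the noise level to the music frame by frame reflects the sources' point that the perturbation is created dynamically for each piece: loud passages can hide more noise than quiet ones.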

The sources attribute the stronger results to the targeted configuration, whose protection reportedly persists even after standard audio processing such as MP3 compression [1][2].

The AI Copyright Dilemma

The development of HarmonyCloak comes in response to growing concerns about how AI companies acquire training data. Many generative AI systems are trained on vast amounts of data scraped from the internet, often including copyrighted materials [2][3]. This practice has led to legal and ethical debates, with major content owners pursuing litigation or licensing deals, while smaller creators often lack the resources to protect their work [2].

Effectiveness and Human Perception

The researchers' own evaluation indicates that HarmonyCloak degrades AI-generated music without compromising the listening experience for humans. In a study involving 31 human volunteers and three state-of-the-art music-generative AI models, the protected songs received pleasantness ratings from human listeners similar to those of the originals. By contrast, the models' outputs deteriorated as more of the songs in their training libraries were protected by HarmonyCloak, scoring far worse with both human raters and statistical metrics [3].

Implications for the Music Industry

HarmonyCloak represents a significant development in the ongoing struggle between human creativity and AI replication. It offers musicians a way to share their work publicly while maintaining control over its use in AI training. This tool could potentially reshape the landscape of music distribution and AI development in the creative industries [1][2][3].

Legal and Technological Context

The introduction of HarmonyCloak aligns with recent legal efforts to protect artists' rights in the age of AI. For instance, Tennessee became the first U.S. state to legally protect musical artists' voices from unauthorized generative AI use. HarmonyCloak extends this protection to entire songs, addressing a broader scope of potential infringement [3].

Future Developments and Challenges

While HarmonyCloak presents a promising solution, protections of this kind can spark an arms race between protection technologies and AI advancements. However, the researchers note that HarmonyCloak operates differently for every song, so an AI would need to know the specific parameters used for each track. Circumvention remains possible but would be difficult to carry out en masse [2].

The researchers will present their work at the 46th IEEE Symposium on Security and Privacy in May 2025, potentially paving the way for wider adoption and further innovations in music protection against AI exploitation [2][3].

Summarized by Navi
