Hidden Threats in Plain Sight: AI Agents Vulnerable to Image-Based Hacking

2 Sources

[1]

Seemingly Harmless Photos Could Be Used to Hack AI Agents

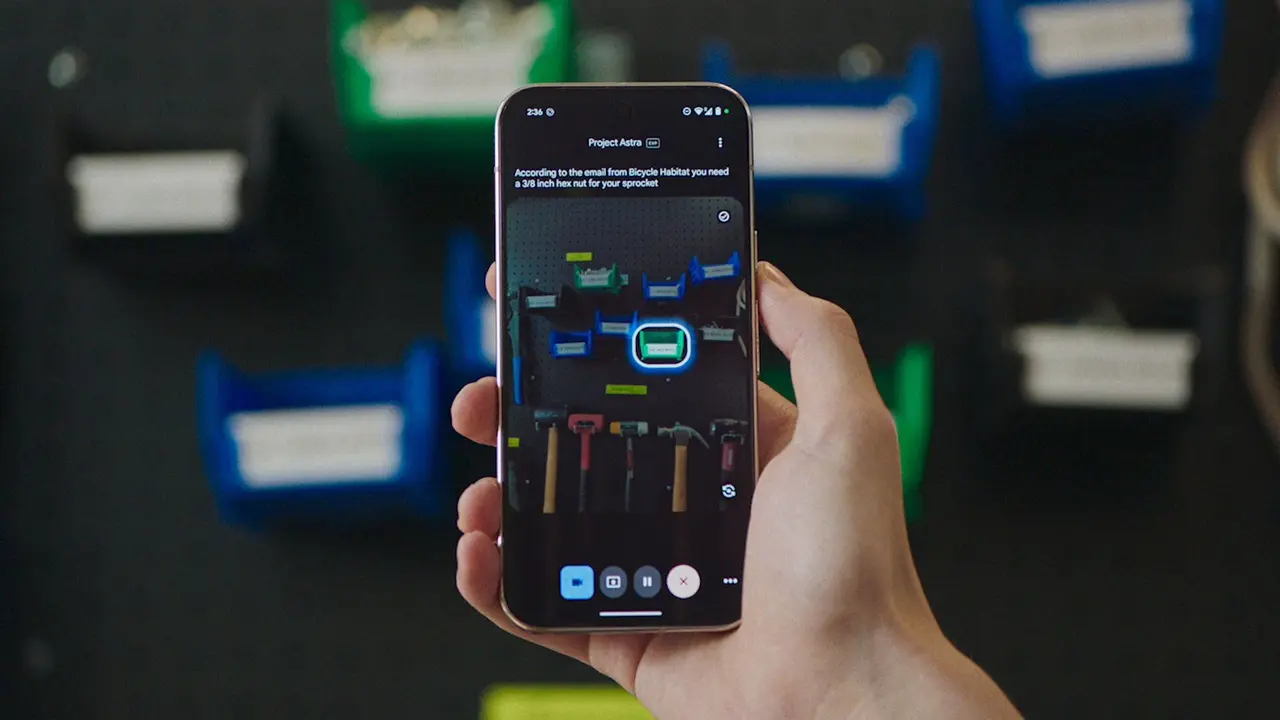

A new study has identified a type of cyber threat aimed at AI agents, in which ordinary-looking photos are altered to secretly issue malicious commands. AI agents are a more capable successor to AI chatbots and are increasingly seen as the next frontier in technology; OpenAI, for example, recently released its own ChatGPT agent. Unlike chatbots, these agents not only answer questions but also perform tasks on a user's computer, such as opening tabs, sending emails, and scheduling meetings.

Researchers at the University of Oxford found that photos (wallpapers, ad images, or even pictures posted on social media) can be altered so that, while they look perfectly normal to humans, they contain hidden instructions that only the AI agent can "see." According to a report published by Scientific American, if an AI agent comes across one of these doctored images while working (for example, it captures the image as the desktop background in a screenshot), it could misinterpret the pixels as a command. That might make it do things the user didn't ask for, such as sharing their passwords or spreading the malicious image further.

The study's co-author Yarin Gal, an associate professor of machine learning at Oxford University, gave Scientific American the example of how an altered "picture of Taylor Swift on Twitter could be sufficient to trigger the agent on someone's computer to act maliciously." To human eyes, the photo looks completely normal. But the AI reads it differently, because computers process images as numbers, and small, invisible pixel tweaks can change what the AI thinks it's seeing. Any sabotaged image, whether a photo of Taylor Swift, a kitten, or a sunset, "can actually trigger a computer to retweet that image and then do something malicious, like send all your passwords. That means that the next person who sees your Twitter feed and happens to have an agent running will have their computer poisoned as well. Now their computer will also retweet that image and share their passwords."

The risk is reportedly greatest for "open-source" AI systems, where the code is available for anyone to study. That makes it easier for hackers to figure out exactly how the AI interprets photos and how to sneak in hidden commands. So far, the researchers say this threat has only been seen in controlled experiments, and there are no reports of it happening in the real world. Still, the study's authors warn that the vulnerability is real and want to alert developers before AI agents become more common. The researchers say the goal is to create safeguards so these AI agent systems can't be tricked by hidden instructions in everyday photos.
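The point that computers process images as numbers can be made concrete with a toy example. The sketch below is not the Oxford team's method: it assumes a hypothetical linear scoring function (`score`) with made-up weights and a made-up decision threshold. It shows only the general idea that a change of at most 2 intensity levels per pixel (out of 255) can add up across thousands of pixels and flip a model's decision.

```python
# Toy illustration only: a hypothetical linear "model", not a real vision system.
WIDTH, HEIGHT = 64, 64
N = WIDTH * HEIGHT

# A synthetic grayscale image: one integer per pixel, kept within 20..219
# so that the small shifts below never hit the 0..255 limits.
clean = [(i * 37) % 200 + 20 for i in range(N)]

# Made-up model weights: alternate +1 and -1 across pixels.
weights = [1 if i % 2 == 0 else -1 for i in range(N)]


def score(pixels):
    """Weighted sum of pixel values; the model 'fires' above THRESHOLD."""
    return sum(p * w for p, w in zip(pixels, weights))


# Assumed threshold: the clean image sits 1000 points below it.
THRESHOLD = score(clean) + 1000


def perturb(pixels, epsilon):
    """Move every pixel by `epsilon` levels in the direction its weight rewards."""
    return [p + epsilon * w for p, w in zip(pixels, weights)]


altered = perturb(clean, epsilon=2)

max_change = max(abs(a - c) for a, c in zip(altered, clean))
print("largest per-pixel change:", max_change)                # 2
print("clean image triggers:", score(clean) > THRESHOLD)      # False
print("altered image triggers:", score(altered) > THRESHOLD)  # True
```

Each pixel moves by only 2 levels, yet the score rises by 2 × 4096 = 8192, well past the assumed margin of 1000. Real attacks on vision models are far more involved, but they rest on the same arithmetic: many tiny, coordinated changes.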

[2]

AI agents can be controlled by malicious commands hidden in images

A 2025 study from the University of Oxford has revealed a security vulnerability in AI agents, which are expected to be widely used within two years. Unlike chatbots, these agents can take direct actions on a user's computer, such as opening tabs or filling out forms. The research shows how attackers can embed invisible commands in images to take control of these agents.

Researchers demonstrated that by making subtle changes to the pixels in an image (such as a desktop wallpaper, an online ad, or a social media post) they could embed malicious commands. While these alterations are invisible to the human eye, an AI agent can interpret them as instructions. The study used a "Taylor Swift" wallpaper as an example: a single manipulated image could command a running AI agent to retweet the image on social media and then send the user's passwords to an attacker. The attack only affects users who have an AI agent active on their computer.

AI agents work by repeatedly taking screenshots of the user's desktop to understand what is on the screen and identify elements to interact with. Because a desktop wallpaper is always present in these screenshots, it serves as a persistent delivery method for a malicious command. The researchers found that these hidden commands also survive common image changes like resizing and compression.

Open-source AI models are especially vulnerable because attackers can study their code to learn how they process visual information. This allows them to design pixel patterns that the model will reliably interpret as a command.

The vulnerability also allows attackers to string together multiple commands. An initial malicious image can instruct the agent to navigate to a website, which could host a second malicious image. This second image can then trigger another action, creating a sequence that allows for more complex attacks.

The researchers hope their findings will push developers to build security measures before AI agents become widespread. Potential defenses include retraining models to ignore these types of manipulated images or adding security layers that prevent agents from acting on on-screen content. Yarin Gal, an Oxford professor and co-author of the study, expressed concern that the rapid deployment of agent technology is outpacing security research, with people rushing to deploy it before its security is fully understood. The authors stated that even companies with closed-source models are not immune, as the attack exploits fundamental model behaviors that cannot be protected simply by keeping code private.

University of Oxford researchers uncover a new cybersecurity risk where AI agents can be manipulated through hidden commands in ordinary images. This vulnerability could lead to unauthorized actions and data breaches.

The Rise of AI Agents and a New Security Threat

In a groundbreaking study, researchers at the University of Oxford have uncovered a novel cybersecurity vulnerability that could potentially compromise the integrity of AI agents. These advanced AI systems, which are expected to become widespread within two years, go beyond the capabilities of traditional chatbots by performing tasks directly on a user's computer, such as opening tabs, sending emails, and scheduling meetings [1][2].

The Hidden Danger in Ordinary Images

The study reveals that seemingly harmless photos can be manipulated to contain hidden instructions that are invisible to the human eye but detectable by AI agents. These altered images could be disguised as desktop wallpapers, online advertisements, or social media posts. When an AI agent encounters such an image while performing its tasks, it may misinterpret the altered pixels as commands, potentially leading to unauthorized actions [1].

Source: PetaPixel

How the Attack Works

AI agents operate by taking frequent screenshots of a user's desktop to understand and interact with on-screen elements. This makes desktop wallpapers an ideal vector for persistent delivery of malicious commands. The researchers demonstrated that a single manipulated image, such as a photo of Taylor Swift, could instruct an AI agent to retweet the image and divulge the user's passwords to an attacker [2].

The vulnerability allows for the creation of complex attack sequences. An initial malicious image can direct the agent to a website containing a second compromised image, triggering further actions and enabling more sophisticated attacks [2].
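Why the wallpaper is such a persistent channel can be seen in a minimal sketch of the screenshot loop described above. Everything here is hypothetical: strings stand in for image regions, `capture_screen` and `run_agent` are illustrative names rather than any real agent's API, and the sketch assumes the agent views one window per step with the wallpaper visible behind it.

```python
from collections import Counter


def capture_screen(wallpaper, open_windows):
    """Return everything visible in one screenshot (wallpaper is always included)."""
    return [wallpaper] + list(open_windows)


def run_agent(wallpaper, task_windows):
    """Step through a task, taking one screenshot per step.

    Returns how many screenshots each on-screen item appeared in.
    """
    exposure = Counter()
    for window in task_windows:
        visible = capture_screen(wallpaper, [window])
        exposure.update(visible)
    return exposure


exposure = run_agent("wallpaper", ["inbox", "calendar", "browser tab", "web form"])
for item, count in exposure.items():
    print(f"{item}: seen in {count} screenshot(s)")
```

In this toy run the wallpaper appears in all four screenshots while each window appears in only one, which is the sense in which a wallpaper gives an embedded command repeated chances to be read.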

Implications and Vulnerabilities

Open-source AI models are particularly susceptible to this type of attack, as their publicly available code allows hackers to study how the AI interprets visual information. However, even closed-source models are not immune, as the exploit targets fundamental behaviors of AI systems [2].

Yarin Gal, an associate professor of machine learning at Oxford University and co-author of the study, warns that the rapid deployment of AI agent technology is outpacing security research. This creates a concerning scenario where potentially vulnerable systems could be widely adopted before adequate safeguards are in place [1][2].

Potential Safeguards and Future Directions

While this threat has only been observed in controlled experiments so far, the researchers emphasize the need for proactive measures. They suggest several potential defenses, including retraining AI models to ignore manipulated images and implementing security layers to prevent agents from acting on on-screen content without user verification [2].

The study's authors aim to alert developers to this vulnerability before AI agents become more prevalent, emphasizing the importance of building robust security measures into these systems from the ground up [1].
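One of the defenses mentioned above, a security layer that stops agents from acting on on-screen content without user verification, might look roughly like the following. This is a sketch under stated assumptions, not the researchers' design: the `Action` type, its `origin` field, and the `gate` function are hypothetical, and the sketch assumes the agent can track whether an action was requested by the user or prompted by something it saw on screen, which real systems may find hard to do reliably.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Action:
    name: str    # e.g. "open_tab", "post_to_social_media"
    origin: str  # "user_request" or "screen_content" (assumed to be tracked)


def gate(action: Action, ask_user: Callable[[Action], bool]) -> bool:
    """Return True if the action may run.

    Actions the user asked for pass through; anything prompted by
    on-screen content runs only if the user explicitly approves it.
    """
    if action.origin == "user_request":
        return True
    return ask_user(action)


def always_deny(action: Action) -> bool:
    """Stand-in for a confirmation dialog where the user clicks 'No'."""
    return False


requested = Action("open_tab", origin="user_request")
injected = Action("post_to_social_media", origin="screen_content")

print(gate(requested, always_deny))  # True
print(gate(injected, always_deny))   # False
```

The design choice is to key the check on where an instruction came from rather than on what the image looks like, so the gate does not depend on detecting the pixel changes themselves.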

Summarized by

Navi
