iOS 26: Apple's AI-Powered Update Brings New Design and Enhanced Features

9 Sources

9 Sources

[1]

Liquid Glass, Live Translation, and All the Other Important New iOS 26 Features Coming to Your iPhone

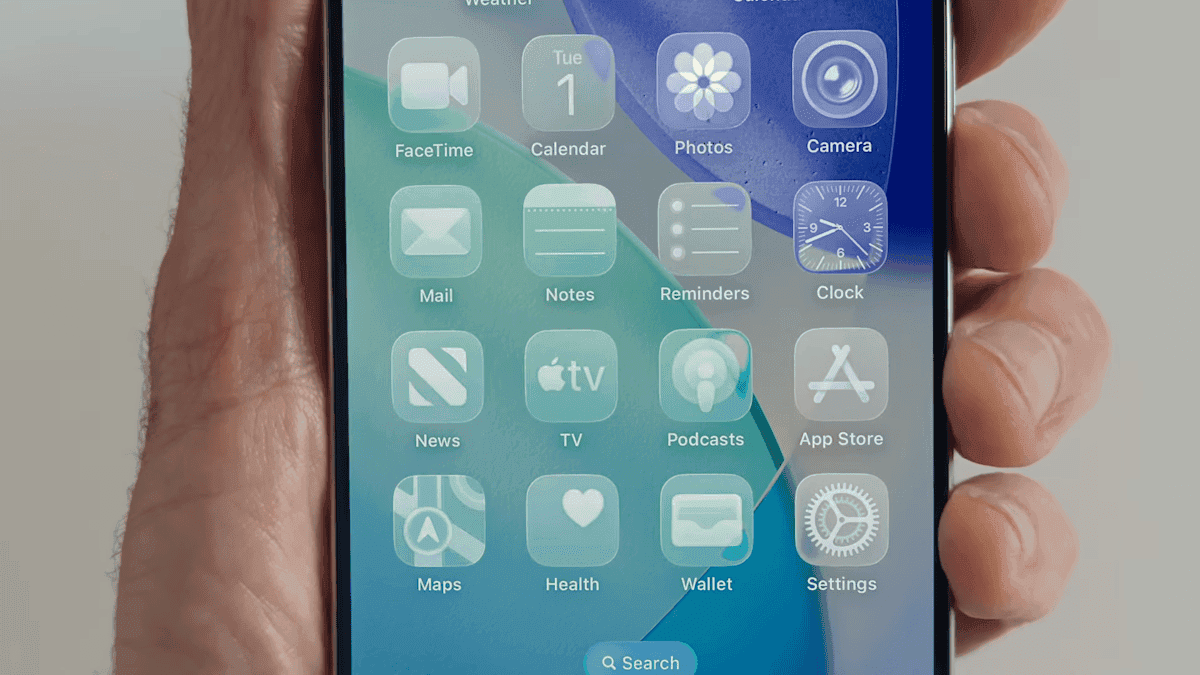

While we still don't know what new hardware the iPhone 17 models might pack, we have seen the big changes coming to iPhone software with iOS 26. Liquid Glass delivers a significant design refresh, and that's just where Apple is starting. The Photos app is getting a functional redesign, while the Messages and Phone apps are putting power back in your hands with features for waiting on hold and screening calls. Apple Intelligence is still contributing as well, even though the revamped Siri has been delayed. The next version of the operating system is due to ship in September or October (likely with new iPhone 17 models), but developer betas are available now, with a public beta expected this month. After more than a decade of a flat, clean user interface -- an overhaul introduced in iOS 7 when former Apple Chief Design Officer Jony Ive took over the design of software as well as hardware -- the iPhone is getting a new look. The new design extends throughout the Apple product lineup, from iOS to watchOS, tvOS and iPadOS. The Liquid Glass interface also enables a third way to view app icons on the iPhone home screen. Not content with Light and Dark modes, iOS 26 adds an All Clear look, in which every icon is clear glass with no color. Lock screens can also have an enhanced 3D effect using spatial scenes, which use machine learning to give depth to your background photos. Translucency is the defining characteristic of Liquid Glass, which behaves like real-world glass in the way it handles the light and color of objects behind and near controls. But it's not just a glassy look: The "liquid" part of Liquid Glass refers to how controls can merge and adapt -- dynamically morphing, in Apple's words. In the example Apple showed, the glassy time numerals on an iPhone lock screen stretched to accommodate the image of a dog and then shrank as the image shifted to make room for incoming notifications. The dock and widgets are now rounded, glassy panels that float above the background. 
The Camera app is getting a new, simplified interface. You could argue that the current Camera app is already pretty minimal, designed to make it quick to frame a shot and hit the big shutter button. But the moment you venture beyond the basics, it becomes a weird mix of hidden controls and unintuitive icons. The Camera app in iOS 26 features a "new, more intuitive design" that takes minimalism to the extreme. The streamlined design shows just two modes: Video and Photo. Swipe left or right to choose additional modes, such as Pano or Cinematic. Swipe up for settings such as aspect ratio and timers, and tap for additional preferences. With the updated Photos app, viewing the pictures you capture should be a better experience, a welcome change that customers have clamored for since iOS 18's cluttered redesign. Instead of a long, hard-to-navigate scrolling interface, Photos regains a menu at the bottom of the screen, now rendered in Liquid Glass. The Phone app has stayed closer to its roots than most apps: a sparse interface with large buttons, as if you're holding an old-fashioned handset or a pre-smartphone cellular phone. iOS 26 finally updates that look, not just with the new overall interface but with a unified layout that takes advantage of the larger screens on today's iPhone models. It's not just looks that are different, though. The Phone app is trying to be more useful for dealing with actual calls -- the ones you want to take. The Call Screening feature automatically answers calls from unknown numbers, and your phone rings only after the caller shares their name and reason for calling. Or what about all the time wasted on hold? Hold Assist automatically detects hold music and can mute the music while keeping the call connected. Once a live agent becomes available, your phone rings and the agent is told you'll be available shortly. 
The Messages app is probably one of the most used apps on the iPhone, and for iOS 26, Apple is making it a more colorful experience. You can add backgrounds to the chat window, including dynamic backgrounds that show off the new Liquid Glass interface. In addition to the new look, group texts in Messages can incorporate polls for everyone in the group to answer -- no more scrolling back to find the lunch spot Brett suggested that you missed. Other members of the chat can also add their own options to a poll. More useful still, Messages gets better spam detection and screening of unknown numbers, so the messages you see in the app are the ones you want to see and not the ones that distract you. In the Safari app, the Liquid Glass design floats the tab bar above the web page (although that puts it right where your thumb is going to be, so it will be interesting to see if you can move the bar to the top of the screen). As you scroll, the tab bar shrinks. FaceTime also gets the minimal look, with controls in the lower-right corner that disappear during the call to get out of the way. On the FaceTime landing page, posters of your contacts, including video clips of previous calls, are designed to make the app more appealing. Do you like the sound of that song your friend is playing but don't understand the language of the lyrics? The Music app includes a new lyrics translation feature that displays translations alongside the lyrics as the song plays. And when you want to sing along with one of your favorite K-pop songs, for example, but you don't speak or read Korean, a lyrics pronunciation feature spells out how to form the sounds. AutoMix blends songs like a DJ, matching the beat and time-stretching for a seamless transition. And if you find yourself listening to certain artists and albums again and again, you can pin them to the top of your music library for quick access. 
The iPhone doesn't get the same kind of gaming affection as Nintendo's Switch or Valve's Steam Deck, but the truth is that iPhones and Android phones are used extensively for gaming -- Apple says half a billion people play games on iPhone. To capitalize on that, a new Games app acts as a dedicated portal to Apple Arcade and other games. Yes, you can get to those from the App Store app, but the Games app is designed to remove a layer of friction so you can get right to the action. Although not exclusive to iOS, Apple's new Live Translation feature is ideal on the iPhone when you're communicating with others. It uses Apple Intelligence to let you talk with someone who speaks a different language in near-real time. It's available in the Messages, FaceTime and Phone apps and shows live translated captions during a conversation. Updates to the Maps app sometimes involve adding more detail to popular areas or restructuring the way you save locations. Now, the app learns the routes you travel frequently and can alert you to delays before you get on the road. It also includes a welcome feature for those of us who get our favorite restaurants mixed up: visited places. The app notes how many times you've been to a place, be it a local business, eatery or tourist destination, and organizes these places by category or by other criteria, such as city, to make them easier to find next time. Liquid Glass also makes its way to CarPlay in your vehicle, with a more compact incoming-call display that doesn't obscure other items, such as a directional map. In Messages on CarPlay, you can apply tapbacks and pin conversations for easy access. Widgets are now part of the CarPlay experience, so you can focus on just the data you want, like the current weather conditions. And Live Activities appear on the CarPlay screen, so you'll know when that coffee you ordered will be ready or when a friend's flight is about to arrive. 
The Wallet app is already home to Apple Card, Apple Pay and electronic car keys, as well as your stored tickets and passes. In iOS 26, you can create a new Digital ID that acts like a passport for age and identity verification, including TSA screening at airports for domestic travel, though it does not replace a physical passport. The app also lets you redeem rewards and set up installment payments when you buy items in a store, not just for online orders. And with the help of Apple Intelligence, the Wallet app can track your product orders, even if you didn't use Apple Pay to buy them. It can pull details such as tracking numbers from emails and texts so that information is all in one place. Although last year's WWDC featured Apple Intelligence heavily, improvements to the AI tech were less prominent this year, folded into the other announcements during the WWDC keynote. As an alternative to creating Genmoji from scratch, you can combine existing emoji -- "like a sloth and a light bulb when you're the last one in the group chat to get the joke," to use Apple's example. You can also change the expressions on Genmoji you've made from photos of people you know. Image Playground adds the ability to tap into ChatGPT's image generation tools to go beyond the app's animation or sketch styles. Visual Intelligence can already use the camera to try to decipher what's in front of the lens. Now the technology works on the content of the iPhone's screen, too. You take a screenshot (press the side and volume up buttons), and the screenshot interface offers a new Image Search option to find results across the web or in other apps such as Etsy. This is also a way to add event details from images you come across, like posters for concerts or large gatherings. (Perhaps this could work for QR codes as well?) In the screenshot interface, Visual Intelligence can parse the text and create an event in the Calendar app. 
Not everything fits into a keynote presentation -- even, or maybe especially, when it's all pre-recorded -- but some of the more interesting new features in iOS 26 went unremarked during the big reveal. For instance: The finished version of iOS 26 will be released in September or October with new iPhone 17 models. In the meantime, developers can install the first developer betas now, with an initial public beta arriving this month. (Don't forget to go into any beta software with open eyes and clear expectations.) Follow the WWDC 2025 live blog for details about Apple's other announcements. iOS 26 will run on the iPhone 11 and later models, including the iPhone SE (2nd generation and later). That includes:

[2]

7 helpful AI features in iOS 26 you can try now - and how to access them

Apple finally launched the iOS 26 public beta, and with it comes a slew of new AI features you can try out today -- and their usefulness may surprise you. While the new features may not be as flashy as the promised Siri 2.0 update, which has yet to be released, many address issues Apple users have long had with their devices or in their everyday workflows, while others are downright fun. Tired of waiting on hold for customer service? Want your song transitions in Apple Music to sound better? Are you envious of Android's Circle to Search? The new features address all of these points. I gathered the AI features announced and ranked them according to what I'm most excited to use and what people on the web have been buzzing about. Apple introduced Visual Intelligence last year with the launch of the iPhone 16. At the time, it allowed users to take photos of objects around them and then use the iPhone's AI capabilities to search for them and find more information. Last month, Apple upgraded the experience by bringing Visual Intelligence to the iPhone screen. To use it, you just have to take a screenshot. Visual Intelligence can use Apple Intelligence to grab details from your screenshot and suggest actions, such as adding an event to your calendar. You can also use the "Ask" button to ask ChatGPT for help with a particular image, which is useful for tasks such as solving a puzzle, or tap "Search" to look on the web. Although Google offered the same capability years ago with Circle to Search, this is a big win for Apple users, as it's functional and well executed. It leverages ChatGPT's capable models rather than Apple trying to build an inferior one itself. 
Since generative AI exploded in popularity, one useful application that has emerged is real-time translation. Because LLMs have a deep understanding of language and how people speak, they can translate speech not just literally, but accurately, using additional context. Apple will roll out this powerful capability across its own devices with a new real-time translation feature. The feature can translate text in Messages and audio on FaceTime and phone calls. If you use it for verbal conversations, just tap a button during your call, which alerts the other person that live translation is about to take place. After a speaker says something, there is a brief pause, and then you hear a conversational version of what was said in your language of choice, plus a transcript you can follow along with. This feature is valuable because it can help people communicate with each other. It's also easy to access because it's baked into communication platforms people already rely on every day. The AutoMix feature uses AI to add seamless transitions from one song to another, mimicking what a DJ would do with time stretching and beat matching. In the Settings app on the beta, Apple says, "Songs transition at the perfect moment, based on analysis of the key and tempo of the music." It works in tandem with the Autoplay feature, so, like a DJ, it can play another song that matches the vibe while seamlessly transitioning to it. Some users who tried it during the developer beta posted their results on social media, and they were pretty impressive. The Shortcuts update was easy to miss during the keynote, but it's one of the best use cases for AI. If you're like me, you typically avoid building Shortcuts because they seem too complicated to create. This is where Apple Intelligence can help. 
With the new intelligent actions, you can tap into Apple Intelligence models, either on-device or in Private Cloud Compute, within your shortcut, unlocking a much more advanced set of capabilities. For example, you could set up a shortcut that takes all the files you add to your home screen and sorts them into folders for you using Apple Intelligence. According to Apple, the process is meant to be as intuitive as possible, with dedicated actions whose model-generated responses feed into the rest of a shortcut. However, if even this seems too daunting, there's a gallery with sample shortcuts to try out and get inspiration from. The Hold Assist feature is a prime example of a tool that isn't over the top but has the potential to save you a lot of time in your everyday life. The way it works is simple: if you're placed on hold and your phone detects hold music, it will ask if you want your spot held in line, then notify you when it's your turn to speak with someone, alerting the person on the other end that you'll be right there. Imagine how much time you'll get back from calls with customer service. If the feature seems familiar, it's because Google has a similar "Hold for Me" feature, which uses Call Assist to wait on hold for you and notify you when a person picks up. That said, even though it may not be an original feature, it's one Apple users will be happy to have. The Apple Vision Pro introduced the idea of enjoying your precious memories in an immersive experience that places you in the scene. However, to take advantage of this feature, you had to take spatial photos and record spatial videos. 
Now, a similar feature is coming to iOS, allowing users to transform any picture they have into a 3D-like image that separates the foreground and background for a spatial effect. The best part is that you can add these photos to your lock screen, and as you move your phone, the 3D element appears to move with it. It may seem like there's no AI involved, but according to Craig Federighi, Apple's SVP of software engineering, it transforms your 2D photo into a 3D effect using "advanced computer vision techniques running on the Neural Engine." Using AI for workout insights isn't new, as most fitness wearable companies, including Whoop and Oura, have implemented features of that sort. However, Workout Buddy is an application of AI I haven't seen before. Essentially, it uses your fitness data, such as history, paces, Activity Rings, and Training Load, to give you feedback as you're working out. Even though this feature is part of the watchOS 26 upgrade -- and I don't own an Apple Watch -- it does seem like a fun and original way to use AI. As someone who lacks all desire to work out, I can see how a motivational reminder could help me stick with a workout longer. The list above is already extensive, and yet Apple unveiled a lot more AI features: Apple released the official public beta on July 24, which users can download to test the features until the final, most stable version is released in the fall with the launch of its latest devices. To enroll in the public beta, visit the Apple Beta Software Program site and click the blue sign-up button. The program is free and open to anyone with an Apple Account who accepts the Apple Beta Software Program Agreement when signing up. 
When downloading a beta, keep in mind that while it gives you access to all the latest features in iOS 26, they aren't fully developed and, as a result, can be buggy. Since you won't get the best experience, a good practice is to either use a secondary phone or make sure your data is properly backed up in case something goes wrong.

[3]

We tested iOS 26 on the iPhone 16 Pro - these 5 features make the update worthwhile

Apple introduced iOS 26 to the public at its annual Worldwide Developers Conference in early June, and since then has been polishing the software with developers ahead of the public beta release. Now that it's finally available for everyone to test, you may be wondering if it's worth diving straight in or waiting for the official launch -- likely sometime in September. That's what we're here to answer. To set the stage, Apple's iOS 26 offers a refreshing new look, more streamlined first-party app layouts, more functionality across popular services, and some smaller Easter egg features that greatly enhance your iPhone's usability. My ZDNET colleague Kerry Wan and I have been testing the public beta (via Developer Beta 4) for the past few weeks, and here are our biggest takeaways so far. When I first tested Visual Intelligence on iOS 18, I mainly used the feature to translate signage and menus when traveling abroad. Compared to competing multimodal tools on other phones, the feature was noticeably barebones. However, I've found myself using it more often on iOS 26, thanks to its improved ability to process on-screen information and follow up with relevant tasks. Specifically, being able to add a calendar event from my email has been a blessing. Apple now lets you take a screenshot of an invitation (whether from email, Messages, or anything else) and run Visual Intelligence on it, which suggests options such as Add to Calendar, Read Aloud, and Summarize. Using the first option creates an event in the Calendar app with details such as event name, time, and duration. 
While both Kerry and I find that Samsung's AI Select and Google's Circle to Search make it easier to recognize on-screen items and act on AI suggestions, some users will appreciate that Apple's approach also lets you save screenshots for future reference. You can, of course, delete the screenshot right after an event is created, but the process of taking a screenshot and then waiting for suggestions to show the 'Add to Calendar' option is something we'd love to see streamlined in future updates. It'd be great if I could trigger on-screen Visual Intelligence with a press (or double press) of the Camera Control instead of taking a screenshot. The Cupertino tech giant redesigned the iPhone's Photos app in iOS 18 last year and touted it as "the biggest-ever redesign." Over the course of 2024, I, like many others, never got used to the new UI and almost always felt frustrated when looking through my vacation photos. Apple listened to user feedback and gave us an updated layout, and I love it. The new Photos app is now divided into Library and Collections, so if I scroll to the end of my library, it doesn't automatically show any outstanding folders. Instead, those have been moved to a separate Collections tab. Overall, the app interface looks cleaner and doesn't frustrate me anymore. Fewer steps are required to access certain albums, bringing iOS 26 closer to the effortless software experience we've come to expect from Apple. While we're on this note, the ZDNET team has agreed that the ability to quickly transform virtually any still image into one with spatial depth is the "gimmicky" feature we love the most. It's fun, easy to use, and works reliably -- now more than ever on the public beta. It's the same technology that gives lock screen wallpapers that gyroscopic portrait effect. 
The biggest app redesign in iOS 26 is a simplified Camera app layout. Apple now gives you two options, Video and Photo, at the bottom; swipe up to reveal more settings like Flash, Live Photo, Timer, and more. These can also be found by tapping the settings button in the top-right corner. You can press, hold, and drag to switch to other modes like Cinematic Video, Portrait, and so on. At first, I found the new look too minimal for my taste; even if you're familiar with all the tools and settings in the Camera app, there's no clear indication -- like a menu button or expand symbol -- of how to access them. I'd imagine that more casual users will struggle, at least in the beginning, to find certain settings. Having a more minimalist design does give you the benefit of a fast and responsive UI, which is a major advantage for photographers and users looking to grab quick shots. Hiding more of the interface also makes framing and capturing subjects easier. My colleague Kerry recently went on a trip to Barcelona and Lisbon, two cities filled with architecture and tilework with Mediterranean influence. He found the revamped Camera app very intuitive when quickly switching between photo and video modes and framing scenic views. When every little detail on buildings, streets, and statues matters so much, being able to easily see how things fit within the viewfinder was appreciated. Safari's new Liquid Glass-enhanced look makes a big difference for reading and browsing web pages. Apple's browser on iOS 26 not only looks better but is more usable than before. For example, the emphasis on minimizing distracting UI means what was previously a two-row menu at the bottom (which took up a good portion of the web page) is now simply a floating three-button navigation bar that includes the back button, URL bar, and three-dot button. 
The nav bar minimizes when you scroll through a web page, and switching from one tab to another with a swipe feels faster than ever. I also love that Apple bundled the additional web page options into a three-dot menu, which houses shortcuts like Share, Add to Favorites, Add Bookmark, and more. One of our favorite new Safari features is the introduction of haptic cues. For example, you'll feel a gentle buzz when an image or file has successfully downloaded in the browser, whereas previously it would appear only as an easy-to-miss notification. These subtle software tweaks go a long way, so much so that my colleague Kerry has switched back from primarily using Chrome on his iPhone. I have waited for this feature for years, and it's finally here. No, it's not the expandable clock widget that adapts to the wallpaper. Nor is it the ability to place ticker widgets on the bottom half of the screen. Instead, it's the fact that when you charge your iPhone, iOS 26 now displays the estimated charging time. It's a small touch, but one that proves surprisingly useful, giving me glanceable info on exactly when my iPhone will be fully charged when I'm short on time. I'm also a fan of the refreshed album artwork, which now fills the entire lock screen when streaming from Apple Music, Spotify, and other services. As a solo traveler, there are times when I miss having my Apple Watch -- which I often leave at home to reduce charging needs -- when I want to record video or capture an image of myself. But now, I can trigger video recording wirelessly with my AirPods Pro 2. Apple's iOS 26 allows you to start recording video in the Camera app by pressing and holding on AirPods or AirPods Pro with the H2 chip. It should come in especially handy for content creators and people like me who prefer wearing an analog watch to an Apple Watch. 
Keep in mind that all the features mentioned here could change before the stable release later this year, including how they're accessed, how they look, and how they work. Even so, iOS 26 is shaping up to be one of the most significant updates to Apple's operating system in years, and we can't wait to see what more consumers think in the near future.

[4]

5 iPhone Features I'm Most Excited to Test in the iOS 26 Public Beta

Apple held back on futuristic announcements at this year's Worldwide Developers Conference (WWDC), drawing criticism from AI insiders (including me), but the small tweaks it debuted for iOS look like they'll be a pleasure to use on a daily basis. The formal iOS 26 update arrives this fall, but Apple just dropped the public beta. Betas are usually a solid preview of the final version and ideal for testing out the tech. Here's how to check it out, and the five iPhone features I'm most excited to try.

1. Group Text Polls to Save Us From the Slog

Group chats often create a million message notifications. One study found that 42% of people say managing them feels like a part-time job, especially when the group needs to make a choice. With the new polling feature inside Messages, you can create a quick survey that others can respond to without all that back-and-forth. That would've been a great feature for a bachelorette trip I was on recently, where we voted on which restaurant to order dinner from in a much more cumbersome way: one person sent individual texts with each option, and everyone else voted by "liking" their favorite. A formal group chat poll would've been much cleaner and easier. You can also now settle up the bill with Apple Pay right in the group chat. On that trip, we used Splitwise, which it sounds like iOS 26 could replace, saving time and mental energy.

2. Liquid Glass Is Elegant, If Slightly Underwhelming

Apple's "Liquid Glass" UI overhaul wasn't as dramatic as I expected, but it's a welcome upgrade. Because let's face it: iPhones are eye candy and status symbols. It's important for Apple to keep them looking elegant and fresh, and the redesign accomplishes that to some degree. New translucent buttons and dynamic menus make the interface look more fluid. Apple mentioned in the keynote that the new UI could influence future app development, which I would've liked to hear more about. 
I also expected the redesign to radically change the way the devices looked, making iOS 18 feel old in an instant. It didn't go that far, but I think there's room for Apple to keep pushing the design over the next year and get it there.

3. Spam Is Ruining iPhones: Enter 'Call Screening,' Unknown Message Folder

Constant pings from unknown numbers, spammers, and AI impersonators are ruining the smartphone experience, and in iOS 26, Apple is rightly trying to get a handle on the situation with new tools for screening callers and filtering out unwanted texts. Call Screening builds on the Live Voicemail feature introduced in iOS 17. It will "answer" a call for you, find out who's calling and what they want, and then ring your phone with the details and give you the option to pick up or send the call to voicemail. I worry it might be a little restrictive, especially when someone you know happens to be calling from an unknown number, so we'll have to see how it plays out over time. (Google and Samsung already offer a similar feature, CNET points out.) For texts, Apple introduced a feature that keeps messages from unknown senders "silenced until a user accepts them." They'll appear in a dedicated folder so they don't clutter up your texts; you can access them from the top-right corner above your texts. If you've just exchanged numbers with someone, check that folder so their message doesn't get lost!

4. Live Translation Tears Down Language Barriers

This one impressed me most from a technical perspective. A new Live Translation feature works in real time during phone and video calls. You can also translate texts without needing to leave the screen. I genuinely need these features for travel and for speaking with my in-laws. Here's how it works: If you call someone who doesn't speak your language, you activate Live Translation once you're on the line with them. It notifies the other person that you've turned it on, like Zoom does when you press "record" on a video call. 
Then, when you speak, they will hear your words in their language, and vice versa, after a delay of just a few seconds. You can also see a written transcription on the phone screen, which pops up just before the audio starts. This could truly change the way people interact across borders. Even though Google debuted a similar feature in May, I say the more the merrier.

5. Spatial Images Make 2D a Thing of the Past

This one is fun, if admittedly pretty minor, but it could be the start of a new era for digital photos. A new 3D spatial photo feature brings lock screen images to life, borrowing tech from the Vision Pro. As you move your phone around, the image transforms from 2D to 3D. Will this change the way we approach photography? At the very least, it could make iPhones even prettier, which, as I mentioned in the Liquid Glass section, is actually pretty important for the brand. It relies on "advanced computer vision techniques running on the Neural Engine," Craig Federighi, Apple's senior vice president of software engineering, said on stage at WWDC. You can also try it out within the Photos app. I didn't find the other Lock Screen changes Apple introduced as compelling, such as one that expands the clock to fit the available space in the image you select. Big whoop. Apple also enhanced the spatial photos feature on the Vision Pro with visionOS 26. The new experience makes you feel like you're looking around the photo, into a bigger scene. It relies on a "new generative AI algorithm and computational depth to create spatial scenes with multiple perspectives, letting users feel like they can lean in and look around," Apple says.

[5]

iOS 26 preview: Liquid Glass is more than just a visual refresh

At WWDC 2025, Apple revealed a major visual shake-up for iOS (not to mention the rest of the company's operating systems). This is the biggest change, aesthetically, since the shift away from the stitching, textures and skeuomorphic design of iOS 4. It also comes with significantly fewer AI and Siri updates this time around. However, it's the smaller touches that make iOS 26 seem like a notable improvement over its predecessor. I've been running the iOS 26 developer beta for the last two weeks and here's how Apple's new Liquid Glass design -- and iOS 26 broadly -- stacks up. iOS 26 looks new and modern. And for once, how Apple describes it -- liquid glass -- makes sense: it's a lot of layers of transparent elements overlapping and, in places, the animations are quite... liquidy. Menus and buttons respond to your touch, with some of them coalescing around your finger and sometimes separating out into new menus. Liquid Glass encompasses the entire design of iOS. The home and lock screens have been redesigned once again, featuring a new skyscraping clock font that stretches out from the background of your photos, with ever-so-slight transparency. There's also a new 3D effect that infuses your photos with a bit of spatial magic, offering a touch of Vision Pro for iPhone users. The experience in the first few builds of the iOS 26 beta was jarring and messy, especially with transparent icons and notifications, because those overlapping elements made things almost illegible. Updates across subsequent releases have addressed this issue by making floating elements more opaque. There is also a toggle within the Accessibility tab in Settings to reduce transparency further, but I hope Apple offers a slider so that users can choose exactly how "liquid" they want their "glass" to be. If you own other Apple products, then you'll come to appreciate the design parity across your Mac, iPad and Apple Watch.
One noticeable change I'd been waiting for was the iOS search bar's relocation to the bottom of the screen. I first noticed it within Settings, but it reappears in Music, Podcasts, Photos and pretty much everywhere you might need to find specific files or menu items now. If, like me, you're an iPhone Pro or Plus user, you may have struggled to reach those search bars when they were at the top of the screen. It's a welcome improvement. With iOS 26 on iPhones powerful enough to run Apple Intelligence, the company is bringing Visual Intelligence over to your screenshots. (Previously it was limited to Camera.) Once you've grabbed a shot by pressing the power and volume up buttons, you'll get a preview of your image, surrounded by suggested actions that Apple Intelligence deduced would be relevant based on the contents of your screenshot. Managing Editor Cherlynn Low did a deep dive on what Visual Intelligence is capable of. From a screenshot, you can transfer information to other apps without having to switch to or select them manually. This means I can easily screenshot tickets and emails, for example, to add to my calendar. Apple Intelligence can also identify types of plants, food and even cars. If there are multiple people or objects in your screenshot, you can highlight what you want to focus on by circling it. There aren't many third-party app options at this point, but that's often the case with a beta build. These are features that Android users have had courtesy of Gemini for a year or two, but at least now we get something similar on iPhones. One quick tip: Make sure to tap the markup button (the little pencil tip icon) to see Visual Intelligence in your screenshots. I initially thought my beta build was missing the feature, but it was just hidden behind the markup menu. More broadly, Apple Intelligence continues to work well, but doesn't stand out in any particular way. We're still waiting for Siri to receive its promised upgrades.
Still, iOS 26 appears to have improved the performance of many features that use the iPhone's onboard machine learning models. Since the first developer build, transcriptions of voice memos and voice notes are not only much faster, but also more accurate, especially with accents that the system previously struggled with. Apple Intelligence's Writing Tools -- which I mainly use for summarizing meetings, conference calls and even lengthy PDFs -- don't choke on more substantial reading. On iOS 18, they would struggle with voice notes longer than 10 minutes, trying to untangle or structure the contents of a meeting. I haven't had that issue with iOS 26 so far. Genmoji and Image Playground both offer up different results with the update. Image Playground can now generate pictures using ChatGPT. I'll be honest, I hadn't used the app since I tested it on iOS 18, but the upgrades mean it has more utility when I might want to generate AI artwork, which can occasionally reach photorealistic levels. One useful addition is ChatGPT's "any style" option, meaning you can try to specify the style you have in mind, which can skirt a little closer to contentious mimicry -- especially if you want, say, a frivolous image of you splashing in a puddle, Studio Ghibli style. Apple also tweaked Genmoji to add deeper customization options, but these AI-generated avatars don't look like me. I liked the original Genmoji that launched last year, which had the almost-nostalgic style of 2010 emoji, but still somehow channeled the auras of me, my friends and family. This new batch is more detailed and elaborate, sure, but they don't look right. Also, they make me look bald. And contrary to my detractors, I am not bald. Yet. This feels like a direct attack, Apple. You might feel differently, however.
For example, Cherlynn said that the first version of Genmoji did not resemble her, frequently presenting her as someone with much darker skin or of a different ethnicity, regardless of the source picture she submitted. Still, the ability to change a Genmoji's expression, as well as add and remove glasses and facial hair through the new appearance customization options, is an improvement. Apple has revisited the Camera app, returning to basics by stripping away most of the previously offered modes and settings -- at least initially -- to display only video and photo modes. You can swipe up from the bottom to see additional options, like flash, the timer, exposure, styles and more. You can also tap on the new six-dot icon in the upper right of the interface for the same options, though that requires a bit more of a reach. These behave in line with the new Liquid Glass design and you'll see the Photo pill expand into the settings menu when you press either area. Long-pressing on icons lets you go deeper into shooting modes, adjusting frame rates and even recording resolutions. What I like here is that it benefits casual smartphone photographers while keeping all the settings that more advanced users demand. None of the updates here are earth-shattering, though. I hope Apple takes a good look at what Adobe's Project Indigo camera app is doing -- there are a lot of good ideas there. One extra improvement if you use AirPods: Pressing and holding the stem of your AirPods (if they have an H2 chip) can now start video recording. Alongside the Liquid Glass design touches, the big addition to Apple Music this year is AutoMix. Like a (much) more advanced version of the crossfade feature found on most music streaming apps, in iOS 26, Music tries to mix between tracks, slowing or speeding up tempos, gently fading in drums or bass loops before the next song kicks in. Twenty percent of the time, it doesn't work well -- or Apple Music doesn't even try.
But the new ability to pin playlists and albums is useful, especially for recommendations from other folks that you never got around to listening to. Apple is making Messages more fun. One of the ways it's doing so is by enabling custom backgrounds in chats, much like in WhatsApp. I immediately set out to find the most embarrassing photo of my colleague (and frenemy) Cherlynn Low and make it our chat background. I know she's also running iOS 26 in beta, so she will see it. [Ed. note: Way to give me a reason to ignore your messages, Mat!] Apple's Live Translation now works across Messages, voice calls and FaceTime. Setting things up can be a little complicated -- you'll first need to download various language files to use the feature. There's also some inconsistency in the languages supported across the board. For instance, Mandarin and Japanese are supported in Messages, but not on FaceTime yet. In chats, if your system language is set to English or Spanish, then you'll only be able to translate into English or Spanish. For those polyglots out there, if you want to translate incoming Japanese texts into German, you'll need to set your device's language to German. While I didn't get to flex my Japanese abilities on voice calls and FaceTime, iOS 26 was more than capable of keeping up with some rudimentary German and Spanish. I'm not sure if I'd rely on it for serious business translation or holiday bookings, but I think it could be a very useful tool for basics. There's also the ability to filter spam messages to their own little folder (purgatory). Spam texts remain a nightmare, so I appreciate any potential weapons in the fight. Sadly, it hasn't quite managed to deal with the TikTok marketing agencies and phone network customer services that continue to barrage my Messages. Still, hopefully Apple will continue to improve its detection algorithms.
One more tool in the battle against spam: You can mute notifications for Messages from unknown numbers, although time-sensitive alerts from delivery services and rideshare apps will still reach you. Not everything in the beta lands, however. I've already touched on how Liquid Glass was initially a semi-transparent mess. The Games app, too, seems like an unnecessary addition. Because it's a blend of the Games tab of the App Store and a silo of your preinstalled games, I'm not sure what it's adding. It's not any easier to navigate, nor does it introduce me to games I want to buy. Cherlynn did want to highlight that for a casual gamer like herself, it's intriguing to see if the Games app might start to recommend more mind-numbing puzzles or farming simulations. She was also intrigued by the idea of a more social gaming experience on iOS, issuing challenges to her friends. Still, because the phone she has been testing the beta on doesn't have access to all her contacts or her gaming history, the recommendations and features are fairly limited at the moment. Games is one of two new apps that will automatically join your home screen. (Fortunately, they can be uninstalled.) The other is Preview, which should be a familiar addition to any Mac user. It offers an easy way to view sent or downloaded files, like menus, ticket QR codes and more. During the developer beta, the app pulled in a handful of my documents that previously lived in the Files app. Navigation across both those apps is identical, although Preview is limited to files you can actually open, of course. This is more iPhone-adjacent, but iOS 26 includes several quality-of-life improvements for some of Apple's headphones. First up: notifications when your AirPods are fully charged, finally! The Apple Watch got this kind of notification back in iOS 14, so it's great to see Apple's headphones catch up.
Apple is also promising "studio-quality sound recording" from the AirPods, augmenting recordings with computational audio improvements. There's a noticeable bump in audio quality. It appears that AirPods 4 and AirPods Pro 2 will record files at a sample rate of 48 kHz, which is double the rate used in the past. The sample rate bump happened last year, but it depends on the app you're using. Is it "studio quality"? I don't think so, but it's an improvement. While recordings sound slightly better in quiet locations, the bigger change is in loud environments. The algorithm doesn't appear to degrade audio quality as much while trying to reduce background noise. iOS 26 also adds sleep detection to the buds. If the AirPods detect minimal movement, they'll switch off automatically, which could be helpful for the next time I'm flying long-haul. In iOS 26, Apple has prioritized design changes and systemwide consistency over AI-centric software and features. While Liquid Glass is a big change to how your iPhone looks, Apple has drawn from user feedback to finesse the design so it feels less jarring and gels better when the home screen, Control Center and Notification drop-downs overlap with each other. There are numerous quality-of-life improvements, particularly in Messages and Visual Intelligence. If anything, the AI elephant in the room is the lack of any substantial updates to Siri. After the company talked up advanced Siri interactions over a year ago, I'm still waiting for its assistant to catch up with the likes of Google.

[6]

iOS 26 beta preview: Liquid Glass is better than you think

(Ed. note: Apple just released the public betas for iOS 26, iPadOS 26, macOS 26 and watchOS 26. This means you can run the preview for yourself, if you are willing to risk potentially buggy or unstable software that could cause some of your apps to not work. As usual, we highly recommend backing up all your data before running any beta, and you can follow our guide on how to install Apple's public betas to do so.)

[7]

iOS 26 public beta now available with new design and more - 9to5Mac

The iOS 26 public beta is now available, the next major update for iPhone software. iOS 26 brings an all-new Liquid Glass design, updates to your favorite iPhone apps and features, and much more. If you haven't been paying attention, you might be a little confused when you see "iOS 26" pop up on your iPhone. After all, the last major software update for iPhone was iOS 18. What happened to the other numbers? Well, the gist is that Apple is unifying all of its software platforms with the "26" version number to align with the calendar year. iOS 26 will be used primarily throughout 2026, hence the new "26" naming scheme. I'm personally a huge fan of this rebrand, as someone who has made countless typos over the years trying to keep track of the varying version numbers across each of Apple's platforms. iOS 26 is the biggest redesign of the iPhone's software since iOS 7 debuted in 2013. Apple says that the new interface, which it calls Liquid Glass, "brings more focus to content and a new level of vitality while maintaining the familiarity of Apple's software." Here's Alan Dye, Apple's vice president of Human Interface Design, describing Liquid Glass: "This is our broadest software design update ever. Meticulously crafted by rethinking the fundamental elements that make up our software, the new design features an entirely new material called Liquid Glass. It combines the optical qualities of glass with a fluidity only Apple can achieve, as it transforms depending on your content or context. It lays the foundation for new experiences in the future and, ultimately, it makes even the simplest of interactions more fun and magical." The Liquid Glass redesign touches every aspect of the iPhone's software experience. You'll see new icons, new buttons, new menus, and much more. Liquid Glass also extends to the animations throughout iOS.
Animations are more fluid and responsive to your touch, bubbling up to the surface as you tap and scroll. iOS 26 is chock full of new features beyond the Liquid Glass redesign. It might sound boring, but the Phone app has been completely revamped in iOS 26. First, there's a new unified layout in the Phone app that combines Favorites, Recents, and Voicemails into a single interface. I'm a big fan of this new design, but Apple has also added an option to switch back to the old interface if the new one doesn't click for you. iOS 26 also brings a pair of new features to the Phone app. There's a Call Screening feature that Apple says "helps eliminate interruptions by gathering information from the caller and giving users the details they need to decide if they want to pick up or ignore the call." When an unknown number calls you, your iPhone will pick up the call and ask the person for their name and reason for calling. Based on the information they provide, you can decide whether to answer the call. There's also a new Hold Assist feature that kicks in when you're placed on hold. It detects when a human has picked up the call on the other end, so you don't have to sit and awkwardly listen to hold music. Messages is the most important app for many iPhone users, and it's getting a wide array of new features as part of iOS 26. From a visual perspective, the flagship change is the ability to set custom backgrounds for conversations in the Messages app. You can choose backgrounds from a default collection of options or from your Photos library. You can also generate a completely unique background using the Image Playground feature of Apple Intelligence. iOS 26 also adds polls to the Messages app. You can use this for things like picking a restaurant for dinner, deciding which movie to see, and much more. Polls display votes in real time, making it easy to follow along with people's preferences.
There's also Apple Intelligence integration, which can detect when a poll could be useful and automatically generate options. The new Messages app in iOS 26 also makes it easier to filter out spam and unknown senders. There's a new filtering option in the upper-right corner with a handful of different settings designed to make it easier to combat the ever-increasing problem of spam messages. For group messages, iOS 26 has several notable upgrades, including typing indicators (finally!), support for Apple Cash, and the ability to quickly add new contacts in groups with a dedicated "Add Contact" button. The Camera app has received a dramatic overhaul as part of iOS 26. The new design aims to streamline your access to the most common features, like simply taking a photo or video. Apple, however, hasn't removed the other features from the Camera app; you can access all of the same features as before by swiping left, right, up, and down on the interface. The Photos app underwent a significant redesign with iOS 18 last year, implementing a unique single-tab design. With iOS 26, Apple listened to user feedback and added a two-tab design that splits your "Library" and "Collections" into separate interfaces. Apple Music continues to leapfrog Spotify in iOS 26 with several new features. First, there's a new AutoMix capability that uses AI to analyze the audio features of your music to seamlessly create transitions between songs, resulting in what Apple describes as a DJ-like listening experience for all Apple Music users. The lyrics feature in iOS 26 is also even more powerful. When listening to a song in a foreign language, you'll now see translations for the lyrics. Meanwhile, a Lyrics Pronunciation feature makes it easy to sing along to songs even if you don't know the language.
Finally, you can now pin your favorite songs, playlists, albums, artists, and more to the top of your "Library" tab in the Music app. This makes it easier to access your favorites quickly and ensures that nothing gets lost in the shuffle.

iOS 26 brings massive improvements to CarPlay. CarPlay gets the Liquid Glass redesign, with new icons, refreshed user interface elements, and more. Most notably, iOS 26 adds support for widgets to CarPlay. You now have a dedicated screen in the CarPlay interface where you can add any widget from your iPhone, including ones for the Home app, Calendar, Weather, and more. The number of widgets you can have will vary based on the size of your CarPlay display. iOS 26 also brings Live Activities to CarPlay for the first time, so they show up on your CarPlay screen just like they do on iPhone.

More CarPlay changes in iOS 26:

iOS 26 also adds a brand-new app to your iPhone's Home Screen: "Apple Games." The new Apple Games app is meant to serve as a central location for all your App Store games, leaderboards, challenges, and more. Here's how Apple describes it:

Apple Games is a new app that gives players an all-in-one destination for their games. It helps players jump back into titles they love, find their next favorite, and have even more fun with friends. They'll find out what's happening across all their games, including major events and updates, so they never miss a moment. The Games app is also the best way to experience Apple Arcade, Apple's game subscription service with more than 200 award-winning and highly rated games for the whole family.

Apple Maps is adding two big new features as part of iOS 26 this year:

Apple launched Apple Intelligence to the public last year, and this year's release of iOS 26 continues the platform's evolution. We have a full post on everything new with Apple Intelligence in this year's Apple software updates, so check that out for more details on these features.
Visual Intelligence upgrades

Visual Intelligence is even more powerful in iOS 26, letting you learn more about the content on your iPhone's screen by taking a screenshot. When you take a screenshot, you'll have the option to send it to Visual Intelligence to add events to your calendar, search Google and third-party apps, send it to ChatGPT, and more. You can also highlight something on your screen and specify that you want to search for it. Apple explains:

Building on Apple Intelligence, visual intelligence extends to a user's iPhone screen so they can search and take action on anything they're viewing across apps. Users can ask ChatGPT questions about what they're looking at onscreen to learn more, as well as search Google, Etsy, or other supported apps to find similar images and products. Visual intelligence also recognizes when a user is looking at an event and suggests adding it to their calendar, prepopulating key details like date, time, and location.

Live Translation

Live Translation is perhaps the most impressive new Apple Intelligence feature in iOS 26. It is enabled by Apple-built models that run entirely on your device, so your conversations stay private. Live Translation is available in Messages, Phone, and FaceTime to help you communicate with people who speak other languages, translating audio and text in real time. In the Messages app, Live Translation will automatically translate incoming texts into your preferred language. You can then type your response in your preferred language, and it is translated when it's delivered to the other person. In FaceTime, Live Translation shows translated captions on your screen while you continue to hear the other person's voice. In the Phone app, you can translate a phone call and hear the other person's words translated and spoken aloud in real time.

Shortcuts

Shortcuts is supercharged by Apple Intelligence in iOS 26.
There's a collection of new intelligent actions that let you create shortcuts with actions for Writing Tools and Image Playground. The most notable addition to Shortcuts, however, is a new "Use Model" action that communicates directly with Apple's models as well as ChatGPT:

Users will be able to tap directly into Apple Intelligence models, either on-device or with Private Cloud Compute, to generate responses that feed into the rest of their shortcut, maintaining the privacy of information used in the shortcut. For example, a student can build a shortcut that uses the Apple Intelligence model to compare an audio transcription of a class lecture to the notes they took, and add any key points they may have missed. Users can also choose to tap into ChatGPT to provide responses that feed into their shortcut.

Upgrades to Genmoji and Image Playground

iOS 26 brings updates to two of the flagship Apple Intelligence features. With Genmoji, you can now combine multiple emoji to make new Genmoji, add expressions, and adjust personal attributes for your creations. Image Playground also now supports adding expressions and changing personal attributes like hairstyles and facial hair. Most notably, however, Image Playground now integrates with ChatGPT. This means you have multiple options when creating images:

More Apple Intelligence changes

In addition to those flagship changes, you'll find Apple Intelligence features sprinkled throughout the rest of iOS 26:

This is just the tip of the iceberg when it comes to new features in iOS 26. The update is crammed full of improvements to all your favorite Apple apps and features. Perhaps this year, more than any other, I'm on the edge of my seat waiting to see the public's reaction to iOS 26. It's by far Apple's most ambitious update to the iPhone's software in years, headlined, of course, by the new Liquid Glass design.
Throughout the beta testing process with developers, it would be an understatement to say that Liquid Glass has been polarizing. Watching Apple respond to that feedback over the last six weeks has been fascinating. I think Apple would be the first to admit that some aspects of the design in iOS 26 beta 1 were unusable. There were issues with text legibility, jarring and aggressive animations, and a Control Center that was a complete mess. Apple made several changes to the design with iOS 26 beta 2, refining areas like Control Center to improve usability. With iOS 26 beta 3, Apple made drastic changes to the entire Liquid Glass interface. It swapped out transparent toolbars for a more frosted and opaque design. These changes were seemingly meant to address concerns around text legibility. The problem, however, at least in my opinion, was that beta 3 went too far. It strayed from the Liquid Glass vision that Apple debuted at WWDC, and so much of the whimsy and joy of using iOS 26 was stripped away.

That brings us to where we are today. Apple shipped iOS 26 beta 4 to developers on Tuesday, and it tries to find a middle ground between beta 2 and beta 3. iOS 26 beta 4 also serves as the basis of today's iOS 26 public beta, so it represents the first experience that many iPhone users will have with Liquid Glass. The version of Liquid Glass in iOS 26 beta 4 is ambitious, fun, and whimsical. I'm actually surprised that Apple made such a course correction. I expected the interface to be closer to beta 3 than beta 2, and I imagine that might be where we ultimately land when iOS 26 ships to the public in September. There are still text legibility issues that Apple needs to solve, for example when navigation bars overlap with the text behind them. I also think it's important not to get caught up in the variations from one beta to the next, and instead to zoom out and look at iOS 26 and Liquid Glass as a whole.
Is this new design language strong enough to serve as the basis of the iPhone's software experience for the next decade? I think the answer is a resounding yes. The iPhone hasn't seen a fundamental software redesign since iOS 7 in 2013. There have been gradual iterations over the last twelve years, but nothing like the drastic year-over-year change we saw from iOS 6 to iOS 7. It's incredibly ambitious for Apple: the iPhone install base is even bigger now than it was in 2013, and the iPhone is a more mature platform with more features and intricacies. Liquid Glass is a risk, but one that I think comes with an enormous payoff: giving Apple the ability to set the design standard for the next decade.

Looking beyond Liquid Glass, iOS 26 has a number of features that stand out to me. The CarPlay updates in iOS 26 are fantastic. I wrote earlier this year that I was worried about CarPlay becoming abandonware as Apple shifted its focus to CarPlay Ultra. With iOS 26, Apple has proven that it's still invested in the version of CarPlay that's available in millions of cars today, not just Aston Martins. I think AutoMix in Apple Music has the legs to become a viral feature. I've thoroughly enjoyed it throughout my time with iOS 26, and it does a great job of crafting unique and fun transitions from one song to the next. There are also a ton of small features that I've already come to appreciate in iOS 26, including the new filtering options in Messages, Visited Places in Apple Maps, estimated charging times on the Lock Screen, and much more.

What are you most excited to try in iOS 26? Are you going to install the public beta? Let us know in the comments.

[8]

7 iOS 26 game-changing features -- here's what you need to check out with the public beta