Instagram Chief Warns AI Images Are Outpacing Our Ability to Distinguish Real from Fake

16 Sources

[1]

Instagram Chief Says AI Images Are Evolving Fast and He's Worried About Us Keeping Up

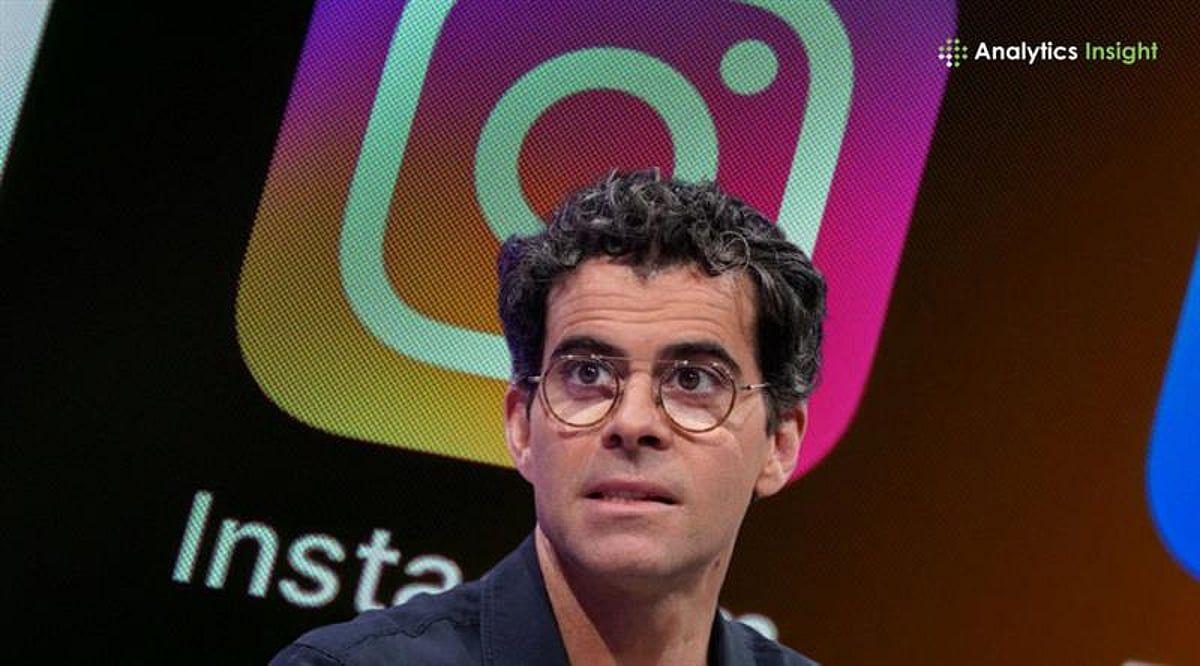

We need a whole new approach to "credibility signals" so we know who to trust, says Adam Mosseri. In a 2025 year-end post, Instagram chief Adam Mosseri addressed the massive shifts AI is causing in photography, stressing that authenticity will be harder and harder to come by -- and offering thoughts on how creators, camera makers and Instagram itself will need to adapt. "The key risk Instagram faces is that, as the world changes more quickly, the platform fails to keep up. Looking forward to 2026, one major shift: authenticity is becoming infinitely reproducible," Mosseri wrote in the post, which took the form of 20 text slides -- no images at all. (He also posted a somewhat expanded version on Threads.) Mosseri said that AI is making it impossible to distinguish real photos from AI-generated images and that as more "savvy creators are leaning into unproduced, unflattering images," AI itself will follow with images that lean into that "raw aesthetic" as well. That will force us, he said, to change how we approach images from the jump. "At that point we'll need to shift our focus to who says something instead of what is being said," Mosseri said. But it will take us "years to adapt" and to get away from assuming that what we see is real. "This will be uncomfortable -- we're genetically predisposed to believing our eyes." On the technical side, Mosseri predicted that makers of camera equipment will begin offering ways to cryptographically sign photos to establish a chain of ownership, proving that images aren't AI generated. He also warned that those camera makers are going the wrong direction by offering ways to help amateur photographers create polished images. "They're competing to make everyone look like a pro photographer from 2015," Mosseri said. "Flattering imagery is cheap to produce and boring to consume. People want content that feels real." 
Instagram is owned by Meta, which also owns Facebook and WhatsApp, and like those platforms, Instagram added AI features in 2025. It also surprised some users who saw AI versions of themselves popping up in ads. Like other platforms, Instagram has struggled with the flood of AI-generated content, including slop, crowding out content from humans. Just look at the powerful AI image and video generators that emerged in 2025, from Google's Nano Banana to OpenAI's Sora. In his posts, Mosseri said he hopes that the struggle to figure out what's fake and what's real will be addressed by labeling "real media" and rewarding originality in how that content is ranked. Mosseri concluded by listing steps that Instagram will have to take, driven by a need to "surface credibility signals about who's posting so people can decide who to trust." "Instagram is going to have to evolve in a number of ways," he said, "and fast."

[2]

You can't trust your eyes to tell you what's real anymore, says the head of Instagram

The key risk Instagram faces is that, as the world changes more quickly, the platform fails to keep up. Looking forward to 2026, one major shift: authenticity is becoming infinitely reproducible. Everything that made creators matter -- the ability to be real, to connect, to have a voice that couldn't be faked -- is now accessible to anyone with the right tools. Deepfakes are getting better. AI generates photos and videos indistinguishable from captured media. Power has shifted from institutions to individuals because the internet made it so anyone with a compelling idea could find an audience. The cost of distributing information is zero. Individuals, not publishers or brands, established that there's a significant market for content from people. Trust in institutions is at an all-time low. We've turned to self-captured content from creators we trust and admire. We like to complain about "AI slop," but there's a lot of amazing AI content. Even the quality AI content has a look though: too slick, skin too smooth. That will change - we're going to see more realistic AI content. Authenticity is becoming a scarce resource, driving more demand for creator content, not less. The bar is shifting from "can you create?" to "can you make something that only you could create?" Unless you are under 25, you probably think of Instagram as a feed of square photos: polished makeup, skin smoothing, and beautiful landscapes. That feed is dead. People stopped sharing personal moments to feed years ago. The primary way people share now is in DMs: blurry photos and shaky videos of daily experiences. Shoe shots and unflattering candids. This raw aesthetic has bled into public content and across artforms. The camera companies are betting on the wrong aesthetic. They're competing to make everyone look like a pro photographer from 2015. But in a world where AI can generate flawless imagery, the professional look becomes the tell. Flattering imagery is cheap to produce and boring to consume. 
People want content that feels real. Savvy creators are leaning into unproduced, unflattering images. In a world where everything can be perfected, imperfection becomes a signal. Rawness isn't just aesthetic preference anymore -- it's proof. It's defensive. A way of saying: this is real because it's imperfect. Relatively quickly, AI will create any aesthetic you like, including an imperfect one that presents as authentic. At that point we'll need to shift our focus to who says something instead of what is being said. For most of my life I could safely assume photographs or videos were largely accurate captures of moments that happened. This is clearly no longer the case and it's going to take us years to adapt. We're going to move from assuming what we see is real by default, to starting with skepticism. Paying attention to who is sharing something and why. This will be uncomfortable - we're genetically predisposed to believing our eyes. Platforms like Instagram will do good work identifying AI content, but they'll get worse at it over time as AI gets better. It will be more practical to fingerprint real media than fake media. Camera manufacturers will cryptographically sign images at capture, creating a chain of custody. Labeling is only part of the solution. We need to surface much more context about the accounts sharing content so people can make informed decisions. Who is behind the account? In a world of infinite abundance and infinite doubt, the creators who can maintain trust and signal authenticity - by being real, transparent, and consistent - will stand out. We need to build the best creative tools. Label AI-generated content and verify authentic content. Surface credibility signals about who's posting. Continue to improve ranking for originality. Instagram is going to have to evolve in a number of ways, and fast.

[3]

In the AI Slop Era, Instagram's CEO Says New Tools Are Needed to Support 'Authentic' Creators

It doesn't matter whether you're scrolling YouTube, TikTok, Facebook, Spotify, or even browsing for educational articles online -- there's more AI slop in our feeds than ever before, and less human-made content. Instagram has been one of the clearest examples of AI content's rise on social media, with some AI-generated models racking up more followers than most aspiring influencers could dream of. In response, Instagram boss Adam Mosseri has promised to support authentic and "raw" creators on the platform, hinting at new tools to help human creators. In a post on Threads, Mosseri argued that authenticity is "fast becoming a scarce resource," but that this will "drive more demand for creator content, not less." Mosseri said "flattering imagery" -- for example, edited selfies with no blemishes or high-contrast travel snaps -- "is cheap to produce and boring to consume." "People want content that feels real," he added. "We are going to see a significant acceleration of a more raw aesthetic over the next few years," Mosseri said, predicting that savvy creators will increasingly lean into explicitly unproduced and unflattering images of themselves. "In a world where everything can be perfected, imperfection becomes a signal." Mosseri also acknowledged the scale of the slop problem facing social media platforms, predicting that although "all the major platforms will do good work identifying AI content," they will "get worse at it over time as AI gets better at imitating reality." In terms of practical takeaways, Mosseri suggested it may be "more practical to fingerprint real media than fake media" on Instagram. He suggested that camera manufacturers could cryptographically sign images at capture, creating a technical seal of authenticity. Mosseri said Instagram needs to "surface credibility signals about who's posting so people can decide who to trust," as well as "continue to improve ranking for originality." 
Finally, he said Instagram also needs to roll out new creative tools, both AI-enabled and traditional, to help human creators compete with increasingly advanced AI. But Mosseri didn't officially announce any new features or timelines for when they could be introduced. It's not just Instagram that seems to be acknowledging the slop epidemic. In November, TikTok rolled out a new feature as part of its Manage Topics menu, allowing users to see less AI-generated content (even if it doesn't remove it entirely). Instagram hasn't yet rolled out a comparable option as of the time of writing. Instagram, alongside Facebook and Threads, did roll out labels for AI-generated content in 2024, but the labeling depends on user disclosure or the platform being able to detect it, meaning large amounts of AI content still appear without AI labels.

[4]

Instagram chief: AI is so ubiquitous 'it will be more practical to fingerprint real media than fake media'

It's no secret that AI-generated content took over our social media feeds in 2025. Now, Instagram's top exec Adam Mosseri has made clear that he expects AI content to overtake non-AI imagery, and that the shift has significant implications for its creators and photographers. Mosseri shared the thoughts in a lengthy post about the broader trends he expects to shape Instagram in 2026. And he offered a notably candid assessment of how AI is upending the platform. "Everything that made creators matter -- the ability to be real, to connect, to have a voice that couldn't be faked -- is now suddenly accessible to anyone with the right tools," he wrote. "The feeds are starting to fill up with synthetic everything." But Mosseri doesn't seem particularly concerned by this shift. He says that there is "a lot of amazing AI content" and that the platform may need to rethink its approach to labeling such imagery by "fingerprinting real media, not just chasing fake." From Mosseri (emphasis his): On some level, it's easy to understand how this seems like a more practical approach for Meta. As we've previously reported, technologies that are meant to identify AI content, like watermarks, have proved unreliable at best. They are easy to remove and even easier to ignore altogether. Meta's own labels are far from clear and the company, which has spent tens of billions of dollars on AI this year alone, has admitted it can't reliably detect AI-generated or manipulated content on its platform. That Mosseri is so readily admitting defeat on this issue, though, is telling. AI slop has won. And when it comes to helping Instagram's 3 billion users understand what is real, that should largely be someone else's problem, not Meta's. Camera makers -- presumably phone makers and actual camera manufacturers -- should come up with their own system, one that sure sounds a lot like watermarking, "to verify authenticity at capture." 
Mosseri offers few details about how this would work or be implemented at the scale required to make it feasible. Mosseri also doesn't really address the fact that this is likely to alienate the many photographers and other Instagram creators who have already grown frustrated with the app. The exec regularly fields complaints from the group, who want to know why Instagram's algorithm doesn't consistently surface their posts to their own followers. But Mosseri suggests those complaints stem from an outdated vision of what Instagram even is. The feed of "polished" square images, he says, "is dead." Camera companies, in his estimation, "are betting on the wrong aesthetic" by trying to "make everyone look like a professional photographer from the past." Instead, he says that more "raw" and "unflattering" images will be how creators can prove they are real, and not AI. In a world where Instagram has more AI content than not, creators should prioritize images and videos that intentionally make them look bad.

[5]

Instagram says in the age of AI, you can't assume what you see online is real

Big quote: At the close of 2025, Instagram head Adam Mosseri used his personal account to post a twenty-image presentation examining what he called the "new era of infinite synthetic content." The slideshow, which reads like a digital memo to the future of photography, argues that technology has permanently blurred the distinction between authentic and artificial imagery - and that Instagram, once defined by its personal photo diaries, has already moved beyond that stage. Mosseri said the traditional, more intimate feed was "dead" years ago. What replaces it now, he suggested, is a world in which users must adapt to a new default assumption: that not everything they see is real. "For most of my life I could safely assume photographs or videos were largely accurate captures of moments that happened. This is clearly no longer the case and it's going to take us years to adapt," he wrote. He described a shift from trust to verification as the foundation of visual culture online. "We're going to move from assuming what we see is real by default, to starting with skepticism. Paying attention to who is sharing something and why. This will be uncomfortable - we're genetically predisposed to believing our eyes." Mosseri's comments reflect a growing unease among technology observers about the collapse of photographic truth. Last year, The Verge's Sarah Jeong wrote that the default assumption about a photo is about to become that it's faked, because creating realistic and believable fake photos is now trivial to do. Mosseri's reflections, though focused on Instagram's role, echo that prediction. The Instagram chief outlined what he described as a necessary evolution in platform design: "We need to build the best creative tools. Label AI-generated content and verify authentic content. 
Surface credibility signals about who's posting. Continue to improve ranking for originality." These steps, if implemented, would shift more responsibility to Meta's systems for distinguishing between synthetic and authenticated visual media. This problem is increasingly pressing as generative AI models drive new waves of image production at scale. He also addressed the ongoing debate over the aesthetics of AI imagery, pushing back on the idea that all generative output should be dismissed as low-value filler. People like to complain about AI slop, but there's a lot of amazing AI content, Mosseri claimed, though he gave no examples or specific reference to Meta's own AI initiatives. In a subtle critique of conventional camera makers, he argued that companies chasing hyperrealistic effects - tools that can "make everyone look like a pro photographer from 2015" - may be missing the larger transformation already underway. The presentation concludes a year in which discussions about authenticity, attribution, and misinformation in visual media intensified across the tech industry. As 2026 begins, Mosseri's warning - that audiences must learn to see with skepticism first - signals not just a design challenge for social platforms but a cultural recalibration of what counts as proof in an age of infinite synthesis.

[6]

Instagram says AI killed the curated feed -- now it's scrambling to prove what's real

Instagram admits AI broke visual trust -- and Gen Z may have already moved on

Instagram helped define the look of social media: aesthetic feeds, filtered selfies and perfectly curated grid posts. But according to Instagram head Adam Mosseri, that era is over -- and AI is to blame. Could social media be becoming too fake? In a year-end essay, Mosseri posted on Threads that AI-generated content has shattered the visual trust that once powered the platform. When images can be generated in seconds, a perfectly lit photo no longer proves that something happened. In fact, he says, it now sparks "suspicion." Interesting sentiment considering Meta, Instagram's parent company, just purchased Manus AI. It's clear that the most trustworthy content has shifted to what looks the least produced -- blurry candid photos, random DMs and anything that feels too messy to fake. But as we begin 2026, AI is only becoming more integrated across the web. Mosseri points out that most users under 25 no longer care about posting to their public grid. Instead, they're sharing "unflattering candids" in private group chats or disappearing stories -- a quiet rebellion against algorithm-chasing perfection. That shift isn't just about preference; it reflects a deeper behavioral change: people don't trust how things look anymore -- they trust who's posting them. If a photo is too smooth, too symmetrical or too sharp, younger users assume it's AI. Visual perfection now reads like a red flag. The shift to immediately assuming the post was created using AI might be the theme of 2026. The irony of Instagram's authenticity crisis is that social media was the birthplace of filters. It's almost poetic how the platform is now calling time on the aesthetic era it helped create. But Mosseri's take feels accurate, essentially stating that we've hit peak polish, and it no longer feels personal. AI-generated influencers, product shots and aspirational scenes dominate the feed. 
The things that once signaled effort now signal automation. And the only thing that still feels undeniably human? A blurry, badly lit photo your friend sent in a group chat. Rather than trying to verify every individual image, Mosseri says the platform is now focused on verifying people as the shift is moving from "is this real?" to "who shared this?" For this reason, Instagram plans to: In one of his more forward-looking ideas, Mosseri suggested that camera manufacturers should cryptographically sign images at capture, embedding proof that they were taken by a real device, at a real time, in a real place. This kind of hardware-level authenticity would mark a major change -- moving beyond detecting fakes after the fact to preventing doubt before it begins. Google's Nano Banana Pro can detect AI images, and even if you upload an image to ChatGPT, it can determine the qualities of AI within an image. It's a clear signal that even platform leaders don't believe content moderation alone can keep up with AI. In 2026, the mess might be the message.

[7]

AI Slop won in 2025 -- fingerprinting real content might be the answer in 2026

The battle against AI content is lost. Slop fills our feeds, and it's up to us to discern what is real. But what if 2026 represents the dawn of a new approach, a flipping of the script where we no longer chase identifying what's artificially created and instead fingerprint the real? This is the not-necessarily-novel concept presented in a recent New Year's Threads post from Instagram CEO Adam Mosseri. In it, he acknowledges that "authenticity is fast becoming a scarce resource," and that the bar is shifting from "'can you create?' to 'can you make something that only you could create?'" Mosseri also writes about more creators shifting to unpolished content that flies in direct conflict with what camera manufacturers are pitching, a sort of idealized world with artificially created bokeh effects. He doesn't say it, but I think Mosseri is actually referring to smartphone companies that are making ever-smarter image processing pipelines that can make any image, object, animal, or person look unnaturally beautiful. If Mosseri is right, not only do we want more authentic, unvarnished content in our feeds, but we'll also be using AI to help us create this aesthetic. He writes, "We'll go from the Midjourney realistic video game aesthetic and imitating Wes Anderson and Studio Ghibli films to being able to direct an AI to create any aesthetic you like, including an imperfect one that presents as authentic." That, naturally, sounds awful, and Meta, Instagram's parent company, will be among those who provide those tools (along with OpenAI, Google Gemini, Perplexity, and others). It's a nightmare scenario because the platforms where we will post and try to discern truth will be the same ones offering tools to create a more realistic world that will still be fake. Perhaps that's why Mosseri is now pitching a different approach. "There is already a growing number of people who believe, as I do, that it will be more practical to fingerprint real media than fake media," he writes. 
Instead of watermarking AI-generated content, which Mosseri's platforms are still very much committed to doing, Instagram and other platforms might find ways to label real content before it appears online. How, of course, is the big question. Photos coming out of digital cameras and smartphones all get what's known as EXIF (Exchangeable Image File Format) data, which can describe the camera, lenses, and settings used, as well as available location and date data. It's not something that can easily be faked. Video content has similar XMP data. I'm not certain about how we might fingerprint human-written content or real audio recordings. Mosseri, though, talks about using some old-school authenticity markers, like checking on the author and what other content they've posted. I don't know if that's something Instagram and others could easily automate, or if that's a job best left to individuals. Still, I like the intention, especially because the war on fake content is already all but lost. Recently, I came across an X post from one of my favorite bird photographers. Carl Bovis presented four images and asked which one was AI-generated. I think I accurately identified the too-polished feathers and almost metallic-looking beak of the sparrow eating a red berry, but I honestly couldn't be sure. What I really needed was confirmation that the other three images were, in fact, real. Having followed Bovis for years, I've done my own form of fingerprinting with the pro photographer. I've seen hundreds of his photos, I know his background, and I trust that what he posts is coming directly from one of his many long-lensed SLRs. A broader fingerprint effort, like the one proposed by Mosseri, will fail or be largely ineffectual if only one platform adopts it. We need a standard for content IDs that allows any platform to offer us one-touch filters to see only human-generated posts. That right there would be transformative. 
Here's hoping 2026 is truly the year of authentic content and fingerprinting real people's posts - it might almost make social media fun and useful again.

[8]

Instagram Head Calls Out Camera Companies for Going in the Wrong Direction

Instagram head Adam Mosseri has shared some end-of-year thoughts on the state of photos on social media amid an avalanche of AI slop, and has said "camera companies are betting on the wrong aesthetic." In a lengthy post shared to Threads and Instagram, Mosseri addressed the issue of authenticity in a world where "AI is generating photographs and videos indistinguishable from captured media." Mosseri takes issue with the term "AI slop," saying there is "a lot of amazing AI content," without giving any examples. "Unless you're under 25 and use Instagram, you probably think of the app as a feed of square photos. The aesthetic is polished: lots of make up, skin smoothing, high contrast photography, beautiful landscapes," Mosseri says before declaring that feed "is dead." "People largely stopped sharing personal moments to feed years ago," he continues. "Stories are alive and well as they provide a less pressurized way to share with your followers, but the primary way people share, even photos and videos, is in DMs." It is here where Mosseri takes aim at camera companies. "The camera companies are betting on the wrong aesthetic," he says. "They're competing to make everyone look like a professional photographer from the past. Every year we see phone cameras boast about more megapixels and image processing. We are romanticising the past. Portrait mode is artificially blurring the background of a photograph to reproduce the soft glow you get from the shallow depth of field of a fixed lens. It looks good, and we like to look good." "But flattering imagery is cheap to produce and boring to consume," he continues. "People want content that feels real. We are going to see a significant acceleration of a more raw aesthetic over the next few years. Savvy creators are going to lean into explicitly unproduced and unflattering images of themselves. In a world where everything can be perfected, imperfection becomes a signal. 
Rawness isn't just aesthetic preference anymore -- it's proof. It's defensive. A way of saying: this is real because it's imperfect." But Mosseri then immediately follows the above by saying AI will soon be able to recreate imperfect imagery anyway. "For most of my life I could safely assume that the vast majority of photographs or videos that I see are largely accurate captures of moments that happened in real life. This is clearly no longer the case and it's going to take us, as people, years to adapt," he says before offering some possible solutions. "Social media platforms are going to come under increasing pressure to identify and label AI-generated content as such. All the major platforms will do good work identifying AI content, but they will get worse at it over time as AI gets better at imitating reality. There is already a growing number of people who believe, as I do, that it will be more practical to fingerprint real media than fake media. Camera manufacturers could cryptographically sign images at capture, creating a chain of custody."

[9]

Instagram CEO: More practical to label real content versus AI

When Instagram CEO Adam Mosseri looks into the rapidly approaching future of AI-generated content, he sees a massive problem: how to tell authentic media apart from the kind made with AI technology. In a recent Threads post on the topic, Mosseri said that social media platforms like Instagram will be under mounting pressure to help users tell the difference. Mosseri argued that major platforms will initially succeed at spotting and labeling AI content, but that they'll begin to falter as AI imitates reality with more precision. "There is already a growing number of people who believe, as I do, that it will be more practical to fingerprint real media than fake media," Mosseri wrote. That "fingerprint" could be created from within cameras themselves, if their manufacturers "cryptographically sign images at capture, creating a chain of custody." "We need to label AI-generated content clearly, and work with manufacturers to verify authenticity at capture -- fingerprinting real media, not just chasing fake," Mosseri added. Such labeling could help people navigate the AI slop that's flooding the internet. (Mashable's Tim Marcin has explained how we got to this moment.) Mosseri also wrote that identifying the authenticity of creator content will shape the way people relate to that media: "We need to surface credibility signals about who's posting so people can decide who to trust."

[10]

Instagram goes raw: AI slop floods social media platform

AI technology is changing how content is created and presented on Meta Platforms' Instagram, shifting away from the platform's historically polished style to a "raw aesthetic" marked by imperfections, according to Instagram director Adam Mosseri. This reflects a broader transformation in social media content fueled by AI integration. Mosseri recently discussed on Meta's Threads platform that Instagram's emphasis on highly edited, high-contrast posts is fading. He noted that users have increasingly stopped sharing polished personal content publicly, opting instead for unfiltered snapshots and voice messages in private chats. The rise of AI-generated material has led Merriam-Webster to dub "AI slop" its 2025 Word of the Year, highlighting concerns over the proliferation of low-quality AI content and questions about authenticity. Meta has actively incorporated AI into its platforms, launching tools like AI Studio for building customized chatbots and experimenting with virtual AI influencers modeled after real celebrities. Mosseri emphasized that as AI-generated content becomes more common, maintaining trust will require new methods of verifying authenticity. He suggested future technologies might involve cryptographic tagging of images at the time of capture to confirm their origin, helping distinguish genuine content from AI fabrications. Industry leaders have also noted the challenges posed by AI in social media content creation and consumption. Elon Musk anticipates that generative AI will soon produce the majority of music and video content, profoundly changing human communication. OpenAI CEO Sam Altman remarked on a growing convergence between human and AI communication styles, with social media posts taking on an "AI flavor" that complicates the search for truth online. 
As AI continues to influence digital platforms, Instagram and other social networks face the challenge of balancing creative innovation with the need to preserve authenticity and user trust, in an environment where the boundaries between human- and machine-generated content are increasingly blurred. Article edited by Jerry Chen

[11]

Instagram Head Says AI Images Have Forced the Platform to Evolve Fast

Mosseri said authenticity will soon be the biggest marker of a creator

Instagram Head Adam Mosseri believes that the rise of artificial intelligence (AI)-generated images and videos is forcing the platform to evolve quickly. The Meta-owned social media platform gained popularity for its image and video-centric feed, and is a major driver of the creator economy, which is run by influencers and content creators. However, the executive thinks that AI-generated content is improving so fast that soon it might become impossible to distinguish it from camera-captured content. Mosseri also emphasised that the value of authenticity is going to increase in the coming days.

Adam Mosseri Shares a Pessimistic Outlook Towards AI-Generated Content

AI-powered image and video generation dominated the space in 2025, with Google's Nano Banana and OpenAI's Sora app creating new waves of viral trends and exponential user adoption. Instagram also tapped into the growing AI-led content creation demand with its Edits app. However, in a recent post, Mosseri said he believes AI-generated content could emerge as a key risk for the social media platform. "The key risk Instagram faces is that, as the world changes more quickly, the platform fails to keep up," Mosseri wrote in a 20-slide-long carousel post on December 31. "Looking forward to 2026, one major shift: authenticity is becoming infinitely reproducible," he added. Mosseri, who joined Meta's Instagram division as the Vice President of Product in May 2018 and became the Head of the platform in October of the same year, has seen several major technological shifts and trends that impacted how users interact with the social media app. However, he hinted that the AI-generated images and videos might be the most transformative of them all. "For most of my life, I could safely assume photographs or videos were largely accurate captures of moments that happened. This is clearly no longer the case, and it's going to take us years to adapt. 
We are going to move from assuming what we see is real by default to starting with scepticism," the Instagram head said. He also highlighted that while Instagram might do a good job at identifying AI content in the short run, over time, it will become increasingly difficult to do so. He believes that soon, camera manufacturers will cryptographically sign images at capture, so as to fingerprint real media instead of synthetic content. Mosseri also highlighted that the old perception of Instagram, that users post polished images of their personal moments on their feed, is dead. He revealed that most of these moments are now only shared in Direct Messages (DMs) and are marked by blurry photos, shaky videos, and unflattering candids. Calling it "raw aesthetic," the executive claimed that flattering imagery is no longer valuable as it is "cheap to produce and boring to consume." He said that this will make authentic content creation more valuable as the bar shifts from "can you create?" to "can you make something that only you could create?" Mosseri also believes that, as a platform, Instagram will have to evolve in a number of ways, and quickly, if it wants to stay ahead of the ongoing AI content rise. "We need to build the best reactive tools. Label AI-generated content and verify authentic content. Surface credibility signals about who's posting. Continue to improve ranking for originality," he added.

[12]

'Authenticity is reproducible' Instagram boss laughably claims, as he enables AI Slop takeover

Instagram is seemingly dissociating itself from the idea that it can control whether the content posted to its platform is real. That's dangerous.

The main dude in charge of Instagram reckons it makes more sense to label "real" content in 2026, as the AI slop takeover continues on its unstoppable Army of The Dead-like march. In a Threads post, Adam Mosseri says "authenticity is becoming infinitely reproducible." He's massively wrong by the way. Clearly. Obviously. Authenticity is still authentic. The slop is instantly identifiable as slop by anyone with a functioning brain.

But, for argument's sake, let's continue this post on the premise that what Zuckerberg's Lackyberg is saying holds water. It doesn't. But let's pretend, yeah? The palatable face in charge of 'Insta' reckons it's more practical to tell people what they're seeing is real, rather than labelling what isn't. He isn't planning on doing all that much to let people know about obscene fakery, but he will tell you what's real.

"There is already a growing number of people who believe, as I do, that it will be more practical to fingerprint real media than fake media," Mosseri wrote in the post, which refers to a problem Instagram is actively contributing to by welcoming an obscene amount of AI-generated content onto its platform. The AI slop problem exists because Instagram allows it to exist. So, with respect Adam, this is your problem. Wholly and indisputably.

In comments that read like he's an outside observer rather than an active contributor, Mosseri adds: "We haven't truly grappled with synthetic content yet. We are now seeing an abundance of AI generated content, and there will be much more content created by AI than captured by traditional means in a few years time.

"We like to talk about 'AI slop,' but there is a lot of amazing AI content that thankfully lacks the disturbing properties of twisted limbs and absent physics. Even the quality AI content has a look though: it tends to feel fabricated somehow. The imagery today is too slick, people's skin is too smooth. That will change; we are going to start to see more and more realistic AI content."

Yeah, OK Adam. The "amazing AI content" isn't all that amazing. It's absolute dogger. Time to stop looking at this fella as a friendly face.

[13]

Instagram CEO Mosseri says social media platform will struggle to spot AI slop as tech improves

Mosseri says that scepticism will be the default mode for online content, which is going to be uncomfortable since we're genetically predisposed towards believing what we're seeing.

Instagram chief Adam Mosseri has acknowledged that the platform may fail to identify AI slop as the latter gets better at faking reality. "Platforms like Instagram will do good work identifying AI content, but they'll get worse at it over time as AI gets better. It will be more practical to fingerprint real media than fake media," Mosseri wrote in his December 31 post on Instagram.

Mosseri's predictions for 2026

He underlines that authenticity is becoming reproducible on the one hand, yet scarce on the other. "Looking forward to 2026, one major shift: authenticity is becoming infinitely reproducible." This is because of the advances AI has made in bringing high-quality, perfected content to everyone.

He says that power shifted from institutions to individuals when the internet enabled anyone to reach an audience, and trust in institutions collapsed. With zero information distribution cost, people turned to creators and consumed content they trusted. However, the kind of democratisation AI has extended now threatens this dynamic as well, by making "real-looking" content cheap and ubiquitous.

He says this democratisation has led to fewer people sharing on their feeds, reinforcing the view that users no longer post frequently on social media. "Unless you are under 25, you probably think of Instagram as a feed of square photos: polished makeup, skin smoothing, and beautiful landscapes. That feed is dead. People stopped sharing personal moments to feed years ago," Mosseri says. Instead, people have started to use personal chats as the mode of sharing their experiences.

Mosseri argues that as AI-driven perfection loses its appeal to raw aesthetics, authenticity is becoming scarce and demand for creator content is rising. "The bar is shifting from 'can you create?' to 'can you make something that only you could create?'."

Why are camera companies at fault?

Mosseri claims that camera companies have been significantly at fault since 2015 by making professional-looking photography their main selling point. "The camera companies are betting on the wrong aesthetic. They're competing to make everyone look like a pro photographer from 2015. But in a world where AI can generate flawless imagery, the professional look becomes the tell," he explains. He says this will force users to question their instinctive trust in perception, and that adapting to this shift will take time.

How is Instagram gearing up for these challenges?

Voicing his concerns, Mosseri outlined the roadmap Instagram plans to follow in the new year. While the platform's focus will be on building better tools for content creators, labelling will gain significance by specifying which content is AI-generated and by verifying authenticity. The platform will also surface credibility signals to show who's posting (who is behind an account), and improve ranking for those who choose originality over savviness.

Will scepticism be on the rise?

While it is encouraging to see a platform's head come forward and highlight the challenges AI-generated content poses today, it also shows that scepticism is only going to grow. "We're going to move from assuming what we see is real by default, to starting with skepticism. Paying attention to who is sharing something and why. This will be uncomfortable -- we're genetically predisposed to believing our eyes," he added.

[14]

Instagram boss admits AI slop has won, but where does that leave creatives?

AI slop was all over social media in 2025, and Meta's head of Instagram Adam Mosseri has finally recognised something that was pretty apparent. Authenticity is going to be an issue in 2026.

A lot of AI-generated content still has glitchy artifacts and that glossy, almost plastic sheen that gives it away. But it will get harder to distinguish as AI models become better at replicating less hyper-real styles. While Adam says Meta's still trying to improve its identification of AI-generated media, he seems keen to pass the buck. AI content is becoming so ubiquitous that it will be more practical to signpost real media, he now reckons. And in the meantime, it's up to creatives to prove they're not fake.

"Everything that made creators matter -- the ability to be real, to connect, to have a voice that couldn't be faked -- is now suddenly accessible to anyone with the right tools," Adam writes in a long post on Threads. "The feeds are starting to fill up with synthetic everything."

These comments sound like they come from a disinterested observer expressing surprise at what's happening. That's implausible when Instagram has been encouraging people to use Meta's own AI models and let AI users loose on the platform a year ago. Meta's made an attempt to flag AI-generated media with its 'AI info' tag, but a vast amount goes undetected, while genuine photos with minor AI retouching were getting flagged.

Adam's recent post seems like an admission of defeat. "All the major platforms will do good work identifying AI content, but they will get worse at it over time as AI gets better at imitating reality. There is already a growing number of people who believe, as I do, that it will be more practical to fingerprint real media than fake media," he writes.

One idea for how this could work is that camera manufacturers could "cryptographically sign images at capture, creating a chain of custody." That's actually already in progress. Many camera manufacturers have already integrated or announced plans to integrate tamper-evident metadata via the Content Authenticity Initiative (CAI) and/or the Coalition for Content Provenance and Authenticity (C2PA) to verify the origin of images. Meta needs to find a way to read that.

Adam doesn't mention other media. Maybe he thinks all the illustrators and digital artists have already left Instagram for anti-AI Cara. But here too, potential solutions for identifying real content already exist. Adobe software can verify authenticity through Content Credentials. Again, Instagram needs to be able to read that data if it's serious about wanting to signpost non-AI material.

What can creatives do in the meantime?

Make things uglier is Adam's suggestion. His reasoning is that AI and cameraphones have together made professional-looking imagery ubiquitous, cheapening it. He goes as far as to say that camera companies "are betting on the wrong aesthetic".

"They're competing to make everyone look like a professional photographer from the past. Every year we see phone cameras boast about more megapixels and image processing. We are romanticising the past. Portrait mode is artificially blurring the background of a photograph to reproduce the soft glow you get from the shallow depth of field of a fixed lens. It looks good, and we like to look good."

The suggestion that camera companies were wrong to improve their products is bizarre, as if the sole purpose of cameras were to produce the latest trending Instagram aesthetic in body. But the prediction of where Instagram is going is probably accurate: creatives will have to prove they're real.

"Flattering imagery is cheap to produce and boring to consume. People want content that feels real," Adam says. "We are going to see a significant acceleration of a more raw aesthetic over the next few years.

"Savvy creators are going to lean into explicitly unproduced and unflattering images of themselves. In a world where everything can be perfected, imperfection becomes a signal. Rawness isn't just aesthetic preference anymore -- it's proof. It's defensive. A way of saying: this is real because it's imperfect."

What that means for artists and designers is that the clever Instagram layouts we raved about back in 2018 are now irrelevant. Instead, creatives have a couple of options. The first is to decide Instagram isn't worth it and stop worrying about it (see our pick of the best social media for artists and designers for alternatives).

But if Instagram is still important for your visibility as an artist, it might be time to start sharing behind-the-scenes videos and works in progress if you're not already doing so. Consider the approach that many creatives have already switched to: instead of posting final edits or renders, show processes and content that says something about you as an artist. Instead of showing what you created, show how you created it, how hard it was to create, and how only you could create it.

Until Instagram can identify what's AI, or what's not, creatives will have to get used to proving that their work is real and that they made it.

[15]

Instagram Head Cautions That AI Content May Soon Overwhelm Social Media Feeds

In a candid year-end reflection looking ahead to 2026, Instagram head Adam Mosseri issued a stark warning about AI-generated content, stating that synthetic media will soon overwhelm traditional social feeds. The executive noted that, for the first time in history, users can no longer assume that photographs or videos accurately capture real-life moments. As AI-generated content on Instagram becomes indistinguishable from reality, Mosseri emphasized that the platform must shift toward effective media fingerprinting to protect digital authenticity and user trust.

[16]

Instagram head Adam Mosseri says era of believing images is over as AI advances

Mosseri admits people often complain about AI slop, but he argues that some AI-made content is "amazing."

Instagram head Adam Mosseri has said that the time when people could trust photos and videos at first glance is ending as AI-generated content is becoming more realistic. Mosseri shared his thoughts in a long Instagram post near the end of 2025, using 20 slides to explain how online content is changing. His main point was clear: images can no longer be treated as simple records of real moments.

"For most of my life I could safely assume photographs or videos were largely accurate captures of moments that happened. This is clearly no longer the case and it's going to take us years to adapt," Mosseri wrote. "We're going to move from assuming what we see is real by default, to starting with skepticism. Paying attention to who is sharing something and why. This will be uncomfortable - we're genetically predisposed to believing our eyes."

According to Mosseri, Instagram and other platforms need to evolve quickly. He said companies must create better creative tools, clearly label AI-made content, and verify real photos and videos. Platforms should also show signals that help users understand who is posting something and whether they are trustworthy. Another goal, he says, is to improve the ranking of original work.

Mosseri admitted that people often complain about AI slop, but he said that some AI-made content is "amazing." For now, raw and imperfect photos can act as a signal that something is real. But Mosseri warned that this will not last. Once AI can copy flaws and mistakes, he believes trust will shift away from the image itself. Then "we'll need to shift our focus to who says something instead of what is being said."

This conversation is not new. For years, photographers, journalists, and creators have been asking a simple question: what even counts as a photo anymore? As AI tools become more powerful, that question is becoming harder to answer.

Instagram head Adam Mosseri says AI-generated content has made authenticity infinitely reproducible, forcing a shift from trusting what we see to verifying who shares it. The platform plans to fingerprint real media and surface credibility signals as AI slop floods social feeds and deepfakes become indistinguishable from reality.

Instagram Faces Existential Challenge as AI Images Blur Reality

Instagram chief Adam Mosseri issued a stark warning as 2025 closed: authenticity is becoming infinitely reproducible, and the platform risks falling behind if it doesn't adapt quickly [1]. In a 20-slide post on his account, Mosseri addressed how AI images and deepfakes have fundamentally altered the landscape for Instagram's 3 billion users [4]. "Everything that made creators matter -- the ability to be real, to connect, to have a voice that couldn't be faked -- is now accessible to anyone with the right tools," he wrote [2]. The feeds are filling with synthetic content, and AI tools now produce photos and videos indistinguishable from captured media.


The Shift Towards Skepticism and New Trust Models

Mosseri acknowledged an uncomfortable truth: "For most of my life I could safely assume photographs or videos were largely accurate captures of moments that happened. This is clearly no longer the case and it's going to take us years to adapt" [2]. The shift towards skepticism means users will need to move from assuming what they see is real by default to starting with doubt [5]. "We're genetically predisposed to believing our eyes," he noted, making this transition particularly challenging [1]. The Meta executive stressed that platforms must shift focus from what is being said to who says something, requiring Instagram to surface credibility signals about who's posting so people can decide who to trust [2].

Fingerprint Real Media Instead of Chasing Fake Content

In a notable admission of defeat against AI slop, Mosseri declared it will be "more practical to fingerprint real media than fake media" [4]. He predicted that while social media platforms will initially do good work identifying AI content, they'll get worse at it over time as AI gets better at imitating reality [3]. Camera manufacturers will need to cryptographically sign images at capture, creating a chain of custody to verify authenticity [1]. This approach essentially shifts responsibility for verifying authentic content away from Meta and toward hardware makers [4]. Labeling AI content, which Instagram rolled out in 2024, depends on user disclosure or platform detection, meaning large amounts of AI content still appear without labels [3].


Raw Aesthetic Becomes Proof of Authenticity

Mosseri declared that the Instagram feed of polished, square photos with perfect makeup and smooth skin "is dead" [2]. People stopped sharing personal moments to feed years ago, now preferring to share blurry photos and shaky videos in DMs [2]. This raw aesthetic has bled into public content as savvy creators lean into unproduced, unflattering images [1]. "In a world where everything can be perfected, imperfection becomes a signal," Mosseri explained [3]. He criticized camera manufacturers for betting on the wrong aesthetic, trying to make everyone look like a professional photographer from 2015 [1]. "Flattering imagery is cheap to produce and boring to consume. People want content that feels real," he stated [3]. However, Mosseri acknowledged that AI will eventually create any aesthetic, including imperfect ones that present as authentic [2].


Platform Evolution and Support for Authentic Creators

Mosseri said that Instagram needs to evolve in multiple ways to support authentic creators competing against increasingly advanced AI [3]. The platform must build better creative tools, label AI-generated content, verify authentic content, and continue to improve ranking for originality [5]. Content creators who can maintain trust and signal authenticity by being real, transparent, and consistent will stand out in a world of infinite abundance and infinite doubt [2]. While TikTok rolled out features in November allowing users to see less AI-generated content, Instagram hasn't yet implemented a specific option for this [3]. Mosseri didn't announce specific features or timelines but emphasized the urgency, stating Instagram "is going to have to evolve in a number of ways, and fast" [1]. His warning signals not just a design challenge for social media platforms but a cultural recalibration of what counts as proof in an age of infinite synthesis, as misinformation through visual media becomes harder to combat [5].

Summarized by Navi

