John Oliver Sounds Alarm on AI-Generated Content Flooding the Internet

5 Sources

[1]

John Oliver finds a creative way to get revenge on AI spam

If you've been anywhere near the internet in the past couple of years, you'll probably have stumbled across some "AI slop" -- the name for mass-produced, AI-generated content that's now all over social media. In the Last Week Tonight clip, John Oliver unpacks the issues with this new type of spam, from fake images dominating Pinterest to AI disinformation about war and natural disasters causing a problem for first responders. "AI slop can be somewhat lucrative for its creators, massively lucrative for the platforms that use it to drive engagement, and worryingly corrosive to the general concept of objective reality," says Oliver. "And look, I'm not saying some of this stuff isn't fun to watch -- what I'm saying is some of it's potentially very dangerous, and even when it isn't, the technology that makes it possible only works because it trains on the work of actual artists. So any enjoyment you may get from weird, funny AI slop tends to be undercut when you know that someone's hard work was stolen in order to create it." Oliver's response? "Create real art by ripping off AI slop." In this case, that means hiring chainsaw sculptor Michael Jones, whose real work has been used to generate viral AI imagery, and paying him to create a wood sculpture based on what Oliver considers "the finest and most inexplicable piece of slop produced to date."

[2]

John Oliver on AI slop: 'Some of this stuff is potentially very dangerous'

The Last Week Tonight host went deep on the creative bankruptcy and long-term concerns over AI images and videos flooding the internet. John Oliver covered the dangers of AI on his weekly HBO show, calling it "worryingly corrosive" for society. On Last Week Tonight, Oliver said that the "spread of AI generation tools has made it very easy to flood social media sites with cheap, professional-looking, often deeply weird content", using the term AI slop to describe it all. He referred to it as the "newest iteration of spam", with weird images and videos flooding people's feeds and some people having "absolutely no idea that it isn't real". Oliver said that it's "extremely likely that we are gonna be drowning in this shit for the foreseeable future". With content such as this, "the whole point is to grab your attention", and given how easy it's become to make it, the barrier to entry has been lowered. Meta has not only joined the game with its own tool but has also tweaked its algorithm, meaning that more than a third of content in your feed is now from accounts you don't follow. "That's how slop sneaks in without your permission," he said. There are monetisation programs for people who successfully go viral and now a range of AI slop gurus who will teach people what to do for a small fee. It's "ultimately a volume game like any form of spam" and has led to AI generators ripping off the work of actual artists without crediting them. But "for all the talk of riches in those slop guru videos, the money involved here can be relatively small". It can be as little as a few cents, and sometimes hundreds of dollars if a post goes mega-viral, which means that a lot of it comes from countries where money goes further, like India, Thailand, Indonesia and Pakistan. One of the downsides is having to explain to parents that content isn't real. "If you see an animal that's so cute it defies reality and it's not Moo Deng, odds are it's AI," he said.
There's also an environmental impact from the resources needed to produce it, as well as the worrying spread of misinformation. Oliver spoke about the many fake disasters that have been created, with images and videos showing tornados, explosions and plane crashes that never happened. "Air travel is scary enough now without people making up new disasters," he said. Generative AI has also been used during the Israel-Iran conflict and posed problems for first responders during the flooding in North Carolina last year. Republicans also used it to suggest that Biden was not handling the latter situation well, with fake images shared on the right despite people being told they weren't real. "It's pretty fucking galling for the same people who spent the past decade screaming 'fake news' at any headline they didn't like to be confronted with actual fake news and suddenly be extremely open to it," he said. While the spread wasn't as damaging as some had feared during last year's US election, AI is "already significantly better than it was then". He added: "It's not just that we can get fooled by fake stuff, it's that the very existence of it then empowers bad actors to dismiss real videos and images as fake." Oliver said it's all "worryingly corrosive for the concept of objective reality", with platforms finding it harder to detect AI. "I'm not saying some of this stuff isn't fun to watch, I'm saying that some of this stuff is potentially very dangerous," he said.

[3]

Watch John Oliver and a wood carver turn the tables on AI slop

Last Week Tonight frontman John Oliver put AI slop in the crosshairs in the latest edition of the popular HBO show. AI slop, for the uninitiated, is all the AI-generated imagery that's starting to take over your feeds on sites like TikTok, Instagram, Facebook, X, and Pinterest. The slop can also be AI-generated videos on YouTube, music on platforms like Spotify, and even e-books, news articles, and games. As Oliver notes in his monologue, the growth of powerful AI-generation tools over the last couple of years has made it easier than ever to "mass produce and flood social media sites with cheap, professional-looking, often deeply weird content." Oliver gave a few examples: "Images of Jesus made out of shrimp ... videos like Barron Trump wowing the judges on America's Got Talent while his dad plays backup piano, a pug raising a baby on a desert island, or Pope Francis taking a selfie with Jesus while flying through heaven." The Last Week Tonight host also points to some deeply strange though highly creative videos showing people transforming into various fruits and vegetables (6:51), with his favorite one showing a man changing into a red cabbage (yes, it's as wacky as it sounds). But the show takes a more troubling turn when Oliver highlights a photo of a man beside a wood carving of a dog (14:49). The photo, which has more than a million likes, is AI-generated, and is very close to another (real) image of a wood sculptor next to a wood carving of a dog. The issue is that generative-AI models are trained on content -- including real artworks, books, music, and so on -- scraped from the internet, and the artists whose work has been scraped, like this wood sculptor's efforts, are not being compensated. And worse still, the platforms hosting the slop are raking in revenue, as are some of the folks posting AI slop. 
"So any enjoyment you may get from weird, funny AI slop tends to be undercut when you know that someone's hard work was stolen in order to create it," Oliver said. In a neat move at the end of the show (27:02), Oliver brings in the wood carver, Michael Jones, to show off a piece of his own work based on the previously mentioned red cabbage man, neatly turning the tables on AI slop. "I don't have a big fix for all of this, or indeed, any of it," Oliver said. "What I do have, though, is a petty way to respond. Because perhaps one small way to get back at all the AI slop ripping off artists would be to create real art by ripping off AI slop."

[4]

John Oliver Aghast at How AI Slop Is Devouring the Web

It's safe to say that comedian and "Last Week Tonight" host John Oliver isn't a fan of AI slop -- and he's got a compelling argument for why you shouldn't be, either. As the comedian laid out in the latest episode of his popular HBO show, AI-generated content is suddenly everywhere online. It's going viral on social media, crowding Google Search results and discovery platforms like Pinterest, and has made churning out spam, misinformation, and otherwise empty, low-quality digital stuff easier and cheaper than ever. The speed at which spammers and slop farmers can blast out AI content is clearly overwhelming for platforms, a problem backdropped by competing tech economy incentives: players like Google and Meta, for example, are actively trying to win the AI arms race at the same time as low-quality AI slop is polluting their existing platforms, while popular web search and discovery services writ large have generally declined to ban AI content outright. Which means that, right now, it certainly looks like slop isn't going anywhere, at least anytime soon. As Oliver puts it, the "spread of AI generation tools has made it very easy to mass produce and flood social media sites with cheap, professional-looking, often deeply weird content." "If that's starting to give you an uneasy feeling in your stomach right now, get used to it," he adds, "because it seems extremely likely that we're gonna be drowning in this sh*t for the foreseeable future." As Oliver also points out, the problem isn't just that the internet is an increasingly murky, crowded place to spend time. While some AI slop is kooky enough that it's legitimately fun, a lot of folks are having a difficult time parsing what's real from what's fake. That consensus deficit has serious implications for AI-spun misinformation, which has started to accelerate the confusion and chaos already inherent to breaking news scenarios like natural disasters and armed conflicts. 
Spammers are using this gap in digital literacy around AI to their advantage, too. Earlier this year, Futurism published a story about a slop farmer named Jesse Cunningham who uses AI to churn out fake, low-quality clickbait on social media platforms like Facebook and Pinterest. In an unlisted video we obtained, Cunningham -- who was also highlighted in Oliver's "Last Week Tonight" slop segment -- brags that his target audience for his churn-and-burn content is older women, who likely don't know what they're clicking on is AI. In other words, it's content like Cunningham's that's negatively impacting the livelihoods of artists, content creators, and other creatives who rely on social media and the internet to share and monetize their real, time-consuming work. Sorry, folks: the web is melting.

[5]

'We Are F**ked!': John Oliver Wades Through 'The S**t' We're Now 'Drowning In'

"Last Week Tonight" host John Oliver on Sunday sounded the alarm over the rapid rise of artificial intelligence-generated content that is increasingly appearing online. At one point, he summed it up: "We are fucked!" Oliver explained how the explosion of new AI tools has made it easier than ever to produce so-called "AI slop," the low-quality music, images, videos and even news articles that are now dominating people's social media feeds. It's "the newest iteration of spam" and is making some platforms "unusable" because of its sheer volume, Oliver lamented. Many users don't realize the content isn't even real and "bad actors" are also seizing on some of it, he added, pointing to when then-presidential candidate Donald Trump last year falsely dismissed photos of big crowds at a Kamala Harris campaign event as being AI-generated. "We're going to be drowning in this shit for the foreseeable future," Oliver warned. The comedian acknowledged there's no easy fix. AI models are often trained on the work of real-life creators, effectively ripping them off, he noted. He didn't offer a solution but did suggest "a petty way" to seek revenge, by making genuine art by stealing from the slop.

John Oliver, host of "Last Week Tonight," delves into the pervasive issue of AI-generated content, dubbed "AI slop," highlighting its impact on social media, creativity, and information integrity.

The Rise of "AI Slop"

John Oliver, host of HBO's "Last Week Tonight," has turned his satirical lens on a growing digital phenomenon: "AI slop." This term refers to the flood of AI-generated content inundating social media platforms and the wider internet

1

. Oliver describes it as "the newest iteration of spam," highlighting how easy it has become to "mass produce and flood social media sites with cheap, professional-looking, often deeply weird content"2

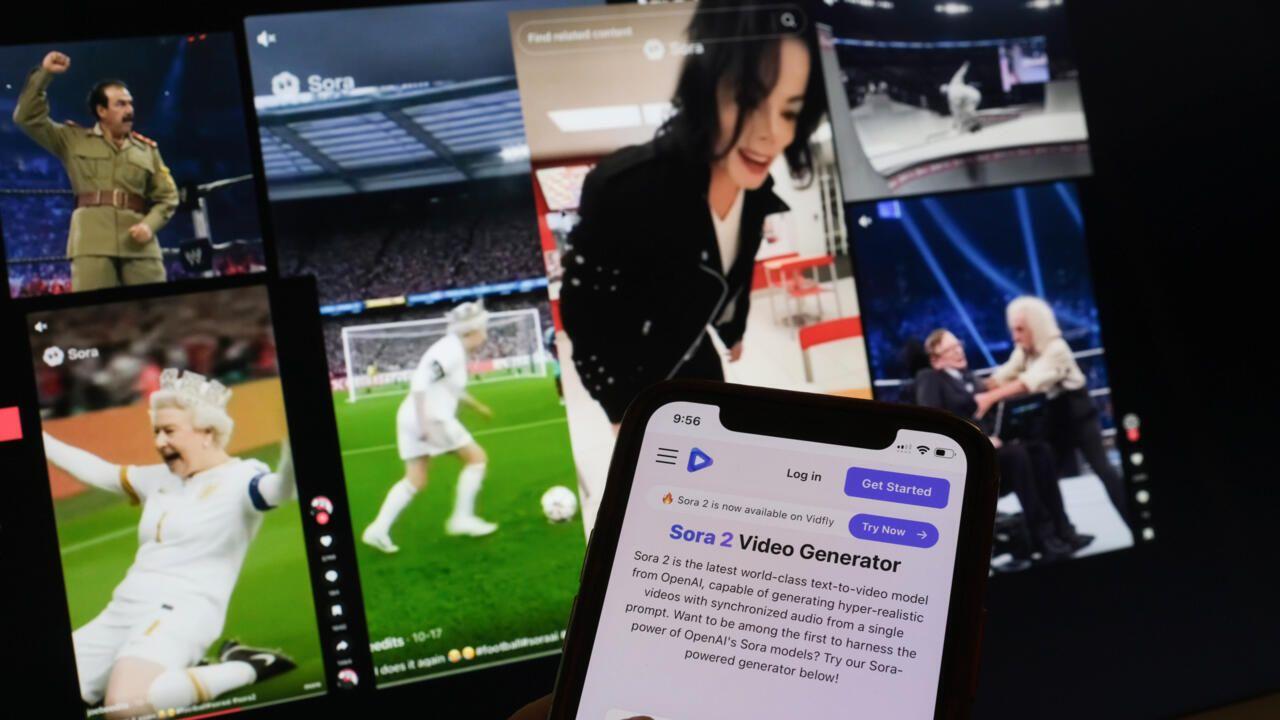

.The Pervasiveness of AI-Generated Content

Source: HuffPost

The comedian points out that AI-generated imagery and videos are increasingly dominating feeds on popular platforms like TikTok, Instagram, Facebook, X, and Pinterest [3]. This content ranges from bizarre images like "Jesus made out of shrimp" to more deceptive creations such as fake videos of public figures in unlikely scenarios.

Monetization and Platform Engagement

Oliver explains that AI slop can be "somewhat lucrative for its creators, massively lucrative for the platforms that use it to drive engagement" [1]. Some platforms, like Meta, have even adjusted their algorithms to promote more of this content, with over a third of users' feeds now consisting of posts from accounts they don't follow [2].

Ethical Concerns and Artist Exploitation

A significant issue raised by Oliver is the exploitation of real artists' work. AI models are often trained on content scraped from the internet, including artworks, without compensating the original creators [3]. This practice undercuts the value of genuine artistic effort and raises serious ethical questions about the AI industry.

Misinformation and Social Impact

Source: Digital Trends

Oliver warns that AI slop is "worryingly corrosive to the general concept of objective reality" [1]. The spread of AI-generated misinformation, particularly during crises or conflicts, poses significant challenges. For instance, fake disaster images have caused problems for first responders, and AI-generated content has been used to spread disinformation during political events [2].

The Future of AI Content

The comedian grimly predicts that "we're gonna be drowning in this shit for the foreseeable future" [4]. With the rapid advancement of AI technology and the difficulty platforms face in detecting and moderating such content, the problem is likely to persist and potentially worsen.

Oliver's Creative Response

Source: Futurism

In a unique twist, Oliver concludes his segment by hiring Michael Jones, a chainsaw sculptor whose work has been used to generate viral AI imagery, to create a real wood sculpture based on an AI-generated image [1][3]. This act serves as a symbolic "petty way to respond" to the AI slop phenomenon, turning the tables on AI by creating real art inspired by AI-generated content.

Conclusion

While Oliver acknowledges that some AI-generated content can be entertaining, he emphasizes the potential dangers and ethical issues surrounding this technology. The comedian's exploration of AI slop serves as a wake-up call to the challenges posed by the proliferation of AI-generated content and its impact on creativity, information integrity, and society at large.

Summarized by Navi
