Liquid AI Launches LEAP: A New Platform for On-Device AI Development

2 Sources

[1]

Finally, a dev kit for designing on-device, mobile AI apps is here: Liquid AI's LEAP

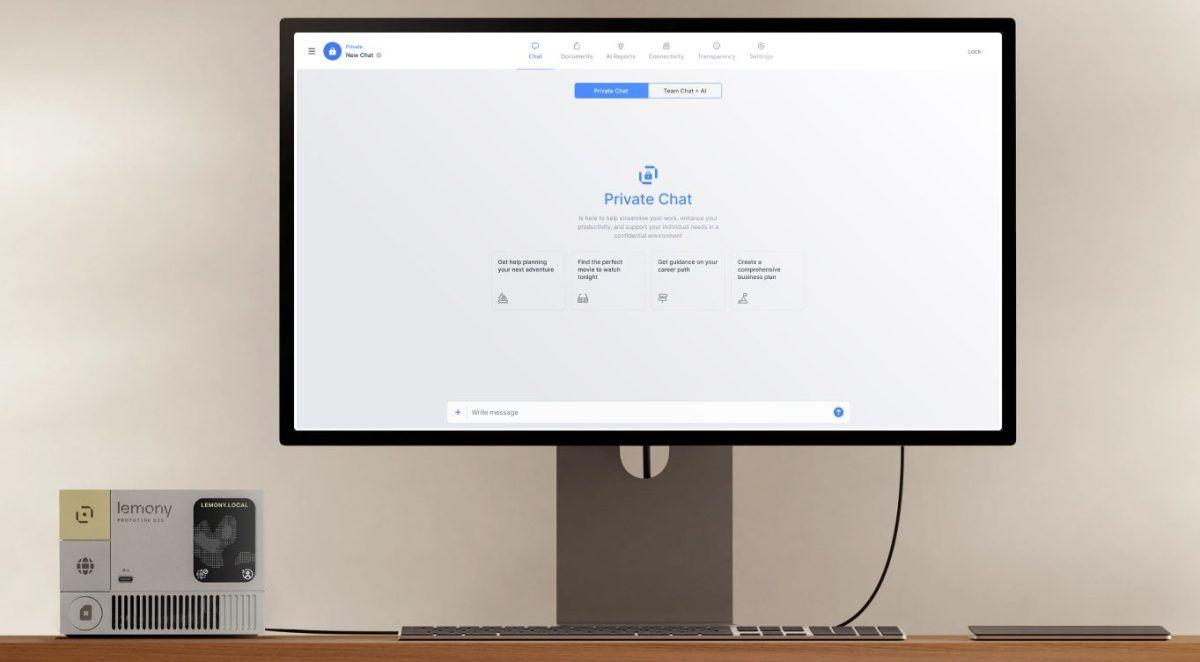

Liquid AI, the startup formed by former Massachusetts Institute of Technology (MIT) researchers to develop novel AI model architectures beyond the widely used "Transformers", today announced the release of LEAP, aka the "Liquid Edge AI Platform," a cross-platform software development kit (SDK) designed to make it easier for developers to integrate small language models (SLMs) directly into mobile applications.

Alongside LEAP, the company also introduced Apollo, a companion iOS app for testing these models locally, furthering Liquid AI's mission to enable privacy-preserving, efficient AI on consumer hardware.

The LEAP SDK arrives at a time when many developers are seeking alternatives to cloud-only AI services due to concerns over latency, cost, privacy, and offline availability. LEAP addresses those needs head-on with a local-first approach that allows small models to run directly on-device, reducing dependence on cloud infrastructure.

Built for mobile devs with no prior ML experience required

LEAP is designed for developers who want to build with AI but may not have deep expertise in machine learning. According to Liquid AI, the SDK can be added to an iOS or Android project with just a few lines of code, and calling a local model is meant to feel as familiar as interacting with a traditional cloud API.

"Our research shows developers are moving beyond cloud-only AI and looking for trusted partners to help them build on-device," said Ramin Hasani, co-founder and CEO of Liquid AI, in a blog post announcing the news today on Liquid's website. "LEAP is our answer -- a flexible, end-to-end deployment platform built from the ground up to make powerful, efficient, and private edge AI truly accessible."
Once integrated, developers can select a model from the built-in LEAP model library, which includes compact models as small as 300MB, lightweight enough for modern phones with as little as 4GB of RAM. The SDK handles local inference, memory optimization, and device compatibility, simplifying the typical edge deployment process.

LEAP is OS- and model-agnostic by design. At launch, it supports both iOS and Android, and offers compatibility with Liquid AI's own Liquid Foundation Models (LFMs) as well as many popular open-source small models.

The goal: a unified ecosystem for edge AI

Beyond model execution, LEAP positions itself as an all-in-one platform for discovering, adapting, testing, and deploying SLMs for edge use. Developers can browse a curated model catalog with various quantization and checkpoint options, allowing them to tailor performance and memory footprint to the constraints of the target device.

Liquid AI emphasizes that large models tend to be generalists, while small models often perform best when optimized for a narrow set of tasks. LEAP's unified system is structured around that principle, offering tools for rapid iteration and deployment in real-world mobile environments.

The SDK also comes with a developer community hosted on Discord, where Liquid AI offers office hours, support, events, and competitions to encourage experimentation and feedback.

Apollo: like TestFlight for local AI models

To complement LEAP, Liquid AI also released Apollo, a free iOS app that lets developers and users interact with LEAP-compatible models in a local, offline setting. Apollo was originally a separate mobile app startup that let users chat with LLMs privately on device; after acquiring it earlier this year, Liquid AI rebuilt it to support the entire LEAP model library.
Apollo is designed for low-friction experimentation: developers can "vibe check" a model's tone, latency, or output behavior right on their phones before integrating it into a production app. The app runs entirely offline, preserving user privacy and reducing reliance on cloud compute. Whether used as a lightweight dev tool or a private AI assistant, Apollo reflects Liquid AI's broader push to decentralize AI access and execution.

Built on the back of the LFM2 model family announced last week

The LEAP SDK release builds on Liquid AI's July 10 announcement of LFM2, its second-generation foundation model family designed specifically for on-device workloads. LFM2 models come in 350M, 700M, and 1.2B parameter sizes and benchmark competitively with larger models in speed and accuracy across several evaluation tasks. These models form the backbone of the LEAP model library and are optimized for fast inference on CPUs, GPUs, and NPUs.

Free and ready for devs to start building

LEAP is currently free to use under a developer license that includes the core SDK and model library. Liquid AI notes that premium enterprise features will be made available under a separate commercial license in the future, and that it is already taking inquiries from enterprise customers through its website contact form.

LFM2 models are also free for academic use and for commercial use by companies with under $10 million in revenue, with larger organizations required to contact the company for licensing.

Developers can get started by visiting the LEAP SDK website, downloading Apollo from the App Store, or joining the Liquid AI developer community on Discord.

[2]

Liquid AI Launches LEAP: Ushering in a New Era for Edge AI Deployment

Bring AI to your fingertips: LEAP & Apollo deliver fast, private, and always-on AI experiences on local devices

Liquid AI, the foundation model company setting new standards for performance and efficiency, today announced the launch of its early-stage developer platform, Liquid Edge-AI Platform (LEAP) v0, for building and deploying AI on devices such as smartphones, laptops, wearables, drones, cars, and other local hardware, without cloud infrastructure. The company also introduced Apollo, an updated, lightweight, iOS-native application powered by LEAP that offers an interactive interface for experiencing private AI with Liquid's latest breakthrough models.

"Our research shows developers are frustrated by the complexity, feasibility, and privacy trade-offs of current edge AI solutions," said Ramin Hasani, co-founder and CEO of Liquid AI. "LEAP is our answer -- a deployment platform designed from the ground up to make powerful, efficient, and private edge AI easy and accessible. We're also excited to give users the ability to test our new groundbreaking models through the iOS-native app Apollo."

LEAP v0 represents a breakthrough for edge AI, pairing a small language model (SLM) library with a developer-first interface and platform-agnostic toolchain. LEAP allows developers to deploy foundation models directly in their Android and iOS applications in 10 lines of code. Liquid AI's goal with the new platform is to make it easy for AI novices and full-stack app developers, not just inference engineers or AI experts, to deploy local models.

To provide a true private, on-device AI experience, Liquid has acquired Apollo, designed by Aaron Ng, and substantially evolved it into an interactive interface for using, vibe-checking, and pairing small foundation models for private AI use cases.
Apollo provides private, secure, low-latency AI interactions fully on-device, demonstrating what's possible when enterprises and developers aren't constrained by internet access, cloud requirements, or bulky models.

Alongside other open-source SLMs, both LEAP and Apollo now include access to Liquid AI's next-generation Liquid Foundation Models (LFM2), open-source small foundation models announced just last week that set new records in speed, energy efficiency, and instruction-following performance in the edge model class. The integration makes these high-performance models immediately available for developers to test and to use in building edge-native AI applications.

LFM2 is designed based on Liquid AI's first-principles approach to model design. Unlike traditional transformer-based models, LFM2 is composed of structured, adaptive operators that allow for more efficient training, faster inference, and better generalization, especially in long-context or resource-constrained scenarios.

Liquid AI introduces LEAP, a software development kit for integrating small language models into mobile applications, along with Apollo, an iOS app for testing these models locally. This marks a significant step towards privacy-preserving, efficient AI on consumer hardware.

Liquid AI Introduces LEAP: A New Frontier in Edge AI Development

Liquid AI, a startup founded by former MIT researchers, has unveiled LEAP (Liquid Edge AI Platform), a cross-platform software development kit (SDK) designed to simplify the integration of small language models (SLMs) into mobile applications [1]. This launch marks a significant step towards making on-device AI more accessible and efficient, addressing growing concerns over cloud-based AI services.

Key Features of LEAP

Source: VentureBeat

LEAP is engineered to cater to developers with varying levels of machine learning expertise. The SDK can be integrated into iOS or Android projects with minimal code, and calling a local model is meant to feel as familiar as interacting with a cloud API [1]. Some notable features include:

- Cross-platform compatibility (iOS and Android)

- A built-in model library with compact models as small as 300MB

- Support for both Liquid AI's own Liquid Foundation Models (LFMs) and popular open-source small models

- Tools for local inference, memory optimization, and device compatibility

Apollo: A Companion App for Model Testing

Alongside LEAP, Liquid AI has introduced Apollo, a free iOS app that allows developers and users to interact with LEAP-compatible models in an offline, local environment [1]. Originally an independent app that Liquid AI acquired, Apollo has been redesigned to support the entire LEAP model library, offering a "TestFlight-like" experience for local AI models [1].

The LFM2 Model Family: Powering LEAP

The LEAP SDK is built on the foundation of Liquid AI's recently announced LFM2 model family. These models, designed specifically for on-device workloads, come in 350M, 700M, and 1.2B parameter sizes [1]. The LFM2 models are optimized for efficient inference on various hardware types, including CPUs, GPUs, and NPUs [1].
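The catalog's quantization options matter because the memory needed to hold a model's weights scales roughly with parameter count times bits per parameter. The sketch below is a back-of-the-envelope estimate for the three LFM2 sizes under simplifying assumptions: every weight uses the same bit width, and KV cache, activations, and runtime overhead are ignored. It is not how LEAP sizes models, and the 16/8/4-bit widths are common illustrative precisions, not a list of what the LEAP catalog actually offers.

```python
# Back-of-the-envelope estimate of the memory needed just to hold model weights.
# Assumptions (illustrative only, not LEAP's sizing method):
#   - every parameter is stored at the same bit width
#   - KV cache, activations, and runtime overhead are ignored,
#     so these figures are lower bounds on real memory use.


def weight_megabytes(num_params: int, bits_per_param: int) -> float:
    """Return approximate weight storage in decimal megabytes (1 MB = 10**6 bytes)."""
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / 10**6


# Parameter counts of the three LFM2 sizes reported in the coverage.
LFM2_SIZES = {
    "LFM2 350M": 350_000_000,
    "LFM2 700M": 700_000_000,
    "LFM2 1.2B": 1_200_000_000,
}

# Illustrative precisions: 16-bit floats, 8-bit and 4-bit quantization.
BIT_WIDTHS = (16, 8, 4)


def estimate_table() -> dict[str, dict[int, float]]:
    """Map each model label to {bit width: estimated weight size in MB}."""
    return {
        name: {bits: weight_megabytes(params, bits) for bits in BIT_WIDTHS}
        for name, params in LFM2_SIZES.items()
    }


if __name__ == "__main__":
    for name, sizes in estimate_table().items():
        row = ", ".join(f"{bits}-bit ~ {mb:,.0f} MB" for bits, mb in sizes.items())
        print(f"{name}: {row}")
```

Under these assumptions, the 1.2B model needs about 600 MB for weights at 4-bit but about 2,400 MB at 16-bit, a large share of a phone with 4GB of RAM, which is why choosing a quantization level is part of fitting a model to a target device.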

Addressing Industry Needs

Source: Analytics Insight

LEAP and Apollo arrive at a crucial time when developers are actively seeking alternatives to cloud-only AI services. The platform addresses several key concerns:

- Latency: By running models locally, LEAP reduces the delay associated with cloud-based processing

- Cost: On-device processing can be more cost-effective than relying on cloud infrastructure

- Privacy: Local execution ensures user data remains on the device

- Offline availability: Apps can function without an internet connection

Accessibility and Licensing

LEAP is currently available for free under a developer license, which includes access to the core SDK and model library. Liquid AI has indicated that premium enterprise features will be offered under a separate commercial license in the future [1].

The LFM2 models are free for academic use and for commercial use by companies with under $10 million in revenue. Larger organizations are required to contact Liquid AI for licensing details [1].

Industry Impact and Future Prospects

The introduction of LEAP and Apollo represents a significant shift in the AI development landscape. By making on-device AI more accessible, Liquid AI is paving the way for a new generation of privacy-preserving, efficient AI applications [2].

As the demand for edge AI solutions continues to grow, platforms like LEAP are likely to play a crucial role in shaping the future of mobile and IoT applications. The emphasis on small, efficient models that can run on consumer hardware opens up new possibilities for AI integration across a wide range of devices and use cases.

Summarized by Navi
