Meta expands Nvidia partnership with multiyear deal for millions of AI chips and standalone CPUs

8 Sources

[1]

Meta already deploying Nvidia's standalone CPUs at scale

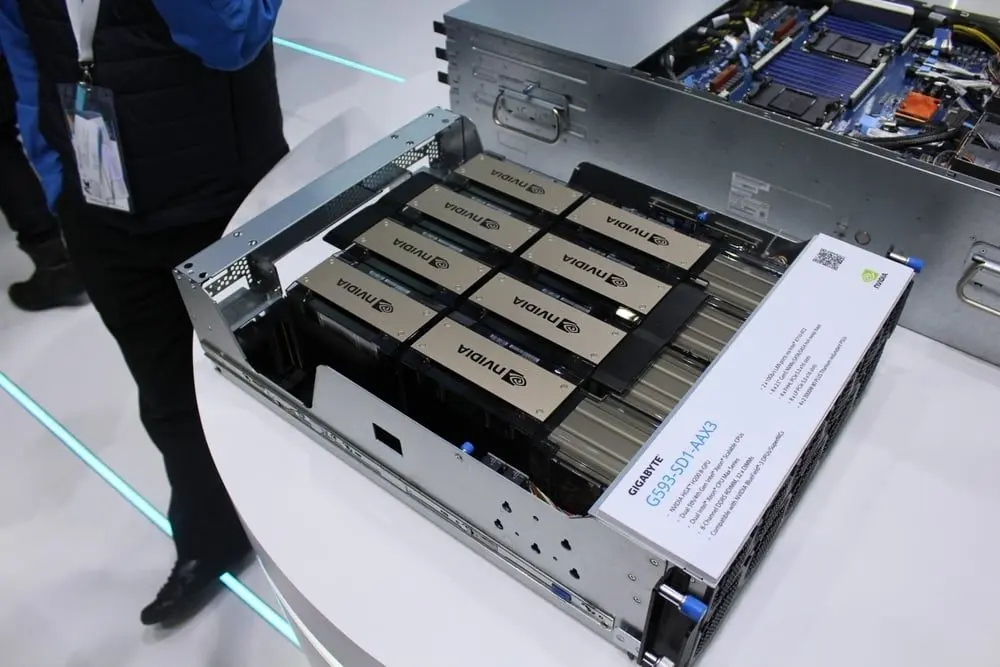

CPU adoption is part of a deeper partnership between the Social Network and Nvidia, which will see millions of GPUs deployed over the next few years

Move over, Intel and AMD: Meta is among the first hyperscalers to deploy Nvidia's standalone CPUs, the two companies revealed on Tuesday.

Meta has already deployed Nvidia's Grace processors in CPU-only systems at scale and is working with the GPU slinger to field its upcoming Vera CPUs beginning next year.

Apart from a few scientific institutions, Nvidia's nearly three-year-old Grace CPUs have predominantly shipped as part of so-called "Superchips," which feature integrated Hopper or Blackwell GPUs onboard. These are nothing new for Meta. The Social Network has previously deployed Nvidia's Grace-Hopper Superchips as part of its Andromeda recommender system and now plans to field "millions" of Nvidia GB300 and Vera Rubin Superchips, the companies said today.

What has changed is that Meta is now using Nvidia's CPU-only Grace systems to power both general-purpose and agentic AI workloads that don't require a GPU.

"What we found is that Grace is an excellent backend datacenter CPU. It can actually deliver 2x the performance per watt on those back end workloads," Ian Buck, Nvidia's VP and General Manager of Hyperscale and HPC, said during a press briefing ahead of Tuesday's announcement. "Meta has already had a chance to get on Vera and run some of those workloads, and the results look very promising."

As a refresher, Nvidia's Grace CPUs are equipped with 72 Arm Neoverse V2 cores clocked at up to 3.35 GHz. The chip is available in a standalone configuration with up to 480 GB of memory, or two of the processors can be combined with up to 960 GB of LPDDR5x memory to form the Grace-CPU Superchip. LPDDR5x isn't something you typically see in server platforms, but it offers several advantages in terms of space and bandwidth. The Grace-CPU Superchip boasts up to 1 TB/s of memory bandwidth across its two dies.
Nvidia's Vera CPU, officially unveiled at CES earlier this year, boosts the core count to 88 custom Arm cores and adds support for simultaneous multithreading (aka Hyper-Threading) and confidential computing. According to Nvidia, Meta will take advantage of the latter capability for private processing and AI features in its WhatsApp encrypted messaging service.

Meta's adoption of Nvidia CPUs runs counter to the broader industry, which has increasingly pivoted to custom Arm CPUs such as Amazon's Graviton or Google's Axion.

Alongside its new CPU deployments, the hyperscaler will deploy more Nvidia GPUs and Spectrum-X networking, something we'd kind of figured considering the tech titan's $115 billion to $135 billion capex target for 2026.

Nvidia is being rather tight-lipped about the scale of the expanded collab but has said the deal will contribute tens of billions of dollars to its bottom line. A little back-of-the-napkin math tells us that at north of $3.5 million per rack, a million GPUs works out to about $48 billion, which is a big number even for Nvidia. By way of comparison, the company earned $31.9 billion in net income on revenues of $57 billion in the quarter ended Oct. 26. It'll report Q4 earnings later this month.

Meta isn't just an Nvidia shop these days. The company has a not-insignificant fleet of AMD Instinct GPUs humming away in its datacenters and was directly involved in the design of AMD's Helios rack systems, which are due out later this year. Meta is widely expected to deploy AMD's competing rack systems, though no formal commitment has yet been made.
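The back-of-the-napkin estimate can be reproduced with a few lines of arithmetic. This is a minimal sketch under stated assumptions: the article gives only the per-rack price, so the 72-GPUs-per-rack density (an NVL72-style GB300 rack) and the rounding up to whole racks are assumptions, and `napkin_estimate` is a hypothetical helper, not anything Nvidia or Meta published.

```python
import math

# Assumption (not stated in the article): 72 GPUs per rack, as in an
# NVL72-style GB300 rack. The price is the article's "north of $3.5 million"
# per rack, treated here as a flat $3.5 million.
GPUS_PER_RACK = 72
PRICE_PER_RACK_USD = 3_500_000


def napkin_estimate(gpus: int,
                    gpus_per_rack: int = GPUS_PER_RACK,
                    price_per_rack: int = PRICE_PER_RACK_USD) -> tuple[int, int]:
    """Hypothetical helper: return (racks needed, total cost in USD).

    Partial racks are rounded up, since racks are bought whole.
    """
    racks = math.ceil(gpus / gpus_per_rack)
    return racks, racks * price_per_rack


if __name__ == "__main__":
    racks, total = napkin_estimate(1_000_000)
    print(f"{racks:,} racks -> ${total / 1e9:.1f} billion")
```

Run as a script, it prints `13,889 racks -> $48.6 billion`, roughly in line with the "about $48 billion" above. A higher per-rack price or a different rack density would move the figure proportionally.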

[2]

Meta Deepens Nvidia Ties With Pact to Use 'Millions' of Chips

Meta Platforms Inc. has agreed to deploy "millions" of Nvidia Corp. processors over the next few years, tightening an already close relationship between two of the biggest companies in the artificial intelligence industry.

Meta, which accounts for about 9% of Nvidia's revenue, is committing to use more AI processors and networking equipment from the supplier, according to a statement Tuesday. For the first time, it also plans to rely on Nvidia's Grace central processing units, or CPUs, at the heart of standalone computers. The rollout will include products based on Nvidia's current Blackwell generation and the forthcoming Vera Rubin design of AI accelerators.

"We're excited to expand our partnership with Nvidia to build leading-edge clusters using their Vera Rubin platform to deliver personal superintelligence to everyone in the world," Meta Chief Executive Officer Mark Zuckerberg said in the statement.

The pact reaffirms Meta's loyalty to Nvidia at a time when the AI landscape is shifting. Nvidia's systems are still considered the gold standard for artificial intelligence infrastructure and generate hundreds of billions of dollars in revenue for the chipmaker. But rivals are now offering alternatives, and Meta is working on building its own in-house components.

Shares of Nvidia and Meta both rose more than 1% in late trading after the agreement was announced. Advanced Micro Devices Inc., Nvidia's rival in AI processors, fell more than 3%.

Ian Buck, Nvidia's vice president of accelerated computing, said the two companies aren't putting a dollar figure on the commitment or laying out a timeline. He said it's reasonable for Meta and others to test alternatives but argued that only Nvidia is able to offer the breadth of components, systems and software that a company wishing to be a leader in AI needs.
Zuckerberg, meanwhile, has made AI the top priority at Meta, pledging to spend hundreds of billions of dollars to build the infrastructure needed to compete in this new era. Meta has already projected record spending for 2026, with Zuckerberg saying last year that the company would put $600 billion toward US infrastructure projects over the next three years. Meta is building several gigawatt-sized data centers around the country, including in Louisiana, Ohio and Indiana. One gigawatt is roughly the amount of power needed to supply 750,000 homes.

Buck stressed that Meta will be the first large data center operator to use Nvidia's CPUs in standalone servers. Typically, Nvidia offers this technology in combination with its high-end AI accelerators, chips that owe their lineage to graphics processors. This shift represents an encroachment into territory dominated by Intel Corp. and AMD. It also provides an alternative to some of the in-house chips designed by large data center operators, such as Amazon.com Inc.'s Amazon Web Services.

Buck said the uses for such chips are only growing. Meta, owner of Facebook and Instagram, will use the chips itself and also rely on Nvidia-based computing capacity offered by other companies. Nvidia CPUs will be increasingly used for tasks such as data manipulation and machine learning, Buck said.

"There's many different kinds of workloads for CPUs," Buck said. "What we've found is Grace is an excellent back-end data center CPU," meaning it handles the behind-the-scenes computing tasks. "It can actually deliver two times the performance per watt on those back-end workloads," he said.

[3]

Nvidia to sell Meta millions of chips in multiyear deal

SAN FRANCISCO, Feb 17 (Reuters) - Nvidia (NVDA.O) on Tuesday said it has signed a multiyear deal to sell Meta Platforms (META.O) millions of its current and future artificial intelligence chips, including central processing units that compete with products from Intel (INTC.O) and Advanced Micro Devices (AMD.O).

Nvidia did not disclose a value for the deal, but said it includes its current Blackwell chips as well as its forthcoming Rubin AI chips. It also includes standalone installations of its Grace and Vera central processors.

Nvidia introduced those central processors, based on technology from Arm Holdings (O9Ty.F), as companions to its AI chips starting in 2023. But the announcement Tuesday signaled that Nvidia aims to push those chips into emerging fields such as running AI agents, as well as into markets for processors used in workaday technical tasks such as running databases.

Nvidia's announcement also comes as Meta is developing its own AI chips and is in discussions with Google about using that company's Tensor Processing Unit chips, or TPUs, for AI work.

Ian Buck, the general manager of Nvidia's hyperscale and high-performance computing unit, said that Nvidia's Grace central processors have shown they can use half the power for some common tasks such as running databases, with more gains expected for the next generation, Vera.

"It actually continues down that path and makes it an excellent data center-only CPU for those high-intensity data processing back-end operations," Buck said. "Meta has already had a chance to get on Vera and run some of those workloads. And the results look very promising."

While Nvidia has never disclosed its sales to Meta, it is widely believed to be among four customers that made up 61% of its revenue in its most recent fiscal quarter.
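Buck's "2x the performance per watt" figure and the "half the power" framing describe the same result, provided the workload's throughput is held fixed. A minimal derivation, defining performance per watt as throughput $T$ divided by power $P$ and comparing against an unnamed baseline CPU (the articles do not say what Grace was measured against):

$$
\frac{T}{P_{\text{Grace}}} = 2\cdot\frac{T}{P_{\text{base}}}
\quad\Longrightarrow\quad
P_{\text{Grace}} = \frac{P_{\text{base}}}{2}
$$

Read the other way, the same ratio at equal power draw would mean twice the throughput. The articles do not say which of the two Meta is targeting.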
Moorhead said Nvidia likely highlighted the deal to show that it has retained a large business with Meta and is gaining traction with its central processor chips.

Reporting by Stephen Nellis in San Francisco; Ethan Smith

[4]

Meta expands Nvidia deal to use millions of AI chips in data center build-out, including standalone CPUs

Meta CEO Mark Zuckerberg said in a statement that the expanded partnership continues his company's push "to deliver personal superintelligence to everyone in the world," a vision he announced in July.

Financial terms of the deal were not provided. In January, Meta announced plans to spend up to $135 billion on AI in 2026. "The deal is certainly in the tens of billions of dollars," said chip analyst Ben Bajarin of Creative Strategies. "We do expect a good portion of Meta's capex to go toward this Nvidia build-out."

The partnership is nothing new, as Meta has been using Nvidia graphics processing units for at least a decade, but the deal marks a significantly broader technology partnership between the two Silicon Valley-based giants.

Standalone CPUs are the biggest new element in the deal, with Meta becoming the first to deploy Nvidia's Grace central processing units as standalone chips in its data centers rather than alongside GPUs in a server. Nvidia said it's the first large-scale deployment of Grace CPUs on their own.

"They're really designed to run those inference workloads, run those agentic workloads, as a companion to a Grace Blackwell/Vera Rubin rack," Bajarin said. "Meta doing this at scale is affirmation of the soup-to-nuts strategy that Nvidia's putting across both sets of infrastructure: CPU and GPU."

The next-generation Vera CPUs are planned to be deployed by Meta in 2027.

The multiyear deal is part of Meta's overall commitment to spend $600 billion in the U.S. by 2028 on data centers and the infrastructure the facilities require. Meta has plans for 30 data centers, 26 of which will be based in the U.S. Its two largest AI data centers are under construction now: the Prometheus 1-gigawatt site in New Albany, Ohio, and the 5-gigawatt Hyperion site in Richland Parish, Louisiana.

[5]

Meta commits billions to Nvidia chips

Why it matters: Even though Meta is already one of Nvidia's biggest buyers, the deal is so big that it stands to boost both companies.

Driving the news: Meta will buy millions of chips from Nvidia, ranging from standalone Grace CPUs to Blackwell GPUs and upcoming Vera Rubin systems, to use across its U.S. data center buildout.

* This makes Meta the first Big Tech firm to commit to buying standalone central processing units from Nvidia, which are used to run AI rather than train it.
* This signals a shift toward inference over training, with the latter typically requiring more powerful and expensive graphics processing units, or GPUs.
* Financial details of the deal were not disclosed.

Between the lines: Meta is locking in scarce next-generation compute at a time when Nvidia's Blackwell GPUs are back-ordered and rivals are scrambling for supply.

Zoom out: It's a signal that there's still strong demand for compute power amid the AI buildout.

* Hyperscalers, the companies behind the largest data center buildouts, are on track to spend $650 billion this year.
* Chip companies like Nvidia stand to benefit from that, as their chips are seen as the best, and most expensive, on the market.
* Any sign of a slowdown in spending tends to hurt Nvidia shares, but a deal like this is a balm for investors who've been increasingly skittish about how long the AI spending spree can last.

Follow the money: "The question of why Meta [is] deploying Nvidia's CPUs at scale is the most interesting thing in this announcement," Ben Bajarin, chief executive and principal analyst at tech consultancy Creative Strategies, told The Financial Times.

Threat level: It's yet another example of circular funding and more spending from AI players.

* Meta is expected to spend about $135 billion on its AI ambitions this year, a number that's expected to keep rising.

The intrigue: Google, Amazon, Microsoft and even Meta have all announced new in-house chips in the past few months, which are seen as more affordable alternatives.

* Meta was reportedly considering using Google's TPUs, according to CNBC.
* Shares of AMD, a competitor to Nvidia, fell after the deal was announced.

The bottom line: This agreement makes clear that, at least for the next phase of the AI race, Nvidia is the backbone of Meta's compute strategy.

[6]

Meta Builds AI Infrastructure With NVIDIA

Meta has adopted NVIDIA Confidential Computing, enabling AI capabilities while protecting user privacy.

NVIDIA today announced a multiyear, multigenerational strategic partnership with Meta spanning on-premises, cloud and AI infrastructure. Meta will build hyperscale data centers optimized for both training and inference in support of the company's long-term AI infrastructure roadmap.

This partnership will enable the large-scale deployment of NVIDIA CPUs and millions of NVIDIA Blackwell and Rubin GPUs, as well as the integration of NVIDIA Spectrum-X™ Ethernet switches for Meta's Facebook Open Switching System platform.

"No one deploys AI at Meta's scale, integrating frontier research with industrial-scale infrastructure to power the world's largest personalization and recommendation systems for billions of users," said Jensen Huang, founder and CEO of NVIDIA. "Through deep codesign across CPUs, GPUs, networking and software, we are bringing the full NVIDIA platform to Meta's researchers and engineers as they build the foundation for the next AI frontier."

"We're excited to expand our partnership with NVIDIA to build leading-edge clusters using their Vera Rubin platform to deliver personal superintelligence to everyone in the world," said Mark Zuckerberg, founder and CEO of Meta.

Expanded NVIDIA CPU Deployment for Performance Boost

Meta and NVIDIA are continuing to partner on deploying Arm-based NVIDIA Grace™ CPUs for Meta's data center production applications, delivering significant performance-per-watt improvements in its data centers as part of Meta's long-term infrastructure strategy.

The collaboration represents the first large-scale NVIDIA Grace-only deployment, supported by codesign and software optimization investments in CPU ecosystem libraries to improve performance per watt with every generation.

The companies are also collaborating on deploying NVIDIA Vera CPUs, with the potential for large-scale deployment in 2027, further extending Meta's energy-efficient AI compute footprint and advancing the broader Arm software ecosystem.

Unified Architecture Supports Meta's AI Infrastructure

Meta will deploy industry-leading NVIDIA GB300-based systems and create a unified architecture that spans on-premises data centers and NVIDIA Cloud Partner deployments to simplify operations while maximizing performance and scalability.

In addition, Meta has adopted the NVIDIA Spectrum-X Ethernet networking platform across its infrastructure footprint to provide AI-scale networking, delivering predictable, low-latency performance while maximizing utilization and improving both operational and power efficiency.

Confidential Computing for WhatsApp

Meta has adopted NVIDIA Confidential Computing for WhatsApp private processing, enabling AI-powered capabilities across the messaging platform while ensuring user data confidentiality and integrity.

NVIDIA and Meta are collaborating to expand NVIDIA Confidential Compute capabilities beyond WhatsApp to emerging use cases across Meta's portfolio, supporting privacy-enhanced AI at scale.

Codesigning Meta's Next-Generation AI Models

Engineering teams across NVIDIA and Meta are engaged in deep codesign to optimize and accelerate state-of-the-art AI models across Meta's core workloads. These efforts combine NVIDIA's full-stack platform with Meta's large-scale production workloads to drive higher performance and efficiency for new AI capabilities used by billions around the world.

[7]

Meta agrees to buy millions more AI chips from Nvidia, raising doubts over its in-house hardware - SiliconANGLE

Meta Platforms Inc. has agreed to a new deal with Nvidia Corp. to buy millions of its next-generation Vera Rubin graphics processing units and a similar number of Grace central processing units to fuel its artificial intelligence ambitions. The deal, announced today, is likely to be worth billions of dollars.

In a statement, Meta Chief Executive Mark Zuckerberg said the expanded partnership is crucial to enabling his company's previously announced vision of "delivering personal superintelligence to everyone in the world."

Meta is already one of Nvidia's biggest customers and is believed to have been using its GPUs for years, but the deal suggests a much deeper partnership between the two companies. It's notable because Meta has also committed to buying substantial volumes of Nvidia's Grace CPUs and deploying them as standalone chips, rather than using them in tandem with its GPUs.

Typically, CPUs are combined with GPUs in a server to improve efficiency: the CPUs handle complex tasks and manage system operations, while the GPUs focus purely on AI processing. By offloading the management to CPUs, GPUs can run much faster.

Nvidia said Meta will be the first company to deploy Grace CPUs on their own, and that from next year it will deploy the next-generation Vera CPUs too. Ben Bajarin, a chip analyst at Creative Strategies, said this is significant. "Meta doing this at scale is affirmation of the soup-to-nuts strategy that Nvidia's putting across both sets of infrastructure: CPU and GPU," he told the Financial Times.

Neither company disclosed the terms of the deal, but analysts say it's likely to be worth tens of billions of dollars. Last month, Meta told investors that it's planning to invest $135 billion in its continued AI infrastructure buildout this year, and the money spent on chips will account for a significant portion of that amount.
Meta has committed to spending $600 billion on AI infrastructure by 2028. Its plans include building 26 data centers in the U.S. and four internationally. The two largest facilities are the 1-gigawatt Prometheus site in New Albany, Ohio, and the 5-gigawatt Hyperion site in Richland Parish, Louisiana, both currently under construction. Many of Nvidia's chips are destined for those locations.

Chips aren't the only thing Meta is buying. The deal also includes Nvidia's Spectrum-X Ethernet switches and InfiniBand interconnect technologies, which are used to link massive clusters of GPUs so they can work together. In addition, Meta will use Nvidia's security products to secure the AI features on platforms such as Facebook, Instagram and WhatsApp.

While Meta is doubling down on Nvidia, it has been careful to diversify its chip strategy too. It's a major customer of Nvidia's rival Advanced Micro Devices Inc., which also makes GPUs, and in November, a report in The Information revealed that the company is also considering using Google LLC's tensor processing units for some of its AI workloads. That report made shareholders nervous at the time, with Nvidia's stock falling more than 4%, although no deal with Google has been announced so far.

Perhaps the most telling aspect of today's deal is what it means for Meta's attempts to develop its own in-house processors for AI. The company first deployed the Meta Training and Inference Accelerator, or MTIA, chip in 2023 and updated it in 2024 as part of an effort to reduce its reliance on external suppliers. It's primarily used for AI inference, running AI models in production to power applications such as content recommendations on its social media feeds.

Last year, Meta revealed it's also working on a new version of MTIA that's specifically designed for model training, an area where its first chips have struggled. At the time, it said it was aiming for a broad rollout of its new chips in 2026.
It's reportedly working with Taiwan Semiconductor Manufacturing Co. to manufacture the chips, and late last year it was said to have completed the "tape-out" phase, meaning it has apparently finalized the design ahead of testing. Zuckerberg has previously said the new MTIA training chips are designed to be more energy efficient and optimized for the company's "unique workloads," which should allow it to reduce its AI operating costs.

But the deal with Nvidia suggests that the planned rollout this year might be delayed. The Financial Times reported, citing anonymous sources, that Meta has experienced "technical challenges" with the new chips.

While its in-house chip ambitions may have been frustrated, Meta has at least secured a plentiful supply of GPUs to make up for those delays. It said it's planning to work closely with Nvidia to optimize the processors for its AI models, which are thought to include its upcoming frontier model Avocado, the successor to its flagship LLM Llama 4. The company needs to make a good impression with Avocado, because Llama 4 was largely viewed as a disappointment, failing to match the capabilities of its rivals' most advanced models.

[8]

Meta deepens Nvidia ties with pact for 'millions' of chips

Meta Platforms has agreed to deploy "millions" of Nvidia processors over the next few years, tightening an already close relationship between two of the biggest companies in the artificial intelligence industry. Meta, which accounts for about 9% of Nvidia's revenue, is committing to use more AI processors and networking equipment from the supplier, according to a statement Tuesday. For the first time, it also plans to rely on Nvidia's Grace central processing units, or CPUs, at the heart of standalone computers. The rollout will include products based on Nvidia's current Blackwell generation and the forthcoming Vera Rubin design of AI accelerators.

Meta commits to deploying millions of Nvidia chips over the next few years in a deal worth tens of billions of dollars. The expanded Meta-Nvidia partnership includes current Blackwell GPUs and future Vera Rubin systems, and makes Meta the first major hyperscaler to deploy Nvidia Grace CPUs as standalone processors at scale, signaling a strategic shift toward AI inference workloads.

Meta Becomes First Hyperscaler to Deploy Nvidia Grace CPUs at Scale

Meta has become the first major hyperscaler to deploy Nvidia Grace CPUs as standalone processors at scale, marking a significant expansion of the Meta-Nvidia partnership announced Tuesday. The multiyear deal will see Meta deploy millions of Nvidia chips across its massive data center buildout, including current Blackwell GPUs, upcoming Vera Rubin systems, and standalone Grace processors for AI workloads that don't require graphics processing units [1]. While financial terms weren't disclosed, Nvidia indicated the deal would contribute tens of billions of dollars to its bottom line, and The Register estimated that at over $3.5 million per rack, a million GPUs alone could represent approximately $48 billion [1].


Meta accounts for about 9% of Nvidia's revenue and is widely believed to be among four customers that made up 61% of the chipmaker's revenue in its most recent fiscal quarter [2][3]. Mark Zuckerberg stated that the expanded partnership continues Meta's push "to deliver personal superintelligence to everyone in the world," a vision he announced in July [4].


Strategic Shift Toward AI Inference and Agentic Workloads

The deployment of standalone CPUs represents a strategic shift in AI infrastructure, with Meta using Nvidia Grace CPUs to power general-purpose and agentic AI workloads alongside AI inference tasks. Ian Buck, Nvidia's VP and General Manager of Hyperscale and HPC, said Grace delivers twice the performance per watt on back-end workloads [1][2]. Nvidia's Grace CPUs feature 72 Arm Neoverse V2 cores clocked at up to 3.35 GHz, with the Grace-CPU Superchip offering up to 960 GB of LPDDR5x memory and up to 1 TB/s of memory bandwidth [1].


The next-generation Vera CPUs, planned for deployment by Meta in 2027, boost core counts to 88 custom Arm cores and add support for simultaneous multithreading and confidential computing [1][4]. Meta will use this confidential computing capability for private processing and AI features in its WhatsApp encrypted messaging service [1].

Nvidia Encroaches on Intel and AMD Territory

Meta's adoption of standalone CPUs from Nvidia marks a direct challenge to Intel and AMD, which have traditionally dominated the data center CPU market. The move also runs counter to the broader industry trend toward custom Arm CPUs such as Amazon's Graviton or Google's Axion [1]. Buck said Nvidia CPUs will increasingly be used for tasks such as data manipulation and machine learning [2], and described Vera as continuing down that path as "an excellent data center-only CPU for those high-intensity data processing back-end operations" [3].

The announcement sent Nvidia and Meta shares up more than 1% in late trading, while AMD shares fell more than 3% [2][5]. Ben Bajarin, chief executive and principal analyst at Creative Strategies, noted that "Meta doing this at scale is affirmation of the soup-to-nuts strategy that Nvidia's putting across both sets of infrastructure: CPU and GPU" [4].

Massive Capex Drives AI Infrastructure Spending

The deal aligns with Meta's projected capex of $115 billion to $135 billion for 2026, with Bajarin expecting "a good portion of Meta's capex to go toward this Nvidia build-out" [1][4]. Meta has committed to spend $600 billion in the U.S. by 2028 on data centers and supporting infrastructure, with plans for 30 data centers, 26 of which will be U.S.-based [4]. The company is building gigawatt-sized facilities in Louisiana, Ohio, and Indiana, including the 1-gigawatt Prometheus site in New Albany, Ohio, and the 5-gigawatt Hyperion site in Richland Parish, Louisiana [2][4].

Hyperscalers are on track to spend $650 billion this year on compute power and AI infrastructure [5]. The timing is strategic for Meta, which is locking in scarce next-generation compute at a time when Blackwell GPUs remain back-ordered and rivals are scrambling for supply [5].

Diversification Strategy Amid In-House Chip Development

Despite this expanded partnership, Meta isn't exclusively an Nvidia shop. The company maintains a significant fleet of AMD Instinct GPUs in its datacenters and was directly involved in designing AMD's Helios rack systems, due out later this year [1]. Meta was also reportedly considering using Google's Tensor Processing Unit chips for AI work [3][5]. Buck acknowledged that companies should test alternatives but argued that only Nvidia offers the breadth of components, systems, and software needed to lead in AI [2]. The agreement also includes Nvidia's Spectrum-X networking technology [1].

Summarized by Navi
