Meta Launches AI-Powered Voice Translation for Global Creators on Facebook and Instagram

11 Sources


[1]

Meta rolls out AI-powered translations to creators globally, starting with English and Spanish | TechCrunch

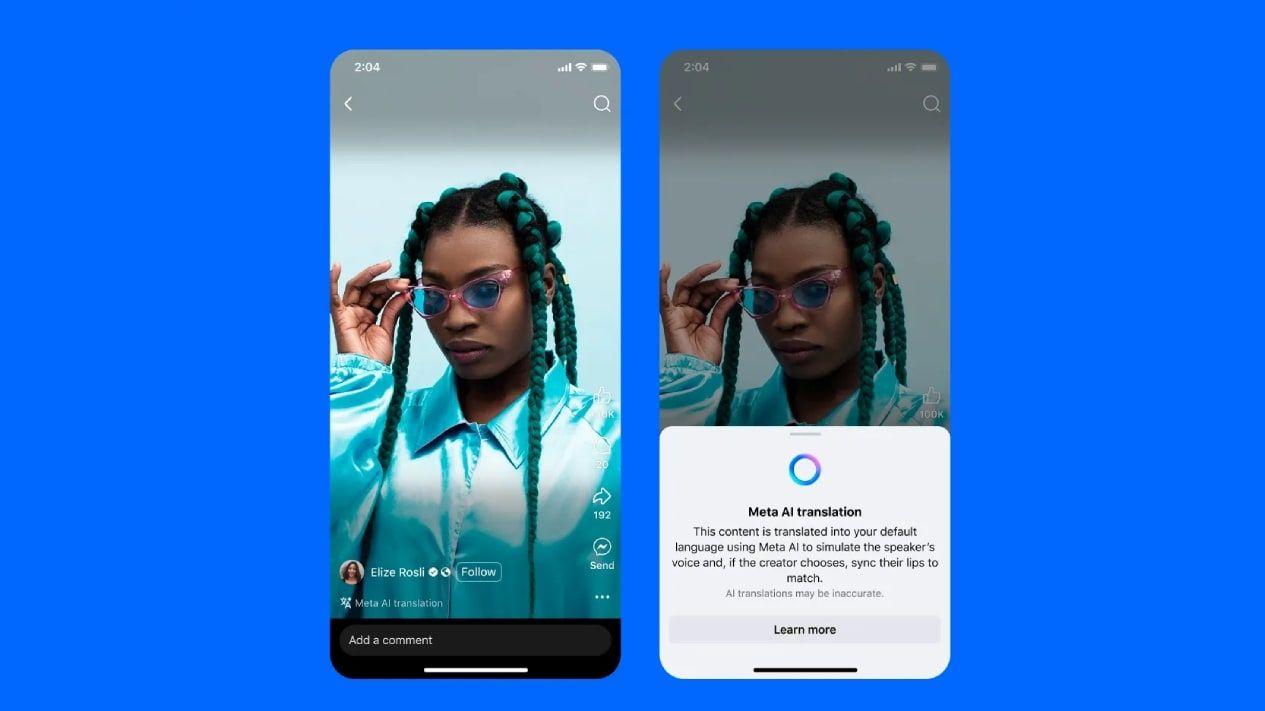

Meta is rolling out an AI-powered voice translation feature to all users on Facebook and Instagram globally, the company announced on Tuesday. The new feature, which is available in any market where Meta AI is available, allows creators to translate content into other languages so it can be viewed by a broader audience. The feature was first announced at Meta's Connect developer conference last year, where the company said it would pilot test automatic translations of creators' voices in reels across both Facebook and Instagram. Meta notes that the AI translations will use the sound and tone of the creator's own voice to make the dubbed voice sound authentic when translating the content to a new language. In addition, creators can optionally use a lip sync feature to align the translation with their lip movements, which makes it seem more natural. At launch, the feature supports translations from English to Spanish and vice versa, with more languages to be added over time. These AI translations are available to Facebook creators with 1,000 or more followers and all public Instagram accounts globally, where Meta AI is offered. To access the option, creators can click on "Translate your voice with Meta AI" before publishing their reel. Creators can then toggle the button to turn on translations and choose if they want to include lip syncing, too. When they click "Share now" to publish their reel, the translation will be available automatically. Creators can view translations and lip syncs before they're posted publicly, and can toggle off either option at any time. (Rejecting the translation won't impact the original reel, the company notes.) Viewers watching the translated reel will see a notice at the bottom that indicates it was translated with Meta AI. Those who don't want to see translated reels in select languages can disable this in the settings menu. 
Creators are also gaining access to a new metric in their Insights panel, where they can see their views by language. This can help them better understand how their content is reaching new audiences via translations -- something that will be more helpful as additional languages are supported over time. Meta recommends that creators who want to use the feature face forward, speak clearly, and avoid covering their mouth when recording. Minimal background noise or music also helps. The feature only supports up to two speakers, and they should not talk over each other for the translation to work. Plus, Facebook creators will be able to upload up to 20 of their own dubbed audio tracks to a reel to expand their audience beyond those in English or Spanish-speaking markets. This is offered in the "Closed captions and translations" section of the Meta Business Suite, and supports the addition of translations both before and after publishing, unlike the AI feature. Meta says more languages will be supported in the future, but did not detail which ones would be next to come or when. "We believe there are lots of amazing creators out there who have potential audiences who don't necessarily speak the same language," explained Instagram head Adam Mosseri, in a post on Instagram. "And if we can help you reach those audiences who speak other languages, reach across cultural and linguistic barriers, we can help you grow your following and get more value out of Instagram and the platform."

[2]

Meta Rolls Out AI Translations for Facebook and Instagram User-Generated Content

Meta's AI team just pushed new translation tools to Facebook and Instagram, allowing user-generated content to be converted into other languages on the fly. These new tools, which were announced at Meta Connect in 2024, are purportedly meant to bridge the gap between people who speak different languages so that videos and reels can be understood by more audiences internationally. Currently, the one-click translations effectively translate English reels into Spanish (or vice versa), though other languages will be added at a later date. But other languages aren't left out completely for now. A Facebook-only feature lets creators add multiple audio tracks to a single video, allowing it to be accessed in as many languages as the uploader provides tracks for. It's unknown whether this feature will come to Instagram at a later date. A representative for Meta did not immediately respond to a request for comment. Additionally, uploaders can opt into an AI lip sync feature that works in tandem with the translations to create what Meta claims are seamless mouth movements in AI-edited videos. The AI lip sync can be turned off using creator controls at any time, which is helpful for anyone who is nervous about AI-generated visual elements in their content. Anyone who creates content for these platforms will also have additional control over how their content spreads, thanks to new tools on the backend. A new metric in the Insights tab will let creators sort through their view count by language, presumably as a way to prove the effectiveness of these AI translations. The suite of AI translation tools follows closely on the heels of a report that signals that Google could soon be adding Duolingo-like AI tools to Google Translate. 
During the 2025 Google I/O presentation, an on-stage demonstration of Gemini integration for smart glasses showed off what an AI-assisted real-time conversation between people that speak different languages could look like in the near future.

[3]

Meta's AI translation tool can dub your Instagram videos

Meta is bringing its AI translation tool, which automatically dubs your reels into another language, to more users on Facebook and Instagram. The feature also uses AI to make its dub match the sound of your voice and the movement of your mouth. For now, you can only translate your reels from English to Spanish (and vice versa), similar to what Meta previewed during its Connect event last year. You can enable the tool by selecting the "Translate your voice with Meta AI" toggle on the menu that appears before you publish a reel on Instagram and Facebook. Meta also gives you the option to add lip-syncing to your dubbed video, as well as review it before publishing. Facebook and Instagram will automatically surface translated reels to users in their preferred language. Your videos will also have a tag disclosing that they were translated using Meta AI. The translation tool is rolling out now to Facebook creators with 1,000 or more followers and all public Instagram accounts.

[4]

Meta AI Translation Tool Automatically Dubs and Lip-Syncs Your Reels

The tool only supports English and Spanish, but more languages will be added soon. Meta has rolled out a new AI translation tool that will help creators dub and lip-sync their reels in a different language. The feature "helps you reach and better connect with audiences all over the world so you can grow a global following," Meta says. Meta AI Translations was first previewed at the Meta Connect conference last year and is now rolling out to all regions where Meta AI is available. To use the feature, though, you'll need either 1,000 Facebook followers or a public Instagram account. At launch, the feature will only support English-to-Spanish and Spanish-to-English translations. Meta says support for more languages will be added in the future. To get started, tap the "Translate your voice with Meta AI" option before publishing a reel. Here, you'll see three separate options to translate your voice, add lip sync, and see a preview. If you select the last option, you'll receive a notification when the reel is ready, and then you can accept or reject the AI-generated dubbing. Meta says the tool will mimic your sound and tone to make the dubbed audio feel authentic. If you choose to lip-sync, the tool will also match your mouth's movements to the translated audio. Once the translated reel is published, it will be marked as Meta AI-generated. Viewers will see it in their preferred language and have the option to remove the translation in the three-dot menu. Creators, meanwhile, will see their views broken down by language "to help you better understand how your translations are performing." For now, the tool works best for face-to-camera videos, Meta notes. It supports dubbing of up to two people in a video, but for accurate outputs, the two speakers shouldn't talk over each other, and there shouldn't be excessive noise in the background. 
Apart from automated audio tracks, Meta now also allows creators to upload up to 20 of their own dubbed audio tracks to a reel. To upload them, head to Meta Business Suite and follow the instructions on a separate support page. However, there's a minor caveat: if you have already dubbed your reel using Meta AI, you won't be able to add your own dubbed audio tracks manually.

[5]

Meta's AI voice translation feature rolls out globally

On Tuesday, Meta rolled out its new voice dubbing feature globally. The Reels feature uses generative AI to translate your voice, with optional lip-syncing. Mark Zuckerberg first previewed the feature at Meta Connect 2024. At launch, the translations are only available for English to Spanish (and vice versa). The company says more languages will arrive later. At least at first, it's restricted to Facebook creators with 1,000+ followers. However, anyone with a public Instagram account can use it. The tool trains on your original voice and generates a translated audio track to match your tone. The lip-syncing add-on then matches your mouth's movements to the translated speech. The demo clip the company showed last year was spot-on -- eerily so. To use the feature, choose the "Translate your voice with Meta AI" option before publishing a reel. That's also where you can choose to add lip syncing. There's an option to review the AI-translated version before publishing. Viewers will see a pop-up noting that it's an AI translation. Meta says the feature works best for face-to-camera videos. The company recommends avoiding covering your mouth or including excessive background music. It works for up to two speakers, but it's best to avoid overlapping your speech. The company frames the feature as a way for creators to expand their audiences beyond their native tongues. As such, it included a by-language performance tracker, so you can see how well it's doing in each language.

[6]

Meta now offers AI-powered dubbing for video creators - 9to5Mac

Meta today announced a new AI-based translation tool for creators on Instagram and Facebook. The company uses artificial intelligence to automatically dub videos in other languages, with support for lip-syncing and voice cloning that promises to preserve the creator's tone and delivery. Right now, the feature supports two-way translations between English and Spanish, and Meta says there are "more languages coming in the future." Creators can have their Reels automatically dubbed into the other language, and viewers see the dubbed version in their preferred language, along with a subtle indicator that the video was translated using Meta AI. Meta says that all Instagram users with public accounts and Facebook creators with 1,000+ followers are eligible to use the tool, which is rolling out "in countries where Meta AI is available (excluding the European Union, United Kingdom, South Korea, Brazil, Australia, Nigeria, Turkey, South Africa, Nigeria, Texas and Illinois)." Meta says that once the translation, dubbing, and lip-syncing process is done, you'll be notified so you can review, approve, optionally remove the lip-sync, or delete the translation entirely. You'll also have the option to report translation errors. The company also says that it will provide metrics on performance by language, so creators can track which languages their audience is watching in. Alternatively, creators can upload up to 20 translated audio tracks for a single Facebook Reel through Meta Business Suite.

[7]

Instagram Reels are getting AI live translation but users have concerns

Meta is bringing its AI voice translation tool to Instagram Reels, meaning you could soon see people in your feed appearing to speak a different language from the one they recorded the video in. Announced by Instagram head Adam Mosseri on Wednesday, the Llama 4-powered Meta AI feature, which provides live, lip-synced translations, will be added to all public Instagram accounts, as well as Facebook. It's not clear exactly when this will go live, but when it does, the only language pair available will be Spanish to English and vice versa, with more languages to come. "We believe there are lots of amazing creatives out there who have potential audiences who don't necessarily speak the same language," Mosseri said in a Threads video. "If we can help you reach those audiences who speak other languages, reach across cultural and linguistic barriers, we can help you grow your following and get more value out of Instagram in the platform." In the video, Mosseri includes the AI-translated version of his video announcement, and the results are undeniably wild. The Instagram head's voice and mouth appear in sync with the Spanish translation, and honestly, if this video landed in my feed, I'd probably assume Mosseri had recorded it in Spanish. Meta announced its AI live translation tool in September 2024, powered by the company's large language model (LLM) Llama. In a blog post last year, Meta said it had been testing "translating some creators' videos from Latin America and the U.S. in English and Spanish." Live AI language translation isn't unique to Meta, with competitors Google Gemini, OpenAI's ChatGPT, and myriad multilingual translation companies providing such tools. Google Pixel 10's new Voice Translate feature, announced yesterday, live-translates phone calls with matched voices. Apple's iOS 26 real-time translation tools will also be ones to contend with. 
TikTok's translation tools, for a social media comparison, are largely text based for captions, with some users using third-party apps to translate TikTok Lives. However, seeing live AI translation in an Instagram Reel feels a little jarring to many users. In the comments of Mosseri's video, people have raised concerns over Meta's AI tool, including the pitfalls of potential mistranslation and suggesting that AI subtitles could be a better move. "As a person who Spanish is her mother tongue, I must say this sounds and looks creepy. AI subtitles are more than enough," wrote @singlutenismo. "AI subtitles would be great, but automatically altering and reprocessing human voices is as questionable as altering and reprocessing their skin color," wrote @cuernomalo. "It's kind of disconcerting because how do I know the translation is accurate or the way I phrase things is appropriate for that culture and language," wrote @drinksbywild. "I do a lot of recipe content so I don't want somebody to make my recipe incorrectly because of a bad translation." Mashable has reached out to Meta for further information, and we'll update this story if we hear back.

[8]

You Can Now Dub and Lip-Sync Instagram, Facebook Reels In These Languages

Meta is now expanding its AI-powered voice translation feature to Facebook and Instagram users worldwide. This tool enables creators to reach a wider audience by automatically translating spoken content into different languages. Initially, the translations are available in select languages only. Using generative AI, the feature can translate voice and optionally sync the lip movements to match. Translated Reels will appear in viewers' preferred language, along with a note indicating that Meta AI handled the translation.

Instagram, Facebook Now Support Meta AI Translations in Two Languages

Through a blog post on Tuesday, Meta announced that it is expanding Meta AI Translations, which, once enabled, can automatically dub and lip-sync reels into another language. This tool allows users to speak to viewers in their own languages and helps creators reach and better connect with audiences all over the world. Meta says AI Translations mimics the sound and tone of your own voice, making the translated audio feel natural and true to you. The lip-syncing feature aligns your mouth movements with the translated speech, creating the effect that you're speaking the language. Users can enable or disable the feature, and review or remove translations whenever they like. The new Meta AI Translations are initially available for English-to-Spanish and Spanish-to-English. The social media giant plans to support more languages soon. The feature is accessible to Facebook creators with at least 1,000 followers and all public Instagram accounts.

How to use Meta AI Translations on Facebook

* Before publishing your reel, tap Translate your voice with Meta AI, then toggle the options to enable voice translation and lip syncing if desired.
* Click Share now to publish the reel with translation in English or Spanish.
* If you'd like to preview the translation first, enable the review toggle.
* You'll then get a notification (or can check the Professional Dashboard) to review the translation and either approve or reject it. This won't affect your original reel.

Translated reels are shown to users in their preferred language, with a note indicating they were translated by Meta AI. Viewers can opt out of translations for specific languages by selecting Don't translate in the audio and language section within the three-dot settings menu. After publishing translated content, you'll get a new view breakdown by language. This lets you quickly see which languages your audience is watching in, helping you gauge the performance of your translations.

Meta recommends using the AI Translations feature mainly for face-to-camera videos for the best results. Users should face forward, speak clearly, and keep their mouths visible. On Facebook, translations support up to two speakers, and it is best to avoid overlapping speech. Also, keeping background noise and music minimal helps ensure more accurate translations.

Additionally, Meta confirmed that creators with a Facebook Page can now upload up to 20 dubbed audio tracks to a single Reel to reach a wider audience. Viewers will hear your reel in their preferred language. Users can head to the Reels composer in Meta Business Suite, check Upload your own translated audio tracks under Closed captions & translations, then upload and tag each track with the corresponding language. You can edit these tracks before or after publishing. Once live, the reel will play in the viewer's chosen language, just like with Meta AI translations.

[9]

Meta rolls out AI voice translation feature on Facebook and Instagram - The Economic Times

Meta has launched an AI-powered voice translation tool for Facebook and Instagram reels, allowing creators to translate their voice into Spanish or English with lip-syncing. The feature gives creators full control, offers audience insights by language, and supports global reach. More languages and enhancements are expected in future updates.

Meta has announced that its new AI-powered voice translation feature is now available to all users worldwide on Facebook and Instagram. "Meta AI Translations uses the sound and tone of your own voice so it feels authentically you," the company explained in a blog post. "And the lip syncing feature matches your mouth's movements to the translated audio, so that it looks like you're really speaking the language in the reel." The company said that creators have full control over the feature; they can switch it on or off, and review or delete translations whenever they wish. Translations are available from English to Spanish and vice versa, with plans to add more languages over time. The AI translations are accessible to Facebook creators with at least 1,000 followers and all public Instagram accounts globally where Meta AI is available. This feature was first revealed at Meta's Connect developer conference last year, where the firm said it would trial automatic voice translations for creators' reels on both platforms.

How to use the feature

Before publishing a reel, creators will see an option labelled "Translate your voice with Meta AI." They can activate the translation and decide whether to include lip-syncing. Once a user clicks "Share now," the translated version will be automatically generated. Creators can also choose to review the translation before it goes live by enabling the review toggle. They will receive a notification (or can visit the Professional Dashboard) to approve or reject the translation. Either way, the original, non-translated reel will remain unchanged. 
Creators can also access a new Insights metric showing views by language. This will help them understand how translations are expanding their audience, particularly as more languages become available. Viewers will see translated reels in their preferred language and will be informed that the translation was done by Meta AI. They can also choose to disable translations for certain languages via the audio and language settings in the menu. Instagram head Adam Mosseri said in a post on the platform, "We believe there are lots of amazing creators out there who have potential audiences who don't necessarily speak the same language. And if we can help you reach those audiences who speak other languages, reach across cultural and linguistic barriers, we can help you grow your following and get more value out of Instagram and the platform."

[10]

Meta AI Brings Real-Time Dubbing to Reels on Instagram and Facebook

Meta has launched a new AI tool for Facebook and Instagram creators called Meta AI Translations, which will auto-translate your reels, so you can take your content to the global stage. Meta announced the new AI tool in a blog post today, explaining how creators can enable this feature from the 'Translate your voice with Meta AI' option before posting their content. Meta AI translation will automatically dub the content to match your voice and sync your lip movement, so the dubbing feels natural to the audience. Creators can also add lip syncing and review the AI-generated dub before sharing their content. Meta says the new feature currently supports only two-way translation between English and Spanish. However, Meta says support for more languages is coming soon. Moreover, creators must have a public Instagram account or more than 1000 followers on Facebook to use AI translation. Reels with translated dubbing will show a "Meta AI translation" label for transparency. Meta is also adding metrics that will allow creators to see how their content is doing in different languages. Meta AI translations will be available in all the regions where Meta AI is available, except for the European Union, the United Kingdom, South Korea, Brazil, Australia, Nigeria, Turkey, South Africa, Texas, and Illinois.

[11]

Meta Introduces AI Voice Translation and Lip Syncing for Instagram and Facebook Reels

Meta AI Reels Translation Helps Creators Reach Global Audiences With Authentic Voice in English and Spanish

Meta has launched a new AI-powered translation feature for Instagram Reels and Facebook. The feature will enable reels to be dubbed in real time with synchronized lip movements, according to . Unlike traditional dubbing, the technology preserves the creator's own voice, aiming to keep tone and expression authentic while the lips match the translated audio. The feature is currently available for English-to-Spanish and Spanish-to-English translations. Meta plans to expand to more languages soon. On Facebook, creators with at least 1,000 followers can access the tool, while all public Instagram accounts are eligible. Viewers will automatically see reels in their preferred language, though the option to disable translations remains available in settings. Creators can further customize translations through Meta Business Suite, which allows up to 20 custom audio tracks per reel. This gives users greater control over translations rather than relying solely on automation. Additionally, new audience insights segmented by language will help creators analyze performance across regions, enhancing global reach. Meta has advised creators to focus on face-to-camera content with clear speech for optimal translation quality. Reels with noisy backgrounds or overlapping dialogue may not achieve accurate dubbing results. The update follows improvements in, which recently added real-time previews, silence-cutting, and over 150 new fonts to enhance editing capabilities.


Meta rolls out an AI-powered voice translation feature for creators on Facebook and Instagram, starting with English and Spanish translations, aiming to help content reach broader, multilingual audiences.

Meta Introduces AI-Powered Voice Translation for Global Creators

Meta has rolled out an AI-powered voice translation feature for creators on Facebook and Instagram that automatically dubs their reels into another language [1]. The tool, first announced at Meta's Connect developer conference in 2024, is now available globally in markets where Meta AI is offered [2].

How the AI Translation Feature Works


The AI translation tool allows creators to automatically dub their reels into another language, starting with translations between English and Spanish [3]. The system replicates the sound and tone of the creator's own voice so that the translated audio sounds authentic and natural [1]. Creators can also enable an optional lip-sync feature that aligns the translated audio with the speaker's mouth movements [4]. Before a translation goes live, creators can preview it and accept or reject it; rejecting a translation does not affect the original reel [1][4].

Availability and Requirements

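Currently, the feature is accessible to Facebook creators with 1,000 or more followers and to all public Instagram accounts in regions where Meta AI is available [5]. Several of those markets are excluded from the rollout, including the European Union, the United Kingdom, South Korea, Brazil, and Australia, as well as the U.S. states of Texas and Illinois [6][10]. To use the tool, creators select the "Translate your voice with Meta AI" option before publishing a reel, where they can toggle translations and lip-syncing on or off [1]. Viewers see translated reels in their preferred language, labeled as translated with Meta AI, and can turn off translations for specific languages in their settings [1][8].

Impact on Content Creation and Audience Reach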


This new feature could significantly expand creators' reach across linguistic barriers. Instagram head Adam Mosseri emphasized the tool's ability to help creators grow their following by reaching audiences who speak other languages [1]. To support this goal, Meta has introduced a new metric in the Insights panel that lets creators view their content's performance by language [1].

Additional Features and Best Practices

Meta has also introduced a separate feature for Facebook creators that lets them upload up to 20 of their own dubbed audio tracks to a reel [1]. This option is available in the "Closed captions and translations" section of Meta Business Suite and, unlike the AI feature, supports adding translations both before and after publishing [1]. Creators who have already dubbed a reel with Meta AI cannot also add their own tracks to it [4].

For optimal results, Meta recommends that creators face the camera directly, speak clearly, and minimize background noise or music [5]. The feature currently supports up to two speakers per video, and they should avoid talking over each other for the best translation outcome [1].

Future Developments and Industry Trends


While the AI translation feature currently supports only English and Spanish, Meta plans to add more languages, though it has not said which ones or when [1][2]. This development aligns with broader industry trends, as other tech giants like Google are also exploring AI-assisted language translation tools [2].

As AI technology continues to advance, these translation features have the potential to transform global content creation and consumption, breaking down language barriers and fostering more inclusive online communities.


Summarized by Navi
