Meta's AI Chatbot Sparks Privacy Concerns with Public 'Discover' Feed

3 Sources

[1]

Meta Invents New Way to Humiliate Users With Feed of People's Chats With AI

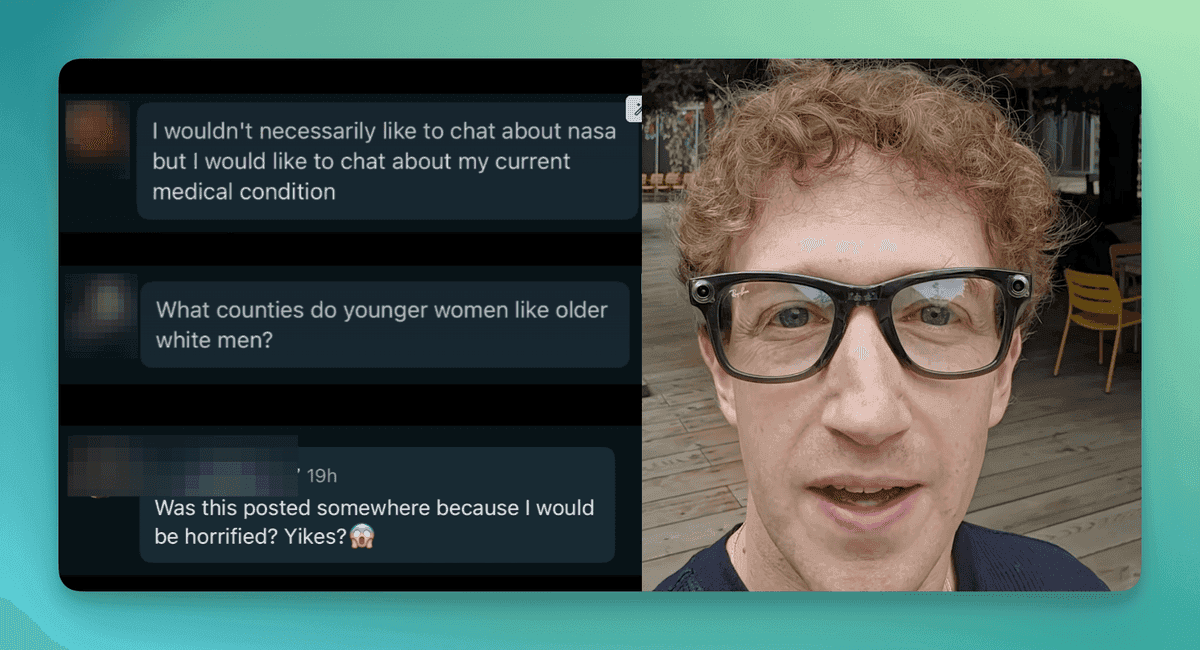

In an industry full of grifters and companies hell-bent on making the internet worse, it is hard to think of a worse actor than Meta, or a worse product than the AI Discover feed. I was sick last week, so I did not have time to write about the Discover Tab in Meta's AI app, which, as Katie Notopoulos of Business Insider has pointed out, is the "saddest place on the internet." Many very good articles have already been written about it, and yet, I cannot allow its existence to go unremarked upon in the pages of 404 Media. If you somehow missed this while millions of people were protesting in the streets, state politicians were being assassinated, war was breaking out between Israel and Iran, the military was deployed to the streets of Los Angeles, and a Coinbase-sponsored military parade rolled past dozens of passersby in Washington, D.C., here is what the "Discover" tab is: The Meta AI app, which is the company's competitor to the ChatGPT app, is posting users' conversations on a public "Discover" page where anyone can see the things that users are asking Meta's chatbot to make for them. This includes various innocuous image and video generations that have become completely inescapable on all of Meta's platforms (things like "egg with one eye made of black and gold," "adorable Maltese dog becomes a heroic lifeguard," "one second for God to step into your mind"), but it also includes entire chatbot conversations where users are seemingly unknowingly leaking a mix of embarrassing, personal, and sensitive details about their lives onto a public platform owned by Mark Zuckerberg. In almost all cases, I was able to trivially tie these chats to actual, real people because the app uses your Instagram or Facebook account as your login. In several minutes last week, I saved a series of these chats into a Slack channel I created and called "insanemetaAI."
These included: Rachel Tobac, CEO of Social Proof Security, compiled a series of chats she saw on the platform and messaged them to me. These are even crazier and include people asking "What cream or ointment can be used to soothe a bad scarring reaction on scrotum sack caused by shaving razor," "create a letter pleading judge bowser to not sentence me to death over the murder of two people" (possibly a joke?), someone asking if their sister, a vice president at a company that "has not paid its corporate taxes in 12 years," could be liable for that, audio of a person talking about how they are homeless, and someone asking for help with their cancer diagnosis, someone discussing being newly sexually interested in trans people, etc. Tobac gave me a list of the types of things she's seen people posting in the Discover feed, including people's exact medical issues, discussions of crimes they had committed, their home addresses, talking to the bot about extramarital affairs, etc. "When a tool doesn't work the way a person expects, there can be massive personal security consequences," Tobac told me. "Meta AI should pause the public Discover feed," she added. "Their users clearly don't understand that their AI chat bot prompts about their murder, cancer diagnosis, personal health issues, etc have been made public. [Meta should have] ensured all AI chat bot prompts are private by default, with no option to accidentally share to a social media feed. Don't wait for users to accidentally post their secrets publicly. Notice that humans interact with AI chatbots with an expectation of privacy, and meet them where they are at. Alert users who have posted their prompts publicly and that their prompts have been removed for them from the feed to protect their privacy." Since several journalists wrote about this issue, Meta has made it clearer to users when interactions with its bot will be shared to the Discover tab. 
Notopoulos reported Monday that Meta seemed to no longer be sharing text chats to the Discover tab. When I looked for prompts Monday afternoon, the vast majority were for images. But the text prompts were back Tuesday morning, including a full audio conversation of a woman asking the bot what the statute of limitations is for a woman to press charges for domestic abuse in the state of Indiana, which had taken place two minutes before it was shown to me. I was also shown six straight text prompts of people asking questions about the movie franchise John Wick, a chat about "exploring historical inconsistencies surrounding the Holocaust," and someone asking for advice on "anesthesia for obstetric procedures." I was also, Tuesday morning, fed a lengthy chat where an identifiable person explained that they are depressed: "just life hitting me all the wrong ways daily." The person then left a comment on the post: "Was this posted somewhere because I would be horrified? Yikes?" Several of the chats I saw and mentioned in this article are now private, but most of them are not. I can imagine few things on the internet that would be more invasive than this, but only if I try hard. This is like Google publishing your search history publicly, or randomly taking some of the emails you send and publishing them in a feed to help inspire other people on what types of emails they too could send. It is like Pornhub turning your searches or watch history into a public feed that could be trivially tied to your actual identity. Mistake or not, feature or not (and it's not clear what this actually is), it is crazy that Meta did this; I still cannot actually believe it.
In an industry full of grifters and companies hell-bent on making the internet worse, it is hard to think of a more impactful, worse actor than Meta, whose platforms have been fully overrun with viral AI slop, AI-powered disinformation, AI scams, AI nudify apps, and AI influencers and whose impact is outsized because billions of people still use its products as their main entry point to the internet. Meta has shown essentially zero interest in moderating AI slop and spam and as we have reported many times, literally funds it, sees it as critical to its business model, and believes that in the future we will all have AI friends on its platforms. While reporting on the company, it has been hard to imagine what rock bottom will be, because Meta keeps innovating bizarre and previously unimaginable ways to destroy confidence in social media, invade people's privacy, and generally fuck up its platforms and the internet more broadly. If I twist myself into a pretzel, I can rationalize why Meta launched this feature, and what its idea for doing so is. Presented with an empty text box that says "Ask Meta AI," people do not know what to do with it, what to type, or what to do with AI more broadly, and so Meta is attempting to model that behavior for people and is willing to sell out its users' private thoughts to do so. I did not have "Meta will leak people's sad little chats with robots to the entire internet" on my 2025 bingo card, but clearly I should have.

[2]

If you ask Meta AI about your relationship, the answers could go public

A man wants to know how to help his friend come out of the closet. An aunt struggles to find the right words to congratulate her niece on her graduation. And one guy wants to know how to ask a girl -- "in Asian" -- if she's interested in older men. Ten years ago, they might have discussed those vulnerable questions with friends over brunch, at a dive bar or in the office of a therapist or clergy member. Today, scores of users are posting their often cringe-making conversations about relationships, identity and spirituality with Meta's artificial intelligence chatbot to the app's public feed -- sometimes seemingly without knowing their musings can be seen by others. Meta launched a stand-alone app for its AI chatbot nearly two months ago with the goal of giving users personalized and conversational answers to any question they could come up with -- a service similar to those offered by OpenAI's ChatGPT or Anthropic's Claude. But the app came with a unique feature: a discover feed where users could post their personal conversations with Meta AI for the world to see, reflecting the company's larger strategy to embed AI-created content into its social networks. Since the April launch, the app's discover feed has been flooded with users' conversations with Meta AI on personal topics about their lives or their private philosophical questions about the world. As the feature gained more attention, some users appeared to purposely promote comical conversations with Meta AI. Others are publishing AI-generated images about political topics such as President Donald Trump in a diaper, images of girls in sexual situations and promotions for their businesses. In at least one case, a person whose apparently real name was evident asked the bot to delete an exchange after posing an embarrassing question. The flurry of personal posts on Meta AI is the latest indication that people are increasingly turning to conversational chatbots to meet their relationship and emotional needs.
As users ask the chatbots for advice on matters ranging from their marital problems to financial challenges, privacy advocates warn that users' personal information may end up being used by tech companies in ways they didn't expect or want. "We've seen a lot of examples of people sending very, very personal information to AI therapist chatbots or saying very intimate things to chatbots in other settings," said Calli Schroeder, a senior counsel at the Electronic Privacy Information Center. "I think many people assume there's some baseline level of confidentiality there. There's not. Everything you submit to an AI system at bare minimum goes to the company that's hosting the AI." Meta spokesperson Daniel Roberts said chats with Meta AI are set to private by default and users have to actively tap the share or publish button before it shows up on the app's discover feed. While some real identities are evident, people are able to pick a different username on the discover feed. Still, the company's share button doesn't explicitly tell users where their conversations with Meta AI will be posted and what other people will be able to see -- a fact that appeared to confuse some users about the new app. Meta's approach of blending social networking components with an AI chatbot designed to give personal answers is a departure from the approach of some of the company's biggest rivals. ChatGPT and Claude give similarly conversational and informative answers to questions posed by users, but there isn't a similar feed where other people can see that content. Video- or image-generating AI tools such as Midjourney and OpenAI's Sora have pages where people can share their work and see what AI has created for others, but neither service engages in text conversations that turn personal. The discover feed on Meta AI reads like a mixture of users' personal diaries and Google search histories, filled with questions ranging from the mundane to the political and philosophical.
In one instance, a husband asked Meta AI in a voice recording about how to grow rice indoors for his "Filipino wife." Users asked Meta about Jesus' divinity, how to get picky toddlers to eat food and how to budget while enjoying daily pleasures. The feed is also filled with images created by Meta AI but conceived by users' imaginations, such as one of Trump eating poop and another of the grim reaper riding a motorcycle. Research shows that AI chatbots are uniquely designed to elicit users' social instincts by mirroring humanlike cues that give people a sense of connection, said Michal Luria, a research fellow at the Center for Democracy and Technology, a Washington, D.C., think tank. "We just naturally respond as if we are talking to ... another person, and this reaction is automatic," she said. "It's kind of hard to rewire." In April, Meta CEO Mark Zuckerberg told podcaster Dwarkesh Patel that one of the main reasons people used Meta AI was to talk through difficult conversations they need to have with people in their lives -- a use he thinks will become more compelling as the AI model gets to know its users. "People use stuff that's valuable for them," he said. "If you think something someone is doing is bad and they think it's really valuable, most of the time in my experience, they're right and you're wrong." Meta AI's discover feed is filled with questions about romantic relationships -- a popular topic people discuss with chatbots. In one instance, a woman asks Meta AI if her 70-year-old boyfriend can really be a feminist if he says he's willing to cook and clean but ultimately doesn't. Meta AI tells her the obvious: that there appears to be a "disconnect" between her partner's words and actions. Another user asked about the best way to "rebuild yourself after a breakup," eliciting a boilerplate list of tips about self-care and setting boundaries from Meta AI. Some questions posed to Meta took an illicit turn.
One user asked Meta AI to generate images of "two 21-year-old women wrestling in a mud bath" and then posted the results on the discover feed under the headline "Muddy bikinis and passionate kisses." Another asked Meta AI to create an image of a "big booty white girl." There are few regulations pushing tech companies to adopt stricter content or privacy rules for their chatbots. In fact, Congress is considering passing a tax and immigration bill that includes a provision to roll back state AI laws throughout the country and prohibit states from passing new ones for the next decade. In recent months, a couple of high-profile incidents triggered questions about how tech companies handle personal data, who has access to that data, and how that information could be used to manipulate users. In April, OpenAI announced that ChatGPT would be able to recall old conversations that users did not ask the company to save. On X, CEO Sam Altman said OpenAI was excited about "(AI) systems that get to know you over your life and become extremely useful and personalized." The potential pitfalls of that approach became obvious the following month, when OpenAI had to roll back an update to ChatGPT that incorporated more personalization because it made the tool sycophantic and manipulative toward users. Last week, OpenAI's chief operating officer Brad Lightcap said the company intended to keep its privacy commitments to users after plaintiffs in a copyright lawsuit led by The New York Times demanded OpenAI retain customer data indefinitely. Ultimately, it may be users who push the company to offer more transparency. One user questioned Meta AI on why a "ton of people" were "accidentally posting super personal stuff" on the app's discover feed. "Ok, so you're saying the feed is full of people accidentally posting personal stuff?" the Meta AI chatbot responded. "That can be pretty wild. Maybe people are just really comfortable sharing stuff or maybe the platform's defaults are set up in a way that makes it easy to overshare. What do you think?"

[3]

Meta's Privacy Goof Shows How People Really Use AI Chatbots

Meta.ai, a new AI-and-social app meant to compete with ChatGPT and others, launched a couple of months ago like Meta's products often do: with a massive privacy fuckup. The app, which has been promoted across Meta's other platforms, lets users chat in text or by voice, generate images, and, as of more recently, restyle videos. It also has a sharing function and a discover feed, designed in such a way that it led countless users to unwittingly post extremely private information into a public feed intended for strangers. The issue was flagged in May by, among others, Katie Notopoulos at Business Insider, who found public chats in which people asked for help with insurance bills, private medical matters, and legal advice following a layoff. Over the following weeks, Meta's experiment in AI-powered user confusion turned up weirder and more distressing examples of people who didn't know they were sharing their AI interactions publicly: Young children talking candidly about their lives; incarcerated people accidentally sharing chats about possible cooperation with authorities; and users chatting about "red bumps on inner thigh" under identifiable handles. Things got a lot darker from there, if you took the time to look. Meta seems to have recently adjusted its sharing flow -- or at least somewhat cleaned up Meta.ai's Discover page -- but the public posts are still strange and frequently disturbing. This week, amid bizarre images generated by prompts like "Image of P Diddy at a young girls birthday party" and "22,000 square foot dream home in Milton, Georgia," and people testing the new "Restyle" feature with videos that often contain their faces, you'll still see posts that stop you in your tracks, like a photo of a young child at school, presumably taken by another young child, with the command "make him cry." The utter clumsiness of the overall design here is made more galling by its lack of purpose. Whom is this feed for?
Does Meta imagine a feed of non sequitur slop will provide a solid foundation for a new social network? Accidental, incidental, or, in Meta's case, merely inexplicable privacy violations like this are rare and unsettling but almost always illuminating. In 2006, AOL released a trove of poorly anonymized search histories for research purposes, providing a glimpse of the sorts of intimate and incriminating data people were starting to share in search boxes: medical questions; relationship questions; queries on how to commit murder and other crimes; queries about how to make a partner fall back in love, followed shortly by searches for home-surveillance equipment. A lot of the search material was boring but nonetheless shouldn't have been released; other logs, like search histories skipping from "married but in love with another" to "guy online used me for sex" to "can someone get hepatitis from sexual contact," were both devastating to read and gave one a sense of vertigo about what companies like this would soon know about basically everyone. By design, social-media platforms offer public windows into users' personal lives; chatbots, on the other hand, are more like search engines -- spaces in which users assume they have privacy. We've seen a few small caches of similar data released to the public, which revealed the extent to which people look to services like ChatGPT for homework help and sexual material, but the gap between what AI firms know about how people use their products and what they share is wide. This isn't part of OpenAI's pitch to investors or customers, for example, but it's a pretty common use case. Meta's egregious product design, for better or for worse, closes this gap a little more. Setting aside the most shocking accidental shares, and ignoring the forebodingly infinite supply of attention-repelling images and stylized video clips, there's some illuminating material here.
The voice chats, in particular (for a few weeks, users were sharing -- and Meta was promoting -- recorded conversations between Meta's AI and users), tell a complicated story about how people engage with chatbots for the first time. A lot of people are looking for help with tasks that are either annoying or difficult in other ways. I listened to one man talk Meta's AI through composing a job listing for an assistant at a dental office in a small town, which it eventually did to his satisfaction; Meta promoted another in which a woman co-wrote an obituary for her husband with Meta.ai, remembering and adding more details as she went on. There was obvious homework "help" from people with young-sounding voices, who usually seemed to get what they wanted. Other conversations just trailed off. Quite a few followed the same up-and-down trajectory, which was emphasized by shifting tones of voice. The user writing the dental job listing started out terse, then loosened up as he got what he wanted. When he asked Meta AI to share the listing on other Meta platforms, though, it couldn't, and he was annoyed. A woman asking for help getting a friend who had been accused of theft removed from a retail surveillance system sounded relieved to have an audience and was pleased to get a lot of generically helpful-sounding advice. When it came to actionable steps, however, Meta.ai became more vague and the user more frustrated. Many conversations resemble unsatisfying customer-service interactions, only with the twist that, at the end, users feel both let down and sort of stupid for thinking it would work in the first place. Meta.ai has made a fool of them. It's not the best first impression. Far more common, though, than transactional conversations like these were voice recordings of people seeking something akin to therapy, some of whom were clearly in distress.
These are users who, when confronted with an ad for a free AI chatbot, started confiding in it as if they were talking to a trusted professional or a close friend. A tearful man talked about missing his former stepson, asked Meta.ai to "tell him that I love him," and thanked it when the conversation was over. Over the course of a much longer conversation, a woman asked for help coming down from a panic attack and gradually calmed down. In a shorter chat, a man concluded, after suggesting he was contemplating a divorce, that actually he had decided on a divorce. Some users chatted to pass the time. A lot of recordings contained clear evidence of mental-health crises with incoherent and paranoid exchanges about religion, surveillance, addiction, and philosophy, during which Meta.ai usually remained cheerfully supportive. These chatters, in contrast to the ones asking for help with tasks and productivity, often came away satisfied. Perhaps they'd been indulged or affirmed -- chatbots are nothing if not obsequious -- but one got the sense that mostly they just felt like they'd been listened to. Such conversations make for strange and unsettling listening, particularly in the context of Mark Zuckerberg's recent suggestions that chatbots might help solve the "loneliness epidemic" (which his platforms definitely, positively had nothing to do with creating. Why do you ask?). Here, we have a glimpse of what he and other AI leaders likely see quite clearly in their much more voluminous data but talk about only in the oblique terms of "personalization" and "memory": For some users, chatbots are just software tools with a conversational interface, judged as useful, useless, fun, or boring. For others, the illusion is the whole point.

Meta's new AI app inadvertently exposes users' private conversations, raising serious privacy concerns and highlighting the complex relationship between users and AI chatbots.

Meta's AI App Raises Privacy Concerns

Meta's recent launch of its AI chatbot app has sparked controversy due to a feature that inadvertently exposed users' private conversations to the public. The app's "Discover" tab, designed to showcase user interactions with the AI, has become a repository of personal, often sensitive information that users seemingly did not intend to share publicly [1].

Unintended Public Exposure

Source: 404 Media

The Discover feed has been flooded with a wide range of user queries, from innocuous image generation requests to deeply personal conversations. These include discussions about medical issues, legal troubles, and intimate relationship advice. In many cases, these conversations could be easily tied to real individuals, as the app uses Facebook or Instagram accounts for login [1].

Rachel Tobac, CEO of Social Proof Security, compiled examples of sensitive information shared on the platform, including:

- Medical inquiries about personal health issues

- Discussions of criminal activities

- Home addresses

- Conversations about extramarital affairs [1]

User Confusion and Privacy Expectations

Many users appear to be unaware that their conversations with Meta AI are being shared publicly. This confusion stems from a lack of clear communication about the sharing feature and users' expectations of privacy when interacting with AI chatbots [2].

Calli Schroeder, a senior counsel at the Electronic Privacy Information Center, noted, "I think many people assume there's some baseline level of confidentiality there. There's not. Everything you submit to an AI system at bare minimum goes to the company that's hosting the AI" [2].

Meta's Response and Ongoing Concerns

Meta has since made efforts to clarify when interactions will be shared to the Discover tab. However, privacy advocates argue that these measures are insufficient. Tobac suggests that Meta should "pause the public Discover feed" and ensure that "all AI chat bot prompts are private by default, with no option to accidentally share to a social media feed" [1].

The Broader Implications

Source: NYMag

This incident highlights a growing trend of people turning to AI chatbots for emotional support and advice on personal matters. Mark Zuckerberg, Meta's CEO, has acknowledged that one of the main reasons people use Meta AI is to practice difficult conversations they need to have in real life [2].

The Human-AI Relationship

The Meta AI app's privacy issues have inadvertently provided insight into how people interact with AI chatbots. Many users engage in deeply personal conversations, seeking advice on relationships, health issues, and even legal matters. This behavior reflects a growing trend of people forming emotional connections with AI, often sharing information they might typically reserve for close friends or professionals [3].

As AI technology continues to advance and integrate into our daily lives, the incident serves as a stark reminder of the need for robust privacy protections and clear communication about how user data is collected, used, and shared in AI-powered applications.

Summarized by Navi
