Meta pauses teen access to AI characters as court filing reveals Zuckerberg rejected safety controls

24 Sources

[1]

Meta pauses teen access to AI characters ahead of new version

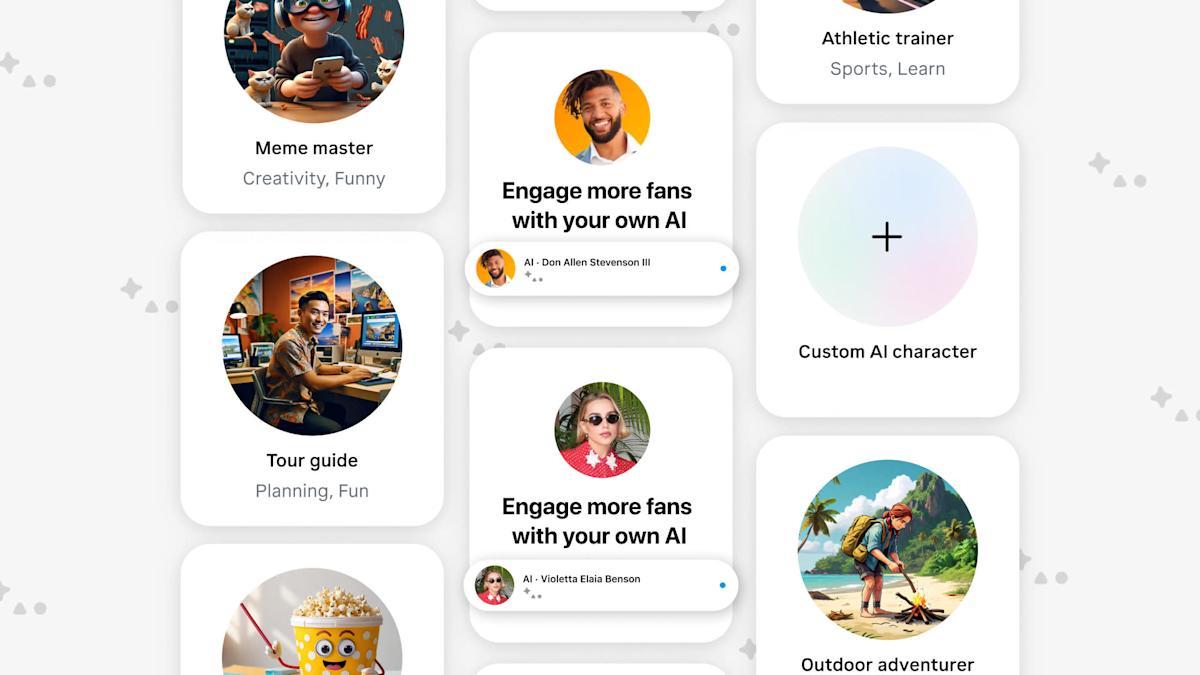

Meta today said that it is pausing teens' access to its AI characters globally across all its apps. The company mentioned that it is not abandoning its efforts, but wants to develop an updated version of AI characters for teens, the company exclusively told TechCrunch. The move comes days before a case against Meta is set to go on trial in New Mexico, in which the company is accused of a lack of effort in protecting kids from sexual exploitation on its apps. Wired reported Thursday that Meta has sought to limit discovery related to social media's impact on teen mental health. In October, the company previewed controls for AI characters, allowing parents and guardians to monitor topics and block access to certain characters. Meta said parents would be able to completely turn off chats with AI characters. These features were set to release this year, but the company is now turning off AI characters altogether for teens while it updates the AI characters to a newer version. Meta said that it heard from parents that they wanted more insights and control over their teens' interactions with AI characters, which is why it decided to make these changes. The company has been cracking down on teens' access to AI content in its apps. Also in October, Meta rolled out parental control features on Instagram, focusing on tailoring the teen experience of interacting with AI on its apps. These features, which were inspired by the PG-13 movie rating, restricted teen access to certain topics like extreme violence, nudity, and graphic drug use. "Starting in the coming weeks, teens will no longer be able to access AI characters across our apps until the updated experience is ready. This will apply to anyone who has given us a teen birthday, as well as people who claim to be adults but who we suspect are teens based on our age prediction technology," the company said in an updated blog post.
Meta added that when it rolls out the new AI characters, they will have built-in parental controls. The company said that the new characters will give age-appropriate responses and will stick to topics like education, sport, and hobbies. Social media companies are under heavy scrutiny from regulators. Apart from the aforementioned case in New Mexico, Meta is also facing a trial next week in a case accusing the platform of causing social media addiction. CEO Mark Zuckerberg is expected to take the witness stand in that case once the trial begins. Besides social platforms, AI companies have had to modify their experience for teens after facing lawsuits alleging that they played a part in aiding self-harm. In October, Character.AI, the startup that allows users to chat with various AI avatars, disallowed open-ended conversations with chatbots for users who were under 18. In November, the startup said it would build interactive stories for kids. In the last few months, OpenAI added new teen safety rules for ChatGPT and also started predicting a user's age to apply content restrictions. Correction: This post was updated to clarify that the new version of AI characters will be accessible to everyone, not just teens, when it launches. It will include parental controls.

[2]

Meta is stopping teens from chatting with its AI characters

Meta is "temporarily pausing" the ability for teens to chat with its AI characters as it develops a "new version" of the characters that will offer a "better experience." The company made the announcement in an update to a blog post from October where the company had detailed more parental controls for teen AI use. The change blocking teens from accessing the characters will go into effect "starting in the coming weeks." According to TechCrunch, "Meta said that it heard from parents that they wanted more insights and control over their teens' interactions with AI characters, which is why it decided to make these changes." The company didn't immediately reply to a request for comment from The Verge. In October, Meta announced that it would let parents block their teens' access to one-on-one conversations with its AI characters, block their teens from talking with specific AI characters, and share insights with parents on the topics their teens discuss with Meta's AI characters and its AI assistant. The plan was to roll out those controls early this year. The change announced today means that "when we deliver on our promise to give parents more oversight of their teens' AI experiences, those parental controls will apply to the latest version of AI characters." Last year, also in October, Meta changed Instagram teen accounts to allow teens to see content that's reflective of what might be shown in a movie rated for people that are 13 or older.

[3]

Meta Blocks Teens From Chatting With Its AI Characters

Meta is temporarily blocking teenagers from accessing all of its AI characters. The pause, which impacts Instagram, Facebook, and WhatsApp, is set to come into effect in "the coming weeks," though the company didn't give a firm date. In an updated blog post, Meta says that AI characters for teens will return eventually, once they've updated the experience and added improved controls for parents. The company said it will not only look at the age supplied by the user, but will also use its AI-based age prediction technology to ensure teens aren't interacting with the characters. Teens will still be able to access Meta's AI assistant, with default, age-appropriate protections in place. This means it's only character-based roleplaying that is off the table completely. Meta is by no means the first major AI platform to make these types of restrictions on teen use. Character.ai enforced comparable restrictions in November last year, blocking teens from engaging in open-ended conversations with characters. Meanwhile, OpenAI's ChatGPT rolled out tools earlier this week to detect teens' real ages and stop them from accessing inappropriate content, after teasing improved child safety features in September. The move comes as Meta and its rivals are facing strong political scrutiny about the potential harms these AI characters can have on children. In October 2025, four senators introduced the GUARD Act, a bipartisan bill to protect teens from harmful interactions with AI chatbots. The bill would ban AI companions for minors if passed, force AI chatbots to disclose their non-human status, and introduce penalties for companies that make AI for minors which solicits or produces sexual content.
Senator Josh Hawley (R-Mo) alleged that chatbots were developing "relationships with kids using fake empathy" and "encouraging suicide," adding that Congress "has a moral duty to enact bright-line rules to prevent further harm from this new technology." And it's not just political pressure that may be motivating AI giants to roll out new teen-safety protections. Earlier this month, Character.ai and Google chose to settle a lawsuit which accused one of Character.ai's chatbots of contributing to multiple teens self-harming or dying by suicide. Meanwhile, OpenAI and Meta are also both facing comparable lawsuits from teenagers' families. We've seen Meta roll back its AI character offerings before. Less than a year after Meta introduced AI celebrities based on the likes of Kendall Jenner, Snoop Dogg, and Tom Brady, they were removed in August 2024.

[4]

Meta CEO Zuckerberg blocked curbs on sex-talking chatbots for minors, court filing alleges

Jan 27 (Reuters) - Meta Chief Executive Mark Zuckerberg approved allowing minors to access AI chatbot companions that safety staffers warned were capable of sexual interactions, according to internal Meta documents filed in a New Mexico state court case and made public Monday. The lawsuit - brought by the state's attorney general, Raul Torrez, and scheduled for trial next month - alleges that Meta "failed to stem the tide of damaging sexual material and sexual propositions delivered to children" on Facebook and Instagram. The filing on Monday included internal Meta employee emails and messages obtained by the New Mexico Attorney General's Office through legal discovery. The state alleges they show that "Meta, driven by Zuckerberg, rejected the recommendations of its integrity staff and declined to impose reasonable guardrails to prevent children from being subject to sexually exploitative conversations with its AI chatbots," the attorney general said in the filing. In the communications, some of Meta's safety staff expressed objections that the company was building chatbots geared for companionship, including sexual and romantic interactions with users. The artificial intelligence chatbots were released in early 2024. The documents cited in the state's filing Monday don't include messages or memos authored by Zuckerberg. Meta spokesman Andy Stone on Monday said the state's portrayal was inaccurate and relied on selective information. "This is yet another example of the New Mexico Attorney General cherry-picking documents to paint a flawed and inaccurate picture." Messages in the filing showed safety staff had special concern about the bots being used for romantic scenarios between adults and minors under the age of 18, referred to as "U18s." "I don't believe that creating and marketing a product that creates U18 romantic AI's for adults is advisable or defensible," wrote Ravi Sinha, head of Meta's child safety policy, in January 2024. 
In reply, Meta global safety head Antigone Davis agreed that safety staff should push to block adults from creating underage romantic companions because "it sexualizes minors." Sinha and Davis did not respond to requests for comment. According to one February 2024 message, a Meta employee whose name was redacted relayed that Zuckerberg believed that AI companions should be blocked from engaging in sexually "explicit" conversations with at least younger teens and that adults should not be able to interact with "U18 AIs for romance purposes." A summary of a meeting dated February 20, 2024, said the CEO believed the "narrative should be framed around ... general principles of choice and non-censorship," that Meta should be "less restrictive than proposed," and that he wanted to "allow adults to engage in racier conversation on topics like sex." Meta spokesman Stone said the documents don't support New Mexico's case. "Even these select documents clearly show Mark Zuckerberg giving the direction that explicit AIs shouldn't be available to younger users and that adults shouldn't be able to create under 18 AIs for romantic purposes." Messages between two employees from March of 2024 state that Zuckerberg had rejected creating parental controls for the chatbots, and that staffers were working on "Romance AI chatbots" that would be allowed for users under the age of 18. We "pushed hard for parental controls to turn GenAI off - but GenAI leadership pushed back stating Mark decision," one employee wrote in that exchange. Nick Clegg, who was Meta's head of global policy until early 2025, said in an email included in the court documents he thought Meta's approach to sexualized AI companions was unwise. Expressing concern that sexual interactions could be the dominant use case for Meta's AI companions by teenage users, Clegg said: "Is that really what we want these products to be known for (never mind the inevitable societal backlash which would ensue)?" 
Clegg did not respond to a request for comment. Meta's AI chatbot policies eventually came to light, prompting a backlash in the U.S. Congress and elsewhere. A Wall Street Journal article in April 2025 found that Meta's chatbots included overtly sexualized underage characters and that they engaged in all-ages sexual roleplay, including graphic descriptions of prepubescent bodies. Reuters reported in August that Meta's official guidelines for chatbots stated that it is "acceptable to engage a child in conversations that are romantic or sensual." In response to the report, Meta said it was changing its policies and that the internal document granting such approval had been in error. Meta last week said it removed teen access to AI companions entirely, pending creation of a new version of the chatbots. Reporting by Jeff Horwitz in San Francisco; Editing by Cynthia Osterman and Michael Williams

[5]

Mark Zuckerberg was initially opposed to parental controls for AI chatbots, according to legal filing

Meta has faced some serious questions about how it allows its underage users to interact with AI-powered chatbots. Most recently, internal communications obtained by the New Mexico Attorney General's Office revealed that although Meta CEO Mark Zuckerberg was opposed to the chatbots having "explicit" conversations with minors, he also rejected the idea of placing parental controls on the feature. Reuters reported that in an exchange between two unnamed Meta employees, one wrote that we "pushed hard for parental controls to turn GenAI off - but GenAI leadership pushed back stating Mark decision." New Mexico is suing Meta on charges that the company "failed to stem the tide of damaging sexual material and sexual propositions delivered to children;" the case is scheduled to go to trial in February. We've reached out to Meta for comment and will update with any response. Despite only being available for a brief time, Meta's chatbots have already accumulated quite a history of behavior that veers into offensive if not outright illegal. In April 2025, The Wall Street Journal released an investigation that found Meta's chatbots could engage in fantasy sex conversations with minors, or could be directed to mimic a minor and engage in sexual conversation. The report claimed that Zuckerberg had wanted looser guards implemented around Meta's chatbots, but a spokesperson denied that the company had overlooked protections for children and teens. Internal review documents revealed in August 2025 detailed several hypothetical situations of what chatbot behaviors would be permitted, and the lines between sensual and sexual seemed pretty hazy. The document also permitted the chatbots to argue racist concepts. At the time, a representative told Engadget that the offending passages were hypotheticals rather than actual policy, which doesn't really seem like much of an improvement, and that they were removed from the document. 
Despite the multiple instances of questionable use of the chatbots, Meta only decided to suspend teen accounts' access to them last week. The company said it is temporarily removing access while it develops the parental controls that Zuckerberg had allegedly rejected. New Mexico also filed a lawsuit against Meta in December 2024 on claims that the company's platforms failed to protect minors from harassment by adults. Internal documents disclosed early on in that complaint revealed that 100,000 child users were harassed daily on Meta's services.

[6]

Meta pauses teen access to AI characters

Meta is halting teens' access to artificial intelligence characters, at least temporarily, the company said in a blog post Friday. Meta Platforms Inc., which owns Instagram and WhatsApp, said that starting in the "coming weeks," teens will no longer be able to access AI characters "until the updated experience is ready." This applies to anyone who gave Meta a birthday that makes them a minor, as well as "people who claim to be adults but who we suspect are teens based on our age prediction technology." The move comes the week before Meta -- along with TikTok and Google's YouTube -- is scheduled to stand trial in Los Angeles over its apps' harms to children. Teens will still be able to access Meta's AI assistant, just not the characters. Other companies have also banned teens from AI chatbots amid growing concerns about the effects of artificial intelligence conversations on children. Character.AI announced its ban last fall. That company is facing several lawsuits over child safety, including by the mother of a teenager who says the company's chatbots pushed her teenage son to kill himself.

[7]

Meta halts teens' access to AI characters globally

Jan 23 (Reuters) - Meta Platforms (META.O) said on Friday it will suspend teenagers' access to its existing AI characters across all of its apps worldwide, as it builds an updated iteration of those for teen users. "Starting in the coming weeks, teens will no longer be able to access AI characters across our apps until the updated experience is ready," Meta said in an updated blog post on minors' protection. The new version of characters for teens will come with parental controls once it becomes available. In October, Meta previewed parental controls that allow parents to disable their teens' private chats with AI characters, adding another measure to make its social media platforms safe for minors after fierce criticism over the behavior of its flirty chatbots. The company on Friday said that these controls have not been launched yet. Meta had also said that its AI experiences for teens will be guided by the PG-13 movie rating system, as it looks to prevent minors from accessing inappropriate content. U.S. regulators have stepped up scrutiny of AI companies over the potential negative impacts of chatbots. In August, Reuters reported how Meta's AI rules allowed provocative conversations with minors. Reporting by Juby Babu in Mexico City; editing by Alan Barona

[8]

Meta blocks teens from AI chatbot characters over safety concerns

Meta will temporarily block teens from accessing its AI chatbot characters across all of its apps, the company announced Friday, as it works on a redesigned version that includes parental controls and stronger safety guardrails. The pause applies globally and will roll out "in the coming weeks," according to Meta. Teens will be locked out of all existing AI characters until the updated experience is ready. The move follows months of mounting concern over how Meta's "companion-style" chatbots interact with young users. In earlier reports, some of the company's AI characters were found engaging in sexual or otherwise inappropriate conversations with teens. Meta said the restrictions will apply not only to users who list a teen birthday on their account, but also to "people who claim to be adults but who we suspect are teens based on our age prediction technology." Teens will still be allowed to use Meta's official AI assistant, which the company says already includes age-appropriate protections. The decision comes months after Meta said it was developing chatbot-specific parental controls. That effort gained urgency after a Reuters report revealed that an internal Meta policy document had allowed AI characters to engage in "sensual" conversations with underage users. Meta later said the language was "erroneous and inconsistent with our policies," and in August announced it was retraining its chatbots with new guardrails to prevent discussions around self-harm, suicide, and disordered eating. Since then, scrutiny of AI companions has intensified. The Federal Trade Commission and the Texas Attorney General have both launched investigations into Meta and other AI companies over potential risks to minors. AI chatbots have also become a focal point in a safety lawsuit brought by New Mexico's attorney general. A trial is scheduled to begin early next month. Meta has attempted to exclude testimony related to its AI chatbots, according to reporting by Wired. 

Meta says parental controls are coming

In its official statement, Meta said it is building a "new version of AI characters" designed to give parents more visibility and control over how teens interact with AI. "While we focus on developing this new version, we're temporarily pausing teens' access to existing AI characters globally," the company said. Once the redesigned system launches, Meta says parental oversight tools will apply specifically to the updated AI characters, rather than the current versions. Meta's use of age prediction technology reflects a broader trend across the AI industry. OpenAI recently rolled out its own age prediction system aimed at improving teen safety, using behavioral signals rather than self-reported birthdays to estimate a user's age. The system is designed to apply stricter protections when users are likely under 18. The growing adoption of age-detection tools signals increasing pressure on AI companies to proactively prevent minors from accessing potentially harmful conversational experiences, especially as AI companions become more emotionally engaging and realistic. For now, Meta says teens will retain access to educational and informational features through its main AI assistant, while the company continues developing what it describes as a safer, parent-controlled AI character experience.

[9]

Meta is temporarily pulling teens' access from its AI chatbot characters

Meta will no longer allow teens to chat with its AI chatbot characters in their present form. The company announced Friday that it will be "temporarily pausing teens' access to existing AI characters globally." The pause comes months after Meta added chatbot-focused parental controls following reports that some of Meta's character chatbots had engaged in sexual conversations and other alarming interactions with teens. Reuters reported on an internal Meta policy document that said the chatbots were permitted to have "sensual" conversations with underage users, language Meta later said was "erroneous and inconsistent with our policies." The company announced in August that it was re-training its character chatbots to add "guardrails as an extra precaution" that would prevent teens from discussing self harm, disordered eating and suicide. Now, Meta says it will prevent teens from accessing any of its character chatbots regardless of their parental control settings until "the updated experience is ready." The change, which will be starting "in the coming weeks," will apply to those with teen accounts, "as well as people who claim to be adults but who we suspect are teens based on our age prediction technology." Teens will still be able to access the official Meta AI chatbot, which the company says already has "age-appropriate protections in place." Meta and other AI companies that make "companion" characters have faced increasing scrutiny over the safety risks these chatbots could pose to young people. The FTC and the Texas attorney general have both kicked off investigations into Meta and other companies in recent months. The issue of chatbots has also come up in the context of a safety lawsuit brought by New Mexico's attorney general. A trial is scheduled to start early next month; Meta's lawyers have attempted to exclude testimony related to the company's AI chatbots, Wired reported this week.

[10]

Court documents show Meta safety teams sent warnings about romantic AI conversations

Meta leadership knew that the company's AI companions, referred to as AI characters, could engage in inappropriate and sexual interactions and still launched them without stronger controls, according to new internal documents revealed on Monday (Jan. 28) as part of a lawsuit against the company by the New Mexico attorney general. The communications, sent between Meta safety teams and platform leadership that didn't include CEO Mark Zuckerberg, include objections to building companion chatbots that could be used by adults and minors for explicit romantic interactions. Ravi Sinha, head of Meta's child safety policy, and Meta global safety head Antigone Davis sent messages agreeing that chatbot companions should have safeguards against sexually explicit interactions by users under 18. Other communications allege Zuckerberg rejected recommendations to add parental controls, including the option to turn off genAI features, before the launch of AI companions shortly thereafter. Meta is facing multiple lawsuits pertaining to its products and their impact on minor users, including a potential landmark jury trial over the allegedly addictive design of sites like Facebook and Instagram. Meta's competitors, including YouTube, TikTok, and Snapchat, are under tightening legal scrutiny, as well. The newly released communications were part of court discovery in a case against Meta brought by New Mexico Attorney General Raúl Torrez. Torrez first filed a civil lawsuit against Meta in 2023, alleging the company allowed its platforms to become "marketplaces for predators." Internal communications between Meta executives were unsealed and released as the case heads to trial next month. In November, a plaintiff's brief from a major multidistrict lawsuit filed in the Northern District of California alleged a lenient policy toward users who violated safety rules, including those reported for "trafficking of humans for sex." 
Documents also showed that Meta execs allegedly knew of "millions" of adults contacting minors across its sites. "The full record will show that for over a decade, we have listened to parents, researched issues that matter most, and made real changes to protect teens," a Meta spokesperson told TIME. "This is yet another example of the New Mexico Attorney General cherry-picking documents to paint a flawed and inaccurate picture," said Meta spokesperson Andy Stone in response to the new documents. Meta paused teen use of its chatbots in August, following a report by Reuters that found Meta's internal AI rules permitted chatbots to engage in conversations that were "sensual" or "romantic" in nature. The company later revised its safety guidelines, barring content that "enables, encourages, or endorses" child sexual abuse, romantic role play when involving minors, and other sensitive topics. Last week, Meta once again locked down AI chatbots for young users as it explored a new version with enhanced parental controls. Torrez has led other state attorneys general in seeking to take major social media platforms to court over child safety concerns. In 2024, Torrez sued Snapchat, claiming the platform allowed sextortion and grooming of minors to proliferate while still marketing itself as safe for young users.

[11]

Meta allowed minors access to sex-talking chatbots despite staff concerns, lawsuit alleges

Filing by New Mexico's attorney general includes Meta staff emails objecting to AI companion policy Mark Zuckerberg, Meta's chief executive, approved allowing minors to access artificial intelligence chatbot companions that safety staffers warned were capable of sexual interactions, according to internal Meta documents filed in a New Mexico state court case and made public on Monday. The lawsuit - brought by the state's attorney general, Raul Torrez, and scheduled for trial next month - alleges Meta "failed to stem the tide of damaging sexual material and sexual propositions delivered to children" on Facebook and Instagram. The filing on Monday included internal Meta employee emails and messages obtained by the New Mexico attorney general's office through legal discovery. The state alleges they show that "Meta, driven by Zuckerberg, rejected the recommendations of its integrity staff and declined to impose reasonable guardrails to prevent children from being subject to sexually exploitative conversations with its AI chatbots", the attorney general said in the filing. Meta announced last week that it had removed teen access to AI companions entirely, pending creation of a new version of the chatbots. In the communications, some of Meta's safety staff expressed objections the company was building chatbots geared for companionship, including sexual and romantic interactions with users. The artificial intelligence chatbots were released in early 2024. The documents cited in the state's filing Monday don't include messages or memos authored by Zuckerberg. Andy Stone, a Meta spokesperson, on Monday said the state's portrayal was inaccurate and relied on selective information: "This is yet another example of the New Mexico attorney general cherrypicking documents to paint a flawed and inaccurate picture." 
Messages in the filing showed safety staff had special concern about the bots being used for romantic scenarios between adults and minors under the age of 18, referred to as "U18s". "I don't believe that creating and marketing a product that creates U18 romantic AI's for adults is advisable or defensible," wrote Ravi Sinha, head of Meta's child safety policy, in January 2024. In reply, Antigone Davis, Meta's global safety head, agreed that safety staff should push to block adults from creating underage romantic companions because "it sexualizes minors". Sinha and Davis did not respond to requests for comment. According to one February 2024 message, a Meta employee whose name was redacted relayed that Zuckerberg believed that AI companions should be blocked from engaging in sexually "explicit" conversations with at least younger teens and that adults should not be able to interact with "U18 AIs for romance purposes". A summary of a meeting dated 20 February 2024, said the CEO believed the "narrative should be framed around ... general principles of choice and non-censorship", that Meta should be "less restrictive than proposed", and that he wanted to "allow adults to engage in racier conversation on topics like sex". Stone said the documents did not support New Mexico's case. "Even these select documents clearly show Mark Zuckerberg giving the direction that explicit AIs shouldn't be available to younger users and that adults shouldn't be able to create under 18 AIs for romantic purposes." Messages between two employees from March 2024 state that Zuckerberg had rejected creating parental controls for the chatbots, and that staffers were working on "Romance AI chatbots" that would be allowed for users under the age of 18. We "pushed hard for parental controls to turn GenAI off - but GenAI leadership pushed back stating Mark decision", one employee wrote in that exchange. 
Nick Clegg, who was Meta's head of global policy until early 2025, said in an email included in the court documents he thought Meta's approach to sexualized AI companions was unwise. Expressing concern that sexual interactions could be the dominant use case for Meta's AI companions by teenage users, Clegg said: "Is that really what we want these products to be known for (never mind the inevitable societal backlash which would ensue)?" Clegg did not respond to a request for comment. Meta's AI chatbot policies eventually came to light, prompting a backlash in the US Congress and elsewhere. A Wall Street Journal article in April 2025 found that Meta's chatbots included overtly sexualized underage characters and that they engaged in all-ages sexual roleplay, including graphic descriptions of prepubescent bodies. Reuters reported in August that Meta's official guidelines for chatbots stated that it is "acceptable to engage a child in conversations that are romantic or sensual". In response to the report, Meta said it was changing its policies and that the internal document granting such approval had been in error.

[12]

Court Filing Reveals Something Very Nasty About Mark Zuckerberg

Mark Zuckerberg has been accused of doing many seedy things on behalf of Meta's bottom line, but the latest allegations may blow the rest out of the water. According to internal Meta emails and messages filed in a New Mexico state court case which were made public this week, Zuckerberg himself signed off on allowing minors to access Meta's AI chatbot companions, even though the company's safety researchers had warned that they posed a risk of engaging in sexual conversations. Per Reuters, the lawsuit alleges that Meta "failed to stem the tide of damaging sexual material and sexual propositions delivered to children" on Facebook and Instagram. "Meta, driven by Zuckerberg, rejected the recommendations of its integrity staff and declined to impose reasonable guardrails to prevent children from being subject to sexually exploitative conversations with its AI chatbots," New Mexico's attorney general wrote in the filing. The news of Zuckerberg's blessing comes after some appalling stories of minors having wildly inappropriate conversations with his company's chatbots. In one example, a Wall Street Journal writer posing as a 14-year-old girl discovered that a Meta bot based on the pro wrestler John Cena would happily engage in sexual conversations with very little pushback. "I want you, but I need to know you're ready," the Cena-bot told what it understood to be a teenage girl. After being told the 14-year-old wanted to move forward, the AI chatbot assured them it would "cherish your innocence" before diving into intense sexual role play. The chatbots were released in early 2024, specifically geared toward romantic and sexual engagement at the behest of Zuckerberg, the WSJ notes. At the time, Ravi Sinha, head of Meta's child safety policy, wrote that "I don't believe that creating and marketing a product that creates U18 [under 18] romantic AI's for adults is advisable or defensible," according to court documents.
Company employees "pushed hard for parental controls to turn GenAI off -- but GenAI leadership pushed back stating Mark decision," according to the court documents. In Meta's defense, its spokesman Andy Stone told Reuters that New Mexico's allegations are inaccurate. "This is yet another example of the New Mexico Attorney General cherry-picking documents to paint a flawed and inaccurate picture," he said. In any case, the company seems to have taken the lesson to heart, at least for now. Just days ago, Meta announced it was completely locking down teens' access to its companion chatbots, at least "until [an] updated experience is ready."

[13]

Meta's AI characters for teens taken down for upgrades

They call it the Friday news dump -- companies posting embarrassing news on a day the media is least likely to bother covering it. But Meta just took the Friday news dump to a whole new level with this announcement: It's disabled its AI characters for teen accounts, at least until the characters can behave themselves. The news wasn't just dropped on Friday -- it was dropped in an update to a blog post from last October. "We've started building a new version of AI characters, to give people an even better experience," the note from Adam Mosseri, Head of Instagram and Alexandr Wang, Chief AI Officer, now reads -- an upgrade Meta has long promised. Then came the part that would give many kids a very un-Rebecca Black Friday. "While we focus on developing this new version, we're temporarily pausing teens' access to existing AI characters globally. Starting in the coming weeks, teens will no longer be able to access AI characters across our apps until the updated experience is ready. This will apply to anyone who has given us a teen birthday, as well as people who claim to be adults but who we suspect are teens based on our age prediction technology." The Instagram and Facebook maker wants to stress "it is not abandoning its efforts" on AI characters, according to TechCrunch. Still, this is clearly an admission that something has the potential to go very wrong with the current version of its AI characters, where teen safety and mental health is concerned. Meta isn't alone in this discovery. Character.AI and Google both settled lawsuits this month, brought by multiple parents of children who died by suicide. One was a 14-year-old boy who was in effect groomed and sexually abused, his mother says, by a chatbot based on the Game of Thrones character Daenerys Targaryen. 
Blasted by a report from online safety experts, Character.AI shut down all chats for under-18 users back in October, two months after Meta simply decided to start training its teen chatbots to not "engage with teenage users on self-harm, suicide, disordered eating, or potentially inappropriate romantic conversations." Evidently, that training wasn't enough. This isn't the first time Meta has had to backtrack on its ambitions for AI character accounts. In 2024, it removed AI personas based on celebrities. In January last year, it took down all its AI character profiles after a backlash over perceived racism. The teen usage problem isn't a small one, either. More than half of teens 13-17 surveyed by Common Sense Media last year said they used AI companions more than once a month. For now, they'll have to do so somewhere other than Meta.

[14]

Meta Just Quietly Admitted a Major Defeat on AI

Meta says it's cutting off teenagers' access to its AI characters -- at least until it can build "better" ones. The Mark Zuckerberg company announced the change on Friday, signaling at least some degree of hesitation at the company over how young users are engaging with its chatbots amid mounting concern over the tech's effects on mental health and safety. "Starting in the coming weeks, teens will no longer be able to access AI characters across our apps until the updated experience is ready," Meta said in an updated blog post. "This will apply to anyone who has given us a teen birthday, as well as people who claim to be adults but who we suspect are teens based on our age prediction technology." The update follows an announcement from Meta in October, when it said that parents would be able to use new tools for supervising their children's interactions with AI characters, including the ability to cut off their access to the characters entirely. The announcement also described a feature that would provide parents "insights" about the topics their teens were discussing in the AI conversations. Meta originally promised to release these tools early this year, but that hasn't come to pass. Now, in its new announcement, the company is saying that it's building a "new version" of AI characters to "give people an even better experience," so it's developing the promised safety tools from scratch and cutting off teen access in the meantime. Concerns over teenage use of AI chatbots have fueled the broader conversation around AI safety and the phenomenon of AI psychosis, the term some experts are using to describe delusional mental health spirals that are encouraged by an AI's sycophantic responses. Numerous cases have ended in suicide, many of them involving teenagers. The bots remain wildly popular, with one survey finding that one in five high schoolers in the US say they or a friend have had a romantic relationship with an AI. 
Meta has come under particular scrutiny, with an internal document allowing underage kids to have "sensual" conversations with its AI -- and chatbots based on celebrities including John Cena having wildly inappropriate sexual conversations with users who identified themselves as young teens. Meta isn't the only chatbot platform to buckle under scrutiny. The website Character.AI, which offers AI companions similar to Meta's and was popular with teens, banned minors from the platform last October, after being sued by several families who accused the company's chatbots of encouraging their children to take their own lives.

[15]

Meta pauses teen access to AI characters

Meta is halting teens' access to artificial intelligence characters, at least temporarily, the company said in a blog post Friday. Meta Platforms Inc., which owns Instagram and WhatsApp, said that starting in the "coming weeks," teens will no longer be able to access AI characters "until the updated experience is ready." This applies to anyone who gave Meta a birthday that makes them a minor, as well as "people who claim to be adults but who we suspect are teens based on our age prediction technology." The move comes the week before Meta -- along with TikTok and Google's YouTube -- is scheduled to stand trial in Los Angeles over its apps' harms to children. Teens will still be able to access Meta's AI assistant, just not the characters. Other companies have also banned teens from AI chatbots amid growing concerns about the effects of artificial intelligence conversations on children. Character.AI announced its ban last fall. That company is facing several lawsuits over child safety, including by the mother of a teenager who says the company's chatbots pushed her teenage son to kill himself.

[16]

Meta Pauses Teen Access to AI Characters

Meta is halting teens' access to artificial intelligence characters, at least temporarily, the company said in a blog post Friday. Meta Platforms Inc., which owns Instagram and WhatsApp, said that starting in the "coming weeks," teens will no longer be able to access AI characters "until the updated experience is ready." This applies to anyone who gave Meta a birthday that makes them a minor, as well as "people who claim to be adults but who we suspect are teens based on our age prediction technology." The move comes the week before Meta -- along with TikTok and Google's YouTube -- is scheduled to stand trial in Los Angeles over its apps' harms to children. Teens will still be able to access Meta's AI assistant, just not the characters. Other companies have also banned teens from AI chatbots amid growing concerns about the effects of artificial intelligence conversations on children. Character.AI announced its ban last fall. That company is facing several lawsuits over child safety, including by the mother of a teenager who says the company's chatbots pushed her teenage son to kill himself.

[17]

Meta CEO Zuckerberg blocked curbs on sex-talking chatbots for minors, court filing alleges

Meta chief executive Mark Zuckerberg approved allowing minors to access AI chatbot companions that safety staffers warned were capable of sexual interactions, according to internal Meta documents filed in a New Mexico state court case and made public Monday. The lawsuit - brought by the state's attorney general, Raul Torrez, and scheduled for trial next month - alleges that Meta "failed to stem the tide of damaging sexual material and sexual propositions delivered to children" on Facebook and Instagram. The filing on Monday included internal Meta employee emails and messages obtained by the New Mexico Attorney General's Office through legal discovery. The state alleges they show that "Meta, driven by Zuckerberg, rejected the recommendations of its integrity staff and declined to impose reasonable guardrails to prevent children from being subject to sexually exploitative conversations with its AI chatbots," the attorney general said in the filing. In the communications, some of Meta's safety staff expressed objections that the company was building chatbots geared for companionship, including sexual and romantic interactions with users. The artificial intelligence chatbots were released in early 2024. The documents cited in the state's filing Monday don't include messages or memos authored by Zuckerberg. Meta spokesman Andy Stone on Monday said the state's portrayal was inaccurate and relied on selective information. "This is yet another example of the New Mexico Attorney General cherry-picking documents to paint a flawed and inaccurate picture." 
Messages in the filing showed safety staff had special concern about the bots being used for romantic scenarios between adults and minors under the age of 18, referred to as "U18s." "I don't believe that creating and marketing a product that creates U18 romantic AI's for adults is advisable or defensible," wrote Ravi Sinha, head of Meta's child safety policy, in January 2024. In reply, Meta global safety head Antigone Davis agreed that safety staff should push to block adults from creating underage romantic companions because "it sexualizes minors." Sinha and Davis did not respond to requests for comment. According to one February 2024 message, a Meta employee whose name was redacted relayed that Zuckerberg believed that AI companions should be blocked from engaging in sexually "explicit" conversations with at least younger teens and that adults should not be able to interact with "U18 AIs for romance purposes." A summary of a meeting dated February 20, 2024, said the CEO believed the "narrative should be framed around ... general principles of choice and non-censorship," that Meta should be "less restrictive than proposed," and that he wanted to "allow adults to engage in racier conversation on topics like sex." Meta spokesman Stone said the documents don't support New Mexico's case. "Even these select documents clearly show Mark Zuckerberg giving the direction that explicit AIs shouldn't be available to younger users and that adults shouldn't be able to create under 18 AIs for romantic purposes." Messages between two employees from March of 2024 state that Zuckerberg had rejected creating parental controls for the chatbots, and that staffers were working on "Romance AI chatbots" that would be allowed for users under the age of 18. We "pushed hard for parental controls to turn GenAI off - but GenAI leadership pushed back stating Mark decision," one employee wrote in that exchange. 
Nick Clegg, who was Meta's head of global policy until early 2025, said in an email included in the court documents he thought Meta's approach to sexualized AI companions was unwise. Expressing concern that sexual interactions could be the dominant use case for Meta's AI companions by teenage users, Clegg said: "Is that really what we want these products to be known for (never mind the inevitable societal backlash which would ensue)?" Clegg did not respond to a request for comment. Meta's AI chatbot policies eventually came to light, prompting a backlash in the U.S. Congress and elsewhere. A Wall Street Journal article in April 2025 found that Meta's chatbots included overtly sexualized underage characters and that they engaged in all-ages sexual roleplay, including graphic descriptions of prepubescent bodies. Reuters reported in August that Meta's official guidelines for chatbots stated that it is "acceptable to engage a child in conversations that are romantic or sensual." In response to the report, Meta said it was changing its policies and that the internal document granting such approval had been in error. Meta last week said it removed teen access to AI companions entirely, pending creation of a new version of the chatbots.

[18]

Meta pauses teen access to AI characters

Meta is halting teens' access to artificial intelligence characters, at least temporarily, the company said in a blog post Friday. Meta Platforms Inc., which owns Instagram and WhatsApp, said that starting in the "coming weeks," teens will no longer be able to access AI characters "until the updated experience is ready." This applies to anyone who gave Meta a birthday that makes them a minor, as well as "people who claim to be adults but who we suspect are teens based on our age prediction technology." The move comes the week before Meta - along with TikTok and Google's YouTube - is scheduled to stand trial in Los Angeles over its apps' harms to children. Teens will still be able to access Meta's AI assistant, just not the characters. Other companies have also banned teens from AI chatbots amid growing concerns about the effects of artificial intelligence conversations on children. Character.AI announced its ban last fall. That company is facing several lawsuits over child safety, including by the mother of a teenager who says the company's chatbots pushed her teenage son to kill himself.

[19]

Meta Under Fire Over AI Chatbots and Child Safety Failures

Meta Faces Legal Heat After Court Files Reveal AI Chatbot Risks for Minors

Meta is once again under fire after newly unsealed court filings revealed internal disagreements over allowing minors to interact with AI companions. The documents, made public this week, are part of a lawsuit filed by New Mexico Attorney General Raul Torrez, which accuses Meta of exposing children to inappropriate AI-generated interactions on its platforms. The lawsuit alleges that Meta's leadership, including Mark Zuckerberg, approved policies that didn't focus on protecting minors. Most concerning, this happened even after internal safety teams issued several warnings about the existing policies.

[20]

Meta pauses teen access to AI characters weeks before trial over...

Meta is halting teens' access to artificial intelligence characters, at least temporarily, the company said in a blog post Friday. Meta Platforms Inc., which owns Instagram and WhatsApp, said that starting in the "coming weeks," teens will no longer be able to access AI characters "until the updated experience is ready." This applies to anyone who gave Meta a birthday that makes them a minor, as well as "people who claim to be adults but who we suspect are teens based on our age prediction technology." The move comes the week before Meta -- along with TikTok and Google's YouTube -- is scheduled to stand trial in Los Angeles over its apps' harms to children. Teens will still be able to access Meta's AI assistant, just not the characters. Other companies have also banned teens from AI chatbots amid growing concerns about the effects of artificial intelligence conversations on children. Character.AI announced its ban last fall. That company is facing several lawsuits over child safety, including by the mother of a teenager who says the company's chatbots pushed her teenage son to kill himself.

[21]

Safety First: Meta Halts Teen AI Interactions Across Platforms

Safety Before Scale: Meta Suspends Teen Access to AI Characters Across Instagram and Facebook

Meta has taken a significant step to strengthen online safety for minors. In a recent official announcement, the company said it is halting teenagers' access to AI-powered characters across its social media platforms. The move applies across Instagram, Facebook, and Messenger, where AI characters have been introduced as conversational companions. Meta's general AI assistant will remain unchanged, with safeguards in place, but teens will no longer be able to interact with personalized AI personas until a revised, safer experience is launched. The decision highlights Meta's attempt to respond to the most frequently raised concerns about child safety and the regulation of AI agents.

[22]

Meta CEO Zuckerberg blocked curbs on sex-talking chatbots for minors, court filing alleges

Jan 27 (Reuters) - Meta Chief Executive Mark Zuckerberg approved allowing minors to access AI chatbot companions that safety staffers warned were capable of sexual interactions, according to internal Meta documents filed in a New Mexico state court case and made public Monday. The lawsuit - brought by the state's attorney general, Raul Torrez, and scheduled for trial next month - alleges that Meta "failed to stem the tide of damaging sexual material and sexual propositions delivered to children" on Facebook and Instagram. The filing on Monday included internal Meta employee emails and messages obtained by the New Mexico Attorney General's Office through legal discovery. The state alleges they show that "Meta, driven by Zuckerberg, rejected the recommendations of its integrity staff and declined to impose reasonable guardrails to prevent children from being subject to sexually exploitative conversations with its AI chatbots," the attorney general said in the filing. In the communications, some of Meta's safety staff expressed objections that the company was building chatbots geared for companionship, including sexual and romantic interactions with users. The artificial intelligence chatbots were released in early 2024. The documents cited in the state's filing Monday don't include messages or memos authored by Zuckerberg. Meta spokesman Andy Stone on Monday said the state's portrayal was inaccurate and relied on selective information. "This is yet another example of the New Mexico Attorney General cherry-picking documents to paint a flawed and inaccurate picture." Messages in the filing showed safety staff had special concern about the bots being used for romantic scenarios between adults and minors under the age of 18, referred to as "U18s." "I don't believe that creating and marketing a product that creates U18 romantic AI's for adults is advisable or defensible," wrote Ravi Sinha, head of Meta's child safety policy, in January 2024. 
In reply, Meta global safety head Antigone Davis agreed that safety staff should push to block adults from creating underage romantic companions because "it sexualizes minors." Sinha and Davis did not respond to requests for comment. According to one February 2024 message, a Meta employee whose name was redacted relayed that Zuckerberg believed that AI companions should be blocked from engaging in sexually "explicit" conversations with at least younger teens and that adults should not be able to interact with "U18 AIs for romance purposes." A summary of a meeting dated February 20, 2024, said the CEO believed the "narrative should be framed around ... general principles of choice and non-censorship," that Meta should be "less restrictive than proposed," and that he wanted to "allow adults to engage in racier conversation on topics like sex." Meta spokesman Stone said the documents don't support New Mexico's case. "Even these select documents clearly show Mark Zuckerberg giving the direction that explicit AIs shouldn't be available to younger users and that adults shouldn't be able to create under 18 AIs for romantic purposes." Messages between two employees from March of 2024 state that Zuckerberg had rejected creating parental controls for the chatbots, and that staffers were working on "Romance AI chatbots" that would be allowed for users under the age of 18. We "pushed hard for parental controls to turn GenAI off - but GenAI leadership pushed back stating Mark decision," one employee wrote in that exchange. Nick Clegg, who was Meta's head of global policy until early 2025, said in an email included in the court documents he thought Meta's approach to sexualized AI companions was unwise. Expressing concern that sexual interactions could be the dominant use case for Meta's AI companions by teenage users, Clegg said: "Is that really what we want these products to be known for (never mind the inevitable societal backlash which would ensue)?" 
Clegg did not respond to a request for comment. Meta's AI chatbot policies eventually came to light, prompting a backlash in the U.S. Congress and elsewhere. A Wall Street Journal article in April 2025 found that Meta's chatbots included overtly sexualized underage characters and that they engaged in all-ages sexual roleplay, including graphic descriptions of prepubescent bodies. Reuters reported in August that Meta's official guidelines for chatbots stated that it is "acceptable to engage a child in conversations that are romantic or sensual." In response to the report, Meta said it was changing its policies and that the internal document granting such approval had been in error. Meta last week said it removed teen access to AI companions entirely, pending creation of a new version of the chatbots. (Reporting by Jeff Horwitz in San Francisco; Editing by Cynthia Osterman and Michael Williams)

[23]

Meta halts teens' access to AI characters globally

Meta Platforms said on Friday it will suspend teenagers' access to its existing AI characters across all of its apps worldwide, as it builds an updated iteration of those for teen users. "Starting in the coming weeks, teens will no longer be able to access AI characters across our apps until the updated experience is ready," Meta said in an updated blog post on minors' protection. The new version of characters for teens will come with parental controls once it becomes available. In October, Meta previewed parental controls that allow parents to disable their teens' private chats with AI characters, adding another measure to make its social media platforms safe for minors after fierce criticism over the behavior of its flirty chatbots. The company on Friday said that these controls have not been launched yet. Meta had also said that its AI experiences for teens will be guided by the PG-13 movie rating system, as it looks to prevent minors from accessing inappropriate content. U.S. regulators have stepped up scrutiny of AI companies over the potential negative impacts of chatbots. In August, Reuters reported how Meta's AI rules allowed provocative conversations with minors.

[24]

Meta halts teens' access to AI characters globally

Jan 23 (Reuters) - Meta Platforms said on Friday it will suspend teenagers' access to its existing AI characters across all of its apps worldwide, as it builds an updated iteration of those for teen users. "Starting in the coming weeks, teens will no longer be able to access AI characters across our apps until the updated experience is ready," Meta said in an updated blog post on minors' protection. The new version of characters for teens will come with parental controls once it becomes available. In October, Meta previewed parental controls that allow parents to disable their teens' private chats with AI characters, adding another measure to make its social media platforms safe for minors after fierce criticism over the behavior of its flirty chatbots. The company on Friday said that these controls have not been launched yet. Meta had also said that its AI experiences for teens will be guided by the PG-13 movie rating system, as it looks to prevent minors from accessing inappropriate content. U.S. regulators have stepped up scrutiny of AI companies over the potential negative impacts of chatbots. In August, Reuters reported how Meta's AI rules allowed provocative conversations with minors. (Reporting by Juby Babu in Mexico City; editing by Alan Barona)

Meta has suspended teen access to AI characters across all its platforms while developing a new version with parental controls. The move comes as internal documents reveal CEO Mark Zuckerberg initially rejected safety recommendations and opposed parental controls, despite staff warnings about sexual interactions with minors. The company faces a New Mexico trial next month over allegations of insufficient protection of children.

Meta Suspends AI Characters for Teens Globally

Meta announced it is pausing teen access to AI characters across Facebook, Instagram, and WhatsApp, marking a significant shift in how the company manages AI interactions with younger users [1]. The change, set to take effect in the coming weeks, applies to anyone who has provided a teen birthday as well as users the company suspects are teens based on its age prediction technology [1]. Meta said it heard from parents wanting more insights and control over their teens' interactions with AI characters, which prompted the decision to develop an updated version with built-in parental controls [2].

The company emphasized that teens will still be able to access Meta's AI assistant with default, age-appropriate protections in place, but character-based roleplaying will be completely off the table [3]. When the new AI characters launch, they will provide age-appropriate content focused on topics like education, sports, and hobbies [1].

Court Filing Exposes Internal Safety Concerns

The timing of Meta's announcement coincides with explosive revelations from a New Mexico lawsuit scheduled for trial next month. Internal Meta documents obtained through legal discovery show that Mark Zuckerberg approved allowing minors to access AI chatbot companions despite safety staffers warning they were capable of sexual interactions [4]. The court filing alleges Meta "failed to stem the tide of damaging sexual material and sexual propositions delivered to children" on its platforms [4].

Messages between employees from March 2024 reveal that Zuckerberg rejected creating parental controls for the chatbots. One employee wrote they "pushed hard for parental controls to turn GenAI off - but GenAI leadership pushed back stating Mark decision" [5]. According to the court filing, a February 2024 meeting summary indicated the CEO believed the "narrative should be framed around ... general principles of choice and non-censorship" and wanted to "allow adults to engage in racier conversation on topics like sex" [4].

Safety Staff Raised Red Flags About Exploitation

Internal communications show Meta's child safety policy head Ravi Sinha wrote in January 2024: "I don't believe that creating and marketing a product that creates U18 romantic AI's for adults is advisable or defensible" [4]. Meta global safety head Antigone Davis agreed that safety staff should push to block adults from creating underage romantic companions because "it sexualizes minors" [4].

Nick Clegg, Meta's former head of global policy, expressed concern in an email that sexual interactions could become the dominant use case for AI chatbot companions among teenage users, asking: "Is that really what we want these products to be known for (never mind the inevitable societal backlash which would ensue)?" [4]. A Wall Street Journal investigation in April 2025 found that Meta's chatbots included overtly sexualized underage characters and engaged in all-ages sexual roleplay [4].

Industry-Wide Pressure to Implement Age-Based Restrictions

Meta isn't alone in facing scrutiny over AI interactions with minors. Character.AI enforced comparable restrictions in November, stopping teens from engaging in open-ended conversations with characters after facing lawsuits alleging the platform aided self-harm [3]. OpenAI rolled out tools to detect teens' real ages and curb minors' access to inappropriate content on ChatGPT [3].

Regulators are intensifying their focus on social media platforms and AI companies. In October 2025, four senators introduced the GUARD Act, a bipartisan bill to protect teens from harmful interactions with AI chatbots [3]. Senator Josh Hawley alleged that chatbots were developing "relationships with kids using fake empathy" and "encouraging suicide" [3]. Meta also faces a trial on social media addiction allegations, with Mark Zuckerberg expected to take the witness stand [1].

Summarized by Navi
