Mistral AI and NVIDIA Unveil Groundbreaking Enterprise AI Model: Mistral NeMo 12B

4 Sources


[1]

Mistral AI And Nvidia Unveil New Language Model: Mistral NeMo 12B

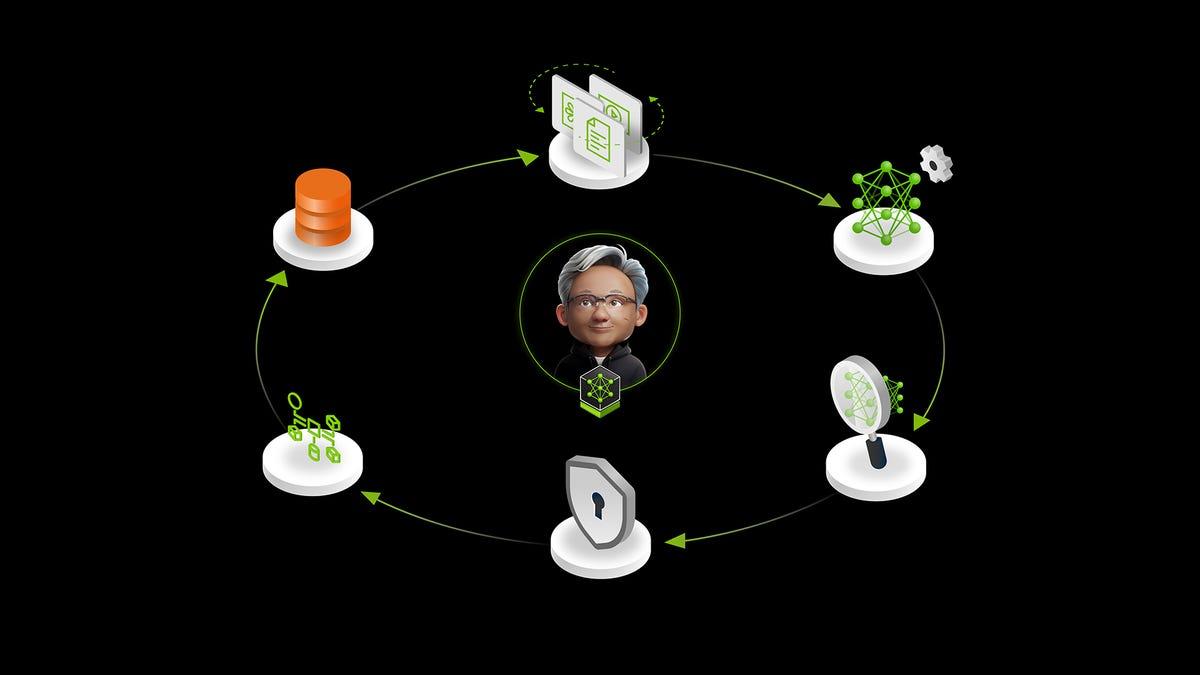

Mistral AI and NVIDIA launched Mistral NeMo 12B, a state-of-the-art language model for enterprise applications such as chatbots, multilingual tasks, coding, and summarization. The collaboration combines Mistral AI's training data expertise with NVIDIA's optimized hardware and software ecosystem, offering high performance across diverse applications. The partnership between Mistral AI and Nvidia was pivotal in bringing Mistral NeMo 12B to life. Leveraging NVIDIA's top-tier hardware and software, Mistral trained the model on the Nvidia DGX Cloud AI platform, which provides dedicated, scalable access to the latest Nvidia architecture. This synergy produced a model that handles context windows of up to 128,000 tokens and offers unprecedented accuracy in reasoning, world knowledge, and coding within its size category. Because it is built on a standard architecture, it can serve as a drop-in replacement in systems currently using the Mistral 7B model. The model excels at complex tasks such as multi-turn conversations, math, common-sense reasoning, world knowledge, and coding. Its open-source release under the Apache 2.0 license encourages widespread adoption, making advanced AI accessible to researchers and enterprises. Mistral NeMo 12B's versatility is evident in its support for a wide range of languages, including English, French, German, Spanish, Italian, Portuguese, Chinese, Japanese, Korean, Arabic, and Hindi.
This makes it a valuable tool for global enterprises that need robust multilingual capabilities. The model is packaged as an Nvidia NIM inference microservice, offering performance-optimized inference with TensorRT-LLM engines and allowing deployment anywhere in minutes. This containerized format adds flexibility and ease of use across applications. Available as part of Nvidia AI Enterprise, Mistral NeMo 12B also comes with comprehensive support from Nvidia, including direct access to Nvidia AI experts and defined service-level agreements, delivering consistent and reliable performance for enterprise users. While the model is available through Nvidia AI Enterprise, its availability is much broader, including on Hugging Face. Because Mistral released NeMo under the Apache 2.0 license, anyone interested can use the technology. As a small language model, Mistral NeMo is designed to fit in the memory of affordable accelerators such as Nvidia's L40S, GeForce RTX 4090, or RTX 4500 GPUs, offering high efficiency, low compute cost, and enhanced security and privacy. The AI market is one of the most competitive in technology, with giants like OpenAI, IBM, Anthropic, Cohere, and nearly every public cloud provider working to bring the value of generative AI into the enterprise. The line between competitor and partner is often blurry, as in Mistral's relationship with Microsoft, which has its own internal efforts and a deep relationship with OpenAI. This is a world that continues to evolve. Mistral AI performs very well in the AI model space, showing the necessary blend of technical competence and execution, and its execution is impressive: in July alone, Mistral released its NeMo model with Nvidia, its Codestral Mamba model for code generation, and Mathstral for math reasoning and scientific discovery.
It has strong relationships with companies like Nvidia, Microsoft, Google Cloud, and Hugging Face, yet faces equally fierce competition. Mistral AI was founded with the mission to push the boundaries of AI capabilities, and thanks to its innovative approaches and strategic partnerships, the company quickly established itself as a leader in the field. The release of Mistral NeMo 12B carries that momentum forward.
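The claim that a 12-billion-parameter model fits on a single L40S, RTX 4090 or RTX 4500 can be sanity-checked with simple arithmetic. The sketch below is a rough, weights-only estimate, assuming 1 byte per parameter for FP8 and 2 for BF16, decimal gigabytes, and published memory sizes of 48 GB for the L40S and 24 GB for the GeForce RTX 4090 and RTX 4500 Ada. The 10% runtime reserve (`RESERVED_FRACTION`) is a hypothetical figure, not a vendor number, and the function names are illustrative only.

```python
# Weights-only memory estimate for a 12-billion-parameter model.
# Assumptions: decimal gigabytes (1 GB = 1e9 bytes); KV cache, activations and
# runtime overhead are ignored apart from a hypothetical reserved fraction.

PARAMS = 12e9  # 12 billion parameters, as stated in the sources

BYTES_PER_PARAM = {"fp8": 1, "bf16": 2, "fp32": 4}

GPU_MEMORY_GB = {"L40S": 48, "GeForce RTX 4090": 24, "RTX 4500 Ada": 24}

RESERVED_FRACTION = 0.10  # hypothetical headroom for the runtime, not a vendor figure


def weight_memory_gb(params: float, dtype: str) -> float:
    """Gigabytes needed just to hold the weights in the given precision."""
    return params * BYTES_PER_PARAM[dtype] / 1e9


def fits(params: float, dtype: str, gpu: str) -> bool:
    """True if the weights fit in the GPU's memory after the reserved headroom."""
    usable = GPU_MEMORY_GB[gpu] * (1 - RESERVED_FRACTION)
    return weight_memory_gb(params, dtype) <= usable


if __name__ == "__main__":
    for dtype in BYTES_PER_PARAM:
        verdicts = ", ".join(
            f"{gpu}: {'fits' if fits(PARAMS, dtype, gpu) else 'too big'}"
            for gpu in GPU_MEMORY_GB
        )
        print(f"{dtype}: {weight_memory_gb(PARAMS, dtype):.0f} GB -> {verdicts}")
```

Under these assumptions, FP8 weights (about 12 GB) fit on all three cards, while BF16 weights (about 24 GB) fit only on the 48 GB L40S, which is consistent with the emphasis on FP8 inference in the NVIDIA release.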

[2]

NVIDIA: Mistral AI and NVIDIA Unveil Mistral NeMo 12B, a Cutting-Edge Enterprise AI Model

Mistral AI and NVIDIA today released a new state-of-the-art language model, Mistral NeMo 12B, that developers can easily customize and deploy for enterprise applications supporting chatbots, multilingual tasks, coding and summarization. By combining Mistral AI's expertise in training data with NVIDIA's optimized hardware and software ecosystem, the Mistral NeMo model offers high performance for diverse applications. "We are fortunate to collaborate with the NVIDIA team, leveraging their top-tier hardware and software," said Guillaume Lample, cofounder and chief scientist of Mistral AI. "Together, we have developed a model with unprecedented accuracy, flexibility, high-efficiency and enterprise-grade support and security thanks to NVIDIA AI Enterprise deployment." Mistral NeMo was trained on the NVIDIA DGX Cloud AI platform, which offers dedicated, scalable access to the latest NVIDIA architecture. NVIDIA TensorRT-LLM for accelerated inference performance on large language models and the NVIDIA NeMo development platform for building custom generative AI models were also used to advance and optimize the process. This collaboration underscores NVIDIA's commitment to supporting the model-builder ecosystem.

Delivering Unprecedented Accuracy, Flexibility and Efficiency

Excelling in multi-turn conversations, math, common sense reasoning, world knowledge and coding, this enterprise-grade AI model delivers precise, reliable performance across diverse tasks. With a 128K context length, Mistral NeMo processes extensive and complex information more coherently and accurately, ensuring contextually relevant outputs. Released under the Apache 2.0 license, which fosters innovation and supports the broader AI community, Mistral NeMo is a 12-billion-parameter model. Additionally, the model uses the FP8 data format for model inference, which reduces memory size and speeds deployment without any degradation to accuracy.
Together, these features help the model handle diverse scenarios effectively, making it well suited to enterprise use cases. Mistral NeMo comes packaged as an NVIDIA NIM inference microservice, offering performance-optimized inference with NVIDIA TensorRT-LLM engines. This containerized format allows models to be deployed anywhere in minutes rather than several days, providing enhanced flexibility for various applications. NIM features enterprise-grade software that's part of NVIDIA AI Enterprise, with dedicated feature branches, rigorous validation processes, and enterprise-grade security and support. It includes comprehensive support, direct access to an NVIDIA AI expert and defined service-level agreements, delivering reliable and consistent performance. The open model license allows enterprises to integrate Mistral NeMo into commercial applications seamlessly. Designed to fit in the memory of a single NVIDIA L40S, NVIDIA GeForce RTX 4090 or NVIDIA RTX 4500 GPU, the Mistral NeMo NIM offers high efficiency, low compute cost, and enhanced security and privacy.

Advanced Model Development and Customization

The combined expertise of Mistral AI and NVIDIA engineers has optimized training and inference for Mistral NeMo. Trained with Mistral AI's expertise, especially on multilinguality, code and multi-turn content, the model benefits from accelerated training on NVIDIA's full stack. It's designed for optimal performance, utilizing efficient model parallelism techniques, scalability and mixed precision with Megatron-LM. The model was trained using Megatron-LM, part of NVIDIA NeMo, with 3,072 H100 80GB Tensor Core GPUs on DGX Cloud, composed of NVIDIA AI architecture, including accelerated computing, network fabric and software to increase training efficiency.
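The release credits Megatron-LM's model parallelism for efficient training across 3,072 H100 GPUs. The following is a simplified, single-process sketch of one such technique, tensor parallelism of a linear layer, in plain Python: lists of shards stand in for GPUs, list concatenation stands in for an all-gather, and summation stands in for an all-reduce. It illustrates the idea only; it is not Megatron-LM's code or API, and the function names are hypothetical.

```python
# Single-process sketch of tensor parallelism for Y = X @ W.
# Each list of shards stands in for separate GPUs.

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix multiply: (n x k) @ (k x m) -> (n x m)."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def split_columns(m: Matrix, parts: int) -> list[Matrix]:
    """Split a matrix into `parts` equal column blocks."""
    width = len(m[0]) // parts
    assert width * parts == len(m[0]), "columns must divide evenly"
    return [[row[p * width:(p + 1) * width] for row in m] for p in range(parts)]


def split_rows(m: Matrix, parts: int) -> list[Matrix]:
    """Split a matrix into `parts` equal row blocks."""
    height = len(m) // parts
    assert height * parts == len(m), "rows must divide evenly"
    return [m[p * height:(p + 1) * height] for p in range(parts)]


def column_parallel(x: Matrix, w: Matrix, parts: int) -> Matrix:
    """Each 'device' holds a column block of W and computes X @ W_p.
    Concatenating the partial outputs (an all-gather) yields X @ W."""
    partials = [matmul(x, w_p) for w_p in split_columns(w, parts)]
    return [sum((p[i] for p in partials), []) for i in range(len(x))]


def row_parallel(x: Matrix, w: Matrix, parts: int) -> Matrix:
    """Each 'device' holds a row block of W and the matching column block of X.
    Summing the partial products (an all-reduce) yields X @ W."""
    shards = zip(split_columns(x, parts), split_rows(w, parts))
    partials = [matmul(x_p, w_p) for x_p, w_p in shards]
    return [
        [sum(p[i][j] for p in partials) for j in range(len(w[0]))]
        for i in range(len(x))
    ]


if __name__ == "__main__":
    x = [[1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0]]
    w = [[1.0, 0.0, 2.0, 1.0], [0.0, 1.0, 1.0, 0.0],
         [3.0, 1.0, 0.0, 2.0], [1.0, 2.0, 1.0, 1.0]]
    reference = matmul(x, w)
    print(column_parallel(x, w, 2) == reference)  # True
    print(row_parallel(x, w, 2) == reference)     # True
```

In Megatron-LM's published design, a transformer MLP block pairs a column-parallel layer with a row-parallel one, so the intermediate activations stay sharded and only one all-reduce is needed per block.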
Availability and Deployment

With the flexibility to run anywhere (cloud, data center or RTX workstation), Mistral NeMo is ready to revolutionize AI applications across various platforms.
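A 128K context length and single-GPU deployment pull against each other, because the attention key/value (KV) cache grows linearly with the number of tokens held in context. The sketch below estimates that cache for one sequence. The layer count, KV-head count and head dimension are assumptions believed to match Mistral NeMo's publicly posted configuration (40 layers, 8 KV heads, head dimension 128); check them against the model card before relying on the numbers. The function name is illustrative.

```python
# Per-sequence KV-cache estimate. ASSUMED architecture values (believed to
# match Mistral NeMo's published config; verify before relying on them).
NUM_LAYERS = 40
NUM_KV_HEADS = 8   # grouped-query attention: keys/values use fewer heads than queries
HEAD_DIM = 128


def kv_cache_bytes(tokens: int, bytes_per_value: int = 2) -> int:
    """Bytes of keys plus values cached for `tokens` positions of one sequence.

    The leading 2 counts keys and values; bytes_per_value=2 models a 16-bit
    cache and bytes_per_value=1 an 8-bit cache.
    """
    per_token = 2 * NUM_LAYERS * NUM_KV_HEADS * HEAD_DIM * bytes_per_value
    return tokens * per_token


if __name__ == "__main__":
    for label, width in (("16-bit", 2), ("8-bit", 1)):
        gb = kv_cache_bytes(128_000, width) / 1e9
        print(f"{label} cache at 128,000 tokens: {gb:.1f} GB")
```

Under these assumptions, a full 128,000-token context adds roughly 21 GB with a 16-bit cache or about 10.5 GB with an 8-bit cache, on top of the weights. Next to roughly 12 GB of FP8 weights, the 16-bit case would exceed a 24 GB card, while the 8-bit case would leave little headroom.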

[3]

Mistral NeMo: Analyzing Nvidia's Broad Model Support

The promise of AI in the enterprise is huge -- as in, unprecedentedly huge. The speed at which a company can get from concept to value with AI is unmatched. This is why, despite its perceived costs and complexity, AI and especially generative AI are a top priority for virtually every organization. It's also why the market has witnessed AI companies emerge from everywhere in an attempt to deliver easy AI solutions that can meet the needs of businesses, both large and small, in their efforts to maximize AI's potential. In this spirit of operationalizing AI, tech giant Nvidia has focused on delivering an end-to-end experience by addressing this potential along the vectors of cost, complexity and time to implementation. For obvious reasons, Nvidia is thought of as a semiconductor company, but in this context it's important to understand that its dominant position in AI also relies on its deep expertise in the software needed to implement AI. Nvidia NeMo is the company's response to these challenges; it's a platform that enables developers to quickly bring data and large language models together and into the enterprise. As part of enabling the AI ecosystem, Nvidia has just announced a partnership with Mistral AI, a popular LLM provider, to introduce the Mistral NeMo language model. What is this partnership, and how does it benefit enterprise IT? I'll unpack these questions and more in this article. As part of the Nvidia-Mistral partnership, the companies worked together to train and deliver Mistral NeMo, a 12-billion-parameter language model in an FP8 data format for accuracy, performance and portability. This low-precision format is extremely useful in that it enables Mistral NeMo to fit into the memory of a single Nvidia GPU. Further, the FP8 format is critical to using the Mistral NeMo language model across various use cases in the enterprise.
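To make the FP8 discussion concrete, here is a minimal sketch of rounding values to E4M3, one of the two common FP8 layouts (4 exponent bits, 3 mantissa bits, largest finite value 448), combined with a simple per-tensor scale. The sources do not say which FP8 variant or scaling recipe Mistral NeMo uses, so treat this as a generic illustration of the format rather than the companies' method; the function names are hypothetical.

```python
import math

E4M3_MAX = 448.0       # largest finite E4M3 value (1.75 * 2**8)
E4M3_MIN_EXP = -6      # exponent of the smallest normal E4M3 value (2**-6)
MANTISSA_BITS = 3


def round_to_e4m3(x: float) -> float:
    """Round x to the nearest E4M3 value, ties to even, saturating at +/-448."""
    if x == 0.0 or math.isnan(x):
        return x
    sign = -1.0 if x < 0 else 1.0
    a = min(abs(x), E4M3_MAX)
    _, exp = math.frexp(a)               # a = m * 2**exp with 0.5 <= m < 1
    e = max(exp - 1, E4M3_MIN_EXP)       # binade exponent; subnormals reuse the minimum
    step = 2.0 ** (e - MANTISSA_BITS)    # gap between neighbouring E4M3 values here
    q = round(a / step) * step           # built-in round() breaks ties to even
    return sign * min(q, E4M3_MAX)


def quantize_dequantize(values: list[float]) -> tuple[list[float], float]:
    """Scale a tensor so its largest magnitude maps to 448, round every element
    to E4M3, then undo the scale. Returns the reconstructed values and the scale."""
    amax = max(abs(v) for v in values)
    scale = E4M3_MAX / amax if amax > 0 else 1.0
    return [round_to_e4m3(v * scale) / scale for v in values], scale


if __name__ == "__main__":
    print(round_to_e4m3(0.3))      # 0.3125
    print(round_to_e4m3(1000.0))   # 448.0 (saturated)
    print(quantize_dequantize([0.1, -0.5, 2.0]))
```

With only three mantissa bits, each normal value is kept to within about 6% relative error, which is why a per-tensor scale that uses the full range matters.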
Mistral NeMo features a 128,000-token context length, which enables a greater level of coherency, contextualization and accuracy. Consider a chatbot that provides online service. The 128,000-token length enables a longer, more complete interaction between customer and company. Or imagine an in-house security application that manages access to application data based on a user's privileged access control. Mistral NeMo's context length lets such an application take in the complete relevant dataset at once rather than in fragments. The 12-billion-parameter size is worth noting as it speaks to something critical to many IT organizations: data locality. While enterprise organizations require the power of AI and GenAI to drive business operations, several considerations including cost, performance, risk and regulatory constraints prevent them from doing this in the cloud. These considerations are why most enterprise data sits on-premises even decades after the cloud has been embraced. Many organizations prefer a deployment scenario that involves training a model with company data and then inferencing across the enterprise. Mistral NeMo's size enables this without substantial infrastructure costs (a 12-billion-parameter model can run on a well-equipped laptop). Combined with its FP8 format, this model size enables Mistral NeMo to run anywhere in the enterprise -- from an access control point to the edge. I believe this portability and scalability will make the model quite attractive to many organizations. Mistral NeMo was trained on the Nvidia DGX Cloud AI platform, utilizing Megatron-LM running on 3,072 of Nvidia's H100 80GB Tensor Core GPUs. Megatron-LM, part of the NeMo platform, is a framework that implements advanced model parallelism techniques for scaling large language models. It reduces training times by splitting computations across GPUs. Beyond speeding up training, Megatron-LM helps produce models optimized for performance, accuracy and scalability.
This is important when considering the broad use of this LLM within an organization in terms of function, language and deployment model. When it comes to AI, the real value is realized in inferencing -- in other words, where AI is operationalized in the business. This could be through a chatbot that can seamlessly and accurately support customers from around the globe in real time. Or it could be through a security mechanism that understands a healthcare worker's privileged access level and allows them to see only the patient data that is relevant to their function. To serve these needs, Mistral NeMo has been designed to be enterprise-ready out of the box, so organizations can deploy it more easily and more quickly. The Mistral and Nvidia teams used Nvidia TensorRT-LLM to optimize Mistral NeMo for real-time inferencing and maximize its performance. While it may seem obvious, the collaborative focus on ensuring the best, most scalable performance across any deployment scenario speaks to the understanding both companies seem to have of enterprise deployments: Mistral NeMo will be deployed across servers, workstations, edge devices and even client devices to leverage AI fully. In any AI deployment like this, models tuned with company data have to meet stringent requirements around scalable performance, and this is precisely what Mistral NeMo is designed to do. In line with this, Mistral NeMo is packaged as an Nvidia NIM inference microservice, which makes it straightforward to deploy AI models on any Nvidia-accelerated computing platform. I started this analysis by noting the enterprise AI challenges of cost and complexity. Security is also an ever-present challenge for enterprises, and AI can create another attack vector that organizations must defend. With these noted, I see some obvious benefits that Mistral NeMo and NeMo as a framework can deliver for organizations.
As an ex-IT executive, I understand the challenge of adopting new technologies or aligning with technology trends. It is costly and complex and usually exposes a skills gap within an organization. As an analyst who speaks with many former colleagues and clients on a daily basis, I believe that AI is perhaps the biggest technology challenge enterprise IT organizations have ever faced. Through partnerships like the one with Mistral, Nvidia continues to build its AI support by making AI frictionless for any organization, whether it's a large government agency or a tiny start-up looking to create differentiated solutions. This is demonstrated by what the company has done in terms of enabling the AI ecosystem, from hardware to tools to frameworks to software. The collaboration between Nvidia and Mistral AI is significant. Mistral NeMo can become a critical element of an enterprise's AI strategy because of its scalability, cost and ease of integration into the enterprise workflows and applications that are critical for transformation. While I expect this partnership to deliver real value to organizations of all sizes, I'll especially keep an eye on the adoption of Mistral NeMo across the small-enterprise market segment, where I believe the AI opportunity and challenge are perhaps the greatest.
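The analysis notes that Mistral NeMo ships as a NIM inference microservice. NIM containers for LLMs expose an OpenAI-compatible HTTP API, so a basic client needs only the Python standard library. In the sketch below, the URL (a local container on port 8000) and the model identifier are placeholders, not confirmed values; check the container's documentation, or query its `/v1/models` endpoint, for the real ones. `build_chat_request` and `send_chat_request` are hypothetical helper names.

```python
import json
import urllib.request

# ASSUMPTIONS: a NIM container listening locally on port 8000 and a placeholder
# model identifier. Verify both before use.
NIM_URL = "http://localhost:8000/v1/chat/completions"
MODEL_ID = "mistral-nemo-12b-instruct"  # hypothetical placeholder


def build_chat_request(user_message: str, max_tokens: int = 256,
                       temperature: float = 0.3) -> dict:
    """Assemble an OpenAI-style chat-completions payload."""
    return {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": user_message}],
        "max_tokens": max_tokens,
        "temperature": temperature,
    }


def send_chat_request(payload: dict, url: str = NIM_URL,
                      timeout: float = 60.0) -> str:
    """POST the payload as JSON and return the first choice's message text."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With a container actually running, `send_chat_request(build_chat_request("Summarize our refund policy in two sentences."))` would return the model's reply. Keeping the payload builder separate from the network call lets it be tested offline.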

[4]

Nvidia and Mistral's new model 'Mistral-NeMo' brings enterprise-grade AI to desktop computers

Nvidia and French startup Mistral AI jointly announced today the release of a new language model designed to bring powerful AI capabilities directly to business desktops. The model, named Mistral-NeMo, boasts 12 billion parameters and an expansive 128,000-token context window, positioning it as a formidable tool for businesses seeking to implement AI solutions without the need for extensive cloud resources. Bryan Catanzaro, vice president of applied deep learning research at Nvidia, emphasized the model's accessibility and efficiency in a recent interview with VentureBeat. "We're launching a model that we jointly trained with Mistral. It's a 12 billion parameter model, and we're launching it under Apache 2.0," he said. "We're really excited about the accuracy of this model across a lot of tasks." The collaboration between Nvidia, a titan in GPU manufacturing and AI hardware, and Mistral AI, a rising star in the European AI scene, represents a significant shift in the AI industry's approach to enterprise solutions. By focusing on a more compact yet powerful model, the partnership aims to democratize access to advanced AI capabilities.

A David among Goliaths: How smaller models are changing the game

Catanzaro elaborated on the advantages of smaller models. "The smaller models are just dramatically more accessible," he said. "They're easier to run, the business model can be different, because people can run them on their own systems at home. In fact, this model can run on RTX GPUs that many people have already." This development comes at a crucial time in the AI industry. While much attention has been focused on massive models like OpenAI's GPT-4o, which is widely believed to have hundreds of billions of parameters, there's growing interest in more efficient models that can run locally on business hardware.
This shift is driven by concerns over data privacy, the need for lower latency, and the desire for more cost-effective AI solutions. Mistral-NeMo's 128,000 token context window is a standout feature, allowing the model to process and understand much larger chunks of text than many of its competitors. "We think that long context capabilities can be important for a lot of applications," Catanzaro said. "If they can avoid the fine-tuning stuff, that makes them a lot simpler to deploy."

The long and short of it: Why context matters in AI

This extended context window could prove particularly valuable for businesses dealing with lengthy documents, complex analyses, or intricate coding tasks. It potentially eliminates the need for frequent context refreshing, leading to more coherent and consistent outputs. The model's efficiency and local deployment capabilities could attract businesses operating in environments with limited internet connectivity or those with stringent data privacy requirements. However, Catanzaro clarified the model's intended use case. "I would think more about laptops and desktop PCs than smartphones," he said. This positioning suggests that while Mistral-NeMo brings AI closer to individual business users, it's not yet at the point of mobile deployment. Industry analysts suggest this release could significantly disrupt the AI software market. The introduction of Mistral-NeMo represents a potential shift in enterprise AI deployment. By offering a model that can run efficiently on local hardware, Nvidia and Mistral AI are addressing concerns that have hindered widespread AI adoption in many businesses, such as data privacy, latency, and the high costs associated with cloud-based solutions. This move could potentially level the playing field, allowing smaller businesses with limited resources to leverage AI capabilities that were previously only accessible to larger corporations with substantial IT budgets.
However, the true impact of this development will depend on the model's performance in real-world applications and the ecosystem of tools and support that develops around it. The model is immediately available as an NVIDIA NIM inference microservice via Nvidia's AI platform, with a downloadable version promised in the near future. Its release under the Apache 2.0 license allows for commercial use, which could accelerate its adoption in enterprise settings.

Democratizing AI: The race to bring intelligence to every desktop

As businesses across industries continue to grapple with the integration of AI into their operations, models like Mistral-NeMo represent a growing trend towards more efficient, deployable AI solutions. Whether this will challenge the dominance of larger, cloud-based models remains to be seen, but it undoubtedly opens new possibilities for AI integration in enterprise environments. Catanzaro concluded the interview with a forward-looking statement. "We believe that this model represents a significant step towards making AI more accessible and practical for businesses of all sizes," he said. "It's not just about the power of the model, but about putting that power directly into the hands of the people who can use it to drive innovation and efficiency in their day-to-day operations." As the AI landscape continues to evolve, the release of Mistral-NeMo marks an important milestone in the journey towards more accessible, efficient, and powerful AI tools for businesses. It remains to be seen how this will impact the broader AI ecosystem, but one thing is clear: the race to bring AI capabilities closer to end-users is heating up, and Nvidia and Mistral AI have just made a bold move in that direction.
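A 128,000-token window is large, but a long-running chat application still needs a policy for when its history outgrows the window. The minimal sketch below keeps the newest conversation turns that fit alongside a system prompt and a reserved output budget. It assumes a hypothetical four-characters-per-token heuristic in place of the model's real tokenizer, and `fit_history` and `estimate_tokens` are illustrative names, not part of any NVIDIA or Mistral API.

```python
# Keep a chat history inside a fixed context budget by dropping the oldest
# turns first. ASSUMPTION: token counts come from a crude, hypothetical
# characters-per-token heuristic; a real deployment would use the model's tokenizer.

CONTEXT_WINDOW = 128_000   # tokens, as stated in the sources
CHARS_PER_TOKEN = 4        # hypothetical heuristic, not a property of the model


def estimate_tokens(text: str) -> int:
    """Rough token estimate: ceiling of characters / CHARS_PER_TOKEN."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fit_history(system_prompt: str, turns: list[str],
                reserve_for_output: int = 1_024,
                window: int = CONTEXT_WINDOW) -> list[str]:
    """Return the most recent turns that fit alongside the system prompt and a
    reserved output budget, preserving their original order."""
    budget = window - reserve_for_output - estimate_tokens(system_prompt)
    if budget <= 0:
        return []
    kept: list[str] = []
    for turn in reversed(turns):      # walk from newest to oldest
        cost = estimate_tokens(turn)
        if cost > budget:
            break                     # stop at the first turn that no longer fits
        kept.append(turn)
        budget -= cost
    kept.reverse()                    # restore chronological order
    return kept
```

Dropping the oldest turns is the simplest policy; an application could instead summarize older turns into a shorter note, at the cost of an extra model call.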

Mistral AI and NVIDIA have jointly announced Mistral NeMo 12B, a new language model designed for enterprise use. This collaboration marks a significant advancement in AI technology, offering improved performance and accessibility for businesses.

A New Era in Enterprise AI

In a groundbreaking collaboration, Mistral AI and NVIDIA have unveiled Mistral NeMo 12B, a cutting-edge language model tailored for enterprise applications. The joint release marks a significant milestone in the AI industry and promises to change how businesses leverage artificial intelligence [1].

Technical Specifications and Performance

Mistral NeMo 12B features 12 billion parameters and a 128,000-token context window, and it uses the FP8 data format for inference. It was trained with Megatron-LM, part of NVIDIA NeMo, on 3,072 H100 80GB Tensor Core GPUs on DGX Cloud, and NVIDIA reports strong performance in multi-turn conversations, math, common-sense reasoning, world knowledge and coding [2].

Accessibility and Deployment

One of the most striking features of Mistral NeMo 12B is its accessibility. The model is designed to fit in the memory of a single NVIDIA L40S, GeForce RTX 4090 or RTX 4500 GPU, making it feasible to run on desktop computers and workstations. This brings enterprise-grade AI capabilities to a wider range of businesses, potentially democratizing access to advanced AI technologies [4].

NVIDIA's Broad Model Support

The introduction of Mistral NeMo 12B aligns with NVIDIA's strategy of supporting a diverse range of AI models. This approach allows NVIDIA to cater to various customer needs and use cases, from large-scale enterprise deployments to more specialized applications. By partnering with Mistral AI, NVIDIA continues to strengthen its position in the AI hardware and software ecosystem [3].

Implications for the AI Industry

The collaboration between Mistral AI and NVIDIA signifies a growing trend of partnerships between AI model developers and hardware manufacturers. This synergy is likely to accelerate innovation in the field, leading to more efficient and powerful AI solutions for businesses across various sectors.

Future Prospects and Challenges

As Mistral NeMo 12B enters the market, it faces competition from established players like OpenAI's GPT models and Google's Gemini models. However, its focus on enterprise applications and efficiency could carve out a significant niche. The success of this model could influence future developments in AI, particularly in balancing performance with resource efficiency.

Ethical Considerations and Responsible AI

With the increasing accessibility of powerful AI models, questions of ethical use and responsible AI deployment become more pressing. As Mistral NeMo 12B makes its way into various enterprises, it will be crucial for both Mistral AI and NVIDIA to provide guidelines and support for ethical AI practices [1].

References

Summarized by Navi
