MIT Researchers Develop "Periodic Table of Machine Learning" to Fuel AI Innovation

2 Sources

[1]

"Periodic table of machine learning" could fuel AI discovery

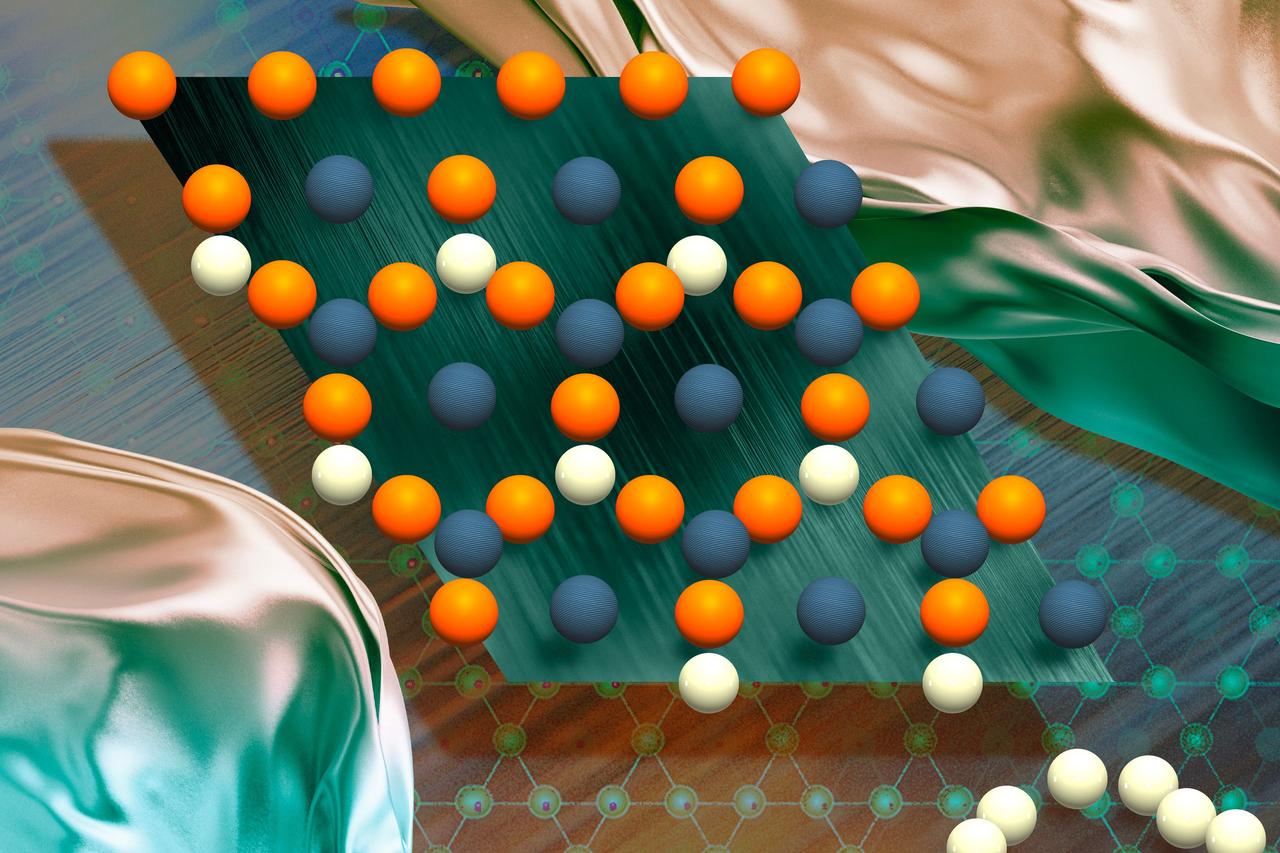

Caption: MIT researchers created a periodic table of machine learning that shows how more than 20 classical algorithms are connected. The new framework sheds light on how scientists could fuse strategies from different methods to improve existing AI models or come up with new ones.

MIT researchers have created a periodic table that shows how more than 20 classical machine-learning algorithms are connected. The new framework sheds light on how scientists could fuse strategies from different methods to improve existing AI models or come up with new ones. For instance, the researchers used their framework to combine elements of two different algorithms to create a new image-classification algorithm that performed 8 percent better than current state-of-the-art approaches.

The periodic table stems from one key idea: All these algorithms learn a specific kind of relationship between data points. While each algorithm may accomplish that in a slightly different way, the core mathematics behind each approach is the same.

Building on these insights, the researchers identified a unifying equation that underlies many classical AI algorithms. They used that equation to reframe popular methods and arrange them into a table, categorizing each based on the approximate relationships it learns.

Just like the periodic table of chemical elements, which initially contained blank squares that were later filled in by scientists, the periodic table of machine learning also has empty spaces. These spaces predict where algorithms should exist, but which haven't been discovered yet.

The table gives researchers a toolkit to design new algorithms without the need to rediscover ideas from prior approaches, says Shaden Alshammari, an MIT graduate student and lead author of a paper on this new framework.

"It's not just a metaphor," adds Alshammari. "We're starting to see machine learning as a system with structure that is a space we can explore rather than just guess our way through."

She is joined on the paper by John Hershey, a researcher at Google AI Perception; Axel Feldmann, an MIT graduate student; William Freeman, the Thomas and Gerd Perkins Professor of Electrical Engineering and Computer Science and a member of the Computer Science and Artificial Intelligence Laboratory (CSAIL); and senior author Mark Hamilton, an MIT graduate student and senior engineering manager at Microsoft. The research will be presented at the International Conference on Learning Representations.

An accidental equation

The researchers didn't set out to create a periodic table of machine learning. After joining the Freeman Lab, Alshammari began studying clustering, a machine-learning technique that classifies images by learning to organize similar images into nearby clusters. She realized the clustering algorithm she was studying was similar to another classical machine-learning algorithm, called contrastive learning, and began digging deeper into the mathematics. Alshammari found that these two disparate algorithms could be reframed using the same underlying equation.

"We almost got to this unifying equation by accident. Once Shaden discovered that it connects two methods, we just started dreaming up new methods to bring into this framework. Almost every single one we tried could be added in," Hamilton says.

The framework they created, information contrastive learning (I-Con), shows how a variety of algorithms can be viewed through the lens of this unifying equation. It includes everything from classification algorithms that can detect spam to the deep learning algorithms that power LLMs. The equation describes how such algorithms find connections between real data points and then approximate those connections internally. Each algorithm aims to minimize the amount of deviation between the connections it learns to approximate and the real connections in its training data.
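The idea of minimizing the deviation between learned and real connections can be illustrated with a small sketch. The article does not spell out the unifying equation, so everything here is an assumption made for illustration: the "real" connections are taken to be a uniform distribution over points that share a label, the "learned" connections are a softmax over negative squared distances in an embedding, the deviation is measured with KL divergence, and all function names are hypothetical.

```python
import math


def learned_neighbors(points, i, temperature=1.0):
    """Assumed learned distribution q(j | i): closer points get more weight."""
    logits = {}
    for j, other in enumerate(points):
        if j == i:
            continue
        dist_sq = sum((a - b) ** 2 for a, b in zip(points[i], other))
        logits[j] = -dist_sq / temperature
    top = max(logits.values())  # subtract the max for numerical stability
    total = sum(math.exp(v - top) for v in logits.values())
    return {j: math.exp(v - top) / total for j, v in logits.items()}


def target_neighbors(labels, i):
    """Assumed real distribution p(j | i): uniform over same-label points."""
    same = [j for j, y in enumerate(labels) if j != i and y == labels[i]]
    return {j: 1.0 / len(same) for j in same}


def kl_divergence(p, q, eps=1e-300):
    """KL(p || q) over the support of p; eps guards against log(0)."""
    return sum(pj * math.log(pj / max(q.get(j, 0.0), eps))
               for j, pj in p.items() if pj > 0)


def average_deviation(points, labels):
    """Mean deviation between real and learned connections across all points."""
    n = len(points)
    return sum(
        kl_divergence(target_neighbors(labels, i), learned_neighbors(points, i))
        for i in range(n)
    ) / n


labels = [0, 0, 1, 1]
# Embedding that places same-label points next to each other.
aligned = [(0.0, 0.0), (0.0, 0.1), (5.0, 5.0), (5.0, 5.1)]
# Embedding that places same-label points far apart.
scrambled = [(0.0, 0.0), (5.0, 5.0), (0.0, 0.1), (5.0, 5.1)]

print("aligned:", average_deviation(aligned, labels))
print("scrambled:", average_deviation(scrambled, labels))
```

In this toy setup the aligned embedding yields a much smaller deviation than the scrambled one, which is the quantity a training procedure would try to drive down. Swapping in a different definition of the real or learned connections changes which kind of algorithm the same objective resembles.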
They decided to organize I-Con into a periodic table to categorize algorithms based on how points are connected in real datasets and the primary ways algorithms can approximate those connections.

"The work went gradually, but once we had identified the general structure of this equation, it was easier to add more methods to our framework," Alshammari says.

A tool for discovery

As they arranged the table, the researchers began to see gaps where algorithms could exist, but which hadn't been invented yet. The researchers filled in one gap by borrowing ideas from a machine-learning technique called contrastive learning and applying them to image clustering. This resulted in a new algorithm that could classify unlabeled images 8 percent better than another state-of-the-art approach.

They also used I-Con to show how a data debiasing technique developed for contrastive learning could be used to boost the accuracy of clustering algorithms. In addition, the flexible periodic table allows researchers to add new rows and columns to represent additional types of datapoint connections.

Ultimately, having I-Con as a guide could help machine learning scientists think outside the box, encouraging them to combine ideas in ways they wouldn't necessarily have thought of otherwise, says Hamilton.

"We've shown that just one very elegant equation, rooted in the science of information, gives you rich algorithms spanning 100 years of research in machine learning. This opens up many new avenues for discovery," he adds.

"Perhaps the most challenging aspect of being a machine-learning researcher these days is the seemingly unlimited number of papers that appear each year. In this context, papers that unify and connect existing algorithms are of great importance, yet they are extremely rare. I-Con provides an excellent example of such a unifying approach and will hopefully inspire others to apply a similar approach to other domains of machine learning," says Yair Weiss, a professor in the School of Computer Science and Engineering at the Hebrew University of Jerusalem, who was not involved in this research.

This research was funded, in part, by the Air Force Artificial Intelligence Accelerator, the National Science Foundation AI Institute for Artificial Intelligence and Fundamental Interactions, and Quanta Computer.

[2]

'Periodic table of machine learning' could fuel AI discovery


MIT researchers have created a periodic table of machine learning algorithms, showcasing connections between classical methods and potentially paving the way for new AI discoveries and improvements.

MIT Researchers Unveil Groundbreaking "Periodic Table of Machine Learning"

In a significant breakthrough for artificial intelligence research, MIT scientists have developed a "periodic table of machine learning" that illustrates the connections between more than 20 classical machine-learning algorithms. This innovative framework, dubbed Information Contrastive Learning (I-Con), promises to revolutionize the way researchers approach AI development and optimization [1][2].

The Unifying Equation: A Key to Algorithm Connections

At the heart of this discovery lies a unifying equation that underpins many classical AI algorithms. The researchers found that despite their apparent differences, these algorithms share a common mathematical foundation. This insight allowed them to reframe popular methods and arrange them into a table, categorizing each based on the approximate relationships it learns [1].

Shaden Alshammari, the lead author of the study, explains, "We're starting to see machine learning as a system with structure that is a space we can explore rather than just guess our way through" [1].

Accidental Discovery Leading to Profound Insights

The journey to this breakthrough began unexpectedly when Alshammari, while studying clustering algorithms, noticed similarities with contrastive learning techniques. This observation led to a deeper mathematical investigation, revealing that these seemingly disparate algorithms could be reframed using the same underlying equation [1][2].

Mark Hamilton, the senior author of the paper, adds, "We almost got to this unifying equation by accident. Once Shaden discovered that it connects two methods, we just started dreaming up new methods to bring into this framework" [1].

Practical Applications and Future Potential

The I-Con framework has already demonstrated its practical value. By combining elements from different algorithms, the researchers created a new image-classification algorithm that outperformed current state-of-the-art approaches by 8 percent [1][2].

Moreover, the periodic table structure reveals gaps where new algorithms could potentially exist, opening up exciting avenues for future research and discovery. The team has also shown how techniques from one area of machine learning can be applied to enhance performance in another, such as using contrastive learning methods to improve clustering algorithms [1][2].

Implications for the AI Research Community

This new framework provides researchers with a powerful toolkit for designing new algorithms without reinventing the wheel. It encourages thinking outside the box and combining ideas in novel ways, potentially accelerating the pace of AI innovation [1][2].

Hamilton emphasizes the significance of this work, stating, "We've shown that just one very elegant equation, rooted in the science of information, gives you rich algorithms spanning 100 years of research in machine learning. This opens up many new avenues for discovery" [1].

Collaborative Effort and Future Directions

The research team includes members from MIT, Google AI Perception, and Microsoft, highlighting the collaborative nature of this groundbreaking work. Their findings will be presented at the International Conference on Learning Representations, potentially inspiring new directions in AI research worldwide [1][2].

As the field of artificial intelligence continues to evolve rapidly, frameworks like I-Con may prove instrumental in guiding researchers towards more efficient and effective AI solutions, potentially revolutionizing various sectors from healthcare to technology and beyond.

Summarized by Navi
